Scraping practice: crawling quotes and saving them to MySQL (including how to debug Scrapy and set a user agent)

The Scrapy framework

I mainly wrote three files. The book 网络爬虫开发实战 saves the data to MongoDB; I changed that to MySQL.

1. first.py in the spiders folder

# -*- coding: utf-8 -*-
import scrapy

from QuotesToScrape.items import QuotestoscrapeItem


class FirstSpider(scrapy.Spider):
    name = 'first'
    allowed_domains = ['quotes.toscrape.com']
    start_urls = ['http://quotes.toscrape.com/']  # the site to crawl

    def parse(self, response):
        quotes = response.xpath('/html/body/div/div[2]/div[1]/div')
        for quote in quotes:
            item = QuotestoscrapeItem()
            item['text'] = quote.xpath('./*[@class="text"]/text()').extract_first()
            # print(item['text'])
            item['author'] = quote.xpath('./span[2]/small/text()').extract_first()
            # print(item['author'])
            item['tags'] = ','.join(quote.xpath('.//a[@class="tag"]/text()').extract())
            # print(item['tags'])
            yield item

        # Link to the next page; extract_first() returns None on the last page
        next_page = response.xpath('/html/body/div/div[2]/div[1]/nav/ul/li[@class="next"]/a/@href').extract_first()
        if next_page:
            next_url = response.urljoin(next_page)
            print(next_url)
            yield scrapy.Request(url=next_url, callback=self.parse)

2. items.py

# -*- coding: utf-8 -*-

# Define here the models for your scraped items
#
# See documentation in:
# https://doc.scrapy.org/en/latest/topics/items.html

import scrapy


class QuotestoscrapeItem(scrapy.Item):
    # define the fields for your item here like:
    # name = scrapy.Field()
    text = scrapy.Field()
    author = scrapy.Field()
    tags = scrapy.Field()

3. pipelines.py

# -*- coding: utf-8 -*-

# Define your item pipelines here
#
# Don't forget to add your pipeline to the ITEM_PIPELINES setting
# See: https://doc.scrapy.org/en/latest/topics/item-pipeline.html

from scrapy.exceptions import DropItem
import pymysql


class QuotestoscrapePipeline(object):

    def __init__(self):
        self.limit = 50  # keep at most 50 characters

    def process_item(self, item, spider):
        if item['text']:
            if len(item['text']) > self.limit:
                # print(type(item['text']))
                item['text'] = item['text'][0:self.limit].strip('"') + '...'
                # print(item['text'])
            return item  # do not leave this out, or later pipelines receive nothing
        else:
            # DropItem is an exception, so it has to be raised rather than returned
            raise DropItem('Missing text')


class MySqlPipeline(object):

    def process_item(self, item, spider):
        db = pymysql.connect(host='localhost', user='root', password='password', port=3306, db='test_scrapy')
        cursor = db.cursor()
        sql = 'insert into quotes(text,author,tags) values(%s, %s, %s)'
        try:
            cursor.execute(sql, (item['text'], item['author'], item['tags']))
            db.commit()  # do not leave this out; also mind the length limits of the table columns
            print("committed!")
        except Exception as e:
            print(e)
            print("rollback!")
            db.rollback()
        finally:
            db.close()
        return item  # pass the item on to any pipeline that runs later
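MySqlPipeline above opens a new connection for every item. Scrapy also calls the optional `open_spider` and `close_spider` hooks on a pipeline once per crawl, so the connection can be opened and closed there instead. Below is a minimal sketch of that pattern. To keep it runnable without a MySQL server it swaps in the standard-library `sqlite3` module for `pymysql`; the class name `SqliteQuotesPipeline`, the in-memory database and the sample item are all assumptions made for illustration, and the hooks are driven by hand rather than by Scrapy.

```python
import sqlite3


class SqliteQuotesPipeline:
    """Hypothetical stand-in for MySqlPipeline that keeps one connection per crawl."""

    def open_spider(self, spider):
        # Called once when the spider starts: open the connection here
        self.db = sqlite3.connect(':memory:')
        self.db.execute(
            'CREATE TABLE IF NOT EXISTS quotes (text TEXT, author TEXT, tags TEXT)'
        )

    def process_item(self, item, spider):
        # sqlite3 uses "?" placeholders, whereas pymysql uses "%s"
        sql = 'INSERT INTO quotes (text, author, tags) VALUES (?, ?, ?)'
        try:
            self.db.execute(sql, (item['text'], item['author'], item['tags']))
            self.db.commit()
        except sqlite3.Error as e:
            print(e)
            self.db.rollback()
        return item  # hand the item on to any later pipeline

    def close_spider(self, spider):
        # Called once when the spider finishes: close the connection here
        self.db.close()


# Drive the hooks by hand, in the order Scrapy would call them
pipeline = SqliteQuotesPipeline()
pipeline.open_spider(spider=None)
pipeline.process_item({'text': 'hello', 'author': 'someone', 'tags': 'a,b'}, spider=None)
rows = pipeline.db.execute('SELECT text, author, tags FROM quotes').fetchall()
pipeline.close_spider(spider=None)
print(rows)
```

With `pymysql` the same three methods would presumably hold the `pymysql.connect(...)` call, the insert, and `db.close()` respectively.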

4. settings.py

ROBOTSTXT_OBEY = False  # changed from True to False
LOG_LEVEL = "WARNING"  # only log messages at WARNING level or above
LOG_FILE = "./log.log"  # write the log to this file

# How to use the built-in logger:
# in the relevant .py file, add
# import logging
# logger = logging.getLogger(__name__)

ITEM_PIPELINES = {  # uncomment this section
    'QuotesToScrape.pipelines.QuotestoscrapePipeline': 300,
    'QuotesToScrape.pipelines.MySqlPipeline': 400  # new line; give it a value greater than 300
}
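The integers in ITEM_PIPELINES set the order in which an item passes through the pipelines: lower values run first, and values are customarily chosen in the 0 to 1000 range. That is why MySqlPipeline gets a value above 300, so that the text is truncated before it is written to the database. The snippet below only illustrates that ordering rule by sorting the dict; it is not how Scrapy implements it.

```python
# Same entries as in settings.py, deliberately listed out of order
ITEM_PIPELINES = {
    'QuotesToScrape.pipelines.MySqlPipeline': 400,
    'QuotesToScrape.pipelines.QuotestoscrapePipeline': 300,
}

# Sort by the integer value to see the order in which items are processed
run_order = [
    path for path, priority in sorted(ITEM_PIPELINES.items(), key=lambda kv: kv[1])
]
print(run_order)
```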

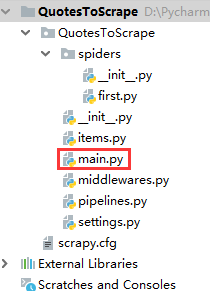

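5. How to test

Put a main file in the directory at the same level as spiders, then right-click it and choose Debug.

#!/usr/bin/env python
# -*- coding:utf-8 -*-
from scrapy.cmdline import execute
import os
import sys

# Add the project's absolute path to sys.path
sys.path.append(os.path.dirname(os.path.abspath(__file__)))

# Call Scrapy's built-in execute function to run the crawl under the debugger;
# the last argument, first, is the name of the spider
execute(['scrapy', 'crawl', 'first'])

6. Changing the user agent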

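Reference: python爬虫之scrapy中user agent浅谈(两种方法) (a short discussion of the user agent in Scrapy, covering two methods)

I use the first method from that article:

Create a user agent list in settings.py and pick one entry at random, so that settings makes the choice for us.

Import random and call random.choice on the user agent list.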

import random

# List of user agents
USER_AGENT_LIST = [
    'MSIE (MSIE 6.0; X11; Linux; i686) Opera 7.23',
    'Opera/9.20 (Macintosh; Intel Mac OS X; U; en)',
    'Opera/9.0 (Macintosh; PPC Mac OS X; U; en)',
    'iTunes/9.0.3 (Macintosh; U; Intel Mac OS X 10_6_2; en-ca)',
    'Mozilla/4.76 [en_jp] (X11; U; SunOS 5.8 sun4u)',
    'iTunes/4.2 (Macintosh; U; PPC Mac OS X 10.2)',
    'Mozilla/5.0 (Macintosh; Intel Mac OS X 10.6; rv:5.0) Gecko/20100101 Firefox/5.0',
    'Mozilla/5.0 (Macintosh; Intel Mac OS X 10.6; rv:9.0) Gecko/20100101 Firefox/9.0',
    'Mozilla/5.0 (Macintosh; Intel Mac OS X 10.8; rv:16.0) Gecko/20120813 Firefox/16.0',
    'Mozilla/4.77 [en] (X11; I; IRIX;64 6.5 IP30)',
    'Mozilla/4.8 [en] (X11; U; SunOS; 5.7 sun4u)'
]

# Pick a user agent at random (settings.py is loaded once, so the choice is made once per run)
USER_AGENT = random.choice(USER_AGENT_LIST)

Check the result:

# -*- coding: utf-8 -*-
import scrapy


class MyspiderSpider(scrapy.Spider):
    name = 'myspider'
    allowed_domains = ['www.baidu.com']
    start_urls = ['http://www.baidu.com']

    def parse(self, response):
        print(response.request.headers['User-Agent'])
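Because USER_AGENT is set in settings.py, every request in a run carries the same randomly chosen value. If a different user agent per request is wanted, one common approach is a downloader middleware whose `process_request` method sets the header itself. The sketch below is an assumption about how that could look, and may or may not match the second method in the referenced article. The names `RandomUserAgentMiddleware` and `FakeRequest` are hypothetical, and `FakeRequest` only stands in for `scrapy.Request` so that the snippet runs without Scrapy installed.

```python
import random

# Shortened copy of the list from settings.py
USER_AGENT_LIST = [
    'Opera/9.20 (Macintosh; Intel Mac OS X; U; en)',
    'Mozilla/5.0 (Macintosh; Intel Mac OS X 10.6; rv:9.0) Gecko/20100101 Firefox/9.0',
]


class RandomUserAgentMiddleware:
    """Hypothetical downloader middleware: choose a user agent for each request."""

    def process_request(self, request, spider):
        request.headers['User-Agent'] = random.choice(USER_AGENT_LIST)
        return None  # returning None lets Scrapy continue handling the request


class FakeRequest:
    """Stand-in for scrapy.Request, used only to exercise the sketch."""

    def __init__(self):
        self.headers = {}


request = FakeRequest()
RandomUserAgentMiddleware().process_request(request, spider=None)
print(request.headers['User-Agent'])
```

In a real project such a class would be enabled through the DOWNLOADER_MIDDLEWARES setting in settings.py, in the same way the pipelines are enabled through ITEM_PIPELINES.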