Ceph Tells K8s, "Us" (Part 4): Using a Ceph Cluster from K8s via RBD

Reposted from: k8s (12): Using Ceph RBD Distributed Storage

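Preface

The previous article, Ceph Tells K8s, "Us" (Part 3): Using a Ceph Cluster from K8s via CephFS, covered consuming CephFS from k8s through PV/PVC. This article tests Ceph RBD as the persistent storage backend.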

Testing RBD/SC/PV/PVC/Pod creation

1. Static provisioning:

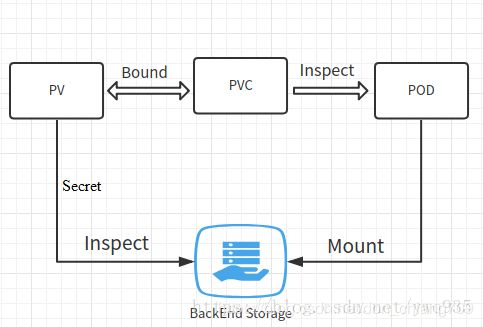

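With static provisioning, an image must first be created on the Ceph side; only then can the PV/PVC be created and mounted in a pod. This is the standard binding workflow for a static PV. Its drawback is that a single logical operation, mounting a storage resource, is split into two halves: carving out the RBD image on the Ceph side, and binding the PV on the k8s side. That raises management complexity and works against automated operations. For the static workflow, see Ceph Tells K8s, "Us" (Part 3): Using a Ceph Cluster from K8s via CephFS.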
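
The Ceph-side half of the static workflow can be sketched as a small shell helper. This is only an illustration under assumptions: the function name `create_rbd_image` and the size used in the usage line are hypothetical, while the pool `rbd`, the image name `test1`, format 2 and the `layering` feature are taken from elsewhere in this article.

```shell
#!/usr/bin/env bash
# Create an RBD image by hand, which static provisioning requires before
# any PV can reference it.
# Arguments: pool, image name, size in MB (rbd create reads --size as MB
# when no unit suffix is given).
create_rbd_image() {
    local pool="$1" image="$2" size_mb="$3"
    rbd create "${pool}/${image}" --size "${size_mb}" \
        --image-format 2 --image-feature layering
}
```

On a Ceph node this would be called as `create_rbd_image rbd test1 20480`. The PV and PVC still have to be written by hand on the k8s side afterwards.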

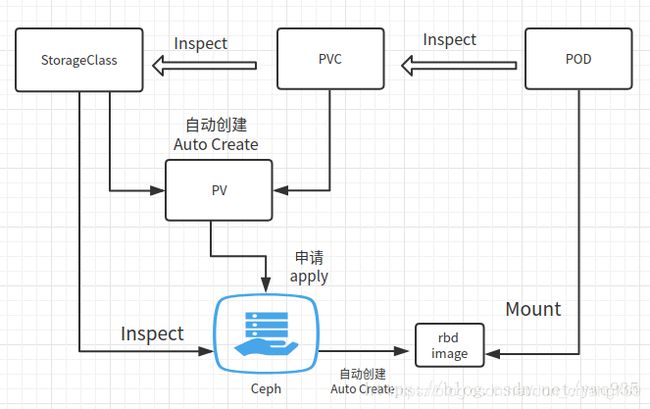

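2. Dynamic provisioning:

Based on the PVC and SC declarations, a PV is generated automatically, and a request is sent to the Ceph backend named in the SC to create an RBD image of the requested size, which is then bound and used.

The demonstration follows.

1. Create the secret

See the previous article for how to obtain the key.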
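
The `key` field of a Secret's `data` section holds the base64-encoded Ceph key, not the raw key. A minimal sketch of the encoding step follows; the helper names `encode_ceph_key` and `decode_secret_key` are hypothetical and exist only for illustration.

```shell
#!/usr/bin/env bash
# Base64-encode a Ceph key for the Secret's data.key field.
# printf '%s' avoids a trailing newline, which would otherwise be
# encoded into the value and corrupt the key.
encode_ceph_key() {
    printf '%s' "$1" | base64
}

# Decode a value copied from a Secret manifest so it can be compared
# against the key stored in the Ceph keyring.
decode_secret_key() {
    printf '%s' "$1" | base64 -d
}
```

On a Ceph node, assuming the `client.admin` user that matches `adminId: admin` in the StorageClass, the value could be produced with `encode_ceph_key "$(ceph auth get-key client.admin)"`.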

    # vim ceph-secret-rbd.yaml
    apiVersion: v1
    kind: Secret
    metadata:
      name: ceph-secret-rbd
    type: "kubernetes.io/rbd"
    data:
      key: QVFCL3E1ZGIvWFdxS1JBQTUyV0ZCUkxldnRjQzNidTFHZXlVYnc9PQ==

2. Create the SC, which specifies the Ceph monitors and the secret resource

    # vim cephrbd-sc.yaml
    kind: StorageClass
    apiVersion: storage.k8s.io/v1
    metadata:
      name: cephrbd-test2
    provisioner: kubernetes.io/rbd
    reclaimPolicy: Retain
    parameters:
      monitors: 192.168.20.112:6789,192.168.20.113:6789,192.168.20.114:6789
      adminId: admin
      adminSecretName: ceph-secret-rbd
      pool: rbd
      userId: admin
      userSecretName: ceph-secret-rbd
      fsType: ext4
      imageFormat: "2"
      imageFeatures: "layering"

3. Create the PVC

    # vim cephrbd-pvc.yaml
    kind: PersistentVolumeClaim
    apiVersion: v1
    metadata:
      name: cephrbd-pvc2
    spec:
      storageClassName: cephrbd-test2
      accessModes:
      - ReadWriteOnce
      resources:
        requests:
          storage: 20Gi

4. Create the deployment; its container mounts the PVC created above

    apiVersion: apps/v1  # extensions/v1beta1 was removed in Kubernetes 1.16
    kind: Deployment
    metadata:
      labels:
        app: deptest13dbdm
      name: deptest13dbdm
      namespace: default
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: deptest13dbdm
      strategy:
        rollingUpdate:
          maxSurge: 1
          maxUnavailable: 1
        type: RollingUpdate
      template:
        metadata:
          labels:
            app: deptest13dbdm
        spec:
          containers:
          - image: registry.xxx.com:5000/mysql:56v6
            imagePullPolicy: Always
            name: deptest13dbdm
            ports:
            - containerPort: 3306
              protocol: TCP
            resources:
              limits:
                memory: 16Gi
              requests:
                memory: 512Mi
            terminationMessagePath: /dev/termination-log
            terminationMessagePolicy: File
            volumeMounts:
            # Mount the RBD-backed volume as the MySQL data directory
            - mountPath: /var/lib/mysql
              name: datadir
              subPath: deptest13dbdm
          dnsPolicy: ClusterFirst
          restartPolicy: Always
          schedulerName: default-scheduler
          securityContext: {}
          terminationGracePeriodSeconds: 30
          volumes:
          - name: datadir
            persistentVolumeClaim:
              claimName: cephrbd-pvc2

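At this point the pod is running normally. Inspecting the PVC shows that it triggered the automatic creation of a PV and is bound to it, and that the PV's StorageClass is the SC just created:

    # pod
    ~/mytest/ceph/rbd/rbd-provisioner# kubectl get pods | grep deptest13
    deptest13dbdm-c9d5bfb7c-rzzv6   2/2   Running   0   6h

    # pvc
    ~/mytest/ceph/rbd/rbd-provisioner# kubectl get pvc cephrbd-pvc2
    NAME           STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS    AGE
    cephrbd-pvc2   Bound    pvc-d976c2bf-c2fb-11e8-878f-141877468256   20Gi       RWO            cephrbd-test2   23h

    # automatically created pv
    ~/mytest/ceph/rbd/rbd-provisioner# kubectl get pv pvc-d976c2bf-c2fb-11e8-878f-141877468256
    NAME                                       CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                  STORAGECLASS    REASON   AGE
    pvc-d976c2bf-c2fb-11e8-878f-141877468256   20Gi       RWO            Retain           Bound    default/cephrbd-pvc2   cephrbd-test2            23h

    # sc
    ~/mytest/ceph/rbd/rbd-provisioner# kubectl get sc cephrbd-test2
    NAME            PROVISIONER    AGE
    cephrbd-test2   ceph.com/rbd   23h

Note that the PROVISIONER column reports ceph.com/rbd, the name used by the external rbd-provisioner, while the StorageClass manifest in step 2 declares kubernetes.io/rbd. The provisioner in the manifest has to match whichever provisioner is actually deployed in the cluster.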
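
The same checks can be scripted rather than read off the tables by eye. The sketch below assumes `kubectl` is configured for the cluster; the function names are hypothetical. It relies on the PVC fields `.status.phase` and `.spec.volumeName`, and on `.spec.rbd.image`, which RBD-backed PVs of this kind carry.

```shell
#!/usr/bin/env bash
# Phase of a PVC (for example Pending or Bound).
pvc_phase() {
    kubectl get pvc "$1" -o jsonpath='{.status.phase}'
}

# Name of the PV that a PVC is bound to.
pvc_volume() {
    kubectl get pvc "$1" -o jsonpath='{.spec.volumeName}'
}

# Name of the RBD image backing a PV.
pv_rbd_image() {
    kubectl get pv "$1" -o jsonpath='{.spec.rbd.image}'
}

# Print the PVC -> PV -> RBD image chain; fail if the PVC is not Bound.
describe_binding() {
    local pvc="$1" pv
    if [ "$(pvc_phase "$pvc")" != "Bound" ]; then
        echo "$pvc is not Bound" >&2
        return 1
    fi
    pv="$(pvc_volume "$pvc")"
    echo "pvc=$pvc pv=$pv image=$(pv_rbd_image "$pv")"
}
```

Running `describe_binding cephrbd-pvc2` gives the image name to look for on the Ceph side, which is useful because the image name does not match the PV name.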

Back on the Ceph node, check whether the RBD image was created automatically:

    # rbd ls
    kubernetes-dynamic-pvc-d988cfb1-c2fb-11e8-b2df-0a58ac1a084e
    test1

    ~# rbd info kubernetes-dynamic-pvc-d988cfb1-c2fb-11e8-b2df-0a58ac1a084e
    rbd image 'kubernetes-dynamic-pvc-d988cfb1-c2fb-11e8-b2df-0a58ac1a084e':
            size 20480 MB in 5120 objects
            order 22 (4096 kB objects)
            block_name_prefix: rbd_data.47d0b6b8b4567
            format: 2
            features: layering
            flags:

As the output shows, the PV and the RBD image were both created dynamically.
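
The figures reported by `rbd info` can be cross-checked against the 20Gi PVC request with a little arithmetic: the order is the base-2 logarithm of the object size in bytes, so order 22 means 4 MiB objects. The helper names below are hypothetical and the snippet is only a sketch of that calculation.

```shell
#!/usr/bin/env bash
# Image size in MB for a request given in GiB.
rbd_size_mb() {
    echo $(( $1 * 1024 ))
}

# Number of objects for a request in GiB at a given order,
# rounded up so a partial last object is counted.
rbd_object_count() {
    local gib="$1" order="$2"
    local bytes=$(( gib * 1024 * 1024 * 1024 ))
    local object_size=$(( 1 << order ))
    echo $(( (bytes + object_size - 1) / object_size ))
}
```

For this article's PVC, `rbd_size_mb 20` yields 20480 and `rbd_object_count 20 22` yields 5120, matching the `size 20480 MB in 5120 objects` line.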

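Next steps

With both k8s distributed-storage integrations, CephFS and Ceph RBD, now tested, the next article will benchmark database performance on each and discuss which scenarios each one suits.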