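Integrating hive-1.2.1 with hbase-1.2.1 on a hadoop-2.7.2 cluster

This walkthrough is based on the official documentation and other related material. If you spot a mistake, corrections are welcome.

The official Hive documentation on HBase integration is here: https://cwiki.apache.org/confluence/display/Hive/HBaseIntegration

It states that Hive 0.9.0 requires at least HBase 0.92, while earlier Hive releases work with HBase 0.89 or 0.90.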

Hive 1.x remains compatible with HBase 0.98.x and lower, while Hive 2.x is compatible with HBase 1.x and higher.

Now for the key part:

The storage handler is built as an independent module, hive-hbase-handler-x.y.z.jar, which must be available on the Hive client auxpath, along with HBase, Guava and ZooKeeper jars.

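In other words, hive-hbase-handler-x.y.z.jar is built as a separate module and must be on the Hive client auxpath together with the HBase, Guava, and ZooKeeper jars. (Please excuse my rough translation.) The documentation then gives a few simple usage examples: one for the CLI against a single-node HBase server, and one for an HBase cluster managed by ZooKeeper.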
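Since --auxpath takes a comma-separated list of jars, a small helper can assemble it. This is only a sketch: the function name build_auxpath is made up for illustration, and the jar paths in the usage comment are the ones that appear later in this article, so adjust them to your installation.

```shell
# Join all arguments into the comma-separated list expected by `hive --auxpath`.
# build_auxpath is a hypothetical helper name, not part of Hive.
build_auxpath() {
  local IFS=,
  # "$*" joins the arguments using the first character of IFS (a comma here).
  printf '%s\n' "$*"
}

# Example usage (not executed here; paths and hosts are assumptions taken from this article):
#   hive --auxpath "$(build_auxpath \
#       /opt/modules/hive-1.2.1/lib/hive-hbase-handler-1.2.1.jar \
#       /opt/modules/hive-1.2.1/lib/guava-14.0.1.jar \
#       /opt/modules/hive-1.2.1/lib/zookeeper-3.4.8.jar)" \
#     --hiveconf hbase.zookeeper.quorum=hadoop,hadoop1,hadoop2
```

Passing the jars on the command line like this is the per-session alternative to setting hive.aux.jars.path in hive-site.xml.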

The key point: (Note that the jar locations and names have changed in Hive 0.9.0, so for earlier releases, some changes are needed.)

The handler requires Hadoop 0.20 or higher, and has only been tested with dependency versions hadoop-0.20.x, hbase-0.92.0 and zookeeper-3.3.4. If you are not using hbase-0.92.0, you will need to rebuild the handler with the HBase jar matching your version, and change the --auxpath above accordingly. Failure to use matching versions will lead to misleading connection failures such as MasterNotRunningException since the HBase RPC protocol changes often.

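The handler requires Hadoop 0.20 or higher and has only been tested with hadoop-0.20.x, hbase-0.92.0, and zookeeper-3.3.4. If you are not using hbase-0.92.0, you need to rebuild the handler against the HBase jar that matches your HBase version and adjust --auxpath accordingly. Otherwise, mismatched versions lead to misleading connection failures such as MasterNotRunningException.

I am using hadoop 2.7.2, hive 1.2.1, and hbase 1.2.1, so the handler has to be recompiled before the two can be integrated.

Now to the actual steps.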

1. The hive-hbase-handler jar is part of hive-1.2.1, so first download the hive-1.2.1 source (src) from the official site:

Go to http://www.apache.org/dyn/closer.cgi/hive/, select apache-hive-1.2.1-src.tar.gz, and download it.
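The download can also be scripted. The sketch below assumes the release is available under the usual archive.apache.org layout and that curl and tar are installed. The function names hive_src_url and fetch_hive_src are hypothetical, and the URL is an assumption rather than something taken from the steps above.

```shell
# Build the download URL for a Hive source release.
# Assumption: releases live under archive.apache.org/dist/hive/hive-<version>/.
hive_src_url() {
  local version=$1
  printf 'https://archive.apache.org/dist/hive/hive-%s/apache-hive-%s-src.tar.gz\n' \
    "$version" "$version"
}

# Download the source tarball and unpack it into dest_dir.
fetch_hive_src() {
  local version=$1 dest_dir=$2
  mkdir -p "$dest_dir" || return 1
  # -f makes curl fail on HTTP errors, -L follows redirects.
  curl -fL "$(hive_src_url "$version")" | tar -xz -C "$dest_dir"
}

# Example usage (not executed here):
#   fetch_hive_src 1.2.1 /opt/src
```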

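2. Create a project in Eclipse for the build. Any name works, and a plain Java project is enough.

I named mine hive-hbase.

3. Import the hbase-handler part of the Hive source into the project

Right-click src and choose Import-->General-->File System, then click Next.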

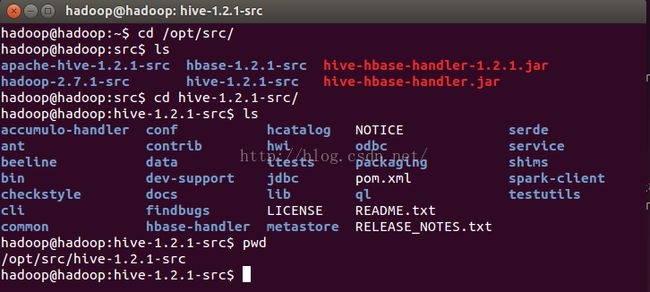

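Browse to the directory where you unpacked the Hive source and find the hbase-handler directory. Mine is under /opt/src/hive-1.2.1-src.

The directory to import is hbase-handler/src/java; anyone with basic Java experience will get this right. After confirming, make sure the package names start with org.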

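4. Next, create a lib directory under the Eclipse project and add the jars needed for compilation to succeed. The exact set depends on your Hive version; you are done once the project shows no red error markers. I did this as follows:

For convenience, I copied the main jars (or all jars) from the lib directories of hive, hbase, and hadoop to the desktop, so they can be added to the project without touching the cluster's own lib directories.

How you add the jars is up to you. There are two approaches: add everything in one go, which suits beginners and does not affect the final result, or add only the jars the compiler actually needs, guided by the error messages, which requires knowing the hive, hadoop, and hbase APIs well.

5. The first approach: copy all jars from hive, the hadoop common and mapreduce jars, and all jars under hbase/lib into the project's lib directory. Remove duplicates, keeping only one version of any jar that appears in several versions. Then right-click the project, choose Build Path-->Configure Build Path, select the Libraries tab, click Add JARs, pick the project's lib directory, select all the jars, and confirm with Apply and OK.

The second approach: compiling the handler really only needs the jars shown in the lib listing in step 6. Collect them from the lib directories of hive, hbase, and hadoop and put them in the project's lib directory.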

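6. Compile and package

Right-click the project's src directory and choose Export-->Java-->JAR file-->Next. Select the project's src, set the export path (the file can simply be named hive-hbase-handler-1.2.1.jar), leave everything else at the defaults, and click Finish.

Then put the exported hive-hbase-handler-1.2.1.jar into the lib directory of the Hive installation, overwriting the original handler.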
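If you prefer not to use Eclipse, the same compile-and-package step can be approximated on the command line with javac and jar. This is a swapped-in technique, not the procedure used in this article. The function name build_handler is hypothetical, and the sketch assumes that the paths contain no spaces and that the lib directory already holds the jars needed for compilation.

```shell
# Compile all .java files under src_dir against the jars in lib_dir and
# package the resulting classes into out_jar.
# build_handler is a hypothetical helper; it needs javac and jar on the PATH.
build_handler() {
  local src_dir=$1 lib_dir=$2 out_jar=$3 work
  [ -d "$src_dir" ] || { echo "source dir not found: $src_dir" >&2; return 1; }
  [ -d "$lib_dir" ] || { echo "lib dir not found: $lib_dir" >&2; return 1; }
  work=$(mktemp -d) || return 1
  mkdir "$work/classes" || return 1
  # Collect the source files; javac reads the list through its @argfile syntax.
  find "$src_dir" -name '*.java' > "$work/sources.txt"
  # "lib_dir/*" is javac's classpath wildcard; the quotes keep the shell from expanding it.
  javac -cp "$lib_dir/*" -d "$work/classes" @"$work/sources.txt" || return 1
  # Package the compiled classes from the classes directory.
  jar cf "$out_jar" -C "$work/classes" .
}

# Example usage (not executed here; paths are the ones used in this article):
#   build_handler /opt/src/hive-1.2.1-src/hbase-handler/src/java \
#                 /home/hadoop/workspace/hive-hbase/lib \
#                 hive-hbase-handler-1.2.1.jar
```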

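The required jars in the lib directory of the Eclipse handler project also go into hive/lib, as shown below. Afterwards, delete any jar that now exists in more than one version (here only zookeeper is duplicated).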

hadoop@hadoop:src$ cd /home/hadoop/workspace/hive-hbase/lib/

hadoop@hadoop:lib$ ls

commons-io-2.4.jar hbase-server-1.2.1.jar

commons-logging-1.1.3.jar hive-common-1.2.1.jar

hadoop-common-2.7.2.jar hive-exec-1.2.1.jar

hadoop-mapreduce-client-core-2.7.2.jar hive-metastore-1.2.1.jar

hbase-client-1.2.1.jar jsr305-3.0.0.jar

hbase-common-1.2.1.jar metrics-core-2.2.0.jar

hbase-protocol-1.2.1.jar zookeeper-3.4.8.jar

hadoop@hadoop:lib$ cp ./* /opt/modules/hive-1.2.1/lib/

hadoop@hadoop:lib$ cd /opt/modules/hive-1.2.1/conf/

hadoop@hadoop:conf$ ls /opt/modules/hive-1.2.1/lib/zookeeper-3.4.*

/opt/modules/hive-1.2.1/lib/zookeeper-3.4.6.jar

/opt/modules/hive-1.2.1/lib/zookeeper-3.4.8.jar

hadoop@hadoop:conf$ rm -f /opt/modules/hive-1.2.1/lib/zookeeper-3.4.6.jar

That completes the integration of the jars.
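The manual check for duplicate ZooKeeper jars can be generalized to any jar in hive/lib. The following is a heuristic sketch: list_duplicate_jars is a hypothetical name, and it assumes jar files are named artifact-version.jar with the version starting with a digit.

```shell
# Print every artifact name that occurs with more than one version in lib_dir.
# Heuristic: everything from the first "-<digit>" up to ".jar" is treated as the version.
list_duplicate_jars() {
  local lib_dir=$1 jar
  for jar in "$lib_dir"/*.jar; do
    [ -e "$jar" ] || continue   # skip the literal pattern when no jar exists
    basename "$jar" | sed -E 's/-[0-9][^/]*\.jar$//'
  done | sort | uniq -d
}

# Example usage (not executed here):
#   list_duplicate_jars /opt/modules/hive-1.2.1/lib
```

Any name it prints deserves a look; which version to keep still has to be decided by hand.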

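The official documentation passes these settings as arguments to the hive command. To simplify usage, we put them into Hive's environment and configuration files instead.

7. Update Hive's environment variables and add configuration

hive-env.sh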

hadoop@hadoop:conf$ pwd

/opt/modules/hive-1.2.1/conf

hadoop@hadoop:conf$ ls

beeline-log4j.properties.template hive-log4j.properties.template

hive-env.sh.template hive-site.xml

hive-exec-log4j.properties.template ivysettings.xml

hadoop@hadoop:conf$ cp hive-env.sh.template hive-env.sh

hadoop@hadoop:conf$ vim hive-env.sh

## Add the following

export HADOOP_HOME=/opt/modules/hadoop-2.7.2

export HIVE_CONF_DIR=/opt/modules/hive-1.2.1/conf

export JAVA_HOME=/usr/local/java/jdk1.7.0_80

hadoop@hadoop:conf$ vim hive-site.xml

<property>
  <name>hive.aux.jars.path</name>
  <value>file:///opt/modules/hive-1.2.1/lib/hive-hbase-handler-1.2.1.jar,file:///opt/modules/hive-1.2.1/lib/guava-14.0.1.jar,file:///opt/modules/hive-1.2.1/lib/hbase-common-1.2.1.jar,file:///opt/modules/hive-1.2.1/lib/zookeeper-3.4.8.jar</value>
</property>

<property>
  <name>hbase.zookeeper.quorum</name>
  <value>hadoop:2181,hadoop1:2182,hadoop2:2183</value>
</property>
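A path in hive.aux.jars.path that points to a missing file is easy to overlook, so it can help to check every entry before starting Hive. A minimal sketch, assuming the value uses file:// URLs separated by commas as above; check_aux_jars is a hypothetical helper name.

```shell
# Check that every jar in a comma-separated hive.aux.jars.path value exists.
# Prints each missing path to stderr and returns non-zero if any is missing.
check_aux_jars() {
  local list=$1 entry path missing=0
  local IFS=,
  for entry in $list; do
    path=${entry#file://}       # file:///opt/x.jar -> /opt/x.jar
    if [ ! -f "$path" ]; then
      echo "missing: $path" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Example usage (not executed here):
#   check_aux_jars "file:///opt/modules/hive-1.2.1/lib/hive-hbase-handler-1.2.1.jar,file:///opt/modules/hive-1.2.1/lib/zookeeper-3.4.8.jar"
```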

Start the cluster and check that everything came up

hadoop@hadoop:conf$ zkServer.sh start

ZooKeeper JMX enabled by default

Using config: /opt/modules/zookeeper-3.4.8/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

hadoop@hadoop:conf$ ssh hadoop1

Last login: Thu May 12 14:05:18 2016 from hadoop

[hadoop@hadoop1 ~]$ zkServer.sh start

ZooKeeper JMX enabled by default

Using config: /opt/modules/zookeeper-3.4.8/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

[hadoop@hadoop1 ~]$ ssh hadoop2

Last login: Thu May 12 14:05:26 2016 from hadoop1

[hadoop@hadoop2 ~]$ zkServer.sh start

ZooKeeper JMX enabled by default

Using config: /opt/modules/zookeeper-3.4.8/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

[hadoop@hadoop2 ~]$ jps

1728 Jps

1699 QuorumPeerMain

[hadoop@hadoop2 ~]$ zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /opt/modules/zookeeper-3.4.8/bin/../conf/zoo.cfg

Mode: follower

[hadoop@hadoop2 ~]$ exit

logout

Connection to hadoop2 closed.

[hadoop@hadoop1 ~]$ exit

logout

Connection to hadoop1 closed.

hadoop@hadoop:conf$ start-dfs.sh

Starting namenodes on [hadoop]

hadoop: starting namenode, logging to /opt/modules/hadoop-2.7.2/logs/hadoop-hadoop-namenode-hadoop.out

hadoop1: starting datanode, logging to /opt/modules/hadoop-2.7.2/logs/hadoop-hadoop-datanode-hadoop1.out

hadoop2: starting datanode, logging to /opt/modules/hadoop-2.7.2/logs/hadoop-hadoop-datanode-hadoop2.out

Starting secondary namenodes [hadoop]

hadoop: starting secondarynamenode, logging to /opt/modules/hadoop-2.7.2/logs/hadoop-hadoop-secondarynamenode-hadoop.out

hadoop@hadoop:conf$ hdfs dfsadmin -report

Safe mode is ON

Configured Capacity: 32977600512 (30.71 GB)

Present Capacity: 25174839296 (23.45 GB)

DFS Remaining: 25174265856 (23.45 GB)

DFS Used: 573440 (560 KB)

DFS Used%: 0.00%

Under replicated blocks: 0

Blocks with corrupt replicas: 0

Missing blocks: 0

Missing blocks (with replication factor 1): 1

-------------------------------------------------

Live datanodes (2):

Name: 192.168.2.11:50010 (hadoop2)

Hostname: hadoop2

Decommission Status : Normal

Configured Capacity: 16488800256 (15.36 GB)

DFS Used: 290816 (284 KB)

Non DFS Used: 3901227008 (3.63 GB)

DFS Remaining: 12587282432 (11.72 GB)

DFS Used%: 0.00%

DFS Remaining%: 76.34%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Xceivers: 1

Last contact: Thu May 12 18:10:50 CST 2016

Name: 192.168.2.10:50010 (hadoop1)

Hostname: hadoop1

Decommission Status : Normal

Configured Capacity: 16488800256 (15.36 GB)

DFS Used: 282624 (276 KB)

Non DFS Used: 3901534208 (3.63 GB)

DFS Remaining: 12586983424 (11.72 GB)

DFS Used%: 0.00%

DFS Remaining%: 76.34%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Xceivers: 1

Last contact: Thu May 12 18:10:50 CST 2016

hadoop@hadoop:conf$ start-yarn.sh

starting yarn daemons

starting resourcemanager, logging to /opt/modules/hadoop-2.7.2/logs/yarn-hadoop-resourcemanager-hadoop.out

hadoop2: starting nodemanager, logging to /opt/modules/hadoop-2.7.2/logs/yarn-hadoop-nodemanager-hadoop2.out

hadoop1: starting nodemanager, logging to /opt/modules/hadoop-2.7.2/logs/yarn-hadoop-nodemanager-hadoop1.out

hadoop@hadoop:conf$ jps

7769 SecondaryNameNode

7328 QuorumPeerMain

7531 NameNode

8002 ResourceManager

8269 Jps

hadoop@hadoop:conf$ start-hbase.sh

starting master, logging to /opt/modules/hbase-1.2.1/logs/hbase-hadoop-master-hadoop.out

hadoop1: starting regionserver, logging to /opt/modules/hbase-1.2.1/bin/../logs/hbase-hadoop-regionserver-hadoop1.out

hadoop2: starting regionserver, logging to /opt/modules/hbase-1.2.1/bin/../logs/hbase-hadoop-regionserver-hadoop2.out

hadoop@hadoop:conf$ jps

7769 SecondaryNameNode

8551 Jps

8428 HMaster

7328 QuorumPeerMain

7531 NameNode

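8002 ResourceManager

Here I noticed that HDFS safe mode was on, probably because the machine was not shut down cleanly last time. It turns off by itself once enough block replicas have been reported, or you can turn it off manually; it is not a big deal.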
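Checking and leaving safe mode by hand uses hdfs dfsadmin -safemode. The sketch below wraps that in two small functions. The function names are hypothetical, and forcing safe mode off is only reasonable when you are sure no blocks are actually missing.

```shell
# Return 0 if the given `hdfs dfsadmin -safemode get` output reports safe mode ON.
safemode_is_on() {
  case "$1" in
    *"Safe mode is ON"*) return 0 ;;
    *) return 1 ;;
  esac
}

# Query the NameNode and leave safe mode only if it is currently on.
leave_safemode_if_needed() {
  local status
  status=$(hdfs dfsadmin -safemode get) || return 1
  if safemode_is_on "$status"; then
    hdfs dfsadmin -safemode leave
  fi
}

# Example usage (not executed here):
#   leave_safemode_if_needed
# To block until HDFS leaves safe mode on its own instead:
#   hdfs dfsadmin -safemode wait
```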

Start Hive. My Hive metastore is stored in MySQL, so I start the MySQL service first, then create a table backed by HBase.

hadoop@hadoop:conf$ sudo service mysqld start

Starting MySQL

.. *

hadoop@hadoop:conf$ hive

Logging initialized using configuration in jar:file:/opt/modules/hive/lib/hive-common-1.2.1.jar!/hive-log4j.properties

hive> create table hbase_table_1(key int,value string)

> stored by 'org.apache.hadoop.hive.hbase.HBaseStorageHandler'

> with serdeproperties("hbase.columns.mapping"=":key,cf1:val")

> tblproperties("hbase.table.name"="xyz");

OK

Time taken: 2.001 seconds

hive>

hadoop@hadoop:~$ cat test.data

1 zhangsan

2 lisi

3 wangwu

hive> create table test1(id int,name string)

> row format delimited

> fields terminated by '\t'

> stored as textfile;

OK

Time taken: 0.214 seconds

hive> load data local inpath '/home/hadoop/test.data' into table test1;

Loading data to table default.test1

Table default.test1 stats: [numFiles=1, totalSize=27]

OK

Time taken: 0.714 seconds

hive> select * from hbase_table_1;

OK

Load the data from the Hive table into hbase_table_1 and check the table contents

hive> insert overwrite table hbase_table_1 select * from test1;

Query ID = hadoop_20160512194326_ec2c3ec0-0fdc-4265-8478-668ab5df4b5c

Total jobs = 1

Launching Job 1 out of 1

Number of reduce tasks is set to 0 since there's no reduce operator

Starting Job = job_1463047967051_0001, Tracking URL = http://hadoop:8088/proxy/application_1463047967051_0001/

Kill Command = /opt/modules/hadoop-2.7.2/bin/hadoop job -kill job_1463047967051_0001

Hadoop job information for Stage-0: number of mappers: 1; number of reducers: 0

2016-05-12 19:43:46,127 Stage-0 map = 0%, reduce = 0%

2016-05-12 19:43:55,629 Stage-0 map = 100%, reduce = 0%, Cumulative CPU 2.26 sec

MapReduce Total cumulative CPU time: 2 seconds 260 msec

Ended Job = job_1463047967051_0001

MapReduce Jobs Launched:

Stage-Stage-0: Map: 1 Cumulative CPU: 2.26 sec HDFS Read: 3410 HDFS Write: 0 SUCCESS

Total MapReduce CPU Time Spent: 2 seconds 260 msec

OK

Time taken: 29.829 seconds

hive> select * from hbase_table_1;

OK

1 zhangsan

2 lisi

3 wangwu

Time taken: 0.178 seconds, Fetched: 3 row(s)

hadoop@hadoop:~$ hbase shell

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/modules/hbase-1.2.1/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/modules/hadoop-2.7.2/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

HBase Shell; enter 'help' for list of supported commands.

Type "exit" to leave the HBase Shell

Version 1.2.1, r8d8a7107dc4ccbf36a92f64675dc60392f85c015, Wed Mar 30 11:19:21 CDT 2016

hbase(main):001:0> list

TABLE

scores

xyz

2 row(s) in 0.1950 seconds

=> ["scores", "xyz"]

hbase(main):002:0> scan 'xyz'

ROW COLUMN+CELL

0 row(s) in 0.1220 seconds

hbase(main):003:0> scan 'xyz'

ROW COLUMN+CELL

1 column=cf1:val, timestamp=1463053413954, value=zhangsan

2 column=cf1:val, timestamp=1463053413954, value=lisi

3 column=cf1:val, timestamp=1463053413954, value=wangwu

3 row(s) in 0.0550 seconds

hbase(main):004:0>

With that, the integration of hive and hbase is complete.