ELK Log Collection and Analysis

I. ELK Stack Overview

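ELK Stack is the combination of three open-source projects: Elasticsearch, Logstash and Kibana. For real-time data search and analysis the three are usually deployed together, and all of them have since come under Elastic.co, hence the shared name.

Big data refers to data sets that cannot be captured, managed and processed with conventional software tools within an acceptable time. It is a massive, fast-growing and highly varied information asset that needs new processing models to deliver better decision-making, insight discovery and process optimization.

Put simply: the traffic reaching every part of a company's services (in the broad sense, including request counts, traffic peaks and so on) is ingested and preprocessed with these new processing models, then analyzed so that problems can be seen and solved directly.

Over the last two years the ELK Stack has risen rapidly and become the open-source first choice for machine data analysis, that is, real-time log processing. Compared with traditional log-processing solutions, it has the following advantages:

• Flexible processing. Elasticsearch indexes full text in real time, so unlike Storm it does not need to be programmed in advance before it can be used;

• Easy configuration. Elasticsearch exposes everything through JSON interfaces and Logstash uses a Ruby DSL, both among the most widely used configuration syntaxes in the industry;

• Fast search. Although every query is computed in real time, the well-designed implementation can usually answer queries over a whole day of data within seconds;

• Linear scaling. Both Elasticsearch clusters and Logstash clusters scale linearly;

• Polished front end. In Kibana, searches and aggregations take only a few mouse clicks, and the results can be turned into attractive dashboards.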

II. Installing and Deploying the ELK Platform for Data Analysis

Lab environment

OS: Linux 6.5

Three hosts:

server1 172.25.20.1

server2 172.25.20.2

server3 172.25.20.3

Packages to download:

elasticsearch-2.3.3.rpm

elasticsearch-head-master.zip

jdk-8u121-linux-x64.rpm

(1) First, a single-host test

1. Install elasticsearch-2.3.3.rpm on server1

[root@server1 ~]# yum install -y elasticsearch-2.3.3.rpm

2. Edit the main Elasticsearch configuration file

[root@server1 ~]# cd /etc/elasticsearch/

[root@server1 elasticsearch]# ls

elasticsearch.yml # main configuration file

logging.yml # logging configuration

scripts # scripts directory

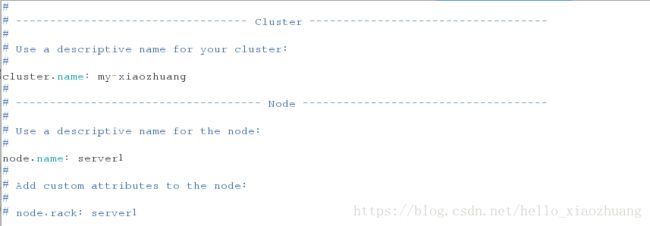

[root@server1 elasticsearch]# vim elasticsearch.yml


cluster.name: my-xiaozhuang #集群名字

node.name: server1 #节点名称

path.data: /var/lib/elasticsearch/ #存储目录,可配置多个磁盘

path.logs: /var/log/elasticsearch/ #日志储存目录

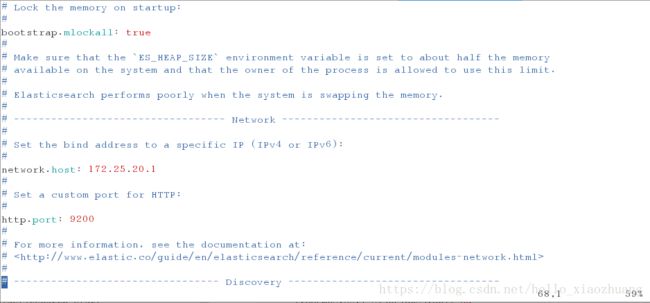

bootstrap.mlockall: true #启动时锁定内存

network.host: 172.25.20.1 #网卡ip

http.port: 9200 #http端口[root@server1 elasticsearch]# /etc/init.d/elasticsearch start #开启elasticsearch服务

which: no java in (/sbin:/usr/sbin:/bin:/usr/bin)

Could not find any executable java binary. Please install java in your PATH or set JAVA_HOME # startup fails: no Java runtime is installed yet

[root@server1 elasticsearch]# cd

[root@server1 ~]# ll

total 26788

-rw-r--r-- 1 root root 27430789 Aug 25 09:27 elasticsearch-2.3.3.rpm

[root@server1 ~]# ll

total 190592

-rw-r--r-- 1 root root 27430789 Aug 25 09:27 elasticsearch-2.3.3.rpm

-rw-r--r-- 1 root root 167733100 Aug 25 09:34 jdk-8u121-linux-x64.rpm

[root@server1 ~]# rpm -ivh jdk-8u121-linux-x64.rpm # install the JDK with rpm

Preparing... ########################################### [100%]

1:jdk1.8.0_121 ########################################### [100%]

Unpacking JAR files...

tools.jar...

plugin.jar...

javaws.jar...

deploy.jar...

rt.jar...

jsse.jar...

charsets.jar...

localedata.jar...

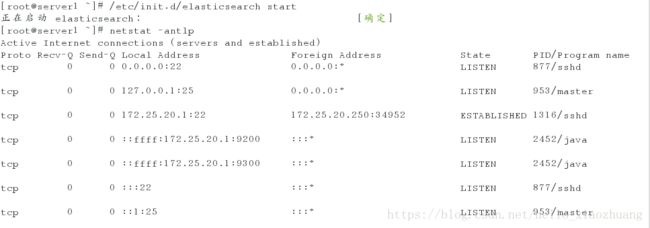

[root@server1 ~]# /etc/init.d/elasticsearch start # start the elasticsearch service

Starting elasticsearch: [ OK ]

3. After starting the elasticsearch service, check the listening ports

[root@server1 ~]# netstat -antlp # list TCP ports and their processes

Active Internet connections (servers and established)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 877/sshd

tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN 953/master

tcp 0 0 172.25.20.1:22 172.25.20.250:34952 ESTABLISHED 1316/sshd

tcp 0 0 ::ffff:172.25.20.1:9200 :::* LISTEN 2452/java # port 9200 is now listening

tcp 0 0 ::ffff:172.25.20.1:9300 :::* LISTEN 2452/java

tcp 0 0 :::22 :::* LISTEN 877/sshd

tcp 0 0 ::1:25 :::* LISTEN 953/master

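4. Once it is configured, open http://172.25.20.1:9200/_plugin/head/ in a web browser (this requires the head plugin from elasticsearch-head-master.zip to be installed)

On the page, open the [Any Request] tab and submit a document; the request reports success. The document used here:

{"username":"xiaozhuang","password":"westos"}

After submitting, check the result on the [Overview] tab.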

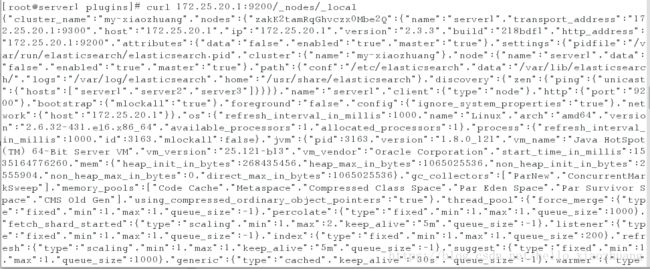

The node can also be inspected from the terminal:

[root@server1 plugins]# curl 172.25.20.1:9200/_nodes/_local
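The [Any Request] tab only sends an HTTP request to the Elasticsearch REST API, so the same submission can be scripted. A minimal sketch using the Python standard library; the index `test` and type `user` are hypothetical placeholders (the original does not say which path was used), and it assumes the Elasticsearch 2.x `POST /<index>/<type>` form, which stores the document under an auto-generated id:

```python
import json
import urllib.request

ES_URL = "http://172.25.20.1:9200"  # server1 in this lab


def build_index_request(index, doc_type, doc, base=ES_URL):
    """Build (without sending) a POST /<index>/<type> request that indexes `doc`.

    In Elasticsearch 2.x this form stores the document under an auto-generated id.
    """
    return urllib.request.Request(
        f"{base}/{index}/{doc_type}",
        data=json.dumps(doc).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(req, timeout=5):
    """Send the request and decode Elasticsearch's JSON reply."""
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


if __name__ == "__main__":
    # "test" and "user" are hypothetical index/type names.
    req = build_index_request("test", "user", {"username": "xiaozhuang", "password": "westos"})
    print(req.get_method(), req.full_url)
    # send(req) would perform the request against the running node.
```

Building the request separately from sending it keeps the sketch testable without a running node; against a live 2.x node, the reply to `send(req)` should contain the generated `_id` and `"created": true`.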

(2) Next, test with three hosts

1. Install Elasticsearch and the Java runtime on server2 and server3 as well

[root@server2 ~]# yum install -y jdk-8u121-linux-x64.rpm elasticsearch-2.3.3.rpm

[root@server3 ~]# yum install -y jdk-8u121-linux-x64.rpm elasticsearch-2.3.3.rpm

Then edit the main configuration file on each node

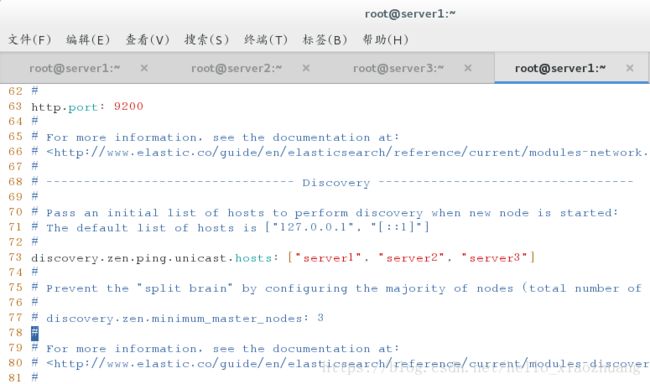

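[root@server1 ~]# vim /etc/elasticsearch/elasticsearch.yml

# server1 is the master node

# server2 and server3 are data nodes under server1; configure both the same way

## Add the following around lines 29-31 of elasticsearch.yml

node.master: true # this node is master-eligible

node.data: false # the master node does not store data

http.enabled: true # enable the HTTP interface

## Add the following at line 73

discovery.zen.ping.unicast.hosts: ["server1", "server2", "server3"]

# list the hostnames of all three hosts; they must resolve locally

Add the entries to /etc/hosts on every host: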

[root@foundation20 images]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

172.25.20.1 server1

172.25.20.2 server2

172.25.20.3 server3
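Unicast discovery only works if every name in `discovery.zen.ping.unicast.hosts` resolves on every node. A small sketch that parses hosts-file text (the entries above) and reports names with no entry; it deliberately ignores DNS and other resolver sources, which is an assumption of this check:

```python
# Names listed in discovery.zen.ping.unicast.hosts above.
UNICAST_HOSTS = ["server1", "server2", "server3"]

# Contents of /etc/hosts as shown above; on a node, read the real file instead.
HOSTS_FILE = """\
127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
172.25.20.1 server1
172.25.20.2 server2
172.25.20.3 server3
"""


def parse_hosts(text):
    """Map every hostname and alias in hosts-file text to its address."""
    table = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and blank lines
        if not line:
            continue
        address, *names = line.split()
        for name in names:
            table.setdefault(name, address)  # keep the first entry for a name
    return table


def unresolved(hosts, table):
    """Return the discovery hosts that have no hosts-file entry."""
    return [h for h in hosts if h not in table]
```

On a node, `unresolved(UNICAST_HOSTS, parse_hosts(open("/etc/hosts").read()))` should return an empty list before the service is restarted.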

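On server2 and server3:

node.master: false # not master-eligible; these nodes take the data and query load off the master

node.data: true # store data; without it the node would serve no purpose

http.enabled: true # HTTP must be enabled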

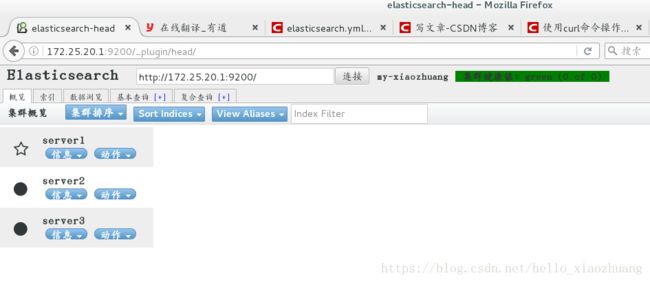

After editing the configuration on every node, restart the service:

[root@server1 ~]# /etc/init.d/elasticsearch reload

Stopping elasticsearch: [ OK ]

Starting elasticsearch: [ OK ]

[root@server2 ~]# /etc/init.d/elasticsearch reload

Stopping elasticsearch: [ OK ]

Starting elasticsearch: [ OK ]

[root@server3 ~]# /etc/init.d/elasticsearch reload

Stopping elasticsearch: [ OK ]

Starting elasticsearch: [ OK ]

Check the cluster in the web browser.
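Besides the head plugin, the `_cluster/health` endpoint reports how many nodes joined. A sketch that fetches it and compares it with the layout above (three nodes, two of them data nodes, since server1 sets `node.data: false`); the expected counts are this lab's assumptions, not Elasticsearch defaults:

```python
import json
import urllib.request


def fetch_health(base="http://172.25.20.1:9200", timeout=5):
    """GET /_cluster/health from any node and return the parsed JSON."""
    with urllib.request.urlopen(f"{base}/_cluster/health", timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


def check_cluster(health, cluster="my-xiaozhuang", nodes=3, data_nodes=2):
    """Compare a health document with the layout this lab expects; return a list of problems."""
    problems = []
    if health.get("cluster_name") != cluster:
        problems.append(f"cluster_name is {health.get('cluster_name')!r}, expected {cluster!r}")
    # green means every primary and replica shard is allocated
    if health.get("status") != "green":
        problems.append(f"status is {health.get('status')!r}")
    if health.get("number_of_nodes") != nodes:
        problems.append(f"{health.get('number_of_nodes')} nodes joined, expected {nodes}")
    if health.get("number_of_data_nodes") != data_nodes:
        problems.append(f"{health.get('number_of_data_nodes')} data nodes, expected {data_nodes}")
    return problems
```

Running `check_cluster(fetch_health())` against the live cluster should return an empty list once all three nodes have joined.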

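Next, install Apache on server1 so that there are logs for Logstash to collect.

[root@server1 conf.d]# yum install -y httpd # install Apache

[root@server1 conf.d]# /etc/init.d/httpd start # start the Apache service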

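Starting httpd: httpd: Could not reliably determine the server's fully qualified domain name, using 172.25.20.1 for ServerName

[ OK ]

[root@server1 conf.d]# cd /var/www/html/ # Apache's default document root

[root@server1 html]# ls

[root@server1 html]# vim index.html # create the default index page

[root@server1 html]# curl 172.25.20.1/80 # test from the terminal (or open the IP in a browser); "/80" is requested as a URL path, hence the 404 below (the port form is 172.25.20.1:80)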

<html><head>

<title>404 Not Found</title>

</head><body>

<h1>Not Found</h1>

<p>The requested URL /80 was not found on this server.</p>

<hr>

<address>Apache/2.2.15 (Red Hat) Server at 172.25.20.1 Port 80</address>

</body></html>

[root@server1 html]# cd /var/log/httpd/ # view the Apache logs and check their permissions (rw-r--r--, so Logstash can read them)

[root@server1 httpd]# ll

total 8

-rw-r--r-- 1 root root 358 Aug 25 14:41 access_log

-rw-r--r-- 1 root root 428 Aug 25 14:41 error_log

[root@server1 httpd]# ll access_log

-rw-r--r-- 1 root root 358 Aug 25 14:41 access_log

[root@server1 httpd]# ll error_log

-rw-r--r-- 1 root root 428 Aug 25 14:41 error_log

[root@server1 ~]# vim /etc/logstash/conf.d/message1.conf # write the Logstash configuration file

[root@server1 ~]# cat /etc/logstash/conf.d/message1.conf

input { # where events come from

file {

path => ["/var/log/httpd/access_log", "/var/log/httpd/error_log"]

start_position => "beginning"

}

}

#filter {

# multiline {

# type => "type"

# pattern => "^\["

# negate => true

# what => "previous"

# }

#}

output { # where events are sent

elasticsearch {

hosts => ["172.25.20.1"]

index => "apache-%{+YYYY.MM.dd}"

}

stdout { # also print each event to the terminal

codec => rubydebug

}

}

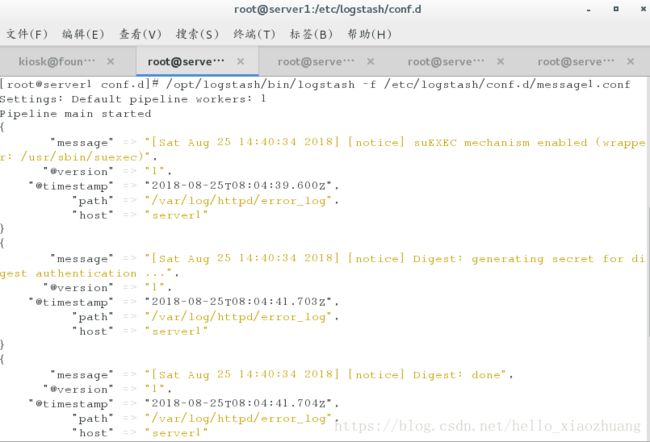
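The index name `apache-%{+YYYY.MM.dd}` is built from each event's `@timestamp`, which Logstash keeps in UTC, so on these +0800 hosts anything read before 08:00 local time lands in the previous day's index. A sketch of that naming rule; the helper name `daily_index` is hypothetical:

```python
from datetime import datetime, timezone


def daily_index(prefix, timestamp):
    """Return "<prefix>-YYYY.MM.dd" for an @timestamp such as "2018-08-25T08:04:39.600Z".

    The date comes from the UTC timestamp, as with Logstash's %{+YYYY.MM.dd};
    the hosts' local time zone (+0800) is not consulted.
    """
    ts = datetime.strptime(timestamp, "%Y-%m-%dT%H:%M:%S.%fZ").replace(tzinfo=timezone.utc)
    return f"{prefix}-{ts:%Y.%m.%d}"
```

For example, an event stamped `2018-08-25T23:30:00.000Z` (07:30 on August 26 local time) still goes to the index dated 2018.08.25.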

Start Logstash; the collected events are printed to the terminal:

[root@server1 conf.d]# /opt/logstash/bin/logstash -f /etc/logstash/conf.d/message1.conf

Settings: Default pipeline workers: 1

Pipeline main started

{

"message" => "[Sat Aug 25 14:40:34 2018] [notice] suEXEC mechanism enabled (wrapper: /usr/sbin/suexec)",

"@version" => "1",

"@timestamp" => "2018-08-25T08:04:39.600Z",

"path" => "/var/log/httpd/error_log",

"host" => "server1"

}

{

"message" => "[Sat Aug 25 14:40:34 2018] [notice] Digest: generating secret for digest authentication ...",

"@version" => "1",

"@timestamp" => "2018-08-25T08:04:41.703Z",

"path" => "/var/log/httpd/error_log",

"host" => "server1"

}

{

"message" => "[Sat Aug 25 14:40:34 2018] [notice] Digest: done",

"@version" => "1",

"@timestamp" => "2018-08-25T08:04:41.704Z",

"path" => "/var/log/httpd/error_log",

"host" => "server1"

}

{

"message" => "[Sat Aug 25 14:40:34 2018] [notice] Apache/2.2.15 (Unix) DAV/2 configured -- resuming normal operations",

"@version" => "1",

"@timestamp" => "2018-08-25T08:04:41.706Z",

"path" => "/var/log/httpd/error_log",

"host" => "server1"

}

{

"message" => "[Sat Aug 25 14:41:47 2018] [error] [client 172.25.20.1] File does not exist: /var/www/html/80",

"@version" => "1",

"@timestamp" => "2018-08-25T08:04:41.708Z",

"path" => "/var/log/httpd/error_log",

"host" => "server1"

}

[root@server1 ~]# vim /etc/logstash/conf.d/message1.conf

[root@server1 ~]# cat /etc/logstash/conf.d/message1.conf

input {

file {

path => ["/var/log/httpd/access_log", "/var/log/httpd/error_log"]

start_position => "beginning"

}

}

#filter {

# multiline {

# type => "type"

# pattern => "^\["

# negate => true

# what => "previous"

# }

#}

output {

elasticsearch {

hosts => ["172.25.20.1"]

index => "apache-%{+YYYY.MM.dd}"

}

stdout {

codec => rubydebug

}

}

[root@server1 ~]# cd /opt/logstash/vendor/bundle/jruby/1.9/gems/logstash-patterns-core-2.0.5/patterns/

[root@server1 patterns]# ls

aws exim haproxy linux-syslog mongodb rails

bacula firewalls java mcollective nagios redis

bro grok-patterns junos mcollective-patterns postgresql ruby

[root@server1 patterns]# vim grok-patterns

[root@server1 patterns]# cd /etc/logstash/conf.d/

[root@server1 conf.d]# ls

dz.conf message1.conf message.conf rsyslog.conf xz.conf

[root@server1 conf.d]# vim message1.conf


[root@server1 conf.d]# ls -i /var/log/httpd/access_log

1045390 /var/log/httpd/access_log

[root@server1 conf.d]# ls -i /var/log/httpd/error_log

1045315 /var/log/httpd/error_log

[root@server1 conf.d]# cd

[root@server1 ~]# l.

. .bash_profile .oracle_jre_usage .tcshrc

.. .bashrc .sincedb_452905a167cf4509fd08acb964fdb20c .viminfo

.bash_history .cshrc .sincedb_ef0edb00900aaa8dcb520b280cb2fb7d

.bash_logout .lftp .ssh

[root@server1 ~]# cat .sincedb_ef0edb00900aaa8dcb520b280cb2fb7d

1045390 0 64768 358

1045315 0 64768 428

[root@server1 ~]# rm -fr .sincedb_ef0edb00900aaa8dcb520b280cb2fb7d
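Why delete the `.sincedb_*` file? The file input remembers how far it has read each file, and `start_position => "beginning"` only applies to files it has never seen. Each sincedb line above appears to hold the inode, the major and minor device numbers, and the byte offset: the inodes match the `ls -i` output, and the offsets 358 and 428 equal the file sizes, so both logs had already been read to the end. A sketch that parses those lines; the four-field layout is assumed from this listing rather than taken from the plugin documentation:

```python
def parse_sincedb(text):
    """Parse sincedb lines of the form 'inode major minor offset' (layout assumed)."""
    entries = {}
    for line in text.splitlines():
        fields = line.split()
        if len(fields) != 4:
            continue  # skip blank or unexpected lines
        inode, major, minor, offset = (int(f) for f in fields)
        entries[inode] = {"major": major, "minor": minor, "offset": offset}
    return entries


def is_fully_read(entries, inode, size):
    """True if the recorded offset for this inode has reached the file's size."""
    entry = entries.get(inode)
    return entry is not None and entry["offset"] >= size
```

With the inode from `ls -i` and the size from `ll`, `is_fully_read` tells whether restarting Logstash without removing the sincedb file would produce any output for that log.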

[root@server1 conf.d]# cat message1.conf

input {

file {

path => ["/var/log/httpd/access_log", "/var/log/httpd/error_log"]

start_position => "beginning"

}

}

filter {

grok {

match => { "message" => "%{COMBINEDAPACHELOG}" }

}

}

output {

elasticsearch {

hosts => ["172.25.20.1"]

index => "apache-%{+YYYY.MM.dd}"

}

stdout {

codec => rubydebug

}

}

[root@server1 conf.d]# /opt/logstash/bin/logstash -f /etc/logstash/conf.d/message1.conf

Settings: Default pipeline workers: 1

Pipeline main started


{

"message" => "172.25.20.250 - - [25/Aug/2018:16:23:07 +0800] \"GET / HTTP/1.1\" 200 - \"-\" \"Mozilla/5.0 (X11; Linux x86_64; rv:45.0) Gecko/20100101 Firefox/45.0\"",

"@version" => "1",

"@timestamp" => "2018-08-25T08:23:07.872Z",

"path" => "/var/log/httpd/access_log",

"host" => "server1",

"clientip" => "172.25.20.250",

"ident" => "-",

"auth" => "-",

"timestamp" => "25/Aug/2018:16:23:07 +0800",

"verb" => "GET",

"request" => "/",

"httpversion" => "1.1",

"response" => "200",

"referrer" => "\"-\"",

"agent" => "\"Mozilla/5.0 (X11; Linux x86_64; rv:45.0) Gecko/20100101 Firefox/45.0\""

}
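The `COMBINEDAPACHELOG` grok pattern splits a combined-format access log line into the fields shown above. The regex below is a simplified stand-in written for illustration, not grok's actual pattern definition; it reproduces the field names and, like the output above, omits `bytes` when the log records `-`:

```python
import re

# Simplified stand-in for grok's COMBINEDAPACHELOG, for illustration only:
# client, ident, auth, [time], "request", status, bytes or "-", "referrer", "agent".
COMBINED = re.compile(
    r'(?P<clientip>\S+) (?P<ident>\S+) (?P<auth>\S+) '
    r'\[(?P<timestamp>[^\]]+)\] '
    r'"(?P<verb>[A-Z]+) (?P<request>\S+) HTTP/(?P<httpversion>[0-9.]+)" '
    r'(?P<response>\d{3}) (?:(?P<bytes>\d+)|-) '
    r'(?P<referrer>"[^"]*") (?P<agent>"[^"]*")'
)


def parse_combined(line):
    """Return the grok-style fields of one access-log line, or None if it does not match."""
    m = COMBINED.match(line)
    if m is None:
        return None
    # Leave out groups that did not participate (bytes logged as "-"),
    # matching how the grok output above has no "bytes" field.
    return {name: value for name, value in m.groupdict().items() if value is not None}
```

Lines from `error_log` do not match this format; grok tags such events with `_grokparsefailure` instead of adding fields.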

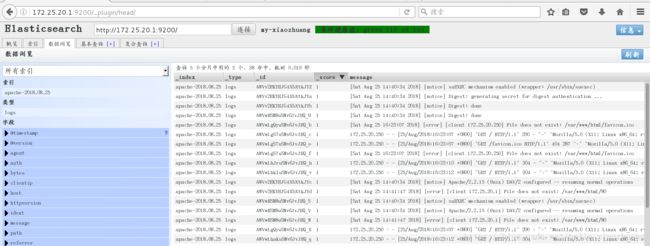

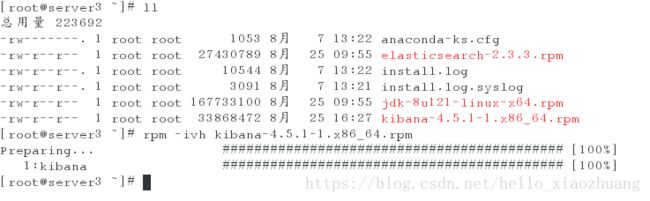

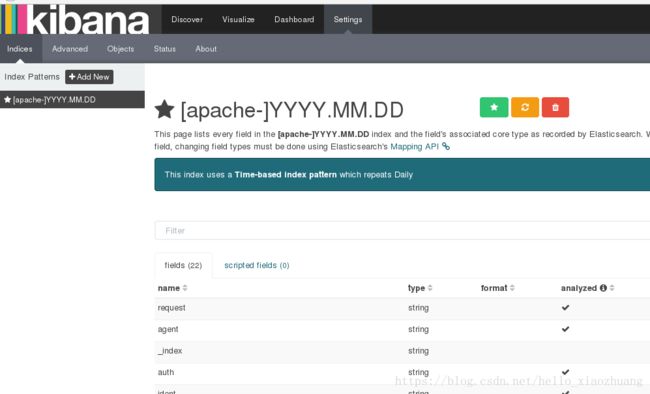

Install Kibana on server3

[root@server3 ~]# rpm -ivh kibana-4.5.1-1.x86_64.rpm

Edit the main configuration file

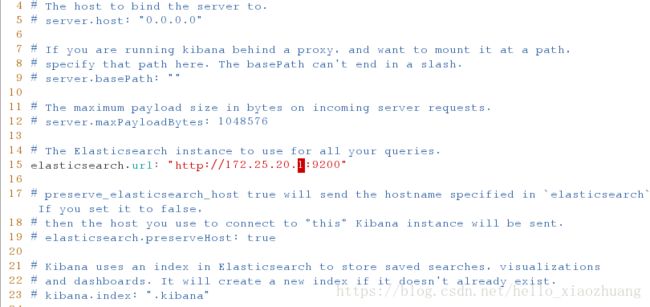

vim /opt/kibana/config/kibana.yml

15 elasticsearch.url: "http://172.25.20.1:9200"

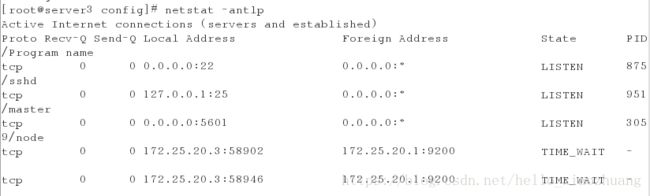

打开kibana服务

[root@server3 config]# /etc/init.d/kibana start

[root@server3 config]# netstat -antlp

Active Internet connections (servers and established)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 875/sshd

tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN 951/master

tcp 0 0 0.0.0.0:5601 0.0.0.0:* LISTEN 3059/node

tcp 0 0 172.25.20.3:58902 172.25.20.1:9200 TIME_WAIT -

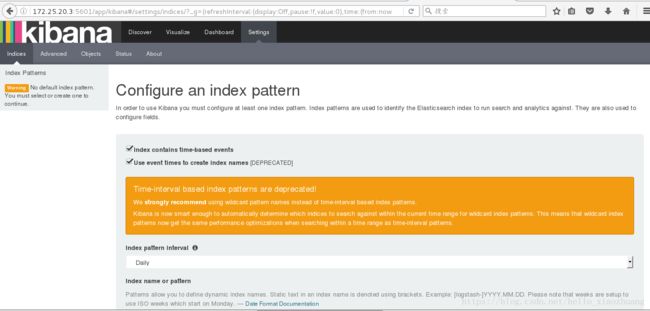

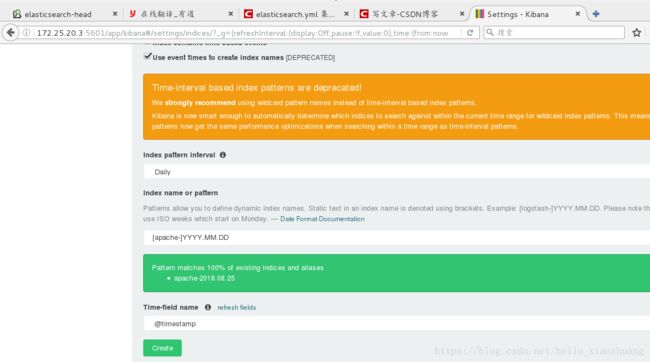

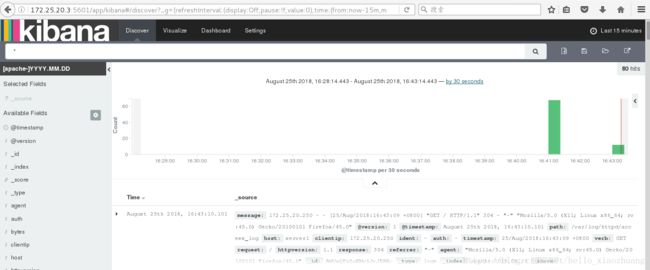

在web浏览器访问

我在这里选择通过apache的访问量 来实验

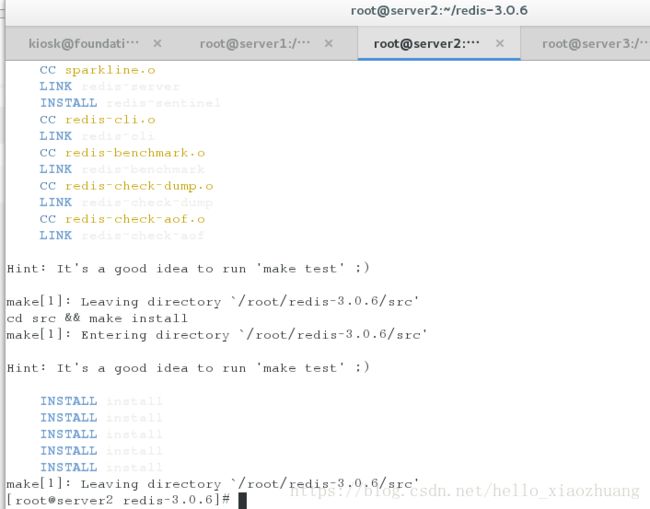

在server2用nginx代替apache

[root@server2 ~]# ll

-rw-r--r-- 1 root root 1372648 Aug 25 16:46 redis-3.0.6.tar.gz

[root@server2 ~]# tar zxf redis-3.0.6.tar.gz

[root@server2 ~]# cd redis-3.0.6

[root@server2 redis-3.0.6]# make && make install # compile and install directly

[root@server2 ~]# cd redis-3.0.6

[root@server2 redis-3.0.6]# ls

00-RELEASENOTES CONTRIBUTING deps Makefile README runtest runtest-sentinel src utils

BUGS COPYING INSTALL MANIFESTO redis.conf runtest-cluster sentinel.conf tests

[root@server2 redis-3.0.6]# cd utils/

[root@server2 utils]# ./install_server.sh

Welcome to the redis service installer

This script will help you easily set up a running redis server

Please select the redis port for this instance: [6379]

Selecting default: 6379

Please select the redis config file name [/etc/redis/6379.conf]

Selected default - /etc/redis/6379.conf

Please select the redis log file name [/var/log/redis_6379.log]

Selected default - /var/log/redis_6379.log

Please select the data directory for this instance [/var/lib/redis/6379]

Selected default - /var/lib/redis/6379

Please select the redis executable path [/usr/local/bin/redis-server]

Selected config:

Port : 6379

Config file : /etc/redis/6379.conf

Log file : /var/log/redis_6379.log

Data dir : /var/lib/redis/6379

Executable : /usr/local/bin/redis-server

Cli Executable : /usr/local/bin/redis-cli

Is this ok? Then press ENTER to go on or Ctrl-C to abort.

Copied /tmp/6379.conf => /etc/init.d/redis_6379

Installing service...

Successfully added to chkconfig!

Successfully added to runlevels 345!

Starting Redis server...

Installation successful!

[root@server2 utils]# netstat -antpl |grep 6379

tcp 0 0 0.0.0.0:6379 0.0.0.0:* LISTEN 6114/redis-server *

tcp 0 0 :::6379 :::* LISTEN 6114/redis-server *

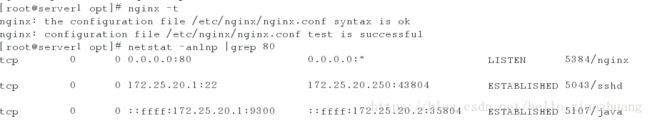

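An nginx rpm package is available, so install it directly on server1:

[root@server1 ~]# ll

-rw-r--r-- 1 root root 360628 Aug 25 17:02 nginx-1.8.0-1.el6.ngx.x86_64.rpm

[root@server1 ~]# yum install -y nginx-1.8.0-1.el6.ngx.x86_64.rpm

Once it is installed, start nginx and check its configuration: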

[root@server1 ~]# nginx

[root@server1 ~]# nginx -t

nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

nginx: configuration file /etc/nginx/nginx.conf test is successful

[root@server1 ~]# netstat -anlnp

Active Internet connections (servers and established)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN 5989/nginx

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 875/sshd

[root@server1 patterns]# cd /etc/logstash/

[root@server1 logstash]# cd conf.d/

[root@server1 conf.d]# ls

dz.conf message1.conf message.conf rsyslog.conf xz.conf

[root@server1 conf.d]# cp message1.conf nginx.conf

[root@server1 conf.d]# vim nginx.conf

[root@server1 conf.d]# cat nginx.conf

input {

file {

path => "/var/log/nginx/access.log"

start_position => "beginning"

}

}

filter {

grok {

match => { "message" => "%{COMBINEDAPACHELOG} %{QS:x_forwarded_for}" }

}

}

output {

redis {

host => ["172.25.20.2"]

port => 6379

data_type => "list"

key => "logstash:redis"

}

stdout {

codec => rubydebug

}

}

[root@server1 ~]# /opt/logstash/bin/logstash -f /etc/logstash/conf.d/nginx.conf

Settings: Default pipeline workers: 1

Pipeline main started

{

"message" => "172.25.20.250 - - [25/Aug/2018:18:12:00 +0800] \"GET / HTTP/1.1\" 304 0 \"-\" \"Mozilla/5.0 (X11; Linux x86_64; rv:45.0) Gecko/20100101 Firefox/45.0\" \"-\"",

"@version" => "1",

"@timestamp" => "2018-08-25T10:12:01.127Z",

"path" => "/var/log/nginx/access.log",

"host" => "server1",

"clientip" => "172.25.20.250",

"ident" => "-",

"auth" => "-",

"timestamp" => "25/Aug/2018:18:12:00 +0800",

"verb" => "GET",

"request" => "/",

"httpversion" => "1.1",

"response" => "304",

"bytes" => "0",

"referrer" => "\"-\"",

"agent" => "\"Mozilla/5.0 (X11; Linux x86_64; rv:45.0) Gecko/20100101 Firefox/45.0\"",

"x_forwarded_for" => "\"-\""

}
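Assuming nginx uses the default `main` log_format shipped in the nginx.org package's nginx.conf, each line ends with `"$http_x_forwarded_for"`, one more quoted string than the combined format; that is why the filter appends `%{QS:x_forwarded_for}` to `COMBINEDAPACHELOG`. A sketch that peels off that trailing field (the regex is written for illustration, not taken from grok):

```python
import re

# Everything before the last quoted string, then the last quoted string itself
# (escaped quotes inside it are allowed, as grok's QS pattern does).
TRAILING_QS = re.compile(r'^(?P<combined>.*) (?P<x_forwarded_for>"(?:[^"\\]|\\.)*")$')


def split_xff(line):
    """Split an nginx 'main' log line into (combined-format part, quoted X-Forwarded-For)."""
    m = TRAILING_QS.match(line)
    if m is None:
        return None
    return m.group("combined"), m.group("x_forwarded_for")
```

The first element is then an ordinary combined-format line, and the second matches the `"x_forwarded_for" => "\"-\""` field in the output above, quotes included.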

[root@server2 conf.d]# vim nginx.conf

[root@server2 conf.d]# cat nginx.conf

input {

redis {

host => ["172.25.20.2"]

port => 6379

data_type => "list"

key => "logstash:redis"

}

}

output {

elasticsearch {

hosts => ["172.25.20.1"]

index => "nginx-%{+YYYY.MM.dd}"

}

}

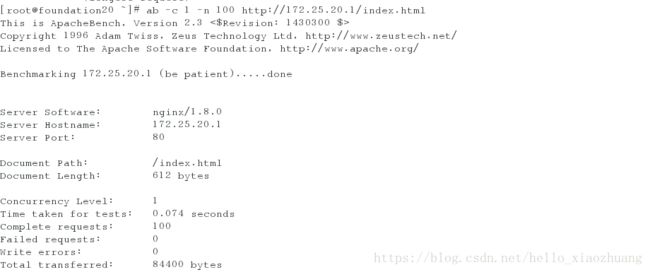
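With this layout, the Logstash on server1 acts as a shipper that pushes events onto the Redis list `logstash:redis`, and the Logstash on server2 acts as an indexer that drains the list into Elasticsearch; if the list keeps growing, the indexer is falling behind. A sketch that asks Redis for the list length with `LLEN`, speaking the Redis protocol (RESP) directly through the standard library; the host, port and key are the values from the configs above:

```python
import socket


def encode_command(*args):
    """Encode a Redis command as a RESP array of bulk strings."""
    out = [b"*%d\r\n" % len(args)]
    for arg in args:
        data = str(arg).encode("utf-8")
        out.append(b"$%d\r\n%s\r\n" % (len(data), data))
    return b"".join(out)


def queue_length(host="172.25.20.2", port=6379, key="logstash:redis", timeout=5):
    """Return LLEN of the Logstash list on the lab's Redis server (server2)."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(encode_command("LLEN", key))
        # An integer reply is short (":<n>\r\n"), so one recv is enough for this sketch.
        reply = sock.recv(64)
    if not reply.startswith(b":"):
        raise RuntimeError(f"unexpected reply: {reply!r}")
    return int(reply[1:].split(b"\r\n", 1)[0])
```

From the shell, `redis-cli -h 172.25.20.2 llen logstash:redis` gives the same number.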

[root@foundation20 ~]# ab -c 1 -n 100 http://172.25.20.1/index.html

This is ApacheBench, Version 2.3 <$Revision: 1430300 $>

Copyright 1996 Adam Twiss, Zeus Technology Ltd, http://www.zeustech.net/

Licensed to The Apache Software Foundation, http://www.apache.org/

Benchmarking 172.25.20.1 (be patient).....done

Server Software: nginx/1.8.0

Server Hostname: 172.25.20.1

Server Port: 80

Document Path: /index.html

Document Length: 612 bytes

Concurrency Level: 1

Time taken for tests: 0.031 seconds

Complete requests: 100

Failed requests: 0

Write errors: 0

Total transferred: 84400 bytes

HTML transferred: 61200 bytes

Requests per second: 3262.64 [#/sec] (mean)

Time per request: 0.306 [ms] (mean)

Time per request: 0.306 [ms] (mean, across all concurrent requests)

Transfer rate: 2689.13 [Kbytes/sec] received

Connection Times (ms)

min mean[+/-sd] median max

Connect: 0 0 0.3 0 2

Processing: 0 0 0.1 0 1

Waiting: 0 0 0.1 0 1

Total: 0 0 0.3 0 3

Percentage of the requests served within a certain time (ms)

50% 0

66% 0

75% 0

80% 0

90% 1

95% 1

98% 2

99% 3

100% 3 (longest request)

[root@foundation20 ~]#
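ab's summary lines follow from a few raw numbers: requests per second is completed requests divided by elapsed time, the mean time per request is concurrency × elapsed time / completed requests, and the transfer rate is bytes received / 1024 / elapsed time. A sketch of those formulas; because ab prints "Time taken" rounded to 0.031 s, the elapsed time is recovered here from the reported rate (100 / 3262.64 ≈ 0.03065 s), an assumption made only to reproduce the figures:

```python
def ab_summary(completed, concurrency, seconds, total_bytes):
    """Recompute ApacheBench's summary figures from raw totals."""
    return {
        "requests_per_sec": completed / seconds,
        # "Time per request (mean)": what each of the `concurrency` clients sees.
        "time_per_request_ms": concurrency * seconds * 1000 / completed,
        # "Time per request (mean, across all concurrent requests)".
        "time_per_request_all_ms": seconds * 1000 / completed,
        # "Transfer rate" counts every byte received (headers + body), in KiB/s.
        "transfer_kb_per_sec": total_bytes / 1024 / seconds,
    }


if __name__ == "__main__":
    elapsed = 100 / 3262.64  # unrounded "Time taken", recovered from the reported rate
    for name, value in ab_summary(100, 1, elapsed, 84400).items():
        print(f"{name}: {value:.2f}")
```

With `-c 1` the two "Time per request" lines coincide, as in the output above; the 100 requests also appear as new events in the `nginx-*` index once the indexer on server2 runs.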

[root@server2 conf.d]# /opt/logstash/bin/logstash -f /etc/logstash/conf.d/nginx.conf

Settings: Default pipeline workers: 1

Pipeline main started