Kubernetes Work Log (1): Offline Installation of a Kubernetes 1.7.4 Cluster

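After almost a month of work I have a basic working knowledge of Kubernetes. This post organizes and records what I learned so that the pieces fit together.

Master offline installation script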

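The master needs etcd, flannel, kube-apiserver, kube-controller-manager, kube-scheduler, and kubectl.

etcd and flannel are installed from RPM packages downloaded with the method described in "CentOS 7.2 Study Notes (2): download-only yum and installing multiple RPMs".

Kubernetes itself is installed and configured from binaries, version 1.7.4.

Download it from https://github.com/kubernetes/kubernetes/releases/download/v1.7.4/kubernetes.tar.gz

After extracting the archive, run ./kubernetes/cluster/get-kube-binaries.sh

to obtain kubernetes-server-linux-amd64.tar.gz.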
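Before carrying the archive to an offline machine, it may be worth confirming that it really contains the binaries the later scripts copy out of kubernetes/server/bin. The following is only a sketch: the helper name `missing_from_tarball` is hypothetical, and it assumes the archive layout implied by the `cp kubernetes/server/bin/{...}` lines in master.sh and node.sh.

```shell
#!/bin/sh
# Hypothetical helper (not part of the original scripts): print every expected
# binary that is NOT found under kubernetes/server/bin/ inside the tarball.
# Usage: missing_from_tarball TARBALL NAME...
missing_from_tarball() (
    tarball=$1
    shift
    # List the archive once; tar -t only reads it, nothing is extracted.
    listing=$(tar -tzf "$tarball") || exit 1
    for name in "$@"; do
        # -x matches the whole line, -F treats the path as a fixed string.
        if ! printf '%s\n' "$listing" | grep -qxF "kubernetes/server/bin/$name"; then
            echo "$name"
        fi
    done
)
```

Calling `missing_from_tarball kubernetes-server-linux-amd64.tar.gz kube-apiserver kube-controller-manager kube-scheduler kubectl kubelet kube-proxy` should print nothing when every binary is present.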

Master installation procedure

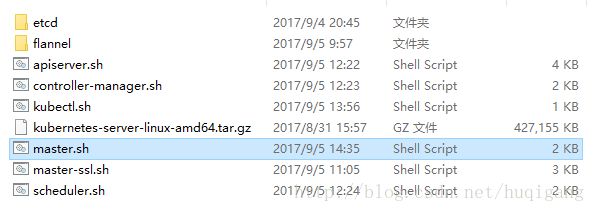

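- Upload everything in the Master folder to the master node.

- Run master.sh. (Example: sh master.sh 192.168.121.140 10.254.10.2)

The first argument is the master IP.

The second argument is the cluster IP of the cluster DNS component. I use 10.254.10.2; it must match the IP specified later in DNS_Service.yaml.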
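A service's cluster IP has to fall inside the API server's service range, and apiserver.sh later in this post sets `--service-cluster-ip-range=10.254.0.0/16`, which is why 10.254.10.2 works as the DNS address. As a rough sketch, the check could look like the following; the helper names `ip_to_int` and `in_cidr` are hypothetical, and the code assumes plain decimal octets without leading zeros.

```shell
#!/bin/sh
# Hypothetical helper: convert a dotted-quad IPv4 address to one integer.
ip_to_int() (
    old_ifs=$IFS
    IFS=.
    # Split the address on dots into $1..$4.
    set -- $1
    IFS=$old_ifs
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
)

# Hypothetical helper: succeed if address $1 lies inside network $2,
# where $2 is written as A.B.C.D/PREFIX.
in_cidr() (
    ip=$(ip_to_int "$1")
    net=$(ip_to_int "${2%/*}")
    prefix=${2#*/}
    # Build the netmask for the prefix length, e.g. /16 -> 0xFFFF0000.
    mask=$(( (0xFFFFFFFF << (32 - $prefix)) & 0xFFFFFFFF ))
    [ $(( $ip & $mask )) -eq $(( $net & $mask )) ]
)
```

For example, `in_cidr 10.254.10.2 10.254.0.0/16` succeeds, while an address such as the master IP 192.168.121.140 is rejected.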

master.sh

#!/bin/bash

set -o errexit

set -o nounset

set -o pipefail

echo "===================This node is a master!==================="

# Argument 1: master IP
MASTER_ADDRESS=$1
# Cluster IP of the DNS component
KUBE_MASTER_DNS=$2
# Install etcd
sh etcd/etcd.sh ${MASTER_ADDRESS}
# Extract kubernetes-server-linux-amd64.tar.gz

KUBE_BIN_DIR="/usr/bin"

if [ ! -d "kubernetes" ]; then

echo "===================unzip kubernetes.tar.gz file==================="

tar -zxvf kubernetes-server-linux-amd64.tar.gz

else

echo "===================kubernetes directory already exists==================="

fi

echo '===================Install kubernetes... ==================='

# Copy the binaries to /usr/bin

echo "Copy kube-apiserver,kube-controller-manager,kube-scheduler,kubectl to ${KUBE_BIN_DIR} "

cp kubernetes/server/bin/{kube-apiserver,kube-controller-manager,kube-scheduler,kubectl} ${KUBE_BIN_DIR}

chmod a+x ${KUBE_BIN_DIR}/kube*

echo "===================Copy Success==================="

# Generate the certificates
sh master-ssl.sh ${MASTER_ADDRESS} ${KUBE_MASTER_DNS}
# Configure kube-apiserver
sh apiserver.sh ${MASTER_ADDRESS}
# Configure kube-controller-manager
sh controller-manager.sh
# Configure kube-scheduler
sh scheduler.sh
# Configure kubectl
sh kubectl.sh ${MASTER_ADDRESS}
# Install the flannel overlay network
sh flannel/flannel.sh ${MASTER_ADDRESS}

systemctl daemon-reload

systemctl restart flanneld etcd kube-apiserver kube-scheduler kube-controller-manager

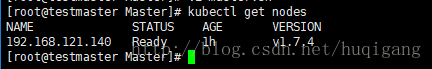

kubectl get -s http://${MASTER_ADDRESS}:8080 componentstatus

Execution order in master.sh:

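1) Install etcd. The argument is the master IP.

This runs etcd/etcd.sh.

2) Extract kubernetes-server-linux-amd64.tar.gz and copy the binaries to /usr/bin.

3) Generate the certificates.

This runs master-ssl.sh. Arguments: 1. master IP 2. DNS cluster IP

4) Configure kube-apiserver.

This runs apiserver.sh. Arguments: 1. master IP

5) Configure kube-controller-manager.

This runs controller-manager.sh.

6) Configure kube-scheduler.

This runs scheduler.sh.

7) Configure kubectl.

This runs kubectl.sh. Arguments: 1. master IP

8) Install flannel.

This runs flannel/flannel.sh. Arguments: 1. master IP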
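Once these steps have run, a quick way to see whether everything came up is to ask systemd about each unit. The helper below is a minimal sketch, not part of the original scripts; the name `inactive_units` is hypothetical and it relies only on `systemctl is-active`.

```shell
#!/bin/sh
# Hypothetical helper: print the name of every unit that is not active and
# return non-zero if at least one such unit was found.
inactive_units() (
    failed=0
    for unit in "$@"; do
        # is-active exits 0 only when the unit is currently running.
        if ! systemctl is-active --quiet "$unit"; then
            echo "$unit"
            failed=1
        fi
    done
    exit "$failed"
)
```

On the master this could be called as `inactive_units etcd flanneld kube-apiserver kube-controller-manager kube-scheduler`, which mirrors the list of services that master.sh restarts at the end.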

etcd.sh

#!/bin/bash
# The first argument is the master IP
# Disable SELinux and firewalld
echo '====================Disable selinux and firewalld...========'
# getenforce prints Enforcing, Permissive, or Disabled
if [ "$(getenforce)" = "Enforcing" ]; then

setenforce 0

fi

systemctl disable firewalld

systemctl stop firewalld

sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

echo '============Disable selinux and firewalld success!=========='

echo '=====================Install etcd... ======================='

rpm -ivh etcd/etcd-3.1.9-1.el7.x86_64.rpm

MASTER_ADDRESS=$1

sed -i 's/User=etcd//g' /usr/lib/systemd/system/etcd.service

echo "master_IP:"${MASTER_ADDRESS}

# Update the etcd configuration file
echo '==================update /etc/etcd/etcd.conf ...=================='
cat <<EOF >/etc/etcd/etcd.conf

#[member]

ETCD_NAME=default

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

#ETCD_WAL_DIR=""

#ETCD_SNAPSHOT_COUNT="10000"

#ETCD_HEARTBEAT_INTERVAL="100"

#ETCD_ELECTION_TIMEOUT="1000"

#ETCD_LISTEN_PEER_URLS="http://localhost:2380"

ETCD_LISTEN_CLIENT_URLS="http://${MASTER_ADDRESS}:2379,http://${MASTER_ADDRESS}:4001,http://127.0.0.1:2379,http://127.0.0.1:4001"

#ETCD_MAX_SNAPSHOTS="5"

#ETCD_MAX_WALS="5"

#ETCD_CORS=""

#

#[cluster]

#ETCD_INITIAL_ADVERTISE_PEER_URLS="http://localhost:2380"

#if you use different ETCD_NAME (e.g. test), set ETCD_INITIAL_CLUSTER value for this name, i.e. "test=http://..."

#ETCD_INITIAL_CLUSTER="default=http://localhost:2380"

#ETCD_INITIAL_CLUSTER_STATE="new"

#ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_ADVERTISE_CLIENT_URLS="http://${MASTER_ADDRESS}:2379,http://${MASTER_ADDRESS}:4001,http://127.0.0.1:2379,http://127.0.0.1:4001"

#ETCD_DISCOVERY=""

#ETCD_DISCOVERY_SRV=""

#ETCD_DISCOVERY_FALLBACK="proxy"

#ETCD_DISCOVERY_PROXY=""

#ETCD_STRICT_RECONFIG_CHECK="false"

#ETCD_AUTO_COMPACTION_RETENTION="0"

#

#[proxy]

#ETCD_PROXY="off"

EOF

echo '===================start etcd service... ==================='

systemctl daemon-reload

systemctl enable etcd

systemctl restart etcd

FLAG=$(etcdctl cluster-health|grep unhealth)

echo $(etcdctl cluster-health)

# An empty FLAG means no member was reported as unhealthy
if [ -z "${FLAG}" ]; then

echo '===================The etcd service is started!==================='

else

echo '===================The etcd service is failed!==================='

fi

# Allocate the IP range for the flannel network
etcdctl rm /coreos.com/network/config
etcdctl mk /coreos.com/network/config '{"Network":"10.0.0.0/16"}'

master-ssl.sh

#!/bin/bash

set -o errexit

set -o nounset

set -o pipefail

# Master IP
KUBE_MASTER_IP=$1
# Cluster IP of the DNS component
KUBE_MASTER_DNS=$2
# Hostname of the master node
MASTER_HOSTNAME=`hostname`
# Directory where the certificates are stored
MASTER_SSL="/etc/kubernetes/ssl"
echo '===================Create ssl for kube master node...==================='
echo "===================mkdir ${MASTER_SSL}...==================="
# Create the certificate directory

rm -rf /etc/kubernetes/

mkdir /etc/kubernetes/

rm -rf ${MASTER_SSL}

mkdir ${MASTER_SSL}

############### Generate the root certificate ################
echo "===================Create ca key...==================="
# Create the CA private key
openssl genrsa -out ${MASTER_SSL}/ca.key 2048
# Self-sign the CA certificate

openssl req -x509 -new -nodes -key ${MASTER_SSL}/ca.key -subj "/CN=${KUBE_MASTER_IP}" -days 10000 -out ${MASTER_SSL}/ca.crt

############### Generate the API server certificate and private key ###############
echo "===================Create kubernetes api server ssl key...==================="
cat <<EOF >${MASTER_SSL}/master_ssl.cnf

[req]

req_extensions = v3_req

distinguished_name = req_distinguished_name

[req_distinguished_name]

[ v3_req ]

basicConstraints = CA:FALSE

keyUsage = nonRepudiation, digitalSignature, keyEncipherment

subjectAltName = @alt_names

[alt_names]

DNS.1 = kubernetes

DNS.2 = kubernetes.default

DNS.3 = kubernetes.default.svc

DNS.4 = kubernetes.default.svc.cluster.local

DNS.5 = ${MASTER_HOSTNAME}

IP.1 = ${KUBE_MASTER_DNS}

IP.2 = ${KUBE_MASTER_IP}

EOF

# Generate the API server private key
openssl genrsa -out ${MASTER_SSL}/server.key 2048
# Generate the certificate signing request
openssl req -new -key ${MASTER_SSL}/server.key -subj "/CN=${MASTER_HOSTNAME}" -config ${MASTER_SSL}/master_ssl.cnf -out ${MASTER_SSL}/server.csr
# Sign it with the self-built CA

openssl x509 -req -in ${MASTER_SSL}/server.csr -CA ${MASTER_SSL}/ca.crt -CAkey ${MASTER_SSL}/ca.key -CAcreateserial -days 10000 -extensions v3_req -extfile ${MASTER_SSL}/master_ssl.cnf -out ${MASTER_SSL}/server.crt

echo "===================Create kubernetes controller manager and scheduler server ssl key...==================="

# Generate the certificate and private key shared by the Controller Manager and Scheduler processes
openssl genrsa -out ${MASTER_SSL}/cs_client.key 2048
# Generate the certificate signing request
openssl req -new -key ${MASTER_SSL}/cs_client.key -subj "/CN=${MASTER_HOSTNAME}" -out ${MASTER_SSL}/cs_client.csr
# Sign it with the self-built CA

openssl x509 -req -in ${MASTER_SSL}/cs_client.csr -CA ${MASTER_SSL}/ca.crt -CAkey ${MASTER_SSL}/ca.key -CAcreateserial -out ${MASTER_SSL}/cs_client.crt -days 10000

cat <<EOF >${MASTER_SSL}/kubeconfig
apiVersion: v1
kind: Config
users:
- name: controllermanager
  user:
    client-certificate: ${MASTER_SSL}/cs_client.crt
    client-key: ${MASTER_SSL}/cs_client.key
clusters:
- name: local
  cluster:
    certificate-authority: ${MASTER_SSL}/ca.crt
contexts:
- context:
    cluster: local
    user: controllermanager
  name: my-context
current-context: my-context
EOF

ls ${MASTER_SSL}

echo "Success!"

apiserver.sh

#!/bin/bash

set -o errexit

set -o nounset

set -o pipefail

MASTER_ADDRESS=$1

# Directory for configuration files
KUBE_CFG_DIR="/etc/kubernetes"
# Directory for the binaries
KUBE_BIN_DIR="/usr/bin"
# Directory for certificates
MASTER_SSL="/etc/kubernetes/ssl"
echo '===================Config kube-apiserver... ================'
# Common configuration: this file is shared by kube-apiserver, kube-controller-manager, and kube-scheduler
echo "===================Create ${KUBE_CFG_DIR}/config file==================="
cat <<EOF >${KUBE_CFG_DIR}/config

###

# kubernetes system config

#

# The following values are used to configure various aspects of all

# kubernetes services, including

#

# kube-apiserver.service

# kube-controller-manager.service

# kube-scheduler.service

# kubelet.service

# kube-proxy.service

# logging to stderr means we get it in the systemd journal

KUBE_LOGTOSTDERR="--logtostderr=true"

# journal message level, 0 is debug

KUBE_LOG_LEVEL="--v=0"

# Should this cluster be allowed to run privileged docker containers

KUBE_ALLOW_PRIV="--allow-privileged=true"

# How the controller-manager, scheduler, and proxy find the apiserver

KUBE_MASTER="--master=https://${MASTER_ADDRESS}:6443"

EOF

echo "===================Create ${KUBE_CFG_DIR}/config file success==================="
# kube-apiserver configuration
echo "===================Create ${KUBE_CFG_DIR}/apiserver file==================="
cat <<EOF >${KUBE_CFG_DIR}/apiserver

###

# kubernetes system config

#

# The following values are used to configure the kube-apiserver

#

# The address on the local server to listen to.

KUBE_API_ADDRESS="--bind-address=${MASTER_ADDRESS}"

KUBE_API_INSECURE_ADDRESS="--insecure-bind-address=${MASTER_ADDRESS} "

KUBE_ADVERTISE_ADDR="--advertise-address=${MASTER_ADDRESS}"

# The port on the local server to listen on.

KUBE_API_PORT="--secure-port=6443"

# Port minions listen on

KUBELET_PORT="--kubelet-port=10250"

# Comma separated list of nodes in the etcd cluster

KUBE_ETCD_SERVERS="--etcd-servers=http://${MASTER_ADDRESS}:2379"

# Address range to use for services

KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=10.254.0.0/16"

# default admission control policies

KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ServiceAccount,ResourceQuota"

# Add your own!

KUBE_API_ARGS="--client-ca-file=${MASTER_SSL}/ca.crt --tls-private-key-file=${MASTER_SSL}/server.key --tls-cert-file=${MASTER_SSL}/server.crt"

EOF

echo "===================Create /usr/lib/systemd/system/kube-apiserver.service file==================="

cat <<EOF >/usr/lib/systemd/system/kube-apiserver.service
[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
After=network.target
After=etcd.service

[Service]
EnvironmentFile=-${KUBE_CFG_DIR}/config
EnvironmentFile=-${KUBE_CFG_DIR}/apiserver
ExecStart=${KUBE_BIN_DIR}/kube-apiserver \\
    \$KUBE_LOGTOSTDERR \\
    \$KUBE_LOG_LEVEL \\
    \$KUBE_ETCD_SERVERS \\
    \$KUBE_API_ADDRESS \\
    \$KUBE_API_PORT \\
    \$KUBELET_PORT \\
    \$KUBE_ALLOW_PRIV \\
    \$KUBE_SERVICE_ADDRESSES \\
    \$KUBE_ADVERTISE_ADDR \\
    \$KUBE_API_INSECURE_ADDRESS \\
    \$KUBE_ADMISSION_CONTROL \\
    \$KUBE_API_ARGS
Restart=on-failure
Type=notify
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF

echo '===================Start kube-apiserver... ================='

systemctl daemon-reload

systemctl enable kube-apiserver

systemctl restart kube-apiserver

systemctl status kube-apiserver

controller-manager.sh

#!/bin/bash

set -o errexit

set -o nounset

set -o pipefail

# Directory for configuration files
KUBE_CFG_DIR="/etc/kubernetes"
# Directory for the binaries
KUBE_BIN_DIR="/usr/bin"
# Directory for certificates
MASTER_SSL="/etc/kubernetes/ssl"
echo '===================Config kube-controller-manager...========'
echo "===================Create ${KUBE_CFG_DIR}/controller-manager file==================="
cat <<EOF >${KUBE_CFG_DIR}/controller-manager

###

# The following values are used to configure the kubernetes controller-manager

# defaults from config and apiserver should be adequate

# Add your own!

KUBE_CONTROLLER_MANAGER_ARGS=" --service-account-private-key-file=${MASTER_SSL}/server.key --root-ca-file=${MASTER_SSL}/ca.crt --kubeconfig=${MASTER_SSL}/kubeconfig"

EOF

echo "===================Create /usr/lib/systemd/system/kube-controller-manager.service file==================="

cat <<EOF >/usr/lib/systemd/system/kube-controller-manager.service
[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/GoogleCloudPlatform/kubernetes

[Service]
EnvironmentFile=-${KUBE_CFG_DIR}/config
EnvironmentFile=-${KUBE_CFG_DIR}/controller-manager
ExecStart=${KUBE_BIN_DIR}/kube-controller-manager \\
    \$KUBE_LOGTOSTDERR \\
    \$KUBE_LOG_LEVEL \\
    \$KUBE_MASTER \\
    \$KUBE_CONTROLLER_MANAGER_ARGS
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF

echo '===================Start kube-controller-manager... ========'

systemctl daemon-reload

systemctl enable kube-controller-manager

systemctl restart kube-controller-manager

systemctl status kube-controller-manager

scheduler.sh

#!/bin/bash

set -o errexit

set -o nounset

set -o pipefail

# Directory for configuration files
KUBE_CFG_DIR="/etc/kubernetes"
# Directory for the binaries
KUBE_BIN_DIR="/usr/bin"
# Directory for certificates
MASTER_SSL="/etc/kubernetes/ssl"
echo '===================Config kube-scheduler...================='
echo "===================Create ${KUBE_CFG_DIR}/scheduler file==================="
cat <<EOF >${KUBE_CFG_DIR}/scheduler

###

# kubernetes scheduler config

# log dir

# Add your own!

KUBE_SCHEDULER_ARGS="--address=127.0.0.1 --kubeconfig=${MASTER_SSL}/kubeconfig"

EOF

echo "===================Create /usr/lib/systemd/system/kube-scheduler.service file==================="

cat <<EOF >/usr/lib/systemd/system/kube-scheduler.service
[Unit]
Description=Kubernetes Scheduler
Documentation=https://github.com/GoogleCloudPlatform/kubernetes

[Service]
EnvironmentFile=-${KUBE_CFG_DIR}/config
EnvironmentFile=-${KUBE_CFG_DIR}/scheduler
ExecStart=${KUBE_BIN_DIR}/kube-scheduler \\
    \$KUBE_LOGTOSTDERR \\
    \$KUBE_LOG_LEVEL \\
    \$KUBE_MASTER \\
    \$KUBE_SCHEDULER_ARGS
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF

echo '===================Start kube-scheduler... ================='

systemctl daemon-reload

systemctl enable kube-scheduler

systemctl restart kube-scheduler

systemctl status kube-scheduler

kubectl.sh

#!/bin/bash

set -o errexit

set -o nounset

set -o pipefail

MASTER_ADDRESS=$1

# Directory for certificates
MASTER_SSL="/etc/kubernetes/ssl"
# Set the cluster parameters
kubectl config set-cluster kubernetes \
    --certificate-authority=${MASTER_SSL}/ca.crt \
    --embed-certs=true \
    --server=https://${MASTER_ADDRESS}:6443
# Set the client authentication parameters
kubectl config set-credentials admin \
    --client-certificate=${MASTER_SSL}/cs_client.crt \
    --embed-certs=true \
    --client-key=${MASTER_SSL}/cs_client.key
# Set the context parameters
kubectl config set-context kubernetes \
    --cluster=kubernetes \
    --user=admin
# Set the default context
kubectl config use-context kubernetes

flannel.sh

#!/bin/bash
# The first argument is the master IP
# Disable SELinux and firewalld
echo '====================Disable selinux and firewalld...========'
# getenforce prints Enforcing, Permissive, or Disabled
if [ "$(getenforce)" = "Enforcing" ]; then

setenforce 0

fi

systemctl disable firewalld

systemctl stop firewalld

sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

echo '============Disable selinux and firewalld success!=========='

echo '=====================Install flannel... ======================='

rpm -ivh flannel/flannel-0.7.1-1.el7.x86_64.rpm

MASTER_ADDRESS=$1

echo "master_IP:"${MASTER_ADDRESS}

# Update the flanneld configuration file (it points flanneld at etcd)
echo '==================update /etc/sysconfig/flanneld ...=================='
cat <<EOF >/etc/sysconfig/flanneld

# Flanneld configuration options

# etcd url location. Point this to the server where etcd runs

FLANNEL_ETCD_ENDPOINTS="http://${MASTER_ADDRESS}:2379"

# etcd config key. This is the configuration key that flannel queries

# For address range assignment

FLANNEL_ETCD_PREFIX="/coreos.com/network"

# Any additional options that you want to pass

#FLANNEL_OPTIONS=""

EOF

echo '===================start flannel service... ==================='

systemctl daemon-reload

systemctl enable flanneld

systemctl restart flanneld

ip addr

Node offline installation script

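The node needs flannel, docker, kubectl, kube-proxy, and kubelet.

Node installation procedure

- Copy ca.crt and ca.key from /etc/kubernetes/ssl on the master node into the Node folder.

- Upload everything in the Node folder to the node.

- Run node.sh. (Example: sh node.sh 192.168.121.140 192.168.121.141 10.254.10.2)

The first argument is the master IP.

The second argument is the node IP.

The third argument is the cluster IP of the cluster DNS component. I use 10.254.10.2; it must match the IP specified later in DNS_Service.yaml.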
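Because node.sh runs with `set -o nounset`, a missing argument stops it with an unbound-variable error, but a mistyped address is only noticed much later. A small validation step could catch that up front. This is a sketch under assumptions: the helper name `is_ipv4` is hypothetical and the original scripts perform no such check.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if $1 looks like a dotted-quad IPv4 address.
is_ipv4() (
    # Reject empty input, stray characters, and leading or trailing dots.
    case $1 in
        ''|*[!0-9.]*|.*|*.) exit 1 ;;
    esac
    # Split on dots into the positional parameters.
    old_ifs=$IFS
    IFS=.
    set -- $1
    IFS=$old_ifs
    [ "$#" -eq 4 ] || exit 1
    for octet in "$@"; do
        # Each octet must be non-empty, at most three digits, and at most 255.
        [ -n "$octet" ] && [ "${#octet}" -le 3 ] && [ "$octet" -le 255 ] || exit 1
    done
)
```

One possible use is a loop near the top of node.sh, for example `for ip in "$@"; do is_ipv4 "$ip" || { echo "bad address: $ip"; exit 1; }; done`.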

node.sh

#!/bin/bash

set -o errexit

set -o nounset

set -o pipefail

# Directory for the binaries
KUBE_BIN_DIR="/usr/bin"
# Directory for configuration files
KUBE_CFG_DIR="/etc/kubernetes"

mkdir -p ${KUBE_CFG_DIR}

echo "===================This node is a node!==================="

#master ip

MASTER_ADDRESS=$1

#node ip

NODE_ADDRESS=$2

#DNS cluster ip

KUBE_MASTER_DNS=$3

sh docker/docker.sh

if [ ! -d "kubernetes" ]; then

echo "===================unzip kubernetes.tar.gz file==================="

tar -zxvf kubernetes-server-linux-amd64.tar.gz

else

echo "===================kubernetes directory already exists==================="

fi

echo '===================Install kubernetes... ==================='

echo "===================Copy kubectl,kube-proxy,kubelet to ${KUBE_BIN_DIR}==================="

cp kubernetes/server/bin/{kubectl,kube-proxy,kubelet} ${KUBE_BIN_DIR}

chmod a+x ${KUBE_BIN_DIR}/kube*

cp sh/{mk-docker-opts.sh,remove-docker0.sh} ${KUBE_BIN_DIR}

chmod a+x ${KUBE_BIN_DIR}/mk-docker-opts.sh

chmod a+x ${KUBE_BIN_DIR}/remove-docker0.sh

echo "===================Copy Success==================="

# Generate the certificates
sh node-ssl.sh ${MASTER_ADDRESS} ${NODE_ADDRESS} ${KUBE_MASTER_DNS}
# Configure kubelet
sh kubelet.sh ${MASTER_ADDRESS} ${NODE_ADDRESS} ${KUBE_MASTER_DNS}
# Configure kube-proxy
sh kube-proxy.sh ${MASTER_ADDRESS} ${NODE_ADDRESS}
# Install the flannel overlay network
sh flannel/flannel.sh ${MASTER_ADDRESS}
systemctl restart flanneld docker kubelet kube-proxy

Execution order in node.sh:

1) Install docker.

This runs docker/docker.sh.

2) Extract kubernetes-server-linux-amd64.tar.gz and copy the binaries to /usr/bin.

3) Generate the certificates.

This runs node-ssl.sh. Arguments: 1. master IP 2. node IP 3. DNS cluster IP

4) Configure kubelet.

This runs kubelet.sh. Arguments: 1. master IP 2. node IP 3. DNS cluster IP

5) Configure kube-proxy.

This runs kube-proxy.sh. Arguments: 1. master IP 2. node IP

6) Install flannel.

This runs flannel/flannel.sh. Arguments: 1. master IP
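After the last step, the kubelet may need a little time before the node shows up on the master, so a single check right away can fail spuriously. A generic retry wrapper is one way to wait for it. The sketch below is an illustration only; the name `retry` and its argument order are assumptions, not something the original scripts provide.

```shell
#!/bin/sh
# Hypothetical helper: run a command up to $1 times, sleeping $2 seconds
# between attempts, and succeed as soon as the command succeeds.
# Usage: retry ATTEMPTS DELAY COMMAND [ARG...]
retry() (
    attempts=$1
    delay=$2
    shift 2
    i=1
    while [ "$i" -le "$attempts" ]; do
        if "$@"; then
            exit 0
        fi
        # Only sleep when another attempt will follow.
        [ "$i" -lt "$attempts" ] && sleep "$delay"
        i=$((i + 1))
    done
    exit 1
)
```

Run on the master, something like `retry 30 2 kubectl get -s http://192.168.121.140:8080 node 192.168.121.141` would wait up to about a minute for the example node; the node name here assumes the `--hostname-override=${NODE_ADDRESS}` setting that kubelet.sh writes.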

mk-docker-opts.sh

#!/bin/bash

# Copyright 2014 The Kubernetes Authors.

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

# Generate Docker daemon options based on flannel env file.

# exit on any error

set -e

usage() {

echo "$0 [-f FLANNEL-ENV-FILE] [-d DOCKER-ENV-FILE] [-i] [-c] [-m] [-k COMBINED-KEY]

Generate Docker daemon options based on flannel env file

OPTIONS:

-f Path to flannel env file. Defaults to /run/flannel/subnet.env

-d Path to Docker env file to write to. Defaults to /run/docker_opts.env

-i Output each Docker option as individual var. e.g. DOCKER_OPT_MTU=1500

-c Output combined Docker options into DOCKER_OPTS var

-k Set the combined options key to this value (default DOCKER_OPTS=)

-m Do not output --ip-masq (useful for older Docker version)

" >/dev/stderr

exit 1

}

flannel_env="/run/flannel/subnet.env"

docker_env="/run/docker_opts.env"

combined_opts_key="DOCKER_OPTS"

indiv_opts=false

combined_opts=false

ipmasq=true

while getopts "f:d:icmk:" opt; do

case $opt in

f)

flannel_env=$OPTARG

;;

d)

docker_env=$OPTARG

;;

i)

indiv_opts=true

;;

c)

combined_opts=true

;;

m)

ipmasq=false

;;

k)

combined_opts_key=$OPTARG

;;

\?)

usage

;;

esac

done

if [[ $indiv_opts = false ]] && [[ $combined_opts = false ]]; then

indiv_opts=true

combined_opts=true

fi

if [[ -f "$flannel_env" ]]; then

source $flannel_env

fi

if [[ -n "$FLANNEL_SUBNET" ]]; then

DOCKER_OPT_BIP="--bip=$FLANNEL_SUBNET"

fi

if [[ -n "$FLANNEL_MTU" ]]; then

DOCKER_OPT_MTU="--mtu=$FLANNEL_MTU"

fi

if [[ "$FLANNEL_IPMASQ" = true ]] && [[ $ipmasq = true ]]; then

DOCKER_OPT_IPMASQ="--ip-masq=false"

fi

eval docker_opts="\$${combined_opts_key}"

docker_opts+=" "

echo -n "" >$docker_env

for opt in $(compgen -v DOCKER_OPT_); do

eval val=\$$opt

if [[ "$indiv_opts" = true ]]; then

echo "$opt=\"$val\"" >>$docker_env

fi

docker_opts+="$val "

done

if [[ "$combined_opts" = true ]]; then

echo "${combined_opts_key}=\"${docker_opts}\"" >>$docker_env

fi

remove-docker0.sh

#!/bin/bash

# Copyright 2014 The Kubernetes Authors.

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

# Delete default docker bridge, so that docker can start with flannel network.

# exit on any error

set -e

rc=0

ip link show docker0 >/dev/null 2>&1 || rc="$?"

if [[ "$rc" -eq "0" ]]; then

ip link set dev docker0 down

ip link delete docker0

fi

docker.sh

#!/bin/bash

set -o errexit

set -o nounset

set -o pipefail

# Disable SELinux and firewalld
echo '====================Disable selinux and firewalld...========'
# getenforce prints Enforcing, Permissive, or Disabled
if [ "$(getenforce)" = "Enforcing" ]; then

setenforce 0

fi

systemctl disable firewalld

systemctl stop firewalld

sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

echo '============Disable selinux and firewalld success!=========='

echo "===================Start Install docker!==================="

rpm -ivh --force --nodeps docker/*.rpm

systemctl daemon-reload

systemctl start docker.service

systemctl enable docker.service

docker version

node-ssl.sh

#!/bin/bash

set -o errexit

set -o nounset

set -o pipefail

#master ip

KUBE_MASTER_IP=$1

#node ip

KUBE_NODE_IP=$2

# Cluster IP of the DNS component
KUBE_MASTER_DNS=$3
# Node hostname
MASTER_HOSTNAME=`hostname`
# Directory where the certificates are stored
MASTER_SSL="/etc/kubernetes/ssl"
echo '===================Create ssl for kube node...==================='
echo "===================mkdir ${MASTER_SSL}...==================="
# Create the certificate directory

rm -rf ${MASTER_SSL}

mkdir ${MASTER_SSL}

cp {ca.key,ca.crt} ${MASTER_SSL}

openssl genrsa -out ${MASTER_SSL}/kubelet_client.key 2048

openssl req -new -key ${MASTER_SSL}/kubelet_client.key -subj "/CN=${KUBE_NODE_IP}" -out ${MASTER_SSL}/kubelet_client.csr

openssl x509 -req -in ${MASTER_SSL}/kubelet_client.csr -CA ${MASTER_SSL}/ca.crt -CAkey ${MASTER_SSL}/ca.key -CAcreateserial -out ${MASTER_SSL}/kubelet_client.crt -days 10000

cat <<EOF >${MASTER_SSL}/kubeconfig
apiVersion: v1
kind: Config
users:
- name: kubelet
  user:
    client-certificate: ${MASTER_SSL}/kubelet_client.crt
    client-key: ${MASTER_SSL}/kubelet_client.key
clusters:
- name: local
  cluster:
    certificate-authority: ${MASTER_SSL}/ca.crt
contexts:
- context:
    cluster: local
    user: kubelet
  name: my-context
current-context: my-context
EOF

echo "===================Success!==================="

ls ${MASTER_SSL}

kubelet.sh

#!/bin/bash

set -o errexit

set -o nounset

set -o pipefail

MASTER_ADDRESS=$1

NODE_ADDRESS=$2

CLUSTER_DNS=$3

# Directory for the binaries
KUBE_BIN_DIR="/usr/bin"
# Directory for configuration files
KUBE_CFG_DIR="/etc/kubernetes"
# Directory for certificates
MASTER_SSL="/etc/kubernetes/ssl"
mkdir -p /var/lib/kubelet
mkdir -p /var/log/kubernetes
echo '===================Config kubelet... ================'
# Common configuration: this file is shared by kubelet and kube-proxy
echo "===================Create ${KUBE_CFG_DIR}/config file==================="
cat <<EOF >${KUBE_CFG_DIR}/config

###

# kubernetes system config

#

# The following values are used to configure various aspects of all

# kubernetes services, including

#

# kube-apiserver.service

# kube-controller-manager.service

# kube-scheduler.service

# kubelet.service

# kube-proxy.service

# logging to stderr means we get it in the systemd journal

KUBE_LOGTOSTDERR="--logtostderr=false"

# journal message level, 0 is debug

KUBE_LOG_LEVEL="--v=0"

# Should this cluster be allowed to run privileged docker containers

KUBE_ALLOW_PRIV="--allow-privileged=true"

# How the controller-manager, scheduler, and proxy find the apiserver

KUBE_MASTER="--master=https://${MASTER_ADDRESS}:6443"

EOF

echo "===================Create ${KUBE_CFG_DIR}/config file success==================="
# kubelet configuration
echo "===================Create ${KUBE_CFG_DIR}/kubelet file==================="
cat <<EOF >${KUBE_CFG_DIR}/kubelet

# --address=0.0.0.0: The IP address for the Kubelet to serve on (set to 0.0.0.0 for all interfaces)

KUBELET_ADDRESS="--address=${NODE_ADDRESS}"

# --port=10250: The port for the Kubelet to serve on. Note that "kubectl logs" will not work if you set this flag.

# NODE_PORT="--port=10250"

# --hostname-override="": If non-empty, will use this string as identification instead of the actual hostname.

KUBELET_HOSTNAME="--hostname-override=${NODE_ADDRESS}"

# --api-servers=[]: List of Kubernetes API servers for publishing events,

# and reading pods and services. (ip:port), comma separated.

KUBELET_API_SERVER="--api-servers=https://${MASTER_ADDRESS}:6443"

# DNS info

#kubelet pod infra container

KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=registry.access.redhat.com/rhel7/pod-infrastructure:latest"

# Add your own!

KUBELET_ARGS="--cgroup-driver=systemd --cluster_dns=${CLUSTER_DNS} --cluster_domain=cluster.local --log-dir=/var/log/kubernetes --v=2 --kubeconfig=${MASTER_SSL}/kubeconfig"

EOF

echo "===================Create /usr/lib/systemd/system/kubelet.service file==================="

cat <<EOF >/usr/lib/systemd/system/kubelet.service
[Unit]
Description=Kubernetes Kubelet
After=docker.service
Requires=docker.service

[Service]
WorkingDirectory=/var/lib/kubelet
EnvironmentFile=-${KUBE_CFG_DIR}/config
EnvironmentFile=-${KUBE_CFG_DIR}/kubelet
ExecStart=${KUBE_BIN_DIR}/kubelet \\
    \$KUBE_LOGTOSTDERR \\
    \$KUBE_LOG_LEVEL \\
    \$KUBELET_API_SERVER \\
    \$KUBELET_ADDRESS \\
    \$KUBELET_PORT \\
    \$KUBELET_HOSTNAME \\
    \$KUBE_ALLOW_PRIV \\
    \$KUBELET_POD_INFRA_CONTAINER \\
    \$KUBELET_ARGS
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

echo '===================Start kubelet... ================='

systemctl daemon-reload

systemctl enable kubelet

systemctl restart kubelet

systemctl status kubelet

kube-proxy.sh

#!/bin/bash

set -o errexit

set -o nounset

set -o pipefail

MASTER_ADDRESS=$1

NODE_ADDRESS=$2

# Directory for the binaries
KUBE_BIN_DIR="/usr/bin"
# Directory for configuration files
KUBE_CFG_DIR="/etc/kubernetes"
# Directory for certificates
MASTER_SSL="/etc/kubernetes/ssl"
echo '===================Config kube-proxy... ================'
echo "===================Create ${KUBE_CFG_DIR}/proxy file==================="
cat <<EOF >${KUBE_CFG_DIR}/proxy

# --hostname-override="": If non-empty, will use this string as identification instead of the actual hostname.

# Add your own!

KUBE_PROXY_ARGS="--hostname-override=${NODE_ADDRESS} --master=https://${MASTER_ADDRESS}:6443 --kubeconfig=${MASTER_SSL}/kubeconfig"

EOF

echo "===================Create ${KUBE_CFG_DIR}/proxy file success==================="

echo "===================Create /usr/lib/systemd/system/kube-proxy.service file==================="

cat <<EOF >/usr/lib/systemd/system/kube-proxy.service
[Unit]
Description=Kubernetes Proxy
After=network.target

[Service]
EnvironmentFile=-${KUBE_CFG_DIR}/config
EnvironmentFile=-${KUBE_CFG_DIR}/proxy
ExecStart=${KUBE_BIN_DIR}/kube-proxy \\
    \$KUBE_LOGTOSTDERR \\
    \$KUBE_LOG_LEVEL \\
    \$KUBE_MASTER \\
    \$KUBE_PROXY_ARGS
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

echo "===================Start kube-proxy... ================="

systemctl daemon-reload

systemctl enable kube-proxy

systemctl restart kube-proxy

systemctl status kube-proxy

flannel.sh

#!/bin/bash
# The first argument is the master IP
# Disable SELinux and firewalld
echo "====================Disable selinux and firewalld...========"
# getenforce prints Enforcing, Permissive, or Disabled
if [ "$(getenforce)" = "Enforcing" ]; then

setenforce 0

fi

systemctl disable firewalld

systemctl stop firewalld

sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

echo "============Disable selinux and firewalld success!=========="

echo "=====================Install flannel... ======================="

rpm -ivh flannel/flannel-0.7.1-1.el7.x86_64.rpm

MASTER_ADDRESS=$1

echo "master_IP:"${MASTER_ADDRESS}

# Update the flanneld configuration file (it points flanneld at etcd)
echo '==================update /etc/sysconfig/flanneld ...=================='
cat <<EOF >/etc/sysconfig/flanneld

# Flanneld configuration options

# etcd url location. Point this to the server where etcd runs

FLANNEL_ETCD_ENDPOINTS="http://${MASTER_ADDRESS}:2379"

# etcd config key. This is the configuration key that flannel queries

# For address range assignment

FLANNEL_ETCD_PREFIX="/coreos.com/network"

# Any additional options that you want to pass

#FLANNEL_OPTIONS=""

EOF

echo '===================start flannel service... ==================='

ip link set docker0 down

ip link delete docker0

systemctl daemon-reload

systemctl enable flanneld

systemctl restart flanneld docker

ip addr
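The final `ip addr` is there to eyeball whether the flannel and docker0 interfaces ended up in the same subnet. The same information can be read from the flannel env file that mk-docker-opts.sh consumes. The helper below is a reduced sketch of that idea, not a replacement for mk-docker-opts.sh: the name `flannel_docker_opts` is hypothetical, and the default path is the one stated in the mk-docker-opts.sh usage text.

```shell
#!/bin/sh
# Hypothetical helper: read a flannel env file and print the Docker bridge
# options that correspond to it (--bip and --mtu only).
flannel_docker_opts() (
    env_file=${1:-/run/flannel/subnet.env}
    [ -f "$env_file" ] || exit 1
    # The file consists of KEY=VALUE lines, so it can be sourced directly.
    . "$env_file"
    opts=""
    if [ -n "${FLANNEL_SUBNET:-}" ]; then
        opts="--bip=$FLANNEL_SUBNET"
    fi
    if [ -n "${FLANNEL_MTU:-}" ]; then
        # Add a separating space only when --bip is already present.
        opts="${opts:+$opts }--mtu=$FLANNEL_MTU"
    fi
    echo "$opts"
)
```

Comparing the `--bip` value it prints with the docker0 address shown by `ip addr` gives a quick indication of whether docker picked up the flannel subnet.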