Crawler Notes (6): Crawler in Practice: Scraping Zhihu Content, Saving It to a Database, and Exporting It to Excel

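The previous posts in this series covered how to crawl a site's content and images and save them to a database.

Today we do a hands-on exercise: crawl the top questions and answers under Zhihu's root topic, save them to the database, and finally export everything in the database to Excel. We build on the code from the earlier posts, so code that has not changed is not repeated here. If anything is unfamiliar, see the earlier posts, or download the complete code from the GitHub repository linked at the end of this post.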

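1. ExcelUtils: Excel export utility class

First, add the org.apache.poi jar to the project. The code below uses the older HSSF constants such as HSSFCellStyle.BORDER_THIN and HSSFFont.BOLDWEIGHT_BOLD, so it assumes a POI 3.x release; newer POI releases replaced these constants with enums such as BorderStyle.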

package com.dyw.crawler.util;

import org.apache.poi.hssf.usermodel.*;

import org.apache.poi.hssf.util.HSSFColor;

import java.io.IOException;

import java.io.OutputStream;

import java.lang.reflect.Field;

import java.lang.reflect.Method;

import java.text.SimpleDateFormat;

import java.util.Collection;

import java.util.Date;

import java.util.Iterator;

import java.util.regex.Matcher;

import java.util.regex.Pattern;

/**

* Excel utility class

* Created by dyw on 2017/9/14.

*/

public class ExcelUtils<T> {

public void exportExcel(String title, Collection<T> dataset, OutputStream out) {

exportExcel(title, null, dataset, out, "yyyy-MM-dd");

}

public void exportExcel(String title, String[] headers, Collection<T> dataset, OutputStream out) {

exportExcel(title, headers, dataset, out, "yyyy-MM-dd");

}

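/**

* A general-purpose method that uses Java reflection to write data held in a Java collection, provided it meets certain conditions, to the given I/O device as an Excel document

*

* @param title sheet title

* @param headers array of column header names

* @param dataset the data to display; the collection must contain objects of a JavaBean-style class. The

* supported JavaBean property types are the primitive types plus String, Date, and byte[] (image data)

* @param out stream bound to the output device; the Excel document can be written to a local file or to the network

* @param pattern output format for date values, if any. Defaults to "yyyy-MM-dd"

*/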

public void exportExcel(String title, String[] headers, Collection dataset, OutputStream out, String pattern) {

// create a workbook

HSSFWorkbook workbook = new HSSFWorkbook();

// create a sheet

HSSFSheet sheet = workbook.createSheet(title);

// set the default column width to 15 characters

sheet.setDefaultColumnWidth((short) 15);

// create a style

HSSFCellStyle style = workbook.createCellStyle();

// configure the style

style.setFillForegroundColor(HSSFColor.SKY_BLUE.index);

style.setFillPattern(HSSFCellStyle.SOLID_FOREGROUND);

style.setBorderBottom(HSSFCellStyle.BORDER_THIN);

style.setBorderLeft(HSSFCellStyle.BORDER_THIN);

style.setBorderRight(HSSFCellStyle.BORDER_THIN);

style.setBorderTop(HSSFCellStyle.BORDER_THIN);

style.setAlignment(HSSFCellStyle.ALIGN_CENTER);

// create a font

HSSFFont font = workbook.createFont();

font.setColor(HSSFColor.VIOLET.index);

font.setFontHeightInPoints((short) 12);

font.setBoldweight(HSSFFont.BOLDWEIGHT_BOLD);

// apply the font to the current style

style.setFont(font);

// create and configure a second style

HSSFCellStyle style2 = workbook.createCellStyle();

style2.setFillForegroundColor(HSSFColor.LIGHT_YELLOW.index);

style2.setFillPattern(HSSFCellStyle.SOLID_FOREGROUND);

style2.setBorderBottom(HSSFCellStyle.BORDER_THIN);

style2.setBorderLeft(HSSFCellStyle.BORDER_THIN);

style2.setBorderRight(HSSFCellStyle.BORDER_THIN);

style2.setBorderTop(HSSFCellStyle.BORDER_THIN);

style2.setAlignment(HSSFCellStyle.ALIGN_CENTER);

style2.setVerticalAlignment(HSSFCellStyle.VERTICAL_CENTER);

// create another font

HSSFFont font2 = workbook.createFont();

font2.setBoldweight(HSSFFont.BOLDWEIGHT_NORMAL);

// apply the font to the current style

style2.setFont(font2);

// declare the top-level drawing manager

HSSFPatriarch patriarch = sheet.createDrawingPatriarch();

// define the size and position of the comment; see the POI documentation for details

HSSFComment comment = patriarch.createComment(new HSSFClientAnchor(0,

0, 0, 0, (short) 4, 2, (short) 6, 5));

// set the comment text

comment.setString(new HSSFRichTextString("可以在POI中添加注释!"));

// set the comment author; it shows in the status bar when the mouse hovers over the cell

comment.setAuthor("leno");

// create the header row

HSSFRow row = sheet.createRow(0);

// headers is null when the three-argument overload is used, so guard against it

for (short i = 0; headers != null && i < headers.length; i++) {

HSSFCell cell = row.createCell(i);

cell.setCellStyle(style);

HSSFRichTextString text = new HSSFRichTextString(headers[i]);

cell.setCellValue(text);

}

// iterate over the data set and create the data rows

Iterator it = dataset.iterator();

int index = 0;

while (it.hasNext()) {

index++;

row = sheet.createRow(index);

T t = (T) it.next();

// use reflection to call each getXxx() method, in the order the JavaBean fields are declared, and read the property values

Field[] fields = t.getClass().getDeclaredFields();

for (short i = 0; i < fields.length; i++) {

HSSFCell cell = row.createCell(i);

cell.setCellStyle(style2);

Field field = fields[i];

String fieldName = field.getName();

String getMethodName = "get"

+ fieldName.substring(0, 1).toUpperCase()

+ fieldName.substring(1);

try {

Class tCls = t.getClass();

Method getMethod = tCls.getMethod(getMethodName, new Class[]{});

Object value = getMethod.invoke(t, new Object[]{});

// check the value's type and cast accordingly

String textValue = null;

// if (value instanceof Integer) {

// int intValue = (Integer) value;

// cell.setCellValue(intValue);

// } else if (value instanceof Float) {

// float fValue = (Float) value;

// textValue = new HSSFRichTextString(

// String.valueOf(fValue));

// cell.setCellValue(textValue);

// } else if (value instanceof Double) {

// double dValue = (Double) value;

// textValue = new HSSFRichTextString(

// String.valueOf(dValue));

// cell.setCellValue(textValue);

// } else if (value instanceof Long) {

// long longValue = (Long) value;

// cell.setCellValue(longValue);

// }

if (value instanceof Boolean) {

boolean bValue = (Boolean) value;

textValue = "男";

if (!bValue) {

textValue = "女";

}

} else if (value instanceof Date) {

Date date = (Date) value;

SimpleDateFormat sdf = new SimpleDateFormat(pattern);

textValue = sdf.format(date);

} else if (value instanceof byte[]) {

// when there is an image, set the row height to 60 points

row.setHeightInPoints(60);

// set the width of the image column to 80px; note the unit conversion here

sheet.setColumnWidth(i, (short) (35.7 * 80));

// sheet.autoSizeColumn(i);

byte[] bsValue = (byte[]) value;

HSSFClientAnchor anchor = new HSSFClientAnchor(0, 0,

1023, 255, (short) 6, index, (short) 6, index);

anchor.setAnchorType(2);

patriarch.createPicture(anchor, workbook.addPicture(

bsValue, HSSFWorkbook.PICTURE_TYPE_JPEG));

} else {

// treat every other data type as a plain string

if (null == value) {

textValue = "";

} else {

textValue = value.toString();

}

}

// if the value is not image data, use a regular expression to check whether textValue is entirely numeric

if (textValue != null) {

Pattern p = Pattern.compile("^\\d+(\\.\\d+)?$");

Matcher matcher = p.matcher(textValue);

if (matcher.matches()) {

// numeric text is written as a double

cell.setCellValue(Double.parseDouble(textValue));

} else {

HSSFRichTextString richString = new HSSFRichTextString(

textValue);

HSSFFont font3 = workbook.createFont();

font3.setColor(HSSFColor.BLUE.index);

richString.applyFont(font3);

cell.setCellValue(richString);

}

}

} catch (Exception e) {

e.printStackTrace();

}

}

}

try {

workbook.write(out);

} catch (IOException e) {

e.printStackTrace();

}

}

}
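
The reflection step inside exportExcel can be tried on its own, without POI. The following is a minimal sketch, not the author's code: the class GetterReflectionSketch, the bean Question, and the method readRow are hypothetical names introduced only for illustration. It builds each getter name from the field name, invokes the getter, and collects the values as text, which is the same idea ExcelUtils uses to fill one row.

```java
import java.lang.reflect.Field;
import java.lang.reflect.Method;
import java.util.ArrayList;
import java.util.List;

class GetterReflectionSketch {

    // Hypothetical two-field bean, used only for this illustration.
    public static class Question {
        private final String title;
        private final int answerCount;

        public Question(String title, int answerCount) {
            this.title = title;
            this.answerCount = answerCount;
        }

        public String getTitle() {
            return title;
        }

        public int getAnswerCount() {
            return answerCount;
        }
    }

    // Reads every declared field through its public getXxx() method
    // and returns the values as text, one entry per field.
    static List<String> readRow(Object bean) {
        List<String> cells = new ArrayList<>();
        try {
            for (Field field : bean.getClass().getDeclaredFields()) {
                if (field.isSynthetic()) {
                    continue; // skip compiler- or tool-generated fields
                }
                String name = field.getName();
                String getter = "get" + name.substring(0, 1).toUpperCase() + name.substring(1);
                Method method = bean.getClass().getMethod(getter);
                Object value = method.invoke(bean);
                cells.add(value == null ? "" : value.toString());
            }
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException("Bean does not follow the getXxx() convention", e);
        }
        return cells;
    }

    public static void main(String[] args) {
        System.out.println(readRow(new Question("Life hacks", 344)));
    }
}
```

One caveat worth keeping in mind: the Javadoc of Class.getDeclaredFields() does not promise any particular order. Common JVMs return the fields in declaration order, which is what lets the column headers line up with the bean fields, but that is observed behavior rather than a guarantee.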

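2. RegularCollection: regular expression collection class

Different kinds of content need different regular expressions when extracting from a site, so we pull all of the regular expressions out into a separate class.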

package com.dyw.crawler.file;

/**

* Regular expression collection class

* Created by dyw on 2017/9/14.

*/

public class RegularCollection {

// matches an img tag

public static final String IMGURL_REG = "<img.*src=(.*?)[^>]*?>";

// matches an href attribute

public static final String AURL_REG = "href=\"(.*?)\"";

// matches a URL that starts with a scheme such as http and ends with png|jpg|bmp|gif

public static final String IMGSRC_REG = "[a-zA-Z]+://[^\\s]*(?:png|jpg|bmp|gif)";

// matches a path that does not start with http and ends with png|jpg|bmp|gif

public static final String IMGSRC_REG1 = "/[^\\s]*(?:png|jpg|bmp|gif)";

/* **************************Zhihu************************** */

// matches a Zhihu question link

public static final String ZHIHU_QUESTION_link = "_link.*target";

// matches a Zhihu question URI

public static final String ZHIHU_QUESTION_URI = "/.*[0-9]{8}";

// matches the title. Match result: Header-title">有哪些值得一提的生活窍门?< which still needs substring(14, title.length() - 1);

public static final String ZHIHU_TITLE = "Header-title.*?<";

// matches the question's current answer count. Match result: List-headerText">344 个回答

// the trailing "</" is inferred from the substring(23, length - 2) trim applied in main

public static final String ZHIHU_ANSWER = "List-headerText.*?</";

// matches the follower count and the view count. Match result: NumberBoard-value">4894

// the trailing "</" is inferred from the substring(19, length - 2) trim applied in main

public static final String ZHIHU_CONCERN = "NumberBoard-value.*?</";

// matches the answer content; the match still needs substring(19, title.length() - 1);

public static final String ZHIHU_ANSWER_CONTENT = "CopyrightRichText-richText\" itemprop.*?";

// matches the answer's upvote count; the match still needs substring(40, title.length() - 1);

public static final String ZHIHU_LIKE_COUNT = "AnswerItem-extraInfo.*?";

}
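
Several of the Zhihu patterns above return more text than is wanted, so the caller has to trim each match with hard-coded substring offsets. A capture group is one way to avoid counting characters. The sketch below is an alternative under stated assumptions, not the author's code: the class CaptureGroupSketch, the method firstGroups, and the sample HTML fragments (including the h1 and strong tags) are hypothetical; only the Header-title"> and NumberBoard-value"> anchors come from the match results quoted in the comments above.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class CaptureGroupSketch {

    // Same anchors as ZHIHU_TITLE and ZHIHU_CONCERN, but the wanted text is captured in group 1.
    static final Pattern TITLE = Pattern.compile("Header-title\">(.*?)<");
    static final Pattern NUMBER = Pattern.compile("NumberBoard-value\">(.*?)<");

    // Returns group 1 of every match, in document order.
    static List<String> firstGroups(Pattern pattern, String html) {
        List<String> result = new ArrayList<>();
        Matcher matcher = pattern.matcher(html);
        while (matcher.find()) {
            result.add(matcher.group(1));
        }
        return result;
    }

    public static void main(String[] args) {
        // Hypothetical fragments shaped like the match results quoted in the comments.
        String titleHtml = "<h1 class=\"QuestionHeader-title\">Which life hacks are worth mentioning?</h1>";
        String boardHtml = "<strong class=\"NumberBoard-value\">4894</strong>"
                + "<strong class=\"NumberBoard-value\">120000</strong>";

        System.out.println(firstGroups(TITLE, titleHtml));
        System.out.println(firstGroups(NUMBER, boardHtml));
    }
}
```

With this shape the offsets 14, 19, and 23 used in the main method are no longer needed, and a small change in the surrounding markup is less likely to cut into the extracted value.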

3. ExcelTitleConllection: Excel header collection class

package com.dyw.crawler.file;

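/**

* Excel header collection class

* Created by dyw on 2017/9/17.

*/

public class ExcelTitleConllection {

// column headers: title, content, follower count, view count, answer count,

// then answer / upvote count / comment count for answers one to five, and finally the crawl time

public static final String[] ZHIHUTITLE = {"标题", "内容", "关注者数", "浏览数", "答案数",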

"答案一", "点赞数一","评论数一",

"答案二", "点赞数二","评论数二",

"答案三", "点赞数三","评论数三",

"答案四", "点赞数四","评论数四",

"答案五", "点赞数五","评论数五","爬取时间"};

}

4. URICollection: collection of the URIs to crawl

package com.dyw.crawler.file;

/**

* Collection of the URIs to crawl

* Created by dyw on 2017/9/14.

*/

public class URICollection {

/* **************************Zhihu************************** */

/**

* Zhihu site URL

*/

public static final String ZHIHU = "https://www.zhihu.com";

/**

* URL of Zhihu's root topic

*/

public static final String ZHIHUTOPIC = "https://www.zhihu.com/topic/19776749/hot";

}

5. Main method

package com.dyw.crawler.project;

import com.dyw.crawler.file.ExcelTitleConllection;

import com.dyw.crawler.file.RegularCollection;

import com.dyw.crawler.file.URICollection;

import com.dyw.crawler.model.Zhihu;

import com.dyw.crawler.util.ConnectionPool;

import com.dyw.crawler.util.CrawlerUtils;

import com.dyw.crawler.util.ExcelUtils;

import com.dyw.crawler.util.RegularUtils;

import java.io.FileOutputStream;

import java.io.OutputStream;

import java.sql.Connection;

import java.sql.Date;

import java.sql.PreparedStatement;

import java.sql.ResultSet;

import java.sql.SQLException;

import java.util.ArrayList;

import java.util.HashMap;

import java.util.List;

import java.util.Map;

/**

* Crawls the top-ranked questions, their answers, and related information under Zhihu's root topic

* Created by dyw on 2017/9/12.

*/

public class Project5 {

public static void main(String[] args) throws Exception {

ConnectionPool connectionPool = new ConnectionPool();

connectionPool.createPool();

// login URL of the site

// String loginUrl = "https://www.zhihu.com/";

// page to crawl

String dataUrl = URICollection.ZHIHUTOPIC;

// credentials required for login: user name and password

// NameValuePair[] loginInfo = {new NameValuePair("phone_num", ""),

// new NameValuePair("password", "")};

try {

// String cookie = CrawlerUtils.post(loginUrl, loginInfo);

Map<String, String> map = new HashMap<>();

// put in the cookie obtained when logging in to your own Zhihu account

map.put("Cookie", "z_c0=");

String html = CrawlerUtils.get(dataUrl, map);

List<String> list = RegularUtils.match(RegularCollection.ZHIHU_QUESTION_link, html);

List<String> uriLists = RegularUtils.match(RegularCollection.ZHIHU_QUESTION_URI, list);

uriLists.forEach(uri -> {

String url = URICollection.ZHIHU + uri;

try {

// content of the detail page

String detailHtml = CrawlerUtils.get(url, map);

// match the title

List<String> match = RegularUtils.match(RegularCollection.ZHIHU_TITLE, detailHtml);

String title = match.get(0);

title = title.substring(14, title.length() - 1);

// answer count

List<String> match1 = RegularUtils.match(RegularCollection.ZHIHU_ANSWER, detailHtml);

String answerCount = match1.get(0);

answerCount = answerCount.substring(23, answerCount.length() - 2);

// follower count and view count

List<String> match2 = RegularUtils.match(RegularCollection.ZHIHU_CONCERN, detailHtml);

String concern = match2.get(0);

concern = concern.substring(19, concern.length() - 2);

String browsed = match2.get(1);

browsed = browsed.substring(19, browsed.length() - 2);

// answer content

List<String> match3 = RegularUtils.match(RegularCollection.ZHIHU_ANSWER_CONTENT, detailHtml);

String answer1 = match3.get(0);

answer1 = answer1.substring(44, answer1.length() - 40);

String answer2 = match3.get(1);

answer2 = answer2.substring(44, answer2.length() - 40);

// upvote count of each answer

List<String> match4 = RegularUtils.match(RegularCollection.ZHIHU_LIKE_COUNT, detailHtml);

String like1 = match4.get(0);

like1 = like1.substring(94, like1.length() - 9);

String like2 = match4.get(1);

like2 = like2.substring(94, like2.length() - 9);

Connection conn = connectionPool.getConnection();

Zhihu zhihu = new Zhihu(title, "", concern, browsed, answerCount, answer1, like1, "", answer2, like2, "", "", "", "", "", "", "", "", "", "");

executeInsert(conn, zhihu);

connectionPool.returnConnection(conn);

} catch (Exception e) {

e.printStackTrace();

}

});

} catch (Exception e) {

e.printStackTrace();

}

OutputStream out = new FileOutputStream("C:\\Users\\dyw\\Desktop\\crawler\\a.xls");

Connection conn1 = connectionPool.getConnection();

List<Zhihu> list = excueteQuery(conn1);

connectionPool.returnConnection(conn1);

ExcelUtils<Zhihu> ex = new ExcelUtils<>();

ex.exportExcel("知乎根话题top5问题及答案", ExcelTitleConllection.ZHIHUTITLE, list, out);

out.close();

}

/**

* Executes the SQL insert

*

* @param conn database connection

* @param zhihu Zhihu entity

*/

private static void executeInsert(Connection conn, Zhihu zhihu) throws Exception {

String insertSql = "insert into zhihu (title,content,concern,browsed,answer_count, answer1,like1,comment1, answer2,like2,comment2, answer3,like3,comment3, answer4,like4,comment4, answer5,like5,comment5, crawler_date) " +

"values (?,?,?,?,?, ?,?,?, ?,?,?, ?,?,?, ?,?,?, ?,?,?, ?)";

PreparedStatement preparedStatement = conn.prepareStatement(insertSql);

preparedStatement.setString(1, zhihu.getTitle());

preparedStatement.setString(2, zhihu.getContent());

preparedStatement.setString(3, zhihu.getConcern());

preparedStatement.setString(4, zhihu.getBrowsed());

preparedStatement.setString(5, zhihu.getAnswerCount());

preparedStatement.setString(6, zhihu.getAnswer1());

preparedStatement.setString(7, zhihu.getLike1());

preparedStatement.setString(8, zhihu.getComment1());

preparedStatement.setString(9, zhihu.getAnswer2());

preparedStatement.setString(10, zhihu.getLike2());

preparedStatement.setString(11, zhihu.getComment2());

preparedStatement.setString(12, zhihu.getAnswer3());

preparedStatement.setString(13, zhihu.getLike3());

preparedStatement.setString(14, zhihu.getComment3());

preparedStatement.setString(15, zhihu.getAnswer4());

preparedStatement.setString(16, zhihu.getLike4());

preparedStatement.setString(17, zhihu.getComment4());

preparedStatement.setString(18, zhihu.getAnswer5());

preparedStatement.setString(19, zhihu.getLike5());

preparedStatement.setString(20, zhihu.getComment5());

preparedStatement.setDate(21, new Date(System.currentTimeMillis()));

preparedStatement.executeUpdate();

preparedStatement.close();

}

private static List<Zhihu> excueteQuery(Connection conn) throws Exception {

List<Zhihu> list = new ArrayList<>();

String querySql = "select * from zhihu";

// try-with-resources closes the statement and the result set even if reading fails

try (PreparedStatement preparedStatement = conn.prepareStatement(querySql);

ResultSet resultSet = preparedStatement.executeQuery()) {

while (resultSet.next()) {

// reading starts at column 2; column 1 is presumably the table's id column

String title = resultSet.getString(2);

String content = resultSet.getString(3);

String concern = resultSet.getString(4);

String browsed = resultSet.getString(5);

String answerCount = resultSet.getString(6);

String answer1 = resultSet.getString(7);

String like1 = resultSet.getString(8);

String comment1 = resultSet.getString(9);

Zhihu zhihu = new Zhihu(title, content, concern, browsed, answerCount, answer1, like1, comment1, "", "", "", "", "", "", "", "", "", "", "", "");

list.add(zhihu);

}

}

return list;

}

}
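
The main method above reads the first two matches with get(0) and get(1), which throws IndexOutOfBoundsException when a question has fewer than two answers, while the table has room for five. One possible way to handle both cases is to pad the match list to a fixed size before building the entity. This is a sketch under that assumption, not the author's code; TopAnswersSketch and padTo are hypothetical names.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

class TopAnswersSketch {

    // Returns exactly `size` entries: the first matches in their original order,
    // followed by empty strings for every slot that has no match.
    static List<String> padTo(List<String> matches, int size) {
        List<String> padded = new ArrayList<>(size);
        for (int i = 0; i < size; i++) {
            padded.add(i < matches.size() ? matches.get(i) : "");
        }
        return padded;
    }

    public static void main(String[] args) {
        // Only two answers were found, but the table has five answer columns.
        List<String> answers = padTo(Arrays.asList("first answer", "second answer"), 5);
        System.out.println(answers.size());
        System.out.println(answers);
    }
}
```

The padded list can then supply answer1 to answer5 by index, so a question with few answers produces empty cells instead of aborting the whole row.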

6. Zhihu: Zhihu entity

package com.dyw.crawler.model;

import java.sql.Date;

/**

* Zhihu entity

* Created by dyw on 2017/9/17.

*/

public class Zhihu {

// title

private String title;

// content

private String content;

// follower count

private String concern;

// view count

private String browsed;

// answer count at crawl time

private String answerCount;

// answer 1

private String answer1;

// upvote count of answer 1

private String like1;

// comment count of answer 1

private String comment1;

private String answer2;

private String like2;

private String comment2;

private String answer3;

private String like3;

private String comment3;

private String answer4;

private String like4;

private String comment4;

private String answer5;

private String like5;

private String comment5;

private Date crawler_date;

public String getAnswerCount() {

return answerCount;

}

public void setAnswerCount(String answerCount) {

this.answerCount = answerCount;

}

public String getTitle() {

return title;

}

public void setTitle(String title) {

this.title = title;

}

public String getContent() {

return content;

}

public void setContent(String content) {

this.content = content;

}

public String getConcern() {

return concern;

}

public void setConcern(String concern) {

this.concern = concern;

}

public String getBrowsed() {

return browsed;

}

public void setBrowsed(String browsed) {

this.browsed = browsed;

}

public String getAnswer1() {

return answer1;

}

public void setAnswer1(String answer1) {

this.answer1 = answer1;

}

public String getLike1() {

return like1;

}

public void setLike1(String like1) {

this.like1 = like1;

}

public String getComment1() {

return comment1;

}

public void setComment1(String comment1) {

this.comment1 = comment1;

}

public String getAnswer2() {

return answer2;

}

public void setAnswer2(String answer2) {

this.answer2 = answer2;

}

public String getLike2() {

return like2;

}

public void setLike2(String like2) {

this.like2 = like2;

}

public String getComment2() {

return comment2;

}

public void setComment2(String comment2) {

this.comment2 = comment2;

}

public String getAnswer3() {

return answer3;

}

public void setAnswer3(String answer3) {

this.answer3 = answer3;

}

public String getLike3() {

return like3;

}

public void setLike3(String like3) {

this.like3 = like3;

}

public String getComment3() {

return comment3;

}

public void setComment3(String comment3) {

this.comment3 = comment3;

}

public String getAnswer4() {

return answer4;

}

public void setAnswer4(String answer4) {

this.answer4 = answer4;

}

public String getLike4() {

return like4;

}

public void setLike4(String like4) {

this.like4 = like4;

}

public String getComment4() {

return comment4;

}

public void setComment4(String comment4) {

this.comment4 = comment4;

}

public String getAnswer5() {

return answer5;

}

public void setAnswer5(String answer5) {

this.answer5 = answer5;

}

public String getLike5() {

return like5;

}

public void setLike5(String like5) {

this.like5 = like5;

}

public String getComment5() {

return comment5;

}

public void setComment5(String comment5) {

this.comment5 = comment5;

}

public Date getCrawler_date() {

return crawler_date;

}

public void setCrawler_date(Date crawler_date) {

this.crawler_date = crawler_date;

}

public Zhihu(){

}

// constructor

public Zhihu(String title, String content, String concern, String browsed,String answerCount,String answer1, String like1, String comment1, String answer2, String like2, String comment2, String answer3, String like3, String comment3, String answer4, String like4, String comment4, String answer5, String like5, String comment5) {

this.title = title;

this.content = content;

this.concern = concern;

this.browsed = browsed;

this.answerCount = answerCount;

this.answer1 = answer1;

this.like1 = like1;

this.comment1 = comment1;

this.answer2 = answer2;

this.like2 = like2;

this.comment2 = comment2;

this.answer3 = answer3;

this.like3 = like3;

this.comment3 = comment3;

this.answer4 = answer4;

this.like4 = like4;

this.comment4 = comment4;

this.answer5 = answer5;

this.like5 = like5;

this.comment5 = comment5;

}

}

7. Results

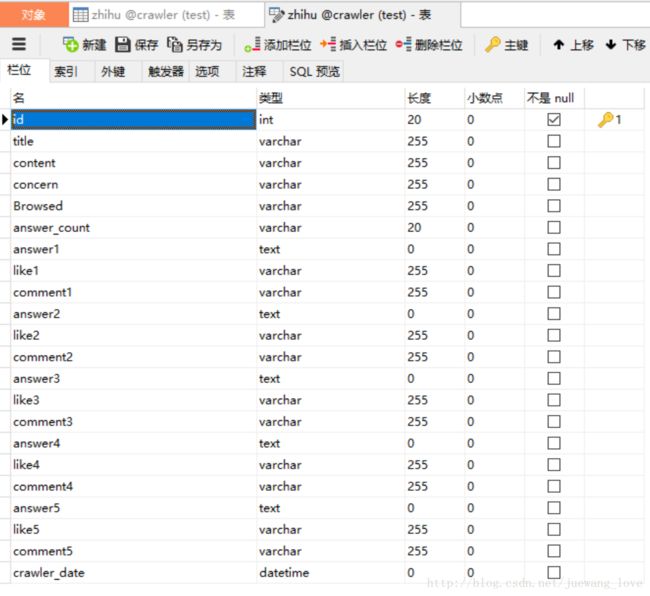

Table structure:

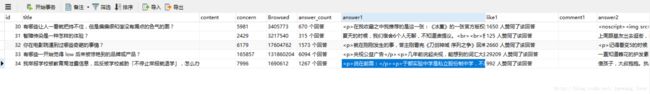

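Result:

Notes:

When simulating the login with httpclient I ran into a problem: the cookie I got back was not the complete cookie that the browser receives when logging in, so for now I crawl the pages directly with the cookie copied from the browser. Time was short, so I have not looked into this yet. If you know how to fix it, please let me know. Thanks in advance.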
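
One thing that may be worth checking for the cookie problem, offered only as a guess, is whether the site sets cookies across several responses rather than in the login response alone. The sketch below accumulates the name=value part of each Set-Cookie header value into a map and rebuilds the Cookie request header from it. CookieJarSketch, absorb, toCookieHeader, and the cookie name _xsrf are hypothetical; z_c0 is the cookie name used in the main method. Cookie attributes such as Path, Domain, and expiry are deliberately ignored.

```java
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.StringJoiner;

class CookieJarSketch {

    // Stores the leading name=value pair of each Set-Cookie value; later values replace earlier ones.
    static void absorb(Map<String, String> jar, List<String> setCookieValues) {
        for (String header : setCookieValues) {
            // Attributes (Path, HttpOnly, ...) follow the first ';' and are dropped here.
            String pair = header.split(";", 2)[0].trim();
            int eq = pair.indexOf('=');
            if (eq > 0) {
                jar.put(pair.substring(0, eq).trim(), pair.substring(eq + 1).trim());
            }
        }
    }

    // Builds the value of the Cookie request header: name=value pairs joined by "; ".
    static String toCookieHeader(Map<String, String> jar) {
        StringJoiner joiner = new StringJoiner("; ");
        jar.forEach((name, value) -> joiner.add(name + "=" + value));
        return joiner.toString();
    }

    public static void main(String[] args) {
        Map<String, String> jar = new LinkedHashMap<>();
        // Hypothetical Set-Cookie values from a first response and from the login response.
        absorb(jar, Arrays.asList("_xsrf=abc; Path=/"));
        absorb(jar, Arrays.asList("z_c0=token123; HttpOnly", "_xsrf=def; Path=/"));
        System.out.println(toCookieHeader(jar));
    }
}
```

The resulting string could be passed as the "Cookie" entry of the header map given to CrawlerUtils.get, in place of the value copied from the browser. Cookies that the browser creates through JavaScript would still be missing with this approach.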

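I also did not collect the comment count of each answer. You can write the corresponding regular expression yourself; it should not be difficult.

In addition, only two answers are collected. The table is designed for five; the remaining three can be ignored for now.

If any method in this post is unfamiliar, see the earlier posts in the Crawler Notes series, which include the code.

The complete code is on GitHub; anyone who needs it can download it from https://github.com/dingyinwu81/crawler

If you have suggestions for improving the code, please leave me a comment! ☺☺☺

You are welcome to join QQ group 371322638 to study crawler techniques together.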