【十五】SparkSQL访问日志分析:数据清洗、数据分析(分组、排序、窗口函数)、入库(MySQL)、性能优化

概述:

1.第一次数据清洗:从原始日志中抽取出需要的列的数据,按照需要的格式。

2.第二步数据清洗:解析第一步清洗后的数据, 处理时间,提出URL中的产品编号、得到产品类型, 由IP得到城市信息(用到开源社区的解析代码,该部分具体介绍:ipdatabase解析出IP地址所属城市) ,按照天分区进行存储 (用parquet格式)。

3.统计分析(分组、排序、窗口函数)。

4.结果写入MySQL。

5.性能优化:代码中代码优化部分有注释,其他常用优化会另写一篇,一会儿附上链接。

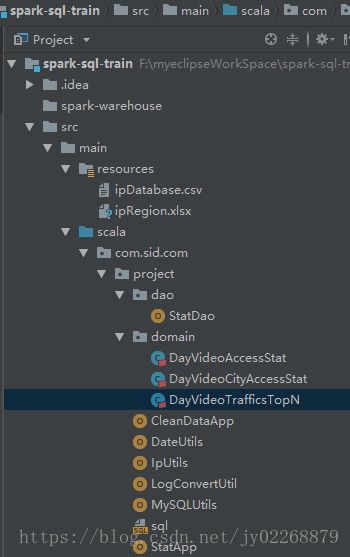

项目目录

pom.xml

4.0.0

com.sid.com

sparksqltrain

1.0-SNAPSHOT

2008

2.11.8

2.2.0

scala-tools.org

Scala-Tools Maven2 Repository

http://scala-tools.org/repo-releases

scala-tools.org

Scala-Tools Maven2 Repository

http://scala-tools.org/repo-releases

org.scala-lang

scala-library

${scala.version}

org.apache.spark

spark-sql_2.11

${spark.version}

org.apache.spark

spark-hive_2.11

${spark.version}

org.spark-project.hive

hive-jdbc

1.2.1.spark2

provided

mysql

mysql-connector-java

5.1.38

com.ggstar

ipdatabase

1.0

org.apache.poi

poi-ooxml

3.14

org.apache.poi

poi

3.14

src/main/scala

src/test/scala

org.scala-tools

maven-scala-plugin

compile

testCompile

${scala.version}

-target:jvm-1.5

org.apache.maven.plugins

maven-eclipse-plugin

true

ch.epfl.lamp.sdt.core.scalabuilder

ch.epfl.lamp.sdt.core.scalanature

org.eclipse.jdt.launching.JRE_CONTAINER

ch.epfl.lamp.sdt.launching.SCALA_CONTAINER

org.scala-tools

maven-scala-plugin

${scala.version}

MySQL创建数据库

create table day_video_access_topn_stat(

day varchar (8) not null,

cms_id bigint(10) not null,

times bigint(10) not null,

primary key (day,cms_id)

);

create table day_video_city_access_topn_stat(

day varchar (8) not null,

cms_id bigint(10) not null,

city varchar (20) not null,

times bigint(10) not null,

times_rank int not null,

primary key (day,city,cms_id)

);

create table day_video_traffics_access_topn_stat(

day varchar (8) not null,

cms_id bigint(10) not null,

traffics bigint (10) not null,

primary key (day,cms_id)

);DateUtils.scala

package com.sid.com.project

import java.text.SimpleDateFormat

import java.util.{Date, Locale}

import org.apache.commons.lang3.time.FastDateFormat

/**

* 日期时间转化工具

* */

object DateUtils {

// 10/Nov/2016:00:01:02 +0800

//输入日期格式

//SimpleDateFormat是线程不安全的,解析的时候有些时间会解析错

val YYYYMMDDHHMM_TIME_FORMAT = FastDateFormat.getInstance("dd/MMM/yyyy:HH:mm:ss Z",Locale.ENGLISH)

//目标日期格式

val TARGET_FOMAT = FastDateFormat.getInstance("yyyy-MM-dd HH:mm:ss")

def parse(time:String)={

TARGET_FOMAT.format(new Date(getTime(time)))

}

/**

* 获取输入日志Long类型的时间

* [10/Nov/2016:00:01:02 +0800]

* */

def getTime(time:String) ={

try {

YYYYMMDDHHMM_TIME_FORMAT.parse(time.substring(time.indexOf("[") + 1, time.lastIndexOf("]"))).getTime()

}catch{

case e :Exception =>{

0L

}

}

}

def main(args: Array[String]): Unit = {

println(parse("[10/Nov/2016:00:01:02 +0800]"))

}

}

IpUtils.scala

package com.sid.com.project

import com.ggstar.util.ip.IpHelper

object IpUtils {

def getCity(ip:String) ={

IpHelper.findRegionByIp(ip)

}

def main(args: Array[String]): Unit = {

println(getCity("117.35.88.11"))

}

}

LogConvertUtil.scala

package com.sid.com.project

import org.apache.spark.sql.Row

import org.apache.spark.sql.types.{LongType, StringType, StructField, StructType}

/**

* 访问日志转换工具类

*

* 输入:访问时间、访问URL、耗费的流量、访问IP地址信息

* 2016-11-10 00:01:02 http://www.主站地址.com/code/1852 2345 117.35.88.11

*

* 输出:URL、cmsType(video/article)、cmsId(编号)、流量、ip、城市信息、访问时间、按天分区存储

* */

object LogConvertUtil {

//输出字段的schema

val struct = StructType(

Array(

StructField("url", StringType),

StructField("cmsType", StringType),

StructField("cmsId", LongType),

StructField("traffic", LongType),

StructField("ip", StringType),

StructField("city", StringType),

StructField("time", StringType),

StructField("day", StringType)

)

)

//根据输入的每行信息转换成输出的样式

def parseLog(log: String)={

try{

val splits = log.split("\t")

val url = splits(1)

val traffic = splits(2).toLong

val ip = splits(3)

val domain = "http://www.imooc.com/"

val cms = url.substring(url.indexOf(domain) + domain.length)

val cmsTypeId = cms.split("/")

var cmsType = ""

var cmsId = 0L

if (cmsTypeId.length > 1) {

cmsType = cmsTypeId(0)

cmsId = cmsTypeId(1).toLong

}

val city = IpUtils.getCity(ip)

val time = splits(0)

val day = time.substring(0, 10).replaceAll("-", "")

//row中的字段要和struct中的字段对应上

Row(url, cmsType, cmsId, traffic, ip, city, time, day)

}catch{

case e : Exception =>Row(0)

}

}

}

MySQLUtils.scala

package com.sid.com.project

import java.sql.{Connection, DriverManager, PreparedStatement}

/**

* MySQL操作工具类

* */

object MySQLUtils {

//获取数据库连接

def getConnection()={

DriverManager.getConnection("jdbc:mysql://localhost:3306/sid?user=root&password=密码")

}

//释放数据库连接的资源

def release(connection:Connection,pstmt:PreparedStatement)={

try{

if(pstmt!=null){

pstmt.close()

}

}catch {

case e:Exception => e.printStackTrace()

}finally {

if(connection!=null){

connection.close()

}

}

}

def main(args: Array[String]): Unit = {

println(getConnection())

}

}

CleanDataApp.scala

package com.sid.com.project

import org.apache.spark.sql.{SaveMode, SparkSession}

import com.sid.com.project.LogConvertUtil

object CleanDataApp {

def main(args: Array[String]): Unit = {

val spark = SparkSession.builder().master("local[3]").appName("CleanDataApp").getOrCreate()

/**

* 第一步数据清洗,从原始日志中抽取出需要的列的数据,按照需要的格式

* */

//dataCleaning(spark)

/**

* 第二步数据清洗,解析第一步清洗后的数据,

* 处理时间,提出URL中的产品编号、得到产品类型,

* 由IP得到城市信息(用到开源社区的解析代码)

* 按照天分区进行存储

*/

dataCleaning2(spark)

spark.stop()

}

//输入数据 .log文件

//183.162.52.7 - - [10/Nov/2016:00:01:02 +0800] "POST /api3/getadv HTTP/1.1" 200 813 "www.主站地址.com" "-" cid=0×tamp=1478707261865&uid=2871142&marking=androidbanner&secrect=a6e8e14701ffe9f6063934780d9e2e6d&token=f51e97d1cb1a9caac669ea8acc162b96 "mukewang/5.0.0 (Android 5.1.1; Xiaomi Redmi 3 Build/LMY47V),Network 2G/3G" "-" 10.100.134.244:80 200 0.027 0.027

//输出数据 没有schema

//2016-11-10 00:01:02 http://www.主站地址.com/code/1852 2345 117.35.88.11

def dataCleaning(spark:SparkSession): Unit ={

val acc = spark.sparkContext.textFile("file:///F:\\mc\\SparkSQL\\data\\access1000.log")

//acc.take(10).foreach(println)

//line:183.162.52.7 - - [10/Nov/2016:00:01:02 +0800] "POST /api3/getadv HTTP/1.1" 200 813 "www.主站地址.com" "-" cid=0×tamp=1478707261865&uid=2871142&marking=androidbanner&secrect=a6e8e14701ffe9f6063934780d9e2e6d&token=f51e97d1cb1a9caac669ea8acc162b96 "mukewang/5.0.0 (Android 5.1.1; Xiaomi Redmi 3 Build/LMY47V),Network 2G/3G" "-" 10.100.134.244:80 200 0.027 0.027

acc.map(line => {

val splits = line.split(" ")

val ip = splits(0)

val time = splits(3)+" "+splits(4)//第四个字段和第五个字段拼接起来就是完成的访问时间。

val url = splits(11).replaceAll("\"","")

val traffic = splits(9)//访问流量

// (ip,DateUtils.parse(time),url,traffic)

DateUtils.parse(time)+"\t"+url+"\t"+traffic+"\t"+ip

}).saveAsTextFile("file:///F:\\mc\\SparkSQL\\data\\access1000step1")

}

//输入数据

//2016-11-10 00:01:02 http://www.主站地址.com/code/1852 2345 117.35.88.11

//输出数据 带schema信息 parquet格式存储

//http://www.主站地址.com/code/1852 code 1852 2345 117.35.88.11 陕西省 2016-11-10 00:01:02 20161110

def dataCleaning2(spark:SparkSession): Unit ={

val logRDD = spark.sparkContext.textFile("file:///F:\\mc\\SparkSQL\\data\\access.log")

//val logRDD = spark.sparkContext.textFile("file:///F:\\mc\\SparkSQL\\data\\access1000step1.log\\part-00000")

//logRDD.take(10).foreach(println)

val logDF = spark.createDataFrame(logRDD.map(x => LogConvertUtil.parseLog(x)),LogConvertUtil.struct)

//logDF.printSchema()

//logDF.show(false)

/**partitionBy("day") 数据写的时候要按照天进行分区

coalesce(1) 生成的part-00000文件只有一个且较大,不然3个线程会生成3个part-0000*文件 可以用此参数调优

mode(SaveMode.Overwrite) 指定路径下如果有文件,覆盖*/

logDF.coalesce(1).write.format("parquet")

.mode(SaveMode.Overwrite)

.partitionBy("day")

.save("file:///F:\\mc\\SparkSQL\\data\\afterclean")//存储清洗后的数据

}

}

StatApp.scala

package com.sid.com.project

import com.sid.com.project.dao.StatDao

import com.sid.com.project.domain.{DayVideoAccessStat, DayVideoCityAccessStat, DayVideoTrafficsTopN}

import org.apache.spark.sql.expressions.Window

import org.apache.spark.sql.{DataFrame, SparkSession}

import org.apache.spark.sql.functions._

import scala.collection.mutable.ListBuffer

/**

* 统计分析

* */

object StatApp {

def main(args: Array[String]): Unit = {

val spark = SparkSession.builder()

.master("local[3]")

/**参数优化

* 关闭分区字段的数据类型自动推导,

* 不然day分区字段我们要string类型,会被自动推导成integer类型

* 而且没有必要浪费这个资源*/

.config("spark.sql.sources.partitionColumnTypeInference.enabled",false)

.appName("statApp").getOrCreate()

val logDF = spark.read.format("parquet")

.load("file:///F:\\mc\\SparkSQL\\data\\afterclean")

val day = "20170511"

/**

* 代码优化:复用已有数据

* 既然每次统计都是统计的当天的视频,

* 先把该数据拿出来,然后直接传到每个具体的统计方法中

* 不要在每个具体的统计方法中都执行一次同样的过滤

*

* 用$列名得到列值,需要隐式转换 import spark.implicits._

* */

import spark.implicits._

val dayVideoDF = logDF.filter($"day" ===day&&$"cmsType"==="video")

/**

* 将这个在后文中会复用多次的dataframe缓存到内存中

* 这样后文在复用的时候会快很多

*

* default storage level (`MEMORY_AND_DISK`).

* */

dayVideoDF.cache()

//logDF.printSchema()

//logDF.show()

StatDao.deletaDataByDay(day)

//统计每天最受欢迎(访问次数)的TopN视频产品

videoAccessTopNStatDFAPI(spark,dayVideoDF)

//按照地势统计每天最受欢迎(访问次数)TopN视频产品 每个地市只要最后欢迎的前三个

cityAccessTopNStat(spark,dayVideoDF)

//统计每天最受欢迎(流量)TopN视频产品

videoTrafficsTopNStat(spark,dayVideoDF)

//清除缓存

dayVideoDF.unpersist(true)

spark.stop()

}

/**

* 统计每天最受欢迎(访问次数)的TopN视频产品

*

* 取出20170511那天访问的视频类产品。

* 统计每种视频类产品的访问次数,并按访问次数降序排列

* 用DataFrame API 来写逻辑

* */

def videoAccessTopNStatDFAPI(spark:SparkSession,logDF:DataFrame): Unit ={

//agg(count())需要导入sparksql的函数部分 import org.apache.spark.sql.functions._

import spark.implicits._

val videoLogTopNDF = logDF

.groupBy("day","cmsId")

.agg(count("cmsId").as("times"))

.orderBy($"times".desc)

//videoLogTopNDF.show(false)

/**

* 将统计结果写入MySQL中

* 代码优化:

* 在进行数据库操作的时候,不要每个record都去操作一次数据库

* 通常写法是每个partition操作一次数据库

**/

try {

videoLogTopNDF.foreachPartition(partitionOfRecords => {

val list = new ListBuffer[DayVideoAccessStat]

partitionOfRecords.foreach(info => {

val day = info.getAs[String]("day")

val cmsId = info.getAs[Long]("cmsId")

val times = info.getAs[Long]("times")

list.append(DayVideoAccessStat(day, cmsId, times))

})

StatDao.insertDayVideoTopN(list)

})

}catch{

case e:Exception =>e.printStackTrace()

}

}

/**

* 统计每天最受欢迎(访问次数)的TopN视频产品

*

* 取出20170511那天访问的视频类产品。

* 统计每种视频类产品的访问次数,并按访问次数降序排列

* 用SQL API 来写逻辑

* */

def videoAccessTopNStatSQLAPI(spark:SparkSession,logDF:DataFrame): Unit = {

logDF.createOrReplaceTempView("tt_log")

val videoTopNDF = spark.sql("select day,cmsId,count(1) as times from tt_log group by day,cmsId order by times desc")

//videoTopNDF.show(false)

/**

* 将统计结果写入MySQL中

**/

try {

videoTopNDF.foreachPartition(partitionOfRecords => {

val list = new ListBuffer[DayVideoAccessStat]

partitionOfRecords.foreach(info => {

val day = info.getAs[String]("day")

val cmsId = info.getAs[Long]("cmsId")

val times = info.getAs[Long]("times")

list.append(DayVideoAccessStat(day, cmsId, times))

})

StatDao.insertDayVideoTopN(list)

})

}catch{

case e:Exception =>e.printStackTrace()

}

}

/**

* 按照地势统计每天最受欢迎(访问次数)TopN视频产品 每个地市只要最后欢迎的前三个

* */

def cityAccessTopNStat(spark:SparkSession,logDF:DataFrame): Unit ={

//用$列名得到列值,需要隐式转换 import spark.implicits._

//agg(count())需要导入sparksql的函数部分 import org.apache.spark.sql.functions._

val videoCityLogTopNDF = logDF.groupBy("day","city","cmsId")

.agg(count("cmsId").as("times"))

//这里的结果是

//日期 地域 产品ID 访问次数

//20170511 北京市 14390 11175

//20170511 北京市 4500 11014

//20170511 浙江省 14390 11110

//20170511 浙江省 14322 11151

//然后最后需要的结果是同一天、同一个地域下,按产品访问次数降序排序

//videoLogTopNDF.show(false)

//window函数在Spark SQL中的使用 分组后组内排序

val top3DF = videoCityLogTopNDF.select(

videoCityLogTopNDF("day"),

videoCityLogTopNDF("city"),

videoCityLogTopNDF("cmsId"),

videoCityLogTopNDF("times"),

row_number().over(Window.partitionBy(videoCityLogTopNDF("city")).

orderBy(videoCityLogTopNDF("times").desc)

).as("times_rank")

).filter("times_rank <= 3")//.show(false)//只要每个地势最受欢迎的前3个

//结果

//日期 地域 产品ID 访问次数

//20170511 北京市 14390 11175

//20170511 北京市 4500 11014

//20170511 浙江省 14322 11151

//20170511 浙江省 14390 11110

//将统计结果写入MySQL中

try {

top3DF.foreachPartition(partitionOfRecords => {

val list = new ListBuffer[DayVideoCityAccessStat]

partitionOfRecords.foreach(info => {

val day = info.getAs[String]("day")

val cmsId = info.getAs[Long]("cmsId")

val city = info.getAs[String]("city")

val times = info.getAs[Long]("times")

val timesRank = info.getAs[Int]("times_rank")

list.append(DayVideoCityAccessStat(day,cmsId,city,times,timesRank))

})

StatDao.insertDayCityVideoTopN(list)

})

}catch{

case e:Exception =>e.printStackTrace()

}

}

/**

* 统计每天最受欢迎(流量)TopN视频产品

* */

def videoTrafficsTopNStat(spark:SparkSession,logDF:DataFrame): Unit ={

//用$列名得到列值,需要隐式转换 import spark.implicits._

//agg(count())需要导入sparksql的函数部分 import org.apache.spark.sql.functions._

import spark.implicits._

val videoLogTopNDF = logDF.groupBy("day","cmsId")

.agg(sum("traffic").as("traffics"))

.orderBy($"traffics".desc)

//videoLogTopNDF.show(false)

/**

* 将统计结果写入MySQL中

**/

try {

videoLogTopNDF.foreachPartition(partitionOfRecords => {

val list = new ListBuffer[DayVideoTrafficsTopN]

partitionOfRecords.foreach(info => {

val day = info.getAs[String]("day")

val cmsId = info.getAs[Long]("cmsId")

val traffics = info.getAs[Long]("traffics")

list.append(DayVideoTrafficsTopN(day, cmsId, traffics))

})

StatDao.insertDayTrafficsVideoTopN(list)

})

}catch{

case e:Exception =>e.printStackTrace()

}

}

}

StatDao.scala

package com.sid.com.project.dao

import java.sql.{Connection, PreparedStatement}

import com.sid.com.project.MySQLUtils

import com.sid.com.project.domain.{DayVideoAccessStat, DayVideoCityAccessStat, DayVideoTrafficsTopN}

import scala.collection.mutable.ListBuffer

/**

* 统计各个维度的DAO操作

* */

object StatDao {

/**

* 批量保存DayVideoAccessStat到MySQL

* */

def insertDayVideoTopN(list:ListBuffer[DayVideoAccessStat]): Unit ={

var connection:Connection = null

var pstmt : PreparedStatement = null

try{

connection = MySQLUtils.getConnection()

connection.setAutoCommit(false)//关闭自动提交

val sql = "insert into day_video_access_topn_stat(day,cms_id,times) values(?,?,?)"

pstmt = connection.prepareStatement(sql)

for(ele <- list){

pstmt.setString(1,ele.day)

pstmt.setLong(2,ele.cmsId)

pstmt.setLong(3,ele.times)

//加入到批次中,后续再执行批量处理 这样性能会好很多

pstmt.addBatch()

}

//执行批量处理

pstmt.executeBatch()

connection.commit() //手工提交

}catch {

case e :Exception =>e.printStackTrace()

}finally {

MySQLUtils.release(connection,pstmt)

}

}

/**

* 批量保存DayVideoCityAccessStat到MySQL

* */

def insertDayCityVideoTopN(list:ListBuffer[DayVideoCityAccessStat]): Unit ={

var connection:Connection = null

var pstmt : PreparedStatement = null

try{

connection = MySQLUtils.getConnection()

connection.setAutoCommit(false)//关闭自动提交

val sql = "insert into day_video_city_access_topn_stat(day,cms_id,city,times,times_rank) values(?,?,?,?,?)"

pstmt = connection.prepareStatement(sql)

for(ele <- list){

pstmt.setString(1,ele.day)

pstmt.setLong(2,ele.cmsId)

pstmt.setString(3,ele.city)

pstmt.setLong(4,ele.times)

pstmt.setInt(5,ele.timesRank)

//加入到批次中,后续再执行批量处理 这样性能会好很多

pstmt.addBatch()

}

//执行批量处理

pstmt.executeBatch()

connection.commit() //手工提交

}catch {

case e :Exception =>e.printStackTrace()

}finally {

MySQLUtils.release(connection,pstmt)

}

}

/**

* 批量保存DayVideoTrafficsTopN到MySQL

* */

def insertDayTrafficsVideoTopN(list:ListBuffer[DayVideoTrafficsTopN]): Unit ={

var connection:Connection = null

var pstmt : PreparedStatement = null

try{

connection = MySQLUtils.getConnection()

connection.setAutoCommit(false)//关闭自动提交

val sql = "insert into day_video_traffics_access_topn_stat(day,cms_id,traffics) values(?,?,?)"

pstmt = connection.prepareStatement(sql)

for(ele <- list){

pstmt.setString(1,ele.day)

pstmt.setLong(2,ele.cmsId)

pstmt.setLong(3,ele.traffics)

//加入到批次中,后续再执行批量处理 这样性能会好很多

pstmt.addBatch()

}

//执行批量处理

pstmt.executeBatch()

connection.commit() //手工提交

}catch {

case e :Exception =>e.printStackTrace()

}finally {

MySQLUtils.release(connection,pstmt)

}

}

/**

* 删除指定日期的数据

* */

def deletaDataByDay(day:String): Unit ={

val tables = Array("day_video_access_topn_stat",

"day_video_city_access_topn_stat",

"day_video_traffics_access_topn_stat")

var connection:Connection=null

var pstmt : PreparedStatement = null

try{

connection = MySQLUtils.getConnection()

for(table <- tables){

val deleteSql = s"delete from $table where day = ?"

pstmt = connection.prepareStatement(deleteSql)

pstmt.setString(1,day)

pstmt.executeUpdate()

}

}catch {

case e :Exception =>e.printStackTrace()

}finally {

MySQLUtils.release(connection,pstmt)

}

}

}

DayVideoAccessStat.scala

package com.sid.com.project.domain

/**

*每天视频课程访问次数实体类

* */

case class DayVideoAccessStat (day:String,cmsId:Long,times:Long)

DayVideoCityAccessStat.scala

package com.sid.com.project.domain

case class DayVideoCityAccessStat (day:String,cmsId:Long,city:String,times:Long,timesRank:Int)

DayVideoTrafficsTopN.scala

package com.sid.com.project.domain

case class DayVideoTrafficsTopN(day:String, cmsId:Long, traffics:Long)