The Complete Flow of an HTTP Request (Tomcat)

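1. A Brief Introduction to the HTTP Protocol

A network communication protocol is, in essence, a set of rules that software and hardware must both follow. Let's first look at what an HTTP request message and response message look like: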

    GET /servlet/myServlet HTTP/1.1
    Host: localhost:8080
    Connection: keep-alive
    Cache-Control: max-age=0
    Upgrade-Insecure-Requests: 1
    User-Agent: Mozilla/5.0 (Macintosh; Intel Mac OS X 10_12_6) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/71.0.3578.98 Safari/537.36
    Accept: text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8
    Accept-Encoding: gzip, deflate, br
    Accept-Language: zh-CN,zh;q=0.9,en;q=0.8
    Cookie: Idea-368c88e1=0ab9bad3-7c85-4ff3-8eb9-43b05fdcc241; onstarcar_username=pepsi; onstarcar_token=dd54ae011558e6fd; JSESSIONID=1FABECE8924A8D29B064221474BF165E

    HTTP/1.1 200 OK
    Server: Apache-Coyote/1.1
    Content-Type: text/html
    Date: Thu, 27 Dec 2018 08:18:48 GMT
    Content-Length: 22

    Pepsi is Cool

For the meaning of the fields in the request and the response, see this article.
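To make the message layout above concrete, here is a minimal sketch that splits the head of a request into its request line and header fields. `RequestHeadSketch` and its members are hypothetical names made up for this illustration; Tomcat's own parser works on byte buffers and is far more defensive.

```java
import java.util.LinkedHashMap;
import java.util.Locale;
import java.util.Map;

/** Hypothetical helper for illustration only, not Tomcat code. */
final class RequestHeadSketch {
    final String method;
    final String target;
    final String version;
    final Map<String, String> headers = new LinkedHashMap<>();

    private RequestHeadSketch(String method, String target, String version) {
        this.method = method;
        this.target = target;
        this.version = version;
    }

    /** Parses the request line and the header fields up to the first empty line. */
    static RequestHeadSketch parse(String raw) {
        String[] lines = raw.split("\r\n");
        String[] start = lines[0].split(" ", 3);
        if (start.length != 3) {
            throw new IllegalArgumentException("malformed request line: " + lines[0]);
        }
        RequestHeadSketch head = new RequestHeadSketch(start[0], start[1], start[2]);
        for (int i = 1; i < lines.length && !lines[i].isEmpty(); i++) {
            int colon = lines[i].indexOf(':');
            if (colon <= 0) {
                throw new IllegalArgumentException("malformed header: " + lines[i]);
            }
            // Header names are case-insensitive, so store them in lower case.
            String name = lines[i].substring(0, colon).trim().toLowerCase(Locale.ROOT);
            head.headers.put(name, lines[i].substring(colon + 1).trim());
        }
        return head;
    }

    public static void main(String[] args) {
        RequestHeadSketch head = parse(
                "GET /servlet/myServlet HTTP/1.1\r\n"
                + "Host: localhost:8080\r\n"
                + "Connection: keep-alive\r\n"
                + "\r\n");
        System.out.println(head.method + " " + head.target + " " + head.version);
        System.out.println(head.headers);
    }
}
```

Only the first colon on a line separates name from value, which is why a value such as `localhost:8080` survives intact.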

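The official Tomcat description: a lightweight application server, a container that runs Servlet/JSP applications, runs on the JVM, binds to an IP address, and listens on a TCP port. By that description it does just two things:

1. It accepts and processes network requests.

2. It dispatches each request to the corresponding Servlet for business processing and returns the result.

Now let's look at how Tomcat handles a request.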
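The two jobs can be pictured with a toy container that involves no sockets at all. This is only a sketch: `MiniContainerSketch`, `register`, and `handle` are invented names, and a plain `Supplier<String>` stands in for a Servlet.

```java
import java.nio.charset.StandardCharsets;
import java.util.HashMap;
import java.util.Map;
import java.util.function.Supplier;

/** Hypothetical toy container: path -> handler, no networking. */
final class MiniContainerSketch {
    private final Map<String, Supplier<String>> handlers = new HashMap<>();

    void register(String path, Supplier<String> handler) {
        handlers.put(path, handler);
    }

    String handle(String requestLine) {
        // Job 1: take in the request and work out what is being asked for.
        String[] parts = requestLine.trim().split(" ");
        if (parts.length != 3) {
            return "HTTP/1.1 400 Bad Request\r\n\r\n";
        }
        // Job 2: dispatch to the handler mapped to the path and return its result.
        Supplier<String> handler = handlers.get(parts[1]);
        if (handler == null) {
            return "HTTP/1.1 404 Not Found\r\n\r\n";
        }
        String body = handler.get();
        int length = body.getBytes(StandardCharsets.UTF_8).length;
        return "HTTP/1.1 200 OK\r\nContent-Length: " + length + "\r\n\r\n" + body;
    }

    static String demo() {
        MiniContainerSketch container = new MiniContainerSketch();
        container.register("/servlet/myServlet", () -> "Pepsi is Cool");
        return container.handle("GET /servlet/myServlet HTTP/1.1");
    }

    public static void main(String[] args) {
        System.out.println(demo());
    }
}
```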

2. How the Tomcat Container Handles a Request (NIO)

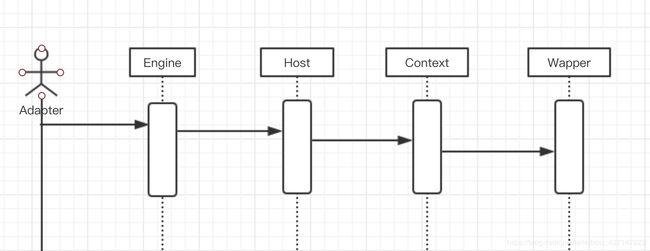

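- First, look at Tomcat's overall structure and its components (the diagram is borrowed). A more direct way is to read Tomcat's conf/server.xml, whose structure is very clear. The most important parts are the Connector and the Container. The Connector handles client connections and can have different implementations for different protocols, such as HTTP and AJP. The Container handles the business logic and includes Engine, Host, Context, and Wrapper. You can think of the two as one component that accepts requests from outside and one that hands them to the right business logic inside (the Servlet's service method); combined, they provide the external service. The other components are not covered here.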

Tomcat initializes its components level by level at startup, as follows:

    Catalina-->Server-->Service|-->Container (Engine, Wrapper, etc.)
                               |-->Executor
                               |-->Connector-->ProtocolHandler
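The top-down order in the diagram can be sketched as a parent component that initializes itself and then each child in turn. The `Component` class below is hypothetical and only mirrors the names in the diagram; the order of the three children under Service is an assumption of this sketch, not something the diagram states.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical sketch of level-by-level initialization, not Tomcat's Lifecycle API. */
final class LifecycleSketch {
    static final class Component {
        final String name;
        final List<Component> children = new ArrayList<>();

        Component(String name, Component... kids) {
            this.name = name;
            for (Component kid : kids) {
                children.add(kid);
            }
        }

        /** Initializes this component first, then every child, recording the order. */
        void init(List<String> order) {
            order.add(name);
            for (Component child : children) {
                child.init(order);
            }
        }
    }

    static List<String> initOrder() {
        Component service = new Component("Service",
                new Component("Container"),
                new Component("Executor"),
                new Component("Connector", new Component("ProtocolHandler")));
        Component catalina = new Component("Catalina", new Component("Server", service));
        List<String> order = new ArrayList<>();
        catalina.init(order);
        return order;
    }

    public static void main(String[] args) {
        System.out.println(initOrder());
    }
}
```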

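That is the basic initialization flow (isn't this flow chart great?): Digester reads server.xml and the components are initialized one by one. The request path below is described mainly in terms of the Connector and the Container.

- The Connector component: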

As described above, the Connector accepts network requests and, according to the protocol, converts them into the request and response objects we want. The Connector is started by its parent Service (see StandardService#startInternal() for details). That call eventually reaches AbstractEndpoint.start(), which does two things: it binds the port with bind(), and it calls NioEndpoint#startInternal(). Let's look at NioEndpoint#startInternal():

    public void startInternal() throws Exception {
        if (!running) {
            running = true;
            paused = false;
            processorCache = new SynchronizedStack<>(SynchronizedStack.DEFAULT_SIZE,
                    socketProperties.getProcessorCache());
            eventCache = new SynchronizedStack<>(SynchronizedStack.DEFAULT_SIZE,
                    socketProperties.getEventCache());
            nioChannels = new SynchronizedStack<>(SynchronizedStack.DEFAULT_SIZE,
                    socketProperties.getBufferPool());
            // Create worker collection
            if ( getExecutor() == null ) {
                createExecutor();
            }
            initializeConnectionLatch();
            // Start poller threads
            pollers = new Poller[getPollerThreadCount()];
            for (int i=0; i

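This method performs two operations: it creates and starts the Poller threads, and it starts the Acceptor thread, which accepts network connections as fast as they arrive. Together the two look a lot like the producer-consumer pattern. The method also initializes several caches whose main purpose is object reuse (an object is recycled by resetting its fields), which avoids frequent garbage collection. Next, let's look at the Acceptor and the Poller: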
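The "pop from the cache, otherwise create, then reset" idea behind those caches can be sketched in a few lines. `EventPoolSketch` and `Event` are made-up names; Tomcat uses its own `SynchronizedStack`, which this sketch replaces with a synchronized `ArrayDeque`.

```java
import java.util.ArrayDeque;
import java.util.Deque;

/** Hypothetical object cache illustrating reuse through reset(). */
final class EventPoolSketch {
    static final class Event {
        String payload;

        void reset(String payload) {
            this.payload = payload;
        }
    }

    private final Deque<Event> cache = new ArrayDeque<>();
    private final int limit;
    private int created;

    EventPoolSketch(int limit) {
        this.limit = limit;
    }

    /** Reuses a cached instance when one is available, otherwise allocates. */
    synchronized Event acquire(String payload) {
        Event event = cache.pollFirst(); // null when the cache is empty
        if (event == null) {
            event = new Event();
            created++;
        }
        event.reset(payload);
        return event;
    }

    /** Clears the instance and returns it to the cache if there is room. */
    synchronized void release(Event event) {
        event.reset(null);
        if (cache.size() < limit) {
            cache.addFirst(event);
        }
    }

    synchronized int createdCount() {
        return created;
    }

    public static void main(String[] args) {
        EventPoolSketch pool = new EventPoolSketch(2);
        Event first = pool.acquire("a");
        pool.release(first);
        Event second = pool.acquire("b");
        System.out.println("same instance reused: " + (first == second));
    }
}
```

The bounded size matters: an unbounded cache would trade garbage collection pressure for a memory leak after a traffic spike.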

Acceptor: accepts network connections, wraps each accepted SocketChannel in a NioChannel, wraps the NioChannel in a PollerEvent, and finally registers it in the Poller's queue. The accept and register code is as follows:

    // NioEndpoint.Acceptor#run()
    if (running && !paused) {
        // Hand the socket over.
        // setSocketOptions() will hand the socket off to
        // an appropriate processor if successful
        if (!setSocketOptions(socket)) {
            closeSocket(socket);
        }
    } else {
        closeSocket(socket);
    }

    // NioEndpoint.setSocketOptions
    protected boolean setSocketOptions(SocketChannel socket) {
        // Process the connection
        try {
            //disable blocking, APR style, we are gonna be polling it
            socket.configureBlocking(false);
            Socket sock = socket.socket();
            socketProperties.setProperties(sock);
            NioChannel channel = nioChannels.pop();
            if (channel == null) {
                SocketBufferHandler bufhandler = new SocketBufferHandler(
                        socketProperties.getAppReadBufSize(),
                        socketProperties.getAppWriteBufSize(),
                        socketProperties.getDirectBuffer());
                if (isSSLEnabled()) {
                    channel = new SecureNioChannel(socket, bufhandler, selectorPool, this);
                } else {
                    channel = new NioChannel(socket, bufhandler);
                }
            } else {
                channel.setIOChannel(socket);
                channel.reset();
            }
            // Add it to the Poller's queue
            getPoller0().register(channel);
        }
        ....
        return true;
    }

    // NioEndpoint.Poller#register()
    public void register(final NioChannel socket) {
        socket.setPoller(this);
        NioSocketWrapper ka = new NioSocketWrapper(socket, NioEndpoint.this);
        socket.setSocketWrapper(ka);
        ka.setPoller(this);
        ka.setReadTimeout(getSocketProperties().getSoTimeout());
        ka.setWriteTimeout(getSocketProperties().getSoTimeout());
        ka.setKeepAliveLeft(NioEndpoint.this.getMaxKeepAliveRequests());
        ka.setSecure(isSSLEnabled());
        ka.setReadTimeout(getConnectionTimeout());
        ka.setWriteTimeout(getConnectionTimeout());
        // Take a PollerEvent from the cache to avoid re-creating objects; a reset is enough
        PollerEvent r = eventCache.pop();
        ka.interestOps(SelectionKey.OP_READ);//this is what OP_REGISTER turns into.
        if ( r==null) r = new PollerEvent(socket,ka,OP_REGISTER);
        else r.reset(socket,ka,OP_REGISTER);
        // Enqueue the event
        addEvent(r);
    }

Poller: in the Acceptor step above, the PollerEvent was placed in the SynchronizedQueue inside the Poller class, and the Poller thread takes events off that queue. A PollerEvent essentially carries a SocketChannel, and a SocketChannel must be registered with a Selector before it can show up in selectedKeys, so the Poller thread necessarily performs that registration (see the events() method). The run() method of PollerEvent does exactly this:

    @Override
    public void run() {
        if (interestOps == OP_REGISTER) {
            try {
                // Register the SocketChannel with the Selector
                socket.getIOChannel().register(
                        socket.getPoller().getSelector(), SelectionKey.OP_READ, socketWrapper);
            } catch (Exception x) {
                log.error(sm.getString("endpoint.nio.registerFail"), x);
            }
        }
        ....
    }

Once started, the Poller pulls the current head node from the SynchronizedQueue. That node is a PollerEvent (wrapping a SocketChannel); the Poller calls its run() method shown above to register it with the Selector, then calls Selector.selectedKeys() and examines and handles each key. The code executed by the Poller thread is as follows:

    @Override
    public void run() {
        // Loop until destroy() is called
        while (true) {
            boolean hasEvents = false;
            .... // take the head node off the queue and register it with the Selector
            //either we timed out or we woke up, process events first
            if ( keyCount == 0 ) hasEvents = (hasEvents | events());
            Iterator<SelectionKey> iterator =
                    keyCount > 0 ? selector.selectedKeys().iterator() : null;
            // Walk through the collection of ready keys and dispatch
            // any active event.
            while (iterator != null && iterator.hasNext()) {
                SelectionKey sk = iterator.next();
                NioSocketWrapper attachment = (NioSocketWrapper)sk.attachment();
                // Attachment may be null if another thread has called
                // cancelledKey()
                if (attachment == null) {
                    iterator.remove();
                } else {
                    iterator.remove();
                    processKey(sk, attachment);
                }
            }//while
            //process timeouts
            timeout(keyCount,hasEvents);
        }//while
        getStopLatch().countDown();
    }
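The hand-off from the accepting side to the Poller, followed by the selector loop, can be reproduced in miniature with the JDK alone. The sketch below is an assumption-laden illustration: `MiniPollerSketch`, `register`, and `pollOnce` are invented names, a `java.nio.channels.Pipe` stands in for a client socket, and a plain `Runnable` plays the role of PollerEvent.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.channels.Pipe;
import java.nio.channels.SelectableChannel;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;
import java.util.Queue;
import java.util.concurrent.ConcurrentLinkedQueue;

/** Hypothetical miniature of the event queue plus selector loop. */
final class MiniPollerSketch {
    private final Selector selector;
    private final Queue<Runnable> events = new ConcurrentLinkedQueue<>();

    MiniPollerSketch() {
        try {
            selector = Selector.open();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    /** Accepting side: queue a registration event and wake the selector. */
    void register(SelectableChannel channel, Object attachment) {
        events.add(() -> {
            try {
                channel.configureBlocking(false);
                channel.register(selector, SelectionKey.OP_READ, attachment);
            } catch (IOException e) {
                throw new UncheckedIOException(e);
            }
        });
        selector.wakeup();
    }

    /** One loop iteration: drain events, select, return attachments of readable keys. */
    List<Object> pollOnce(long timeoutMillis) {
        Runnable event;
        while ((event = events.poll()) != null) {
            event.run();
        }
        try {
            selector.select(timeoutMillis);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        List<Object> ready = new ArrayList<>();
        Iterator<SelectionKey> it = selector.selectedKeys().iterator();
        while (it.hasNext()) {
            SelectionKey key = it.next();
            it.remove(); // the selected-key set is never cleared automatically
            if (key.isValid() && key.isReadable()) {
                ready.add(key.attachment());
            }
        }
        return ready;
    }

    static List<Object> demo() {
        MiniPollerSketch poller = new MiniPollerSketch();
        try {
            Pipe pipe = Pipe.open();
            poller.register(pipe.source(), "connection-1");
            pipe.sink().write(ByteBuffer.wrap(new byte[] {42}));
            List<Object> ready = poller.pollOnce(1000);
            // Retry a few times in case the first select returned early.
            for (int i = 0; i < 5 && ready.isEmpty(); i++) {
                ready = poller.pollOnce(1000);
            }
            pipe.sink().close();
            pipe.source().close();
            poller.selector.close();
            return ready;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(demo());
    }
}
```

Registering through a queue rather than directly from the accepting thread keeps all interaction with the selector's key set on the polling thread, which is the same division of labor the listings above show.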

processKey mainly decides whether the event is a read or a write; this is the backbone of Tomcat's read/write handling. It in turn calls processSocket, so let's look at that method:

    public boolean processSocket(SocketWrapperBase socketWrapper,
            SocketEvent event, boolean dispatch) {
        try {
            ....
            // processorCache is an object cache, again to avoid re-creating objects
            SocketProcessorBase sc = processorCache.pop();
            if (sc == null) {
                sc = createSocketProcessor(socketWrapper, event);
            } else {
                sc.reset(socketWrapper, event);
            }
            Executor executor = getExecutor();
            if (dispatch && executor != null) {
                executor.execute(sc);
            } else {
                sc.run();
            }
        } catch (RejectedExecutionException ree) {
            getLog().warn(sm.getString("endpoint.executor.fail", socketWrapper) , ree);
            return false;
        } catch (Throwable t) {
            ExceptionUtils.handleThrowable(t);
            // This means we got an OOM or similar creating a thread, or that
            // the pool and its queue are full
            getLog().error(sm.getString("endpoint.process.fail"), t);
            return false;
        }
        return true;
    }

This method does one thing: it obtains a SocketProcessor task (from the cache, or newly created) and runs it, normally on the executor, to process the SocketWrapper. To get straight to the point, let's look at the method that is ultimately called, NioEndpoint.SocketProcessor#doRun():

    protected void doRun() {
        NioChannel socket = socketWrapper.getSocket();
        SelectionKey key = socket.getIOChannel().keyFor(socket.getPoller().getSelector());
        try {
            int handshake = -1;
            ....
            if (handshake == 0) {
                SocketState state = SocketState.OPEN;
                // Process the request from this socket
                if (event == null) {
                    state = getHandler().process(socketWrapper, SocketEvent.OPEN_READ);
                } else {
                    // Find the matching ConnectionHandler to process it
                    state = getHandler().process(socketWrapper, event);
                }
                if (state == SocketState.CLOSED) {
                    close(socket, key);
                }
            } else if (handshake == -1 ) {
                close(socket, key);
            } else if (handshake == SelectionKey.OP_READ){
                socketWrapper.registerReadInterest();
            } else if (handshake == SelectionKey.OP_WRITE){
                socketWrapper.registerWriteInterest();
            }
        }
        ....
    }

A brief aside. As described earlier, the Connector delegates protocol-specific handling to different ProtocolHandler implementations. AbstractProtocol, the abstract base class of the ProtocolHandler implementations, holds an AbstractEndpoint field. When requests for different protocols are processed, the shared logic lives mostly in these two abstract classes, and the parts that differ are implemented in the concrete subclasses. This abstraction makes the design easier to extend: supporting a new protocol only requires extending these abstract classes.
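The split between shared logic in an abstract class and protocol-specific steps in subclasses is the template method pattern. A minimal sketch follows; every class name here is hypothetical, and `LineProtocol` is a made-up "new protocol", not something Tomcat ships.

```java
import java.util.Locale;

/** Hypothetical template-method sketch; none of these types exist in Tomcat. */
final class ProtocolSketch {
    abstract static class Base {
        /** Shared flow, identical for every protocol. */
        final String handle(String raw) {
            String message = decode(raw);          // protocol-specific step
            return "[" + name() + "] " + message;  // shared step
        }

        protected abstract String name();

        protected abstract String decode(String raw);
    }

    /** Keeps only the first line, roughly what an HTTP request line would be. */
    static final class HttpLike extends Base {
        @Override
        protected String name() {
            return "http";
        }

        @Override
        protected String decode(String raw) {
            int end = raw.indexOf("\r\n");
            return end < 0 ? raw : raw.substring(0, end);
        }
    }

    /** Adding a protocol means adding a subclass; the shared flow is untouched. */
    static final class LineProtocol extends Base {
        @Override
        protected String name() {
            return "line";
        }

        @Override
        protected String decode(String raw) {
            return raw.trim().toUpperCase(Locale.ROOT);
        }
    }

    public static void main(String[] args) {
        System.out.println(new HttpLike().handle("GET / HTTP/1.1\r\nHost: x\r\n\r\n"));
        System.out.println(new LineProtocol().handle(" ping \n"));
    }
}
```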

Back to the main thread. Let's look at AbstractProtocol.ConnectionHandler#process:

    @Override
    public SocketState process(SocketWrapperBase wrapper, SocketEvent status) {
        S socket = wrapper.getSocket();
        Processor processor = connections.get(socket);
        if (processor != null) {
            // Make sure an async timeout doesn't fire
            getProtocol().removeWaitingProcessor(processor);
        } else if (status == SocketEvent.DISCONNECT || status == SocketEvent.ERROR) {
            // Nothing to do. Endpoint requested a close and there is no
            // longer a processor associated with this socket.
            return SocketState.CLOSED;
        }
        ContainerThreadMarker.set();
        try {
            if (processor == null) {
                String negotiatedProtocol = wrapper.getNegotiatedProtocol();
                if (negotiatedProtocol != null) {
                    UpgradeProtocol upgradeProtocol =
                            getProtocol().getNegotiatedProtocol(negotiatedProtocol);
                    if (upgradeProtocol != null) {
                        processor = upgradeProtocol.getProcessor(
                                wrapper, getProtocol().getAdapter());
                    } else if (negotiatedProtocol.equals("http/1.1")) {
                        // Explicitly negotiated the default protocol.
                        // Obtain a processor below.
                    } else {
                        if (getLog().isDebugEnabled()) {
                            getLog().debug(sm.getString(
                                "abstractConnectionHandler.negotiatedProcessor.fail",
                                negotiatedProtocol));
                        }
                        return SocketState.CLOSED;
                        /*
                         * To replace the code above once OpenSSL 1.1.0 is
                         * used.
                        // Failed to create processor. This is a bug.
                        throw new IllegalStateException(sm.getString(
                            "abstractConnectionHandler.negotiatedProcessor.fail",
                            negotiatedProtocol));
                        */
                    }
                }
            }
            if (processor == null) {
                processor = recycledProcessors.pop();
                if (getLog().isDebugEnabled()) {
                    getLog().debug(sm.getString("abstractConnectionHandler.processorPop",
                            processor));
                }
            }
            if (processor == null) {
                processor = getProtocol().createProcessor();
                register(processor);
            }
            processor.setSslSupport(
                    wrapper.getSslSupport(getProtocol().getClientCertProvider()));
            // Associate the processor with the connection
            connections.put(socket, processor);
            SocketState state = SocketState.CLOSED;
            do {
                // The Processor does the actual work
                state = processor.process(wrapper, status);
                ....
    }

ConnectionHandler acts like a relay station: it ends up calling the Processor for the specific protocol to handle the request. The call first goes through the abstract Processor class, so let's look at the process method of AbstractProcessorLight:

    @Override
    public SocketState process(SocketWrapperBase socketWrapper, SocketEvent status)
            throws IOException {
        ....
        do {
            if (dispatches != null) {
                DispatchType nextDispatch = dispatches.next();
                state = dispatch(nextDispatch.getSocketStatus());
            } else if (status == SocketEvent.DISCONNECT) {
                // Do nothing here, just wait for it to get recycled
            } else if (isAsync() || isUpgrade() || state == SocketState.ASYNC_END) {
                state = dispatch(status);
                if (state == SocketState.OPEN) {
                    // There may be pipe-lined data to read. If the data isn't
                    // processed now, execution will exit this loop and call
                    // release() which will recycle the processor (and input
                    // buffer) deleting any pipe-lined data. To avoid this,
                    // process it now.
                    // Process the socket wrapper
                    state = service(socketWrapper);
                }
            } else if (status == SocketEvent.OPEN_WRITE) {
                // Extra write event likely after async, ignore
                state = SocketState.LONG;
            } else if (status == SocketEvent.OPEN_READ){
                state = service(socketWrapper);
            } else {
                // Default to closing the socket if the SocketEvent passed in
                // is not consistent with the current state of the Processor
                state = SocketState.CLOSED;
            }
            ...
        } while (state == SocketState.ASYNC_END ||
                dispatches != null && state != SocketState.CLOSED);
        return state;
    }

service is an abstract method. Since we are using HTTP here, the implementation is Http11Processor. It does a lot: for example, it turns the buffers into a Request and a Response and hands them to the Adapter. The code is as follows:

    @Override
    public SocketState service(SocketWrapperBase socketWrapper)
            throws IOException {
        RequestInfo rp = request.getRequestProcessor();
        rp.setStage(org.apache.coyote.Constants.STAGE_PARSE);
        // Setting up the I/O
        setSocketWrapper(socketWrapper);
        inputBuffer.init(socketWrapper);
        outputBuffer.init(socketWrapper);
        // Flags
        keepAlive = true;
        openSocket = false;
        readComplete = true;
        boolean keptAlive = false;
        SendfileState sendfileState = SendfileState.DONE;
        while (!getErrorState().isError() && keepAlive && !isAsync() && upgradeToken == null &&
                sendfileState == SendfileState.DONE && !endpoint.isPaused()) {
            .... // a large amount of setup omitted
            // Process the request in the adapter
            if (!getErrorState().isError()) {
                try {
                    rp.setStage(org.apache.coyote.Constants.STAGE_SERVICE);
                    // Hand the request and response over to the Adapter
                    getAdapter().service(request, response);
                    ...
    }

At this point the request has finally been turned into the familiar Request and Response, and both have been handed to the Adapter.

Adapter: as the name suggests, this is the adapter pattern, which converts the interface of one class into another interface that the client expects. So we go straight into the service method of its implementation class, CoyoteAdapter.
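As a reminder of what the adapter pattern looks like in code, here is a small sketch in which a low-level request object is exposed through the interface the container side wants. All types are hypothetical stand-ins: `RawRequest` is not `org.apache.coyote.Request`, and `ContainerRequest` is not the servlet API.

```java
/** Hypothetical adapter-pattern sketch, unrelated to Tomcat's real classes. */
final class AdapterSketch {
    /** Low-level request as the connector side might hold it. */
    static final class RawRequest {
        final String method;
        final String uri;

        RawRequest(String method, String uri) {
            this.method = method;
            this.uri = uri;
        }
    }

    /** The interface the container side expects. */
    interface ContainerRequest {
        String getMethod();

        String getPath();

        String getQueryString();
    }

    /** The adapter: wraps a RawRequest and presents it as a ContainerRequest. */
    static final class RawRequestAdapter implements ContainerRequest {
        private final RawRequest raw;

        RawRequestAdapter(RawRequest raw) {
            this.raw = raw;
        }

        @Override
        public String getMethod() {
            return raw.method;
        }

        @Override
        public String getPath() {
            int q = raw.uri.indexOf('?');
            return q < 0 ? raw.uri : raw.uri.substring(0, q);
        }

        @Override
        public String getQueryString() {
            int q = raw.uri.indexOf('?');
            return q < 0 ? null : raw.uri.substring(q + 1);
        }
    }

    /** Container-side code that knows only the ContainerRequest interface. */
    static String describe(ContainerRequest request) {
        return request.getMethod() + " " + request.getPath()
                + " query=" + request.getQueryString();
    }

    public static void main(String[] args) {
        RawRequest raw = new RawRequest("GET", "/servlet/myServlet?id=7");
        System.out.println(describe(new RawRequestAdapter(raw)));
    }
}
```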

3. The Container

Continuing with the CoyoteAdapter#service() method from above, the code is as follows:

    @Override
    public void service(org.apache.coyote.Request req, org.apache.coyote.Response res)
            throws Exception {
        ... // convert the coyote Request into the Connector's request
        try {
            // Parse and set Catalina and configuration specific
            // request parameters
            postParseSuccess = postParseRequest(req, request, res, response);
            if (postParseSuccess) {
                //check valves if we support async
                request.setAsyncSupported(
                        connector.getService().getContainer().getPipeline().isAsyncSupported());
                // Calling the container
                // Enter the pipeline and run each valve's invoke method, another major part of Tomcat.
                // Execution ends at the basic valve, StandardWrapperValve, which dispatches to the matching Servlet.
                connector.getService().getContainer().getPipeline().getFirst().invoke(
                        request, response);
            }
            ....
    }

The most important part of this method is: connector.getService().getContainer().getPipeline().getFirst().invoke(request, response);

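Pipeline plus Valve is what moves the request through the Container until it finally reaches the Wrapper, the class that wraps a servlet. The flow is as follows:

Engine, Host, Context, and Wrapper are all Containers, and each has a standard implementation: StandardEngine, StandardHost, StandardWrapper, and so on. Each Container also has a standard Valve implementation, such as StandardEngineValve or StandardWrapperValve, and when the Container is initialized that Valve is added to the "KFC deluxe combo meal" (that is, the StandardPipeline). The last one is StandardWrapperValve; here is how it is added to the StandardPipeline: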

    public StandardWrapper() {
        super();
        swValve=new StandardWrapperValve();
        // Add it to the pipeline as the basic valve
        pipeline.setBasic(swValve);
        broadcaster = new NotificationBroadcasterSupport();
    }
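How a pipeline with a basic valve chains its invoke calls can be sketched as a small linked list. This is a simplified illustration under stated assumptions: `Valve` and `Pipeline` below are toy classes, `setBasic` is assumed to be called before any `addValve` (as in the constructor above), and a list of names stands in for the request and response.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical pipeline sketch: ordinary valves run first, the basic valve runs last. */
final class PipelineSketch {
    static final class Valve {
        final String name;
        Valve next;

        Valve(String name) {
            this.name = name;
        }

        /** Records the visit, then passes control to the next valve if there is one. */
        void invoke(List<String> trace) {
            trace.add(name);
            if (next != null) {
                next.invoke(trace);
            }
        }
    }

    static final class Pipeline {
        private Valve first;
        private Valve basic;

        /** Assumed to be called before addValve. */
        void setBasic(Valve valve) {
            basic = valve;
        }

        /** Inserts the valve just before the basic valve, which stays last. */
        void addValve(Valve valve) {
            if (first == null) {
                first = valve;
            } else {
                Valve current = first;
                while (current.next != basic) {
                    current = current.next;
                }
                current.next = valve;
            }
            valve.next = basic;
        }

        /** With no ordinary valves, the basic valve is also the first one. */
        Valve getFirst() {
            return first != null ? first : basic;
        }
    }

    static List<String> demo() {
        Pipeline pipeline = new Pipeline();
        pipeline.setBasic(new Valve("StandardWrapperValve"));
        pipeline.addValve(new Valve("access-log"));
        pipeline.addValve(new Valve("auth"));
        List<String> trace = new ArrayList<>();
        pipeline.getFirst().invoke(trace);
        return trace;
    }

    public static void main(String[] args) {
        System.out.println(demo());
    }
}
```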

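Valves are added to the pipeline during initialization. The first one added is both first and basic; after addValve is called, first moves forward while basic always points to the last valve. Once everything is added, each valve's invoke method runs in turn. Here we look only at the last and most important valve, the invoke method of StandardWrapperValve: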

    @Override
    public final void invoke(Request request, Response response)
            throws IOException, ServletException {
        .....
        // Allocate a servlet instance to process this request
        try {
            if (!unavailable) {
                // Get the servlet that handles this request
                servlet = wrapper.allocate();
            }
        }
        .....
        // Create the filter chain for this request
        ApplicationFilterChain filterChain =
                ApplicationFilterFactory.createFilterChain(request, wrapper, servlet);
        // Call the filter chain for this request
        // NOTE: This also calls the servlet's service() method
        try {
            if ((servlet != null) && (filterChain != null)) {
                // Swallow output if needed
                if (context.getSwallowOutput()) {
                    try {
                        SystemLogHandler.startCapture();
                        if (request.isAsyncDispatching()) {
                            request.getAsyncContextInternal().doInternalDispatch();
                        } else {
                            // Enter the familiar call chain, ApplicationFilterChain
                            filterChain.doFilter(request.getRequest(),
                                    response.getResponse());
                        }
                        .....
    }

This method finds the Servlet for the request and creates a FilterChain for it. filterChain.doFilter eventually takes us into the Servlet's service method. First look at internalDoFilter, which doFilter calls:

    private void internalDoFilter(ServletRequest request,
                                  ServletResponse response)
            throws IOException, ServletException {
        ....
        // We fell off the end of the chain -- call the servlet instance
        try {
            if (ApplicationDispatcher.WRAP_SAME_OBJECT) {
                lastServicedRequest.set(request);
                lastServicedResponse.set(response);
            }
            if (request.isAsyncSupported() && !servletSupportsAsync) {
                request.setAttribute(Globals.ASYNC_SUPPORTED_ATTR,
                        Boolean.FALSE);
            }
            // Use potentially wrapped request from this point
            if ((request instanceof HttpServletRequest) &&
                    (response instanceof HttpServletResponse) &&
                    Globals.IS_SECURITY_ENABLED ) {
                final ServletRequest req = request;
                final ServletResponse res = response;
                Principal principal =
                        ((HttpServletRequest) req).getUserPrincipal();
                Object[] args = new Object[]{req, res};
                SecurityUtil.doAsPrivilege("service",
                                           servlet,
                                           classTypeUsedInService,
                                           args,
                                           principal);
            } else {
                // Enter the service method
                servlet.service(request, response);
            }
            ....
    }

In this method we finally see servlet.service(); the fog has lifted. The Servlet here is an HttpServlet. Its service(ServletRequest, ServletResponse) method casts the request to HttpServletRequest and the response to HttpServletResponse, then calls the overloaded service(request, response), which decides whether to use doGet, doPost, and so on. The two methods look like this:

    @Override
    public void service(ServletRequest req, ServletResponse res)
            throws ServletException, IOException {
        HttpServletRequest request;
        HttpServletResponse response;
        try {
            request = (HttpServletRequest) req;
            response = (HttpServletResponse) res;
        } catch (ClassCastException e) {
            throw new ServletException("non-HTTP request or response");
        }
        service(request, response);
    }

    // Dispatch to doGet, doPost, and so on
    protected void service(HttpServletRequest req, HttpServletResponse resp)
            throws ServletException, IOException {
        String method = req.getMethod();
        if (method.equals(METHOD_GET)) {
            long lastModified = getLastModified(req);
            if (lastModified == -1) {
                // servlet doesn't support if-modified-since, no reason
                // to go through further expensive logic
                doGet(req, resp);
            } else {
                long ifModifiedSince;
                try {
                    ifModifiedSince = req.getDateHeader(HEADER_IFMODSINCE);
                } catch (IllegalArgumentException iae) {
                    // Invalid date header - proceed as if none was set
                    ifModifiedSince = -1;
                }
                if (ifModifiedSince < (lastModified / 1000 * 1000)) {
                    // If the servlet mod time is later, call doGet()
                    // Round down to the nearest second for a proper compare
                    // A ifModifiedSince of -1 will always be less
                    maybeSetLastModified(resp, lastModified);
                    doGet(req, resp);
                } else {
                    resp.setStatus(HttpServletResponse.SC_NOT_MODIFIED);
                }
            }
        } else if (method.equals(METHOD_HEAD)) {
            long lastModified = getLastModified(req);
            maybeSetLastModified(resp, lastModified);
            doHead(req, resp);
        } else if (method.equals(METHOD_POST)) {
            doPost(req, resp);
        } else if (method.equals(METHOD_PUT)) {
            doPut(req, resp);
        ....
    }

At this point we can run our business logic in doGet, doPost, and so on. As for how this connects to Spring, look at Spring's FrameworkServlet class: it overrides the service method and ultimately enters DispatcherServlet, where every request goes through doDispatch and is dispatched to a handler for business processing. That concludes the request flow; now a brief look at the response.

Response: when discussing NioEndpoint we mentioned reading and writing buffers. That work is done mainly in the NioSocketWrapper class, which has a doWrite method used to output the Response. The code is as follows:

    @Override
    protected void doWrite(boolean block, ByteBuffer from) throws IOException {
        long writeTimeout = getWriteTimeout();
        Selector selector = null;
        try {
            selector = pool.get();
        } catch (IOException x) {
            // Ignore
        }
        try {
            pool.write(from, getSocket(), selector, writeTimeout, block);
            if (block) {
                // Make sure we are flushed
                do {
                    if (getSocket().flush(true, selector, writeTimeout)) {
                        break;
                    }
                } while (true);
            }
            updateLastWrite();
        } finally {
            if (selector != null) {
                pool.put(selector);
            }
        }
    }

That is essentially the end of a complete request and response flow.
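The core of a blocking write, "keep writing until the buffer is drained", can be sketched with plain JDK channels. This is not Tomcat's implementation: `BlockingWriteSketch` and `writeFully` are invented names, and an in-memory channel stands in for the socket. With a real non-blocking socket, `write` may return 0, and one would wait on a selector for write readiness (as the listing above does through its selector pool) instead of spinning.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.channels.Channels;
import java.nio.channels.WritableByteChannel;
import java.nio.charset.StandardCharsets;

/** Hypothetical sketch of draining a ByteBuffer into a channel. */
final class BlockingWriteSketch {
    /** Writes until the buffer has no bytes left; returns the number of bytes written. */
    static int writeFully(WritableByteChannel channel, ByteBuffer from) {
        int total = 0;
        try {
            while (from.hasRemaining()) {
                total += channel.write(from);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return total;
    }

    static String demo() {
        ByteArrayOutputStream sink = new ByteArrayOutputStream();
        String response = "HTTP/1.1 200 OK\r\nContent-Length: 13\r\n\r\nPepsi is Cool";
        ByteBuffer buffer = ByteBuffer.wrap(response.getBytes(StandardCharsets.UTF_8));
        writeFully(Channels.newChannel(sink), buffer);
        return new String(sink.toByteArray(), StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        System.out.println(demo());
    }
}
```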