hadoop学习之-使用ODCH工具实现oralce外部表访问HDFS数据文件

实验说明:

本实验目的是通过使用Oracle的HDFS直接连接器从oracle数据库的外部表中直接访问HDFS的数据文件。支持的数据文件格式取决于ORACLE_LOADER的驱动程序。

一、 安装准备

1. 软件下载:

Oracle Big Data Connectors:ODCH

http://www.oracle.com/technetwork/bdc/big-data-connectors/downloads/index.html

Jave SE 下载地址:

http://www.oracle.com/technetwork/java/javase/downloads/jdk6u38-downloads-1877406.html

Oracle11g下载地址:

http://www.oracle.com/technetwork/database/enterprise-edition/downloads/index.html

Oracle Enterprise Linux下载地址:需要注册oracle帐号才能下载

https://edelivery.oracle.com/linux

2. 安装环境

软件版本:

OS:Oracle Enterprise Linux 5.6

Oracle DB:Oracle 11.2.0.2

Jave SE:jdk1.6.0_18

ODCH:Oracle SQL Connector for Hadoop Distributed File System Release 2.0 for Linux

Hadoop:hadoop-0.20.2

Hadoop\Oracle架构:

| 主机名 |

IP |

系统版本 |

Oracle node |

Hadoop node |

hadoop进程名 |

| gc |

192.168.2.100 |

OEL5.6 |

Oracle11.2.0.2 |

master |

namenode,jobtracker |

| rac1 |

192.168.2.101 |

OEL5.6 |

/ |

slave |

datanode,tasktracker |

| rac2 |

192.168.2.102 |

OEL5.6 |

/ |

slave |

datanode,tasktracker |

主要环境变量:

| 变量名 |

变量值 |

| ORACLE_HOME |

/u01/app/ora11g/product/11.2.0/db_1 |

| ORACLE_SID |

gcdb |

| HADOOP_HOME |

/home/grid/hadoop-0.20.2 |

| HADOOP_CONF_DIR |

$HADOOP_HOME/conf |

| OSCH_HOME |

/opt/odch/orahdfs-2.0.0 |

| ORAHDFS_JAR |

$OSCH_HOME/jlib/orahdfs.jar |

| HDFS_BIN_PATH |

/opt/odch/orahdfs-2.0.0 |

| HADOOP_CLASSPATH |

$OSCH_HOME/jlib/* |

3. 软件安装

linux和oracle安装见:

http://f.dataguru.cn/thread-55231-1-1.html

Hadoop安装见:

http://blog.csdn.net/lichangzai/article/details/8206834

二、 通过ODCH把HDFS->ORACLE

1. 上传odch文件并解压

[grid@gc odch]$ pwd

/opt/odch

[grid@gc odch]$ unzip orahdfs-2.0.0.zip

2. 修改环境变量

--添加hadoop\oracle\ODCH主要要环境变量

[grid@gc ~]$ vi .bash_profile

export ORACLE_BASE=/u01/app/ora11g

export ORACLE_HOME=$ORACLE_BASE/product/11.2.0/db_1

export ORACLE_SID=gcdb

export PATH=/usr/sbin:$PATH

export PATH=$ORACLE_HOME/bin:$PATH

export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib64:/usr/lib64:/usr/local/lib64:/usr/X11R6/lib64/

export CLASSPATH=$ORACLE_HOME/JRE:$ORACLE_HOME/jlib:$ORACLE_HOME/rdbms/jlib

export HADOOP_HOME=/home/grid/hadoop-0.20.2

PATH=$PATH:$HOME/bin:/usr/java/jdk1.6.0_18/bin: $HADOOP_HOME/bin

export HADOOP_CONF_DIR=$HADOOP_HOME/conf

export OSCH_HOME=/opt/odch/orahdfs-2.0.0

export ORAHDFS_JAR=$OSCH_HOME/jlib/orahdfs.jar

export HDFS_BIN_PATH=$OSCH_HOME/bin

export JAVA_HOME=/usr

export PATH

3. 修改用户属组

说明:因为之前的hadoop安装在grid系统用户下,而oracle安装在了oracle系统用户下,为了操作方便,使grid用户有操作oracle操作库的权限,现在修改grid系统用户属组,添加到和oracle同样的用户组下。

--查看之前的用户属组

[root@gc ~]# id grid

uid=501(grid) gid=54326(hadoop) groups=54326(hadoop)

[root@gc ~]# id oracle

uid=500(oracle) gid=54321(oinstall) groups=54321(oinstall),54322(dba),54323(oper),54324(asmadmin)

--修改用户属组

[root@gc ~]# vi /etc/group

oinstall:x:54321:grid

dba:x:54322:oracle,grid

oper:x:54323:oracle,grid

asmadmin:x:54324:oracle,grid

--再次查看用户属组

[root@gc ~]# id grid

uid=501(grid) gid=54326(hadoop) groups=54326(hadoop),54322(dba),54321(oinstall),54324(asmadmin),54323(oper)

[root@gc ~]# id oracle

uid=500(oracle) gid=54321(oinstall) groups=54321(oinstall),54322(dba),54323(oper),54324(asmadmin)

注意:添加用户的用户组后,可能需要启系统才能真正生效,虽然id命令已经显示在所在的用户组。

--测试grid用户能访问oracle

[grid@gc ~]$ sqlplus "/as sysdba"

SQL*Plus: Release 11.2.0.2.0 Production on 星期三 1月 23 03:36:21 2013

Copyright (c) 1982, 2010, Oracle. All rights reserved.

连接到:

Oracle Database 11g Enterprise Edition Release 11.2.0.2.0 - 64bit Production

With the Partitioning, OLAP, Data Mining and Real Application Testing options

SQL> show parameter db_name;

NAME TYPE VALUE

------------------------------------ ----------- ------------------------------

db_name string gcdb

4. 配置hdfs_stream script文件

--添加以下内容

[grid@gc ~]$ vi ${OSCH_HOME}/bin/hdfs_stream

export HADOOP_HOME=/home/grid/hadoop-0.20.2

export OSCH_HOME=/opt/odch/orahdfs-2.0.0

export PATH=/usr/bin:/bin:$HADOOP_HOME/bin

5. 确保oracle用户对$ODCH_LOG_DIR/logs目录有读写权限

因为Oracle用户需要在 {$ODCH_LOG_DIR} 目录中创建log/bad文件,所以要确保oracle用户对$ODCH_LOG_DIR/log目录有读写权限

--修改目录权限,测试读写文件

[root@gc opt]# chmod -R 777 odch/

[root@gc opt]# su - oracle

[oracle@gc ~]$ cd /opt/odch/orahdfs-2.0.0/log/

[oracle@gc log]$ touch ora_access_test

[oracle@gc log]$ rm ora_access_test

6. 配置操作系统目录和数据库的Directory对象

--创建所用的系统目录

[root@gc ~]# mkdir -p /opt/odch/orahdfs-2.0.0/logs

[root@gc ~]# mkdir -p /opt/odch/orahdfs-2.0.0/extdir

[root@gc ~]# chmod 777 /opt/odch/orahdfs-2.0.0/logs

[root@gc ~]# chmod 777 /opt/odch/orahdfs-2.0.0/extdir

--创建oracle Directory对象

[root@gc ~]# su - grid

[grid@gc ~]$ sqlplus "/as sysdba"

SQL*Plus: Release 11.2.0.2.0 Production on 星期三 1月 23 05:35:48 2013

Copyright (c) 1982, 2010, Oracle. All rights reserved.

连接到:

Oracle Database 11g Enterprise Edition Release 11.2.0.2.0 - 64bit Production

With the Partitioning, OLAP, Data Mining and Real Application Testing options

SQL> --可以放在命令行批量执行下面的语句

create or replace directory ODCH_LOG_DIR as '/opt/odch/orahdfs-2.0.0/logs';

grant read, write on directory ODCH_LOG_DIR to SCOTT;

create or replace directory ODCH_DATA_DIR as '/opt/odch/orahdfs-2.0.0/extdir';

grant read, write on directory ODCH_DATA_DIR to SCOTT;

create or replace directory HDFS_BIN_PATH as '/opt/odch/orahdfs-2.0.0/bin';

grant execute on directory HDFS_BIN_PATH to SCOTT;

grant read, write on directory HDFS_BIN_PATH to SCOTT;

--目录对象说明

HDFS_BIN_PATH::hdfs_stream脚本所在目录.

HDFS_DATA_DIR:用来存放“位置文件”(location files)的目录。“位置文件”(location files) 是一个配置文件,里面包含HDFS的文件路径/文件名以及文件编码格式。

ODCH_LOG_DIR:Oracle用来存放外部表的log/bad等文件的目录.

7. 创建oracle外部表

SQL> conn scott/tiger

已连接。

SQL> --可以放在命令行批量执行下面的语句

CREATE TABLE admin_ext_employees

(employee_id NUMBER(4),

first_name VARCHAR2(20),

last_name VARCHAR2(25),

job_id VARCHAR2(10),

manager_id NUMBER(4),

hire_date DATE,

salary NUMBER(8,2),

commission_pct NUMBER(2,2),

department_id NUMBER(4),

email VARCHAR2(25)

)

ORGANIZATION EXTERNAL

(

TYPE ORACLE_LOADER

DEFAULT DIRECTORY ODCH_DATA_DIR

ACCESS PARAMETERS

(

records delimited by newline

preprocessor HDFS_BIN_PATH:hdfs_stream --使用hadoop预处理器

badfile ODCH_LOG_DIR:'empxt%a_%p.bad'

logfile ODCH_LOG_DIR:'empxt%a_%p.log'

fields terminated by ','

missing field values are null

( employee_id, first_name, last_name, job_id, manager_id,

hire_date char date_format date mask "dd-mon-yyyy",

salary, commission_pct, department_id, email

)

)

--在LOCATION对应的2个文件现在还不存在,我们会在下面的步骤中生成

--在LOCATION中使用多个文件,可以使Oracle可以多个程序并行访问HDFS

LOCATION ('empxt1.dat', 'empxt2.dat')

)

PARALLEL

REJECT LIMIT UNLIMITED;

SQL>

Table created

注:外部表使用实例

http://docs.oracle.com/cd/E11882_01/server.112/e25494/tables013.htm#ADMIN12896

8. 在Hadoop中放入示例文件

因ODCH要从Hadoop文件系统中读取数据. 所以我们先要在Hadoop中放入几个的数据文件。

--示例文件内容

[grid@gc ~]$ cat empxt1.dat

360,Jane,Janus,ST_CLERK,121,17-MAY-2001,3000,0,50,jjanus

361,Mark,Jasper,SA_REP,145,17-MAY-2001,8000,.1,80,mjasper

362,Brenda,Starr,AD_ASST,200,17-MAY-2001,5500,0,10,bstarr

363,Alex,Alda,AC_MGR,145,17-MAY-2001,9000,.15,80,aalda

[grid@gc ~]$ cat empxt2.dat

401,Jesse,Cromwell,HR_REP,203,17-MAY-2001,7000,0,40,jcromwel

402,Abby,Applegate,IT_PROG,103,17-MAY-2001,9000,.2,60,aapplega

403,Carol,Cousins,AD_VP,100,17-MAY-2001,27000,.3,90,ccousins

404,John,Richardson,AC_ACCOUNT,205,17-MAY-2001,5000,0,110,jrichard

--先在Hadoop中建立一个目录,然后把empxt*.dat放入该目录中

[grid@gc ~]$ hadoop dfs -mkdir odch_data

[grid@gc ~]$ wc -l empxt*

4 empxt1.dat

4 empxt2.dat

8 总计

[grid@gc ~]$ hadoop dfs -put empxt*.dat odch_data

[grid@gc ~]$ hadoop dfs -ls odch_data

Found 2 items

-rw-r--r-- 2 grid supergroup 228 2013-01-24 23:13 /user/grid/odch_data/empxt1.dat

-rw-r--r-- 2 grid supergroup 252 2013-01-24 23:13 /user/grid/odch_data/empxt2.dat

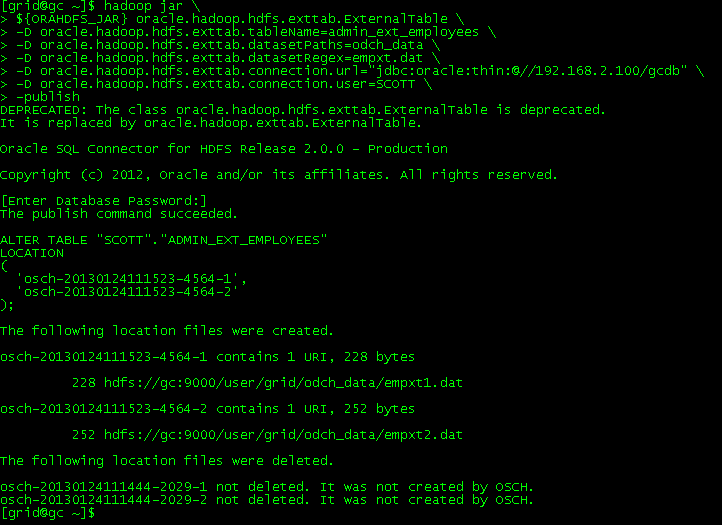

9. 生成“位置文件”

--执行下面的命令

export HADOOP_CLASSPATH="$OSCH_HOME/jlib/*"

hadoop jar \

${ORAHDFS_JAR} oracle.hadoop.hdfs.exttab.ExternalTable \

-D oracle.hadoop.hdfs.exttab.tableName=admin_ext_employees \

-D oracle.hadoop.hdfs.exttab.datasetPaths=odch_data \

-D oracle.hadoop.hdfs.exttab.datasetRegex=empxt.dat \

-D oracle.hadoop.hdfs.exttab.connection.url="jdbc:oracle:thin:@//192.168.2.100/gcdb" \

-D oracle.hadoop.hdfs.exttab.connection.user=SCOTT \

-publish

--输出如图

参数说明:

ExternalTable:使用hadoop ExternalTable命令工具

-D:指定相关参数

tableName:外部表名字

datasetPaths:源数据存放路径(HDFS)

datasetRegex:数据源格式

connection.url:oracle数据库连接串

connection.user:数据库用户名scott

命令执行后还要输入用户名密码:tiger

注意:

上面的输出结果中提示下面的内容,

DEPRECATED: The class oracle.hadoop.hdfs.exttab.ExternalTable is deprecated.

It is replaced by oracle.hadoop.exttab.ExternalTable.

意思类已经过时了,但是测试还是能用的,不用管它。被替换的新类使用方法可以见官方文档。

10. 查看“位置文件”内容

[grid@gc ~]$ cd /opt/odch/orahdfs-2.0.0/extdir/

[grid@gc extdir]$ cat osch-20130124045151-3641-1

参数说明:

CompressionCodec:HDFS 文件指向

默认值 hdfs://gc:9000/user/grid/odch_data/empxt1.dat

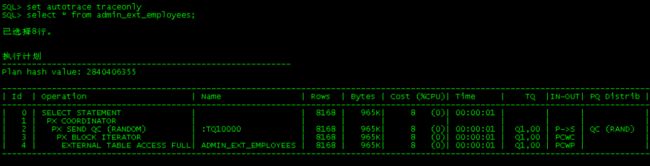

11. 检查结果

--查看外部表数据

--查看执行计划

--把外部表导入到数据库当中

SQL> create table ext1 as select * from admin_ext_employees;

表已创建。

到此完成了从HDFS数据到oracle数据库的导入!

三、 经验总结

1. 各种目录权限

如:odch和hadoop最好不要安装在/home/usr下,因linux系统的用户之间的/home/usr是不相互访问的,即使/home/usr设置了比较大的访问权限

2. 各种软件版本

可以参考:

http://www.dataguru.cn/forum.php?mod=viewthread&tid=39412

四、 参考资料

http://docs.oracle.com/cd/E37231_01/doc.20/e36961/sqlch.htm#CHDHHHAJ

http://docs.oracle.com/cd/E11882_01/server.112/e25494/tables013.htm#ADMIN12896

http://docs.oracle.com/cd/E37231_01/doc.20/e36961/odi.htm

http://www.dataguru.cn/forum.php?mod=viewthread&tid=39412

tigerfish ppt

五、 遇到的问题

1. 执行生成“位置文件”命令后,不显示结果跳出

现象:

当执行生成“位置文件”命令后,没有报错,没提示输入密码,也没有结果跳出执行。

[grid@gc logs]$ hadoop jar \

> ${ORAHDFS_JAR} oracle.hadoop.hdfs.exttab.ExternalTable \

> -D oracle.hadoop.hdfs.exttab.tableName=odch_ext_table \

> -D oracle.hadoop.hdfs.exttab.datasetPaths=odch_data \

> -D oracle.hadoop.hdfs.exttab.datasetRegex=odch1.dat \

> -D oracle.hadoop.hdfs.exttab.connection.url="jdbc:oracle:thin:@//192.168.2.100:1521/gcdb" \

> -D oracle.hadoop.hdfs.exttab.connection.user=SCOTT \

> -publish

DEPRECATED: The class oracle.hadoop.hdfs.exttab.ExternalTable is deprecated.

It is replaced by oracle.hadoop.exttab.ExternalTable.

Oracle SQL Connector for HDFS Release 2.0.0 - Production

Copyright (c) 2012, Oracle and/or its affiliates. All rights reserved.

解决:

检查执行命令正确无误后,感觉就是环境变量的问题

设置环境变量HADOOP_CLASSPATH后,再次运行命令正常。

export HADOOP_CLASSPATH="$OSCH_HOME/jlib/*"

2. IO 错误: The Network Adapter could not establish the connection

现象:

当使用新版本的ODCH的oracle数据库连接串时,出现下面的错误

[grid@gc ~]$ export HADOOP_CLASSPATH="$OSCH_HOME/jlib/*"

[grid@gc ~]$ hadoop jar \

> ${ORAHDFS_JAR} oracle.hadoop.exttab.ExternalTable \

> -D oracle.hadoop.exttab.tableName=odch_ext_table \

> -D oracle.hadoop.exttab.datasetPaths=odch_data \

> -D oracle.hadoop.exttab.datasetRegex=odch1.dat \

> -D oracle.hadoop.exttab.connection.url=jdbc:oracle:thin:@localhost:1521/gcdb \

> -D oracle.hadoop.exttab.connection.user=SCOTT \

> -publish

Oracle SQL Connector for HDFS Release 2.0.0 - Production

Copyright (c) 2012, Oracle and/or its affiliates. All rights reserved.

[Enter Database Password:]

java.sql.SQLRecoverableException: IO 错误: The Network Adapter could not establish the connection

oracle.net.ns.NetException: The Network Adapter could not establish the connection

To get a complete stacktrace, rerun the command with property: oracle.hadoop.exttab.printStackTrace=true

--使用printStackTrace参数出现帮助信息

[grid@gc ~]$ hadoop jar \

> ${ORAHDFS_JAR} oracle.hadoop.exttab.ExternalTable \

> -D oracle.hadoop.exttab.tableName=odch_ext_table \

> -D oracle.hadoop.exttab.datasetPaths=odch_data \

> -D oracle.hadoop.exttab.datasetRegex=odch1.dat \

> -D oracle.hadoop.exttab.connection.url=jdbc:oracle:thin:@localhost:1521/gcdb \

> -D oracle.hadoop.exttab.connection.user=SCOTT \

> -D oracle.hadoop.exttab.printStackTrace=true\

> -publish

Oracle SQL Connector for HDFS Release 2.0.0 - Production

Copyright (c) 2012, Oracle and/or its affiliates. All rights reserved.

Usage: bin/hadoop jar orahdfs.jar oracle.hadoop.exttab.ExternalTable [genericOptions] [-publish | -createTable [--noexecute]] | [-listLocations [--details]] | [-getDDL]

-createTable: creates an external table and also publishes HDFS data URIs to location files of the table

-publish: publishes the HDFS data URIs to the location files of an external table

-listLocations: prints the location files of an external table

-getDDL: prints the DDL of an external table

[genericOptions] : the generic command-line options supported by the Hadoop Tool interface

[options] : the following options can be used with thecommands

--noexecute : prints the execution plan of the -createTable or -publish command

--details : prints a detailed listing of the location files for the -listLocations command.

Example:

$HADOOP_HOME/bin/hadoop jar orahdfs.jar oracle.hadoop.exttab.ExternalTable -D oracle.hadoop.exttab.tableName=SALES_EXT_TAB -D oracle.hadoop.exttab.dataPaths=hdfs:/user/scott/data/ -D oracle.hadoop.connection.url=jdbc:oracle:thin:@myhost:1521/example.example.com -D oracle.hadoop.connection.user=scott -publish

[grid@gc ~]$

解决:

还是使用旧版本的ODCH连接oracle数据库的方法

hadoop jar \

/opt/odch/orahdfs-2.0.0/jlib/orahdfs.jar oracle.hadoop.hdfs.exttab.ExternalTable \

-D oracle.hadoop.hdfs.exttab.tableName=admin_ext_employees \

-D oracle.hadoop.hdfs.exttab.datasetPaths=odch_data \

-D oracle.hadoop.hdfs.exttab.datasetRegex=empxt.dat \

-D oracle.hadoop.hdfs.exttab.connection.url="jdbc:oracle:thin:@//192.168.2.100:1521/gcdb" \

-D oracle.hadoop.hdfs.exttab.connection.user=SCOTT \

-publish

3. OSCH_HOME is not set

现象:

在执行查询外部时,数据加载错误,在bad文件中出现以下内容

[grid@gc ~]$ cd /opt/odch/orahdfs-2.0.0/logs

[grid@gc logs]$ cat odch_ext_table000_14910.bad

OSCH_HOME is not set

解决:

原因是没有在hdfs_stream文件中设置环境变量

--在hdfs_stream文件中添加如下内容,

export OSCH_HOME=/opt/odch/orahdfs-2.0.0

4. Error opening job jar

现象:

当刚配置完成后命令是正常可用,过了一段时间后,再次执行时,却突然出现下面的错误。

[grid@gc ~]$ hadoop jar \

> ${ORAHDFS_JAR} oracle.hadoop.hdfs.exttab.ExternalTable \

> -D oracle.hadoop.hdfs.exttab.tableName=admin_ext_employees \

> -D oracle.hadoop.hdfs.exttab.datasetPaths=odch_data \

> -D oracle.hadoop.hdfs.exttab.datasetRegex=empxt.dat \

> -D oracle.hadoop.hdfs.exttab.connection.url="jdbc:oracle:thin:@//192.168.2.100/gcdb" \

> -D oracle.hadoop.hdfs.exttab.connection.user=SCOTT \

> -publish

Exception in thread "main" java.io.IOException: Error opening job jar: /opt/odch/orahdfs-2.0.0/jlib/orahdfs.jar

at org.apache.hadoop.util.RunJar.main(RunJar.java:90)

Caused by: java.util.zip.ZipException: error in opening zip file

at java.util.zip.ZipFile.open(Native Method)

at java.util.zip.ZipFile.

at java.util.jar.JarFile.

at java.util.jar.JarFile.

at org.apache.hadoop.util.RunJar.main(RunJar.java:88)

解决:

把orahdfs.jar包从安装文件里再重新copy一份,把原来的jar包覆盖掉,重新运行就好了。

[root@gc jlib]# mv orahdfs.jar /opt/odch/orahdfs-2.0.0/jlib/

mv:是否覆盖“/opt/odch/orahdfs-2.0.0/jlib/orahdfs.jar”? y

[root@gc jlib]# chown grid:hadoop /opt/odch/orahdfs-2.0.0/jlib/orahdfs.jar

[root@gc jlib]# chmod 777 /opt/odch/orahdfs-2.0.0/jlib/orahdfs.jar

[root@gc jlib]# ll /opt/odch/orahdfs-2.0.0/jlib/orahdfs.jar

-rwxrwxrwx 1 grid hadoop 117405 11-29 09:46 /opt/odch/orahdfs-2.0.0/jlib/orahdfs.jar