Trying Out SkyWalking 6.1.0

Today I checked the Apache website: SkyWalking has officially graduated from the Apache Incubator, and the latest version is 6.1.0.

So let's set up the latest version and take a look at its monitoring UI.

As the example, I'll use the sample system on my local machine, running on Windows.

The overall process is:

- 1. Download the SkyWalking package

- 2. Configure SkyWalking

- 3. Configure the agent

- 4. Write the startup scripts

- 5. Start the services and view the UI

1. Download the SkyWalking archive

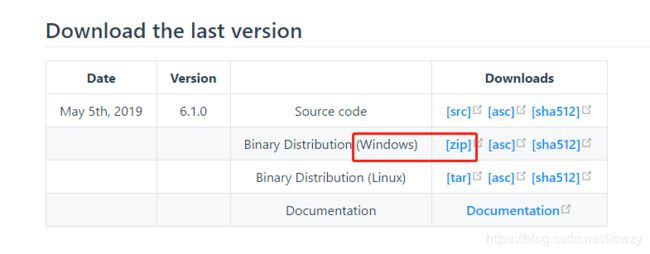

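Download the version we need. Since I'm deploying on Windows, I picked the matching package (for production, just download the corresponding Linux package instead).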

Then extract it to a directory of your choice. Mine is:

D:\softsetup\skywalking-6.1.0

2. Configure SkyWalking

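After extracting, we configure the server side. For now we only set the basic options needed to get the system running, since this is just a first look at the demo.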

The configuration file is application.yml in the D:\softsetup\skywalking-6.1.0\config directory:

    cluster:
      standalone:
      # Please check your ZooKeeper is 3.5+, However, it is also compatible with ZooKeeper 3.4.x. Replace the ZooKeeper 3.5+
      # library the oap-libs folder with your ZooKeeper 3.4.x library.
    #  zookeeper:
    #    nameSpace: ${SW_NAMESPACE:""}
    #    hostPort: ${SW_CLUSTER_ZK_HOST_PORT:localhost:2181}
    #    #Retry Policy
    #    baseSleepTimeMs: ${SW_CLUSTER_ZK_SLEEP_TIME:1000} # initial amount of time to wait between retries
    #    maxRetries: ${SW_CLUSTER_ZK_MAX_RETRIES:3} # max number of times to retry
    #  kubernetes:
    #    watchTimeoutSeconds: ${SW_CLUSTER_K8S_WATCH_TIMEOUT:60}
    #    namespace: ${SW_CLUSTER_K8S_NAMESPACE:default}
    #    labelSelector: ${SW_CLUSTER_K8S_LABEL:app=collector,release=skywalking}
    #    uidEnvName: ${SW_CLUSTER_K8S_UID:SKYWALKING_COLLECTOR_UID}
    #  consul:
    #    serviceName: ${SW_SERVICE_NAME:"SkyWalking_OAP_Cluster"}
    #    # Consul cluster nodes, example: 10.0.0.1:8500,10.0.0.2:8500,10.0.0.3:8500
    #    hostPort: ${SW_CLUSTER_CONSUL_HOST_PORT:localhost:8500}
    core:
      default:
        # Mixed: Receive agent data, Level 1 aggregate, Level 2 aggregate
        # Receiver: Receive agent data, Level 1 aggregate
        # Aggregator: Level 2 aggregate
        role: ${SW_CORE_ROLE:Mixed} # Mixed/Receiver/Aggregator
        restHost: ${SW_CORE_REST_HOST:127.0.0.1}
        restPort: ${SW_CORE_REST_PORT:12800}
        restContextPath: ${SW_CORE_REST_CONTEXT_PATH:/}
        gRPCHost: ${SW_CORE_GRPC_HOST:127.0.0.1}
        gRPCPort: ${SW_CORE_GRPC_PORT:11800}
        downsampling:
          - Hour
          - Day
          - Month
        # Set a timeout on metric data. After the timeout has expired, the metric data will automatically be deleted.
        recordDataTTL: ${SW_CORE_RECORD_DATA_TTL:90} # Unit is minute
        minuteMetricsDataTTL: ${SW_CORE_MINUTE_METRIC_DATA_TTL:90} # Unit is minute
        hourMetricsDataTTL: ${SW_CORE_HOUR_METRIC_DATA_TTL:36} # Unit is hour
        dayMetricsDataTTL: ${SW_CORE_DAY_METRIC_DATA_TTL:45} # Unit is day
        monthMetricsDataTTL: ${SW_CORE_MONTH_METRIC_DATA_TTL:18} # Unit is month
    storage:
      elasticsearch:
        nameSpace: ${SW_NAMESPACE:"find-job"}
        clusterNodes: ${SW_STORAGE_ES_CLUSTER_NODES:localhost:9200}
        user: ${SW_ES_USER:""}
        password: ${SW_ES_PASSWORD:""}
        indexShardsNumber: ${SW_STORAGE_ES_INDEX_SHARDS_NUMBER:2}
        indexReplicasNumber: ${SW_STORAGE_ES_INDEX_REPLICAS_NUMBER:0}
        # Batch process setting, refer to https://www.elastic.co/guide/en/elasticsearch/client/java-api/5.5/java-docs-bulk-processor.html
        bulkActions: ${SW_STORAGE_ES_BULK_ACTIONS:2000} # Execute the bulk every 2000 requests
        bulkSize: ${SW_STORAGE_ES_BULK_SIZE:20} # flush the bulk every 20mb
        flushInterval: ${SW_STORAGE_ES_FLUSH_INTERVAL:10} # flush the bulk every 10 seconds whatever the number of requests
        concurrentRequests: ${SW_STORAGE_ES_CONCURRENT_REQUESTS:2} # the number of concurrent requests
        metadataQueryMaxSize: ${SW_STORAGE_ES_QUERY_MAX_SIZE:5000}
        segmentQueryMaxSize: ${SW_STORAGE_ES_QUERY_SEGMENT_SIZE:200}
    #  h2:
    #    driver: ${SW_STORAGE_H2_DRIVER:org.h2.jdbcx.JdbcDataSource}
    #    url: ${SW_STORAGE_H2_URL:jdbc:h2:mem:skywalking-oap-db}
    #    user: ${SW_STORAGE_H2_USER:sa}
    #    metadataQueryMaxSize: ${SW_STORAGE_H2_QUERY_MAX_SIZE:5000}
    #  mysql:
    #    metadataQueryMaxSize: ${SW_STORAGE_H2_QUERY_MAX_SIZE:5000}
    receiver-sharing-server:
      default:
    receiver-register:
      default:
    receiver-trace:
      default:
        bufferPath: ${SW_RECEIVER_BUFFER_PATH:../trace-buffer/} # Path to trace buffer files, suggest to use absolute path
        bufferOffsetMaxFileSize: ${SW_RECEIVER_BUFFER_OFFSET_MAX_FILE_SIZE:100} # Unit is MB
        bufferDataMaxFileSize: ${SW_RECEIVER_BUFFER_DATA_MAX_FILE_SIZE:500} # Unit is MB
        bufferFileCleanWhenRestart: ${SW_RECEIVER_BUFFER_FILE_CLEAN_WHEN_RESTART:false}
        sampleRate: ${SW_TRACE_SAMPLE_RATE:10000} # The sample rate precision is 1/10000. 10000 means 100% sample in default.
        slowDBAccessThreshold: ${SW_SLOW_DB_THRESHOLD:default:200,mongodb:100} # The slow database access thresholds. Unit ms.
    receiver-jvm:
      default:
    receiver-clr:
      default:
    service-mesh:
      default:
        bufferPath: ${SW_SERVICE_MESH_BUFFER_PATH:../mesh-buffer/} # Path to trace buffer files, suggest to use absolute path
        bufferOffsetMaxFileSize: ${SW_SERVICE_MESH_OFFSET_MAX_FILE_SIZE:100} # Unit is MB
        bufferDataMaxFileSize: ${SW_SERVICE_MESH_BUFFER_DATA_MAX_FILE_SIZE:500} # Unit is MB
        bufferFileCleanWhenRestart: ${SW_SERVICE_MESH_BUFFER_FILE_CLEAN_WHEN_RESTART:false}
    istio-telemetry:
      default:
    envoy-metric:
      default:
    #receiver_zipkin:
    #  default:
    #    host: ${SW_RECEIVER_ZIPKIN_HOST:0.0.0.0}
    #    port: ${SW_RECEIVER_ZIPKIN_PORT:9411}
    #    contextPath: ${SW_RECEIVER_ZIPKIN_CONTEXT_PATH:/}
    query:
      graphql:
        path: ${SW_QUERY_GRAPHQL_PATH:/graphql}
    alarm:
      default:
    telemetry:
      none:

Essentially, all we configure here are the ports and the storage.

For storage we use Elasticsearch (the storage.elasticsearch section, pointing at localhost:9200 with the namespace find-job).
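Most values in application.yml use the form `${NAME:default}`: if the environment variable `NAME` is set, its value is used, otherwise the text after the first colon is. Below is a simplified Python model of that rule, only to illustrate the convention; it is not SkyWalking's own resolver (that lives in the Java OAP server), and the function name `resolve` is hypothetical. Note that the default may itself contain colons, as in `localhost:9200`.

```python
import os
import re

# ${NAME:default}: the name stops at the first ':', and everything after it up to
# the closing '}' is the default (which may contain further ':' characters).
PLACEHOLDER = re.compile(r"\$\{([^:}]+):([^}]*)\}")


def resolve(value, env=None):
    """Replace each ${NAME:default} with env[NAME] if present, else with default."""
    env = os.environ if env is None else env
    return PLACEHOLDER.sub(lambda m: env.get(m.group(1), m.group(2)), value)


if __name__ == "__main__":
    line = "${SW_STORAGE_ES_CLUSTER_NODES:localhost:9200}"
    print(resolve(line, env={}))  # falls back to the default
    print(resolve(line, env={"SW_STORAGE_ES_CLUSTER_NODES": "10.0.0.5:9200"}))
```

In practice this means values such as the ES address or the REST port can be overridden per machine through environment variables without editing application.yml.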

3. Configure the agent

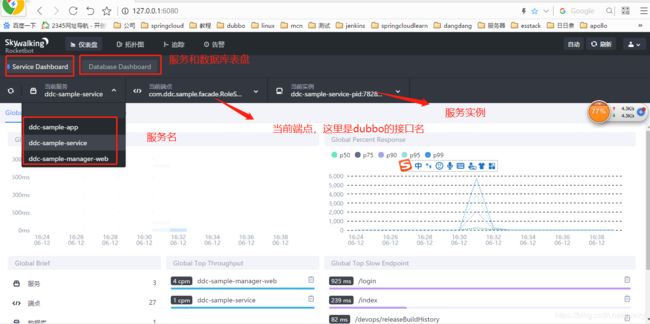

On the agent side, the main setting is the service name, which makes it easy to find the service in the UI.

The agent configuration file, agent.config, is in the D:\softsetup\skywalking-6.1.0\agent\config directory. We only need to change the following entry to our own service name:

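    agent.service_name=${SW_AGENT_NAME:ddc-sample-app}

Because I am starting three projects, I made three copies of the agent directory (agent-service, agent-manager and agent-app), so each service can be started with its own agent.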
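Copying the agent directory and editing each agent.config by hand works fine; the same step can be sketched in Python. This is a hypothetical helper (`make_agent_copies` is not part of SkyWalking), assuming the stock `agent` folder sits under the SkyWalking home and its config/agent.config contains an `agent.service_name=` line. Only `ddc-sample-app` is a service name from this article; the other two names are placeholders.

```python
import re
import shutil
from pathlib import Path

# Hypothetical mapping: copy directory -> service name shown in the UI.
# "ddc-sample-app" is from the article; the other two names are examples.
AGENTS = {
    "agent-service": "ddc-sample-service",
    "agent-manager": "ddc-sample-manager",
    "agent-app": "ddc-sample-app",
}

# Matches the whole agent.service_name line (without its line ending).
SERVICE_NAME_LINE = re.compile(r"^agent\.service_name=[^\r\n]*", re.MULTILINE)


def make_agent_copies(skywalking_home, agents=AGENTS):
    """Copy <home>/agent once per service and set agent.service_name in each copy."""
    home = Path(skywalking_home)
    for dir_name, service_name in agents.items():
        target = home / dir_name
        if not target.exists():
            shutil.copytree(home / "agent", target)
        config = target / "config" / "agent.config"
        text = config.read_text(encoding="utf-8")
        new_line = "agent.service_name=${SW_AGENT_NAME:%s}" % service_name
        # A lambda avoids re treating characters in new_line as escapes.
        text = SERVICE_NAME_LINE.sub(lambda _m: new_line, text)
        config.write_text(text, encoding="utf-8")
```

Usage would be a single call such as `make_agent_copies(r"D:\softsetup\skywalking-6.1.0")`; the original `agent` directory is left untouched, and existing copies are only re-edited, not overwritten.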

4. Write the startup scripts

The sample is a Dubbo project, and every service is started as a jar. The only difference from a normal start is the extra -javaagent option. The scripts are:

java -javaagent:D:/softsetup/skywalking-6.1.0/agent-service/skywalking-agent.jar -jar ddc-sample-service-boot.jar

java -javaagent:D:/softsetup/skywalking-6.1.0/agent-manager/skywalking-agent.jar -jar ddc-sample-manager-web.jar

java -javaagent:D:/softsetup/skywalking-6.1.0/agent-app/skywalking-agent.jar -jar ddc-sample-app.jar
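The three commands above can also be driven from one small launcher. This sketch assumes Python 3 and `java` on the PATH; `build_command`, `build_env` and `start_all` are hypothetical names. `build_env` illustrates an alternative to copying the agent three times: since agent.config reads the name from `${SW_AGENT_NAME:...}`, setting that environment variable per process should let all services share a single agent directory.

```python
import os
import subprocess

SKYWALKING_HOME = "D:/softsetup/skywalking-6.1.0"

# (agent directory, application jar) pairs from the three commands above.
SERVICES = [
    ("agent-service", "ddc-sample-service-boot.jar"),
    ("agent-manager", "ddc-sample-manager-web.jar"),
    ("agent-app", "ddc-sample-app.jar"),
]


def build_command(agent_dir, jar, home=SKYWALKING_HOME):
    """argv for: java -javaagent:<home>/<agent_dir>/skywalking-agent.jar -jar <jar>"""
    agent_jar = f"{home}/{agent_dir}/skywalking-agent.jar"
    return ["java", f"-javaagent:{agent_jar}", "-jar", jar]


def build_env(service_name=None):
    """Copy of the current environment, optionally with SW_AGENT_NAME set.

    agent.config reads ${SW_AGENT_NAME:...}, so a per-process value
    overrides the default written in the file.
    """
    env = dict(os.environ)
    if service_name is not None:
        env["SW_AGENT_NAME"] = service_name
    return env


def start_all(services=SERVICES):
    """Start each service in its own process and return the Popen handles."""
    return [subprocess.Popen(build_command(d, j), env=build_env())
            for d, j in services]
```

Passing argv as a list rather than one shell string avoids quoting problems if a path contains spaces. For the shared-agent variant, each service would be started with something like `subprocess.Popen(build_command("agent", "ddc-sample-app.jar"), env=build_env("ddc-sample-app"))`.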

5. Start everything

5.1 First, start Elasticsearch and the head plugin

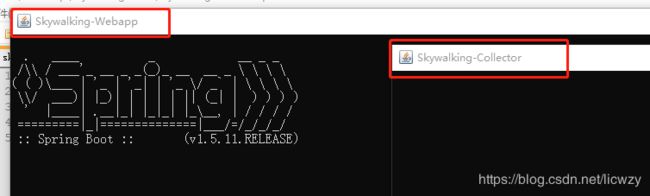

5.2 Start SkyWalking by running startup.bat in the D:\softsetup\skywalking-6.1.0\bin directory.

This script actually starts two components at once: the OAP backend and the web UI.

5.3 Start ZooKeeper

5.4 Run the three scripts written above

Finally, it's time to look at the UI. (I changed the UI port to 6080; this is set in webapp.yml under D:\softsetup\skywalking-6.1.0\webapp.)
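Before opening the UI, it can help to confirm that every component is listening. A minimal Python sketch, assuming everything runs on 127.0.0.1: 9200 is the Elasticsearch address from the storage section, 11800 and 12800 are the gRPC and REST ports from application.yml, 6080 is the UI port mentioned above, and 2181 is ZooKeeper's usual default (an assumption; the article does not state the ZooKeeper port). The names `CHECKS`, `is_port_open` and `report` are hypothetical.

```python
import socket

# Component -> (host, port). 2181 for ZooKeeper is an assumed default.
CHECKS = {
    "elasticsearch": ("127.0.0.1", 9200),
    "oap-grpc": ("127.0.0.1", 11800),
    "oap-rest": ("127.0.0.1", 12800),
    "zookeeper": ("127.0.0.1", 2181),
    "ui": ("127.0.0.1", 6080),
}


def is_port_open(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds within timeout seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def report(checks=CHECKS):
    """Map each component name to whether its port accepts TCP connections."""
    return {name: is_port_open(host, port) for name, (host, port) in checks.items()}
```

Calling `report()` after step 5.4 returns a dict such as `{"elasticsearch": True, ...}`. An open port only shows that something accepts TCP connections, not that the service behind it is healthy.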

I'll fill in the details and usage notes when I find the time.