Building a Spark Cluster with Docker (Docker & Spark & Cluster & Local & Standalone)

Building a Spark Cluster with Docker

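- Environment Preparation
  - Dependencies
  - Install Docker
- Local Mode (without Docker)
  - Install JDK
  - Install Scala
  - Install Spark
  - Test
- Standalone Mode (without Docker)
  - Change the hostname
  - Change the configuration
  - Passwordless SSH login
  - Disable the firewall
  - Start the Spark cluster
  - Access the cluster web UI
  - Test
- Local Mode (with Docker)
  - Docker installation
  - Test
- Standalone Mode (with Docker)
  - Create an overlay network
  - Start the cluster
  - Test
  - Docker Stack deployment
  - Docker Stack test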

Environment Preparation

Dependencies

CentOS7.6

Install Docker

Follow the installation guide (click here).

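Local Mode (without Docker)

At the time of writing, the latest Spark release was 2.4.3, but some of its companion libraries (for example mongodb-spark-connector) had not yet been updated for 2.4.3. Following BigDataEurope, this article uses Spark 2.4.1 + Scala 2.11 + JDK 1.8.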

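Install JDK

Download the JDK 1.8 tar.gz from the official website. If you install it with yum or from the rpm package instead, some files that Scala 2.11 needs will be missing.

mkdir -p /usr/lib/jvm

tar xf jdk-8u221-linux-x64.tar.gz -C /usr/lib/jvm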

rm -rf /usr/bin/java

ln -s /usr/lib/jvm/jdk1.8.0_221/bin/java /usr/bin/java

Edit the file

vim /etc/profile.d/java.sh

Add

export JAVA_HOME=/usr/lib/jvm/jdk1.8.0_221

export JRE_HOME=${JAVA_HOME}/jre

export CLASSPATH=${JAVA_HOME}/lib:${JRE_HOME}/lib:$CLASSPATH

export PATH=${JAVA_HOME}/bin:$PATH

Then load the environment variables

source /etc/profile
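The vim step above can also be scripted. The following is a minimal sketch that writes the same four export lines without an interactive editor. It assumes root privileges when the target is /etc/profile.d, and `write_java_profile` is a hypothetical helper name.

```shell
# Write java.sh non-interactively (sketch; write_java_profile is a hypothetical name).
# $1: target directory (default /etc/profile.d, which needs root)
# $2: JDK directory    (default: the JDK unpacked earlier in this article)
write_java_profile() {
  local dir="${1:-/etc/profile.d}" java_home="${2:-/usr/lib/jvm/jdk1.8.0_221}"
  mkdir -p "$dir" || return 1
  # Unquoted EOF: $java_home is expanded now, while the escaped \$ references
  # stay literal so that each login shell expands them when it reads the file.
  cat > "$dir/java.sh" <<EOF
export JAVA_HOME=$java_home
export JRE_HOME=\${JAVA_HOME}/jre
export CLASSPATH=\${JAVA_HOME}/lib:\${JRE_HOME}/lib:\$CLASSPATH
export PATH=\${JAVA_HOME}/bin:\$PATH
EOF
}
```

Run `write_java_profile` as root, then `source /etc/profile` as above.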

Check the environment variables with:

[root@vm1 bin]# echo $JAVA_HOME

/usr/lib/jvm/jdk1.8.0_221

Install Scala

Copy the rpm download link from the official website and download it

wget --no-check-certificate https://downloads.lightbend.com/scala/2.11.12/scala-2.11.12.rpm

Install the rpm

rpm -ivh scala-2.11.12.rpm

Locate SCALA_HOME

[root@vm1 /]# ls -lrt `which scala`

lrwxrwxrwx. 1 root root 24 Aug 16 01:11 /usr/bin/scala -> ../share/scala/bin/scala
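Instead of reading the `ls -lrt` output by eye, you can resolve the symlink chain and strip the trailing `bin/scala` to get SCALA_HOME. The sketch below assumes `readlink -f` is available (GNU coreutils, as on CentOS 7) and that the argument is an executable file on PATH. `home_from_binary` is a hypothetical helper name.

```shell
# Derive a *_HOME directory from a launcher on PATH (sketch; hypothetical name).
# Example: /usr/bin/scala -> ../share/scala/bin/scala  =>  /usr/share/scala
# Assumes $1 is an executable file on PATH, not a shell builtin or function.
home_from_binary() {
  local bin
  bin=$(command -v "$1") || return 1      # fail if the command is not on PATH
  bin=$(readlink -f "$bin") || return 1   # follow the full symlink chain
  dirname "$(dirname "$bin")"             # drop the trailing /bin/<name>
}
```

For example, `home_from_binary scala` should print `/usr/share/scala` on the machine shown above.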

Edit the file

vim /etc/profile.d/scala.sh

Add

export SCALA_HOME=/usr/share/scala

export PATH=${SCALA_HOME}/bin:$PATH

Then load the environment variables

source /etc/profile

Check the environment variables with:

[root@vm1 bin]# echo $SCALA_HOME

/usr/share/scala

[root@vm1 bin]# scala -version

Scala code runner version 2.11.12 -- Copyright 2002-2017, LAMP/EPFL

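Install Spark

On the official download page, pick the package that fits your needs. This article uses spark-2.4.1-bin-hadoop2.7.tgz, which is pre-built for Hadoop 2.7 and uses Scala 2.11 by default. Spark needs some of the libraries bundled in this Hadoop build in order to start (for example org/slf4j/Logger).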

wget --no-check-certificate https://archive.apache.org/dist/spark/spark-2.4.1/spark-2.4.1-bin-hadoop2.7.tgz

tar xf spark-2.4.1-bin-hadoop2.7.tgz -C /usr/share

ln -s /usr/share/spark-2.4.1-bin-hadoop2.7 /usr/share/spark

Edit the file

vim /etc/profile.d/spark.sh

Add

export SPARK_HOME=/usr/share/spark

export PATH=${SPARK_HOME}/bin:$PATH

Then load the environment variables

source /etc/profile

Check the environment variables with:

[root@vm1 bin]# echo $SPARK_HOME

/usr/share/spark

[root@vm1 bin]# spark-shell -version

19/08/16 03:46:59 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

Spark context Web UI available at http://vm1:4040

Spark context available as 'sc' (master = local[*], app id = local-1565941626509).

Spark session available as 'spark'.

Welcome to
      ____              __
     / __/__  ___ _____/ /__
    _\ \/ _ \/ _ `/ __/  '_/
   /___/ .__/\_,_/_/ /_/\_\   version 2.4.1
      /_/

Using Scala version 2.11.12 (Java HotSpot(TM) 64-Bit Server VM, Java 1.8.0_221)

Type in expressions to have them evaluated.

Type :help for more information.

scala>

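The WARN NativeCodeLoader message does not affect execution. It means the native Hadoop library could not be loaded on this platform, so the built-in Java classes are used instead, as the message itself says. The cause given here is a mismatch between the system glibc version (2.17) and the glibc version the Hadoop native library expects (2.14).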
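With JDK, Scala and Spark all installed, a quick sanity check confirms that each *_HOME variable from the profile scripts points at a directory containing the launcher this article uses. This is a sketch, and `check_homes` is a hypothetical name. It prints one line per problem and returns non-zero if anything is missing.

```shell
# Verify JAVA_HOME, SCALA_HOME and SPARK_HOME (sketch; hypothetical name).
check_homes() {
  local status=0 pair var launcher dir
  for pair in JAVA_HOME:java SCALA_HOME:scala SPARK_HOME:spark-submit; do
    var=${pair%%:*}            # variable name, e.g. JAVA_HOME
    launcher=${pair#*:}        # expected executable under $var/bin
    eval "dir=\${$var:-}"      # read the variable whose name is stored in $var
    if [ -z "$dir" ]; then
      echo "$var is not set"
      status=1
    elif [ ! -x "$dir/bin/$launcher" ]; then
      echo "$var=$dir has no executable bin/$launcher"
      status=1
    fi
  done
  return "$status"
}
```

Run `check_homes` in a new login shell (or after `source /etc/profile`). No output and exit status 0 means all three variables are usable.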

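Test

The local master comes in three forms: local (equivalent to local[1], a single worker thread), local[k] (k worker threads, where k is a concrete number) and local[*] (as many worker threads as CPU cores).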
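The three forms above can be summarized by a small helper that prints how many worker threads each local master URL requests. This is a sketch: `local_threads` is a hypothetical name, and for local[*] it uses `getconf _NPROCESSORS_ONLN`, which only approximates the processor count the JVM reports. It deliberately handles only these three forms and rejects anything else, including local[0].

```shell
# Worker threads requested by a local master URL (sketch; hypothetical name).
local_threads() {
  local k
  case "$1" in
    local)      echo 1 ;;                        # same as local[1]
    'local[*]') getconf _NPROCESSORS_ONLN ;;     # one thread per online CPU core
    'local['*']')
      k=${1#local?}                              # strip the leading "local["
      k=${k%?}                                   # strip the trailing "]"
      case "$k" in
        ''|*[!0-9]*|0*) echo "unsupported master: $1" >&2; return 1 ;;
        *) echo "$k" ;;
      esac ;;
    *) echo "unsupported master: $1" >&2; return 1 ;;
  esac
}
```

For example, `local_threads 'local[4]'` prints 4. Quote the argument so the shell does not treat the brackets as a glob.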

spark-submit --class org.apache.spark.examples.SparkPi --master local[*] /usr/share/spark/examples/jars/spark-examples_2.11-2.4.1.jar

Output

19/08/16 04:07:06 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties

19/08/16 04:07:06 INFO SparkContext: Running Spark version 2.4.1

19/08/16 04:07:06 INFO SparkContext: Submitted application: Spark Pi

19/08/16 04:07:06 INFO SecurityManager: Changing view acls to: root

19/08/16 04:07:06 INFO SecurityManager: Changing modify acls to: root

19/08/16 04:07:06 INFO SecurityManager: Changing view acls groups to:

19/08/16 04:07:06 INFO SecurityManager: Changing modify acls groups to:

19/08/16 04:07:06 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(root); groups with view permissions: Set(); users with modify permissions: Set(root); groups with modify permissions: Set()

19/08/16 04:07:06 INFO Utils: Successfully started service 'sparkDriver' on port 43859.

19/08/16 04:07:06 INFO SparkEnv: Registering MapOutputTracker

19/08/16 04:07:07 INFO SparkEnv: Registering BlockManagerMaster

19/08/16 04:07:07 INFO BlockManagerMasterEndpoint: Using org.apache.spark.storage.DefaultTopologyMapper for getting topology information

19/08/16 04:07:07 INFO BlockManagerMasterEndpoint: BlockManagerMasterEndpoint up

19/08/16 04:07:07 INFO DiskBlockManager: Created local directory at /tmp/blockmgr-2dc555a4-5f5a-4dea-99fd-8e791402392d

19/08/16 04:07:07 INFO MemoryStore: MemoryStore started with capacity 366.3 MB

19/08/16 04:07:07 INFO SparkEnv: Registering OutputCommitCoordinator

19/08/16 04:07:07 INFO Utils: Successfully started service 'SparkUI' on port 4040.

19/08/16 04:07:07 INFO SparkUI: Bound SparkUI to 0.0.0.0, and started at http://vm1:4040

19/08/16 04:07:07 INFO SparkContext: Added JAR file:/usr/share/spark/examples/jars/spark-examples_2.11-2.4.1.jar at spark://vm1:43859/jars/spark-examples_2.11-2.4.1.jar with timestamp 1565942827297

19/08/16 04:07:07 INFO Executor: Starting executor ID driver on host localhost

19/08/16 04:07:07 INFO Utils: Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 46740.

19/08/16 04:07:07 INFO NettyBlockTransferService: Server created on vm1:46740

19/08/16 04:07:07 INFO BlockManager: Using org.apache.spark.storage.RandomBlockReplicationPolicy for block replication policy

19/08/16 04:07:07 INFO BlockManagerMaster: Registering BlockManager BlockManagerId(driver, vm1, 46740, None)

19/08/16 04:07:07 INFO BlockManagerMasterEndpoint: Registering block manager vm1:46740 with 366.3 MB RAM, BlockManagerId(driver, vm1, 46740, None)

19/08/16 04:07:07 INFO BlockManagerMaster: Registered BlockManager BlockManagerId(driver, vm1, 46740, None)

19/08/16 04:07:07 INFO BlockManager: Initialized BlockManager: BlockManagerId(driver, vm1, 46740, None)

19/08/16 04:07:07 INFO SparkContext: Starting job: reduce at SparkPi.scala:38

19/08/16 04:07:08 INFO DAGScheduler: Got job 0 (reduce at SparkPi.scala:38) with 2 output partitions

19/08/16 04:07:08 INFO DAGScheduler: Final stage: ResultStage 0 (reduce at SparkPi.scala:38)

19/08/16 04:07:08 INFO DAGScheduler: Parents of final stage: List()

19/08/16 04:07:08 INFO DAGScheduler: Missing parents: List()

19/08/16 04:07:08 INFO DAGScheduler: Submitting ResultStage 0 (MapPartitionsRDD[1] at map at SparkPi.scala:34), which has no missing parents

19/08/16 04:07:08 INFO MemoryStore: Block broadcast_0 stored as values in memory (estimated size 1936.0 B, free 366.3 MB)

19/08/16 04:07:08 INFO MemoryStore: Block broadcast_0_piece0 stored as bytes in memory (estimated size 1256.0 B, free 366.3 MB)

19/08/16 04:07:08 INFO BlockManagerInfo: Added broadcast_0_piece0 in memory on vm1:46740 (size: 1256.0 B, free: 366.3 MB)

19/08/16 04:07:08 INFO SparkContext: Created broadcast 0 from broadcast at DAGScheduler.scala:1161

19/08/16 04:07:08 INFO DAGScheduler: Submitting 2 missing tasks from ResultStage 0 (MapPartitionsRDD[1] at map at SparkPi.scala:34) (first 15 tasks are for partitions Vector(0, 1))

19/08/16 04:07:08 INFO TaskSchedulerImpl: Adding task set 0.0 with 2 tasks

19/08/16 04:07:08 INFO TaskSetManager: Starting task 0.0 in stage 0.0 (TID 0, localhost, executor driver, partition 0, PROCESS_LOCAL, 7866 bytes)

19/08/16 04:07:08 INFO TaskSetManager: Starting task 1.0 in stage 0.0 (TID 1, localhost, executor driver, partition 1, PROCESS_LOCAL, 7866 bytes)

19/08/16 04:07:08 INFO Executor: Running task 0.0 in stage 0.0 (TID 0)

19/08/16 04:07:08 INFO Executor: Running task 1.0 in stage 0.0 (TID 1)

19/08/16 04:07:08 INFO Executor: Fetching spark://vm1:43859/jars/spark-examples_2.11-2.4.1.jar with timestamp 1565942827297

19/08/16 04:07:08 INFO TransportClientFactory: Successfully created connection to vm1/172.169.18.28:43859 after 31 ms (0 ms spent in bootstraps)

19/08/16 04:07:08 INFO Utils: Fetching spark://vm1:43859/jars/spark-examples_2.11-2.4.1.jar to /tmp/spark-b22cc6ec-b16c-4c1c-8528-0643c065dd39/userFiles-46cb83a1-095a-4860-bea0-538b424b7608/fetchFileTemp3334060304603096780.tmp

19/08/16 04:07:08 INFO Executor: Adding file:/tmp/spark-b22cc6ec-b16c-4c1c-8528-0643c065dd39/userFiles-46cb83a1-095a-4860-bea0-538b424b7608/spark-examples_2.11-2.4.1.jar to class loader

19/08/16 04:07:08 INFO Executor: Finished task 1.0 in stage 0.0 (TID 1). 824 bytes result sent to driver

19/08/16 04:07:08 INFO Executor: Finished task 0.0 in stage 0.0 (TID 0). 824 bytes result sent to driver

19/08/16 04:07:08 INFO TaskSetManager: Finished task 0.0 in stage 0.0 (TID 0) in 250 ms on localhost (executor driver) (1/2)

19/08/16 04:07:08 INFO TaskSetManager: Finished task 1.0 in stage 0.0 (TID 1) in 236 ms on localhost (executor driver) (2/2)

19/08/16 04:07:08 INFO TaskSchedulerImpl: Removed TaskSet 0.0, whose tasks have all completed, from pool

19/08/16 04:07:08 INFO DAGScheduler: ResultStage 0 (reduce at SparkPi.scala:38) finished in 0.463 s

19/08/16 04:07:08 INFO DAGScheduler: Job 0 finished: reduce at SparkPi.scala:38, took 0.572216 s

Pi is roughly 3.143915719578598

19/08/16 04:07:08 INFO SparkUI: Stopped Spark web UI at http://vm1:4040

19/08/16 04:07:08 INFO MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!

19/08/16 04:07:08 INFO MemoryStore: MemoryStore cleared

19/08/16 04:07:08 INFO BlockManager: BlockManager stopped

19/08/16 04:07:08 INFO BlockManagerMaster: BlockManagerMaster stopped

19/08/16 04:07:08 INFO OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped!

19/08/16 04:07:08 INFO SparkContext: Successfully stopped SparkContext

19/08/16 04:07:08 INFO ShutdownHookManager: Shutdown hook called

19/08/16 04:07:08 INFO ShutdownHookManager: Deleting directory /tmp/spark-b22cc6ec-b16c-4c1c-8528-0643c065dd39

19/08/16 04:07:08 INFO ShutdownHookManager: Deleting directory /tmp/spark-07babbac-1aff-4900-968f-596d57454e1b
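SparkPi estimates pi by Monte Carlo sampling. It draws points uniformly from the square [-1, 1] x [-1, 1], counts those that fall inside the unit circle, and multiplies the hit ratio by 4. Its optional argument is the number of slices (partitions). The default is 2, which matches "2 output partitions" in the log above, and each slice contributes 100000 samples. The awk sketch below reproduces that arithmetic on a single machine for illustration only. `estimate_pi` is a hypothetical name, and awk's random generator differs from Scala's, so the digits will not match Spark's output.

```shell
# Single-machine version of SparkPi's arithmetic (illustrative sketch).
# $1: slices (default 2, as in SparkPi); $2: random seed (default 42)
estimate_pi() {
  local slices="${1:-2}" seed="${2:-42}"
  awk -v slices="$slices" -v seed="$seed" 'BEGIN {
    srand(seed)
    n = 100000 * slices
    count = 0
    for (i = 1; i < n; i++) {        # like Scala "1 until n": n - 1 samples
      x = rand() * 2 - 1
      y = rand() * 2 - 1
      if (x * x + y * y <= 1) count++
    }
    printf "Pi is roughly %.6f\n", 4.0 * count / (n - 1)
  }'
}
```

More slices mean more samples, so the estimate tightens as the argument grows. On a cluster those samples are spread across executors.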

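Standalone Mode (without Docker)

Deploy three machines: vm1, vm2 and vm3. The base environment is the same as in Local mode: JDK 1.8 + Scala 2.11 + Spark 2.4.1 (pre-built for Hadoop 2.7).

Change the hostname

Set the hostname on each of the three machines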

#vm1

hostnamectl set-hostname vm1

#vm2

hostnamectl set-hostname vm2

#vm3

hostnamectl set-hostname vm3
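Spark and its start scripts address nodes by hostname, so vm1, vm2 and vm3 must resolve on every node. The worker logs later in this article show vm1 resolving to 172.169.18.28. If DNS does not already handle this, one option is to add hosts entries on all three machines. The sketch below assumes the addresses that appear in this article's logs (vm1 172.169.18.28, vm2 172.169.18.60, vm3 172.169.18.2), so replace them with your own. `CLUSTER_HOSTS` and `add_cluster_hosts` are hypothetical names.

```shell
# Make the cluster hostnames resolvable via a hosts file (sketch).
# Addresses taken from this article's logs; replace with your own.
CLUSTER_HOSTS='172.169.18.28 vm1
172.169.18.60 vm2
172.169.18.2 vm3'

add_cluster_hosts() {
  local hosts_file="${1:-/etc/hosts}"
  printf '%s\n' "$CLUSTER_HOSTS" | while read -r ip name; do
    # Append only names that have no entry yet, so re-running is harmless.
    if ! grep -Eq "[[:space:]]$name([[:space:]]|\$)" "$hosts_file" 2>/dev/null; then
      printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
    fi
  done
}
```

Run `add_cluster_hosts` as root on each of vm1, vm2 and vm3.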

Change the configuration

Modify the configuration on vm1

Create the file spark-env.sh to set the master node and related configuration.

vi /usr/share/spark/conf/spark-env.sh

Add the following content

#!/usr/bin/env bash

export SPARK_MASTER_HOST=vm1

Create the file slaves to list the worker (slave) nodes.

vi /usr/share/spark/conf/slaves

Add the following content

vm2

vm3
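Both files can also be generated with a script instead of vi. This is a sketch, and `write_spark_conf` is a hypothetical helper. It writes only what this article configures: SPARK_MASTER_HOST in spark-env.sh, plus one worker hostname per line in slaves.

```shell
# Generate conf/spark-env.sh and conf/slaves (sketch; hypothetical name).
# Usage: write_spark_conf <conf_dir> <master_host> <worker_host>...
write_spark_conf() {
  if [ "$#" -lt 3 ]; then
    echo "usage: write_spark_conf <conf_dir> <master_host> <worker_host>..." >&2
    return 1
  fi
  local conf_dir="$1" master="$2"
  shift 2
  mkdir -p "$conf_dir" || return 1
  # spark-env.sh is sourced by the launch scripts; only the master host is set here.
  printf '#!/usr/bin/env bash\nexport SPARK_MASTER_HOST=%s\n' "$master" > "$conf_dir/spark-env.sh"
  # slaves: one worker hostname per line, read by start-slaves.sh.
  printf '%s\n' "$@" > "$conf_dir/slaves"
}
```

For this cluster, run `write_spark_conf /usr/share/spark/conf vm1 vm2 vm3` on vm1.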

Copy the configuration to vm2 and vm3

yum install -y sshpass

for host in vm2 vm3; do sshpass -p xxxxx scp /usr/share/spark/conf/* root@$host:/usr/share/spark/conf/; done

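Passwordless SSH login

The worker (slave) nodes are started from the master node. To simplify startup, set up passwordless SSH login from vm1 to vm2 and vm3.

See the guide here (link).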
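The referenced guide is not reproduced here. One common approach uses the standard OpenSSH tools `ssh-keygen` and `ssh-copy-id`, sketched below. `setup_passwordless_ssh` is a hypothetical name, and the SSH_KEY and SSH_COPY_ID overrides are hypothetical hooks for testing or non-default key paths.

```shell
# Key-based SSH from the master to the workers (sketch; run on vm1 as root).
# SSH_KEY / SSH_COPY_ID are hypothetical overrides; defaults are the usual OpenSSH paths/tools.
setup_passwordless_ssh() {
  local key="${SSH_KEY:-$HOME/.ssh/id_rsa}" host
  if [ ! -f "$key" ]; then
    mkdir -p "$(dirname "$key")" && chmod 700 "$(dirname "$key")" || return 1
    # Empty passphrase (-N '') so start-slaves.sh can log in unattended.
    ssh-keygen -t rsa -N '' -f "$key" || return 1
  fi
  for host in "$@"; do
    # Prompts once for the host's root password, then installs the public key.
    "${SSH_COPY_ID:-ssh-copy-id}" -i "$key.pub" "root@$host" || return 1
  done
}
```

After running `setup_passwordless_ssh vm2 vm3`, check that `ssh root@vm2 hostname` no longer asks for a password.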

Disable the firewall

systemctl stop firewalld

systemctl stop iptables

Alternatively, keep the firewall running and configure its rules to match your environment.
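Note that `systemctl stop` only lasts until the next reboot; `systemctl disable firewalld` also keeps it from starting at boot. If you keep firewalld instead, one option is to trust the cluster subnet. Opening only the fixed ports (7077, 8080, 8081, 4040) is usually not enough, because the driver, executors, block managers and workers listen on random ports, as this article's logs show (for example "sparkWorker" on port 44660). The sketch assumes all nodes sit in 172.169.18.0/24, inferred from the addresses in the logs. `trust_cluster_subnet` and FIREWALL_CMD are hypothetical names. Run it on every node.

```shell
# Keep firewalld but accept all traffic from the cluster subnet (sketch).
# Default subnet is an assumption inferred from the 172.169.18.x addresses in the logs.
trust_cluster_subnet() {
  local subnet="${1:-172.169.18.0/24}" fw="${FIREWALL_CMD:-firewall-cmd}"
  # Connections from sources bound to the "trusted" zone are all accepted.
  "$fw" --permanent --zone=trusted --add-source="$subnet" || return 1
  # --permanent only changes the saved configuration; reload to apply it now.
  "$fw" --reload
}
```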

Start the Spark cluster

Start the master node first

sh /usr/share/spark/sbin/start-master.sh

Output

starting org.apache.spark.deploy.master.Master, logging to /usr/share/spark/logs/spark-root-org.apache.spark.deploy.master.Master-1-vm1.out

Then start the worker (slave) nodes

sh /usr/share/spark/sbin/start-slaves.sh

Output

vm2: starting org.apache.spark.deploy.worker.Worker, logging to /usr/share/spark/logs/spark-root-org.apache.spark.deploy.worker.Worker-1-vm2.out

vm3: starting org.apache.spark.deploy.worker.Worker, logging to /usr/share/spark/logs/spark-root-org.apache.spark.deploy.worker.Worker-1-vm3.out

Check the master startup log

[root@vm1 logs]# tail -f /usr/share/spark/logs/spark-root-org.apache.spark.deploy.master.Master-1-vm1.out

19/08/17 04:30:07 INFO SecurityManager: Changing modify acls to: root

19/08/17 04:30:07 INFO SecurityManager: Changing view acls groups to:

19/08/17 04:30:07 INFO SecurityManager: Changing modify acls groups to:

19/08/17 04:30:07 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(root); groups with view permissions: Set(); users with modify permissions: Set(root); groups with modify permissions: Set()

19/08/17 04:30:07 INFO Utils: Successfully started service 'sparkMaster' on port 7077.

19/08/17 04:30:07 INFO Master: Starting Spark master at spark://vm1:7077

19/08/17 04:30:07 INFO Master: Running Spark version 2.4.1

19/08/17 04:30:08 INFO Utils: Successfully started service 'MasterUI' on port 8080.

19/08/17 04:30:08 INFO MasterWebUI: Bound MasterWebUI to 0.0.0.0, and started at http://vm1:8080

19/08/17 04:30:08 INFO Master: I have been elected leader! New state: ALIVE

Check the startup log of worker vm2

[root@vm2 logs]# tail -f /usr/share/spark/logs/spark-root-org.apache.spark.deploy.worker.Worker-1-vm2.out

19/08/17 05:18:48 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(root); groups with view permissions: Set(); users with modify permissions: Set(root); groups with modify permissions: Set()

19/08/17 05:18:49 INFO Utils: Successfully started service 'sparkWorker' on port 44660.

19/08/17 05:18:49 INFO Worker: Starting Spark worker 172.169.18.60:44660 with 2 cores, 2.7 GB RAM

19/08/17 05:18:49 INFO Worker: Running Spark version 2.4.1

19/08/17 05:18:49 INFO Worker: Spark home: /usr/share/spark

19/08/17 05:18:49 INFO Utils: Successfully started service 'WorkerUI' on port 8081.

19/08/17 05:18:49 INFO WorkerWebUI: Bound WorkerWebUI to 0.0.0.0, and started at http://vm2:8081

19/08/17 05:18:49 INFO Worker: Connecting to master vm1:7077...

19/08/17 05:18:49 INFO TransportClientFactory: Successfully created connection to vm1/172.169.18.28:7077 after 44 ms (0 ms spent in bootstraps)

19/08/17 05:18:49 INFO Worker: Successfully registered with master spark://vm1:7077

Check the startup log of worker vm3

[root@vm3 logs]# tail -f /usr/share/spark/logs/spark-root-org.apache.spark.deploy.worker.Worker-1-vm3.out

19/08/17 05:18:48 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(root); groups with view permissions: Set(); users with modify permissions: Set(root); groups with modify permissions: Set()

19/08/17 05:18:49 INFO Utils: Successfully started service 'sparkWorker' on port 42186.

19/08/17 05:18:49 INFO Worker: Starting Spark worker 172.169.18.2:42186 with 2 cores, 2.7 GB RAM

19/08/17 05:18:49 INFO Worker: Running Spark version 2.4.1

19/08/17 05:18:49 INFO Worker: Spark home: /usr/share/spark

19/08/17 05:18:49 INFO Utils: Successfully started service 'WorkerUI' on port 8081.

19/08/17 05:18:49 INFO WorkerWebUI: Bound WorkerWebUI to 0.0.0.0, and started at http://vm3:8081

19/08/17 05:18:49 INFO Worker: Connecting to master vm1:7077...

19/08/17 05:18:49 INFO TransportClientFactory: Successfully created connection to vm1/172.169.18.28:7077 after 44 ms (0 ms spent in bootstraps)

19/08/17 05:18:49 INFO Worker: Successfully registered with master spark://vm1:7077
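Instead of watching `tail -f` by hand, you can poll the logs for the lines shown above. The master log should contain "I have been elected leader" and each worker log should contain "Successfully registered with master". This is a sketch: `wait_for_log` is a hypothetical name, and it matches fixed strings, not regular expressions.

```shell
# Poll a log file until it contains a fixed string, or give up (sketch; hypothetical name).
# Usage: wait_for_log <log_file> <fixed_string> [timeout_seconds]
wait_for_log() {
  local file="$1" pattern="$2" timeout="${3:-60}" waited=0
  until grep -qF -- "$pattern" "$file" 2>/dev/null; do
    if [ "$waited" -ge "$timeout" ]; then
      echo "timed out after ${timeout}s waiting for '$pattern' in $file" >&2
      return 1
    fi
    sleep 1
    waited=$((waited + 1))
  done
}
```

On vm2, for example: `wait_for_log /usr/share/spark/logs/spark-root-org.apache.spark.deploy.worker.Worker-1-vm2.out "Successfully registered with master"`.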

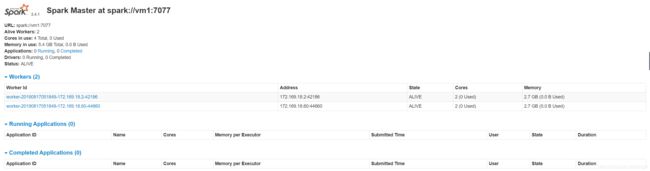

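Access the cluster web UI

Open http://vm1:8080 in a browser to see the master web UI, which lists the registered workers. Each worker also serves its own UI on port 8081 (http://vm2:8081 and http://vm3:8081), as shown in the logs above.

Test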

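Standalone mode supports two deploy modes, client and cluster; how they work internally will be covered in a separate article. In client mode the driver runs on the submitting machine, so submitting many applications (say 100) from one machine causes a surge of network traffic on that machine's NIC. In cluster mode the drivers of the applications are spread across the workers, which avoids this surge.

If --deploy-mode is not specified, client mode is used by default.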
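To try both modes with the bundled example, a small wrapper helps. This is a sketch: `submit_sparkpi`, SPARK_MASTER_URL and SPARK_SUBMIT are hypothetical names, and setting SPARK_SUBMIT=echo prints the command instead of running it. In cluster mode the driver runs on a worker, so the "Pi is roughly" line typically ends up in that driver's stdout under the worker's work directory rather than in your terminal. The jar path must also exist on the workers, which holds here because Spark is installed at the same path on every node.

```shell
# Submit the bundled SparkPi example in client or cluster mode (sketch).
# $1: deploy mode (client|cluster, default client); $2: slices (default 10)
submit_sparkpi() {
  local mode="${1:-client}" slices="${2:-10}"
  local master="${SPARK_MASTER_URL:-spark://vm1:7077}"
  local jar="${SPARK_HOME:-/usr/share/spark}/examples/jars/spark-examples_2.11-2.4.1.jar"
  case "$mode" in
    client|cluster) ;;
    *) echo "deploy mode must be client or cluster, got: $mode" >&2; return 1 ;;
  esac
  "${SPARK_SUBMIT:-spark-submit}" --class org.apache.spark.examples.SparkPi \
    --master "$master" --deploy-mode "$mode" "$jar" "$slices"
}
```

For example, `submit_sparkpi cluster 10` submits the job with its driver placed on one of the workers.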

Run on vm1

spark-submit --class org.apache.spark.examples.SparkPi --master spark://vm1:7077 --deploy-mode client /usr/share/spark/examples/jars/spark-examples_2.11-2.4.1.jar 3

Output

19/08/17 05:34:15 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties

19/08/17 05:34:15 INFO SparkContext: Running Spark version 2.4.1

19/08/17 05:34:15 INFO SparkContext: Submitted application: Spark Pi

19/08/17 05:34:15 INFO SecurityManager: Changing view acls to: root

19/08/17 05:34:15 INFO SecurityManager: Changing modify acls to: root

19/08/17 05:34:15 INFO SecurityManager: Changing view acls groups to:

19/08/17 05:34:15 INFO SecurityManager: Changing modify acls groups to:

19/08/17 05:34:15 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(root); groups with view permissions: Set(); users with modify permissions: Set(root); groups with modify permissions: Set()

19/08/17 05:34:15 INFO Utils: Successfully started service 'sparkDriver' on port 44103.

19/08/17 05:34:15 INFO SparkEnv: Registering MapOutputTracker

19/08/17 05:34:15 INFO SparkEnv: Registering BlockManagerMaster

19/08/17 05:34:15 INFO BlockManagerMasterEndpoint: Using org.apache.spark.storage.DefaultTopologyMapper for getting topology information

19/08/17 05:34:15 INFO BlockManagerMasterEndpoint: BlockManagerMasterEndpoint up

19/08/17 05:34:15 INFO DiskBlockManager: Created local directory at /tmp/blockmgr-f54c2372-e6e3-43bd-bc49-3b94206691f1

19/08/17 05:34:15 INFO MemoryStore: MemoryStore started with capacity 366.3 MB

19/08/17 05:34:15 INFO SparkEnv: Registering OutputCommitCoordinator

19/08/17 05:34:16 INFO Utils: Successfully started service 'SparkUI' on port 4040.

19/08/17 05:34:16 INFO SparkUI: Bound SparkUI to 0.0.0.0, and started at http://vm1:4040

19/08/17 05:34:16 INFO SparkContext: Added JAR file:/usr/share/spark/examples/jars/spark-examples_2.11-2.4.1.jar at spark://vm1:44103/jars/spark-examples_2.11-2.4.1.jar with timestamp 1566034456184

19/08/17 05:34:16 INFO StandaloneAppClient$ClientEndpoint: Connecting to master spark://vm1:7077...

19/08/17 05:34:16 INFO TransportClientFactory: Successfully created connection to vm1/172.169.18.28:7077 after 43 ms (0 ms spent in bootstraps)

19/08/17 05:34:16 INFO StandaloneSchedulerBackend: Connected to Spark cluster with app ID app-20190817053416-0002

19/08/17 05:34:16 INFO StandaloneAppClient$ClientEndpoint: Executor added: app-20190817053416-0002/0 on worker-20190817051849-172.169.18.2-42186 (172.169.18.2:42186) with 2 core(s)

19/08/17 05:34:16 INFO StandaloneSchedulerBackend: Granted executor ID app-20190817053416-0002/0 on hostPort 172.169.18.2:42186 with 2 core(s), 1024.0 MB RAM

19/08/17 05:34:16 INFO StandaloneAppClient$ClientEndpoint: Executor added: app-20190817053416-0002/1 on worker-20190817051849-172.169.18.60-44660 (172.169.18.60:44660) with 2 core(s)

19/08/17 05:34:16 INFO StandaloneSchedulerBackend: Granted executor ID app-20190817053416-0002/1 on hostPort 172.169.18.60:44660 with 2 core(s), 1024.0 MB RAM

19/08/17 05:34:16 INFO StandaloneAppClient$ClientEndpoint: Executor updated: app-20190817053416-0002/0 is now RUNNING

19/08/17 05:34:16 INFO StandaloneAppClient$ClientEndpoint: Executor updated: app-20190817053416-0002/1 is now RUNNING

19/08/17 05:34:16 INFO Utils: Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 36144.

19/08/17 05:34:16 INFO NettyBlockTransferService: Server created on vm1:36144

19/08/17 05:34:16 INFO BlockManager: Using org.apache.spark.storage.RandomBlockReplicationPolicy for block replication policy

19/08/17 05:34:16 INFO BlockManagerMaster: Registering BlockManager BlockManagerId(driver, vm1, 36144, None)

19/08/17 05:34:16 INFO BlockManagerMasterEndpoint: Registering block manager vm1:36144 with 366.3 MB RAM, BlockManagerId(driver, vm1, 36144, None)

19/08/17 05:34:16 INFO BlockManagerMaster: Registered BlockManager BlockManagerId(driver, vm1, 36144, None)

19/08/17 05:34:16 INFO BlockManager: Initialized BlockManager: BlockManagerId(driver, vm1, 36144, None)

19/08/17 05:34:16 INFO StandaloneSchedulerBackend: SchedulerBackend is ready for scheduling beginning after reached minRegisteredResourcesRatio: 0.0

19/08/17 05:34:17 INFO SparkContext: Starting job: reduce at SparkPi.scala:38

19/08/17 05:34:17 INFO DAGScheduler: Got job 0 (reduce at SparkPi.scala:38) with 3 output partitions

19/08/17 05:34:17 INFO DAGScheduler: Final stage: ResultStage 0 (reduce at SparkPi.scala:38)

19/08/17 05:34:17 INFO DAGScheduler: Parents of final stage: List()

19/08/17 05:34:17 INFO DAGScheduler: Missing parents: List()

19/08/17 05:34:17 INFO DAGScheduler: Submitting ResultStage 0 (MapPartitionsRDD[1] at map at SparkPi.scala:34), which has no missing parents

19/08/17 05:34:17 INFO MemoryStore: Block broadcast_0 stored as values in memory (estimated size 1936.0 B, free 366.3 MB)

19/08/17 05:34:17 INFO MemoryStore: Block broadcast_0_piece0 stored as bytes in memory (estimated size 1256.0 B, free 366.3 MB)

19/08/17 05:34:17 INFO BlockManagerInfo: Added broadcast_0_piece0 in memory on vm1:36144 (size: 1256.0 B, free: 366.3 MB)

19/08/17 05:34:17 INFO SparkContext: Created broadcast 0 from broadcast at DAGScheduler.scala:1161

19/08/17 05:34:17 INFO DAGScheduler: Submitting 3 missing tasks from ResultStage 0 (MapPartitionsRDD[1] at map at SparkPi.scala:34) (first 15 tasks are for partitions Vector(0, 1, 2))

19/08/17 05:34:17 INFO TaskSchedulerImpl: Adding task set 0.0 with 3 tasks

19/08/17 05:34:19 INFO CoarseGrainedSchedulerBackend$DriverEndpoint: Registered executor NettyRpcEndpointRef(spark-client://Executor) (172.169.18.60:34014) with ID 1

19/08/17 05:34:19 INFO TaskSetManager: Starting task 0.0 in stage 0.0 (TID 0, 172.169.18.60, executor 1, partition 0, PROCESS_LOCAL, 7870 bytes)

19/08/17 05:34:19 INFO TaskSetManager: Starting task 1.0 in stage 0.0 (TID 1, 172.169.18.60, executor 1, partition 1, PROCESS_LOCAL, 7870 bytes)

19/08/17 05:34:19 INFO BlockManagerMasterEndpoint: Registering block manager 172.169.18.60:37821 with 366.3 MB RAM, BlockManagerId(1, 172.169.18.60, 37821, None)

19/08/17 05:34:19 INFO CoarseGrainedSchedulerBackend$DriverEndpoint: Registered executor NettyRpcEndpointRef(spark-client://Executor) (172.169.18.2:52382) with ID 0

19/08/17 05:34:19 INFO TaskSetManager: Starting task 2.0 in stage 0.0 (TID 2, 172.169.18.2, executor 0, partition 2, PROCESS_LOCAL, 7870 bytes)

19/08/17 05:34:19 INFO BlockManagerMasterEndpoint: Registering block manager 172.169.18.2:40495 with 366.3 MB RAM, BlockManagerId(0, 172.169.18.2, 40495, None)

19/08/17 05:34:19 INFO BlockManagerInfo: Added broadcast_0_piece0 in memory on 172.169.18.60:37821 (size: 1256.0 B, free: 366.3 MB)

19/08/17 05:34:19 INFO TaskSetManager: Finished task 1.0 in stage 0.0 (TID 1) in 680 ms on 172.169.18.60 (executor 1) (1/3)

19/08/17 05:34:19 INFO TaskSetManager: Finished task 0.0 in stage 0.0 (TID 0) in 720 ms on 172.169.18.60 (executor 1) (2/3)

19/08/17 05:34:21 INFO BlockManagerInfo: Added broadcast_0_piece0 in memory on 172.169.18.2:40495 (size: 1256.0 B, free: 366.3 MB)

19/08/17 05:34:21 INFO TaskSetManager: Finished task 2.0 in stage 0.0 (TID 2) in 2299 ms on 172.169.18.2 (executor 0) (3/3)

19/08/17 05:34:21 INFO TaskSchedulerImpl: Removed TaskSet 0.0, whose tasks have all completed, from pool

19/08/17 05:34:21 INFO DAGScheduler: ResultStage 0 (reduce at SparkPi.scala:38) finished in 4.343 s

19/08/17 05:34:21 INFO DAGScheduler: Job 0 finished: reduce at SparkPi.scala:38, took 4.512565 s

Pi is roughly 3.1425838086126956

19/08/17 05:34:21 INFO SparkUI: Stopped Spark web UI at http://vm1:4040

19/08/17 05:34:21 INFO StandaloneSchedulerBackend: Shutting down all executors

19/08/17 05:34:21 INFO CoarseGrainedSchedulerBackend$DriverEndpoint: Asking each executor to shut down

19/08/17 05:34:21 INFO MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!

19/08/17 05:34:21 INFO MemoryStore: MemoryStore cleared

19/08/17 05:34:21 INFO BlockManager: BlockManager stopped

19/08/17 05:34:21 INFO BlockManagerMaster: BlockManagerMaster stopped

19/08/17 05:34:21 INFO OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped!

19/08/17 05:34:21 INFO SparkContext: Successfully stopped SparkContext

19/08/17 05:34:21 INFO ShutdownHookManager: Shutdown hook called

19/08/17 05:34:21 INFO ShutdownHookManager: Deleting directory /tmp/spark-d7196560-2b3d-4766-8b71-3e47260f4d0b

19/08/17 05:34:21 INFO ShutdownHookManager: Deleting directory /tmp/spark-f804e08d-7449-4c29-94db-55c98673cb2f

Next, omit --deploy-mode (so client mode is used by default) and run with 10 slices:

[root@vm1 logs]# spark-submit --class org.apache.spark.examples.SparkPi --master spark://vm1:7077 /usr/share/spark/examples/jars/spark-examples_2.11-2.4.1.jar 10

19/08/17 05:34:28 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties

19/08/17 05:34:28 INFO SparkContext: Running Spark version 2.4.1

19/08/17 05:34:28 INFO SparkContext: Submitted application: Spark Pi

19/08/17 05:34:28 INFO SecurityManager: Changing view acls to: root

19/08/17 05:34:28 INFO SecurityManager: Changing modify acls to: root

19/08/17 05:34:28 INFO SecurityManager: Changing view acls groups to:

19/08/17 05:34:28 INFO SecurityManager: Changing modify acls groups to:

19/08/17 05:34:28 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(root); groups with view permissions: Set(); users with modify permissions: Set(root); groups with modify permissions: Set()

19/08/17 05:34:29 INFO Utils: Successfully started service 'sparkDriver' on port 33704.

19/08/17 05:34:29 INFO SparkEnv: Registering MapOutputTracker

19/08/17 05:34:29 INFO SparkEnv: Registering BlockManagerMaster

19/08/17 05:34:29 INFO BlockManagerMasterEndpoint: Using org.apache.spark.storage.DefaultTopologyMapper for getting topology information

19/08/17 05:34:29 INFO BlockManagerMasterEndpoint: BlockManagerMasterEndpoint up

19/08/17 05:34:29 INFO DiskBlockManager: Created local directory at /tmp/blockmgr-162dd9ae-7a06-4b3a-b353-fe42a9d6c7a0

19/08/17 05:34:29 INFO MemoryStore: MemoryStore started with capacity 366.3 MB

19/08/17 05:34:29 INFO SparkEnv: Registering OutputCommitCoordinator

19/08/17 05:34:29 INFO Utils: Successfully started service 'SparkUI' on port 4040.

19/08/17 05:34:29 INFO SparkUI: Bound SparkUI to 0.0.0.0, and started at http://vm1:4040

19/08/17 05:34:29 INFO SparkContext: Added JAR file:/usr/share/spark/examples/jars/spark-examples_2.11-2.4.1.jar at spark://vm1:33704/jars/spark-examples_2.11-2.4.1.jar with timestamp 1566034469382

19/08/17 05:34:29 INFO StandaloneAppClient$ClientEndpoint: Connecting to master spark://vm1:7077...

19/08/17 05:34:29 INFO TransportClientFactory: Successfully created connection to vm1/172.169.18.28:7077 after 41 ms (0 ms spent in bootstraps)

19/08/17 05:34:29 INFO StandaloneSchedulerBackend: Connected to Spark cluster with app ID app-20190817053429-0003

19/08/17 05:34:29 INFO Utils: Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 45611.

19/08/17 05:34:29 INFO NettyBlockTransferService: Server created on vm1:45611

19/08/17 05:34:29 INFO BlockManager: Using org.apache.spark.storage.RandomBlockReplicationPolicy for block replication policy

19/08/17 05:34:29 INFO StandaloneAppClient$ClientEndpoint: Executor added: app-20190817053429-0003/0 on worker-20190817051849-172.169.18.2-42186 (172.169.18.2:42186) with 2 core(s)

19/08/17 05:34:29 INFO StandaloneSchedulerBackend: Granted executor ID app-20190817053429-0003/0 on hostPort 172.169.18.2:42186 with 2 core(s), 1024.0 MB RAM

19/08/17 05:34:29 INFO StandaloneAppClient$ClientEndpoint: Executor added: app-20190817053429-0003/1 on worker-20190817051849-172.169.18.60-44660 (172.169.18.60:44660) with 2 core(s)

19/08/17 05:34:29 INFO StandaloneSchedulerBackend: Granted executor ID app-20190817053429-0003/1 on hostPort 172.169.18.60:44660 with 2 core(s), 1024.0 MB RAM

19/08/17 05:34:29 INFO StandaloneAppClient$ClientEndpoint: Executor updated: app-20190817053429-0003/1 is now RUNNING

19/08/17 05:34:29 INFO StandaloneAppClient$ClientEndpoint: Executor updated: app-20190817053429-0003/0 is now RUNNING

19/08/17 05:34:29 INFO BlockManagerMaster: Registering BlockManager BlockManagerId(driver, vm1, 45611, None)

19/08/17 05:34:29 INFO BlockManagerMasterEndpoint: Registering block manager vm1:45611 with 366.3 MB RAM, BlockManagerId(driver, vm1, 45611, None)

19/08/17 05:34:29 INFO BlockManagerMaster: Registered BlockManager BlockManagerId(driver, vm1, 45611, None)

19/08/17 05:34:29 INFO BlockManager: Initialized BlockManager: BlockManagerId(driver, vm1, 45611, None)

19/08/17 05:34:30 INFO StandaloneSchedulerBackend: SchedulerBackend is ready for scheduling beginning after reached minRegisteredResourcesRatio: 0.0

19/08/17 05:34:30 INFO SparkContext: Starting job: reduce at SparkPi.scala:38

19/08/17 05:34:30 INFO DAGScheduler: Got job 0 (reduce at SparkPi.scala:38) with 10 output partitions

19/08/17 05:34:30 INFO DAGScheduler: Final stage: ResultStage 0 (reduce at SparkPi.scala:38)

19/08/17 05:34:30 INFO DAGScheduler: Parents of final stage: List()

19/08/17 05:34:30 INFO DAGScheduler: Missing parents: List()

19/08/17 05:34:30 INFO DAGScheduler: Submitting ResultStage 0 (MapPartitionsRDD[1] at map at SparkPi.scala:34), which has no missing parents

19/08/17 05:34:30 INFO MemoryStore: Block broadcast_0 stored as values in memory (estimated size 1936.0 B, free 366.3 MB)

19/08/17 05:34:30 INFO MemoryStore: Block broadcast_0_piece0 stored as bytes in memory (estimated size 1256.0 B, free 366.3 MB)

19/08/17 05:34:30 INFO BlockManagerInfo: Added broadcast_0_piece0 in memory on vm1:45611 (size: 1256.0 B, free: 366.3 MB)

19/08/17 05:34:30 INFO SparkContext: Created broadcast 0 from broadcast at DAGScheduler.scala:1161

19/08/17 05:34:30 INFO DAGScheduler: Submitting 10 missing tasks from ResultStage 0 (MapPartitionsRDD[1] at map at SparkPi.scala:34) (first 15 tasks are for partitions Vector(0, 1, 2, 3, 4, 5, 6, 7, 8, 9))

19/08/17 05:34:30 INFO TaskSchedulerImpl: Adding task set 0.0 with 10 tasks

19/08/17 05:34:32 INFO CoarseGrainedSchedulerBackend$DriverEndpoint: Registered executor NettyRpcEndpointRef(spark-client://Executor) (172.169.18.60:57348) with ID 1

19/08/17 05:34:32 INFO TaskSetManager: Starting task 0.0 in stage 0.0 (TID 0, 172.169.18.60, executor 1, partition 0, PROCESS_LOCAL, 7870 bytes)

19/08/17 05:34:32 INFO TaskSetManager: Starting task 1.0 in stage 0.0 (TID 1, 172.169.18.60, executor 1, partition 1, PROCESS_LOCAL, 7870 bytes)

19/08/17 05:34:32 INFO CoarseGrainedSchedulerBackend$DriverEndpoint: Registered executor NettyRpcEndpointRef(spark-client://Executor) (172.169.18.2:40198) with ID 0

19/08/17 05:34:32 INFO TaskSetManager: Starting task 2.0 in stage 0.0 (TID 2, 172.169.18.2, executor 0, partition 2, PROCESS_LOCAL, 7870 bytes)

19/08/17 05:34:32 INFO TaskSetManager: Starting task 3.0 in stage 0.0 (TID 3, 172.169.18.2, executor 0, partition 3, PROCESS_LOCAL, 7870 bytes)

19/08/17 05:34:32 INFO BlockManagerMasterEndpoint: Registering block manager 172.169.18.2:36636 with 366.3 MB RAM, BlockManagerId(0, 172.169.18.2, 36636, None)

19/08/17 05:34:32 INFO BlockManagerMasterEndpoint: Registering block manager 172.169.18.60:34541 with 366.3 MB RAM, BlockManagerId(1, 172.169.18.60, 34541, None)

19/08/17 05:34:33 INFO BlockManagerInfo: Added broadcast_0_piece0 in memory on 172.169.18.60:34541 (size: 1256.0 B, free: 366.3 MB)

19/08/17 05:34:33 INFO BlockManagerInfo: Added broadcast_0_piece0 in memory on 172.169.18.2:36636 (size: 1256.0 B, free: 366.3 MB)

19/08/17 05:34:33 INFO TaskSetManager: Starting task 4.0 in stage 0.0 (TID 4, 172.169.18.2, executor 0, partition 4, PROCESS_LOCAL, 7870 bytes)

19/08/17 05:34:33 INFO TaskSetManager: Starting task 5.0 in stage 0.0 (TID 5, 172.169.18.2, executor 0, partition 5, PROCESS_LOCAL, 7870 bytes)

19/08/17 05:34:33 INFO TaskSetManager: Finished task 3.0 in stage 0.0 (TID 3) in 991 ms on 172.169.18.2 (executor 0) (1/10)

19/08/17 05:34:33 INFO TaskSetManager: Starting task 6.0 in stage 0.0 (TID 6, 172.169.18.60, executor 1, partition 6, PROCESS_LOCAL, 7870 bytes)

19/08/17 05:34:33 INFO TaskSetManager: Starting task 7.0 in stage 0.0 (TID 7, 172.169.18.60, executor 1, partition 7, PROCESS_LOCAL, 7870 bytes)

19/08/17 05:34:33 INFO TaskSetManager: Finished task 2.0 in stage 0.0 (TID 2) in 1005 ms on 172.169.18.2 (executor 0) (2/10)

19/08/17 05:34:33 INFO TaskSetManager: Starting task 8.0 in stage 0.0 (TID 8, 172.169.18.2, executor 0, partition 8, PROCESS_LOCAL, 7870 bytes)

19/08/17 05:34:33 INFO TaskSetManager: Finished task 4.0 in stage 0.0 (TID 4) in 34 ms on 172.169.18.2 (executor 0) (3/10)

19/08/17 05:34:33 INFO TaskSetManager: Finished task 0.0 in stage 0.0 (TID 0) in 1055 ms on 172.169.18.60 (executor 1) (4/10)

19/08/17 05:34:33 INFO TaskSetManager: Finished task 1.0 in stage 0.0 (TID 1) in 1025 ms on 172.169.18.60 (executor 1) (5/10)

19/08/17 05:34:33 INFO TaskSetManager: Starting task 9.0 in stage 0.0 (TID 9, 172.169.18.2, executor 0, partition 9, PROCESS_LOCAL, 7870 bytes)

19/08/17 05:34:33 INFO TaskSetManager: Finished task 5.0 in stage 0.0 (TID 5) in 70 ms on 172.169.18.2 (executor 0) (6/10)

19/08/17 05:34:33 INFO TaskSetManager: Finished task 7.0 in stage 0.0 (TID 7) in 72 ms on 172.169.18.60 (executor 1) (7/10)

19/08/17 05:34:33 INFO TaskSetManager: Finished task 6.0 in stage 0.0 (TID 6) in 76 ms on 172.169.18.60 (executor 1) (8/10)

19/08/17 05:34:33 INFO TaskSetManager: Finished task 8.0 in stage 0.0 (TID 8) in 73 ms on 172.169.18.2 (executor 0) (9/10)

19/08/17 05:34:33 INFO TaskSetManager: Finished task 9.0 in stage 0.0 (TID 9) in 39 ms on 172.169.18.2 (executor 0) (10/10)

19/08/17 05:34:33 INFO TaskSchedulerImpl: Removed TaskSet 0.0, whose tasks have all completed, from pool

19/08/17 05:34:33 INFO DAGScheduler: ResultStage 0 (reduce at SparkPi.scala:38) finished in 2.803 s

19/08/17 05:34:33 INFO DAGScheduler: Job 0 finished: reduce at SparkPi.scala:38, took 2.965956 s

Pi is roughly 3.141123141123141

19/08/17 05:34:33 INFO SparkUI: Stopped Spark web UI at http://vm1:4040

19/08/17 05:34:33 INFO StandaloneSchedulerBackend: Shutting down all executors

19/08/17 05:34:33 INFO CoarseGrainedSchedulerBackend$DriverEndpoint: Asking each executor to shut down

19/08/17 05:34:33 INFO MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!

19/08/17 05:34:33 INFO MemoryStore: MemoryStore cleared

19/08/17 05:34:33 INFO BlockManager: BlockManager stopped

19/08/17 05:34:33 INFO BlockManagerMaster: BlockManagerMaster stopped

19/08/17 05:34:33 INFO OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped!

19/08/17 05:34:33 INFO SparkContext: Successfully stopped SparkContext

19/08/17 05:34:33 INFO ShutdownHookManager: Shutdown hook called

19/08/17 05:34:33 INFO ShutdownHookManager: Deleting directory /tmp/spark-790e464a-289b-4d6c-8607-20f65e783937

19/08/17 05:34:33 INFO ShutdownHookManager: Deleting directory /tmp/spark-e66f306d-4c1f-44a8-92e7-85251aba5fa1
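When SparkPi is run from a script, the estimate can be checked automatically instead of by reading the log. A minimal sketch, assuming the SparkPi output arrives on stdin. The function names extract_pi and check_pi and the default tolerance of 0.01 are hypothetical choices, not part of Spark:

```shell
#!/usr/bin/env bash
# Hypothetical helpers: check the SparkPi estimate printed on stdout.

# Print the numeric estimate from lines like "Pi is roughly 3.141123141123141".
extract_pi() {
  awk '/^Pi is roughly /{ print $4 }'
}

# Read SparkPi output on stdin and compare the last estimate with pi.
# TOLERANCE defaults to 0.01 (an arbitrary assumption).
# Returns 0 if close enough, 1 if not, 2 if no estimate was found.
check_pi() {
  local tolerance="${1:-0.01}"
  local estimate
  estimate="$(extract_pi | tail -n 1)"
  if [ -z "$estimate" ]; then
    echo "no Pi estimate found" >&2
    return 2
  fi
  # awk does the floating-point comparison that plain shell arithmetic cannot.
  awk -v e="$estimate" -v t="$tolerance" 'BEGIN {
    d = e - 3.141592653589793
    if (d < 0) d = -d
    if (d <= t) exit 0
    exit 1
  }'
}
```

For example:

spark-submit --class org.apache.spark.examples.SparkPi --master spark://vm1:7077 /usr/share/spark/examples/jars/spark-examples_2.11-2.4.1.jar 10 2>/dev/null | check_pi && echo OK

SparkPi prints its result with println (stdout), while Spark's default log4j configuration writes log messages to stderr, so discarding stderr leaves only the result line.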

Run in cluster mode:

spark-submit --class org.apache.spark.examples.SparkPi --master spark://vm1:7077 --deploy-mode cluster /usr/share/spark/examples/jars/spark-examples_2.11-2.4.1.jar 10

Output:

log4j:WARN No appenders could be found for logger (org.apache.hadoop.util.NativeCodeLoader).

log4j:WARN Please initialize the log4j system properly.

log4j:WARN See http://logging.apache.org/log4j/1.2/faq.html#noconfig for more info.

Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties

19/08/17 05:48:53 INFO SecurityManager: Changing view acls to: root

19/08/17 05:48:53 INFO SecurityManager: Changing modify acls to: root

19/08/17 05:48:53 INFO SecurityManager: Changing view acls groups to:

19/08/17 05:48:53 INFO SecurityManager: Changing modify acls groups to:

19/08/17 05:48:53 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(root); groups with view permissions: Set(); users with modify permissions: Set(root); groups with modify permissions: Set()

19/08/17 05:48:53 INFO Utils: Successfully started service 'driverClient' on port 46251.

19/08/17 05:48:53 INFO TransportClientFactory: Successfully created connection to vm1/172.169.18.28:7077 after 28 ms (0 ms spent in bootstraps)

19/08/17 05:48:53 INFO ClientEndpoint: Driver successfully submitted as driver-20190817054853-0001

19/08/17 05:48:53 INFO ClientEndpoint: ... waiting before polling master for driver state

19/08/17 05:48:58 INFO ClientEndpoint: ... polling master for driver state

19/08/17 05:48:58 INFO ClientEndpoint: State of driver-20190817054853-0001 is RUNNING

19/08/17 05:48:58 INFO ClientEndpoint: Driver running on 172.169.18.2:42186 (worker-20190817051849-172.169.18.2-42186)

19/08/17 05:48:58 INFO ShutdownHookManager: Shutdown hook called

19/08/17 05:48:58 INFO ShutdownHookManager: Deleting directory /tmp/spark-9a6e7757-b29f-48b7-bab2-1c3f89eda79a

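The client output is short because in cluster mode the driver runs on a worker (here 172.169.18.2) rather than on the submitting machine. The driver's detailed log, including the Pi result, can be viewed in the Spark web UI.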
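The two details worth keeping from this short output are the driver ID and the worker running the driver, since they identify the driver in the master web UI. A minimal sketch that extracts both from saved client output. The function names driver_id and driver_worker are hypothetical, and the sed patterns assume the Spark 2.4.1 message wording shown in the log above:

```shell
#!/usr/bin/env bash
# Hypothetical helpers for cluster-mode client output (read on stdin).
# The patterns assume the Spark 2.4.1 message wording.

# Print the submission ID, e.g. driver-20190817054853-0001
driver_id() {
  sed -n 's/.*Driver successfully submitted as \(driver-[0-9-]*\).*/\1/p' | head -n 1
}

# Print "<host:port> <worker-id>" of the worker that runs the driver
driver_worker() {
  sed -n 's/.*Driver running on \([^ ]*\) (\(worker-[^)]*\)).*/\1 \2/p' | head -n 1
}
```

For example, save the output with spark-submit ... --deploy-mode cluster ... 2>&1 | tee submit.log, then run driver_id < submit.log and driver_worker < submit.log.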

Local mode (with Docker)

Installing Docker

Refer to the linked installation guide.

Test

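Big Data Europe is a big data community organization that focuses on the technical issues raised by European big data projects. We use the public images it publishes on Docker Hub.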

docker run -it --rm bde2020/spark-base:2.4.1-hadoop2.7 /spark/bin/spark-submit --class org.apache.spark.examples.SparkPi --master "local[*]" /spark/examples/jars/spark-examples_2.11-2.4.1.jar 10

The result matches the run without Docker.

Standalone mode (with Docker)

Create the overlay network

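Refer to the linked guide. Overlay networks require Docker Swarm mode: vm1, vm2 and vm3 must first be joined into one swarm, and the network must be created on a manager node. The --attachable flag lets standalone containers started with docker run join the network.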

docker network create overlay --driver=overlay --attachable
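Re-running docker network create fails once the network exists. A minimal sketch of a hypothetical ensure_overlay_network helper that creates the network only when docker network inspect cannot find it; the default name overlay matches the command above:

```shell
#!/usr/bin/env bash
# Hypothetical helper: create the attachable overlay network only if it is
# missing. Run it on a swarm manager node (overlay networks need swarm mode).
ensure_overlay_network() {
  local name="${1:-overlay}"
  # "docker network inspect" exits non-zero when the network does not exist
  if docker network inspect "$name" >/dev/null 2>&1; then
    echo "network $name already exists"
    return 0
  fi
  docker network create "$name" --driver=overlay --attachable
}
```

This makes the setup step safe to repeat, for example in a provisioning script.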

Start the cluster

Run the following commands on vm1, vm2 and vm3 respectively:

#vm1

docker run --network overlay --name spark-master -h spark-master -e ENABLE_INIT_DAEMON=false -d bde2020/spark-master:2.4.1-hadoop2.7

#vm2

docker run --network overlay --name spark-worker-1 -h spark-worker-1 -e ENABLE_INIT_DAEMON=false -d bde2020/spark-worker:2.4.1-hadoop2.7

#vm3

docker run --network overlay --name spark-worker-2 -h spark-worker-2 -e ENABLE_INIT_DAEMON=false -d bde2020/spark-worker:2.4.1-hadoop2.7
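The three commands differ only in the image and the container name. A sketch of a hypothetical start_spark_node wrapper, assuming the overlay network and the image tags used above:

```shell
#!/usr/bin/env bash
# Hypothetical wrapper around the three "docker run" commands above.
# Usage: start_spark_node master|worker CONTAINER_NAME
start_spark_node() {
  local role="$1" name="$2" image
  case "$role" in
    master) image="bde2020/spark-master:2.4.1-hadoop2.7" ;;
    worker) image="bde2020/spark-worker:2.4.1-hadoop2.7" ;;
    *) echo "unknown role: $role" >&2; return 1 ;;
  esac
  # Container name and hostname are kept identical, as in the original commands
  docker run --network overlay --name "$name" -h "$name" \
    -e ENABLE_INIT_DAEMON=false -d "$image"
}
```

On vm1 run start_spark_node master spark-master, on vm2 start_spark_node worker spark-worker-1, and on vm3 start_spark_node worker spark-worker-2.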

spark-master startup log:

Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties

19/08/20 09:32:25 INFO Master: Started daemon with process name: 12@spark-master

19/08/20 09:32:25 INFO SignalUtils: Registered signal handler for TERM

19/08/20 09:32:25 INFO SignalUtils: Registered signal handler for HUP

19/08/20 09:32:25 INFO SignalUtils: Registered signal handler for INT

19/08/20 09:32:26 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

19/08/20 09:32:26 INFO SecurityManager: Changing view acls to: root

19/08/20 09:32:26 INFO SecurityManager: Changing modify acls to: root

19/08/20 09:32:26 INFO SecurityManager: Changing view acls groups to:

19/08/20 09:32:26 INFO SecurityManager: Changing modify acls groups to:

19/08/20 09:32:26 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(root); groups with view permissions: Set(); users with modify permissions: Set(root); groups with modify permissions: Set()

19/08/20 09:32:26 INFO Utils: Successfully started service 'sparkMaster' on port 7077.

19/08/20 09:32:26 INFO Master: Starting Spark master at spark://spark-master:7077

19/08/20 09:32:26 INFO Master: Running Spark version 2.4.1

19/08/20 09:32:26 INFO Utils: Successfully started service 'MasterUI' on port 8080.

19/08/20 09:32:26 INFO MasterWebUI: Bound MasterWebUI to 0.0.0.0, and started at http://spark-master:8080

19/08/20 09:32:27 INFO Master: I have been elected leader! New state: ALIVE

19/08/20 09:32:33 INFO Master: Registering worker 10.0.0.4:35308 with 2 cores, 2.7 GB RAM

19/08/20 09:32:37 INFO Master: Registering worker 10.0.0.6:45378 with 2 cores, 2.7 GB RAM

Both workers have joined the cluster.
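The same check can be scripted on vm1 by extracting the registered worker addresses from the master log. A minimal sketch; the function name registered_workers is hypothetical and the pattern assumes the message wording shown above:

```shell
#!/usr/bin/env bash
# Hypothetical helper: list the worker addresses the master registered,
# reading the master log on stdin (message wording as in Spark 2.4.1).
registered_workers() {
  sed -n 's/.*INFO Master: Registering worker \([^ ]*\) with .*/\1/p'
}
```

For example, docker logs spark-master 2>&1 | registered_workers | wc -l should print 2 once both workers have registered.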

spark-worker-1 startup log:

Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties

19/08/20 09:32:31 INFO Worker: Started daemon with process name: 11@spark-worker-1

19/08/20 09:32:31 INFO SignalUtils: Registered signal handler for TERM

19/08/20 09:32:31 INFO SignalUtils: Registered signal handler for HUP

19/08/20 09:32:31 INFO SignalUtils: Registered signal handler for INT

19/08/20 09:32:32 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

19/08/20 09:32:32 INFO SecurityManager: Changing view acls to: root

19/08/20 09:32:32 INFO SecurityManager: Changing modify acls to: root

19/08/20 09:32:32 INFO SecurityManager: Changing view acls groups to:

19/08/20 09:32:32 INFO SecurityManager: Changing modify acls groups to:

19/08/20 09:32:32 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(root); groups with view permissions: Set(); users with modify permissions: Set(root); groups with modify permissions: Set()

19/08/20 09:32:32 INFO Utils: Successfully started service 'sparkWorker' on port 35308.

19/08/20 09:32:33 INFO Worker: Starting Spark worker 10.0.0.4:35308 with 2 cores, 2.7 GB RAM

19/08/20 09:32:33 INFO Worker: Running Spark version 2.4.1

19/08/20 09:32:33 INFO Worker: Spark home: /spark

19/08/20 09:32:33 INFO Utils: Successfully started service 'WorkerUI' on port 8081.

19/08/20 09:32:33 INFO WorkerWebUI: Bound WorkerWebUI to 0.0.0.0, and started at http://spark-worker-1:8081

19/08/20 09:32:33 INFO Worker: Connecting to master spark-master:7077...

19/08/20 09:32:33 INFO TransportClientFactory: Successfully created connection to spark-master/10.0.0.2:7077 after 47 ms (0 ms spent in bootstraps)

19/08/20 09:32:33 INFO Worker: Successfully registered with master spark://spark-master:7077

spark-worker-2 startup log:

Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties

19/08/20 09:32:36 INFO Worker: Started daemon with process name: 11@spark-worker-2

19/08/20 09:32:36 INFO SignalUtils: Registered signal handler for TERM

19/08/20 09:32:36 INFO SignalUtils: Registered signal handler for HUP

19/08/20 09:32:36 INFO SignalUtils: Registered signal handler for INT

19/08/20 09:32:36 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

19/08/20 09:32:36 INFO SecurityManager: Changing view acls to: root

19/08/20 09:32:36 INFO SecurityManager: Changing modify acls to: root

19/08/20 09:32:36 INFO SecurityManager: Changing view acls groups to:

19/08/20 09:32:36 INFO SecurityManager: Changing modify acls groups to:

19/08/20 09:32:36 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(root); groups with view permissions: Set(); users with modify permissions: Set(root); groups with modify permissions: Set()

19/08/20 09:32:37 INFO Utils: Successfully started service 'sparkWorker' on port 45378.

19/08/20 09:32:37 INFO Worker: Starting Spark worker 10.0.0.6:45378 with 2 cores, 2.7 GB RAM

19/08/20 09:32:37 INFO Worker: Running Spark version 2.4.1

19/08/20 09:32:37 INFO Worker: Spark home: /spark

19/08/20 09:32:37 INFO Utils: Successfully started service 'WorkerUI' on port 8081.

19/08/20 09:32:37 INFO WorkerWebUI: Bound WorkerWebUI to 0.0.0.0, and started at http://spark-worker-2:8081

19/08/20 09:32:37 INFO Worker: Connecting to master spark-master:7077...

19/08/20 09:32:37 INFO TransportClientFactory: Successfully created connection to spark-master/10.0.0.2:7077 after 47 ms (0 ms spent in bootstraps)

19/08/20 09:32:38 INFO Worker: Successfully registered with master spark://spark-master:7077
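Each worker log ends with "Successfully registered with master". A minimal sketch of a hypothetical wait_for_log helper that polls docker logs once per second until a pattern appears, for example in a startup script; the 60-second default timeout is an arbitrary assumption:

```shell
#!/usr/bin/env bash
# Hypothetical helper: wait until CONTAINER's log contains PATTERN.
# Usage: wait_for_log CONTAINER PATTERN [TIMEOUT_SECONDS]
wait_for_log() {
  local container="$1" pattern="$2" timeout="${3:-60}"
  local waited=0
  # docker logs replays the whole log each time; grep -q stops at the first match
  until docker logs "$container" 2>&1 | grep -q -- "$pattern"; do
    if [ "$waited" -ge "$timeout" ]; then
      echo "timed out waiting for '$pattern' in $container" >&2
      return 1
    fi
    sleep 1
    waited=$((waited + 1))
  done
}
```

On vm2, for example: wait_for_log spark-worker-1 'Successfully registered with master'. docker logs only sees containers on the local Docker daemon, so run it on the host where the container was started.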

Test

Pull the spark-submit image and first look at what its submit script does:

docker run -it --rm bde2020/spark-submit:2.4.1-hadoop2.7 cat submit.sh

Output:

#!/bin/bash

export SPARK_MASTER_URL=spark://${SPARK_MASTER_NAME}:${SPARK_MASTER_PORT}
export SPARK_HOME=/spark

/wait-for-step.sh
/execute-step.sh

if [ -f "${SPARK_APPLICATION_JAR_LOCATION}" ]; then
  echo "Submit application ${SPARK_APPLICATION_JAR_LOCATION} with main class ${SPARK_APPLICATION_MAIN_CLASS} to Spark master ${SPARK_MASTER_URL}"
  echo "Passing arguments ${SPARK_APPLICATION_ARGS}"
  /spark/bin/spark-submit \
    --class ${SPARK_APPLICATION_MAIN_CLASS} \
    --master ${SPARK_MASTER_URL} \
    ${SPARK_SUBMIT_ARGS} \
    ${SPARK_APPLICATION_JAR_LOCATION} ${SPARK_APPLICATION_ARGS}
else
  if [ -f "${SPARK_APPLICATION_PYTHON_LOCATION}" ]; then
    echo "Submit application ${SPARK_APPLICATION_PYTHON_LOCATION} to Spark master ${SPARK_MASTER_URL}"
    echo "Passing arguments ${SPARK_APPLICATION_ARGS}"
    PYSPARK_PYTHON=python3 /spark/bin/spark-submit \
      --master ${SPARK_MASTER_URL} \
      ${SPARK_SUBMIT_ARGS} \
      ${SPARK_APPLICATION_PYTHON_LOCATION} ${SPARK_APPLICATION_ARGS}
  else
    echo "Not recognized application."
  fi
fi

/finish-step.sh

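The script shows that SPARK_APPLICATION_JAR_LOCATION (or SPARK_APPLICATION_PYTHON_LOCATION for a Python application) must point to an existing file inside the container and that, for a JAR, SPARK_APPLICATION_MAIN_CLASS must be set; SPARK_SUBMIT_ARGS and SPARK_APPLICATION_ARGS are optional. SPARK_MASTER_URL is not an input: the first line of the script overwrites it with spark://${SPARK_MASTER_NAME}:${SPARK_MASTER_PORT}, so the master is chosen with SPARK_MASTER_NAME and SPARK_MASTER_PORT. The master container spark-master is reachable by name on the overlay network.

docker run --network overlay \
  -e ENABLE_INIT_DAEMON=false \
  -e SPARK_APPLICATION_JAR_LOCATION="/spark/examples/jars/spark-examples_2.11-2.4.1.jar" \
  -e SPARK_APPLICATION_MAIN_CLASS="org.apache.spark.examples.SparkPi" \
  -e SPARK_MASTER_NAME="spark-master" \
  -e SPARK_MASTER_PORT="7077" \
  -e SPARK_SUBMIT_ARGS="--deploy-mode client" \
  -e SPARK_APPLICATION_ARGS="10" \
  -it --rm bde2020/spark-submit:2.4.1-hadoop2.7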

The test log is similar to the one from the Standalone mode (without Docker).
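The branch logic of submit.sh can be restated as small functions to make the rules about its variables explicit. A sketch with the hypothetical names effective_master_url and submit_mode; the file checks in submit.sh refer to paths inside the container, so running these on the host only reproduces the logic, not the container's file layout:

```shell
#!/usr/bin/env bash
# Hypothetical restatement of the branches in submit.sh.

# The master URL submit.sh actually uses (it overwrites SPARK_MASTER_URL).
effective_master_url() {
  echo "spark://${SPARK_MASTER_NAME}:${SPARK_MASTER_PORT}"
}

# Print "jar" or "python" for the branch submit.sh would take, or fail.
submit_mode() {
  if [ -f "${SPARK_APPLICATION_JAR_LOCATION}" ]; then
    # submit.sh expands --class unquoted, so an empty value would shift
    # the remaining spark-submit arguments
    if [ -z "${SPARK_APPLICATION_MAIN_CLASS}" ]; then
      echo "SPARK_APPLICATION_MAIN_CLASS is required for a JAR" >&2
      return 1
    fi
    echo jar
  elif [ -f "${SPARK_APPLICATION_PYTHON_LOCATION}" ]; then
    echo python
  else
    echo "Not recognized application." >&2
    return 1
  fi
}
```

The JAR branch is checked first, as in submit.sh, so setting both locations selects the JAR.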

Docker Stack deployment

Write the configuration file spark.yaml:

version: '3.7'

services:
  spark-master:
    image: bde2020/spark-master:2.4.1-hadoop2.7
    hostname: spark-master
    networks:
      - overlay
    ports:
      - 8080:8080
      - 7077:7077
    environment:
      - ENABLE_INIT_DAEMON=false
      - SPARK_MASTER_HOST=0.0.0.0
      - SPARK_MASTER_PORT=7077
      - SPARK_MASTER_WEBUI_PORT=8080
    volumes:
      - /etc/localtime:/etc/localtime
    deploy:
      restart_policy:
        condition: on-failure
      placement:
        constraints:
          - node.hostname==vm1

  spark-worker-1:
    image: bde2020/spark-worker:2.4.1-hadoop2.7
    hostname: spark-worker-1
    networks:
      - overlay
    ports:
      - 8081:8081
    environment:
      - ENABLE_INIT_DAEMON=false
      - SPARK_WORKER_WEBUI_PORT=8081
      - SPARK_MASTER=spark://spark-master:7077
    volumes:
      - /etc/localtime:/etc/localtime
    depends_on:
      - spark-master
    deploy:
      restart_policy:
        condition: on-failure
      placement:
        constraints:
          - node.hostname==vm2

  spark-worker-2:
    image: bde2020/spark-worker:2.4.1-hadoop2.7
    hostname: spark-worker-2
    networks:
      - overlay
    ports:
      - 8082:8082
    environment:
      - ENABLE_INIT_DAEMON=false
      - SPARK_WORKER_WEBUI_PORT=8082
      - SPARK_MASTER=spark://spark-master:7077
    volumes:
      - /etc/localtime:/etc/localtime
    depends_on:
      - spark-master
    deploy:
      restart_policy:
        condition: on-failure
      placement:
        constraints:
          - node.hostname==vm3

networks:
  overlay:
    driver: overlay
    attachable: true

Deploy the stack:

docker stack deploy -c spark.yaml spark

The startup logs are the same as for the cluster above.
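To confirm the deployment from a manager node without reading logs, each service's running replica count can be compared with its target. A minimal sketch of a hypothetical stack_ready helper built on docker stack services --format, assuming the stack name spark used above:

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed only if every service in STACK has all
# desired replicas running (Replicas column like "1/1").
stack_ready() {
  local stack="${1:-spark}" services not_ready
  services="$(docker stack services "$stack" --format '{{.Name}} {{.Replicas}}')" || return 1
  if [ -z "$services" ]; then
    echo "no services in stack $stack" >&2
    return 1
  fi
  # Print the name of each service whose running count differs from the target
  not_ready="$(printf '%s\n' "$services" | awk '{ split($2, r, "/"); if (r[1] != r[2]) print $1 }')"
  if [ -n "$not_ready" ]; then
    echo "not ready: $not_ready" >&2
    return 1
  fi
}
```

Run it on a swarm manager, for example stack_ready spark && echo "stack is up".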

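Docker Stack test

Docker Stack prefixes network names with the stack name, so the overlay network of the stack spark is spark_overlay. The master is selected with SPARK_MASTER_NAME and SPARK_MASTER_PORT, because the first line of submit.sh (shown above) overwrites any SPARK_MASTER_URL passed in:

docker run --network spark_overlay \
  -e ENABLE_INIT_DAEMON=false \
  -e SPARK_APPLICATION_JAR_LOCATION="/spark/examples/jars/spark-examples_2.11-2.4.1.jar" \
  -e SPARK_APPLICATION_MAIN_CLASS="org.apache.spark.examples.SparkPi" \
  -e SPARK_MASTER_NAME="spark-master" \
  -e SPARK_MASTER_PORT="7077" \
  -e SPARK_SUBMIT_ARGS="--deploy-mode client" \
  -e SPARK_APPLICATION_ARGS="10" \
  -it --rm bde2020/spark-submit:2.4.1-hadoop2.7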
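The submit commands for the manually started cluster and for the stack differ only in the network name (overlay versus spark_overlay). A sketch of a hypothetical submit_spark_pi wrapper that takes the network as a parameter. It sets SPARK_MASTER_NAME and SPARK_MASTER_PORT because submit.sh builds the master URL from them, and it omits -it so that it can run without a terminal:

```shell
#!/usr/bin/env bash
# Hypothetical wrapper for the SparkPi submit commands.
# Usage: submit_spark_pi [NETWORK] [SLICES]
#   NETWORK: "overlay" (manual cluster) or "spark_overlay" (Docker Stack)
submit_spark_pi() {
  local network="${1:-overlay}" slices="${2:-10}"
  # No -it: "docker run -it" fails when stdin is not a terminal (cron, CI)
  docker run --network "$network" \
    -e ENABLE_INIT_DAEMON=false \
    -e SPARK_MASTER_NAME=spark-master \
    -e SPARK_MASTER_PORT=7077 \
    -e SPARK_APPLICATION_JAR_LOCATION=/spark/examples/jars/spark-examples_2.11-2.4.1.jar \
    -e SPARK_APPLICATION_MAIN_CLASS=org.apache.spark.examples.SparkPi \
    -e SPARK_SUBMIT_ARGS="--deploy-mode client" \
    -e SPARK_APPLICATION_ARGS="$slices" \
    --rm bde2020/spark-submit:2.4.1-hadoop2.7
}
```

For example, submit_spark_pi spark_overlay 10 submits SparkPi to the stack-deployed cluster.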