maskrcnn_benchmark study notes: modeling\roi_heads\box_head\roi_box_feature_extractors.py

The relevant part of the config is excerpted below:

```yaml
MODEL:
  META_ARCHITECTURE: "GeneralizedRCNN"
  WEIGHT: "catalog://ImageNetPretrained/MSRA/R-50"
  BACKBONE:
    CONV_BODY: "R-50-FPN"
  RESNETS:
    BACKBONE_OUT_CHANNELS: 256
  ROI_HEADS:
    USE_FPN: True
  ROI_BOX_HEAD:
    POOLER_RESOLUTION: 7
    POOLER_SCALES: (0.25, 0.125, 0.0625, 0.03125)
    POOLER_SAMPLING_RATIO: 2
    FEATURE_EXTRACTOR: "FPN2MLPFeatureExtractor"
    PREDICTOR: "FPNPredictor"
    NUM_CLASSES: 2
```

1. About POOLER_SCALES: (0.25, 0.125, 0.0625, 0.03125) and POOLER_SAMPLING_RATIO: 2

```python
_C.MODEL.ROI_BOX_HEAD.POOLER_SCALES = (0.25, 0.125, 0.0625, 0.03125)
_C.MODEL.ROI_BOX_HEAD.POOLER_SAMPLING_RATIO = 2
```

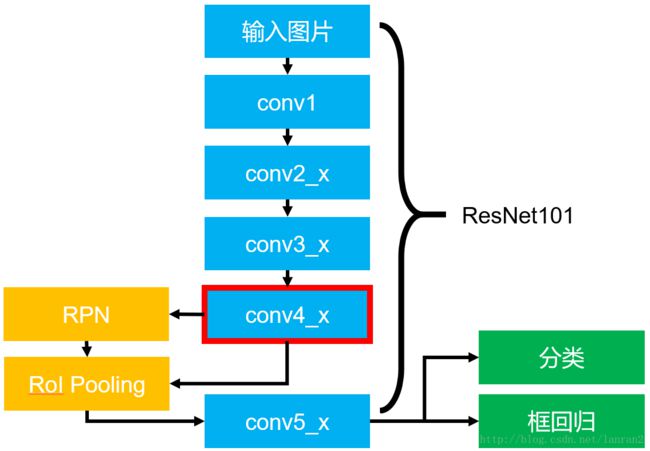

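POOLER_SCALES are the downsampling ratios that result from the strides of the backbone (a ResNet or ResNeXt architecture). There are four of them because the last four stages, conv2_x → conv5_x, serve as the feature-extraction levels. Their FPN outputs feed both the RPN and the RoI heads. So POOLER_SCALES: (0.25, 0.125, 0.0625, 0.03125) are simply the resolutions of stages 2 to 5 relative to the input image: 1/4, 1/8, 1/16 and 1/32. BTW, you should understand the ResNet and ResNeXt architectures well to better understand this explanation.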
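The relation between stage strides, POOLER_SCALES and FPN level numbers can be checked with a few lines. This is a minimal sketch that assumes the standard ResNet layout described here. The helper name `resnet_strides` is hypothetical. As far as I can tell from poolers.py, maskrcnn_benchmark's Pooler recovers the level range the same way, as -log2 of the first and last scale.

```python
import math

def resnet_strides():
    """Cumulative stride at the output of conv2_x..conv5_x for a standard ResNet/ResNeXt."""
    stride = 2 * 2                   # conv1 (stride 2) and the stem max pool (stride 2)
    strides = [stride]               # conv2_x: 1/4 of the input
    for _ in range(3):               # conv3_x, conv4_x, conv5_x each downsample by 2 once
        stride *= 2
        strides.append(stride)
    return strides

strides = resnet_strides()
scales = tuple(1 / s for s in strides)               # what POOLER_SCALES lists
levels = [round(-math.log2(sc)) for sc in scales]    # FPN level k <-> stride 2**k
print(strides, scales, levels)
# [4, 8, 16, 32] (0.25, 0.125, 0.0625, 0.03125) [2, 3, 4, 5]
```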

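For example, suppose you have found an RoI with coordinates [0, 0, 64, 64] in the input image. Suppose also that you want to pool its features from every level of the backbone. (The wording here is rather fun. "Pool" is usually rendered in Chinese as 池化, "pooling", but "pool its features" really means gathering its features. So pooling is essentially gathering and extracting features step by step.)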

Since there is a stride of 2 in the conv1 layer and another stride of 2 in the max-pooling layer that ends the stem, the feature map at conv2_x is 4x smaller than the original image. That gives a scale of 0.25. Each of the following stages (conv3_x, conv4_x, conv5_x) downsamples once more with a stride of 2, so the scale is halved at every level.

Hence, the coordinates of your RoI will be:

[0, 0, 16, 16] in the first-level feature map (scale 0.25)

[0, 0, 8, 8] in the second-level feature map (scale 0.125)

[0, 0, 4, 4] in the third-level feature map (scale 0.0625)

[0, 0, 2, 2] in the fourth-level feature map (scale 0.03125)
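The coordinates above are just the RoI multiplied by each scale. In FPN, though, each RoI is in practice pooled from a single level. That level is picked with the heuristic from the FPN paper, k = floor(k0 + log2(sqrt(w*h) / 224)) with k0 = 4. maskrcnn_benchmark's LevelMapper in poolers.py implements this rule. The sketch below is a plain re-implementation, not the library's code. It computes the box area without the +1 that the library's BoxList.area() may add. `scale_roi` and `assign_fpn_level` are hypothetical names.

```python
import math

def scale_roi(roi, scale):
    """Project an RoI (x1, y1, x2, y2) from image coordinates onto a feature map with this scale."""
    return [c * scale for c in roi]

def assign_fpn_level(roi, k_min=2, k_max=5, canonical_scale=224, canonical_level=4, eps=1e-6):
    """FPN heuristic: k = floor(k0 + log2(sqrt(w*h) / 224)), clamped to [k_min, k_max]."""
    x1, y1, x2, y2 = roi
    s = math.sqrt((x2 - x1) * (y2 - y1))
    k = math.floor(canonical_level + math.log2(s / canonical_scale + eps))
    return min(max(k, k_min), k_max)

roi = [0, 0, 64, 64]
for scale in (0.25, 0.125, 0.0625, 0.03125):
    print(scale, scale_roi(roi, scale))      # 0.25 [0.0, 0.0, 16.0, 16.0], ...
print("FPN level:", assign_fpn_level(roi))   # 2, i.e. the 1/4-scale map
```

So in this example the 64×64 RoI would actually be pooled only from the first level (stride 4). Larger boxes move to coarser levels.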

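The sampling_ratio parameter sets how many sampling points, per axis, RoIAlign bilinearly interpolates inside each output bin before averaging them. With POOLER_SAMPLING_RATIO: 2, each of the 7×7 output bins is the average of 2 × 2 = 4 interpolated values. A value ≤ 0 lets the kernel choose adaptively, using ceil(RoI size / output size) points per axis.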
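To make the role of sampling_ratio concrete, here is a simplified single-channel RoIAlign in numpy. It is a sketch of the original (non-"aligned", no half-pixel offset) sampling scheme and not maskrcnn_benchmark's CUDA/C++ kernel. Border handling is simplified to clamping, and the function names are hypothetical. Each output bin averages a grid_h × grid_w set of bilinearly interpolated points placed at the centres of equal sub-cells.

```python
import numpy as np

def bilinear(feat, y, x):
    """Bilinear interpolation of a 2-D array at real-valued (y, x); coordinates are clamped to the map."""
    h, w = feat.shape
    y = min(max(y, 0.0), h - 1.0)
    x = min(max(x, 0.0), w - 1.0)
    y0, x0 = int(y), int(x)                          # floor, since y, x >= 0
    y1, x1 = min(y0 + 1, h - 1), min(x0 + 1, w - 1)
    ly, lx = y - y0, x - x0
    return ((1 - ly) * (1 - lx) * feat[y0, x0] + (1 - ly) * lx * feat[y0, x1]
            + ly * (1 - lx) * feat[y1, x0] + ly * lx * feat[y1, x1])

def roi_align_single(feat, roi, output_size=7, spatial_scale=0.25, sampling_ratio=2):
    """Simplified RoIAlign for one channel and one RoI (x1, y1, x2, y2) in image coordinates."""
    x1, y1, x2, y2 = (c * spatial_scale for c in roi)      # project the RoI onto this feature map
    roi_w, roi_h = max(x2 - x1, 1.0), max(y2 - y1, 1.0)    # avoid degenerate RoIs
    bin_w, bin_h = roi_w / output_size, roi_h / output_size
    # sampling_ratio > 0: fixed grid per bin; otherwise adaptive, ceil(bin size) points per axis
    grid_h = sampling_ratio if sampling_ratio > 0 else int(np.ceil(bin_h))
    grid_w = sampling_ratio if sampling_ratio > 0 else int(np.ceil(bin_w))
    out = np.zeros((output_size, output_size))
    for i in range(output_size):
        for j in range(output_size):
            total = 0.0
            for iy in range(grid_h):
                y = y1 + i * bin_h + (iy + 0.5) * bin_h / grid_h
                for ix in range(grid_w):
                    x = x1 + j * bin_w + (ix + 0.5) * bin_w / grid_w
                    total += bilinear(feat, y, x)
            out[i, j] = total / (grid_h * grid_w)           # average of the sampled points
    return out

# A feature map whose value equals the x coordinate: each bin should return its bin-centre x.
feat = np.tile(np.arange(32, dtype=float), (32, 1))
pooled = roi_align_single(feat, [0, 0, 64, 64])   # 1/4 scale -> RoI [0, 0, 16, 16] on this map
print(pooled.shape)                               # (7, 7)
```

Averaging several interpolated points, instead of taking one quantized cell, is what lets RoIAlign avoid the rounding of RoI Pooling.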

That is, here:

(1)

(2) In ResNet-based Faster R-CNN (without FPN), the feature map shared with the RPN is the output of conv4_x.

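One can see that the final output of conv4_x is the part shared by the RPN and RoI Pooling. conv5_x (9 layers in total) is then applied to the stack of feature maps produced by RoI Pooling (14 x 14 x 1024). This size also happens to match the input of conv5_x in the original ResNet-101.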

conv4_x(14*14*1024)→RoI Pooling→(14*14*1024)→conv5_x(7*7*2048)→pooling→1*1*2048→2048 x 1000

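(Is only the RoI Pooling result used as the input of conv5_x, or is the RoI Pooling result combined with the conv5_x mapping that follows in ResNet-101?) In maskrcnn_benchmark it is the former. ResNet50Conv5ROIFeatureExtractor.forward() below first calls self.pooler. It then passes only the pooled features through self.head, which is the conv5_x stage (StageSpec index=4) built with resnet.ResNetHead.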

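Finally, an average pooling layer is applied over the whole channel×H×W feature map. It pools each H×W map into a single pixel, giving a channel×1×1 feature. conv5_x outputs a 2048 × (7 × 7) feature map; pooling takes the average of each 7×7 map, leaving 2048 × (1 × 1). In the ImageNet classifier the final fc is 2048 × 1000. In Faster R-CNN this 2048-d feature instead feeds separate fc layers for classification and box regression.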
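A numpy sketch of this last step follows. It assumes a 2048-channel conv5_x output and random stand-in weights, with biases omitted. num_classes = 2 is taken from NUM_CLASSES in the config above. The two separate linear maps mirror what, to my understanding, maskrcnn_benchmark's FastRCNNPredictor does with its cls_score and bbox_pred layers. This is not that class's code.

```python
import numpy as np

rng = np.random.default_rng(0)
num_rois, channels, num_classes = 3, 2048, 2

conv5_out = rng.standard_normal((num_rois, channels, 7, 7))       # conv5_x output per RoI
pooled = conv5_out.mean(axis=(2, 3))                               # average pooling: 2048 x 7 x 7 -> 2048
W_cls = rng.standard_normal((channels, num_classes)) * 0.01        # stand-in classification weights
W_box = rng.standard_normal((channels, 4 * num_classes)) * 0.001   # stand-in box-regression weights

cls_logits = pooled @ W_cls    # (num_rois, num_classes)
box_deltas = pooled @ W_box    # (num_rois, 4 * num_classes): one box per class
print(pooled.shape, cls_logits.shape, box_deltas.shape)  # (3, 2048) (3, 2) (3, 8)
```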

Mask R-CNN, on the other hand, looks like this:

(RCNN Head):

conv4_x(sizew4*sizeh4*1024)→conv5_x(sizew5*sizeh5*2048)→RoIAlign→7*7*256→1*1*1024→1*1*1024→1024

(Mask Head):

conv4_x(sizew4*sizeh4*1024)→RoIAlign→14*14*256→×4→14*14*256→28*28*256→28*28*C

(Keypoints Head):

conv4_x(sizew4*sizeh4*1024)→RoIAlign→14*14*256→14*14*512→×8→14*14*512→56*56*17 (a 4×4 stride-2 deconvolution gives 28*28*17, followed by 2× bilinear upsampling)
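The upsampling sizes in these head diagrams can be checked with the ConvTranspose2d output-size formula from the PyTorch documentation. The kernel/stride/padding values are my reading of maskrcnn_benchmark's mask predictor (a 2×2, stride-2 deconvolution) and keypoint predictor (a 4×4, stride-2, padding-1 deconvolution followed by 2× interpolation). Treat them as assumptions.

```python
def deconv_out(size, kernel, stride=1, padding=0, output_padding=0, dilation=1):
    """Output size of nn.ConvTranspose2d along one spatial dimension (PyTorch docs formula)."""
    return (size - 1) * stride - 2 * padding + dilation * (kernel - 1) + output_padding + 1

# The stacked 3x3 convs (padding 1) keep RoI features at 14x14, so both heads start from 14.
mask_size = deconv_out(14, kernel=2, stride=2)              # mask head: 2x2 deconv, stride 2
kp_lowres = deconv_out(14, kernel=4, stride=2, padding=1)   # keypoint head: 4x4 deconv, stride 2
kp_size = kp_lowres * 2                                     # then 2x bilinear upsampling
print(mask_size, kp_lowres, kp_size)   # 28 28 56
```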

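3. FEATURE_EXTRACTOR: "FPN2MLPFeatureExtractor" selects the feature extractor whose output is used for classification and box regression. It is the later part of the network, i.e. the RCNN Head. It pools each proposal with RoIAlign and passes the flattened result through two fc layers (fc6, fc7). Their 1024-d output then goes to the predictor (PREDICTOR: "FPNPredictor").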

```python
# Copyright (c) Facebook, Inc. and its affiliates. All Rights Reserved.
import torch
from torch import nn
from torch.nn import functional as F

from maskrcnn_benchmark.modeling import registry
from maskrcnn_benchmark.modeling.backbone import resnet
from maskrcnn_benchmark.modeling.poolers import Pooler
from maskrcnn_benchmark.modeling.make_layers import group_norm
from maskrcnn_benchmark.modeling.make_layers import make_fc


# C4-style box head: pool RoIs from the backbone output (conv4_x), then run conv5_x on them
@registry.ROI_BOX_FEATURE_EXTRACTORS.register("ResNet50Conv5ROIFeatureExtractor")
class ResNet50Conv5ROIFeatureExtractor(nn.Module):
    def __init__(self, config, in_channels):
        super(ResNet50Conv5ROIFeatureExtractor, self).__init__()

        resolution = config.MODEL.ROI_BOX_HEAD.POOLER_RESOLUTION
        scales = config.MODEL.ROI_BOX_HEAD.POOLER_SCALES
        sampling_ratio = config.MODEL.ROI_BOX_HEAD.POOLER_SAMPLING_RATIO
        pooler = Pooler(
            output_size=(resolution, resolution),
            scales=scales,
            sampling_ratio=sampling_ratio,
        )

        # conv5_x: ResNet stage index 4 with 3 bottleneck blocks, applied to every RoI
        stage = resnet.StageSpec(index=4, block_count=3, return_features=False)
        head = resnet.ResNetHead(
            block_module=config.MODEL.RESNETS.TRANS_FUNC,
            stages=(stage,),
            num_groups=config.MODEL.RESNETS.NUM_GROUPS,
            width_per_group=config.MODEL.RESNETS.WIDTH_PER_GROUP,
            stride_in_1x1=config.MODEL.RESNETS.STRIDE_IN_1X1,
            stride_init=None,
            res2_out_channels=config.MODEL.RESNETS.RES2_OUT_CHANNELS,
            dilation=config.MODEL.RESNETS.RES5_DILATION
        )

        self.pooler = pooler
        self.head = head
        self.out_channels = head.out_channels

    def forward(self, x, proposals):
        x = self.pooler(x, proposals)  # pool a fixed-size feature for every proposal
        x = self.head(x)               # only the pooled features go through conv5_x
        return x
```

```python
# The RCNN head part: classification + box regression
@registry.ROI_BOX_FEATURE_EXTRACTORS.register("FPN2MLPFeatureExtractor")
class FPN2MLPFeatureExtractor(nn.Module):
    """
    Heads for FPN for classification
    """

    def __init__(self, cfg, in_channels):
        super(FPN2MLPFeatureExtractor, self).__init__()

        resolution = cfg.MODEL.ROI_BOX_HEAD.POOLER_RESOLUTION  # 7 in this config (default 14)
        scales = cfg.MODEL.ROI_BOX_HEAD.POOLER_SCALES  # (0.25, 0.125, 0.0625, 0.03125)
        sampling_ratio = cfg.MODEL.ROI_BOX_HEAD.POOLER_SAMPLING_RATIO  # 2
        pooler = Pooler(
            output_size=(resolution, resolution),
            scales=scales,
            sampling_ratio=sampling_ratio,
        )
        input_size = in_channels * resolution ** 2  # 256 * 7**2 = 12544 (flattened RoI feature)
        # Hidden layer dimension when using an MLP for the RoI box head
        representation_size = cfg.MODEL.ROI_BOX_HEAD.MLP_HEAD_DIM  # 1024
        use_gn = cfg.MODEL.ROI_BOX_HEAD.USE_GN  # False

        self.pooler = pooler
        self.fc6 = make_fc(input_size, representation_size, use_gn)
        self.fc7 = make_fc(representation_size, representation_size, use_gn)
        self.out_channels = representation_size  # 1024

    def forward(self, x, proposals):
        x = self.pooler(x, proposals)  # (num_rois, 256, 7, 7)
        x = x.view(x.size(0), -1)      # flatten to (num_rois, 12544)
        x = F.relu(self.fc6(x))        # (num_rois, 1024)
        x = F.relu(self.fc7(x))        # (num_rois, 1024)
        return x
```
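A numpy sketch of the shapes flowing through FPN2MLPFeatureExtractor.forward() follows. It uses random stand-in weights and the values from the config above (256 input channels, 7×7 pooling, 1024 hidden units). As I understand it, make_fc with use_gn=False is essentially an nn.Linear. Here it is modeled as a plain matrix product plus bias, and `fpn2mlp_forward` is a hypothetical name.

```python
import numpy as np

def fpn2mlp_forward(pooled, w6, b6, w7, b7):
    """pooled: (num_rois, C, R, R) RoIAlign output -> (num_rois, representation_size)."""
    x = pooled.reshape(pooled.shape[0], -1)   # like x.view(x.size(0), -1)
    x = np.maximum(x @ w6 + b6, 0.0)          # fc6 + ReLU
    x = np.maximum(x @ w7 + b7, 0.0)          # fc7 + ReLU
    return x

rng = np.random.default_rng(0)
C, R, D = 256, 7, 1024                        # in_channels, POOLER_RESOLUTION, MLP_HEAD_DIM
pooled = rng.standard_normal((5, C, R, R), dtype=np.float32)
w6 = rng.standard_normal((C * R * R, D), dtype=np.float32) * 0.01
w7 = rng.standard_normal((D, D), dtype=np.float32) * 0.01
b6 = np.zeros(D, dtype=np.float32)
b7 = np.zeros(D, dtype=np.float32)
feats = fpn2mlp_forward(pooled, w6, b6, w7, b7)
print(C * R * R, feats.shape)   # 12544 (5, 1024)
```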

```python
@registry.ROI_BOX_FEATURE_EXTRACTORS.register("FPNXconv1fcFeatureExtractor")
class FPNXconv1fcFeatureExtractor(nn.Module):
    """
    Heads for FPN for classification
    """

    def __init__(self, cfg, in_channels):
        super(FPNXconv1fcFeatureExtractor, self).__init__()

        resolution = cfg.MODEL.ROI_BOX_HEAD.POOLER_RESOLUTION
        scales = cfg.MODEL.ROI_BOX_HEAD.POOLER_SCALES
        sampling_ratio = cfg.MODEL.ROI_BOX_HEAD.POOLER_SAMPLING_RATIO
        pooler = Pooler(
            output_size=(resolution, resolution),
            scales=scales,
            sampling_ratio=sampling_ratio,
        )
        self.pooler = pooler

        use_gn = cfg.MODEL.ROI_BOX_HEAD.USE_GN
        conv_head_dim = cfg.MODEL.ROI_BOX_HEAD.CONV_HEAD_DIM
        num_stacked_convs = cfg.MODEL.ROI_BOX_HEAD.NUM_STACKED_CONVS
        dilation = cfg.MODEL.ROI_BOX_HEAD.DILATION

        # "X conv": num_stacked_convs x [3x3 conv (+ GroupNorm) + ReLU];
        # padding=dilation keeps the RoI grid at resolution x resolution
        xconvs = []
        for ix in range(num_stacked_convs):
            xconvs.append(
                nn.Conv2d(
                    in_channels,
                    conv_head_dim,
                    kernel_size=3,
                    stride=1,
                    padding=dilation,
                    dilation=dilation,
                    bias=False if use_gn else True
                )
            )
            in_channels = conv_head_dim
            if use_gn:
                xconvs.append(group_norm(in_channels))
            xconvs.append(nn.ReLU(inplace=True))

        self.add_module("xconvs", nn.Sequential(*xconvs))
        for modules in [self.xconvs,]:
            for l in modules.modules():
                if isinstance(l, nn.Conv2d):
                    torch.nn.init.normal_(l.weight, std=0.01)
                    if not use_gn:
                        torch.nn.init.constant_(l.bias, 0)

        # "1 fc": a single fully connected layer on the flattened conv output
        input_size = conv_head_dim * resolution ** 2
        representation_size = cfg.MODEL.ROI_BOX_HEAD.MLP_HEAD_DIM
        self.fc6 = make_fc(input_size, representation_size, use_gn=False)
        self.out_channels = representation_size

    def forward(self, x, proposals):
        x = self.pooler(x, proposals)
        x = self.xconvs(x)
        x = x.view(x.size(0), -1)
        x = F.relu(self.fc6(x))
        return x
```
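Why does padding=dilation matter in FPNXconv1fcFeatureExtractor? The Conv2d output-size formula from the PyTorch documentation shows that a 3×3 convolution with padding equal to its dilation keeps the RoI grid size. That is what lets fc6 use conv_head_dim * resolution ** 2 as its input size. CONV_HEAD_DIM = 256 below is an assumed example value.

```python
def conv_out(size, kernel, stride=1, padding=0, dilation=1):
    """Output size of nn.Conv2d along one spatial dimension (formula from the PyTorch docs)."""
    return (size + 2 * padding - dilation * (kernel - 1) - 1) // stride + 1

resolution = 7        # POOLER_RESOLUTION from the config above
conv_head_dim = 256   # assumed example value for CONV_HEAD_DIM

# 3x3 convs with padding=dilation: the RoI grid stays 7x7 whatever the dilation
sizes = {d: conv_out(resolution, kernel=3, padding=d, dilation=d) for d in (1, 2, 3)}
fc6_in = conv_head_dim * resolution ** 2   # input_size of fc6
print(sizes, fc6_in)   # {1: 7, 2: 7, 3: 7} 12544
```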

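```python
def make_roi_box_feature_extractor(cfg, in_channels):
    # Look up the class registered under the config string, e.g. "FPN2MLPFeatureExtractor"
    # (registered via @registry.ROI_BOX_FEATURE_EXTRACTORS.register("FPN2MLPFeatureExtractor"))
    func = registry.ROI_BOX_FEATURE_EXTRACTORS[
        cfg.MODEL.ROI_BOX_HEAD.FEATURE_EXTRACTOR
    ]
    return func(cfg, in_channels)  # instantiate the extractor module
```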
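The name-to-class lookup used by make_roi_box_feature_extractor can be illustrated with a toy registry. To my understanding, maskrcnn_benchmark's Registry (maskrcnn_benchmark/utils/registry.py) is a dict subclass with a `register` decorator. The version below is a simplified sketch, not the library's code. `ToyFPN2MLP` and `make_extractor` are hypothetical stand-ins.

```python
class Registry(dict):
    """Minimal name -> class registry with a decorator-based register()."""

    def register(self, name):
        def decorator(cls):
            assert name not in self, f"{name} is already registered"
            self[name] = cls
            return cls
        return decorator

ROI_BOX_FEATURE_EXTRACTORS = Registry()

@ROI_BOX_FEATURE_EXTRACTORS.register("FPN2MLPFeatureExtractor")
class ToyFPN2MLP:                          # hypothetical stand-in for the real nn.Module
    def __init__(self, cfg, in_channels):
        self.in_channels = in_channels

def make_extractor(feature_extractor_name, cfg, in_channels):
    func = ROI_BOX_FEATURE_EXTRACTORS[feature_extractor_name]   # look up by config string
    return func(cfg, in_channels)                               # instantiate it

extractor = make_extractor("FPN2MLPFeatureExtractor", cfg=None, in_channels=256)
print(type(extractor).__name__, extractor.in_channels)   # ToyFPN2MLP 256
```

The decorator runs at import time, so simply importing the module that defines a head is enough to make its name selectable from the YAML config.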