Oracle RAC 11g Installation


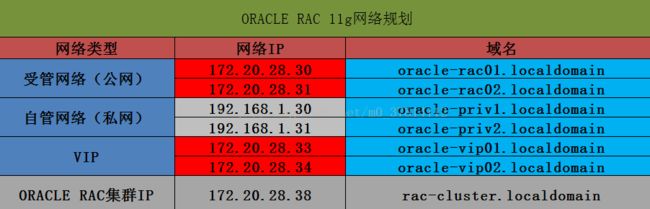

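Because the production environment runs on the QingCloud private cloud, part of the lab environment can be prepared on QingCloud.

Server A: 172.20.28.30

Server B: 172.20.28.31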

1、 青云云平台上构建VIRTUAL SAN共享存储

2、 在两台服务器上分别安装ISCSI客户端

3、 在两台服务器上分别配置ASM磁盘

4、 在两台服务器上配置ORCLE RAC网络,并在青云上配置ORACLE RAC集群的DNS域名

5、 配置ORACLE RAC集群的时间同步服务

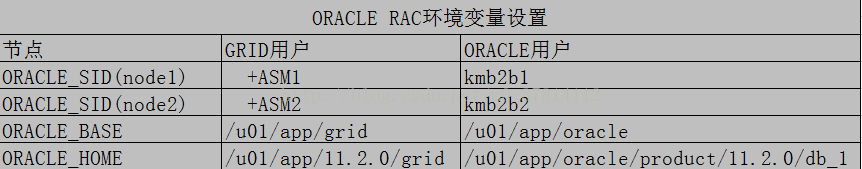

6、 创建用户和组、修改用户配置文件、配置两台服务器的oracle环境变量

7、 配置两台服务器的oracle、grid用户ssh的对等性

8、 两台服务器上分别安装oracle软件依赖包

9、 安装Oracle Grid Infrastructure

10、安装Oracle Database

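Notes on configuring Oracle RAC 11g:

1. The disks used for shared storage must be new (no partitions, never formatted); otherwise Grid cannot be installed.

2. When configuring the cluster network, the VIPs must be in the same subnet as the public IPs.

3. The public and private NIC names must correspond one-to-one on the two servers. For example, if server A uses eth0 for the public network and eth1 for the private network, server B must also use eth0 for the public network and eth1 for the private network.

4. The SCAN IP (scan-cluster) is not a host. It is simply an IP address with a DNS name resolving to it, and it plays a role similar to a VIP.

5. For Grid, ORACLE_BASE and ORACLE_HOME are set up differently: the Grid ORACLE_HOME must not be a subdirectory of ORACLE_BASE, otherwise the installation reports an error.

During the Grid installation, root.sh changes the owner of the directory containing Grid to root, all the way up to the top-level directory, which would affect other Oracle software. Therefore the Grid ORACLE_HOME must not be placed under ORACLE_BASE; for Grid, the two directories are parallel.

6. Shared memory must be larger than the SGA. Shared memory is set with the kernel.shmall parameter, which is counted in pages, so the size in bytes is shmall multiplied by the page size (usually 4096 bytes; check with getconf PAGE_SIZE). For example, with shmall=4194304 the shared memory is 4194304*4096/1024/1024/1024 = 16 GB.

7. Download the RAC clusterware on both servers; after extraction, the files are in the grid directory.

8. In Oracle RAC 11g, SSH user equivalence does not need to be configured manually before installing Oracle Grid Infrastructure; the graphical installer can configure it automatically.

9. In this lab environment, the VIPs only need to be configured on QingCloud; they do not need to be configured manually on the two nodes.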
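The page arithmetic in the shared-memory note above can be checked with a few lines of bash. This is a minimal sketch: the function names are made up for this example, and the page size defaults to whatever getconf PAGE_SIZE reports (4096 bytes on typical x86_64 systems).

```bash
#!/usr/bin/env bash
# Hypothetical helpers for converting between kernel.shmall (pages) and bytes/GiB.

# shmall_bytes PAGES [PAGE_SIZE]: print PAGES * PAGE_SIZE in bytes
shmall_bytes() {
    local pages=$1
    local page_size=${2:-$(getconf PAGE_SIZE)}
    echo "$(( pages * page_size ))"
}

# shmall_gib PAGES [PAGE_SIZE]: print the same size in whole GiB
shmall_gib() {
    echo "$(( $(shmall_bytes "$@") / 1024 / 1024 / 1024 ))"
}

# pages_for_gib GIB [PAGE_SIZE]: print the shmall value needed for GIB of shared memory
pages_for_gib() {
    local gib=$1
    local page_size=${2:-$(getconf PAGE_SIZE)}
    echo "$(( gib * 1024 * 1024 * 1024 / page_size ))"
}
```

On a node, `shmall_gib "$(sysctl -n kernel.shmall)"` shows the current limit in GiB, and `pages_for_gib 16` gives the shmall value for 16 GiB of shared memory.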

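I. Build VIRTUAL SAN shared storage on the QingCloud platform

Summary: this step is performed in the QingCloud console and cannot be demonstrated here; the result is shown below: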

II. Install the iSCSI client on both servers

1. Install the iSCSI client software

[root@dg2 ~]# yum install iscsi-initiator-utils -y

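2. (Done on QingCloud) Because I did not set an initiator name for the iSCSI client, there is no need to change the name in /etc/iscsi/initiatorname.iscsi.

3. Discover the target storage (by default, the iSCSI initiator and target communicate over port 3260)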

[root@dg1 ~]# iscsiadm -m discovery -t st -p 172.20.28.29

172.20.28.29:3260,1 iqn.2014-12.com.qingcloud.s2:sn.target1

[root@dg2 ~]# iscsiadm -m discovery -t st -p 172.20.28.29

172.20.28.29:3260,1 iqn.2014-12.com.qingcloud.s2:sn.target1

4. Log in to the target

[root@dg1 ~]# iscsiadm -m node -T iqn.2014-12.com.qingcloud.s2:sn.target1 -p 172.20.28.29 --login

Logging in to [iface: default, target: iqn.2014-12.com.qingcloud.s2:sn.target1, portal: 172.20.28.29,3260] (multiple)

Login to [iface: default, target: iqn.2014-12.com.qingcloud.s2:sn.target1, portal: 172.20.28.29,3260] successful.

[root@dg2 ~]# iscsiadm -m node -T iqn.2014-12.com.qingcloud.s2:sn.target1 -p 172.20.28.29 --login

Logging in to [iface: default, target: iqn.2014-12.com.qingcloud.s2:sn.target1, portal: 172.20.28.29,3260] (multiple)

Login to [iface: default, target: iqn.2014-12.com.qingcloud.s2:sn.target1, portal: 172.20.28.29,3260] successful.
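Copying the IQN by hand is error-prone. A minimal sketch, assuming the IPv4 `portal:port,tpgt iqn` line format shown in the discovery output above: the hypothetical helper print_login_cmds turns each discovered target into the login command used in this step. It only prints the commands, so they can be reviewed before being run on each node.

```bash
#!/usr/bin/env bash
# print_login_cmds: read iscsiadm discovery lines ("IP:PORT,TPGT IQN") on stdin
# and print one iscsiadm login command per target. Nothing is executed.
print_login_cmds() {
    local portal_field iqn portal
    while read -r portal_field iqn; do
        [ -n "$iqn" ] || continue          # skip blank or malformed lines
        portal=${portal_field%%,*}         # drop ",TPGT"  -> 172.20.28.29:3260
        portal=${portal%:*}                # drop ":PORT"  -> 172.20.28.29
        echo "iscsiadm -m node -T $iqn -p $portal --login"
    done
}
```

Usage: `iscsiadm -m discovery -t st -p 172.20.28.29 | print_login_cmds`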

5. Verify the shared storage on each iSCSI client

[root@dg1 ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 20G 0 disk

└─sda1 8:1 0 20G 0 part /

sdc 8:32 0 16G 0 disk [SWAP]

sdb 8:16 0 80G 0 disk

└─sdb1 8:17 0 80G 0 part /u01

sdd 8:48 0 100G 0 disk

sdf 8:80 0 100G 0 disk

sde 8:64 0 100G 0 disk

[root@dg2 ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 20G 0 disk

└─sda1 8:1 0 20G 0 part /

sdb 8:16 0 80G 0 disk

└─sdb1 8:17 0 80G 0 part /u01

sdc 8:32 0 16G 0 disk [SWAP]

sdd 8:48 0 100G 0 disk

sde 8:64 0 100G 0 disk

sdf 8:80 0 100G 0 disk

III. Configure the ASM disks on both servers

1. Configure the ASM disks (create the file 99-oracle-asmdevices.rules)

Create 99-oracle-asmdevices.rules with a script:

[root@dg1 rules.d]# cat createASM.sh

#!/bin/bash

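echo "options=--whitelisted --replace-whitespace">/etc/scsi_id.config

# Empty the rules file first so that re-running the script does not append duplicate rules

: > /etc/udev/rules.d/99-oracle-asmdevices.rules

i=1

id=''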

for x in d e f

do

id=`scsi_id --whitelisted --replace-whitespace --device=/dev/sd$x`

echo "KERNEL==\"sd*\", SUBSYSTEM==\"block\", PROGRAM==\"/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/\$name\", RESULT==\"$id\", NAME=\"asm-disk$i\", OWNER=\"grid\", GROUP=\"asmadmin\", MODE=\"0660\"">>/etc/udev/rules.d/99-oracle-asmdevices.rules

let i++

done

[root@dg2 rules.d]# sh createASM.sh

[root@dg2 rules.d]# cat 99-oracle-asmdevices.rules

KERNEL=="sd*", SUBSYSTEM=="block", PROGRAM=="/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="36001405efc81cec3ab84a5cabf0979f5", NAME="asm-disk1", OWNER="grid", GROUP="asmadmin", MODE="0660"

KERNEL=="sd*", SUBSYSTEM=="block", PROGRAM=="/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="36001405caa5c21fbcc74d0aa2b1d3b95", NAME="asm-disk2", OWNER="grid", GROUP="asmadmin", MODE="0660"

KERNEL=="sd*", SUBSYSTEM=="block", PROGRAM=="/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="36001405a0cd0848c8314e4f9b45dbb55", NAME="asm-disk3", OWNER="grid", GROUP="asmadmin", MODE="0660"

Note: repeat the same steps on the other server.

2. Reload the udev rules and start udev on both servers

[root@dg1 rules.d]# udevadm control --reload-rules

[root@dg1 rules.d]# start_udev

Starting udev: [ OK ]

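3. Check that udev created the devices

Note: the listing below still shows root root as owner and group. The rules refer to the grid user and the asmadmin group, which are only created later (in the user and group creation step), and udev falls back to root when the named user or group does not exist. After creating them, run start_udev again on both servers; the devices should then be owned by grid:asmadmin with mode 0660.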

[root@dg1 rules.d]# ls -lrt /dev/asm*

brw-rw---- 1 root root 8, 48 Aug 17 09:16 /dev/asm-disk1

brw-rw---- 1 root root 8, 64 Aug 17 09:16 /dev/asm-disk2

brw-rw---- 1 root root 8, 80 Aug 17 09:16 /dev/asm-disk3
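The listing above shows the devices owned by root:root, not the grid:asmadmin 0660 requested in the rules. A small check like the following makes it easy to re-verify after the grid user and asmadmin group exist and udev has been restarted. It is a sketch that relies on GNU stat; check_asm_dev is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# check_asm_dev PATH [OWNER] [GROUP] [MODE]
# Succeed if PATH is owned by OWNER:GROUP with octal MODE (defaults: grid asmadmin 660).
check_asm_dev() {
    local path=$1 owner=${2:-grid} group=${3:-asmadmin} mode=${4:-660}
    local actual
    actual=$(stat -c '%U %G %a' "$path") || return 2   # GNU stat: user, group, octal mode
    if [ "$actual" = "$owner $group $mode" ]; then
        echo "OK   $path ($actual)"
    else
        echo "FAIL $path: got '$actual', want '$owner $group $mode'"
        return 1
    fi
}
```

Usage on each node: `for d in /dev/asm-disk*; do check_asm_dev "$d"; done`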

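IV. Configure the Oracle RAC network on both servers and configure the DNS names for the Oracle RAC cluster on QingCloud

Summary: the VIP addresses must be in the same subnet as the IPs of the managed network.

1. On QingCloud, create a self-managed private network and attach it to both servers (omitted here because this is done in the QingCloud console).

2. Configure the private network on each server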
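The subnet rule above can be checked before anything is configured on QingCloud. A minimal bash sketch that masks both addresses and compares the network parts; ip_to_int and same_subnet are hypothetical helper names, and dotted-quad IPv4 input without leading zeros is assumed.

```bash
#!/usr/bin/env bash
# ip_to_int A.B.C.D: print the address as a 32-bit integer
ip_to_int() {
    local IFS=.
    local -a o
    read -r -a o <<< "$1"
    echo "$(( (o[0] << 24) | (o[1] << 16) | (o[2] << 8) | o[3] ))"
}

# same_subnet IP1 IP2 NETMASK: exit 0 if both addresses share the same network part
same_subnet() {
    local a b m
    a=$(ip_to_int "$1")
    b=$(ip_to_int "$2")
    m=$(ip_to_int "$3")
    [ "$(( a & m ))" -eq "$(( b & m ))" ]
}
```

Usage: `same_subnet 172.20.28.33 172.20.28.30 255.255.255.0 && echo "VIP OK"`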

[root@dg1 rules.d]# vi /etc/sysconfig/network-scripts/ifcfg-eth1

DEVICE=eth1

TYPE=Ethernet

IPADDR=192.168.1.30

BOOTPROTO=static

NETMASK=255.255.255.0

ONBOOT=yes

NM_CONTROLLED=yes

HWADDR=52:54:B5:B5:9E:8B

[root@dg2 rules.d]# vi /etc/sysconfig/network-scripts/ifcfg-eth1

DEVICE=eth1

TYPE=Ethernet

IPADDR=192.168.1.31

BOOTPROTO=static

NETMASK=255.255.255.0

ONBOOT=yes

NM_CONTROLLED=yes

HWADDR=52:54:67:92:01:67

Summary: restart the network interface on each server; the result is as follows:

[root@dg1 rules.d]# ifconfig

eth0 Link encap:Ethernet HWaddr 52:54:CD:11:6D:0B

inet addr: 172.20.28.30 Bcast:172.20.28.255 Mask:255.255.255.0

inet6 addr: fe80::5054:cdff:fe11:6d0b/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:15030 errors:0 dropped:0 overruns:0 frame:0

TX packets:11688 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:13986299 (13.3 MiB) TX bytes:1041078 (1016.6 KiB)

eth1 Link encap:Ethernet HWaddr 52:54:B5:B5:9E:8B

inet addr: 192.168.1.30 Bcast:192.168.1.255 Mask:255.255.255.0

inet6 addr: fe80::5054:b5ff:feb5:9e8b/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:71 errors:0 dropped:0 overruns:0 frame:0

TX packets:49 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:15966 (15.5 KiB) TX bytes:13974 (13.6 KiB)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:16436 Metric:1

RX packets:12 errors:0 dropped:0 overruns:0 frame:0

TX packets:12 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:600 (600.0 b) TX bytes:600 (600.0 b)

[root@dg2 rules.d]# ifconfig

eth0 Link encap:Ethernet HWaddr 52:54:C4:8D:18:81

inet addr: 172.20.28.31 Bcast:172.20.28.255 Mask:255.255.255.0

inet6 addr: fe80::5054:c4ff:fe8d:1881/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:13419 errors:0 dropped:0 overruns:0 frame:0

TX packets:10004 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:11234155 (10.7 MiB) TX bytes:915658 (894.1 KiB)

eth1 Link encap:Ethernet HWaddr 52:54:67:92:01:67

inet addr: 192.168.1.31 Bcast:192.168.1.255 Mask:255.255.255.0

inet6 addr: fe80::5054:67ff:fe92:167/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:46 errors:0 dropped:0 overruns:0 frame:0

TX packets:48 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:13352 (13.0 KiB) TX bytes:14232 (13.8 KiB)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:16436 Metric:1

RX packets:12 errors:0 dropped:0 overruns:0 frame:0

TX packets:12 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:600 (600.0 b) TX bytes:600 (600.0 b)

3. Configure the DNS names on the QingCloud platform

4. Add the hosts entries on both servers

[root@dg1 rules.d]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

# hostname loopback address

#127.0.1.1 i-scn6i80f

192.168.1.30 oracle-priv1.localdomain

192.168.1.31 oracle-priv2.localdomain

172.20.28.30 oracle-rac01.localdomain

172.20.28.31 oracle-rac02.localdomain

172.20.28.33 oracle-vip01.localdomain

172.20.28.34 oracle-vip02.localdomain

[root@dg2 rules.d]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

# hostname loopback address

#127.0.1.1 i-scn6i80f

192.168.1.30 oracle-priv1.localdomain

192.168.1.31 oracle-priv2.localdomain

172.20.28.30 oracle-rac01.localdomain

172.20.28.31 oracle-rac02.localdomain

172.20.28.33 oracle-vip01.localdomain

172.20.28.34 oracle-vip02.localdomain
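Both nodes must resolve the same names to the same addresses, so it helps to print the mapping on each node and compare. A sketch, where check_rac_hosts is a hypothetical helper that reads an /etc/hosts-style file; the SCAN name is resolved by the QingCloud DNS rather than /etc/hosts, so it is not covered here.

```bash
#!/usr/bin/env bash
# check_rac_hosts FILE NAME...: print "NAME -> IP" for each NAME found in FILE
# (commented-out lines are ignored) and fail if any NAME is missing.
check_rac_hosts() {
    local file=$1; shift
    local name ip rc=0
    for name in "$@"; do
        ip=$(awk -v n="$name" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) { print $1; exit } }' "$file")
        if [ -n "$ip" ]; then
            echo "$name -> $ip"
        else
            echo "MISSING $name"
            rc=1
        fi
    done
    return "$rc"
}
```

Usage: run `check_rac_hosts /etc/hosts oracle-rac01.localdomain oracle-rac02.localdomain oracle-priv1.localdomain oracle-priv2.localdomain oracle-vip01.localdomain oracle-vip02.localdomain` on both nodes and compare the two outputs.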

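V. Configure time synchronization for the Oracle RAC cluster

Oracle 11g CTSS time synchronization process (new feature)

Time synchronization between nodes is very important for Oracle. Before 11g, it was usually done by setting up an NTP server. Oracle 11g adds CTSS (Cluster Time Synchronization Services) to synchronize time between the cluster nodes. During the Grid Infrastructure installation, if no NTP service is found on the nodes, CTSS is configured automatically (in active mode).

1. NTP time synchronization

NTP is the traditional time synchronization service. Many enterprises run a dedicated time server; in that case NTP can still be used to synchronize time between the nodes.

2. CTSS time synchronization

In 11gR2, Oracle introduced its own time synchronization service. It only keeps the nodes of one RAC cluster in sync with each other, not with other systems. To use CTSS, you must not only stop the NTP service and disable its automatic start, but also make sure /etc/ntp.conf does not exist. Clear the NTP configuration with the following steps.

Run the following commands on both nodes: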

service ntpd stop

chkconfig ntpd off

rm -f /etc/ntp.conf

Summary: this environment uses CTSS for time synchronization.
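Before starting the Grid installer, it is worth confirming on each node that nothing NTP-related is left behind. A minimal sketch; ctss_prereq_ok is a hypothetical helper, and /var/run/ntpd.pid is assumed to be the ntpd pid file location (the RHEL 6 default).

```bash
#!/usr/bin/env bash
# ctss_prereq_ok [NTP_CONF] [NTP_PID]: succeed only if neither the NTP config file
# nor the ntpd pid file exists, so Grid Infrastructure will not see an NTP setup.
ctss_prereq_ok() {
    local conf=${1:-/etc/ntp.conf} pid=${2:-/var/run/ntpd.pid}
    local f rc=0
    for f in "$conf" "$pid"; do
        if [ -e "$f" ]; then
            echo "still present: $f"
            rc=1
        fi
    done
    return "$rc"
}
```

Usage: `ctss_prereq_ok && echo "ready for CTSS"`. After Grid Infrastructure is installed, `crsctl check ctss` run as the grid user should report that CTSS is in active mode.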

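VI. Create users and groups, modify the user configuration files, and configure the Oracle environment variables on both servers

Note: perform the following steps on both servers.

1. Description of the Oracle software components

2. Create the grid and oracle users with their primary and secondary groups, configure the environment variables of both users, and create the installation directories for the Oracle Database and Grid Infrastructure software with the correct permissions

Create the grid user and its primary and secondary groups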

[root@dg1 rules.d]# groupadd -g 1000 oinstall

[root@dg1 rules.d]# groupadd -g 1200 asmadmin

[root@dg1 rules.d]# groupadd -g 1201 asmdba

[root@dg1 rules.d]# groupadd -g 1202 asmoper

[root@dg1 rules.d]# useradd -u 1100 -g oinstall -G asmadmin,asmdba,asmoper -d /home/grid -s /bin/bash -c "grid infrastructure owner" grid

[root@dg1 rules.d]# echo grid | passwd --stdin grid

Changing password for user grid.

passwd: all authentication tokens updated successfully.

[root@dg1 rules.d]# id grid

uid=1100(grid) gid=1000(oinstall) groups=1000(oinstall),1200(asmadmin),1201(asmdba),1202(asmoper)

Create the oracle user and its primary and secondary groups

[root@dg1 rules.d]# groupadd -g 1300 dba

[root@dg1 rules.d]# groupadd -g 1301 oper

[root@dg1 rules.d]# useradd -u 1101 -g oinstall -G dba,oper,asmdba -d /home/oracle -s /bin/bash -c "oracle software owner" oracle

[root@dg1 rules.d]# id oracle

uid=1101(oracle) gid=1000(oinstall) groups=1000(oinstall),1201(asmdba),1300(dba),1301(oper)
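The user and group IDs must be identical on both nodes, and each user needs every secondary group listed above. A minimal sketch to run on each node; has_groups is a hypothetical helper built on id -nG.

```bash
#!/usr/bin/env bash
# has_groups USER GROUP...: succeed only if USER belongs to every GROUP listed
has_groups() {
    local user=$1; shift
    local member_of g rc=0
    member_of=" $(id -nG "$user") " || return 2   # space-padded list of group names
    for g in "$@"; do
        case "$member_of" in
            *" $g "*) ;;
            *) echo "$user is not in group $g"; rc=1 ;;
        esac
    done
    return "$rc"
}
```

Usage: `has_groups grid oinstall asmadmin asmdba asmoper && has_groups oracle oinstall dba oper asmdba`, then compare the `id grid` and `id oracle` output between the two nodes.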

Create the installation directories for the Oracle Database and Grid Infrastructure software and set the directory permissions

[root@dg1 rules.d]# mkdir -p /u01/app/grid

[root@dg1 rules.d]# mkdir -p /u01/app/11.2.0/grid

[root@dg1 rules.d]# mkdir /u01/app/oracle

[root@dg1 rules.d]# mkdir -p /u01/app/oracle/product/11.2.0/db_1

[root@dg1 rules.d]# chown -R oracle:oinstall /u01/

[root@dg1 rules.d]# chown -R grid:oinstall /u01/app/grid/

[root@dg1 rules.d]# chown -R grid:oinstall /u01/app/11.2.0/

[root@dg1 rules.d]# chmod -R 755 /u01

[root@oracle-rac01 app]# mkdir /u01/app/oraInventory

[root@oracle-rac01 app]# chown grid:oinstall oraInventory

The resulting ownership of the directories under /u01/app:

drwxr-xr-x 3 grid oinstall 4096 Aug 18 14:39 11.2.0

drwxr-xr-x 2 grid oinstall 4096 Aug 18 14:50 grid

drwxr-xr-x 3 oracle oinstall 4096 Aug 17 11:34 oracle

drwxr-xr-x 2 grid oinstall 4096 Aug 18 14:45 oraInventory

Configure the environment variables for the grid and oracle users

Server: 172.20.28.30

[oracle@dg1 app]$ cat /home/oracle/.bash_profile

# .bash_profile

# Get the aliases and functions

if [ -f ~/.bashrc ]; then

. ~/.bashrc

fi

# User specific environment and startup programs

PATH=$PATH:$HOME/bin

export PATH

export ORACLE_TERM=xterm

export ORACLE_BASE=/u01/app/oracle

export ORACLE_HOME=$ORACLE_BASE/product/11.2.0/db_1

export ORACLE_UNQNAME=kmb2b

export ORACLE_SID=kmb2b1

export TNS_ADMIN=$ORACLE_HOME/network/admin

export PATH=$ORACLE_HOME/bin:$PATH

export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib

export CLASSPATH=$ORACLE_HOME/JRE:$ORACLE_HOME/jlib:$ORACLE_HOME/rdbms/jlib

export NLS_DATE_FORMAT='yyyy/mm/dd hh24:mi:ss'

export NLS_LANG=american_america.AL32UTF8

export LANG=en_US

umask 022

[grid@dg1 app]$ cat /home/grid/.bash_profile

# .bash_profile

# Get the aliases and functions

if [ -f ~/.bashrc ]; then

. ~/.bashrc

fi

# User specific environment and startup programs

PATH=$PATH:$HOME/bin

export PATH

export ORACLE_TERM=xterm

export ORACLE_BASE=/u01/app/grid

export ORACLE_HOME=/u01/app/11.2.0/grid

export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib

export PATH=/usr/sbin:$PATH

export ORACLE_SID=+ASM1

export PATH=$PATH:$HOME/bin:$ORACLE_HOME/bin

export TNS_ADMIN=$ORACLE_HOME/network/admin

export CLASSPATH=$ORACLE_HOME/JRE:$ORACLE_HOME/jlib:$ORACLE_HOME/rdbms/jlib

export NLS_DATE_FORMAT='yyyy/mm/dd hh24:mi:ss'

export LANG=en_US

export NLS_LANG=american_america.AL32UTF8

umask 022

Server: 172.20.28.31

[oracle@dg2 app]$ cat /home/oracle/.bash_profile

# .bash_profile

# Get the aliases and functions

if [ -f ~/.bashrc ]; then

. ~/.bashrc

fi

# User specific environment and startup programs

PATH=$PATH:$HOME/bin

export PATH

export ORACLE_TERM=xterm

export ORACLE_BASE=/u01/app/oracle

export ORACLE_HOME=$ORACLE_BASE/product/11.2.0/db_1

export ORACLE_UNQNAME=kmb2b

export ORACLE_SID=kmb2b2

export TNS_ADMIN=$ORACLE_HOME/network/admin

export PATH=$ORACLE_HOME/bin:$PATH

export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib

export CLASSPATH=$ORACLE_HOME/JRE:$ORACLE_HOME/jlib:$ORACLE_HOME/rdbms/jlib

export NLS_DATE_FORMAT='yyyy/mm/dd hh24:mi:ss'

export NLS_LANG=american_america.AL32UTF8

export LANG=en_US

umask 022

[grid@dg2 app]$ cat /home/grid/.bash_profile

# .bash_profile

# Get the aliases and functions

if [ -f ~/.bashrc ]; then

. ~/.bashrc

fi

# User specific environment and startup programs

PATH=$PATH:$HOME/bin

export PATH

export ORACLE_TERM=xterm

export ORACLE_BASE=/u01/app/grid

export ORACLE_HOME=/u01/app/11.2.0/grid

export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib

export PATH=/usr/sbin:$PATH

export ORACLE_SID=+ASM2

export PATH=$PATH:$HOME/bin:$ORACLE_HOME/bin

export TNS_ADMIN=$ORACLE_HOME/network/admin

export CLASSPATH=$ORACLE_HOME/JRE:$ORACLE_HOME/jlib:$ORACLE_HOME/rdbms/jlib

export NLS_DATE_FORMAT='yyyy/mm/dd hh24:mi:ss'

export LANG=en_US

export NLS_LANG=american_america.AL32UTF8

umask 022
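A space right after = in an export line, such as `export ORACLE_SID= kmb2b1`, silently breaks the setting: bash exports ORACLE_SID with an empty value and treats kmb2b1 as a separate name to export (for +ASM1 it prints a "not a valid identifier" error). A sketch of a grep-based check for such lines; find_bad_exports is a hypothetical helper.

```bash
#!/usr/bin/env bash
# find_bad_exports FILE: list "export NAME= value" lines (whitespace right after '='),
# with line numbers. Exit status is 0 if any were found, 1 if none.
find_bad_exports() {
    grep -nE '^[[:space:]]*export[[:space:]]+[A-Za-z_][A-Za-z0-9_]*=[[:space:]]+[^[:space:]#]' "$1"
}
```

Usage: `find_bad_exports /home/oracle/.bash_profile; find_bad_exports /home/grid/.bash_profile`. After logging in again, `echo $ORACLE_SID` should print the instance name for that node.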

Modify the user configuration files

Edit /etc/security/limits.conf

oracle soft nproc 2047

oracle hard nproc 16384

oracle soft nofile 1024

oracle hard nofile 65536

grid soft nproc 2047

grid hard nproc 16384

grid soft nofile 1024

grid hard nofile 65536
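To see what limits.conf actually grants a user, a small awk sketch can help. limit_for is a hypothetical helper; it also matches the "-" type, which sets both the soft and hard limit, and if a user has several matching lines it prints the last one.

```bash
#!/usr/bin/env bash
# limit_for FILE USER TYPE ITEM: print the value set in a limits.conf-style FILE
# for USER, TYPE (soft/hard) and ITEM (e.g. nproc, nofile); exit 1 if not set.
limit_for() {
    awk -v u="$2" -v t="$3" -v i="$4" '
        $1 !~ /^#/ && $1 == u && ($2 == t || $2 == "-") && $3 == i { v = $4 }
        END { if (v != "") print v; else exit 1 }
    ' "$1"
}
```

Usage: `limit_for /etc/security/limits.conf grid hard nofile`, then compare with `su - grid -c 'ulimit -Hn'` once the PAM and profile changes below are in place.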

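Edit the /etc/pam.d/login configuration file and add:

session required pam_limits.so

(On x86_64 the 64-bit module lives in /lib64/security, so an absolute path such as /lib/security/pam_limits.so points to a missing or 32-bit module and can break logins; the bare module name is resolved correctly on both 32-bit and 64-bit systems.)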

Edit /etc/profile

if [ "$USER" = "oracle" ] || [ "$USER" = "grid" ]; then

if [ $SHELL = "/bin/ksh" ]; then

ulimit -p 16384

ulimit -n 65536

else

ulimit -u 16384 -n 65536

fi

fi

[root@dg2 u01]# source /etc/profile

Edit the kernel configuration file /etc/sysctl.conf

fs.aio-max-nr = 1048576

fs.file-max = 6815744

kernel.shmall = 4194304

kernel.shmmax = 4294967296

kernel.shmmni = 4096

kernel.sem = 250 32000 100 128

net.ipv4.ip_local_port_range = 9000 65500

net.core.rmem_default = 262144

net.core.rmem_max = 4194304

net.core.wmem_default = 262144

net.core.wmem_max = 1048586

net.ipv4.tcp_wmem = 262144 262144 262144

net.ipv4.tcp_rmem = 4194304 4194304 4194304

[root@dg1 app]# sysctl -p

net.ipv4.ip_forward = 0

net.ipv4.conf.default.rp_filter = 1

net.ipv4.conf.default.accept_source_route = 0

kernel.sysrq = 0

kernel.core_uses_pid = 1

net.ipv4.tcp_syncookies = 1

error: "net.bridge.bridge-nf-call-ip6tables" is an unknown key

error: "net.bridge.bridge-nf-call-iptables" is an unknown key

error: "net.bridge.bridge-nf-call-arptables" is an unknown key

kernel.msgmnb = 65536

kernel.msgmax = 65536

kernel.shmmax = 68719476736

kernel.shmall = 4294967296

fs.aio-max-nr = 1048576

fs.file-max = 6815744

kernel.shmmni = 4096

kernel.sem = 250 32000 100 128

net.ipv4.ip_local_port_range = 9000 65500

net.core.rmem_default = 262144

net.core.rmem_max = 4194304

net.core.wmem_default = 262144

net.core.wmem_max = 1048586

net.ipv4.tcp_wmem = 262144 262144 262144

net.ipv4.tcp_rmem = 4194304 4194304 4194304
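The sysctl -p output above reports kernel.shmmax = 68719476736 and kernel.shmall = 4294967296 rather than the values in the snippet before it. The stock RHEL 6 /etc/sysctl.conf already sets both keys, and sysctl -p applies lines in order, so when a key appears twice the later line wins. A sketch that lists duplicated keys; dup_sysctl_keys is a hypothetical helper.

```bash
#!/usr/bin/env bash
# dup_sysctl_keys FILE: print every key that is assigned more than once in a
# sysctl.conf-style FILE (comments starting with # or ; and blank lines are skipped).
dup_sysctl_keys() {
    awk -F= '
        {
            key = $1
            gsub(/[ \t]/, "", key)                  # strip spaces around the key
            if (key == "" || key ~ /^[#;]/) next    # skip blanks and comments
            count[key]++
        }
        END { for (k in count) if (count[k] > 1) print k }
    ' "$1" | sort
}
```

Usage: `dup_sysctl_keys /etc/sysctl.conf`, then `sysctl -n kernel.shmall kernel.shmmax` to see the values actually in effect.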

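VII. Configure SSH user equivalence for the oracle and grid users on both servers

In Oracle RAC 11g, SSH user equivalence does not need to be configured manually before installing Oracle Grid Infrastructure; the graphical installer can configure it automatically.

VIII. Install the Oracle software dependency packages on both servers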

[root@dg2 u01]# yum install -y make binutils gcc libaio glibc compat-libstdc++-33 elfutils-libelf elfutils-libelf-devel glibc-common glibc-devel glibc-headers gcc-c++ libaio-devel libgcc libstdc++ libstdc++-devel sysstat pdksh expat

[root@dg2 u01]# rpm -ivh pdksh-5.2.14-37.el5_8.1.x86_64.rpm

warning: pdksh-5.2.14-37.el5_8.1.x86_64.rpm: Header V3 DSA/SHA1 Signature, key ID e8562897: NOKEY

error: Failed dependencies:

pdksh conflicts with ksh-20120801-35.el6_9.x86_64

[root@dg2 u01]# rpm -e ksh-20120801-35.el6_9

[root@dg2 u01]# rpm -ivh pdksh-5.2.14-37.el5_8.1.x86_64.rpm

warning: pdksh-5.2.14-37.el5_8.1.x86_64.rpm: Header V3 DSA/SHA1 Signature, key ID e8562897: NOKEY

Preparing... ########################################### [100%]

1:pdksh ########################################### [100%]

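Note: pdksh is not available in the yum repositories, so it has to be downloaded and installed manually.

The graphical Oracle RAC installation requires VNC; for how to install VNC, see my blog: http://blog.csdn.net/m0_37814112/article/details/77159944

IX. Install Oracle Grid Infrastructure

Notes:

1. Grid Infrastructure only needs to be installed from one node of the RAC cluster. Here the primary node is 172.20.28.30, so Oracle Grid Infrastructure is installed from this server. Because the installer is graphical, VNC must be installed first.

2. Run the pre-installation checks. Oracle Grid Infrastructure provides a script that checks the environment and can fix environment problems; run the following command on node 172.20.28.30:

Problem 1: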

[grid@dg1 grid]$ ./runcluvfy.sh stage -pre crsinst -n rac01,rac02 -fixup -verbose

You do not have sufficient permissions to access the inventory '/u01/oraInventory'. Installation cannot continue. It is required that the primary group of the install user is same as the inventory owner group. Make sure that the install user is part of the inventory owner group and restart the installer.: Permission denied

Solution: remove the existing /etc/oraInst.loc, which points to the inventory /u01/oraInventory instead of the /u01/app/oraInventory created above, and rerun the check:

rm -rf /etc/oraInst.loc
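Before deleting /etc/oraInst.loc, it can help to see which inventory and group it points to; the error above names /u01/oraInventory, while the inventory created earlier is /u01/app/oraInventory. A sketch that reads the inventory_loc and inst_group entries; show_inventory_ptr is a hypothetical helper.

```bash
#!/usr/bin/env bash
# show_inventory_ptr [FILE]: print inventory_loc and inst_group from an oraInst.loc-style
# FILE, plus owner:group of the inventory directory if it exists.
show_inventory_ptr() {
    local file=${1:-/etc/oraInst.loc} loc grp
    [ -f "$file" ] || { echo "no $file"; return 1; }
    loc=$(sed -n 's/^inventory_loc=//p' "$file")
    grp=$(sed -n 's/^inst_group=//p' "$file")
    echo "inventory_loc=$loc inst_group=$grp"
    if [ -d "$loc" ]; then
        echo "owner: $(stat -c '%U:%G' "$loc")"
    fi
}
```

Usage: run `show_inventory_ptr` on each node; inst_group should match the grid user's primary group (oinstall here).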

Problem 2:

Check: Membership of user "grid" in group "dba"

Node Name User Exists Group Exists User in Group Status

---------------- ------------ ------------ ------------ ----------------

rac02 yes yes no failed

rac01 yes yes no failed

Analysis:

Please run the following script on each node as "root" user to execute the fixups:

'/tmp/CVU_11.2.0.4.0_grid/runfixup.sh'

Pre-check for cluster services setup was unsuccessful on all the nodes.

Solution: run /tmp/CVU_11.2.0.4.0_grid/runfixup.sh as root on each of the two nodes

[root@rac01 app]# /tmp/CVU_11.2.0.4.0_grid/runfixup.sh

Response file being used is :/tmp/CVU_11.2.0.4.0_grid/fixup.response

Enable file being used is :/tmp/CVU_11.2.0.4.0_grid/fixup.enable

Log file location: /tmp/CVU_11.2.0.4.0_grid/orarun.log

uid=1100(grid) gid=1000(oinstall) groups=1000(oinstall),1200(asmadmin),1201(asmdba),1202(asmoper)

[root@rac02 app]# /tmp/CVU_11.2.0.4.0_grid/runfixup.sh

Response file being used is :/tmp/CVU_11.2.0.4.0_grid/fixup.response

Enable file being used is :/tmp/CVU_11.2.0.4.0_grid/fixup.enable

Log file location: /tmp/CVU_11.2.0.4.0_grid/orarun.log

uid=1100(grid) gid=1000(oinstall) groups=1000(oinstall),1200(asmadmin),1201(asmdba),1202(asmoper)

For the detailed configuration steps, see the article on installing Oracle Grid Infrastructure with the graphical installer.