Spring boot 配置多数据源并使用连接池管理 - mysql - hive

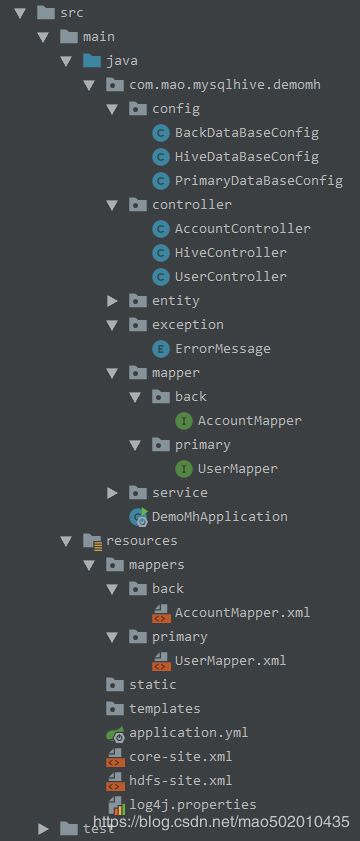

1、搭建springboot项目,项目目录如下:

2.部分配置源码如下,不想自己配置的小伙伴可以直接去github下载,github地址:github项目地址:

yml配置:

spring:

datasource:

#使用druid连接池

type: com.alibaba.druid.pool.DruidDataSource

# 自定义的主数据源配置信息

primary:

datasource:

#druid相关配置

druid:

#监控统计拦截的filters

filters: stat

driverClassName: com.mysql.jdbc.Driver

#配置基本属性

url: jdbc:mysql://127.0.0.1:3306/test2?useUnicode=true&characterEncoding=UTF-8&allowMultiQueries=true&autoReconnect=true&useSSL=false

username: root

password: 12311

#配置初始化大小/最小/最大

initialSize: 1

minIdle: 1

maxActive: 10

#获取连接等待超时时间

maxWait: 60000

#间隔多久进行一次检测,检测需要关闭的空闲连接

timeBetweenEvictionRunsMillis: 60000

#一个连接在池中最小生存的时间

minEvictableIdleTimeMillis: 300000

validationQuery: SELECT 'x'

testWhileIdle: true

testOnBorrow: false

testOnReturn: false

#打开PSCache,并指定每个连接上PSCache的大小。oracle设为true,mysql设为false。分库分表较多推荐设置为false

poolPreparedStatements: false

maxPoolPreparedStatementPerConnectionSize: 10

# 自定义的从数据源配置信息

back:

datasource:

#druid相关配置

druid:

#监控统计拦截的filters

filters: stat

driverClassName: com.mysql.jdbc.Driver

#配置基本属性

url: jdbc:mysql://127.0.0.1:3306/test?useUnicode=true&characterEncoding=UTF-8&allowMultiQueries=true&autoReconnect=true&useSSL=false

username: root

password: 12311

#配置初始化大小/最小/最大

initialSize: 1

minIdle: 1

maxActive: 10

#获取连接等待超时时间

maxWait: 60000

#间隔多久进行一次检测,检测需要关闭的空闲连接

timeBetweenEvictionRunsMillis: 60000

#一个连接在池中最小生存的时间

minEvictableIdleTimeMillis: 300000

validationQuery: SELECT 'x'

testWhileIdle: true

testOnBorrow: false

testOnReturn: false

#打开PSCache,并指定每个连接上PSCache的大小。oracle设为true,mysql设为false。分库分表较多推荐设置为false

poolPreparedStatements: false

maxPoolPreparedStatementPerConnectionSize: 10

# 自定义的从数据源配置信息

hive:

datasource:

#druid相关配置

druid:

#监控统计拦截的filters

filters: stat

driverClassName: org.apache.hive.jdbc.HiveDriver

#配置基本属性

url: jdbc:hive2://192.168.159.206:10000/eshop

username: root

password: 12311

#配置初始化大小/最小/最大

initialSize: 1

minIdle: 1

maxActive: 10

#获取连接等待超时时间

maxWait: 60000

#间隔多久进行一次检测,检测需要关闭的空闲连接

timeBetweenEvictionRunsMillis: 60000

#一个连接在池中最小生存的时间

minEvictableIdleTimeMillis: 300000

validationQuery: SELECT 'x'

testWhileIdle: true

testOnBorrow: false

testOnReturn: false

#打开PSCache,并指定每个连接上PSCache的大小。oracle设为true,mysql设为false。分库分表较多推荐设置为false

poolPreparedStatements: false

maxPoolPreparedStatementPerConnectionSize: 10

namenodes: hdfs://192.168.159.206:8020

es:

clusterName: elasticsearch

clientTransportSniff: ture

port: 9300

hostname: 127.0.0.1mysql主库配置文件:

package com.mao.mysqlhive.demomh.config;

import com.alibaba.druid.pool.DruidDataSource;

import lombok.Data;

import org.apache.ibatis.session.SqlSessionFactory;

import org.mybatis.spring.SqlSessionFactoryBean;

import org.mybatis.spring.annotation.MapperScan;

import org.springframework.beans.factory.annotation.Qualifier;

import org.springframework.boot.context.properties.ConfigurationProperties;

import org.springframework.context.annotation.Bean;

import org.springframework.context.annotation.Configuration;

import org.springframework.context.annotation.Primary;

import org.springframework.core.io.support.PathMatchingResourcePatternResolver;

import org.springframework.jdbc.datasource.DataSourceTransactionManager;

import javax.sql.DataSource;

import java.sql.SQLException;

/**

* @Description: 主数据源配置类

* 前缀为primary.datasource.druid的配置信息

*/

@Data

@Configuration

@ConfigurationProperties(prefix = "primary.datasource.druid")

@MapperScan(basePackages = PrimaryDataBaseConfig.PACKAGE, sqlSessionFactoryRef = "primarySqlSessionFactory")

public class PrimaryDataBaseConfig {

/**

* dao层的包路径

*/

static final String PACKAGE = "com.mao.mysqlhive.demomh.mapper.primary";

/**

* mapper文件的相对路径

*/

private static final String MAPPER_LOCATION = "classpath:mappers/primary/*Mapper.xml";

private String filters;

private String url;

private String username;

private String password;

private String driverClassName;

private int initialSize;

private int minIdle;

private int maxActive;

private long maxWait;

private long timeBetweenEvictionRunsMillis;

private long minEvictableIdleTimeMillis;

private String validationQuery;

private boolean testWhileIdle;

private boolean testOnBorrow;

private boolean testOnReturn;

private boolean poolPreparedStatements;

private int maxPoolPreparedStatementPerConnectionSize;

/**

* 主数据源使用@Primary注解进行标识

*/

@Primary

@Bean(name = "primaryDataSource")

public DataSource primaryDataSource() throws SQLException {

DruidDataSource druid = new DruidDataSource();

// 监控统计拦截的filters

druid.setFilters(filters);

// 配置基本属性

druid.setDriverClassName(driverClassName);

druid.setUsername(username);

druid.setPassword(password);

druid.setUrl(url);

//初始化时建立物理连接的个数

druid.setInitialSize(initialSize);

//最大连接池数量

druid.setMaxActive(maxActive);

//最小连接池数量

druid.setMinIdle(minIdle);

//获取连接时最大等待时间,单位毫秒。

druid.setMaxWait(maxWait);

//间隔多久进行一次检测,检测需要关闭的空闲连接

druid.setTimeBetweenEvictionRunsMillis(timeBetweenEvictionRunsMillis);

//一个连接在池中最小生存的时间

druid.setMinEvictableIdleTimeMillis(minEvictableIdleTimeMillis);

//用来检测连接是否有效的sql

druid.setValidationQuery(validationQuery);

//建议配置为true,不影响性能,并且保证安全性。

druid.setTestWhileIdle(testWhileIdle);

//申请连接时执行validationQuery检测连接是否有效

druid.setTestOnBorrow(testOnBorrow);

druid.setTestOnReturn(testOnReturn);

//是否缓存preparedStatement,也就是PSCache,oracle设为true,mysql设为false。分库分表较多推荐设置为false

druid.setPoolPreparedStatements(poolPreparedStatements);

// 打开PSCache时,指定每个连接上PSCache的大小

druid.setMaxPoolPreparedStatementPerConnectionSize(maxPoolPreparedStatementPerConnectionSize);

return druid;

}

/**

* 创建该数据源的事务管理

*/

@Primary

@Bean(name = "primaryTransactionManager")

public DataSourceTransactionManager primaryTransactionManager() throws SQLException {

return new DataSourceTransactionManager(primaryDataSource());

}

/**

* 创建Mybatis的连接会话工厂实例

*/

@Primary

@Bean(name = "primarySqlSessionFactory")

public SqlSessionFactory primarySqlSessionFactory(@Qualifier("primaryDataSource") DataSource primaryDataSource) throws Exception {

final SqlSessionFactoryBean sessionFactory = new SqlSessionFactoryBean();

sessionFactory.setDataSource(primaryDataSource); // 设置数据源bean

sessionFactory.setMapperLocations(new PathMatchingResourcePatternResolver()

.getResources(PrimaryDataBaseConfig.MAPPER_LOCATION)); // 设置mapper文件路径

return sessionFactory.getObject();

}

}mysql从库配置文件:

package com.mao.mysqlhive.demomh.config;

import com.alibaba.druid.pool.DruidDataSource;

import lombok.Data;

import org.apache.ibatis.session.SqlSessionFactory;

import org.mybatis.spring.SqlSessionFactoryBean;

import org.mybatis.spring.annotation.MapperScan;

import org.springframework.beans.factory.annotation.Qualifier;

import org.springframework.beans.factory.annotation.Value;

import org.springframework.boot.context.properties.ConfigurationProperties;

import org.springframework.context.annotation.Bean;

import org.springframework.context.annotation.Configuration;

import org.springframework.core.io.support.PathMatchingResourcePatternResolver;

import org.springframework.jdbc.datasource.DataSourceTransactionManager;

import javax.sql.DataSource;

import java.sql.SQLException;

/**

* @ProjectName

* @Description: 后台数据源配置类

*/

@Data

@Configuration

@ConfigurationProperties(prefix = "back.datasource.druid")

@MapperScan(basePackages = BackDataBaseConfig.PACKAGE, sqlSessionFactoryRef = "backSqlSessionFactory")

public class BackDataBaseConfig {

/**

* dao层的包路径

*/

static final String PACKAGE = "com.mao.mysqlhive.demomh.mapper.back";

/**

* mapper文件的相对路径

*/

private static final String MAPPER_LOCATION = "classpath:mappers/back/*Mapper.xml";

@Value("${back.datasource.druid.filters}")

private String filters;

@Value("${back.datasource.druid.driverClassName}")

private String url;

@Value("${back.datasource.druid.url}")

private String username;

@Value("${back.datasource.druid.username}")

private String password;

@Value("${back.datasource.druid.password}")

private String driverClassName;

@Value("${back.datasource.druid.initialSize}")

private int initialSize;

@Value("${back.datasource.druid.minIdle}")

private int minIdle;

@Value("${back.datasource.druid.maxActive}")

private int maxActive;

@Value("${back.datasource.druid.maxWait}")

private long maxWait;

@Value("${back.datasource.druid.timeBetweenEvictionRunsMillis}")

private long timeBetweenEvictionRunsMillis;

@Value("${back.datasource.druid.minEvictableIdleTimeMillis}")

private long minEvictableIdleTimeMillis;

@Value("${back.datasource.druid.validationQuery}")

private String validationQuery;

@Value("${back.datasource.druid.testWhileIdle}")

private boolean testWhileIdle;

@Value("${back.datasource.druid.testOnBorrow}")

private boolean testOnBorrow;

@Value("${back.datasource.druid.testOnReturn}")

private boolean testOnReturn;

@Value("${back.datasource.druid.poolPreparedStatements}")

private boolean poolPreparedStatements;

@Value("${back.datasource.druid.maxPoolPreparedStatementPerConnectionSize}")

private int maxPoolPreparedStatementPerConnectionSize;

@Bean(name = "backDataSource")

public DataSource backDataSource() throws SQLException {

DruidDataSource druid = new DruidDataSource();

// 监控统计拦截的filters

druid.setFilters(filters);

// 配置基本属性

druid.setDriverClassName(driverClassName);

druid.setUsername(username);

druid.setPassword(password);

druid.setUrl(url);

//初始化时建立物理连接的个数

druid.setInitialSize(initialSize);

//最大连接池数量

druid.setMaxActive(maxActive);

//最小连接池数量

druid.setMinIdle(minIdle);

//获取连接时最大等待时间,单位毫秒。

druid.setMaxWait(maxWait);

//间隔多久进行一次检测,检测需要关闭的空闲连接

druid.setTimeBetweenEvictionRunsMillis(timeBetweenEvictionRunsMillis);

//一个连接在池中最小生存的时间

druid.setMinEvictableIdleTimeMillis(minEvictableIdleTimeMillis);

//用来检测连接是否有效的sql

druid.setValidationQuery(validationQuery);

//建议配置为true,不影响性能,并且保证安全性。

druid.setTestWhileIdle(testWhileIdle);

//申请连接时执行validationQuery检测连接是否有效

druid.setTestOnBorrow(testOnBorrow);

druid.setTestOnReturn(testOnReturn);

//是否缓存preparedStatement,也就是PSCache,oracle设为true,mysql设为false。分库分表较多推荐设置为false

druid.setPoolPreparedStatements(poolPreparedStatements);

// 打开PSCache时,指定每个连接上PSCache的大小

druid.setMaxPoolPreparedStatementPerConnectionSize(maxPoolPreparedStatementPerConnectionSize);

return druid;

}

@Bean(name = "backTransactionManager")

public DataSourceTransactionManager backTransactionManager() throws SQLException {

return new DataSourceTransactionManager(backDataSource());

}

@Bean(name = "backSqlSessionFactory")

public SqlSessionFactory backSqlSessionFactory(@Qualifier("backDataSource") DataSource backDataSource) throws Exception {

final SqlSessionFactoryBean sessionFactory = new SqlSessionFactoryBean();

sessionFactory.setDataSource(backDataSource);

sessionFactory.setMapperLocations(new PathMatchingResourcePatternResolver()

.getResources(BackDataBaseConfig.MAPPER_LOCATION));

return sessionFactory.getObject();

}

}

hive连接配置文件:

package com.mao.mysqlhive.demomh.config;

import com.alibaba.druid.pool.DruidDataSource;

import lombok.Data;

import org.apache.ibatis.session.SqlSessionFactory;

import org.mybatis.spring.SqlSessionFactoryBean;

import org.mybatis.spring.annotation.MapperScan;

import org.springframework.beans.factory.annotation.Qualifier;

import org.springframework.beans.factory.annotation.Value;

import org.springframework.boot.context.properties.ConfigurationProperties;

import org.springframework.context.annotation.Bean;

import org.springframework.context.annotation.Configuration;

import org.springframework.core.io.support.PathMatchingResourcePatternResolver;

import org.springframework.jdbc.core.JdbcTemplate;

import org.springframework.jdbc.datasource.DataSourceTransactionManager;

import javax.sql.DataSource;

import java.sql.SQLException;

/**

* @ProjectName

* @Description: 后台数据源配置类

*/

@Data

@Configuration

@ConfigurationProperties(prefix = "hive.datasource.druid")

public class HiveDataBaseConfig {

@Value("${hive.datasource.druid.filters}")

private String filters;

@Value("${hive.datasource.druid.driverClassName}")

private String url;

@Value("${hive.datasource.druid.url}")

private String username;

@Value("${hive.datasource.druid.username}")

private String password;

@Value("${hive.datasource.druid.password}")

private String driverClassName;

@Value("${hive.datasource.druid.initialSize}")

private int initialSize;

@Value("${hive.datasource.druid.minIdle}")

private int minIdle;

@Value("${hive.datasource.druid.maxActive}")

private int maxActive;

@Value("${hive.datasource.druid.maxWait}")

private long maxWait;

@Value("${hive.datasource.druid.timeBetweenEvictionRunsMillis}")

private long timeBetweenEvictionRunsMillis;

@Value("${hive.datasource.druid.minEvictableIdleTimeMillis}")

private long minEvictableIdleTimeMillis;

@Value("${hive.datasource.druid.validationQuery}")

private String validationQuery;

@Value("${hive.datasource.druid.testWhileIdle}")

private boolean testWhileIdle;

@Value("${hive.datasource.druid.testOnBorrow}")

private boolean testOnBorrow;

@Value("${hive.datasource.druid.testOnReturn}")

private boolean testOnReturn;

@Value("${hive.datasource.druid.poolPreparedStatements}")

private boolean poolPreparedStatements;

@Value("${hive.datasource.druid.maxPoolPreparedStatementPerConnectionSize}")

private int maxPoolPreparedStatementPerConnectionSize;

@Bean(name = "hiveDruidDataSource")

@Qualifier("hiveDruidDataSource")

public DataSource hiveDataSource() throws SQLException {

DruidDataSource druid = new DruidDataSource();

// 监控统计拦截的filters

druid.setFilters(filters);

// 配置基本属性

druid.setDriverClassName(driverClassName);

druid.setUsername(username);

druid.setPassword(password);

druid.setUrl(url);

//初始化时建立物理连接的个数

druid.setInitialSize(initialSize);

//最大连接池数量

druid.setMaxActive(maxActive);

//最小连接池数量

druid.setMinIdle(minIdle);

//获取连接时最大等待时间,单位毫秒。

druid.setMaxWait(maxWait);

//间隔多久进行一次检测,检测需要关闭的空闲连接

druid.setTimeBetweenEvictionRunsMillis(timeBetweenEvictionRunsMillis);

//一个连接在池中最小生存的时间

druid.setMinEvictableIdleTimeMillis(minEvictableIdleTimeMillis);

//用来检测连接是否有效的sql

druid.setValidationQuery(validationQuery);

//建议配置为true,不影响性能,并且保证安全性。

druid.setTestWhileIdle(testWhileIdle);

//申请连接时执行validationQuery检测连接是否有效

druid.setTestOnBorrow(testOnBorrow);

druid.setTestOnReturn(testOnReturn);

//是否缓存preparedStatement,也就是PSCache,oracle设为true,mysql设为false。分库分表较多推荐设置为false

druid.setPoolPreparedStatements(poolPreparedStatements);

// 打开PSCache时,指定每个连接上PSCache的大小

druid.setMaxPoolPreparedStatementPerConnectionSize(maxPoolPreparedStatementPerConnectionSize);

return druid;

}

@Bean(name = "hiveJdbcTemplate")

public JdbcTemplate hiveJdbcTemplate(@Qualifier("hiveDruidDataSource") DataSource dataSource){

return new JdbcTemplate(dataSource);

}

}

mysql数据库如下:

#test:

-- ----------------------------

-- Table structure for account

-- ----------------------------

DROP TABLE IF EXISTS `account`;

CREATE TABLE `account` (

`id` int(11) NOT NULL AUTO_INCREMENT,

`name` varchar(255) DEFAULT NULL,

`money` int(11) DEFAULT NULL,

PRIMARY KEY (`id`)

) ENGINE=InnoDB AUTO_INCREMENT=9 DEFAULT CHARSET=utf8;

-- ----------------------------

-- Records of account

-- ----------------------------

INSERT INTO `account` VALUES ('1', 'tom', '10');

#test2:

-- ----------------------------

-- Table structure for user

-- ----------------------------

DROP TABLE IF EXISTS `user`;

CREATE TABLE `user` (

`id` int(11) NOT NULL AUTO_INCREMENT,

`name` varchar(255) DEFAULT NULL,

`age` int(11) DEFAULT NULL,

PRIMARY KEY (`id`)

) ENGINE=InnoDB AUTO_INCREMENT=3 DEFAULT CHARSET=utf8;

-- ----------------------------

-- Records of user

-- ----------------------------

INSERT INTO `user` VALUES ('1', 'tom', '12');

INSERT INTO `user` VALUES ('2', 'jonn', '13');其他的如目录所见,与单数据源使用方法相同,此处不再赘述。