1: Install Docker

I followed a reference link and tidied up the steps here.

Step 1: Install the required system tools

sudo yum install -y yum-utils device-mapper-persistent-data lvm2

Step 2: Add the package repository

sudo yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

Step 3: List the Docker versions available for installation

yum list docker-ce.x86_64 --showduplicates | sort -r

Step 4: Install a specific version

yum -y install docker-ce-[VERSION]

For example, to install a 17.x release:

yum -y install docker-ce-17.12.1.ce-1.el7.centos
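The version string shown in the second column of the Step 3 listing is what gets appended to `docker-ce-` in Step 4. A minimal sketch of that mapping follows. The function name `docker_ce_package` is made up for illustration, and the sketch assumes the usual three-column `yum list` layout (name, version, repository).

```shell
# Hypothetical helper: read `yum list docker-ce.x86_64 --showduplicates`
# output on stdin and print the package name for the first listed version
# that starts with the given prefix (for example "17.12").
docker_ce_package() {
  local prefix="$1"
  awk -v p="$prefix" '
    $1 == "docker-ce.x86_64" {
      v = $2
      sub(/^[0-9]+:/, "", v)   # drop an epoch such as "3:" if one is present
      if (index(v, p) == 1) { print "docker-ce-" v; exit }
    }'
}
```

Piping the Step 3 command into `docker_ce_package 17.12` should print a name that can be passed to `yum -y install`, provided a matching build is listed.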

Step 5: Once installation finishes, start the Docker service

service docker start

Step 6: Enable the Docker service at boot so that Docker comes back up automatically after a reboot

systemctl start docker && systemctl enable docker

Summary: the steps above can be collected into a single shell script and run directly.

sudo yum install -y yum-utils device-mapper-persistent-data lvm2

sudo yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum -y install docker-ce-17.12.1.ce-1.el7.centos

service docker start

systemctl start docker && systemctl enable docker

2: Install k8s. I followed 阳明's tutorial and reorganized it here.

https://blog.qikqiak.com/post/use-kubeadm-install-kubernetes-1.10/

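Prepare the environment

Step 1: Prepare CentOS 7 hosts for the installation (one master and three nodes in the /etc/hosts below); more nodes can be added later as needed. All addresses here are internal IPs: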

$ cat /etc/hosts

192.168.142.48 ljg-master

192.168.142.49 ljg-node-1

192.168.142.52 ljg-node-2

192.168.142.55 ljg-node-3
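If these entries have to be added on every machine, an idempotent helper avoids duplicate lines when the setup script is run more than once. This is only a sketch; `add_host_entry` is a hypothetical name, and the hosts file path is passed in so it can be tried on a copy first.

```shell
# Hypothetical helper: append "ip name" to a hosts file unless the name
# is already present, so the script can be re-run safely.
add_host_entry() {
  local ip="$1" name="$2" file="$3"
  grep -qE "[[:space:]]${name}([[:space:]]|\$)" "$file" \
    || echo "${ip} ${name}" >> "$file"
}
```

For example, `add_host_entry 192.168.142.48 ljg-master /etc/hosts` (run as root) adds the master entry only if it is missing.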

Step 2: Disable the firewall:

systemctl stop firewalld

systemctl disable firewalld

Step 3: Disable SELinux:

setenforce 0

cat /etc/selinux/config

SELINUX=disabled
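`setenforce 0` only lasts until the next reboot, so the config file also has to carry `SELINUX=disabled`. One way to make that edit without opening an editor is sketched below; `disable_selinux_in` is a hypothetical name, and GNU `sed -i` is assumed (as shipped with CentOS 7).

```shell
# Hypothetical helper: set SELINUX=disabled in an SELinux config file.
# The path is a parameter so the edit can be tried on a copy first.
# The pattern requires "=" right after SELINUX, so SELINUXTYPE is untouched.
disable_selinux_in() {
  local cfg="$1"
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
}
```

On a real host this would be called as `disable_selinux_in /etc/selinux/config` with root privileges.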

Step 4: Create the file /etc/sysctl.d/k8s.conf with the following content:

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

Step 5: Run the following commands to apply the changes:

modprobe br_netfilter

sysctl -p /etc/sysctl.d/k8s.conf
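Steps 4 and 5 can be scripted rather than typed by hand. The sketch below writes the three settings with a here-document; `write_k8s_sysctl` is a hypothetical name, and the target path is a parameter so the function can be exercised on a scratch file.

```shell
# Hypothetical helper: write the three kernel settings from Step 4 to a file.
write_k8s_sysctl() {
  local conf="$1"
  cat > "$conf" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
}
```

On a real host, `write_k8s_sysctl /etc/sysctl.d/k8s.conf` would be followed by the two commands from Step 5. The order matters: the `net.bridge.*` keys only exist once the `br_netfilter` module is loaded.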

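3: Now install k8s

3.1: Configure the Aliyun mirror as the yum repository, so the Kubernetes packages can be downloaded when the official sources are unreachable.

cat <<EOF > /etc/yum.repos.d/kubernetes.repo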

[kubernetes]

name=Kubernetes

baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg

       http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

3.1 Install the master

3.1.1 Pull the images. On the master node, run the following commands:

docker pull cnych/kube-apiserver-amd64:v1.10.0

docker pull cnych/kube-scheduler-amd64:v1.10.0

docker pull cnych/kube-controller-manager-amd64:v1.10.0

docker pull cnych/kube-proxy-amd64:v1.10.0

docker pull cnych/k8s-dns-kube-dns-amd64:1.14.8

docker pull cnych/k8s-dns-dnsmasq-nanny-amd64:1.14.8

docker pull cnych/k8s-dns-sidecar-amd64:1.14.8

docker pull cnych/etcd-amd64:3.1.12

docker pull cnych/flannel:v0.10.0-amd64

docker pull cnych/pause-amd64:3.1

docker tag cnych/kube-apiserver-amd64:v1.10.0 k8s.gcr.io/kube-apiserver-amd64:v1.10.0

docker tag cnych/kube-scheduler-amd64:v1.10.0 k8s.gcr.io/kube-scheduler-amd64:v1.10.0

docker tag cnych/kube-controller-manager-amd64:v1.10.0 k8s.gcr.io/kube-controller-manager-amd64:v1.10.0

docker tag cnych/kube-proxy-amd64:v1.10.0 k8s.gcr.io/kube-proxy-amd64:v1.10.0

docker tag cnych/k8s-dns-kube-dns-amd64:1.14.8 k8s.gcr.io/k8s-dns-kube-dns-amd64:1.14.8

docker tag cnych/k8s-dns-dnsmasq-nanny-amd64:1.14.8 k8s.gcr.io/k8s-dns-dnsmasq-nanny-amd64:1.14.8

docker tag cnych/k8s-dns-sidecar-amd64:1.14.8 k8s.gcr.io/k8s-dns-sidecar-amd64:1.14.8

docker tag cnych/etcd-amd64:3.1.12 k8s.gcr.io/etcd-amd64:3.1.12

docker tag cnych/flannel:v0.10.0-amd64 quay.io/coreos/flannel:v0.10.0-amd64

docker tag cnych/pause-amd64:3.1 k8s.gcr.io/pause-amd64:3.1

The commands above can be saved as a shell script and run directly. These are the images the master node needs, so be sure to download them in advance.
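Since every image follows the same pull-then-retag pattern, the list can also be expressed as a loop. This is a sketch, not the tutorial's script: `mirror_cmds` is a hypothetical helper, and it prints the commands instead of running them so the list can be reviewed first.

```shell
# Hypothetical helper: print the docker pull/tag commands for one image.
# $1 = image name with tag, $2 = target registry prefix.
mirror_cmds() {
  local image="$1" target="$2"
  echo "docker pull cnych/${image}"
  echo "docker tag cnych/${image} ${target}/${image}"
}

# Images retagged under k8s.gcr.io (same names and tags as listed above).
for image in \
  kube-apiserver-amd64:v1.10.0 \
  kube-scheduler-amd64:v1.10.0 \
  kube-controller-manager-amd64:v1.10.0 \
  kube-proxy-amd64:v1.10.0 \
  k8s-dns-kube-dns-amd64:1.14.8 \
  k8s-dns-dnsmasq-nanny-amd64:1.14.8 \
  k8s-dns-sidecar-amd64:1.14.8 \
  etcd-amd64:3.1.12 \
  pause-amd64:3.1
do
  mirror_cmds "$image" k8s.gcr.io
done

# flannel is retagged under quay.io/coreos instead.
mirror_cmds flannel:v0.10.0-amd64 quay.io/coreos
```

Piping the printed commands into `sh` executes them. Building the target name from a variable also avoids the kind of stray-space typo that hand-copied `docker tag` lines are prone to.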

3.1.2 Once the images are pulled, install kubeadm, kubelet, and kubectl

yum makecache fast && yum install -y kubelet-1.10.0-0 kubeadm-1.10.0-0 kubectl-1.10.0-0

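3.1.3 Configure kubelet

After installation we still need to configure kubelet. The configuration file generated when kubelet is installed from the yum repository sets --cgroup-driver to systemd, while Docker's cgroup driver is cgroupfs. The two must match. Docker's setting can be checked with docker info: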

$ docker info |grep Cgroup

Cgroup Driver: cgroupfs

Edit kubelet's configuration file /etc/systemd/system/kubelet.service.d/10-kubeadm.conf and change the KUBELET_CGROUP_ARGS parameter to cgroupfs:

Environment="KUBELET_CGROUP_ARGS=--cgroup-driver=cgroupfs"

After the change, reload the configuration:

systemctl daemon-reload
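The check and the edit can be scripted so the kubelet setting always follows whatever Docker reports. The helper names below are hypothetical, and GNU `sed -i` is assumed. The sketch only rewrites an existing `--cgroup-driver=` value and does not add one if the file lacks it.

```shell
# Hypothetical helper: read `docker info` output on stdin and print the
# cgroup driver name.
docker_cgroup_driver() {
  awk -F': *' '/Cgroup Driver/ { print $2; exit }'
}

# Hypothetical helper: rewrite the --cgroup-driver value in a kubelet
# drop-in file. $1 = driver name, $2 = path to the drop-in file.
set_kubelet_cgroup_driver() {
  local driver="$1" conf="$2"
  sed -i "s/--cgroup-driver=[a-z]*/--cgroup-driver=${driver}/" "$conf"
}
```

A possible use is `set_kubelet_cgroup_driver "$(docker info 2>/dev/null | docker_cgroup_driver)" /etc/systemd/system/kubelet.service.d/10-kubeadm.conf`, followed by `systemctl daemon-reload`.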

3.2: Cluster installation

Initialization

The preparation is now complete. Next, initialize the cluster on the master node with kubeadm:

kubeadm init --kubernetes-version=v1.10.0 --pod-network-cidr=10.244.0.0/16 --apiserver-advertise-address=192.168.142.48

[root@iZbp1fpm1mc6adbyeht7iqZ ~]#kubeadm init --kubernetes-version=v1.10.0 --pod-network-cidr=10.244.0.0/16 --apiserver-advertise-address=192.168.142.48

[init] Using Kubernetes version: v1.10.0

[init] Using Authorization modes: [Node RBAC]

[preflight] Running pre-flight checks.

[WARNING SystemVerification]: docker version is greater than the most recently validated version. Docker version: 17.12.1-ce. Max validated version: 17.03

[WARNING Hostname]: hostname "izbp1fpm1mc6adbyeht7iqz" could not be reached

[WARNING Hostname]: hostname "izbp1fpm1mc6adbyeht7iqz" lookup izbp1fpm1mc6adbyeht7iqz on 100.100.2.138:53: no such host

[WARNING Service-Kubelet]: kubelet service is not enabled, please run 'systemctl enable kubelet.service'

[WARNING FileExisting-crictl]: crictl not found in system path

Suggestion: go get github.com/kubernetes-incubator/cri-tools/cmd/crictl

[preflight] Starting the kubelet service

[certificates] Generated ca certificate and key.

[certificates] Generated apiserver certificate and key.

[certificates] apiserver serving cert is signed for DNS names [izbp1fpm1mc6adbyeht7iqz kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 192.168.142.48]

[certificates] Generated apiserver-kubelet-client certificate and key.

[certificates] Generated etcd/ca certificate and key.

[certificates] Generated etcd/server certificate and key.

[certificates] etcd/server serving cert is signed for DNS names [localhost] and IPs [127.0.0.1]

[certificates] Generated etcd/peer certificate and key.

[certificates] etcd/peer serving cert is signed for DNS names [izbp1fpm1mc6adbyeht7iqz] and IPs [192.168.142.48]

[certificates] Generated etcd/healthcheck-client certificate and key.

[certificates] Generated apiserver-etcd-client certificate and key.

[certificates] Generated sa key and public key.

[certificates] Generated front-proxy-ca certificate and key.

[certificates] Generated front-proxy-client certificate and key.

[certificates] Valid certificates and keys now exist in "/etc/kubernetes/pki"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/admin.conf"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/kubelet.conf"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/controller-manager.conf"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/scheduler.conf"

[controlplane] Wrote Static Pod manifest for component kube-apiserver to "/etc/kubernetes/manifests/kube-apiserver.yaml"

[controlplane] Wrote Static Pod manifest for component kube-controller-manager to "/etc/kubernetes/manifests/kube-controller-manager.yaml"

[controlplane] Wrote Static Pod manifest for component kube-scheduler to "/etc/kubernetes/manifests/kube-scheduler.yaml"

[etcd] Wrote Static Pod manifest for a local etcd instance to "/etc/kubernetes/manifests/etcd.yaml"

[init] Waiting for the kubelet to boot up the control plane as Static Pods from directory "/etc/kubernetes/manifests".

[init] This might take a minute or longer if the control plane images have to be pulled.

[apiclient] All control plane components are healthy after 21.502490 seconds

[uploadconfig] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[markmaster] Will mark node izbp1fpm1mc6adbyeht7iqz as master by adding a label and a taint

[markmaster] Master izbp1fpm1mc6adbyeht7iqz tainted and labelled with key/value: node-role.kubernetes.io/master=""

[bootstraptoken] Using token: 8d1dco.0oiu8d0vz61c7ao8

[bootstraptoken] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstraptoken] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstraptoken] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstraptoken] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[addons] Applied essential addon: kube-dns

[addons] Applied essential addon: kube-proxy

Your Kubernetes master has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of machines by running the following on each node

as root:

kubeadm join 192.168.142.48:6443 --token 7uznt6.vzfh109zpz4mdu9t --discovery-token-ca-cert-hash sha256:d8a5617375d0b609cfbceaa1d5b4fc554066af3cef4da14fedc316aa225b8a0d

Once the above finishes, run the commands it asks for:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Important: record the following output. It is needed later when joining nodes.

kubeadm join 192.168.142.48:6443 --token 7uznt6.vzfh109zpz4mdu9t --discovery-token-ca-cert-hash sha256:d8a5617375d0b609cfbceaa1d5b4fc554066af3cef4da14fedc316aa225b8a0d
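One way to avoid losing the join command is to save the `kubeadm init` output to a file (for example by piping it through `tee kubeadm-init.log`; the file name is an assumption) and pull the line back out later. The helper below is a hypothetical sketch of that extraction.

```shell
# Hypothetical helper: print the `kubeadm join ...` line found in saved
# `kubeadm init` output read from stdin, with leading whitespace removed.
extract_join_command() {
  grep -E '^[[:space:]]*kubeadm join ' | tail -n 1 | sed 's/^[[:space:]]*//'
}
```

With a saved log, `extract_join_command < kubeadm-init.log` prints the command to run on each node.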

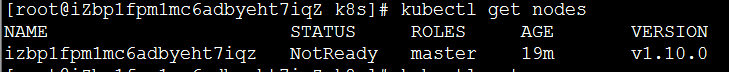

After installation, the node is still NotReady:

[root@iZbp1fpm1mc6adbyeht7iqZ k8s]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

izbp1fpm1mc6adbyeht7iqz NotReady master 19m v1.10.0

How to fix this:

Install a Pod network

wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

kubectl apply -f kube-flannel.yml

clusterrole.rbac.authorization.k8s.io "flannel" created

clusterrolebinding.rbac.authorization.k8s.io "flannel" created

serviceaccount "flannel" created

configmap "kube-flannel-cfg" created

daemonset.extensions "kube-flannel-ds" created

After installation, kubectl get pods shows the status of the cluster components. If they are all Running, the master node has been installed successfully.

$ kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system etcd-ydzs-master1 1/1 Running 0 10m

kube-system kube-apiserver-ydzs-master1 1/1 Running 0 10m

kube-system kube-controller-manager-ydzs-master1 1/1 Running 0 10m

kube-system kube-dns-86f4d74b45-f5595 3/3 Running 0 10m

kube-system kube-flannel-ds-qxjs2 1/1 Running 0 1m

kube-system kube-proxy-vf5fg 1/1 Running 0 10m

kube-system kube-scheduler-ydzs-master1 1/1 Running 0 10m

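After kubeadm finishes initializing, Pods are by default not scheduled onto the master node, so ordinary Pods cannot be tested yet; a worker node has to be added first.

4: Install the node. Once Docker is installed, the images can be pulled directly.

To install Docker on the node, see section 1 above.

The steps are copied here:

Step 1: Install the required system tools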

sudo yum install -y yum-utils device-mapper-persistent-data lvm2

Step 2: Add the package repository

sudo yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

Step 3: List the Docker versions available for installation

yum list docker-ce.x86_64 --showduplicates | sort -r

Step 4: Install a specific version

yum -y install docker-ce-[VERSION]

For example, to install a 17.x release:

yum -y install docker-ce-17.12.1.ce-1.el7.centos

Step 5: Once installation finishes, start the Docker service

service docker start

Step 6: Enable the Docker service at boot so that Docker comes back up automatically after a reboot

systemctl start docker && systemctl enable docker

Summary: the steps above can be collected into a single shell script and run directly.

sudo yum install -y yum-utils device-mapper-persistent-data lvm2

sudo yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum -y install docker-ce-17.12.1.ce-1.el7.centos

service docker start

systemctl start docker && systemctl enable docker

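Step 1: With Docker ready, pull the images

Set up the mirror: when the official repositories cannot be reached, we can install from the Aliyun source

cat <<EOF > /etc/yum.repos.d/kubernetes.repo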

[kubernetes]

name=Kubernetes

baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg

       http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

docker pull cnych/kube-proxy-amd64:v1.10.0

docker pull cnych/flannel:v0.10.0-amd64

docker pull cnych/pause-amd64:3.1

docker pull cnych/kubernetes-dashboard-amd64:v1.8.3

docker pull cnych/heapster-influxdb-amd64:v1.3.3

docker pull cnych/heapster-grafana-amd64:v4.4.3

docker pull cnych/heapster-amd64:v1.4.2

docker tag cnych/flannel:v0.10.0-amd64 quay.io/coreos/flannel:v0.10.0-amd64

docker tag cnych/pause-amd64:3.1 k8s.gcr.io/pause-amd64:3.1

docker tag cnych/kube-proxy-amd64:v1.10.0 k8s.gcr.io/kube-proxy-amd64:v1.10.0

docker tag cnych/kubernetes-dashboard-amd64:v1.8.3 k8s.gcr.io/kubernetes-dashboard-amd64:v1.8.3

docker tag cnych/heapster-influxdb-amd64:v1.3.3 k8s.gcr.io/heapster-influxdb-amd64:v1.3.3

docker tag cnych/heapster-grafana-amd64:v4.4.3 k8s.gcr.io/heapster-grafana-amd64:v4.4.3

docker tag cnych/heapster-amd64:v1.4.2 k8s.gcr.io/heapster-amd64:v1.4.2

Step 2: Install kubeadm, kubelet, and kubectl

yum makecache fast && yum install -y kubelet-1.10.0-0 kubeadm-1.10.0-0 kubectl-1.10.0-0

Step 3: Check that Docker and kubelet use the same cgroup driver

docker info |grep Cgroup

If they differ, edit the following file:

vim /etc/systemd/system/kubelet.service.d/10-kubeadm.conf

Apply the configuration change:

systemctl daemon-reload

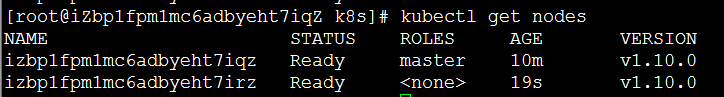

Step 4: Join the node to the master's cluster

kubeadm join 192.168.142.48:6443 --token 8d1dco.0oiu8d0vz61c7ao8 --discovery-token-ca-cert-hash sha256:241d59a0429288f47d5159393faf4fd55f3665081cba87c474c2578ebb2316c5

Step 5: On the master, check whether the node was added successfully.
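The check amounts to running `kubectl get nodes` on the master and reading the STATUS column. A small filter can make the result easier to script against; `not_ready_nodes` is a hypothetical name, and the sketch assumes the default column layout with STATUS in the second column.

```shell
# Hypothetical helper: read `kubectl get nodes` output on stdin and print
# the names of nodes whose STATUS is not exactly "Ready". A combined status
# such as "Ready,SchedulingDisabled" is therefore reported as well.
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}
```

`kubectl get nodes | not_ready_nodes` prints nothing once every node, including the newly joined one, reports Ready.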

At this point the cluster installation is complete.

5: Next: install the dashboard and Heapster plugins

https://blog.qikqiak.com/post/manual-install-high-available-kubernetes-cluster/#10-%E9%83%A8%E7%BD%B2dashboard-%E6%8F%92%E4%BB%B6-a-id-dashboard-a