Integrating HBase and Hive on a single Windows machine

First, configure hbase-site.xml, including the ZooKeeper client port that HBase uses:

hbase.master = localhost

hbase.rootdir = hdfs://127.0.0.1:9000/hbase/

hbase.tmp.dir = D:/hbase-1.2.5/tmp

hbase.zookeeper.quorum = 127.0.0.1

hbase.zookeeper.property.dataDir = D:/hbase-1.2.5/zoo

hbase.cluster.distributed = false

hbase.master.info.port = 60010

hbase.zookeeper.property.clientPort = 2185

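As you can see, ZooKeeper uses port 2185 (hbase.zookeeper.property.clientPort). Hive has to be configured with the same port.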
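The settings above come from the standard Hadoop XML layout: a configuration element holding one property element, with a name and a value, per setting. As a minimal sketch (Python, standard library only), the snippet below rebuilds a subset of that file, reads it back, and derives the host:port pairs that Hive has to match. The helper names load_properties and zk_connect_string are illustrative, not part of HBase; the fallback port 2181 is ZooKeeper's usual default and only applies when clientPort is absent.

```python
import xml.etree.ElementTree as ET

# A subset of the hbase-site.xml above, in the standard Hadoop layout.
HBASE_SITE = """<?xml version="1.0"?>
<configuration>
  <property><name>hbase.rootdir</name><value>hdfs://127.0.0.1:9000/hbase/</value></property>
  <property><name>hbase.zookeeper.quorum</name><value>127.0.0.1</value></property>
  <property><name>hbase.zookeeper.property.clientPort</name><value>2185</value></property>
  <property><name>hbase.cluster.distributed</name><value>false</value></property>
</configuration>
"""


def load_properties(xml_text):
    """Return a name -> value dict from a Hadoop-style configuration file."""
    root = ET.fromstring(xml_text)
    props = {}
    for prop in root.findall("property"):
        name = prop.findtext("name", default="").strip()
        value = prop.findtext("value", default="").strip()
        if name:
            props[name] = value
    return props


def zk_connect_string(props):
    """Combine the quorum hosts and the client port into host:port pairs."""
    port = props.get("hbase.zookeeper.property.clientPort", "2181")
    hosts = [h.strip() for h in props["hbase.zookeeper.quorum"].split(",") if h.strip()]
    return ",".join(f"{host}:{port}" for host in hosts)


props = load_properties(HBASE_SITE)
print(zk_connect_string(props))  # 127.0.0.1:2185
```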

hive-site.xml:

javax.jdo.option.ConnectionURL = jdbc:mysql://127.0.0.1:3306/hive?createDatabaseIfNotExist=true&useSSL=false (JDBC connect string for a JDBC metastore)

javax.jdo.option.ConnectionDriverName = com.mysql.jdbc.Driver (Driver class name for a JDBC metastore)

javax.jdo.option.ConnectionUserName = root (username to use against metastore database)

javax.jdo.option.ConnectionPassword = root (password to use against metastore database)

hive.metastore.schema.verification = false

hive.metastore.warehouse.dir = /user/hive/warehouse

javax.jdo.option.DetachAllOnCommit = true (detaches all objects from session so that they can be used after transaction is committed)

javax.jdo.option.NonTransactionalRead = true (reads outside of transactions)

datanucleus.readOnlyDatastore = false

datanucleus.fixedDatastore = false

datanucleus.autoCreateSchema = true

datanucleus.autoCreateTables = true

datanucleus.autoCreateColumns = true

hive.support.concurrency = true

hive.zookeeper.quorum = localhost

hive.server2.thrift.min.worker.threads = 5

hive.server2.thrift.max.worker.threads = 100

hive.server2.transport.mode = binary

hive.hwi.listen.host = 0.0.0.0

hive.server2.webui.host = 0.0.0.0

hadoop.proxyuser.root.hosts = *

hadoop.proxyuser.root.groups = *

hive.server2.thrift.client.user = root

hive.server2.thrift.client.password = 123456

hive.server2.thrift.http.port = 11002

hive.server2.thrift.port = 11006

hbase.zookeeper.quorum = 127.0.0.1

hbase.zookeeper.property.clientPort = 2185

hive.aux.jars.path = file:///D:/apache-hive-2.1.1-bin/lib/hive-hbase-handler-2.1.1.jar,file:///D:/apache-hive-2.1.1-bin/lib/protobuf-java-2.5.0.jar,file:///D:/apache-hive-2.1.1-bin/lib/hbase-common-1.2.5.jar,file:///D:/apache-hive-2.1.1-bin/lib/hbase-client-1.2.5.jar,file:///D:/apache-hive-2.1.1-bin/lib/hbase-server-1.2.5.jar,file:///D:/apache-hive-2.1.1-bin/lib/zookeeper-3.4.6.jar,file:///D:/apache-hive-2.1.1-bin/lib/guava-14.0.1.jar

hive.querylog.location = D:/apache-hive-2.1.1-bin/logs

This configuration adds the HBase connection settings (ZooKeeper quorum and client port) to Hive and loads the required jars.
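hive.aux.jars.path is a comma-separated list of file:/// URIs, which is tedious to type by hand. A minimal sketch that assembles it from the lib directory and the jar names used above, assuming the paths contain no spaces or other characters that would need URL-escaping (aux_jars_path is a hypothetical helper name):

```python
from pathlib import PureWindowsPath

# Hive lib directory and jar names taken from hive.aux.jars.path above.
HIVE_LIB = r"D:\apache-hive-2.1.1-bin\lib"
AUX_JARS = [
    "hive-hbase-handler-2.1.1.jar",
    "protobuf-java-2.5.0.jar",
    "hbase-common-1.2.5.jar",
    "hbase-client-1.2.5.jar",
    "hbase-server-1.2.5.jar",
    "zookeeper-3.4.6.jar",
    "guava-14.0.1.jar",
]


def aux_jars_path(lib_dir, jars):
    """Join jars into the comma-separated file:/// list for hive.aux.jars.path."""
    base = PureWindowsPath(lib_dir)
    # as_posix() turns D:\a\b into D:/a/b; the file:/// prefix makes it a local-file URI.
    # Assumption: no spaces or special characters, so no URL-escaping is done.
    return ",".join("file:///" + (base / jar).as_posix() for jar in jars)


print(aux_jars_path(HIVE_LIB, AUX_JARS))
```

PureWindowsPath is used instead of Path so that the Windows-style path is handled the same way even when the script runs on another OS.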

When starting the Hive shell and running start_metastore, add an environment variable (it seemed to work without it as well). start_metastore.cmd contains:

cd D:\apache-hive-2.1.1-bin\bin

SET HIVE_AUX_JARS_PATH=D:\apache-hive-2.1.1-bin\auxlib\

hive --service metastore

Creating an HBase table from Hive

The CREATE TABLE statement:

CREATE TABLE iteblog(key int, value string) STORED BY 'org.apache.hadoop.hive.hbase.HBaseStorageHandler' WITH SERDEPROPERTIES ("hbase.columns.mapping" = ":key,cf1:val") TBLPROPERTIES ("hbase.table.name" = "iteblog", "hbase.mapred.output.outputtable" = "iteblog");
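Two constraints in this DDL are easy to get wrong: hbase.columns.mapping needs one entry per Hive column, and exactly one entry must be :key (the HBase row key). A hypothetical Python helper that builds the statement and checks both before you paste it into Hive; with the iteblog arguments it reproduces the statement above:

```python
def hbase_table_ddl(table, columns, mapping, hbase_table, external=False):
    """Build Hive DDL for an HBase-backed table (hypothetical helper).

    columns: list of (name, hive_type) pairs
    mapping: one hbase.columns.mapping entry per column, e.g. ":key" or "cf1:val"
    """
    if len(columns) != len(mapping):
        raise ValueError("hbase.columns.mapping needs exactly one entry per Hive column")
    if mapping.count(":key") != 1:
        raise ValueError("exactly one column must map to the row key (:key)")
    cols = ", ".join(f"{name} {typ}" for name, typ in columns)
    kind = "EXTERNAL TABLE" if external else "TABLE"
    return (
        f"CREATE {kind} {table}({cols}) "
        "STORED BY 'org.apache.hadoop.hive.hbase.HBaseStorageHandler' "
        f'WITH SERDEPROPERTIES ("hbase.columns.mapping" = "{",".join(mapping)}") '
        f'TBLPROPERTIES ("hbase.table.name" = "{hbase_table}", '
        f'"hbase.mapred.output.outputtable" = "{hbase_table}");'
    )


print(hbase_table_ddl("iteblog", [("key", "int"), ("value", "string")],
                      [":key", "cf1:val"], "iteblog"))
```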

Create a temporary table, pokes, to serve as the data source.

Then run in Hive:

insert overwrite table iteblog select * from pokes;

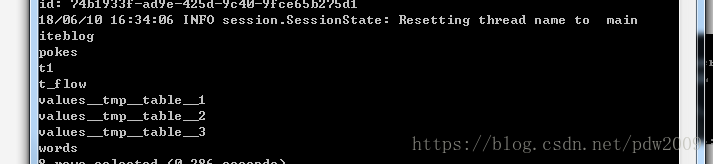

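Check the records of the iteblog table in the HBase shell (for example with scan 'iteblog'):

This shows that the records were added successfully through the HBase + Hive integration.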
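To see how the rows written from Hive land in HBase: with the :key,cf1:val mapping, the key column becomes the HBase row key and value becomes the cf1:val cell. Under Hive's default string storage (hbase.table.default.storage.type = string), both are written as the bytes of their text form, so the int key 99 becomes the row key "99". This sketch models that layout; row_to_cells is a hypothetical name, not Hive code, and it only considers non-NULL int and string values:

```python
def row_to_cells(row, mapping):
    """Split one Hive row into (row_key, {"family:qualifier": value}).

    Models string storage: each value is written as the UTF-8 bytes of its
    text form. Assumes non-NULL int/string values only.
    """
    if len(row) != len(mapping):
        raise ValueError("row and mapping must have the same length")
    row_key = None
    cells = {}
    for value, target in zip(row, mapping):
        encoded = str(value).encode("utf-8")
        if target == ":key":
            row_key = encoded  # the :key column becomes the HBase row key
        else:
            cells[target] = encoded  # every other column becomes one cell
    return row_key, cells


# The row from: insert into iteblog(key,value) values (99,'KK');
print(row_to_cells((99, "KK"), [":key", "cf1:val"]))  # (b'99', {'cf1:val': b'KK'})
```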

Then run in Hive:

insert into iteblog(key,value) values (99,'KK');

At this point, creating an HBase table from Hive is complete!
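One consequence of storing the table in HBase: each row Hive writes becomes an HBase put keyed by the row key, so inserting key 99 a second time does not create a duplicate row. HBase writes a newer version of the cf1:val cell, and reads return the newest version. The class below is a hypothetical in-memory model of that behaviour, not an HBase client:

```python
from collections import defaultdict


class VersionedTable:
    """Hypothetical in-memory model of HBase cell semantics (not an HBase client).

    Every put adds a new timestamped version of a cell; reads return the newest.
    A real table keeps only as many versions as its VERSIONS setting allows.
    """

    def __init__(self):
        # row key -> column ("family:qualifier") -> list of (timestamp, value)
        self._cells = defaultdict(lambda: defaultdict(list))
        self._clock = 0  # stand-in for HBase cell timestamps

    def put(self, row_key, column, value):
        self._clock += 1
        self._cells[row_key][column].append((self._clock, value))

    def get(self, row_key, column):
        versions = self._cells.get(row_key, {}).get(column)
        return versions[-1][1] if versions else None

    def row_count(self):
        return len(self._cells)


table = VersionedTable()
table.put(b"99", "cf1:val", b"KK")  # insert into iteblog(key,value) values (99,'KK');
table.put(b"99", "cf1:val", b"LL")  # a second, hypothetical insert with the same key
print(table.row_count(), table.get(b"99", "cf1:val"))  # 1 b'LL'
```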

Mapping an existing HBase table in Hive

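In my local development environment, HBase already has a table named info_user,

but Hive does not have this table:

The info_user table in HBase has two fields, id and name. The statement to create the external table in Hive is: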

CREATE EXTERNAL TABLE info_user(rowid string ,id string, name string) STORED BY 'org.apache.hadoop.hive.hbase.HBaseStorageHandler' WITH SERDEPROPERTIES ("hbase.columns.mapping" = ":key,id:id,id:name") TBLPROPERTIES("hbase.table.name" = "info_user", "hbase.mapred.output.outputtable" = "info_user");
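In this mapping, the first Hive column (rowid) maps to the HBase row key, while id and name map to the qualifiers id and name inside a column family that is also called id. A short sketch (parse_columns_mapping is a hypothetical helper) that pairs each Hive column with its HBase target, which can help check a mapping string before creating the external table:

```python
def parse_columns_mapping(hive_columns, mapping):
    """Pair each Hive column with its HBase target (hypothetical helper).

    Returns {hive_column: None} for the row key (":key") and
    {hive_column: (family, qualifier)} for regular cells.
    """
    entries = mapping.split(",")
    if len(entries) != len(hive_columns):
        raise ValueError(
            f"{len(hive_columns)} Hive columns but {len(entries)} mapping entries"
        )
    result = {}
    for column, entry in zip(hive_columns, entries):
        if entry == ":key":
            result[column] = None
        else:
            family, _, qualifier = entry.partition(":")
            result[column] = (family, qualifier)
    return result


targets = parse_columns_mapping(["rowid", "id", "name"], ":key,id:id,id:name")
for column, target in targets.items():
    if target is None:
        print(f"{column} -> row key")
    else:
        print(f"{column} -> family={target[0]} qualifier={target[1]}")
```

For the info_user mapping this prints rowid -> row key, id -> family=id qualifier=id, and name -> family=id qualifier=name.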
