Python object tracking

1. Traditional tracking methods

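```python
import cv2

# Tracking algorithms already implemented in OpenCV (contrib tracking module,
# opencv-contrib-python). In OpenCV >= 4.5.1 the Boosting, TLD, MedianFlow and
# MOSSE trackers (and MultiTracker) were moved to the cv2.legacy namespace.
OPENCV_OBJECT_TRACKERS = {
    "csrt": cv2.TrackerCSRT_create,
    "kcf": cv2.TrackerKCF_create,
    "boosting": cv2.TrackerBoosting_create,
    "mil": cv2.TrackerMIL_create,
    "tld": cv2.TrackerTLD_create,
    "medianflow": cv2.TrackerMedianFlow_create,
    "mosse": cv2.TrackerMOSSE_create,
}
```

A multi-object tracking example: press `s` to select a target with the mouse, `Esc` to quit.

```python
import cv2

cap = cv2.VideoCapture("./video/soccer_01.mp4")
# Container that updates several single-object trackers at once
# (cv2.legacy.MultiTracker_create() in OpenCV >= 4.5.1)
trackers = cv2.MultiTracker_create()

while True:
    _, frame = cap.read()
    if frame is None:  # end of the video
        break

    # Resize to a width of 600 px, keeping the aspect ratio
    frame = cv2.resize(frame, (600, int(frame.shape[0] * 600 / frame.shape[1])),
                       interpolation=cv2.INTER_AREA)

    # Update every registered tracker; each box is (x, y, w, h)
    (success, boxes) = trackers.update(frame)
    for box in boxes:
        (x1, y1, w, h) = box
        cv2.rectangle(frame, (int(x1), int(y1)), (int(x1 + w), int(y1 + h)), (0, 255, 0))

    cv2.imshow("video", frame)
    key = cv2.waitKey(100)

    # 's': select a new ROI (with crosshair, not from center) and track it with KCF
    if key == ord('s'):
        box = cv2.selectROI("video", frame, True, False)
        if box[2] > 0 and box[3] > 0:  # skip an empty (cancelled) selection
            trackers.add(cv2.TrackerKCF_create(), frame, box)

    # Esc: quit
    if key == 27:
        break

cap.release()
cv2.destroyAllWindows()
```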
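The trackers report each box as `(x, y, w, h)` (top-left corner plus width and height), whereas `cv2.rectangle` and the SSD detector in section 2 work with corner coordinates `(x1, y1, x2, y2)`. Mixing the two formats is an easy mistake, so a pair of small helpers can make the conversion explicit. This is a sketch; the names `xywh_to_corners` and `corners_to_xywh` are hypothetical and not part of OpenCV.

```python
def xywh_to_corners(box):
    """(x, y, w, h) -> integer corners (x1, y1, x2, y2), e.g. for cv2.rectangle."""
    x, y, w, h = box
    return int(x), int(y), int(x + w), int(y + h)


def corners_to_xywh(box):
    """(x1, y1, x2, y2) -> (x, y, w, h), the format tracker.init / MultiTracker.add expect."""
    x1, y1, x2, y2 = box
    return int(x1), int(y1), int(x2 - x1), int(y2 - y1)


if __name__ == "__main__":
    tracked = (10.4, 20.7, 50.0, 80.0)         # a box as a tracker might return it
    print(xywh_to_corners(tracked))            # (10, 20, 60, 100)
    print(corners_to_xywh((10, 20, 60, 100)))  # (10, 20, 50, 80)
```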

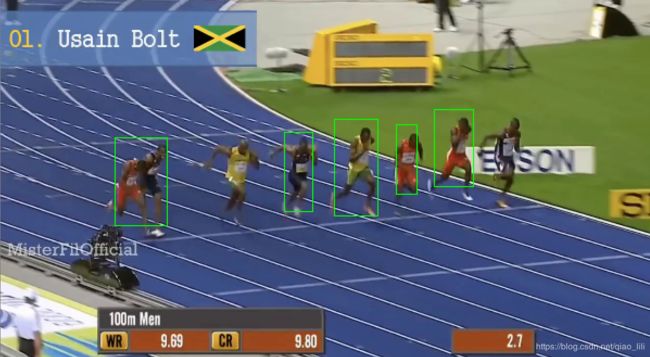

Result:

2. Deep-learning approach

A Caffe SSD model (MobileNet-SSD) is loaded to detect objects in the first frame; the result is as follows:

The detected objects are then tracked with OpenCV's KCF tracker; the measured frame rate is 3.8 frames/s.

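FPS: a frame-rate counter (the scripts below import it with `from FPS import FPS`, so it lives in `FPS.py`):

```python
import datetime


class FPS:
    def __init__(self):
        # Start/end timestamps and the number of frames processed in between
        self._start = None
        self._end = None
        self._numFrames = 0

    def start(self):
        # Record the start time; returns self so FPS().start() can be chained
        self._start = datetime.datetime.now()
        return self

    def stop(self):
        self._end = datetime.datetime.now()

    def update(self):
        # Call once per processed frame
        self._numFrames += 1

    def elapsed(self):
        # Seconds between start() and stop()
        return (self._end - self._start).total_seconds()

    def fps(self):
        return self._numFrames / self.elapsed()
```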
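`datetime.datetime.now()` reads the wall clock, which can jump if the system time is adjusted while the video runs. A variant of the same counter built on `time.perf_counter()` (a monotonic, high-resolution clock in the standard library) avoids that. This is a sketch, not the class the scripts below import; the `clock` parameter is a hypothetical addition that lets a fake clock be injected for testing.

```python
import time


class FPS:
    """Frame-rate counter based on a monotonic clock (sketch)."""

    def __init__(self, clock=time.perf_counter):
        self._clock = clock  # callable returning a time in seconds (float)
        self._start = None
        self._end = None
        self._num_frames = 0

    def start(self):
        self._start = self._clock()
        return self

    def stop(self):
        self._end = self._clock()

    def update(self):
        # Call once for every processed frame
        self._num_frames += 1

    def elapsed(self):
        return self._end - self._start

    def fps(self):
        return self._num_frames / self.elapsed()


if __name__ == "__main__":
    # Fake clock: start() sees t = 0 s, stop() sees t = 2 s
    ticks = iter([0.0, 2.0])
    counter = FPS(clock=lambda: next(ticks)).start()
    for _ in range(8):
        counter.update()
    counter.stop()
    print(counter.fps())  # 4.0
```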

```python
import cv2
import numpy as np

from FPS import FPS

cap = cv2.VideoCapture('./video/race.mp4')
net = cv2.dnn.readNetFromCaffe("./model/MobileNetSSD_deploy.prototxt",
                               "./model/MobileNetSSD_deploy.caffemodel")

# SSD class labels
CLASSES = ["background", "aeroplane", "bicycle", "bird", "boat",
           "bottle", "bus", "car", "cat", "chair", "cow", "diningtable",
           "dog", "horse", "motorbike", "person", "pottedplant", "sheep",
           "sofa", "train", "tvmonitor"]

tracker = []  # corner boxes found by the detector; empty means "run detection"
tracker_cv = cv2.MultiTracker_create()

FPS_IMG = FPS()
FPS_IMG.start()

while True:
    _, frame = cap.read()
    if frame is None:
        break

    # Resize to a width of 600 px, keeping the aspect ratio
    [h, w] = frame.shape[0:2]
    frame = cv2.resize(frame, (600, int(h * 600 / w)), interpolation=cv2.INTER_AREA)
    [h, w] = frame.shape[:2]

    if len(tracker) == 0:
        # No targets yet: run the SSD detector on this frame.
        # scalefactor 0.007843 (= 1/127.5) with mean 127.5 maps pixels to [-1, 1]
        blob = cv2.dnn.blobFromImage(frame, 0.007843, (w, h), (127.5, 127.5, 127.5))
        net.setInput(blob)
        detections = net.forward()

        # Different networks store their detection results in different layouts
        for i in np.arange(0, detections.shape[2]):
            confidence = detections[0, 0, i, 2]
            if confidence > 0.27:
                idx = int(detections[0, 0, i, 1])
                label = CLASSES[idx]
                if label != 'person':
                    continue
                # Compute the object's position in the image
                box = detections[0, 0, i, 3:7] * np.array([w, h, w, h])
                x1, y1, x2, y2 = (int(v) for v in box)
                tracker.append([x1, y1, x2, y2])
                # Trackers are initialised with (x, y, width, height), not corners
                tracker_cv.add(cv2.TrackerKCF_create(), frame, (x1, y1, x2 - x1, y2 - y1))
                cv2.rectangle(frame, (x1, y1), (x2, y2), (0, 255, 0), 2, 8)
    else:
        # Targets exist: update the KCF trackers; each box is (x, y, w, h)
        (_, persons) = tracker_cv.update(frame)
        for (x, y, bw, bh) in persons:
            cv2.rectangle(frame, (int(x), int(y)), (int(x + bw), int(y + bh)), (0, 255, 0), 2, 8)

    cv2.imshow("run", frame)
    key = cv2.waitKey(1) & 0xFF
    # Esc quits
    if key == 27:
        break

    # Count this frame
    FPS_IMG.update()

FPS_IMG.stop()
print(FPS_IMG.fps())

cap.release()
cv2.destroyAllWindows()
```

Note the layout of the SSD network's output:
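For this MobileNet-SSD Caffe model, `net.forward()` returns a 4-D array of shape `(1, 1, N, 7)`: one row per candidate detection, laid out as `[image_id, class_id, confidence, x1, y1, x2, y2]`, with the corner coordinates normalized relative to the input image. That is why the loop reads `detections[0, 0, i, 2]` for the score, `detections[0, 0, i, 1]` for the class, and multiplies `detections[0, 0, i, 3:7]` by `[w, h, w, h]`. The sketch below runs the same parsing on a hand-made array; the values are invented for illustration (not real model output) and the function name `parse_detections` is hypothetical.

```python
import numpy as np

# Class labels of the 20-class VOC MobileNet-SSD model, as in the script above
CLASSES = ["background", "aeroplane", "bicycle", "bird", "boat",
           "bottle", "bus", "car", "cat", "chair", "cow", "diningtable",
           "dog", "horse", "motorbike", "person", "pottedplant", "sheep",
           "sofa", "train", "tvmonitor"]


def parse_detections(detections, w, h, conf_threshold=0.27, wanted="person"):
    """Return pixel boxes (x1, y1, x2, y2) of `wanted` objects above the threshold."""
    boxes = []
    for i in range(detections.shape[2]):
        confidence = detections[0, 0, i, 2]
        if confidence <= conf_threshold:
            continue
        idx = int(detections[0, 0, i, 1])
        if CLASSES[idx] != wanted:
            continue
        # Normalized corners -> pixel coordinates (scale factors w, h, w, h)
        box = detections[0, 0, i, 3:7] * np.array([w, h, w, h])
        x1, y1, x2, y2 = box.astype("int")
        boxes.append((int(x1), int(y1), int(x2), int(y2)))
    return boxes


if __name__ == "__main__":
    # Invented example, shape (1, 1, 3, 7); rows = [image_id, class_id, conf, x1, y1, x2, y2]
    fake = np.array([[[
        [0, 15, 0.90, 0.125, 0.25, 0.375, 0.75],  # person, kept
        [0, 7, 0.95, 0.5, 0.5, 0.75, 0.75],       # car, wrong class
        [0, 15, 0.10, 0.5, 0.25, 0.75, 1.0],      # person, confidence too low
    ]]])
    print(fake.shape)                        # (1, 1, 3, 7)
    print(parse_detections(fake, 600, 400))  # [(75, 100, 225, 300)]
```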

```python
for i in np.arange(0, detections.shape[2]):  # iterate over all detections
    confidence = detections[0, 0, i, 2]       # column 2: confidence
    if confidence > 0.27:
        idx = int(detections[0, 0, i, 1])     # column 1: class index
        label = CLASSES[idx]
        if label != 'person':
            continue
        # Compute the object's position in the image
        box = detections[0, 0, i, 3:7] * np.array([w, h, w, h])  # scale factors
        [x1, y1, x2, y2] = box.astype('int')
```

3. Speeding up with multiprocessing

```python
# Object tracking using multiple processes (one process per target)
import multiprocessing

import cv2
import numpy as np

from FPS import FPS


def tracker_func(roi, frame, inputQueue, outputQueue):
    # Each worker owns one KCF tracker, initialised with roi = (x, y, w, h)
    t = cv2.TrackerKCF_create()
    t.init(frame, roi)
    while True:
        img = inputQueue.get()
        if img is None:  # sentinel: stop the worker
            break
        _, (x, y, bw, bh) = t.update(img)
        # Put the result (as corner coordinates) into the output queue
        outputQueue.put((int(x), int(y), int(x + bw), int(y + bh)))


CLASSES = ["background", "aeroplane", "bicycle", "bird", "boat", "bottle",
           "bus", "car", "cat", "chair", "cow", "diningtable",
           "dog", "horse", "motorbike", "person", "pottedplant", "sheep",
           "sofa", "train", "tvmonitor"]

if __name__ == '__main__':
    # Created inside the main guard so that child processes (which may
    # re-import this module) do not reopen the video or reload the model
    net = cv2.dnn.readNetFromCaffe("./model/MobileNetSSD_deploy.prototxt",
                                   "./model/MobileNetSSD_deploy.caffemodel")
    cap = cv2.VideoCapture("./video/race.mp4")
    inputQueues = []
    outputQueues = []
    FPS_IMG = FPS()
    FPS_IMG.start()

    while True:
        _, frame = cap.read()
        if frame is None:
            break

        # Resize to a width of 600 px, keeping the aspect ratio
        [h, w] = frame.shape[:2]
        ar = 600 / w
        w, h = 600, int(ar * h)
        frame = cv2.resize(frame, (w, h), interpolation=cv2.INTER_AREA)

        if len(inputQueues) == 0:
            # First pass: detect people and start one tracking process per person
            blob = cv2.dnn.blobFromImage(frame, 0.007843, (w, h), (127.5, 127.5, 127.5))
            net.setInput(blob)
            detections = net.forward()
            for i in range(0, detections.shape[2]):
                confidence = detections[0, 0, i, 2]
                if confidence > 0.27:
                    idx = int(detections[0, 0, i, 1])
                    if CLASSES[idx] == "person":
                        box = detections[0, 0, i, 3:7] * np.array([w, h, w, h])
                        startX, startY, endX, endY = (int(v) for v in box)
                        bb = (startX, startY, endX - startX, endY - startY)  # (x, y, w, h)

                        # One input queue and one output queue per worker process
                        iq = multiprocessing.Queue()
                        oq = multiprocessing.Queue()
                        inputQueues.append(iq)
                        outputQueues.append(oq)

                        # Daemon process: terminated when the main process exits
                        p = multiprocessing.Process(target=tracker_func, args=(bb, frame, iq, oq))
                        p.daemon = True
                        p.start()

                        cv2.rectangle(frame, (startX, startY), (endX, endY), (0, 255, 0), 2)
        else:
            # Send the frame to every worker, then collect one box from each
            for iq in inputQueues:
                iq.put(frame)
            for oq in outputQueues:
                (startX, startY, endX, endY) = oq.get()
                # Draw
                cv2.rectangle(frame, (startX, startY), (endX, endY), (0, 255, 0), 2)

        cv2.imshow("Frame", frame)
        key = cv2.waitKey(1) & 0xFF
        if key == 27:  # Esc quits
            break
        FPS_IMG.update()

    FPS_IMG.stop()
    print(FPS_IMG.fps())

    # Ask the workers to exit, then release resources
    for iq in inputQueues:
        iq.put(None)
    cap.release()
    cv2.destroyAllWindows()
```
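The pattern in this script is one worker process per tracked object: the main process sends every frame through each worker's input queue and collects one box per worker from its output queue, while each worker builds its own tracker from the first frame and ROI it receives. The sketch below isolates that queue protocol with a hypothetical stand-in `ShiftTracker` (it only moves the box by a fixed offset per frame, it is not KCF) so it runs without OpenCV or a video; `None` is used as a shutdown sentinel and `tracker_worker` is a hypothetical name.

```python
import multiprocessing


class ShiftTracker:
    """Hypothetical stand-in for a real tracker: shifts the box by (dx, dy) per frame."""

    def __init__(self, dx=1, dy=0):
        self.dx, self.dy = dx, dy
        self.box = None

    def init(self, frame, box):
        self.box = tuple(box)  # (x, y, w, h)
        return True

    def update(self, frame):
        x, y, w, h = self.box
        self.box = (x + self.dx, y + self.dy, w, h)
        return True, self.box


def tracker_worker(roi, first_frame, input_queue, output_queue):
    """Track one object: read frames until the None sentinel, emit corner boxes."""
    t = ShiftTracker()
    t.init(first_frame, roi)
    while True:
        frame = input_queue.get()
        if frame is None:  # sentinel: the main process is done
            break
        _, (x, y, w, h) = t.update(frame)
        output_queue.put((int(x), int(y), int(x + w), int(y + h)))


if __name__ == "__main__":
    rois = [(0, 0, 10, 10), (50, 50, 20, 20)]
    workers, in_qs, out_qs = [], [], []
    for roi in rois:
        iq, oq = multiprocessing.Queue(), multiprocessing.Queue()
        p = multiprocessing.Process(target=tracker_worker, args=(roi, None, iq, oq))
        p.start()
        workers.append(p)
        in_qs.append(iq)
        out_qs.append(oq)

    for frame_no in range(3):  # integers stand in for video frames
        for iq in in_qs:
            iq.put(frame_no)
        print([oq.get() for oq in out_qs])

    for iq in in_qs:  # shut the workers down cleanly
        iq.put(None)
    for p in workers:
        p.join()
```

Sending an explicit sentinel and joining the workers lets them exit cleanly; the script above instead relies on `daemon = True`, which terminates the workers when the main process ends.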