[OpenCV] Computing 3D coordinates and measuring distance with stereo vision (updated 6/13)

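Things have been rough lately. Yesterday my machine was hit by the latest Cerber ransomware, which wiped out my files. I had a backup, but it was a week old, so I have been working overtime to catch up.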

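This is the second time I am writing this post. I am rewriting it from memory: the first version was finished, I deleted it by accident, and CSDN chose exactly that moment to autosave. Heartbreaking.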

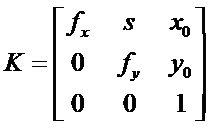

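The camera matrix is composed of the intrinsic matrix and the extrinsic matrix. Factoring it with a QR-type decomposition (strictly speaking, an RQ decomposition of its left 3×3 block) recovers the intrinsic matrix and the extrinsic rotation.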
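As a minimal sketch of that decomposition (not code from the original post): given the left 3×3 block M = K·R of a camera matrix, Gram-Schmidt on the rows of M, working from the last row upward, yields an upper-triangular K with positive diagonal and an R with orthonormal rows. The names `Mat3`, `Row3` and `decomposeKR` are made up for illustration; with OpenCV one would more likely call `cv::RQDecomp3x3` or `cv::decomposeProjectionMatrix`.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Row3 = std::array<double, 3>;
using Mat3 = std::array<Row3, 3>;

static double dot(const Row3& a, const Row3& b) {
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
}

// Split M = K * R, with K upper triangular (positive diagonal) and the rows
// of R orthonormal. Row i of M is a combination of rows i..2 of R, so the
// rows of R are recovered from the bottom up.
void decomposeKR(const Mat3& M, Mat3& K, Mat3& R) {
    K = Mat3{};  // start from all zeros
    for (int i = 2; i >= 0; --i) {
        Row3 v = M[i];
        for (int j = i + 1; j < 3; ++j) {
            K[i][j] = dot(M[i], R[j]);  // component along an already known row of R
            for (int c = 0; c < 3; ++c) v[c] -= K[i][j] * R[j][c];
        }
        K[i][i] = std::sqrt(dot(v, v));  // what is left defines row i of R
        assert(K[i][i] > 0.0 && "M must be non-singular");
        for (int c = 0; c < 3; ++c) R[i][c] = v[c] / K[i][i];
    }
}
```

If the camera matrix is only known up to scale, divide K by `K[2][2]` afterwards so that its bottom-right entry is 1.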

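The intrinsic parameters comprise the focal length, the principal point, the skew coefficient, and the distortion coefficients.

Mat jiaozheng( Mat image ) // jiaozheng = "correct": undistorts one image using a fixed calibration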

{

Size image_size = image.size();

float intrinsic[3][3] = {589.2526583947847,0,321.8607532099886,0,585.7784771038199,251.0338528599469,0,0,1};

float distortion[1][5] = {-0.5284205687061442, 0.3373615384253201, -0.002133029981628697, 0.001511983002864886, -0.1598661778309496};

Mat intrinsic_matrix = Mat(3,3,CV_32FC1,intrinsic);

Mat distortion_coeffs = Mat(1,5,CV_32FC1,distortion);

Mat R = Mat::eye(3,3,CV_32F);

Mat mapx = Mat(image_size,CV_32FC1);

Mat mapy = Mat(image_size,CV_32FC1);

initUndistortRectifyMap(intrinsic_matrix,distortion_coeffs,R,intrinsic_matrix,image_size,CV_32FC1,mapx,mapy);

Mat t = image.clone();

cv::remap( image, t, mapx, mapy, INTER_LINEAR);

return t;

}

Once the images are undistorted, coordinates can be computed. There are two cases:

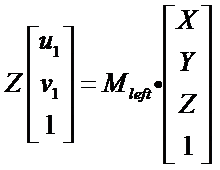

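(1) World coordinates → image coordinates

Given a point with world coordinates (Xw, Yw, Zw), the rotation matrix and translation vector relate the camera coordinate system to the world coordinate system:

where [u v 1]^T is the point in image (pixel) coordinates, [Xc Yc Zc 1]^T is the point in camera coordinates, and K is the camera intrinsic matrix.

Combining these finally gives: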
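The equation images for this step are missing from this copy of the post. As a hedged reconstruction, these are the standard pinhole relations that match the symbols defined above (R and t are the rotation matrix and translation vector; the layout need not match the original figures):

```latex
\begin{aligned}
\begin{bmatrix} X_c \\ Y_c \\ Z_c \\ 1 \end{bmatrix}
&= \begin{bmatrix} R & t \\ 0^{T} & 1 \end{bmatrix}
   \begin{bmatrix} X_w \\ Y_w \\ Z_w \\ 1 \end{bmatrix}, \\[4pt]
Z_c \begin{bmatrix} u \\ v \\ 1 \end{bmatrix}
&= K \begin{bmatrix} I & 0 \end{bmatrix}
   \begin{bmatrix} X_c \\ Y_c \\ Z_c \\ 1 \end{bmatrix}, \\[4pt]
Z_c \begin{bmatrix} u \\ v \\ 1 \end{bmatrix}
&= K \begin{bmatrix} R & t \end{bmatrix}
   \begin{bmatrix} X_w \\ Y_w \\ Z_w \\ 1 \end{bmatrix}.
\end{aligned}
```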

(2) Image coordinates → world coordinates

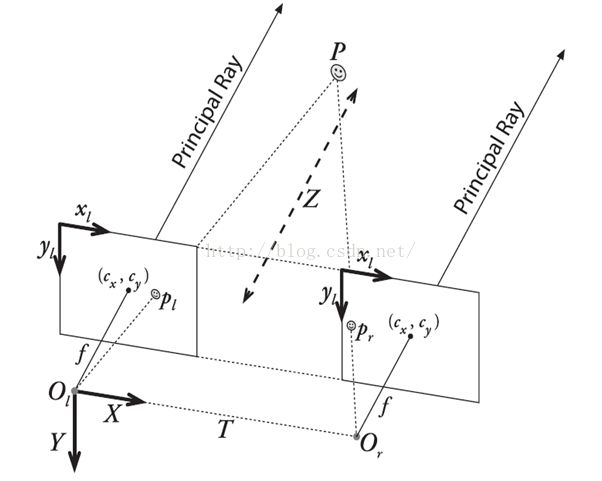

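Converging optical-axes model:

For the two cameras we have, respectively:

In equations (5) and (6), the Z on the left-hand side should read Zc1 and Zc2 respectively.

where

so that (5) and (6) can be written as

In equation (8), the Z on the left-hand side should be Zc1.

In equation (9), the Z on the left-hand side should be Zc2.

Rearranging (8) and (9) gives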
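The equation images for this derivation are also missing here. A hedged sketch of the usual linear triangulation follows, writing M_k = K_k [R_k | t_k] for the 3×4 projection matrix of camera k and m^k_ij for its entries. This is my notation and need not match the original equations (5) to (9). Eliminating Zc1 and Zc2 from the two projection equations leaves four linear equations in the three unknowns:

```latex
\begin{aligned}
Z_{c1}\begin{bmatrix} u_1 \\ v_1 \\ 1 \end{bmatrix}
  &= M_1 \begin{bmatrix} X \\ Y \\ Z \\ 1 \end{bmatrix},
\qquad
Z_{c2}\begin{bmatrix} u_2 \\ v_2 \\ 1 \end{bmatrix}
  = M_2 \begin{bmatrix} X \\ Y \\ Z \\ 1 \end{bmatrix}, \\[6pt]
\underbrace{\begin{bmatrix}
u_1 m^{1}_{31}-m^{1}_{11} & u_1 m^{1}_{32}-m^{1}_{12} & u_1 m^{1}_{33}-m^{1}_{13} \\
v_1 m^{1}_{31}-m^{1}_{21} & v_1 m^{1}_{32}-m^{1}_{22} & v_1 m^{1}_{33}-m^{1}_{23} \\
u_2 m^{2}_{31}-m^{2}_{11} & u_2 m^{2}_{32}-m^{2}_{12} & u_2 m^{2}_{33}-m^{2}_{13} \\
v_2 m^{2}_{31}-m^{2}_{21} & v_2 m^{2}_{32}-m^{2}_{22} & v_2 m^{2}_{33}-m^{2}_{23}
\end{bmatrix}}_{A}
\begin{bmatrix} X \\ Y \\ Z \end{bmatrix}
  &= \underbrace{\begin{bmatrix}
m^{1}_{14}-u_1 m^{1}_{34} \\
m^{1}_{24}-v_1 m^{1}_{34} \\
m^{2}_{14}-u_2 m^{2}_{34} \\
m^{2}_{24}-v_2 m^{2}_{34}
\end{bmatrix}}_{B}, \\[6pt]
\begin{bmatrix} X \\ Y \\ Z \end{bmatrix}
  &= (A^{T}A)^{-1}A^{T}B .
\end{aligned}
```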

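The formulas above follow the comment by wisemanjack (6th reply in the comments).

X, Y and Z are then found by least squares; in OpenCV this can be done with solve(A,B,XYZ,DECOMP_SVD).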
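To make the least-squares step concrete, here is a dependency-free sketch of both directions: projecting a world point with M = K[R|t], and triangulating it back from two pixel observations. It is not the post's `xyz2uv`/`uv2xyz`. All names (`projectionMatrix`, `project`, `triangulate`, and the type aliases) are illustrative, and the 3×3 normal equations AᵀA x = AᵀB are solved with Cramer's rule instead of the SVD-based `solve` mentioned above, purely to avoid needing a linear algebra library.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Vec3  = std::array<double, 3>;
using Mat3  = std::array<Vec3, 3>;
using Mat34 = std::array<std::array<double, 4>, 3>;
using Pixel = std::array<double, 2>;

// M = K * [R | t], the 3x4 projection matrix of one camera.
Mat34 projectionMatrix(const Mat3& K, const Mat3& R, const Vec3& t) {
    Mat34 M{};
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 4; ++j)
            for (int k = 0; k < 3; ++k)
                M[i][j] += K[i][k] * (j < 3 ? R[k][j] : t[k]);
    return M;
}

// World point -> pixel (u, v); distortion is ignored.
Pixel project(const Mat34& M, const Vec3& p) {
    double h[3];
    for (int i = 0; i < 3; ++i)
        h[i] = M[i][0] * p[0] + M[i][1] * p[1] + M[i][2] * p[2] + M[i][3];
    return {h[0] / h[2], h[1] / h[2]};
}

static double det3(const Mat3& m) {
    return m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
         - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
         + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]);
}

// Two pixel observations -> world point, in the least-squares sense.
Vec3 triangulate(const Mat34& M1, const Pixel& uv1,
                 const Mat34& M2, const Pixel& uv2) {
    const Mat34* cams[2] = {&M1, &M2};
    const Pixel* pix[2]  = {&uv1, &uv2};
    double A[4][3], B[4];
    for (int c = 0; c < 2; ++c) {
        const Mat34& M = *cams[c];
        for (int r = 0; r < 2; ++r) {        // r = 0 uses u, r = 1 uses v
            const double w = (*pix[c])[r];
            for (int j = 0; j < 3; ++j) A[2 * c + r][j] = w * M[2][j] - M[r][j];
            B[2 * c + r] = M[r][3] - w * M[2][3];
        }
    }
    Mat3 N{};   // N = A^T A
    Vec3 y{};   // y = A^T B
    for (int i = 0; i < 3; ++i)
        for (int k = 0; k < 4; ++k) {
            y[i] += A[k][i] * B[k];
            for (int j = 0; j < 3; ++j) N[i][j] += A[k][i] * A[k][j];
        }
    const double d = det3(N);
    assert(std::fabs(d) > 0.0 && "degenerate geometry: rays are parallel");
    Vec3 x{};
    for (int c = 0; c < 3; ++c) {            // Cramer's rule, column by column
        Mat3 Nc = N;
        for (int i = 0; i < 3; ++i) Nc[i][c] = y[i];
        x[c] = det3(Nc) / d;
    }
    return x;
}
```

Projecting a point with both cameras and feeding the two pixels back into `triangulate` should return the original point up to rounding, which is a quick sanity check for a set of calibration parameters.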

The code is as follows:

//opencv2.4.9 vs2012

#include <opencv2/opencv.hpp>

#include <fstream>

#include <iostream>

using namespace std;

using namespace cv;

Point2f xyz2uv(Point3f worldPoint,float intrinsic[3][3],float translation[1][3],float rotation[3][3]);

Point3f uv2xyz(Point2f uvLeft,Point2f uvRight);

// number of image pairs

int PicNum = 14;

// left camera intrinsic matrix

float leftIntrinsic[3][3] = {4037.82450, 0, 947.65449,

0, 3969.79038, 455.48718,

0, 0, 1};

// left camera distortion coefficients

float leftDistortion[1][5] = {0.18962, -4.05566, -0.00510, 0.02895, 0};

// left camera rotation matrix

float leftRotation[3][3] = {0.912333, -0.211508, 0.350590,

0.023249, -0.828105, -0.560091,

0.408789, 0.519140, -0.750590};

// left camera translation vector

float leftTranslation[1][3] = {-127.199992, 28.190639, 1471.356768};

// right camera intrinsic matrix

float rightIntrinsic[3][3] = {3765.83307, 0, 339.31958,

0, 3808.08469, 660.05543,

0, 0, 1};

// right camera distortion coefficients

float rightDistortion[1][5] = {-0.24195, 5.97763, -0.02057, -0.01429, 0};

// right camera rotation matrix

float rightRotation[3][3] = {-0.134947, 0.989568, -0.050442,

0.752355, 0.069205, -0.655113,

-0.644788, -0.126356, -0.753845};

// right camera translation vector

float rightTranslation[1][3] = {50.877397, -99.796492, 1507.312197};

int main()

{

// compute image coordinates from known spatial coordinates

Point3f point(700,220,530);

cout<<"Coordinates in the left camera: "<

Addendum, 2017/5/26

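Calibration with MATLAB or OpenCV places the world coordinate system at the left camera, so the corresponding values in the code above become:

// left camera rotation matrix

float leftRotation[3][3] = {1,0,0, 0,1,0, 0,0,1 };

// left camera translation vector

float leftTranslation[1][3] = {0,0,0};

Distortion coefficients (five are returned by default: k1, k2, p1, p2, k3)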
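For reference, this is how those five coefficients act on a point under the radial-plus-tangential model documented for OpenCV's calibration module. It is a sketch with illustrative names (`Point2`, `distortPoint`, `toPixel`); the input is a normalized image point (x, y) = (Xc/Zc, Yc/Zc), and undistortion is the inverse of this mapping.

```cpp
#include <array>
#include <cassert>

using Point2 = std::array<double, 2>;

// Apply (k1, k2, p1, p2, k3) to a normalised image point (x, y).
Point2 distortPoint(double x, double y, const std::array<double, 5>& d) {
    const double k1 = d[0], k2 = d[1], p1 = d[2], p2 = d[3], k3 = d[4];
    const double r2 = x * x + y * y;
    // radial factor 1 + k1 r^2 + k2 r^4 + k3 r^6
    const double radial = 1.0 + r2 * (k1 + r2 * (k2 + r2 * k3));
    // tangential terms use p1 and p2
    const double xd = x * radial + 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x * x);
    const double yd = y * radial + p1 * (r2 + 2.0 * y * y) + 2.0 * p2 * x * y;
    return {xd, yd};
}

// Map a (distorted) normalised point to pixel coordinates, assuming zero skew.
Point2 toPixel(const Point2& p, double fx, double fy, double cx, double cy) {
    assert(fx > 0.0 && fy > 0.0);
    return {fx * p[0] + cx, fy * p[1] + cy};
}
```

With all five coefficients set to zero, `distortPoint` returns its input unchanged, and the principal point (0, 0) is never moved by the model.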

——————————————————————————————————————————————————————————————

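A second model for going from image coordinates to world coordinates is the parallel optical-axes model.

Stereo 3D measurement is based on the disparity principle:

Here all parameters except cx' come from the left image; cx' is the x coordinate of the principal point in the right image. If the principal rays intersect at infinity, then cx = cx' and the bottom-right term is 0. Given a 2D homogeneous point and its associated disparity d, we can project the point into 3D:

The 3D coordinates are then (X / W, Y / W, Z / W).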
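The equation images are missing from this copy. Going by the description above, the matrix in question is presumably the reprojection matrix Q that OpenCV's stereoRectify documents, where f is the focal length, (c_x, c_y) the left principal point, and T_x the x component of the translation between the two cameras (these symbol names are my assumption):

```latex
Q = \begin{bmatrix}
1 & 0 & 0 & -c_x \\
0 & 1 & 0 & -c_y \\
0 & 0 & 0 & f \\
0 & 0 & -\dfrac{1}{T_x} & \dfrac{c_x - c'_x}{T_x}
\end{bmatrix},
\qquad
Q \begin{bmatrix} x \\ y \\ d \\ 1 \end{bmatrix}
= \begin{bmatrix} X \\ Y \\ Z \\ W \end{bmatrix},
\qquad
\left.\frac{Z}{W}\right|_{c_x = c'_x} = -\frac{f\,T_x}{d}.
```

With c_x = c'_x the depth magnitude reduces to the familiar f·B/d, where B = |T_x| is the baseline.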

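The parallel optical-axes model requires a disparity map; see http://blog.csdn.net/wangchao7281/article/details/52506691?locationNum=7

The OpenCV sample can serve as a reference; for how to use it, see http://blog.csdn.net/t247555529/article/details/48046859

Once the disparity map is available, cvReprojectImageTo3D can be called to output the 3D coordinates.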
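What the reprojection does for a single pixel can be sketched without OpenCV: multiply (x, y, d, 1) by the 4×4 matrix Q and divide by W. The helper names `makeQ` and `reprojectPixel` are hypothetical, and `makeQ` assumes the Q layout documented for stereoRectify; in real code Q comes straight from stereoRectify.

```cpp
#include <array>
#include <cassert>

using Mat4 = std::array<std::array<double, 4>, 4>;

// Build Q from the focal length f, the left principal point (cx, cy), the
// right principal point x-coordinate cxRight, and Tx, the x component of
// the translation between the cameras (assumed stereoRectify layout).
Mat4 makeQ(double f, double cx, double cy, double cxRight, double Tx) {
    assert(Tx != 0.0);
    Mat4 Q{};
    Q[0][0] = 1.0;       Q[0][3] = -cx;
    Q[1][1] = 1.0;       Q[1][3] = -cy;
    Q[2][3] = f;
    Q[3][2] = -1.0 / Tx; Q[3][3] = (cx - cxRight) / Tx;
    return Q;
}

// [X Y Z W]^T = Q * [x y d 1]^T, returned as (X/W, Y/W, Z/W).
// d must be the true disparity in pixels.
std::array<double, 3> reprojectPixel(const Mat4& Q, double x, double y, double d) {
    const double in[4] = {x, y, d, 1.0};
    double out[4] = {0.0, 0.0, 0.0, 0.0};
    for (int i = 0; i < 4; ++i)
        for (int j = 0; j < 4; ++j) out[i] += Q[i][j] * in[j];
    assert(out[3] != 0.0 && "zero disparity maps to infinity");
    return {out[0] / out[3], out[1] / out[3], out[2] / out[3]};
}
```

For example, with f = 800 px, cx = cxRight = 320, cy = 240 and Tx = -40 mm, the pixel (330, 240) with a disparity of 10 px maps to roughly (40, 0, 3200) mm.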

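Many people get z = 10000 when they print the 3D coordinates. That is a sign the value is being read out the wrong way; it should be done like this: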

Point p;

p.x =294,p.y=189;

cout << xyz.at<Vec3f>(p) * 16 << endl;

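Why multiply by 16?

In OpenCV 2.0 the BM function stores its result as 16-bit signed integers, and for the sake of precision every disparity is scaled up by a factor of 16 (2^4) on output. The code that does this is: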

dptr[y*dstep] = (short)(((ndisp - mind - 1 + mindisp)*256 + (d != 0 ? (p-n)*128/d : 0) + 15) >> 4);

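As you can see, the raw disparity is shifted left by 8 bits (×256), a correction term is added, and the result is then shifted right by 4 bits, for a net left shift of 4 bits.

So when computing actual distances, the X/W, Y/W and Z/W returned by cvReprojectImageTo3D must all be multiplied by 16 (equivalently, W divided by 16) to obtain the correct 3D coordinates.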
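The same correction can be applied on the disparity side instead: divide the raw fixed-point value by 16 to get pixels, then use Z = f·B/d. A minimal sketch with hypothetical helper names, assuming the parallel model with cx = cx', the focal length in pixels, and the baseline in the unit you want the depth in:

```cpp
#include <cassert>

// Raw StereoBM output is fixed point with 4 fractional bits.
double disparityInPixels(short raw) {
    return raw / 16.0;
}

// Depth along the optical axis for the parallel model: Z = f * B / d.
double depthFromRawDisparity(short raw, double focalPx, double baseline) {
    assert(raw > 0 && "zero or negative disparity has no finite depth");
    return focalPx * baseline / disparityInPixels(raw);
}
```

Using the raw value directly as d gives a depth that is 16 times too small, which is exactly the factor the multiplication by 16 restores.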

Below is distance measurement with this method implemented under OpenCV 3.0 (reposted).

/******************************/

/* Stereo matching and ranging */

/******************************/

#include <opencv2/opencv.hpp>

#include <iostream>

using namespace std;

using namespace cv;

const int imageWidth = 640; // camera resolution

const int imageHeight = 480;

Size imageSize = Size(imageWidth, imageHeight);

Mat rgbImageL, grayImageL;

Mat rgbImageR, grayImageR;

Mat rectifyImageL, rectifyImageR;

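Rect validROIL; // rectification crops the image; validROI is the region that remains valid afterwards

Rect validROIR;

Mat mapLx, mapLy, mapRx, mapRy; // remap tables

Mat Rl, Rr, Pl, Pr, Q; // rectification rotations R, projection matrices P, reprojection matrix Q

Mat xyz; // 3D coordinates

Point origin; // point where the mouse button was pressed

Rect selection; // selection rectangle

bool selectObject = false; // whether a selection is in progress

int blockSize = 0, uniquenessRatio =0, numDisparities=0;

Ptr<StereoBM> bm = StereoBM::create(16, 9);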

/*

Camera parameters from a prior calibration

fx 0 cx

0 fy cy

0 0 1

*/

Mat cameraMatrixL = (Mat_<double>(3, 3) << 836.771593170594,0,319.970748854743,

0,839.416501863912,228.788913693256,

0, 0, 1);

Mat distCoeffL = (Mat_<double>(5, 1) << 0, 0, 0, 0, 0);

Mat cameraMatrixR = (Mat_<double>(3, 3) << 838.101721655709,0,319.647150557935,

0,840.636812165056,250.655818405938,

0, 0, 1);

Mat distCoeffR = (Mat_<double>(5, 1) << 0, 0, 0, 0, 0);

Mat T = (Mat_<double>(3, 1) << -39.7389449993974,0.0740619639178984,0.411914303245886); // T: translation vector

Mat rec = (Mat_<double>(3, 1) << -0.00306, -0.03207, 0.00206); // rec: rotation vector

Mat R = (Mat_<double>(3, 3) << 0.999957725513956,-0.00103511880221423,0.00913650447492805,

0.00114462826834523,0.999927476064641,-0.0119888463633882,

-0.00912343197938050,0.0119987974423658,0.999886389470751); // R: rotation matrix

/***** Stereo matching *****/

void stereo_match(int,void*)

{

bm->setBlockSize(2*blockSize+5); // SAD window size; values between 5 and 21 work well

bm->setROI1(validROIL);

bm->setROI2(validROIR);

bm->setPreFilterCap(31);

bm->setMinDisparity(0); // minimum disparity (int); default 0, may be negative

bm->setNumDisparities(numDisparities*16+16); // disparity range, i.e. max disparity minus min disparity; must be a multiple of 16 (int)

bm->setTextureThreshold(10);

bm->setUniquenessRatio(uniquenessRatio); // uniquenessRatio mainly helps reject false matches

bm->setSpeckleWindowSize(100);

bm->setSpeckleRange(32);

bm->setDisp12MaxDiff(-1);

Mat disp, disp8;

bm->compute(rectifyImageL, rectifyImageR, disp); // input images must be grayscale

disp.convertTo(disp8, CV_8U, 255 / ((numDisparities * 16 + 16)*16.)); // the computed disparity is CV_16S

reprojectImageTo3D(disp, xyz, Q, true); // for real distances, the X/W, Y/W, Z/W from reprojectImageTo3D must be multiplied by 16 (i.e. W divided by 16) to get correct 3D coordinates

xyz = xyz * 16;

imshow("disparity", disp8);

}

/***** Mouse callback *****/

static void onMouse(int event, int x, int y, int, void*)

{

if (selectObject)

{

selection.x = MIN(x, origin.x);

selection.y = MIN(y, origin.y);

selection.width = std::abs(x - origin.x);

selection.height = std::abs(y - origin.y);

}

switch (event)

{

case EVENT_LBUTTONDOWN: // left mouse button pressed

origin = Point(x, y);

selection = Rect(x, y, 0, 0);

selectObject = true;

cout << origin <<"in world coordinate is: " << xyz.at<Vec3f>(origin) << endl;

break;

case EVENT_LBUTTONUP: // left mouse button released

selectObject = false;

if (selection.width > 0 && selection.height > 0)

break;

}

}

/***** Main *****/

int main()

{

/*

Stereo rectification

*/

//Rodrigues(rec, R); // Rodrigues transform

stereoRectify(cameraMatrixL, distCoeffL, cameraMatrixR, distCoeffR, imageSize, R, T, Rl, Rr, Pl, Pr, Q, CALIB_ZERO_DISPARITY,

0, imageSize, &validROIL, &validROIR);

initUndistortRectifyMap(cameraMatrixL, distCoeffL, Rl, Pl, imageSize, CV_32FC1, mapLx, mapLy); // the left maps use Pl, the left projection matrix

initUndistortRectifyMap(cameraMatrixR, distCoeffR, Rr, Pr, imageSize, CV_32FC1, mapRx, mapRy);

/*

Read the images

*/

rgbImageL = imread("左2.jpg", CV_LOAD_IMAGE_COLOR);

cvtColor(rgbImageL, grayImageL, CV_BGR2GRAY);

rgbImageR = imread("右2.jpg", CV_LOAD_IMAGE_COLOR);

cvtColor(rgbImageR, grayImageR, CV_BGR2GRAY);

imshow("ImageL Before Rectify", grayImageL);

imshow("ImageR Before Rectify", grayImageR);

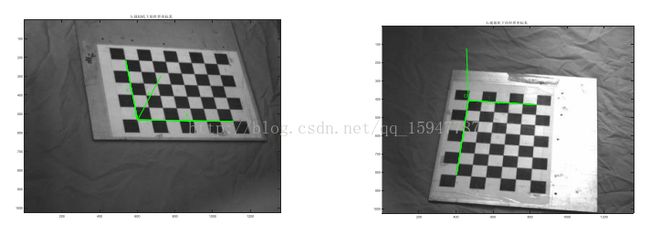

/*

After remap, the left and right images are coplanar and row-aligned

*/

remap(grayImageL, rectifyImageL, mapLx, mapLy, INTER_LINEAR);

remap(grayImageR, rectifyImageR, mapRx, mapRy, INTER_LINEAR);

/*

Show the rectified result

*/

Mat rgbRectifyImageL, rgbRectifyImageR;

cvtColor(rectifyImageL, rgbRectifyImageL, CV_GRAY2BGR); // 3-channel copy of the gray image (pseudo-color)

cvtColor(rectifyImageR, rgbRectifyImageR, CV_GRAY2BGR);

// show each image separately

//rectangle(rgbRectifyImageL, validROIL, Scalar(0, 0, 255), 3, 8);

//rectangle(rgbRectifyImageR, validROIR, Scalar(0, 0, 255), 3, 8);

imshow("ImageL After Rectify", rgbRectifyImageL);

imshow("ImageR After Rectify", rgbRectifyImageR);

// show both on a single canvas

Mat canvas;

double sf;

int w, h;

sf = 600. / MAX(imageSize.width, imageSize.height);

w = cvRound(imageSize.width * sf);

h = cvRound(imageSize.height * sf);

canvas.create(h, w * 2, CV_8UC3); // mind the channel count

// draw the left image onto the canvas

Mat canvasPart = canvas(Rect(w * 0, 0, w, h)); // left half of the canvas

resize(rgbRectifyImageL, canvasPart, canvasPart.size(), 0, 0, INTER_AREA); // scale the image to the size of canvasPart

Rect vroiL(cvRound(validROIL.x*sf), cvRound(validROIL.y*sf), // the valid (cropped) region

cvRound(validROIL.width*sf), cvRound(validROIL.height*sf));

//rectangle(canvasPart, vroiL, Scalar(0, 0, 255), 3, 8); // draw a rectangle

cout << "Painted ImageL" << endl;

// draw the right image onto the canvas

canvasPart = canvas(Rect(w, 0, w, h)); // the other half of the canvas

resize(rgbRectifyImageR, canvasPart, canvasPart.size(), 0, 0, INTER_LINEAR);

Rect vroiR(cvRound(validROIR.x * sf), cvRound(validROIR.y*sf),

cvRound(validROIR.width * sf), cvRound(validROIR.height * sf));

//rectangle(canvasPart, vroiR, Scalar(0, 0, 255), 3, 8);

cout << "Painted ImageR" << endl;

// draw horizontal lines to check row alignment

for (int i = 0; i < canvas.rows; i += 16)

line(canvas, Point(0, i), Point(canvas.cols, i), Scalar(0, 255, 0), 1, 8);

imshow("rectified", canvas);

/*

Stereo matching

*/

namedWindow("disparity", CV_WINDOW_AUTOSIZE);

// trackbar for the SAD window size

createTrackbar("BlockSize:\n", "disparity",&blockSize, 8, stereo_match);

// trackbar for the uniqueness ratio

createTrackbar("UniquenessRatio:\n", "disparity", &uniquenessRatio, 50, stereo_match);

// trackbar for the disparity range

createTrackbar("NumDisparities:\n", "disparity", &numDisparities, 16, stereo_match);

// mouse handler: setMouseCallback(window name, callback, user data passed to the callback, usually 0)

setMouseCallback("disparity", onMouse, 0);

stereo_match(0,0);

waitKey(0);

return 0;

}

————————————————————————————————————————

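Finally, here is the complete code for the course project. I don't think I wrote it particularly well; it was a bit of a rush job.

Running the program processes the images in the source-material folder and creates three folders, "大球圆心" (large-ball centers), "畸变校正" (distortion correction) and "亮度对比度" (brightness/contrast), plus a CSV file named "三维坐标.csv" (3D coordinates). The CSV records the image coordinates of the balls in the left and right cameras and the spatial coordinates solved from them. By default the program overwrites every automatically computed circle center with a hand-assigned value. To change the centers, edit the assignments in initPos() in the project; to let the program compute them automatically, comment out that call in the main function, although the automatically detected centers are not accurate.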

//opencv2.4.9 vs2012

#include <opencv2/opencv.hpp>

#include <fstream>

#include <iostream>

using namespace std;

using namespace cv;

// number of image pairs

#define PicNum 14

// left camera intrinsic matrix

float leftIntrinsic[3][3] = {4037.82450, 0, 947.65449,

0, 3969.79038, 455.48718,

0, 0, 1};

// left camera distortion coefficients

float leftDistortion[1][5] = {0.18962, -4.05566, -0.00510, 0.02895, 0};

// left camera rotation matrix

float leftRotation[3][3] = {0.912333, -0.211508, 0.350590,

0.023249, -0.828105, -0.560091,

0.408789, 0.519140, -0.750590};

// left camera translation vector

float leftTranslation[1][3] = {-127.199992, 28.190639, 1471.356768};

// right camera intrinsic matrix

float rightIntrinsic[3][3] = {3765.83307, 0, 339.31958,

0, 3808.08469, 660.05543,

0, 0, 1};

// right camera distortion coefficients

float rightDistortion[1][5] = {-0.24195, 5.97763, -0.02057, -0.01429, 0};

// right camera rotation matrix

float rightRotation[3][3] = {-0.134947, 0.989568, -0.050442,

0.752355, 0.069205, -0.655113,

-0.644788, -0.126356, -0.753845};

// right camera translation vector

float rightTranslation[1][3] = {50.877397, -99.796492, 1507.312197};

// ball coordinate arrays

// large ball

float rightDaqiu[PicNum][2] = {0};

float leftDaqiu[PicNum][2] = {0};

float worldDaqiu[PicNum][3] = {0};

// small ball

float rightXiaoqiu[PicNum][2] = {0};

float leftXiaoqiu[PicNum][2] = {0};

float worldXiaoqiu[PicNum][3] = {0};

// patterned ball

float rightHuaqiu[PicNum][2] = {0};

float leftHuaqiu[PicNum][2] = {0};

float worldHuaqiu[PicNum][3] = {0};

void ContrastAndBright(double alpha, double beta); // adjust brightness/contrast

void CorrectionProcess(); // undistort the source images

void initPos(); // manually assign the balls' image coordinates

void Daqiu(); // compute the large ball's image coordinates

Mat PictureCorrection( Mat image ,float intrinsic[3][3],float distortion[1][5]); // undistort a single image

Point2f xyz2uv(Point3f worldPoint,float intrinsic[3][3],float translation[1][3],float rotation[3][3]); // world coordinates to image coordinates

Point3f uv2xyz(Point2f uvLeft,Point2f uvRight); // image coordinates to world coordinates

int main()

{

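CorrectionProcess(); // undistort the source images

ContrastAndBright(2.5,50); // adjust brightness/contrast

Daqiu(); // automatically compute the large ball's coordinates

initPos(); // manual override; edit this function to check other data

// solve for the large ball's spatial coordinates

cout<<"Solving for the large ball's world coordinates: "<

dst.at<Vec3b>(i,j)[k] = saturate_cast<uchar>(src.at<Vec3b>(i,j)[k]*alpha+beta);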

char* output = new char[100];

sprintf(output,"亮度对比度/rightky1/r%d.bmp",ii);

imwrite(output,dst); // save the adjusted images in sequence

delete []output; // free the string buffer

}

// left camera adjustment

// create the output directory if it does not exist

system("md 亮度对比度\\leftky1");

for (int ii=1; ii<=PicNum; ii++)

{

cout<<"Left: image "<

dst.at<Vec3b>(i,j)[k] = saturate_cast<uchar>(src.at<Vec3b>(i,j)[k]*alpha+beta);

char* output = new char[100];

sprintf(output,"亮度对比度/leftky1/l%d.bmp",ii);

imwrite(output,dst); // save the adjusted images in sequence

delete []output; // free the string buffer

}

cout<<"**********************************************"<> strFileName;

strFileName = "亮度对比度/rightky1/r" + strFileName + ".bmp";

img = imread(strFileName,IMREAD_GRAYSCALE);

GaussianBlur(img,img,Size(5,5),0);

vector<Vec3f> circles;

HoughCircles( img, circles, CV_HOUGH_GRADIENT, 3 ,70, 70, 30, 95 ,100); // Hough circle transform

Point2f center(0,0);

float radius;

for (int j = 0; j < circles.size(); j++)

{

if (circles[j][0] > center.x && circles[j][0] < img.cols/2)

{

center.x = circles[j][0];

center.y = circles[j][1];

radius = circles[j][2]; // radius

}

}

rightDaqiu[i-1][0] = center.x;

rightDaqiu[i-1][1] = center.y;

CvScalar color = CV_RGB(0,0,0);

circle( img, (Point)center, radius, color, 3, 8, 0); // draw the circle

circle( img, (Point)center, 3, color, 3, 8, 0); // draw the center

cout<<"Center: "<> strFileName;

strFileName = "亮度对比度/leftky1/l" + strFileName + ".bmp";

img = imread(strFileName,IMREAD_GRAYSCALE);

GaussianBlur(img,img,Size(5,5),0);

vector<Vec3f> circles;

HoughCircles( img, circles, CV_HOUGH_GRADIENT, 3 ,70, 30, 50, 100 ,110); // Hough circle transform

Point2f center(0,1024);

float radius;

for (int j = 0; j < circles.size(); j++)

{

if (circles[j][1]

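Running the following MATLAB script reads the spatial coordinates from the generated "三维坐标.csv" and plots the trajectories. Note that editing the circle centers directly in "三维坐标.csv" has no effect; they must be changed in initPos() in the project.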

clc;clear;

M = csvread('三维坐标.csv',3,6,[3,6,16,8]);

x = M(:,1);

y = M(:,2);

z = M(:,3);

plot3(x,y,z,'r');

%legend('large ball');

hold on

M = csvread('三维坐标.csv',20,6,[20,6,33,8]);

x = M(:,1);

y = M(:,2);

z = M(:,3);

plot3(x,y,z,'g');

%legend('small ball');

hold on

M = csvread('三维坐标.csv',37,6,[37,6,50,8]);

x = M(:,1);

y = M(:,2);

z = M(:,3);

plot3(x,y,z,'b');

%legend('patterned ball');

hold on

legend('large ball','small ball','patterned ball');

title('Ball trajectories');

xlabel('x');

ylabel('y');

zlabel('z');

grid on

axis square

The resulting trajectory plot

And one more figure, showing the world coordinate system