Working Through the Numbers: Word2Vec (2)

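Abstract:

In the previous article we walked through the computation for SkipGram with hierarchical softmax in Word2Vec in detail. In this article we walk through the computation for SkipGram with negative sampling.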

Training parameters

SkipGram & Negative Sampling training process

Preparation:

As with hierarchical softmax, we first need to initialize the word vectors:

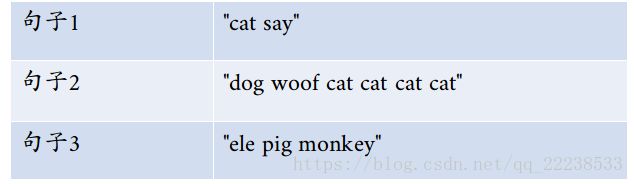

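Next, the training samples are constructed exactly as in the previous article, as follows:

About negative sampling:

Before discussing negative sampling, consider the training sample $\{cat, say\}$: when the input is $cat$, the output is $say$. In other words, the output $say$ counts as the positive sample, while any other output word, such as $dog$, $woof$, $ele$, $pig$, or $monkey$, counts as a negative sample.

When the vocabulary is very large, computing over every negative sample becomes extremely expensive. The core idea of negative sampling is to draw only a few words (the number is a configurable parameter) as negative samples each time. One of the simplest negative sampling schemes is as follows:

Then, we lay the words out as consecutive segments on a line of length 1, accumulating in order, with each segment's length given by the word's frequency:

With this in place, we can generate a random number in $[0, 1]$ and pick the word whose segment it falls in. The higher a word's frequency, the more likely it is to be drawn.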
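The segment-based draw described above can be sketched in a few lines. This is only an illustration under stated assumptions: the word counts are hypothetical (the article's own frequencies are not reproduced here), the helper names `build_cumulative` and `sample_negatives` are made up for this sketch, and the choice to draw distinct words and to skip both the input word and the positive word is an assumption that matches the $\{cat, say\}$ example.

```python
import bisect
import itertools
import random

def build_cumulative(freqs):
    """Return the words and the right edge of each word's segment on [0, 1]."""
    words = list(freqs)
    total = sum(freqs.values())
    edges = list(itertools.accumulate(freqs[w] / total for w in words))
    edges[-1] = 1.0  # guard against floating-point drift in the last edge
    return words, edges

def sample_negatives(words, edges, exclude, k, rng):
    """Draw k distinct words by segment lookup, skipping anything in `exclude`."""
    picked = []
    while len(picked) < k:
        r = rng.random()  # uniform in [0, 1)
        word = words[bisect.bisect_right(edges, r)]  # segment containing r
        if word not in exclude and word not in picked:
            picked.append(word)
    return picked

# Hypothetical counts, chosen only for illustration.
counts = {"cat": 2, "say": 6, "dog": 2, "woof": 1, "ele": 1, "pig": 1, "monkey": 1}
words, edges = build_cumulative(counts)
negatives = sample_negatives(words, edges, exclude={"cat", "say"}, k=2,
                             rng=random.Random(0))
print(negatives)
```

A binary search over the cumulative edges keeps each draw at O(log n) in the vocabulary size, which is the practical reason for laying the words out on a single line first.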

Finally, following the referenced article, we can derive the algorithm flowchart below:

We first briefly explain the parameters in the flowchart (some were already explained in the previous article).

1. $Negative$ is the number of negative samples to draw. In this article's example the vocabulary has 7 words; with $Negative$ set to 2, two words are drawn as negative samples each time.

2. $Neg\{U\}^{Negative}$ means drawing negative samples from the words other than $U$, with $Negative$ samples in total.

For example, for the training sample $\{cat, say\}$, $Neg\{say\}^{2}$ draws 2 words from $dog$, $woof$, $ele$, $pig$, $monkey$ as negative samples.

3. $get\_index\{d\}$ simply looks up the index of word $d$. The computation below shows how it is used.

4. $Label\{d\}$ is the label of word $d$.

For example, for the training sample $\{cat, say\}$, $Label\{say\}$ is 1. When the drawn negative samples are $Neg\{say\}^{2} = \{dog, woof\}$, we have

$Label\{dog\} = 0$, $Label\{woof\} = 0$.
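The numbered steps that the computation section walks through can be collected into one function. This is a minimal sketch, not the reference word2vec implementation: the names `train_pair` and `index_of`, and the 2-dimensional toy vectors and learning rate in the usage lines, are assumptions made for illustration.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def train_pair(V, theta, w, target, negatives, index_of, eta):
    """One SkipGram + negative-sampling update for input word w (in place)."""
    v = V[w]
    e = [0.0] * len(v)                                        # step 1
    samples = [(target, 1)] + [(u, 0) for u in negatives]     # Label{d}
    for d, label in samples:
        idx = index_of[d]                                     # get_index{d}
        th = theta[idx]
        f = sigmoid(sum(a * b for a, b in zip(v, th)))        # step 2
        g = eta * (label - f)                                 # step 3
        e = [ei + g * t for ei, t in zip(e, th)]              # step 4, uses theta before its update
        theta[idx] = [t + g * a for t, a in zip(th, v)]       # step 5
    V[w] = [a + ei for a, ei in zip(v, e)]                    # step 7
    return e

# Toy usage with hypothetical 2-dimensional vectors.
V = {"cat": [0.1, -0.2]}
theta = [[0.0, 0.0] for _ in range(3)]
index_of = {"cat": 0, "say": 1, "dog": 2}
e = train_pair(V, theta, "cat", "say", ["dog"], index_of, eta=0.5)
print(theta[1], theta[2], e)
```

Note that the word vector itself is only updated once, after the positive sample and all negative samples have contributed to `e`.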

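Computation

Even after all the groundwork above, some points may still be unclear; the computation below should make them concrete.

We first initialize the matrix of auxiliary vectors $\theta_{index}$: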

| word | $\theta_{index}$ | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|---|
| $cat$ | $\theta_0$ | 0 | 0 | 0 | 0 | 0 |
| $say$ | $\theta_1$ | 0 | 0 | 0 | 0 | 0 |
| $dog$ | $\theta_2$ | 0 | 0 | 0 | 0 | 0 |
| $woof$ | $\theta_3$ | 0 | 0 | 0 | 0 | 0 |
| $ele$ | $\theta_4$ | 0 | 0 | 0 | 0 | 0 |
| $pig$ | $\theta_5$ | 0 | 0 | 0 | 0 | 0 |
| $monkey$ | $\theta_6$ | 0 | 0 | 0 | 0 | 0 |

First training pass:

Step 0: At this point,

$w = cat$

$Context(w) = \{say\}$

$V(cat) = [0.054, -0.090, -0.038, 0.063, -0.015]$

Step 1:

Initialize the vector $e = [0, 0, 0, 0, 0]$

Step 2:

First, compute the positive sample $say$:

$index = get\_index\{say\} = 1$

$Label\{say\} = 1$

$f = \sigma(V(w)^T \theta_{index})$ ==>

$\sigma(V(cat)^T \theta_1) = \sigma(0.054 \times 0 - 0.090 \times 0 - 0.038 \times 0 + 0.063 \times 0 - 0.015 \times 0) = 0.5$

Step 3:

$g = \eta(Label\{say\} - f) = 0.95 \times (1 - 0.5) = 0.475$

Step 4:

$e = e + g\theta_{index}$ ==>

$e = e + g\theta_1 = [0, 0, 0, 0, 0] + 0.475 \times [0, 0, 0, 0, 0] = [0, 0, 0, 0, 0]$

Step 5:

$\theta_{index} = \theta_{index} + gV(w)$ ==>

$\theta_1 = \theta_1 + gV(cat) = [0, 0, 0, 0, 0] + 0.475 \times [0.054, -0.090, -0.038, 0.063, -0.015] = [0.026, -0.043, -0.018, 0.030, -0.007]$
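The shape of steps 3 to 5 follows from gradient ascent on the per-sample log-likelihood. The derivation below is the standard one for a logistic output and is offered as a sketch of where the updates come from; the symbol $\ell$ and the shorthand $L = Label\{d\}$ are introduced here only for convenience.

$$
\ell = L \log \sigma\bigl(V(w)^T \theta\bigr) + (1 - L) \log\bigl(1 - \sigma(V(w)^T \theta)\bigr)
$$

Using $\sigma'(x) = \sigma(x)(1 - \sigma(x))$ and writing $f = \sigma(V(w)^T \theta)$:

$$
\frac{\partial \ell}{\partial \theta} = (L - f)\, V(w), \qquad \frac{\partial \ell}{\partial V(w)} = (L - f)\, \theta
$$

With learning rate $\eta$, setting $g = \eta (L - f)$ gives the two updates $\theta \leftarrow \theta + g V(w)$ and $e \leftarrow e + g \theta$, where $e$ accumulates the change that is applied to $V(w)$ at the end.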

Step 6:

Having computed the positive sample, the setting $Negative = 2$ means we need to draw two words as negative samples. Here we assume the drawn words are $Neg\{say\}^{2} = \{dog, woof\}$.

Step 2: (compute the negative sample $dog$)

$index = get\_index\{dog\} = 2$

$Label\{dog\} = 0$

$f = \sigma(V(w)^T \theta_{index})$ ==>

$\sigma(V(cat)^T \theta_2) = \sigma(0.054 \times 0 - 0.090 \times 0 - 0.038 \times 0 + 0.063 \times 0 - 0.015 \times 0) = 0.5$

Step 3:

$g = \eta(Label\{dog\} - f) = 0.95 \times (0 - 0.5) = -0.475$

Step 4:

$e = e + g\theta_{index}$ ==>

$e = e + g\theta_2 = [0, 0, 0, 0, 0] - 0.475 \times [0, 0, 0, 0, 0] = [0, 0, 0, 0, 0]$

Step 5:

$\theta_{index} = \theta_{index} + gV(w)$ ==>

$\theta_2 = \theta_2 + gV(cat) = [0, 0, 0, 0, 0] - 0.475 \times [0.054, -0.090, -0.038, 0.063, -0.015] = [-0.026, 0.043, 0.018, -0.030, 0.007]$

That is one negative sample done; one more remains:

Step 2: (compute the negative sample $woof$)

$index = get\_index\{woof\} = 3$

$Label\{woof\} = 0$

$f = \sigma(V(w)^T \theta_{index})$ ==>

$\sigma(V(cat)^T \theta_3) = \sigma(0.054 \times 0 - 0.090 \times 0 - 0.038 \times 0 + 0.063 \times 0 - 0.015 \times 0) = 0.5$

Step 3:

$g = \eta(Label\{woof\} - f) = 0.95 \times (0 - 0.5) = -0.475$

Step 4:

$e = e + g\theta_{index}$ ==>

$e = e + g\theta_3 = [0, 0, 0, 0, 0] - 0.475 \times [0, 0, 0, 0, 0] = [0, 0, 0, 0, 0]$

Step 5:

$\theta_{index} = \theta_{index} + gV(w)$ ==>

$\theta_3 = \theta_3 + gV(cat) = [0, 0, 0, 0, 0] - 0.475 \times [0.054, -0.090, -0.038, 0.063, -0.015] = [-0.026, 0.043, 0.018, -0.030, 0.007]$

Step 7: Update the word vector $V(w)$

$V(w) = V(w) + e$ ==>

$V(w) = [0.054, -0.090, -0.038, 0.063, -0.015] + [0, 0, 0, 0, 0] = [0.054, -0.090, -0.038, 0.063, -0.015]$

Only at this point is training on the sample $\{cat, say\}$ complete.

Here is a brief summary of the training results so far.

For the auxiliary vectors $\theta_{index}$:

| word | $\theta_{index}$ | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|---|
| $cat$ | $\theta_0$ | 0 | 0 | 0 | 0 | 0 |
| $say$ | $\theta_1$ | 0.026 | -0.043 | -0.018 | 0.030 | -0.007 |
| $dog$ | $\theta_2$ | -0.026 | 0.043 | 0.018 | -0.030 | 0.007 |
| $woof$ | $\theta_3$ | -0.026 | 0.043 | 0.018 | -0.030 | 0.007 |
| $ele$ | $\theta_4$ | 0 | 0 | 0 | 0 | 0 |
| $pig$ | $\theta_5$ | 0 | 0 | 0 | 0 | 0 |
| $monkey$ | $\theta_6$ | 0 | 0 | 0 | 0 | 0 |
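The table can be reproduced with a short script, which is a convenient way to check the hand arithmetic. This is a sketch that hard-codes the values used above ($\eta = 0.95$, $V(cat)$, and the assumed negative samples $dog$ and $woof$); keying the auxiliary vectors by word rather than by index is a simplification made here.

```python
import math

eta = 0.95  # learning rate used throughout the walkthrough
v_cat = [0.054, -0.090, -0.038, 0.063, -0.015]
vocab = ["cat", "say", "dog", "woof", "ele", "pig", "monkey"]
theta = {word: [0.0] * 5 for word in vocab}  # all auxiliary vectors start at zero
e = [0.0] * 5

# Positive sample first, then the two assumed negative samples.
for word, label in [("say", 1), ("dog", 0), ("woof", 0)]:
    dot = sum(a * b for a, b in zip(v_cat, theta[word]))
    f = 1.0 / (1.0 + math.exp(-dot))
    g = eta * (label - f)
    e = [ei + g * t for ei, t in zip(e, theta[word])]  # uses theta before its update
    theta[word] = [t + g * a for t, a in zip(theta[word], v_cat)]

for word in vocab:
    print(word, [round(x, 3) for x in theta[word]])
```

Because every auxiliary vector starts at zero, $f$ is exactly 0.5 for all three samples and $e$ stays at zero, which is why $V(cat)$ does not move in this first pass.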

Next, we train on the next sample: $\{say, cat\}$

Step 0: At this point,

$w = say$

$Context(w) = \{cat\}$

$V(say) = [-0.010, -0.073, 0.065, -0.042, -0.044]$

Step 1:

Initialize the vector $e = [0, 0, 0, 0, 0]$

Step 2:

First, compute the positive sample $cat$:

$index = get\_index\{cat\} = 0$

$Label\{cat\} = 1$

$f = \sigma(V(w)^T \theta_{index})$ ==>

$\sigma(V(say)^T \theta_0) = \sigma(-0.010 \times 0 - 0.073 \times 0 + 0.065 \times 0 - 0.042 \times 0 - 0.044 \times 0) = 0.5$

Step 3:

$g = \eta(Label\{cat\} - f) = 0.95 \times (1 - 0.5) = 0.475$

Step 4:

$e = e + g\theta_{index}$ ==>

$e = e + g\theta_0 = [0, 0, 0, 0, 0] + 0.475 \times [0, 0, 0, 0, 0] = [0, 0, 0, 0, 0]$

Step 5:

$\theta_{index} = \theta_{index} + gV(w)$ ==>

$\theta_0 = \theta_0 + gV(say) = [0, 0, 0, 0, 0] + 0.475 \times [-0.010, -0.073, 0.065, -0.042, -0.044] = [-0.005, -0.035, 0.031, -0.020, -0.021]$

Step 6:

Having computed the positive sample, the setting $Negative = 2$ means we need to draw two words as negative samples. Here we assume the drawn words are $Neg\{cat\}^{2} = \{dog, ele\}$.

Step 2: (compute the negative sample $dog$)

$index = get\_index\{dog\} = 2$

$Label\{dog\} = 0$

$f = \sigma(V(w)^T \theta_{index})$ ==>

$\sigma(V(say)^T \theta_2) = \sigma(-0.010 \times (-0.026) - 0.073 \times 0.043 + 0.065 \times 0.018 - 0.042 \times (-0.030) - 0.044 \times 0.007) \approx 0.5$
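This is the first step where the auxiliary vector is no longer zero, so the approximation to 0.5 is worth checking. The snippet below simply evaluates the expression above with the rounded three-decimal values; the variable names are illustrative.

```python
import math

v_say = [-0.010, -0.073, 0.065, -0.042, -0.044]
theta_dog = [-0.026, 0.043, 0.018, -0.030, 0.007]  # theta_2 after the first sample

dot = sum(a * b for a, b in zip(v_say, theta_dog))
f = 1.0 / (1.0 + math.exp(-dot))
print(round(dot, 6), round(f, 4))
```

The dot product is about $-0.000757$, so $f$ is about 0.4998. Rounding it to 0.5 changes $g$ only in the fourth decimal place, which is below the three-decimal precision used in this walkthrough.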

Step 3:

$g = \eta(Label\{dog\} - f) = 0.95 \times (0 - 0.5) = -0.475$

Step 4:

$e = e + g\theta_{index}$ ==>

$e = e + g\theta_2 = [0, 0, 0, 0, 0] - 0.475 \times [-0.026, 0.043, 0.018, -0.030, 0.007] = [0.012, -0.020, -0.009, 0.014, -0.003]$

Step 5:

$\theta_{index} = \theta_{index} + gV(w)$ ==>

$\theta_2 = \theta_2 + gV(say) = [-0.026, 0.043, 0.018, -0.030, 0.007] - 0.475 \times [-0.010, -0.073, 0.065, -0.042, -0.044] = [-0.021, 0.078, -0.013, -0.010, 0.028]$

That is one negative sample done; one more remains:

Step 2: (compute the negative sample $ele$)

$index = get\_index\{ele\} = 4$

$Label\{ele\} = 0$

$f = \sigma(V(w)^T \theta_{index})$ ==>

$\sigma(V(say)^T \theta_4) = \sigma(-0.010 \times 0 - 0.073 \times 0 + 0.065 \times 0 - 0.042 \times 0 - 0.044 \times 0) = 0.5$

Step 3:

$g = \eta(Label\{ele\} - f) = 0.95 \times (0 - 0.5) = -0.475$

Step 4:

$e = e + g\theta_{index}$ ==>

$e = e + g\theta_4 = [0.012, -0.020, -0.009, 0.014, -0.003] - 0.475 \times [0, 0, 0, 0, 0] = [0.012, -0.020, -0.009, 0.014, -0.003]$

Step 5:

$\theta_{index} = \theta_{index} + gV(w)$ ==>

$\theta_4 = \theta_4 + gV(say) = [0, 0, 0, 0, 0] - 0.475 \times [-0.010, -0.073, 0.065, -0.042, -0.044] = [0.005, 0.035, -0.031, 0.020, 0.021]$

Step 7: Update the word vector $V(w)$

$V(w) = V(w) + e$ ==>

$V(w) = [-0.010, -0.073, 0.065, -0.042, -0.044] + [0.012, -0.020, -0.009, 0.014, -0.003] = [0.002, -0.093, 0.056, -0.028, -0.047]$

At this point the second training sample is done as well; the remaining samples follow the same procedure.
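The second sample can be replayed the same way. The sketch below starts from the rounded auxiliary vectors in the summary table and uses the assumed negative samples $dog$ and $ele$; unlike the hand calculation it does not round $f$ to 0.5, so intermediate values can differ slightly beyond the third decimal place. The dictionary layout and variable names are illustrative.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

eta = 0.95
v_say = [-0.010, -0.073, 0.065, -0.042, -0.044]
# Auxiliary vectors after the first sample, copied (rounded) from the summary table.
theta = {
    "cat": [0.0] * 5,
    "dog": [-0.026, 0.043, 0.018, -0.030, 0.007],
    "ele": [0.0] * 5,
}

e = [0.0] * 5
for word, label in [("cat", 1), ("dog", 0), ("ele", 0)]:
    f = sigmoid(sum(a * b for a, b in zip(v_say, theta[word])))
    g = eta * (label - f)
    e = [ei + g * t for ei, t in zip(e, theta[word])]  # e carries over between samples
    theta[word] = [t + g * a for t, a in zip(theta[word], v_say)]

new_v_say = [a + ei for a, ei in zip(v_say, e)]
print([round(x, 3) for x in new_v_say])
```

Here `e` is non-zero only because $\theta_2$ was already moved by the first sample, so this is the first pass in which a word vector actually changes.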

That is the complete computation flow for Negative Sampling.