Text classification with Keras

1. The Reuters dataset (bundled with Keras, downloaded on first use)

from keras.datasets import reuters

import numpy as np

from keras import models

from keras import layers

import copy

# from keras.utils.np_utils import to_categorical

# 8,982 training examples and 2,246 test examples, 46 target classes

(train_data,train_labels),(test_data,test_labels)=reuters.load_data(num_words=10000)

# Estimate the accuracy of random guessing by shuffling the test labels: about 18.4%

copy_labels=copy.copy(test_labels)

np.random.shuffle(copy_labels)

arr=np.array(test_labels)==np.array(copy_labels)

print(float(np.sum(arr)/len(arr)))

# Decode one newswire back into text to see the actual content

word_index = reuters.get_word_index()

reverse_word_index = dict([(value, key) for (key, value) in word_index.items()])

decoded_newswire = ' '.join([reverse_word_index.get(i - 3, '?') for i in train_data[0]])

print(decoded_newswire)

def vectorize_sequences(sequences, dimension=10000):
    # Multi-hot encoding: one row per sample, 1.0 at every word index that occurs
    results = np.zeros((len(sequences), dimension))
    for i, sequence in enumerate(sequences):
        results[i, sequence] = 1.
    return results

# One-hot encode the labels; the built-in to_categorical does the same thing
def prehandle_labels(labels, dimension=46):
    results = np.zeros((len(labels), dimension))
    for i, label in enumerate(labels):
        results[i, label] = 1.
    return results

# Preprocess the data and the labels

x_train=vectorize_sequences(train_data)

x_test=vectorize_sequences(test_data)

y_train=prehandle_labels(train_labels)

y_test=prehandle_labels(test_labels)

# Hold out part of the training set for validation

x_val=x_train[:1000]

y_val=y_train[:1000]

part_x_train=x_train[1000:]

part_y_train=y_train[1000:]

model=models.Sequential()

model.add(layers.Dense(64, activation='relu', input_shape=(10000,)))

model.add(layers.Dense(64, activation='relu'))

# softmax: outputs a probability distribution over the 46 classes

model.add(layers.Dense(46, activation='softmax'))

model.compile(loss='categorical_crossentropy',optimizer='rmsprop',metrics=['acc'])

# Overfitting starts after about 9 epochs

history=model.fit(part_x_train,part_y_train,epochs=9,batch_size=512,validation_data=(x_val,y_val))

results=model.evaluate(x_test,y_test)

# [0.9810771037719128, 0.7880676759212865]: roughly 79% accuracy, far better than random guessing

print(results)

# Inspect the predictions

predict=model.predict(x_test)

print(predict[:20])
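
Each row printed above is a softmax output: 46 non-negative numbers that sum to 1, with the largest entry marking the predicted class. The following is a minimal pure-Python sketch of that idea, assuming made-up scores for 4 classes rather than real model output; the helper names `softmax` and `argmax` are illustrative and not part of Keras.

```python
import math

def softmax(logits):
    """Turn raw scores into a probability distribution."""
    shift = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - shift) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def argmax(values):
    """Index of the largest entry."""
    return max(range(len(values)), key=lambda i: values[i])

scores = [2.0, 1.0, 0.1, -1.0]  # made-up scores for 4 classes
probs = softmax(scores)
predicted_class = argmax(probs)
print(probs, predicted_class)
```

With the real model, the same argmax would be taken over each 46-entry row of `predict`.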

2. The IMDB dataset (downloadable through Keras)

2.1 Baseline approach

from keras.datasets import imdb

import numpy as np

from keras import models

from keras import layers

import matplotlib.pyplot as plt

# Keep only the 10000 most frequent words

# The training data are movie reviews with words mapped to integers; label 0 means negative, 1 means positive

(train_data,train_labels),(test_data,test_labels)=imdb.load_data(num_words=10000)

# print(train_data[0])

# print(train_data.shape)

# print(train_labels[0])

# Take the largest word index in each review; the maximum over all reviews is 9999

# print(max([max(seq) for seq in train_data]))

# Print the text that an integer sequence stands for

# word_index = imdb.get_word_index()

# reverse_word_index = dict([(value, key) for (key, value) in word_index.items()])

# # Indices 0, 1 and 2 are reserved (padding, start of sequence, unknown), hence the offset of 3

# decoded_review = ' '.join([reverse_word_index.get(i - 3, '?') for i in train_data[0]])

# print(decoded_review)

# Preprocess: expand each sequence into a 10000-dimensional vector; for train_data the result has shape (25000, 10000)
def vectorize_sequences(sequences, dimension=10000):
    results = np.zeros((len(sequences), dimension))
    for i, sequence in enumerate(sequences):
        # sequence is the list of word indices of one review.
        # For example, indices 1, 2, 3, 4 give a row that starts 0, 1, 1, 1, 1:
        # position x (counting from 0) is set to 1 for every index x that occurs.
        results[i, sequence] = 1.
    return results

x_train = vectorize_sequences(train_data)

x_test = vectorize_sequences(test_data)

# print(x_train[0]) #[0 1 1... 0 0 0]

# Convert the labels to float vectors

y_train = np.asarray(train_labels).astype('float32')

y_test = np.asarray(test_labels).astype('float32')

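# Build the model

# Without a non-linear activation such as relu, a stack of Dense layers would collapse into a single linear transformation,

# and a multi-layer network would gain nothing (the bias term is built into Dense either way)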

model.add(layers.Dense(16,activation='relu',input_shape=(10000,)))

model.add(layers.Dense(16,activation='relu'))

# sigmoid squashes the output into a probability for the binary case

model.add(layers.Dense(1,activation='sigmoid'))

# Loss function

model.compile(optimizer='rmsprop',loss='binary_crossentropy',metrics=['acc'])

# Hold out part of the training set for validation

x_val = x_train[:10000]

partial_x_train = x_train[10000:]

y_val = y_train[:10000]

partial_y_train = y_train[10000:]

history=model.fit(partial_x_train,partial_y_train,epochs=20,batch_size=512,validation_data=(x_val, y_val))

loss,accuracy=model.evaluate(x_test,y_test)

print('accuracy:',accuracy)

# Training accuracy 99.9%, validation accuracy 87%, test accuracy 85%

# Plot the training history

history_dict=history.history

train_loss=history_dict['loss']

validation_loss=history_dict['val_loss']

epochs=range(1,len(train_loss)+1)

plt.plot(epochs,train_loss,'bo',label='Training loss')

plt.plot(epochs,validation_loss,'r',label='Validation loss')

plt.title('training and validation loss')

plt.xlabel('epochs')

plt.ylabel('loss')

plt.legend()

plt.show()

# Clear the previous figure before plotting again

plt.clf()

train_acc = history_dict['acc']

validation_acc = history_dict['val_acc']

plt.plot(epochs, train_acc, 'bo', label='Training acc')

plt.plot(epochs, validation_acc, 'r', label='Validation acc')

plt.title('Training and validation accuracy')

plt.xlabel('Epochs')

plt.ylabel('Accuracy')

plt.legend()

plt.show()

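# Retrain: too many epochs lead to overfitting; about 3 or 4 are enough

# The run above held out 10000 training samples for validation; now train on the whole training set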

model=models.Sequential()

model.add(layers.Dense(16,activation='relu',input_shape=(10000,)))

model.add(layers.Dense(16,activation='relu'))

model.add(layers.Dense(1,activation='sigmoid'))

model.compile(optimizer='rmsprop',loss='binary_crossentropy',metrics=['accuracy'])

# epochs=4, using the whole training set

model.fit(x_train,y_train,epochs=4,batch_size=512)

# Loss and accuracy on the test set: [0.294587216424942, 0.8832]

results=model.evaluate(x_test,y_test)

print(results)

# Predicted value for every test sample (between 0 and 1)

predict=model.predict(x_test)

print(predict)

2.2 Starting from the baseline, try three variations and compare the test results

# Three variations

from keras.datasets import imdb

import numpy as np

from keras import models

from keras import layers

(train_data,train_labels),(test_data,test_labels)=imdb.load_data(num_words=10000)

def vectorize_sequences(sequences, dimension=10000):
    results = np.zeros((len(sequences), dimension))
    for i, sequence in enumerate(sequences):
        results[i, sequence] = 1.
    return results

x_train = vectorize_sequences(train_data)

x_test = vectorize_sequences(test_data)

y_train = np.asarray(train_labels).astype('float32')

y_test = np.asarray(test_labels).astype('float32')

model=models.Sequential()

model.add(layers.Dense(16,activation='relu',input_shape=(10000,)))

model.add(layers.Dense(16,activation='relu'))

# 3. Use the tanh activation: test accuracy is only 84%, and validation accuracy is no better

# model.add(layers.Dense(16,activation='tanh',input_shape=(10000,)))

# model.add(layers.Dense(16,activation='tanh'))

# 1. After adding a layer, set the second layer's output to 32 units, everything else unchanged: the result barely changes

# model.add(layers.Dense(16,activation='relu'))

model.add(layers.Dense(1,activation='sigmoid'))

# 2. Use mean squared error (mse) as the loss: the loss value becomes much lower, but neither accuracy changes much

# model.compile(optimizer='rmsprop',loss='mse',metrics=['accuracy'])

model.compile(optimizer='rmsprop',loss='binary_crossentropy',metrics=['accuracy'])

model.fit(x_train,y_train,epochs=4,batch_size=512)

# Baseline test loss and accuracy: [0.294587216424942, 0.8832]

#1,[0.308044932346344, 0.882]

#2,[0.086122335729599, 0.88292]

#3,[0.32080198724746706, 0.87768]

results=model.evaluate(x_test,y_test)

print(results)
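
Variation 2 reports a much lower loss with the same accuracy. That is expected: for a probability p assigned to the true class, (1-p)^2 <= 1-p <= -ln(p), so mean squared error is never larger than binary cross-entropy on the same predictions, and losses from different loss functions cannot be compared directly. A small pure-Python sketch with made-up labels and predictions (the helper functions are illustrative, not Keras's implementations):

```python
import math

def binary_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean binary cross-entropy, with predictions clipped away from 0 and 1."""
    total = 0.0
    for t, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1 - eps)
        total += -(t * math.log(p) + (1 - t) * math.log(1 - p))
    return total / len(y_true)

def mse(y_true, y_pred):
    """Mean squared error."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def accuracy(y_true, y_pred):
    """Fraction of predictions on the correct side of 0.5."""
    hits = sum(1 for t, p in zip(y_true, y_pred) if (p > 0.5) == (t == 1))
    return hits / len(y_true)

y_true = [1, 0, 1, 0]          # made-up labels
y_pred = [0.8, 0.3, 0.6, 0.4]  # made-up predicted probabilities

bce_value = binary_crossentropy(y_true, y_pred)
mse_value = mse(y_true, y_pred)
acc_value = accuracy(y_true, y_pred)
print(bce_value, mse_value, acc_value)
```

Both losses describe exactly the same predictions here, and the accuracy is identical by construction.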

2.3 Add L1 or L2 regularization to the baseline

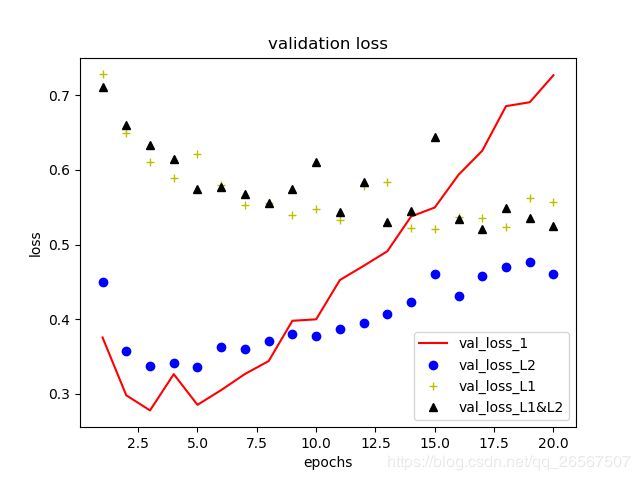

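Judging by the test results, using L1 and L2 together works best. L2 alone already does well, while L1 alone scores slightly below the baseline.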
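
As a rough sketch of what the regularizers add to the loss: an L1 penalty is the coefficient times the sum of absolute weights, and an L2 penalty is the coefficient times the sum of squared weights. The weights below are made up, and the helper names are illustrative rather than Keras functions.

```python
def l1_penalty(weights, l1):
    """L1 term: coefficient times the sum of absolute weight values."""
    return l1 * sum(abs(w) for w in weights)

def l2_penalty(weights, l2):
    """L2 term: coefficient times the sum of squared weight values."""
    return l2 * sum(w * w for w in weights)

weights = [0.5, -1.0, 0.0, 2.0]  # made-up kernel weights

l1_value = l1_penalty(weights, 0.001)  # 0.001 * 3.5
l2_value = l2_penalty(weights, 0.001)  # 0.001 * 5.25
combined = l1_value + l2_value         # what an L1 + L2 penalty would add
print(l1_value, l2_value, combined)
```

Because the penalty is added to the training loss, the loss reported for a regularized model is not directly comparable with the loss of the unregularized baseline.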

# Add L1 or L2 regularization

from keras.datasets import imdb

import numpy as np

from keras import models

from keras import layers

from keras import regularizers

(train_data,train_labels),(test_data,test_labels)=imdb.load_data(num_words=10000)

def vectorize_sequences(sequences, dimension=10000):
    results = np.zeros((len(sequences), dimension))
    for i, sequence in enumerate(sequences):
        results[i, sequence] = 1.
    return results

x_train = vectorize_sequences(train_data)

x_test = vectorize_sequences(test_data)

y_train = np.asarray(train_labels).astype('float32')

y_test = np.asarray(test_labels).astype('float32')

# Shuffle the training set

index=[i for i in range(len(x_train))]

np.random.shuffle(index)

x_train=x_train[index]

y_train=y_train[index]

# Hold out a validation set

x_val = x_train[:10000]

partial_x_train = x_train[10000:]

y_val = y_train[:10000]

partial_y_train = y_train[10000:]

# Baseline model

print('start training model_1')

model=models.Sequential()

model.add(layers.Dense(16,activation='relu',input_shape=(10000,)))

model.add(layers.Dense(16,activation='relu'))

model.add(layers.Dense(1,activation='sigmoid'))

model.compile(optimizer='rmsprop',loss='binary_crossentropy',metrics=['accuracy'])

history=model.fit(partial_x_train,partial_y_train,epochs=20,batch_size=512,validation_data=(x_val,y_val),verbose=0)

# With L2 regularization

print('start training model_2')

model2=models.Sequential()

model2.add(layers.Dense(16,kernel_regularizer=regularizers.l2(0.001),activation='relu',input_shape=(10000,)))

model2.add(layers.Dense(16,kernel_regularizer=regularizers.l2(0.001),activation='relu'))

model2.add(layers.Dense(1,activation='sigmoid'))

model2.compile(optimizer='rmsprop',loss='binary_crossentropy',metrics=['accuracy'])

history2=model2.fit(partial_x_train,partial_y_train,epochs=20,batch_size=512,validation_data=(x_val,y_val),verbose=0)

# With L1 regularization

print('start training model_3')

model3=models.Sequential()

model3.add(layers.Dense(16,kernel_regularizer=regularizers.l1(0.001),activation='relu',input_shape=(10000,)))

model3.add(layers.Dense(16,kernel_regularizer=regularizers.l1(0.001),activation='relu'))

model3.add(layers.Dense(1,activation='sigmoid'))

model3.compile(optimizer='rmsprop',loss='binary_crossentropy',metrics=['accuracy'])

history3=model3.fit(partial_x_train,partial_y_train,epochs=20,batch_size=512,validation_data=(x_val,y_val),verbose=0)

# With L1 and L2 together

print('start training model_4')

model4=models.Sequential()

model4.add(layers.Dense(16,kernel_regularizer=regularizers.l1_l2(l1=0.001,l2=0.001),activation='relu',input_shape=(10000,)))

model4.add(layers.Dense(16,kernel_regularizer=regularizers.l1_l2(l1=0.001,l2=0.001),activation='relu'))

model4.add(layers.Dense(1,activation='sigmoid'))

model4.compile(optimizer='rmsprop',loss='binary_crossentropy',metrics=['accuracy'])

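# At 40 epochs the validation loss is still falling overall

# After about 48 epochs it starts to rise overall (both observed with epochs set higher than the 20 used here)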

history4=model4.fit(partial_x_train,partial_y_train,epochs=20,batch_size=512,validation_data=(x_val,y_val),verbose=0)

# Plot

import matplotlib.pyplot as plt

# Note: the val_loss key only exists if validation data was passed when training the model

val_loss_1=history.history['val_loss']

val_loss_2=history2.history['val_loss']

val_loss_3=history3.history['val_loss']

val_loss_4=history4.history['val_loss']

epochs=range(1,len(val_loss_4)+1)

plt.plot(epochs,val_loss_1,'r',label='val_loss_1')

plt.plot(epochs,val_loss_2,'bo',label='val_loss_L2')

plt.plot(epochs,val_loss_3,'y+',label='val_loss_L1')

plt.plot(epochs,val_loss_4,'k^',label='val_loss_L1&L2')

plt.title('validation loss')

plt.xlabel('epochs')

plt.ylabel('loss')

plt.legend()

plt.show()

results=model.evaluate(x_test,y_test)

results_2=model2.evaluate(x_test,y_test)

results_3=model3.evaluate(x_test,y_test)

results_4=model4.evaluate(x_test,y_test)

# [0.7685215129828453, 0.85004]

# [0.4729495596218109, 0.86188]

# [0.5593033714866639, 0.84552]

# [0.5237347905826568, 0.87284]

print(results,results_2,results_3,results_4)

This is the validation loss plot; judging from it, L2 seems to work best.

2.4 Add dropout to the baseline

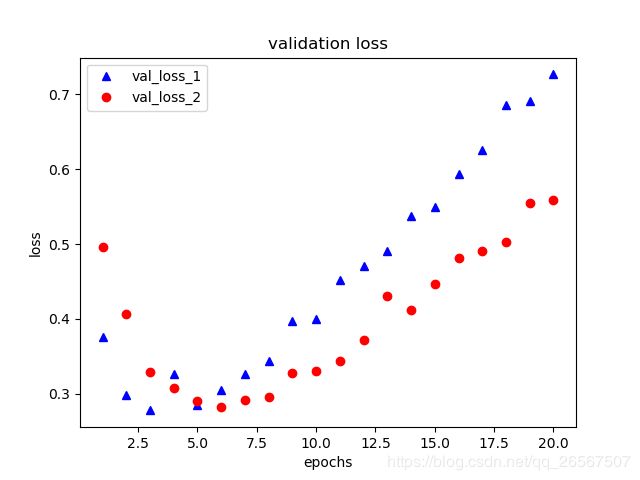

# Effect of Dropout layers: the validation loss is somewhat lower; the run took 556 s

from keras.datasets import imdb

import numpy as np

from keras import models

from keras import layers

import matplotlib.pyplot as plt

(train_data,train_labels),(test_data,test_labels)=imdb.load_data(num_words=10000)

def vectorize_sequences(sequences, dimension=10000):
    results = np.zeros((len(sequences), dimension))
    for i, sequence in enumerate(sequences):
        results[i, sequence] = 1.
    return results

x_train = vectorize_sequences(train_data)

x_test = vectorize_sequences(test_data)

y_train = np.asarray(train_labels).astype('float32')

y_test = np.asarray(test_labels).astype('float32')

# Shuffle the training set

index=[i for i in range(len(x_train))]

np.random.shuffle(index)

x_train=x_train[index]

y_train=y_train[index]

# Hold out a validation set

x_val = x_train[:10000]

partial_x_train = x_train[10000:]

y_val = y_train[:10000]

partial_y_train = y_train[10000:]

#model 1

print('starting model_1...')

model=models.Sequential()

model.add(layers.Dense(16,activation='relu',input_shape=(10000,)))

model.add(layers.Dense(16,activation='relu'))

model.add(layers.Dense(1,activation='sigmoid'))

model.compile(optimizer='rmsprop',loss='binary_crossentropy',metrics=['acc'])

history=model.fit(partial_x_train,partial_y_train,epochs=20,batch_size=512,validation_data=(x_val, y_val))

#model 2

print('starting model_2...')

model2=models.Sequential()

model2.add(layers.Dense(16,activation='relu',input_shape=(10000,)))

# Add a Dropout layer after each hidden layer

model2.add(layers.Dropout(0.5))

model2.add(layers.Dense(16,activation='relu'))

model2.add(layers.Dropout(0.5))

model2.add(layers.Dense(1,activation='sigmoid'))

model2.compile(optimizer='rmsprop',loss='binary_crossentropy',metrics=['acc'])

history2=model2.fit(partial_x_train,partial_y_train,epochs=20,batch_size=512,validation_data=(x_val, y_val))

#

result1=model.evaluate(x_test,y_test)

result2=model2.evaluate(x_test,y_test)

print(result1,result2)

#

history_dict=history.history

val_loss_1=history_dict['val_loss']

val_loss_2=history2.history['val_loss']

epochs=range(1,len(val_loss_1)+1)

plt.plot(epochs,val_loss_1,'b^',label='val_loss_1')

plt.plot(epochs,val_loss_2,'ro',label='val_loss_2')

plt.title('validation loss')

plt.xlabel('epochs')

plt.ylabel('loss')

plt.legend()

plt.show()

The validation loss plot shows that overfitting sets in later and the loss rises more slowly, so dropout does help somewhat.
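
For intuition, dropout with rate 0.5 zeroes a random half of the activations during training and scales the survivors by 1/(1-rate) so the expected value is unchanged; at inference nothing is dropped. A minimal pure-Python sketch of this "inverted dropout" idea, with a hypothetical `dropout` helper that is not Keras's implementation:

```python
import random

def dropout(activations, rate, training, rng):
    """Inverted dropout: zero a fraction `rate` of the units, rescale the rest."""
    if not training:
        return list(activations)  # inference: pass values through unchanged
    keep = 1.0 - rate
    return [a / keep if rng.random() >= rate else 0.0 for a in activations]

rng = random.Random(0)   # fixed seed so the sketch is repeatable
acts = [1.0] * 10000     # made-up activations

train_out = dropout(acts, 0.5, True, rng)
infer_out = dropout(acts, 0.5, False, rng)
train_mean = sum(train_out) / len(train_out)
print(train_mean, infer_out[:3])
```

The training-time mean stays close to the original 1.0, which is why no rescaling is needed at test time.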

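3. IMDB again, with other techniques

3.1 Word embeddings (Embedding)

An Embedding layer is effectively a fully connected layer applied to one-hot input. Computing it as an actual fully connected layer is inefficient, whereas a table lookup is very fast. For details, see this article:

Embedding剖析 (An Analysis of Embedding)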
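
The equivalence can be checked by hand: multiplying a one-hot vector by a weight matrix (a Dense layer without bias) returns exactly the row that a table lookup would pick. A small pure-Python sketch with a made-up 4-word, 2-dimensional table; the function names are illustrative only.

```python
# Made-up embedding table: 4 words in the vocabulary, 2 dimensions per word
table = [
    [0.0, 0.0],
    [0.1, 0.2],
    [0.3, 0.4],
    [0.5, 0.6],
]

def one_hot(index, size):
    """Vector of zeros with a single 1.0 at position `index`."""
    return [1.0 if i == index else 0.0 for i in range(size)]

def dense_no_bias(vec, matrix):
    """Matrix product vec @ matrix, i.e. a Dense layer without bias or activation."""
    cols = len(matrix[0])
    return [sum(vec[i] * matrix[i][j] for i in range(len(vec))) for j in range(cols)]

def embedding_lookup(index, matrix):
    """Table lookup: just return the row for this word index."""
    return list(matrix[index])

via_dense = [dense_no_bias(one_hot(i, len(table)), table) for i in range(len(table))]
via_lookup = [embedding_lookup(i, table) for i in range(len(table))]
print(via_dense == via_lookup)
```

The dense version touches every row of the table for every word, while the lookup touches one row, which is where the speed difference comes from.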

# Word embeddings

from keras.datasets import imdb

from keras import preprocessing

from keras.models import Sequential

from keras.layers import Flatten,Dense,Embedding

import matplotlib.pyplot as plt

# 1000 possible tokens (1 + the maximum word index), each mapped to a 64-dimensional vector

# embedding_layer=Embedding(1000,64)

max_feature=10000

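# Only 20 words of each review are used (pad_sequences truncates from the front by default, so these are the last 20)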

max_len=20

(x_train,y_train),(x_test,y_test)=imdb.load_data(num_words=max_feature)

# Pad or truncate every sequence to the same length

x_train=preprocessing.sequence.pad_sequences(x_train,maxlen=max_len)

x_test=preprocessing.sequence.pad_sequences(x_test,maxlen=max_len)

model=Sequential()

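# The three arguments: consider only the 10000 most frequent words,

# map each word to a vector of length 8, and feed max_len words per review for training and testing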

model.add(Embedding(10000,8,input_length=max_len))

model.add(Flatten())

model.add(Dense(1,activation='sigmoid'))

model.compile(optimizer='rmsprop',loss='binary_crossentropy',metrics=['acc'])

# validation_split is a simpler way to hold out a validation set

# With only 20 words per review, accuracy still reaches about 75%

history=model.fit(x_train,y_train,epochs=10,batch_size=32,validation_split=0.2,verbose=2)

h=history.history

acc=h['acc']

val_acc=h['val_acc']

loss=h['loss']

val_loss=h['val_loss']

epochs=range(1,len(acc)+1)

plt.plot(epochs,acc,'ro',label='train_acc')

plt.plot(epochs,val_acc,'b^',label='val_acc')

plt.title('acc')

plt.legend()

plt.figure()

plt.plot(epochs,loss,'ro',label='train_loss')

plt.plot(epochs,val_loss,'b^',label='val_loss')

plt.title('loss')

plt.legend()

plt.figure()

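plt.show()

3.2 Use the raw IMDB dataset (text not yet converted to integer sequences) and build our own dictionary of frequent words from a downloaded word-vector table

Two downloads are needed here: aclImdb, the raw dataset, and GloVe, a table mapping 400,000 words to vectors. When you want to work with raw text data, you have to go step by step from scratch like this. GloVe was presumably trained on a huge corpus, so its word-to-vector mapping should generalize well.

The test result does not look great, though: about 56% accuracy with only 200 training samples.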
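
The core of this section is turning a text file of "word v1 v2 ..." lines into a matrix whose row i holds the vector of the word with index i. A minimal pure-Python sketch of that step, assuming two made-up 3-dimensional lines instead of the real GloVe file and a tiny hypothetical `word_index`:

```python
# Made-up lines in the GloVe text format: a word followed by its vector
sample_lines = [
    "the 0.1 0.2 0.3",
    "movie 0.4 0.5 0.6",
]

def parse_vectors(lines):
    """Map each word to its list of floats."""
    index = {}
    for line in lines:
        parts = line.split()
        index[parts[0]] = [float(x) for x in parts[1:]]
    return index

embeddings_index = parse_vectors(sample_lines)

# Hypothetical tokenizer output: word -> integer index, starting at 1
word_index = {"the": 1, "movie": 2, "zzzz": 3}
max_words, embedding_dim = 4, 3

# Row 0 stays zero (padding); words without a pretrained vector also stay zero
embedding_matrix = [[0.0] * embedding_dim for _ in range(max_words)]
for word, i in word_index.items():
    if i < max_words:
        vector = embeddings_index.get(word)
        if vector is not None:
            embedding_matrix[i] = vector

print(embedding_matrix)
```

The real script does the same with NumPy arrays, 10000 rows and 100 columns.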

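# Use pretrained word embeddings: a table of 400,000 word vectors

# Frequent words: 10000

# Each text: 100 words are kept for training and evaluation

# Use the raw IMDB data, i.e. the files under the aclImdb folder

# Use only 200 training samples and 10000 validation samples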

import os

from keras.preprocessing.text import Tokenizer

from keras.preprocessing.sequence import pad_sequences

import numpy as np

from keras.models import Sequential

from keras.layers import Embedding, Flatten, Dense

import json

import matplotlib.pyplot as plt

from keras.models import model_from_json

imdb_dir='D:/Data/DeepLearningWithPython/IMDB/aclImdb'

train_dir=os.path.join(imdb_dir,'train')

# Read the texts and their labels

labels=[]

texts=[]

for label_type in ['neg', 'pos']:
    dir_name = os.path.join(train_dir, label_type)
    for fname in os.listdir(dir_name):
        # open() defaults to the system encoding, so request utf-8 explicitly
        with open(os.path.join(dir_name, fname), mode='r', encoding='utf-8') as f:
            texts.append(f.read())
        if label_type == 'neg':
            labels.append(0)
        else:
            labels.append(1)

maxlen = 100

training_samples = 200

validation_samples = 10000

max_words = 10000

# Steps

# 1. Define the Tokenizer

# Only the 10000 most frequent words are considered

tokenizer = Tokenizer(num_words=max_words)

# 2. Build the word index

# fit_on_texts counts the words and assigns each one an index by frequency

tokenizer.fit_on_texts(texts)

# 3. Convert the texts to integer sequences

sequences = tokenizer.texts_to_sequences(texts)

# One-hot encoding can also be done in one step; for a list `samples` of strings the output has shape (len(samples), 10000)

# one_hot_results=tokenizer.texts_to_matrix(samples,mode='binary')

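# word_index maps every word seen to its index (starting at 1); num_words only limits which indices texts_to_sequences keeps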

word_index = tokenizer.word_index

# print('Found %s unique tokens.' % len(word_index))

# Truncate or pad every sequence to length 100

data = pad_sequences(sequences, maxlen=maxlen)

# Convert the label list to an array

labels = np.asarray(labels)

# (25000, 100): 25000 samples, 100 tokens each

# print('Shape of data tensor:', data.shape)

#(25000, )

# print('Shape of label tensor:', labels.shape)

# Shuffle the data, since all negative samples were read first and all positive ones after

indices = np.arange(data.shape[0])

np.random.shuffle(indices)

data = data[indices]

labels = labels[indices]

x_train = data[:training_samples]

y_train = labels[:training_samples]

x_val = data[training_samples: training_samples + validation_samples]

y_val = labels[training_samples: training_samples + validation_samples]

# 4. Load the 400,000 pretrained words and their vectors, to be used as a lookup table

glove_dir = 'D:/Data/DeepLearningWithPython/glove'

# 400,000 words with their vectors, each vector of length 100
embeddings_index = {}

# "100d" in the file name means every word or symbol is mapped to a 100-dimensional vector
with open(os.path.join(glove_dir, 'glove.6B.100d.txt'), encoding='utf-8') as f:
    for line in f:
        values = line.split()
        word = values[0]
        coefs = np.asarray(values[1:], dtype='float32')
        embeddings_index[word] = coefs

# print('Found %s word vectors.' % len(embeddings_index))

embedding_dim = 100

# 5. Look up each word of word_index in the pretrained word-vector dictionary and collect the results in embedding_matrix
# Shape: 10000 frequent words, each with a vector of length 100
embedding_matrix = np.zeros((max_words, embedding_dim))
for word, i in word_index.items():
    if i < max_words:
        # Table lookup
        embedding_vector = embeddings_index.get(word)
        # Words without a pretrained vector keep an all-zero row
        if embedding_vector is not None:
            embedding_matrix[i] = embedding_vector

# 6. Build the model

model = Sequential()

model.add(Embedding(max_words, embedding_dim, input_length=maxlen))

model.add(Flatten())

model.add(Dense(32, activation='relu'))

model.add(Dense(1, activation='sigmoid'))

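# 7. Load the weights of the Embedding layer (the word-vector matrix, i.e. the frequent-word dictionary built above)
# and freeze the layer so it is not trained (the dictionary is fixed and only used for lookup)
model.layers[0].set_weights([embedding_matrix])

# Freeze
model.layers[0].trainable = False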

model.compile(optimizer='rmsprop',
              loss='binary_crossentropy',
              metrics=['acc'])

history = model.fit(x_train, y_train,
                    epochs=10,
                    batch_size=32,
                    validation_data=(x_val, y_val))

# Plot

acc = history.history['acc']

val_acc = history.history['val_acc']

loss = history.history['loss']

val_loss = history.history['val_loss']

epochs = range(1, len(acc) + 1)

plt.plot(epochs, acc, 'ro', label='Training acc')

plt.plot(epochs, val_acc, 'b^', label='Validation acc')

plt.title('Training and validation accuracy')

plt.legend()

plt.figure()

plt.plot(epochs, loss, 'ro', label='Training loss')

plt.plot(epochs, val_loss, 'b^', label='Validation loss')

plt.title('Training and validation loss')

plt.legend()

plt.show()

# Test data

test_dir = os.path.join(imdb_dir, 'test')

labels = []

texts = []

for label_type in ['neg', 'pos']:
    dir_name = os.path.join(test_dir, label_type)
    for fname in sorted(os.listdir(dir_name)):
        if fname[-4:] == '.txt':
            with open(os.path.join(dir_name, fname), encoding='utf-8') as f:
                texts.append(f.read())
            if label_type == 'neg':
                labels.append(0)
            else:
                labels.append(1)

sequences = tokenizer.texts_to_sequences(texts)

x_test = pad_sequences(sequences, maxlen=maxlen)

y_test = np.asarray(labels)

result=model.evaluate(x_test,y_test)

# [0.8080911725044251, 0.561]

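print(result)

3.3 Use an RNN (recurrent neural network): SimpleRNN

Test accuracy is 80%, lower than the 87% reached earlier with L1 & L2 regularization. The earlier models encoded each review as a 10,000-dimensional vector covering every word in it, whereas here each review is cut to 500 words.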
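
To make the truncation concrete: with its default settings, `pad_sequences` pads short sequences with zeros at the front and, for long ones, drops tokens from the front, so the last `maxlen` tokens survive. The following pure-Python function `pad_pre` is a hypothetical stand-in that mimics only that default behaviour; it is not the Keras implementation.

```python
def pad_pre(sequence, maxlen, value=0):
    """Mimic the default pad_sequences behaviour: pre-padding and pre-truncation."""
    kept = list(sequence)[-maxlen:]               # keep the last maxlen tokens
    return [value] * (maxlen - len(kept)) + kept  # pad with zeros at the front

long_review = [11, 12, 13, 14, 15]  # made-up word indices
short_review = [21, 22]

padded_long = pad_pre(long_review, 3)
padded_short = pad_pre(short_review, 4)
print(padded_long, padded_short)
```

So with maxlen=500, a 700-word review contributes its final 500 words, and a 100-word review is left-padded with 400 zeros.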

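# SimpleRNN, a recurrent neural network (RNN)

# Frequent words: 10000

# Keep 500 words per review

# SimpleRNN is not well suited to long sequences

# In practice it cannot learn long-term dependencies: like a very deep network, it suffers from vanishing gradients

# Remedy for vanishing gradients: store information in a separate module so it can be used later

# LSTM: long short-term memory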

from keras.datasets import imdb

from keras.preprocessing import sequence

from keras.models import Sequential

from keras.layers import Dense,Embedding,SimpleRNN

import matplotlib.pyplot as plt

max_features=10000

maxlen=500

# batch_size=32

(x_train,y_train),(x_test,y_test)=imdb.load_data(num_words=max_features)

x_train=sequence.pad_sequences(x_train,maxlen=maxlen)

x_test=sequence.pad_sequences(x_test,maxlen=maxlen)

model=Sequential()

# No input_length argument here; it is only required when the Embedding layer is followed by Flatten and then Dense layers

model.add(Embedding(max_features,32))

# Output dimension 32

model.add(SimpleRNN(32))

model.add(Dense(1,activation='sigmoid'))

model.compile(optimizer='rmsprop',loss='binary_crossentropy',metrics=['acc'])

history=model.fit(x_train,y_train,epochs=10,batch_size=128,validation_split=0.2,verbose=2)

h=history.history

acc = h['acc']

val_acc = h['val_acc']

loss = h['loss']

val_loss = h['val_loss']

epochs = range(1, len(acc) + 1)

plt.plot(epochs, acc, 'ro', label='Training acc')

plt.plot(epochs, val_acc, 'b^', label='Validation acc')

plt.title('Training and validation accuracy')

plt.legend()

plt.figure()

plt.plot(epochs, loss, 'ro', label='Training loss')

plt.plot(epochs, val_loss, 'b^', label='Validation loss')

plt.title('Training and validation loss')

plt.legend()

plt.show()

# [0.6611378932380676, 0.804]: lower than the 88% from Chapter 3, because each review is cut to 500 words

result=model.evaluate(x_test,y_test,verbose=2)

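print(result)

3.4 Replace SimpleRNN with LSTM (long short-term memory), another kind of RNN layer

Test accuracy rises to 86%, close to the best result so far.

# LSTM, a recurrent neural network (RNN)

# LSTM: long short-term memory, used for tasks such as question answering and machine translation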

from keras.datasets import imdb

from keras.preprocessing import sequence

from keras.models import Sequential

from keras.layers import Dense,Embedding,LSTM

import matplotlib.pyplot as plt

max_features=10000

maxlen=500

(x_train,y_train),(x_test,y_test)=imdb.load_data(num_words=max_features)

x_train=sequence.pad_sequences(x_train,maxlen=maxlen)

x_test=sequence.pad_sequences(x_test,maxlen=maxlen)

model=Sequential()

model.add(Embedding(max_features,32))

# Output dimension 32

model.add(LSTM(32))

model.add(Dense(1,activation='sigmoid'))

model.compile(optimizer='rmsprop',loss='binary_crossentropy',metrics=['acc'])

history=model.fit(x_train,y_train,epochs=10,batch_size=128,validation_split=0.2,verbose=2)

h=history.history

acc = h['acc']

val_acc = h['val_acc']

loss = h['loss']

val_loss = h['val_loss']

epochs = range(1, len(acc) + 1)

plt.plot(epochs, acc, 'ro', label='Training acc')

plt.plot(epochs, val_acc, 'b^', label='Validation acc')

plt.title('Training and validation accuracy')

plt.legend()

plt.figure()

plt.plot(epochs, loss, 'ro', label='Training loss')

plt.plot(epochs, val_loss, 'b^', label='Validation loss')

plt.title('Training and validation loss')

plt.legend()

plt.show()

# [0.46161370715618133, 0.86164]: about 6 percentage points higher than SimpleRNN

result=model.evaluate(x_test,y_test,verbose=2)

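print(result)

3.5 Use GRU, another RNN layer

GRU works on a principle similar to LSTM but is somewhat simpler. Accuracy drops slightly, but training is faster.

For an explanation of how LSTM and GRU work, see the reference article: LSTM与GRU (LSTM and GRU)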
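
One way to see why GRU is lighter than LSTM is to count weights. In the classic formulations, a SimpleRNN has one set of input, recurrent and bias weights, a GRU has three such sets and an LSTM has four. The sketch below applies those textbook formulas to the sizes used in this post (32-dimensional embeddings, 32 units); a particular Keras version may report a slightly different GRU count depending on its bias configuration, so treat the numbers as an approximation.

```python
def gate_block(input_dim, units):
    """Weights for one gate: input kernel + recurrent kernel + bias."""
    return units * input_dim + units * units + units

def simple_rnn_params(input_dim, units):
    return gate_block(input_dim, units)      # a single transformation

def gru_params(input_dim, units):
    return 3 * gate_block(input_dim, units)  # update gate, reset gate, candidate state

def lstm_params(input_dim, units):
    return 4 * gate_block(input_dim, units)  # input, forget, output gates + candidate

input_dim, units = 32, 32
counts = {
    "SimpleRNN": simple_rnn_params(input_dim, units),
    "GRU": gru_params(input_dim, units),
    "LSTM": lstm_params(input_dim, units),
}
print(counts)
```

Under these formulas the GRU has three quarters of the LSTM's recurrent weights, which is consistent with the somewhat shorter training time noted above.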

# Replace LSTM with GRU

# GRU uses only 2 gates, a reset gate and an update gate, so it takes slightly less time

from keras.datasets import imdb

from keras.preprocessing import sequence

from keras.models import Sequential

from keras.layers import Dense,Embedding,GRU

import matplotlib.pyplot as plt

max_features=10000

maxlen=500

(x_train,y_train),(x_test,y_test)=imdb.load_data(num_words=max_features)

x_train=sequence.pad_sequences(x_train,maxlen=maxlen)

x_test=sequence.pad_sequences(x_test,maxlen=maxlen)

model=Sequential()

model.add(Embedding(max_features,32))

model.add(GRU(32))

model.add(Dense(1,activation='sigmoid'))

model.compile(optimizer='rmsprop',loss='binary_crossentropy',metrics=['acc'])

history=model.fit(x_train,y_train,epochs=10,batch_size=128,validation_split=0.2,verbose=2)

h=history.history

acc = h['acc']

val_acc = h['val_acc']

loss = h['loss']

val_loss = h['val_loss']

epochs = range(1, len(acc) + 1)

plt.plot(epochs, acc, 'ro', label='Training acc')

plt.plot(epochs, val_acc, 'b^', label='Validation acc')

plt.title('Training and validation accuracy')

plt.legend()

plt.figure()

plt.plot(epochs, loss, 'ro', label='Training loss')

plt.plot(epochs, val_loss, 'b^', label='Validation loss')

plt.title('Training and validation loss')

plt.legend()

plt.show()

# [0.4026906166577339, 0.85212]: about the same as LSTM

result=model.evaluate(x_test,y_test,verbose=2)

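print(result)

3.6 Reverse the data and test again

Accuracy is still 83%, which suggests that sentiment depends mainly on which words occur and much less on the order in which they appear.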
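
This fits with how well the Dense models of section 2 did: their multi-hot input is order-invariant by construction, so a review and its reversal produce the same vector. A tiny pure-Python check, where `multi_hot` is a hypothetical stand-in for `vectorize_sequences` applied to a single sequence:

```python
def multi_hot(sequence, dimension):
    """Set position idx to 1.0 for every word index idx that occurs."""
    vector = [0.0] * dimension
    for idx in sequence:
        vector[idx] = 1.0
    return vector

review = [3, 7, 1, 7, 4]     # made-up word indices
reversed_review = review[::-1]

same_encoding = multi_hot(review, 10) == multi_hot(reversed_review, 10)
print(same_encoding)
```

A recurrent layer, by contrast, does see a different input when the sequence is reversed, which is what makes the 83% result informative.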

# Reverse the training and test sequences; the result shows the task is not very sensitive to order

from keras.datasets import imdb

from keras.preprocessing import sequence

from keras import layers

from keras.models import Sequential

import matplotlib.pyplot as plt

max_features=10000

maxlen=500

(x_train,y_train),(x_test,y_test)=imdb.load_data(num_words=max_features)

# 1. Reverse

x_train=[x[::-1] for x in x_train]

x_test=[x[::-1] for x in x_test]

x_train=sequence.pad_sequences(x_train,maxlen=maxlen)

x_test=sequence.pad_sequences(x_test,maxlen=maxlen)

model=Sequential()

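# input_dim: vocabulary size (largest input index + 1), i.e. the dimension of the one-hot code

# output_dim: dimension of the vector each word is mapped to

# Embedding is essentially a dictionary acting as a fully connected layer:

# it replaces the product of a one-hot code and the layer's weights with a table lookup, which is faster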

model.add(layers.Embedding(input_dim=max_features,output_dim=32))

model.add(layers.GRU(32))

model.add(layers.Dense(1,activation='sigmoid'))

model.compile(optimizer='rmsprop',loss='binary_crossentropy',metrics=['acc'])

history=model.fit(x_train,y_train,batch_size=128,epochs=10,validation_split=0.2,verbose=2)

h=history.history

acc = h['acc']

val_acc = h['val_acc']

loss = h['loss']

val_loss = h['val_loss']

epochs = range(1, len(acc) + 1)

plt.plot(epochs, acc, 'r.:', label='Training acc')

plt.plot(epochs, val_acc, 'b.:', label='Validation acc')

plt.title('Training and validation accuracy')

plt.legend()

plt.figure()

plt.plot(epochs, loss, 'r.:', label='Training loss')

plt.plot(epochs, val_loss, 'b.:', label='Validation loss')

plt.title('Training and validation loss')

plt.legend()

plt.show()

# [0.47393704013347626, 0.83376]: not far from the 85% of the forward GRU

result=model.evaluate(x_test,y_test,verbose=2)

print(result)

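3.7 Use a bidirectional RNN (Bidirectional)

That is, the data is processed in both directions, forward and backward, at the same time. Judging by the result, it does no better than the forward-only model.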
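
Conceptually, a bidirectional wrapper runs one recurrent pass over the sequence as given and another over the reversed sequence, then joins the two final states, so 32 units per direction give a 64-dimensional output when the states are concatenated. The sketch below shows only that wiring with a toy recurrence; `run_rnn` is a made-up update rule rather than a GRU, and unlike a real bidirectional layer the two directions here share the same rule instead of having separate trainable weights.

```python
import math

def run_rnn(sequence, units):
    """Toy recurrence (not a GRU): each unit mixes its previous state with the input."""
    state = [0.0] * units
    for x in sequence:
        state = [math.tanh(0.5 * s + 0.1 * (k + 1) * x) for k, s in enumerate(state)]
    return state

def bidirectional(sequence, units):
    """Concatenate the final state of a forward pass and a backward pass."""
    forward = run_rnn(sequence, units)
    backward = run_rnn(sequence[::-1], units)
    return forward + backward

sequence = [1.0, -2.0, 0.5]  # made-up inputs
units = 4
output = bidirectional(sequence, units)
print(len(output), output)
```

The output is twice as wide as a single direction, which also roughly doubles the number of recurrent weights to train.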

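# Bidirectional RNNs

# For text tasks such as sentiment analysis, either order may give a similar loss, but reading from both directions can pick up more patterns

# That is, forward and backward training happen at the same time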

from keras.layers import Bidirectional,Dense,Embedding,GRU

from keras.models import Sequential

from keras.preprocessing import sequence

from keras.datasets import imdb

import sys

sys.path.append('..')

# A helper module I wrote for saving and loading data

from utils.acc_loss_from_txt import data_to_text

# import matplotlib.pyplot as plt

max_features=10000

maxlen=500

(x_train,y_train),(x_test,y_test)=imdb.load_data(num_words=max_features)

x_train=sequence.pad_sequences(x_train,maxlen=maxlen)

x_test=sequence.pad_sequences(x_test,maxlen=maxlen)

model=Sequential()

model.add(Embedding(max_features,32))

# 1. Use a bidirectional RNN

model.add(Bidirectional(GRU(32)))

model.add(Dense(1,activation='sigmoid'))

model.compile(optimizer='rmsprop',loss='binary_crossentropy',metrics=['acc'])

history=model.fit(x_train,y_train,batch_size=128,epochs=10,validation_split=0.2,verbose=2)

h=history.history

acc = h['acc']

val_acc = h['val_acc']

loss = h['loss']

val_loss = h['val_loss']

path='./imdb_7.txt'

data_to_text(path,'acc',acc)

data_to_text(path,'val_acc',val_acc)

data_to_text(path,'loss',loss)

data_to_text(path,'val_loss',val_loss)

# [0.4243735435771942, 0.83652]: slightly better than the reversed model, yet still below the forward one?

result=model.evaluate(x_test,y_test,verbose=2)

# print(result)

data_to_text(path,'test result',result)

Here is the helper module I wrote. It saves a model's accuracy and loss values to a text file and reads them back to display as plots.

import matplotlib.pyplot as plt

# Smooth a curve with an exponential moving average
def smooth_curve(points, factor=0.8):
    smoothed_points = []
    for point in points:
        if smoothed_points:
            previous = smoothed_points[-1]
            smoothed_points.append(previous * factor + point * (1 - factor))
        else:
            smoothed_points.append(point)
    return smoothed_points

# Read the data back
def data_from_text(file, smooth=False):
    """
    # Arguments
        file: the file to read the data from
        smooth: whether or not to smooth the curve
    """
    with open(file, encoding='utf-8') as f:
        lines = f.read().split('\n')
    names = []
    result = []
    for line in lines:
        # Skip empty lines
        if line:
            data = line.split(':')
            names.append(data[0])
            # strip('[]') removes the list brackets and also copes with a bare scalar such as a timing value
            a = [float(x) for x in data[1].strip('[]').split(',')]
            if smooth:
                a = smooth_curve(a)
            result.append(a)
    return names, result

# Record the data
def data_to_text(file, desc, data):
    """
    # Arguments
        file: the file to append the data to
        desc: a string describing the data
        data: the data to store, usually a list
    """
    with open(file, 'a+', encoding='utf-8') as f:
        f.write(desc + ':' + str(data) + '\n')

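# Read the data and display it as plots in one step
def data_to_graph(file, smooth=False):
    names, data = data_from_text(file, smooth)
    # Keep only per-epoch curves; entries such as the test result or the timing are skipped
    n_epochs = len(data[0])
    curves = [(n, d) for n, d in zip(names, data) if len(d) == n_epochs]
    # Curves are plotted in pairs, so ignore a trailing unpaired one
    length = len(curves) - len(curves) % 2
    epoches = range(1, n_epochs + 1)
    for i in range(0, length, 2):
        plt.plot(epoches, curves[i][1], 'r.--', label=curves[i][0])
        plt.plot(epoches, curves[i + 1][1], 'b.--', label=curves[i + 1][0])
        plt.legend()
        if i == length - 2:
            plt.show()
        else:
            plt.figure()

3.8 Use 1D convolution and pooling layers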

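Just as images have 2D convolution and pooling layers, text can use 1D convolution and pooling layers. The advantage is very fast training, with one epoch taking only ten-odd seconds. The drawback is that they only capture local sequence patterns, so they do poorly on text where order matters.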
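
To see what "local" means in numbers, it helps to track the sequence length through the stack used in this section: a convolution with window 7 and no padding shortens the sequence by 6, and a max pooling of size 5 with the same stride divides it by 5. A small pure-Python sketch, assuming the "valid" (no padding) settings that these layers use by default; the helper names are illustrative.

```python
def conv1d_valid_length(length, kernel_size):
    """Output length of a 1D convolution without padding and with stride 1."""
    return length - kernel_size + 1

def max_pool1d_length(length, pool_size):
    """Output length of 1D max pooling without padding, stride equal to pool_size."""
    return (length - pool_size) // pool_size + 1

steps = 500                                      # maxlen after padding
after_conv1 = conv1d_valid_length(steps, 7)      # first Conv1D(32, 7)
after_pool = max_pool1d_length(after_conv1, 5)   # MaxPool1D(5)
after_conv2 = conv1d_valid_length(after_pool, 7) # second Conv1D(32, 7)
print(after_conv1, after_pool, after_conv2)
```

Each position after the first convolution only sees 7 consecutive words; the global max pooling that follows then collapses the remaining positions into a single 32-dimensional vector, discarding where in the review a pattern occurred.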

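# 1D convolution and pooling layers train very quickly on text, and the accuracy is still decent

# Validation accuracy is close to 84% at epoch 6 and test accuracy is 82%; GRU reaches 85.2% on the test set, so a gap remains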

from keras.models import Sequential

from keras.layers import Conv1D,Dense,Embedding,MaxPool1D,GlobalMaxPool1D

from keras.optimizers import RMSprop

from keras.preprocessing import sequence

from keras.datasets import imdb

import sys

sys.path.append('..')

from utils.acc_loss_from_txt import data_to_text,data_to_graph

max_features=10000

maxlen=500

(x_train,y_train),(x_test,y_test)=imdb.load_data(num_words=max_features)

x_train=sequence.pad_sequences(x_train,maxlen=maxlen)

x_test=sequence.pad_sequences(x_test,maxlen=maxlen)

model=Sequential()

model.add(Embedding(max_features,128,input_length=maxlen))

# 1D convolution layer

model.add(Conv1D(32,7,activation='relu'))

# 1D max pooling layer

model.add(MaxPool1D(5))

model.add(Conv1D(32,7,activation='relu'))

# Global max pooling layer; a Flatten layer would also work

model.add(GlobalMaxPool1D())

# sigmoid so that binary_crossentropy receives a probability
model.add(Dense(1,activation='sigmoid'))

# The default learning rate is 0.001

model.compile(optimizer=RMSprop(lr=1e-4),loss='binary_crossentropy',metrics=['acc'])

history=model.fit(x_train,y_train,batch_size=128,epochs=10,validation_split=0.2,verbose=2)

h=history.history

loss=h['loss']

val_loss=h['val_loss']

acc=h['acc']

val_acc=h['val_acc']

path='./imdb_8.txt'

data_to_text(path,'loss',loss)

data_to_text(path,'val_loss',val_loss)

data_to_text(path,'acc',acc)

data_to_text(path,'val_acc',val_acc)

result=model.evaluate(x_test,y_test,verbose=2)

data_to_text(path,'test result',result)

data_to_graph(path)

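3.9 Preprocess with 1D convolution layers, then train a GRU

Here I first trained with a validation set, which showed overfitting starting at epoch 6. I then retrained on the full training set for 6 epochs and measured accuracy on the test set. Some of the earlier scripts evaluated an already overfitted model on the test set, which is not proper practice. Still, the accuracy here is lower than the previous one... odd.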
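
The workflow described above can be sketched in a few lines: take the validation loss per epoch from the first run, find the epoch where it is lowest, and use that number of epochs when retraining on the full training set. The values below are made up for illustration and are not the losses from this experiment.

```python
# Made-up validation losses for epochs 1..8 of a first run with a validation split
val_loss = [0.52, 0.41, 0.36, 0.33, 0.32, 0.31, 0.33, 0.36]

# Epochs are numbered from 1, list positions from 0
best_position = min(range(len(val_loss)), key=lambda i: val_loss[i])
best_epoch = best_position + 1

print("retrain on the full training set for", best_epoch, "epochs")
```

In a real run the list would come from `history.history['val_loss']` of the first fit, and the test set would only be evaluated once, after the retraining.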

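# Preprocess with 1D convolution and pooling layers, then train a GRU

# Validation accuracy at epoch 6 is 79%; retraining on the full training set for 6 epochs gives 79% test accuracy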

from keras.models import Sequential

from keras.layers import Conv1D,Dense,Embedding,MaxPool1D,GRU

from keras.optimizers import RMSprop

from keras.preprocessing import sequence

from keras.datasets import imdb

import time

import sys

sys.path.append('..')

from utils.acc_loss_from_txt import data_to_text,data_to_graph

max_features=10000

maxlen=500

(x_train,y_train),(x_test,y_test)=imdb.load_data(num_words=max_features)

x_train=sequence.pad_sequences(x_train,maxlen=maxlen)

x_test=sequence.pad_sequences(x_test,maxlen=maxlen)

model=Sequential()

model.add(Embedding(max_features,128,input_length=maxlen))

model.add(Conv1D(32,7,activation='relu'))

model.add(MaxPool1D(5))

model.add(Conv1D(32,7,activation='relu'))

# 1. Use a GRU after the 1D convolution layers

model.add(GRU(32,dropout=0.2,recurrent_dropout=0.5))

# sigmoid so that binary_crossentropy receives a probability
model.add(Dense(1,activation='sigmoid'))

# The default learning rate is 0.001

model.compile(optimizer=RMSprop(lr=1e-4),loss='binary_crossentropy',metrics=['acc'])

t1=time.time()

history=model.fit(x_train,y_train,batch_size=128,epochs=6,verbose=2)

h=history.history

loss=h['loss']

acc=h['acc']

path='./imdb_9.txt'

data_to_text(path,'loss',loss)

data_to_text(path,'acc',acc)

result=model.evaluate(x_test,y_test,verbose=2)

data_to_text(path,'test_result',result)

t2=time.time()

data_to_text(path,'cost_mins',(t2-t1)/60.)

data_to_graph(path)