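An In-Depth Look at Kubernetes and Enterprise-Grade Deployment in Practice (Part 2)

III. Enterprise-Grade k8s Deployment in Practice

Version installed in this walkthrough: 1.12.3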

System | Hostname | IP address | Installed software
CentOS7.4 X86_64 | k8s-master1 | 192.168.2.101 | kube-apiserver, kube-controller-manager, kube-scheduler, etcd, docker, calico-image
CentOS7.4 X86_64 | k8s-master2 | 192.168.2.102 | kube-apiserver, kube-controller-manager, kube-scheduler, etcd, docker, calico-image
CentOS7.4 X86_64 | k8s-master3 | 192.168.2.103 | kube-apiserver, kube-controller-manager, kube-scheduler, etcd, docker, calico-image
CentOS7.4 X86_64 | k8s-node1 | 192.168.2.104 | kube-proxy, kubelet, etcd, docker, calico
CentOS7.4 X86_64 | k8s-node2 | 192.168.2.105 | kube-proxy, kubelet, etcd, docker, calico
CentOS7.4 X86_64 | k8s-node3 | 192.168.2.106 | kube-proxy, kubelet, etcd, docker, calico

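Preparation

All the operations below are run from k8s-master1, so k8s-master1 must be able to reach every other node over the network. Ansible is used to drive the other machines.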

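① Configure passwordless SSH login from master1 to the other nodes

#ssh-keygen (press Enter at every prompt)

#ssh-copy-id [email protected] (enter that server's password; the same applies to the commands below)

#ssh-copy-id [email protected]

#ssh-copy-id [email protected]

#ssh-copy-id [email protected]

#ssh-copy-id [email protected]

#ssh-copy-id [email protected]

Afterwards it is best to verify: run ssh 192.168.2.102; if you are logged in directly, passwordless login works.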

② On master1, install ansible

#yum install -y ansible

#cat > /etc/ansible/hosts <

③ Disable the firewall and SELinux

#ansible all -m shell -a "systemctl stop firewalld && systemctl disable firewalld"

#ansible all -m shell -a "setenforce 0 && sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config"

④ Disable swap

#ansible all -m shell -a "swapoff -a && sysctl -w vm.swappiness=0"

#ansible all -m shell -a "sed -ri '/^[^#]*swap/s@^@#@' /etc/fstab"
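To see what the sed expression above does before running it on real machines, here is a minimal sketch that applies it to a throwaway file. The sample fstab contents and the variable name fstab_demo are assumptions made for illustration only.

```shell
# Illustrative only: run the same sed expression against a temporary copy of an fstab.
fstab_demo=$(mktemp)
cat > "$fstab_demo" <<'EOF'
/dev/mapper/centos-root /     xfs  defaults 0 0
/dev/mapper/centos-swap swap  swap defaults 0 0
#/dev/sdb1              swap  swap defaults 0 0
EOF

# Comment out every line that mentions swap and is not already commented.
sed -ri '/^[^#]*swap/s@^@#@' "$fstab_demo"

# Count the commented lines and show the result.
commented=$(grep -c '^#' "$fstab_demo")
cat "$fstab_demo"
```

Lines that already start with # are left alone, so running the command twice does not stack extra comment markers.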

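⑤ Upgrade the kernel. On both CentOS and Ubuntu, running docker on an old kernel can trigger a known bug that reports: kernel:unregister_netdevice: waiting for lo to become free. Usage count = 1.

#ansible all -m shell -a "yum install epel-release wget git jq psmisc -y && yum update -y"

Afterwards, reboot all machines: reboot

⑥ Import the elrepo key and install the elrepo repository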

#ansible all -m shell -a "rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org"

#ansible all -m shell -a "rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-2.el7.elrepo.noarch.rpm"

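List the available kernels

#yum --disablerepo="*" --enablerepo="elrepo-kernel" list available --showduplicates

In the ELRepo yum repository, mainline is the newest kernel version; install the kernel as follows.

ipvs depends on the nf_conntrack_ipv4 kernel module. From 4.19 onward the module is named nf_conntrack in the kernel, but the kube-proxy code has no check for this and always uses nf_conntrack_ipv4, so install a kernel older than 4.19 here.

Other kernel versions (RHEL) are available at the following link: http://mirror.rc.usf.edu/compute_lock/elrepo/kernel/el7/x86_64/RPMS/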
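The "older than 4.19" rule can be checked mechanically. The sketch below is one possible way to do it, assuming GNU sort with -V is available; the helper name kernel_older_than_4_19 is hypothetical and not part of any tool used in this article.

```shell
# Hypothetical helper: succeeds when the given kernel version is older than 4.19.
kernel_older_than_4_19() {
  version=${1%%-*}   # drop the release suffix, e.g. 4.18.9-1.el7 -> 4.18.9
  [ "$version" = "4.19" ] && return 1
  # sort -V orders version strings; if ours sorts before 4.19, it is older.
  [ "$(printf '%s\n' "$version" 4.19 | sort -V | head -n1)" = "$version" ]
}

# Report on the kernel that is currently running.
if kernel_older_than_4_19 "$(uname -r)"; then
  echo "kernel is older than 4.19: nf_conntrack_ipv4 is expected to exist"
else
  echo "kernel is 4.19 or newer: nf_conntrack_ipv4 has been renamed"
fi
```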

⑦ Installing a kernel of your choice

#ansible all -m shell -a 'export Kernel_Version=4.18.9-1 && wget http://mirror.rc.usf.edu/compute_lock/elrepo/kernel/el7/x86_64/RPMS/kernel-ml{,-devel}-${Kernel_Version}.el7.elrepo.x86_64.rpm'

#ansible all -m shell -a "yum localinstall -y kernel-ml*"

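Check whether this kernel contains the module

# find /lib/modules -name '*nf_conntrack_ipv4*' -type f

... output:

/lib/modules/4.18.16-1.el7.elrepo.x86_64/kernel/net/ipv4/netfilter/nf_conntrack_ipv4.ko

...

Change the kernel boot order. The default boot entry would otherwise be 1; after the upgrade the new kernel is inserted at the front as entry 0. (If you pick the kernel by hand at every boot, this step can be skipped.)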

#ansible all -m shell -a "grub2-set-default 0 && grub2-mkconfig -o /etc/grub2.cfg"

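Use the following command to confirm that the default kernel points to the one installed above

# grubby --default-kernel

Docker's official kernel check script recommends (RHEL7/CentOS7: User namespaces disabled; add 'user_namespace.enable=1' to boot command line); enable it with the following command

grubby --args="user_namespace.enable=1" --update-kernel="$(grubby --default-kernel)"

Reboot to load the new kernel

reboot

Test loading the module; if it fails, feel free to contact me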

modprobe nf_conntrack_ipv4

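Install ipvs on all machines (ipvs is used from 1.11 onward and improves performance)

Install the dependency packages on every machine

#ansible all -m shell -a "yum install ipvsadm ipset sysstat conntrack libseccomp -y"

On all machines, choose the kernel modules to load at boot. The following modules are required for ipvs mode and are set to load automatically at boot.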

#ansible all -m shell -a ":> /etc/modules-load.d/ipvs.conf"

#ansible all -m shell -a 'module=(ip_vs ip_vs_lc ip_vs_wlc ip_vs_rr ip_vs_wrr ip_vs_lblc ip_vs_lblcr ip_vs_dh ip_vs_sh ip_vs_fo ip_vs_nq ip_vs_sed ip_vs_ftp)

for kernel_module in "${module[@]}"; do

/sbin/modinfo -F filename "$kernel_module" |& grep -qv ERROR && echo "$kernel_module" >> /etc/modules-load.d/ipvs.conf || :

done'

#systemctl enable --now systemd-modules-load.service

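If the systemctl enable command above reports an error, load the modules by hand on each machine, or comment out the modules that cannot be loaded in /etc/modules-load.d/ipvs.conf and try enable again.

All machines need the following kernel parameters in /etc/sysctl.d/k8s.conf.

#ansible all -m shell -a "cat <<EOF > /etc/sysctl.d/k8s.conf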

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

fs.may_detach_mounts = 1

vm.overcommit_memory=1

vm.panic_on_oom=0

fs.inotify.max_user_watches=89100

fs.file-max=52706963

fs.nr_open=52706963

net.netfilter.nf_conntrack_max=2310720

EOF"

# ansible all -m shell -a "sysctl --system"

Check whether the kernel and modules are suitable for running docker (Linux only)

#ansible all -m shell -a "curl https://raw.githubusercontent.com/docker/docker/master/contrib/check-config.sh > check-config.sh"

#bash ./check-config.sh

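All machines need the Docker CE container engine; the year-based docker ce releases are recommended:

To find the docker versions supported by a given K8s release, open the changelog of that release at https://github.com/kubernetes/kubernetes and search for: The list of validated docker versions remain

Here docker's official install script is run once just to add the repo. Then list the available docker versions and pick one that is supported by the k8s version you are installing.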

#ansible all -m shell -a "curl -fsSL "https://get.docker.com/" | bash -s -- --mirror Aliyun && yum autoremove docker-ce -y"

#ansible all -m shell -a "yum list docker-ce --showduplicates | sort -r"

#ansible all -m shell -a "yum install -y docker-ce-"

Configure a registry mirror on all machines:

#ansible all -m shell -a "mkdir -p /etc/docker/"

#ansible all -m shell -a "cat>/etc/docker/daemon.json<

Enable docker at boot. On CentOS, docker command completion has to be set up manually after installation:

#ansible all -m shell -a "yum install -y epel-release bash-completion && cp /usr/share/bash-completion/completions/docker /etc/bash_completion.d/"

#ansible all -m shell -a "systemctl enable --now docker"

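Set the hostname on all machines. If you prefer names such as master1, change them yourself; mine is k8s-master1 as below. Every machine needs this.

#hostnamectl set-hostname k8s-master1

Set the other hosts in the same way; they are not listed here.

All machines need /etc/hosts entries that resolve every host in the cluster.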

#declare -A Allnode

# Allnode=(['k8s-master1']=192.168.2.111 ['k8s-master2']=192.168.2.112 ['k8s-master3']=192.168.2.113 ['k8s-node1']=192.168.2.114 ['k8s-node2']=192.168.2.115 ['k8s-node3']=192.168.2.116)

# for NODE in "${!Allnode[@]}"; do

echo "---$NODE ${Allnode[$NODE]} ---"

ssh root@${Allnode[$NODE]} "echo '#' > /etc/hosts"

for node1 in "${!Allnode[@]}"; do

# /etc/hosts expects the IP address first, then the hostname

a="${Allnode[$node1]} $node1"

echo $a

ssh root@${Allnode[$NODE]} "echo '$a' >> /etc/hosts"

done

done
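For reference, the loop above should leave every machine with one "IP hostname" line per cluster member. A minimal local sketch of that result, written to a temporary file instead of /etc/hosts, might look like this; the two sample entries, the localhost line and the name hosts_demo are assumptions for illustration.

```shell
# Illustrative only: build hosts-style entries from "hostname IP" pairs.
nodes='k8s-master1 192.168.2.111
k8s-node1 192.168.2.114'

hosts_demo=$(mktemp)
printf '127.0.0.1 localhost\n' > "$hosts_demo"

# Swap each pair into the "IP hostname" order that /etc/hosts requires.
printf '%s\n' "$nodes" | while read -r name ip; do
  printf '%s %s\n' "$ip" "$name"
done | sort >> "$hosts_demo"

cat "$hosts_demo"
```

Inspecting the generated file locally first makes it easier to spot a swapped column before the entries are pushed to every node.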

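Once this part is done without errors, you may want to take a snapshot of all machines.

- Declare the cluster information with environment variables

Declare the variables for your own environment. Later steps depend on these environment variables, so re-declare them after an ssh session is dropped (mainly the IPs and a few settings; it is best not to change the paths).

Below, each key is a host's hostname and each value is that host's IP. If you prefer names such as master1, change them yourself; mine is k8s-master1 as below, and the same goes for all machines.

haproxy listens on port 8443 on every master and load-balances to port 6443 of the api-server on each master.

keepalived then keeps the VIP on an available master.

All control-plane components and kubelets access vip:8443, so the apiserver stays reachable even if one master goes down.

In the cloud, replace haproxy and keepalived with a load balancer you are familiar with.

For VIP and INGRESS_VIP, pick unused IPs on the same LAN.

It is recommended to put the following into a file so that it can be reloaded with source after a disconnect.

Declare the cluster members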

declare -A MasterArray otherMaster NodeArray Allnode

MasterArray=(['k8s-master1']=192.168.2.111 ['k8s-master2']=192.168.2.112 ['k8s-master3']=192.168.2.113)

otherMaster=(['k8s-master2']=192.168.2.112 ['k8s-master3']=192.168.2.113)

NodeArray=(['k8s-node1']=192.168.2.114 ['k8s-node2']=192.168.2.115 ['k8s-node3']=192.168.2.116)

Allnode=(['k8s-master1']=192.168.2.111 ['k8s-master2']=192.168.2.112 ['k8s-master3']=192.168.2.113 ['k8s-node1']=192.168.2.114 ['k8s-node2']=192.168.2.115 ['k8s-node3']=192.168.2.116)

export VIP=192.168.2.120

export INGRESS_VIP=192.168.2.121

[ "${#MasterArray[@]}" -eq 1 ] && export VIP=${MasterArray[@]} || export API_PORT=8443

export KUBE_APISERVER=https://${VIP}:${API_PORT:-6443}

# Kubernetes version to install

export KUBE_VERSION=v1.12.3

# Network interface name

export interface=ens160

export K8S_DIR=/etc/kubernetes

export PKI_DIR=${K8S_DIR}/pki

export ETCD_SSL=/etc/etcd/ssl

export MANIFESTS_DIR=/etc/kubernetes/manifests/

# cni

export CNI_URL="https://github.com/containernetworking/plugins/releases/download"

export CNI_VERSION=v0.7.1

# cfssl

export CFSSL_URL="https://pkg.cfssl.org/R1.2"

# etcd

export ETCD_version=v3.3.9
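The VIP and API_PORT lines above decide which address the components will use to reach the apiserver. The sketch below restates that logic as a small function so both cases can be tried side by side; resolve_apiserver is a hypothetical helper name, not something the deployment scripts define.

```shell
# Hypothetical helper: first argument is the VIP, the rest are master IPs.
resolve_apiserver() {
  vip=$1
  shift
  port=6443
  if [ "$#" -eq 1 ]; then
    vip=$1       # single master: talk to it directly on 6443
  else
    port=8443    # several masters: go through haproxy on the VIP
  fi
  echo "https://${vip}:${port}"
}

# One master, then three masters.
resolve_apiserver 192.168.2.120 192.168.2.111
resolve_apiserver 192.168.2.120 192.168.2.111 192.168.2.112 192.168.2.113
```

With one master the VIP is ignored and port 6443 is used directly; with several masters every client goes through the VIP on port 8443.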

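k8s-master1 needs passwordless login to the other machines (otherwise you will have to type passwords by hand in the rest of this article). Alternatively, install sshpass on k8s-master1 and use aliases so that ssh and scp do not prompt for a password; 123456 is the password of all machines here.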

yum install sshpass -y

alias ssh='sshpass -p 123456 ssh -o StrictHostKeyChecking=no'

alias scp='sshpass -p 123456 scp -o StrictHostKeyChecking=no'

First, on k8s-master1, use git to fetch the binary configuration files and yml needed for the deployment

git clone https://github.com/zhangguanzhang/k8s-manual-files.git ~/k8s-manual-files -b bin

cd ~/k8s-manual-files/

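On k8s-master1, download the Kubernetes binaries and then distribute them to the other machines

The following command lists all stable versions (be patient, and make sure github is reachable)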

# curl -s https://zhangguanzhang.github.io/bash/pull.sh | bash -s search gcr.io/google_containers/kube-apiserver-amd64/ |

grep -P 'v[\d.]+$' | sort -t '.' -n -k 2

For those who cannot reach the upstream download sites, all binaries have been uploaded to dockerhub.

# cd ~/k8s-manual-files/

#docker pull zhangguanzhang/k8s_bin:$KUBE_VERSION-full

#docker run --rm -d --name temp zhangguanzhang/k8s_bin:$KUBE_VERSION-full sleep 10

#docker cp temp:/kubernetes-server-linux-amd64.tar.gz .

#tar -zxvf kubernetes-server-linux-amd64.tar.gz --strip-components=3 -C /usr/local/bin kubernetes/server/bin/kube{let,ctl,-apiserver,-controller-manager,-scheduler,-proxy}

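Distribute the master components to the other masters (if you do not want pods to run on the masters, skip copying kubelet and kube-proxy, and skip the later kubelet steps for the master nodes)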

#for NODE in "${!otherMaster[@]}"; do

echo "--- $NODE ${otherMaster[$NODE]} ---"

scp /usr/local/bin/kube{let,ctl,-apiserver,-controller-manager,-scheduler,-proxy} ${otherMaster[$NODE]}:/usr/local/bin/

done

Distribute the kubernetes binaries for the nodes

for NODE in "${!NodeArray[@]}"; do

echo "--- $NODE ${NodeArray[$NODE]} ---"

scp /usr/local/bin/kube{let,-proxy} ${NodeArray[$NODE]}:/usr/local/bin/

done

On k8s-master1, download the Kubernetes CNI binaries and distribute them

mkdir -p /opt/cni/bin

wget "${CNI_URL}/${CNI_VERSION}/cni-plugins-amd64-${CNI_VERSION}.tgz"

tar -zxf cni-plugins-amd64-${CNI_VERSION}.tgz -C /opt/cni/bin

# Distribute the cni files

for NODE in "${!otherMaster[@]}"; do

echo "--- $NODE ${otherMaster[$NODE]} ---"

ssh ${otherMaster[$NODE]} 'mkdir -p /opt/cni/bin'

scp /opt/cni/bin/* ${otherMaster[$NODE]}:/opt/cni/bin/

done

Distribute the cni files to the nodes

#for NODE in "${!NodeArray[@]}"; do

echo "--- $NODE ${NodeArray[$NODE]} ---"

ssh ${NodeArray[$NODE]} 'mkdir -p /opt/cni/bin'

scp /opt/cni/bin/* ${NodeArray[$NODE]}:/opt/cni/bin/

done

CFSSL needs to be installed on k8s-master1; it will be used to create the TLS certificates.

# wget "${CFSSL_URL}/cfssl_linux-amd64" -O /usr/local/bin/cfssl

#wget "${CFSSL_URL}/cfssljson_linux-amd64" -O /usr/local/bin/cfssljson

# chmod +x /usr/local/bin/cfssl /usr/local/bin/cfssljson

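Create the cluster CA keys and certificates

This part generates certificates for several components, including Etcd and the Kubernetes components. Each cluster has a root certificate authority (Root Certificate Authority) that is used to authenticate the API Server and Kubelet certificates.

- PS: note that the CN (Common Name) and O (Organization) fields in the CA JSON files affect authentication of the Kubernetes components.

CN: Common Name; the apiserver extracts this field from the certificate as the user name (User Name) of the request

O: Organization; the apiserver extracts this field from the certificate as the group (Group) the requesting user belongs to

- The CA (Certificate Authority) is a self-signed root certificate used to sign the other certificates created later.

- This document uses cfssl, CloudFlare's PKI toolkit, to create all certificates.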
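To make the CN and O fields concrete, the following sketch creates a throwaway self-signed certificate with openssl (not cfssl, and not one of the cluster certificates) and prints its subject. The subject values and file names are assumptions chosen to mirror the admin certificate described later.

```shell
# Illustrative only: a throwaway certificate, unrelated to the cluster CA.
workdir=$(mktemp -d)

# O becomes the group and CN becomes the user name seen by the apiserver.
openssl req -x509 -newkey rsa:2048 -nodes -days 1 \
  -subj "/O=system:masters/CN=kubernetes-admin" \
  -keyout "$workdir/demo-key.pem" -out "$workdir/demo.pem" 2>/dev/null

# Print the subject so the two fields can be inspected.
subject=$(openssl x509 -in "$workdir/demo.pem" -noout -subject)
echo "$subject"
```

The same openssl x509 -noout -subject command can be pointed at any .pem file generated later in this article to check which user and group it maps to.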

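Etcd: the key/value store that holds all cluster state. All Kubernetes components go through the API Server to talk to Etcd in order to save or read resource state.

Here etcd runs on the masters. If resources allow, it can run on separate machines, but then you need to know how to point the apiserver at that etcd cluster.

If etcd is exposed to the public network without TLS, others can inject data into it. Typically such clusters end up with a few extra mining pods, just as a docker daemon with remote access enabled and no TLS gets abused.

First create the /etc/etcd/ssl directory on k8s-master1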

#cd ~/k8s-manual-files/pki

mkdir -p ${ETCD_SSL}

Generate the etcd CA key and certificate from the CSR json files ca-config.json and etcd-ca-csr.json:

#cfssl gencert -initca etcd-ca-csr.json | cfssljson -bare ${ETCD_SSL}/etcd-ca

Generate the Etcd certificate:

#cfssl gencert \

-ca=${ETCD_SSL}/etcd-ca.pem \

-ca-key=${ETCD_SSL}/etcd-ca-key.pem \

-config=ca-config.json \

-hostname=127.0.0.1,$(xargs -n1<<<${MasterArray[@]} | sort | paste -d, -s -) \

-profile=kubernetes \

etcd-csr.json | cfssljson -bare ${ETCD_SSL}/etcd

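- The -hostname value lists the IPs of all master nodes. If you plan to add masters later, reserve extra IPs in the certificate here.

When done, delete the unneeded files and confirm that /etc/etcd/ssl contains the following files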

rm -rf ${ETCD_SSL}/*.csr

ls $ETCD_SSL

etcd-ca-key.pem etcd-ca.pem etcd-key.pem etcd.pem

On k8s-master1, copy the files to the other Etcd nodes. Here etcd runs on all master nodes, so the etcd certificates are copied to the other master nodes:

#for NODE in "${!otherMaster[@]}"; do

echo "--- $NODE ${otherMaster[$NODE]} ---"

ssh ${otherMaster[$NODE]} "mkdir -p ${ETCD_SSL}"

for FILE in etcd-ca-key.pem etcd-ca.pem etcd-key.pem etcd.pem; do

scp ${ETCD_SSL}/${FILE} ${otherMaster[$NODE]}:${ETCD_SSL}/${FILE}

done

done

All official etcd releases are listed at the URL below

https://github.com/etcd-io/etcd/releases

Download the etcd binaries on k8s-master1; for a single-node setup v3.1.9 is recommended

[ "${#MasterArray[@]}" -eq 1 ] && ETCD_version=v3.1.9 || :

cd ~/k8s-manual-files

If the direct download below fails, use the same workaround as before: pull the docker image and cp the binaries out

wget https://github.com/etcd-io/etcd/releases/download/${ETCD_version}/etcd-${ETCD_version}-linux-amd64.tar.gz

tar -zxvf etcd-${ETCD_version}-linux-amd64.tar.gz --strip-components=1 -C /usr/local/bin etcd-${ETCD_version}-linux-amd64/etcd{,ctl}

#-------

# If the download above is blocked, use this workaround

docker pull quay.io/coreos/etcd:$ETCD_version

docker run --rm -d --name temp quay.io/coreos/etcd:$ETCD_version sleep 10

docker cp temp:/usr/local/bin/etcd /usr/local/bin

docker cp temp:/usr/local/bin/etcdctl /usr/local/bin

On k8s-master1, distribute the etcd binaries to the other masters

#for NODE in "${!otherMaster[@]}"; do

echo "--- $NODE ${otherMaster[$NODE]} ---"

scp /usr/local/bin/etcd* ${otherMaster[$NODE]}:/usr/local/bin/

done

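On k8s-master1, prepare the etcd configuration file and distribute the related files

The configuration file is stored at /etc/etcd/etcd.config.yml

Inject the base variables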

cd ~/k8s-manual-files/master/

etcd_servers=$( xargs -n1<<<${MasterArray[@]} | sort | sed 's#^#https://#;s#$#:2379#;$s#\n##' | paste -d, -s - )

etcd_initial_cluster=$( for i in ${!MasterArray[@]};do echo $i=https://${MasterArray[$i]}:2380; done | sort | paste -d, -s - )

sed -ri "/initial-cluster:/s#'.+'#'${etcd_initial_cluster}'#" etc/etcd/config.yml
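The two pipelines above are dense, so it can help to see what they produce. This sketch rebuilds the same two strings from a plain "hostname IP" list instead of the MasterArray associative array (an assumption made only to keep the example portable); the addresses are the ones declared earlier.

```shell
# Illustrative only: the three masters as "hostname IP" pairs.
masters='k8s-master1 192.168.2.111
k8s-master2 192.168.2.112
k8s-master3 192.168.2.113'

# Client endpoints (port 2379), comma separated.
etcd_servers=$(printf '%s\n' "$masters" | awk '{print $2}' | sort \
  | sed 's#^#https://#;s#$#:2379#' | paste -d, -s -)

# Peer endpoints (port 2380) in name=url form, comma separated.
etcd_initial_cluster=$(printf '%s\n' "$masters" \
  | awk '{print $1 "=https://" $2 ":2380"}' | sort | paste -d, -s -)

echo "$etcd_servers"
echo "$etcd_initial_cluster"
```

The first string is what the apiserver and etcdctl use as endpoints; the second is what goes into the initial-cluster field of the etcd configuration.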

Distribute the systemd unit and configuration files

for NODE in "${!MasterArray[@]}"; do

echo "--- $NODE ${MasterArray[$NODE]} ---"

ssh ${MasterArray[$NODE]} "mkdir -p $MANIFESTS_DIR /etc/etcd /var/lib/etcd"

scp systemd/etcd.service ${MasterArray[$NODE]}:/usr/lib/systemd/system/etcd.service

scp etc/etcd/config.yml ${MasterArray[$NODE]}:/etc/etcd/etcd.config.yml

ssh ${MasterArray[$NODE]} "sed -i "s/{HOSTNAME}/$NODE/g" /etc/etcd/etcd.config.yml"

ssh ${MasterArray[$NODE]} "sed -i "s/{PUBLIC_IP}/${MasterArray[$NODE]}/g" /etc/etcd/etcd.config.yml"

ssh ${MasterArray[$NODE]} 'systemctl daemon-reload'

done

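Start etcd on all masters from k8s-master1

When an etcd process starts for the first time it waits for the other etcd members to join the cluster, so systemctl start etcd hangs for a while; this is normal.

Once all of them are started, use the etcdctl commands further below to check that the cluster is healthy.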

for NODE in "${!MasterArray[@]}"; do

echo "--- $NODE ${MasterArray[$NODE]} ---"

ssh ${MasterArray[$NODE]} 'systemctl enable --now etcd' &

done

wait

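When output is printed to the terminal, press Enter a few times until the prompt returns.

Run the following commands on k8s-master1 to verify the ETCD cluster state; the second one uses the v3 API to query the cluster's keys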

etcdctl \

--cert-file /etc/etcd/ssl/etcd.pem \

--key-file /etc/etcd/ssl/etcd-key.pem \

--ca-file /etc/etcd/ssl/etcd-ca.pem \

--endpoints $etcd_servers cluster-health

... output:

member 4f15324b6756581c is healthy: got healthy result from https://192.168.88.111:2379

member cce1303a6b6dd443 is healthy: got healthy result from https://192.168.88.112:2379

member ead42f3e6c9bb295 is healthy: got healthy result from https://192.168.88.113:2379

cluster is healthy

ETCDCTL_API=3 \

etcdctl \

--cert=/etc/etcd/ssl/etcd.pem \

--key=/etc/etcd/ssl/etcd-key.pem \

--cacert /etc/etcd/ssl/etcd-ca.pem \

--endpoints $etcd_servers get / --prefix --keys-only

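For security, the kubernetes components use x509 certificates to encrypt and authenticate their communication.

On k8s-master1, create the pki directory and generate the root CA certificate used to sign the other k8s certificates.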

mkdir -p ${PKI_DIR}

cd ~/k8s-manual-files/pki

cfssl gencert -initca ca-csr.json | cfssljson -bare ${PKI_DIR}/ca

ls ${PKI_DIR}/ca*.pem

/etc/kubernetes/pki/ca-key.pem /etc/kubernetes/pki/ca.pem

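The kubectl parameters used below have the following meaning

--certificate-authority: the root certificate used for verification;

--client-certificate, --client-key: the generated component certificate and private key, used when connecting to kube-apiserver

--embed-certs=true: embed the contents of ca.pem and the component .pem certificate into the generated kubeconfig file (without it, the certificate file paths are written instead)

API Server Certificate

This certificate is used for communication between the API Server and the Kubelet client. Generate the kube-apiserver certificate with the following command: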

cfssl gencert \

-ca=${PKI_DIR}/ca.pem \

-ca-key=${PKI_DIR}/ca-key.pem \

-config=ca-config.json \

-hostname=10.96.0.1,${VIP},127.0.0.1,kubernetes,kubernetes.default,kubernetes.default.svc,kubernetes.default.svc.cluster,kubernetes.default.svc.cluster.local,$(xargs -n1<<<${MasterArray[@]} | sort | paste -d, -s -) \

-profile=kubernetes \

apiserver-csr.json | cfssljson -bare ${PKI_DIR}/apiserver

ls ${PKI_DIR}/apiserver*.pem

/etc/kubernetes/pki/apiserver-key.pem /etc/kubernetes/pki/apiserver.pem

Front Proxy Certificate

This certificate is used by the Authenticating Proxy feature, which mainly provides authentication for API Aggregation. Generate the CA with the following command:

cfssl gencert \

-initca front-proxy-ca-csr.json | cfssljson -bare ${PKI_DIR}/front-proxy-ca

ls ${PKI_DIR}/front-proxy-ca*.pem

/etc/kubernetes/pki/front-proxy-ca-key.pem /etc/kubernetes/pki/front-proxy-ca.pem

Next generate the front-proxy-client certificate (the hosts warning can be ignored):

cfssl gencert \

-ca=${PKI_DIR}/front-proxy-ca.pem \

-ca-key=${PKI_DIR}/front-proxy-ca-key.pem \

-config=ca-config.json \

-profile=kubernetes \

front-proxy-client-csr.json | cfssljson -bare ${PKI_DIR}/front-proxy-client

ls ${PKI_DIR}/front-proxy-client*.pem

front-proxy-client-key.pem front-proxy-client.pem

Controller Manager Certificate

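The certificate creates the user system:kube-controller-manager (the certificate CN), which is bound to the RBAC Cluster Role system:kube-controller-manager so that the Controller Manager component can access the API objects it needs.

Generate the Controller Manager certificate with the following command (the hosts warning can be ignored):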

cfssl gencert \

-ca=${PKI_DIR}/ca.pem \

-ca-key=${PKI_DIR}/ca-key.pem \

-config=ca-config.json \

-profile=kubernetes \

manager-csr.json | cfssljson -bare ${PKI_DIR}/controller-manager

ls ${PKI_DIR}/controller-manager*.pem

controller-manager-key.pem controller-manager.pem

Next use kubectl to generate the kubeconfig file for the Controller Manager:

# controller-manager set cluster

kubectl config set-cluster kubernetes \

--certificate-authority=${PKI_DIR}/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=${K8S_DIR}/controller-manager.kubeconfig

# controller-manager set credentials

kubectl config set-credentials system:kube-controller-manager \

--client-certificate=${PKI_DIR}/controller-manager.pem \

--client-key=${PKI_DIR}/controller-manager-key.pem \

--embed-certs=true \

--kubeconfig=${K8S_DIR}/controller-manager.kubeconfig

# controller-manager set context

kubectl config set-context system:kube-controller-manager@kubernetes \

--cluster=kubernetes \

--user=system:kube-controller-manager \

--kubeconfig=${K8S_DIR}/controller-manager.kubeconfig

# controller-manager set default context

kubectl config use-context system:kube-controller-manager@kubernetes \

--kubeconfig=${K8S_DIR}/controller-manager.kubeconfig

Scheduler Certificate

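The certificate creates the user system:kube-scheduler (the certificate CN), which is bound to the RBAC Cluster Role system:kube-scheduler so that the Scheduler component can access the API objects it needs.

Generate the Scheduler certificate with the following command (the hosts warning can be ignored):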

cfssl gencert \

-ca=${PKI_DIR}/ca.pem \

-ca-key=${PKI_DIR}/ca-key.pem \

-config=ca-config.json \

-profile=kubernetes \

scheduler-csr.json | cfssljson -bare ${PKI_DIR}/scheduler

ls ${PKI_DIR}/scheduler*.pem

/etc/kubernetes/pki/scheduler-key.pem /etc/kubernetes/pki/scheduler.pem

Next use kubectl to generate the kubeconfig file for the Scheduler:

# scheduler set cluster

kubectl config set-cluster kubernetes \

--certificate-authority=${PKI_DIR}/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=${K8S_DIR}/scheduler.kubeconfig

# scheduler set credentials

kubectl config set-credentials system:kube-scheduler \

--client-certificate=${PKI_DIR}/scheduler.pem \

--client-key=${PKI_DIR}/scheduler-key.pem \

--embed-certs=true \

--kubeconfig=${K8S_DIR}/scheduler.kubeconfig

# scheduler set context

kubectl config set-context system:kube-scheduler@kubernetes \

--cluster=kubernetes \

--user=system:kube-scheduler \

--kubeconfig=${K8S_DIR}/scheduler.kubeconfig

# scheduler use default context

kubectl config use-context system:kube-scheduler@kubernetes \

--kubeconfig=${K8S_DIR}/scheduler.kubeconfig

Admin Certificate

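Admin is bound to the RBAC Cluster Role cluster-admin. Whenever you want to operate on all Kubernetes cluster functions (most commonly with kubectl), you must use the kubeconfig file generated here.

In admin-csr.json:

O is system:masters; when kube-apiserver receives this certificate it sets the request's Group to system:masters

The predefined ClusterRoleBinding cluster-admin binds Group system:masters to Role cluster-admin, which grants access to all APIs

This certificate is only used by kubectl as a client certificate, so the hosts field is empty or omitted

Generate the Kubernetes Admin certificate with the following command (the hosts warning can be ignored):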

cfssl gencert \

-ca=${PKI_DIR}/ca.pem \

-ca-key=${PKI_DIR}/ca-key.pem \

-config=ca-config.json \

-profile=kubernetes \

admin-csr.json | cfssljson -bare ${PKI_DIR}/admin

ls ${PKI_DIR}/admin*.pem

/etc/kubernetes/pki/admin-key.pem /etc/kubernetes/pki/admin.pem

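By default kubectl reads the kube-apiserver address, certificates, user name and other information from ~/.kube/config. If that file is not configured, kubectl commands may fail (because kubectl falls back to the anonymous port 8080).

Next use kubectl to generate the Admin kubeconfig file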

# admin set cluster

kubectl config set-cluster kubernetes \

--certificate-authority=${PKI_DIR}/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=${K8S_DIR}/admin.kubeconfig

# admin set credentials

kubectl config set-credentials kubernetes-admin \

--client-certificate=${PKI_DIR}/admin.pem \

--client-key=${PKI_DIR}/admin-key.pem \

--embed-certs=true \

--kubeconfig=${K8S_DIR}/admin.kubeconfig

# admin set context

kubectl config set-context kubernetes-admin@kubernetes \

--cluster=kubernetes \

--user=kubernetes-admin \

--kubeconfig=${K8S_DIR}/admin.kubeconfig

# admin set default context

kubectl config use-context kubernetes-admin@kubernetes \

--kubeconfig=${K8S_DIR}/admin.kubeconfig

Master Kubelet Certificate

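Here the Node authorizer is used to let each node's kubelet access APIs such as services and endpoints. The Node authorizer requires the certificate to be in the system:nodes group (the certificate's Organization) and to carry a user name of the form system:node:<nodeName> (the certificate's Common Name).

First, on k8s-master1, generate the kubelet certificates for all master nodes with the following command: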

for NODE in "${!MasterArray[@]}"; do

echo "--- $NODE ---"

\cp kubelet-csr.json kubelet-$NODE-csr.json;

sed -i "s/\$NODE/$NODE/g" kubelet-$NODE-csr.json;

cfssl gencert \

-ca=${PKI_DIR}/ca.pem \

-ca-key=${PKI_DIR}/ca-key.pem \

-config=ca-config.json \

-hostname=$NODE \

-profile=kubernetes \

kubelet-$NODE-csr.json | cfssljson -bare ${PKI_DIR}/kubelet-$NODE;

rm -f kubelet-$NODE-csr.json

done

ls ${PKI_DIR}/kubelet*.pem

/etc/kubernetes/pki/kubelet-k8s-m1-key.pem /etc/kubernetes/pki/kubelet-k8s-m2.pem

/etc/kubernetes/pki/kubelet-k8s-m1.pem /etc/kubernetes/pki/kubelet-k8s-m3-key.pem

/etc/kubernetes/pki/kubelet-k8s-m2-key.pem /etc/kubernetes/pki/kubelet-k8s-m3.pem

When done, copy the kubelet certificates to all master nodes:

for NODE in "${!MasterArray[@]}"; do

echo "--- $NODE ${MasterArray[$NODE]} ---"

ssh ${MasterArray[$NODE]} "mkdir -p ${PKI_DIR}"

scp ${PKI_DIR}/ca.pem ${MasterArray[$NODE]}:${PKI_DIR}/ca.pem

scp ${PKI_DIR}/kubelet-$NODE-key.pem ${MasterArray[$NODE]}:${PKI_DIR}/kubelet-key.pem

scp ${PKI_DIR}/kubelet-$NODE.pem ${MasterArray[$NODE]}:${PKI_DIR}/kubelet.pem

rm -f ${PKI_DIR}/kubelet-$NODE-key.pem ${PKI_DIR}/kubelet-$NODE.pem

done

Next, run the following on k8s-master1 to generate the kubelet kubeconfig file for every master:

for NODE in "${!MasterArray[@]}"; do

echo "--- $NODE ---"

ssh ${MasterArray[$NODE]} "cd ${PKI_DIR} && \

kubectl config set-cluster kubernetes \

--certificate-authority=${PKI_DIR}/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=${K8S_DIR}/kubelet.kubeconfig && \

kubectl config set-credentials system:node:${NODE} \

--client-certificate=${PKI_DIR}/kubelet.pem \

--client-key=${PKI_DIR}/kubelet-key.pem \

--embed-certs=true \

--kubeconfig=${K8S_DIR}/kubelet.kubeconfig && \

kubectl config set-context system:node:${NODE}@kubernetes \

--cluster=kubernetes \

--user=system:node:${NODE} \

--kubeconfig=${K8S_DIR}/kubelet.kubeconfig && \

kubectl config use-context system:node:${NODE}@kubernetes \

--kubeconfig=${K8S_DIR}/kubelet.kubeconfig"

done

Service Account Key

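The Kubernetes Controller Manager uses a key pair to generate and sign Service Account tokens. This is not authenticated through a CA; instead a public/private key pair is created for the API Server and the Controller Manager to use:

Run the following on k8s-master1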

openssl genrsa -out ${PKI_DIR}/sa.key 2048

openssl rsa -in ${PKI_DIR}/sa.key -pubout -out ${PKI_DIR}/sa.pub

ls ${PKI_DIR}/sa.*

/etc/kubernetes/pki/sa.key /etc/kubernetes/pki/sa.pub
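The split between sa.key and sa.pub follows the usual sign-and-verify pattern: the holder of the private key signs, and anyone with the public key can check the signature. The sketch below demonstrates that pattern with a throwaway key pair and an arbitrary payload; it does not produce a real Service Account token, and the file names are assumptions.

```shell
# Illustrative only: a temporary key pair, not the cluster's sa.key / sa.pub.
workdir=$(mktemp -d)
openssl genrsa -out "$workdir/sa.key" 2048 2>/dev/null
openssl rsa -in "$workdir/sa.key" -pubout -out "$workdir/sa.pub" 2>/dev/null

# Sign a sample payload with the private key (the signing side).
printf 'demo-token-payload' > "$workdir/payload"
openssl dgst -sha256 -sign "$workdir/sa.key" \
  -out "$workdir/payload.sig" "$workdir/payload"

# Verify it with the public key only (the verifying side).
verify_result=$(openssl dgst -sha256 -verify "$workdir/sa.pub" \
  -signature "$workdir/payload.sig" "$workdir/payload")
echo "$verify_result"
```

This is why sa.key must be kept private on the masters, while sa.pub is enough for the verifying side.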

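Once everything is prepared, the files that are no longer needed can be deleted. Only do this if you are familiar with the certificate workflow; beginners are advised to keep these files: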

rm -f ${PKI_DIR}/*.csr \

${PKI_DIR}/scheduler*.pem \

${PKI_DIR}/controller-manager*.pem \

${PKI_DIR}/admin*.pem \

${PKI_DIR}/kubelet*.pem

Copy files to the other nodes

Copy the certificate files to the other master nodes:

for NODE in "${!otherMaster[@]}"; do

echo "--- $NODE ${otherMaster[$NODE]}---"

for FILE in $(ls ${PKI_DIR}); do

scp ${PKI_DIR}/${FILE} ${otherMaster[$NODE]}:${PKI_DIR}/${FILE}

done

done

Copy the Kubernetes config files to the other master nodes:

for NODE in "${!otherMaster[@]}"; do

echo "--- $NODE ${otherMaster[$NODE]}---"

for FILE in admin.kubeconfig controller-manager.kubeconfig scheduler.kubeconfig; do

scp ${K8S_DIR}/${FILE} ${otherMaster[$NODE]}:${K8S_DIR}/${FILE}

done

done

HA (haproxy+keepalived): skip HA if you have a single master

First install haproxy+keepalived on all masters; press Enter a few times if there is no output

for NODE in "${!MasterArray[@]}"; do

echo "--- $NODE ${MasterArray[$NODE]} ---"

ssh ${MasterArray[$NODE]} 'yum install haproxy keepalived -y' &

done

wait

On k8s-master1, prepare the configuration files and then distribute them

cd ~/k8s-manual-files/master/etc

# Modify the haproxy.cfg configuration file

sed -i '$r '<(paste <( seq -f' server k8s-api-%g' ${#MasterArray[@]} ) <( xargs -n1<<<${MasterArray[@]} | sort | sed 's#$#:6443 check#')) haproxy/haproxy.cfg

# Modify keepalived (write in the interface name and the VIP with the commands below)

sed -ri "s#\{\{ VIP \}\}#${VIP}#" keepalived/*

sed -ri "s#\{\{ interface \}\}#${interface}#" keepalived/keepalived.conf

sed -i '/unicast_peer/r '<(xargs -n1<<<${MasterArray[@]} | sort | sed 's#^#\t#') keepalived/keepalived.conf

# Distribute the files

for NODE in "${!MasterArray[@]}"; do

echo "--- $NODE ${MasterArray[$NODE]} ---"

scp -r haproxy/ ${MasterArray[$NODE]}:/etc

scp -r keepalived/ ${MasterArray[$NODE]}:/etc

ssh ${MasterArray[$NODE]} 'systemctl enable --now haproxy keepalived'

done
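The sed command that edits haproxy.cfg above appends one backend line per master. As a rough sketch of what those appended lines look like, the snippet below prints them for the three masters declared earlier; the exact indentation in the real haproxy.cfg may differ, and master_ips and backend_lines are names made up for this example.

```shell
# Illustrative only: the master IPs as a plain space-separated list.
master_ips="192.168.2.113 192.168.2.111 192.168.2.112"

# Number the sorted IPs and emit one haproxy "server" line for each.
backend_lines=$(printf '%s\n' $master_ips | sort | {
  index=0
  while read -r ip; do
    index=$((index + 1))
    printf '    server k8s-api-%d %s:6443 check\n' "$index" "$ip"
  done
})

echo "$backend_lines"
```

Each line points haproxy at port 6443 of one apiserver, while haproxy itself listens on 8443 as described earlier.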

Ping the VIP to check that it is reachable; wait four or five seconds first for keepalived and haproxy to come up

ping $VIP

If the VIP does not come up, keepalived has not started. Restart keepalived on each node, or check that the interface name and IP were injected correctly in /etc/keepalived/keepalived.conf

for NODE in "${!MasterArray[@]}"; do

echo "--- $NODE ${MasterArray[$NODE]} ---"

ssh ${MasterArray[$NODE]} 'systemctl restart haproxy keepalived'

done

On k8s-master1, prepare the master component configuration files and then distribute them

cd ~/k8s-manual-files/master/

etcd_servers=$( xargs -n1<<<${MasterArray[@]} | sort | sed 's#^#https://#;s#$#:2379#;$s#\n##' | paste -d, -s - )

# Inject the VIP and etcd_servers

sed -ri '/--advertise-address/s#=.+#='"$VIP"' \\#' systemd/kube-apiserver.service

sed -ri '/--etcd-servers/s#=.+#='"$etcd_servers"' \\#' systemd/kube-apiserver.service

# Modify encryption.yml

ENCRYPT_SECRET=$( head -c 32 /dev/urandom | base64 )

sed -ri "/secret:/s#(: ).+#\1${ENCRYPT_SECRET}#" encryption/config.yml

# Distribute the files (skip the kubelet configuration files if you do not want pods on the masters)

for NODE in "${!MasterArray[@]}"; do

echo "--- $NODE ${MasterArray[$NODE]} ---"

ssh ${MasterArray[$NODE]} "mkdir -p $MANIFESTS_DIR /etc/systemd/system/kubelet.service.d /var/lib/kubelet /var/log/kubernetes"

scp systemd/kube-*.service ${MasterArray[$NODE]}:/usr/lib/systemd/system/

scp encryption/config.yml ${MasterArray[$NODE]}:/etc/kubernetes/encryption.yml

scp audit/policy.yml ${MasterArray[$NODE]}:/etc/kubernetes/audit-policy.yml

scp systemd/kubelet.service ${MasterArray[$NODE]}:/lib/systemd/system/kubelet.service

scp systemd/10-kubelet.conf ${MasterArray[$NODE]}:/etc/systemd/system/kubelet.service.d/10-kubelet.conf

scp etc/kubelet/kubelet-conf.yml ${MasterArray[$NODE]}:/etc/kubernetes/kubelet-conf.yml

done

From k8s-master1, start the kubelet service on all masters and set up the kubectl completion script:

for NODE in "${!MasterArray[@]}"; do

echo "--- $NODE ${MasterArray[$NODE]} ---"

ssh ${MasterArray[$NODE]} 'systemctl enable --now kubelet kube-apiserver kube-controller-manager kube-scheduler;

mkdir -p ~/.kube; cp /etc/kubernetes/admin.kubeconfig ~/.kube/config;

kubectl completion bash > /etc/bash_completion.d/kubectl'

done

When done, verify with a few simple commands on any master node:

# kubectl get cs

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-2 Healthy {"health": "true"}

etcd-0 Healthy {"health": "true"}

etcd-1 Healthy {"health": "true"}

# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 443/TCP 36s

# kubectl get node

k8s-master1 NotReady master 25d v1.12.3

k8s-master2 NotReady master 25d v1.12.3

k8s-master3 NotReady master 25d v1.12.3

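Create the TLS Bootstrapping RBAC and Secret

Because TLS authentication is enabled in this installation, the kubelet on every node must use a certificate signed by the kube-apiserver CA before it can talk to kube-apiserver. Signing a certificate by hand for each node is tedious, and it becomes hard to manage as nodes are added. TLS bootstrapping solves this: the kubelet first connects to kube-apiserver as a predefined low-privilege user and then requests a signed certificate from kube-apiserver; when the authorization token matches, the node's kubelet certificate is signed and issued dynamically through kube-apiserver. For details, see TLS Bootstrapping and Authenticating with Bootstrap Tokens.

The kubectl commands from here on only need to be run on any one master

First, on k8s-master1, set variables to generate BOOTSTRAP_TOKEN, and create the bootstrap-kubelet.conf Kubernetes config file: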

export TOKEN_ID=$(openssl rand -hex 3)

export TOKEN_SECRET=$(openssl rand -hex 8)

export BOOTSTRAP_TOKEN=${TOKEN_ID}.${TOKEN_SECRET}
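A bootstrap token has the form of a 6-character ID and a 16-character secret joined by a dot, which is why 3 and 8 random bytes are requested in hex. The sketch below generates a token the same way and checks its shape; token_ok is a name introduced only for this example.

```shell
# Illustrative only: generate a token and check that it has the expected shape.
TOKEN_ID=$(openssl rand -hex 3)       # 3 bytes  -> 6 hex characters
TOKEN_SECRET=$(openssl rand -hex 8)   # 8 bytes  -> 16 hex characters
BOOTSTRAP_TOKEN="${TOKEN_ID}.${TOKEN_SECRET}"

# Expected pattern: [a-z0-9]{6}.[a-z0-9]{16}
if printf '%s' "$BOOTSTRAP_TOKEN" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'; then
  token_ok=yes
else
  token_ok=no
fi
echo "token format valid: $token_ok"
```

If the format check fails, the Secret created from the token in the next step is unlikely to be accepted as a bootstrap token.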

# bootstrap set cluster

kubectl config set-cluster kubernetes \

--certificate-authority=${PKI_DIR}/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=${K8S_DIR}/bootstrap-kubelet.kubeconfig

# bootstrap set credentials

kubectl config set-credentials tls-bootstrap-token-user \

--token=${BOOTSTRAP_TOKEN} \

--kubeconfig=${K8S_DIR}/bootstrap-kubelet.kubeconfig

# bootstrap set context

kubectl config set-context tls-bootstrap-token-user@kubernetes \

--cluster=kubernetes \

--user=tls-bootstrap-token-user \

--kubeconfig=${K8S_DIR}/bootstrap-kubelet.kubeconfig

# bootstrap use default context

kubectl config use-context tls-bootstrap-token-user@kubernetes \

--kubeconfig=${K8S_DIR}/bootstrap-kubelet.kubeconfig

Next, on k8s-master1, create the TLS bootstrap secret used for automatic certificate signing:

cd ~/k8s-manual-files/master

# Inject the variables

sed -ri "s#\{TOKEN_ID\}#${TOKEN_ID}#g" resources/bootstrap-token-Secret.yml

sed -ri "/token-id/s#\S+\$#'&'#" resources/bootstrap-token-Secret.yml

sed -ri "s#\{TOKEN_SECRET\}#${TOKEN_SECRET}#g" resources/bootstrap-token-Secret.yml

kubectl create -f resources/bootstrap-token-Secret.yml

# output:

secret "bootstrap-token-65a3a9" created

On k8s-master1, create the TLS Bootstrap Autoapprove RBAC to handle CSRs automatically:

kubectl apply -f resources/kubelet-bootstrap-rbac.yml

# output:

clusterrolebinding.rbac.authorization.k8s.io "kubelet-bootstrap" created

clusterrolebinding.rbac.authorization.k8s.io "node-autoapprove-bootstrap" created

clusterrolebinding.rbac.authorization.k8s.io "node-autoapprove-certificate-rotation" created

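At this point kubectl logs returns 403 Forbidden. This is expected, because the kube-apiserver user has no permission to access the nodes resource.

kubectl logs is needed for convenient cluster management, but because of the API permissions an RBAC Role has to be created to grant access. Run the following command on k8s-master1 to create it: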

kubectl apply -f resources/apiserver-to-kubelet-rbac.yml

# output:

clusterrole.rbac.authorization.k8s.io "system:kube-apiserver-to-kubelet" configured

clusterrolebinding.rbac.authorization.k8s.io "system:kube-apiserver" configured

Add a taint to the master nodes so that pods which do not declare a toleration for it are not scheduled on the masters:

kubectl taint nodes node-role.kubernetes.io/master="":NoSchedule --all

# output:

node "k8s-master1" tainted

node "k8s-master2" tainted

node "k8s-master3" tainted

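Kubernetes Nodes

This part explains how to create and configure the Kubernetes Node role. Nodes are the worker nodes that mainly run the container instances (Pods).

Before starting the deployment, copy the required files from k8s-master1 to all node machines: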

cd ${PKI_DIR}

for NODE in "${!NodeArray[@]}"; do

echo "--- $NODE ${NodeArray[$NODE]} ---"

ssh ${NodeArray[$NODE]} "mkdir -p ${PKI_DIR} ${ETCD_SSL}"

# Etcd

for FILE in etcd-ca.pem etcd.pem etcd-key.pem; do

scp ${ETCD_SSL}/${FILE} ${NodeArray[$NODE]}:${ETCD_SSL}/${FILE}

done

# Kubernetes

for FILE in pki/ca.pem pki/ca-key.pem pki/front-proxy-ca.pem bootstrap-kubelet.kubeconfig ; do

scp ${K8S_DIR}/${FILE} ${NodeArray[$NODE]}:${K8S_DIR}/${FILE}

done

done

Deployment and configuration

From k8s-master1, distribute kubelet.service and the related files to every node to manage the kubelet:

cd ~/k8s-manual-files/

for NODE in "${!NodeArray[@]}"; do

echo "--- $NODE ${NodeArray[$NODE]} ---"

ssh ${NodeArray[$NODE]} "mkdir -p /var/lib/kubelet /var/log/kubernetes /etc/systemd/system/kubelet.service.d $MANIFESTS_DIR"

scp node/systemd/kubelet.service ${NodeArray[$NODE]}:/lib/systemd/system/kubelet.service

scp node/systemd/10-kubelet.conf ${NodeArray[$NODE]}:/etc/systemd/system/kubelet.service.d/10-kubelet.conf

scp node/etc/kubelet/kubelet-conf.yml ${NodeArray[$NODE]}:/etc/kubernetes/kubelet-conf.yml

done

Finally, from k8s-master1, start the kubelet service on every node:

for NODE in "${!NodeArray[@]}"; do

echo "--- $NODE ${NodeArray[$NODE]} ---"

ssh ${NodeArray[$NODE]} 'systemctl enable --now kubelet.service'

done

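Verify the cluster

When done, verify with a few simple commands on any master node (at first the CSRs on the masters are pending; just wait):

The first three are the kubelets on the masters; the last one belongs to node1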

kubectl get csr

NAME AGE REQUESTOR CONDITION

csr-bvz9l 11m system:node:k8s-m1 Approved,Issued

csr-jwr8k 11m system:node:k8s-m2 Approved,Issued

csr-q867w 11m system:node:k8s-m3 Approved,Issued

node-csr-Y-FGvxZWJqI-8RIK_IrpgdsvjGQVGW0E4UJOuaU8ogk 17s system:bootstrap:dca3e1 Approved,Issued
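If a CSR stays Pending instead of reaching Approved,Issued, it can be approved by hand. As a small sketch, the helper below (pending_csrs is a name made up for this example) filters the kubectl get csr listing down to the pending names. It assumes CONDITION is the last column, as in the output above.

```shell
# Print the names of CSRs whose CONDITION column is still Pending.
# Reads `kubectl get csr` output on stdin; the first line is the header.
pending_csrs() {
  awk 'NR > 1 && $NF == "Pending" { print $1 }'
}
```

One possible use: kubectl get csr | pending_csrs prints the names, and each can then be passed to kubectl certificate approve. Only approve requests that you recognize as coming from your own nodes.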

kubectl get nodes

k8s-master1 NotReady master 25d v1.12.3

k8s-master2 NotReady master 25d v1.12.3

k8s-master3 NotReady master 25d v1.12.3

k8s-node1 NotReady node 25d v1.12.3

k8s-node2 NotReady node 25d v1.12.3

k8s-node3 NotReady node 25d v1.12.3

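Deploying Kubernetes Core Addons

After completing all of the steps above, some addons still need to be deployed. Addons such as Kubernetes DNS and Kubernetes Proxy are essential.

Kubernetes Proxy (choose either the binary or the DaemonSet method)

kube-proxy is the key component that implements Services. It runs on every node, watches the API Server for changes to Service and Endpoint objects, and then applies iptables rules (or IPVS rules, the mode used in this setup) to forward traffic. Here we create a DaemonSet to run it, along with the certificates it needs.

Binary deployment (a DaemonSet scales more easily than binaries; the DaemonSet method is described further down)

Configure kube-proxy on k8s-master1:

Create a service account for kube-proxy: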

kubectl -n kube-system create serviceaccount kube-proxy

Bind the kube-proxy service account to the clusterrole system:node-proxier so that RBAC permits it:

kubectl create clusterrolebinding system:kube-proxy \

--clusterrole system:node-proxier \

--serviceaccount kube-system:kube-proxy

Create the kubeconfig for kube-proxy:

SECRET=$(kubectl -n kube-system get sa/kube-proxy \

--output=jsonpath='{.secrets[0].name}')

JWT_TOKEN=$(kubectl -n kube-system get secret/$SECRET \

--output=jsonpath='{.data.token}' | base64 -d)

# proxy set cluster

kubectl config set-cluster kubernetes \

--certificate-authority=${PKI_DIR}/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=${K8S_DIR}/kube-proxy.kubeconfig

# proxy set credentials

kubectl config set-credentials kubernetes \

--token=${JWT_TOKEN} \

--kubeconfig=${K8S_DIR}/kube-proxy.kubeconfig

# proxy set context

kubectl config set-context kubernetes \

--cluster=kubernetes \

--user=kubernetes \

--kubeconfig=${K8S_DIR}/kube-proxy.kubeconfig

# proxy set default context

kubectl config use-context kubernetes \

--kubeconfig=${K8S_DIR}/kube-proxy.kubeconfig

From k8s-master1, distribute the kube-proxy files to all nodes:

cd ~/k8s-manual-files/

for NODE in "${!MasterArray[@]}"; do

echo "--- $NODE ${MasterArray[$NODE]} ---"

scp ${K8S_DIR}/kube-proxy.kubeconfig ${MasterArray[$NODE]}:${K8S_DIR}/kube-proxy.kubeconfig

scp addons/kube-proxy/kube-proxy.conf ${MasterArray[$NODE]}:/etc/kubernetes/kube-proxy.conf

scp addons/kube-proxy/kube-proxy.service ${MasterArray[$NODE]}:/usr/lib/systemd/system/kube-proxy.service

done

for NODE in "${!NodeArray[@]}"; do

echo "--- $NODE ${NodeArray[$NODE]} ---"

scp ${K8S_DIR}/kube-proxy.kubeconfig ${NodeArray[$NODE]}:${K8S_DIR}/kube-proxy.kubeconfig

scp addons/kube-proxy/kube-proxy.conf ${NodeArray[$NODE]}:/etc/kubernetes/kube-proxy.conf

scp addons/kube-proxy/kube-proxy.service ${NodeArray[$NODE]}:/usr/lib/systemd/system/kube-proxy.service

done

Then, from k8s-master1, start the kube-proxy service on the master nodes:

for NODE in "${!MasterArray[@]}"; do

echo "--- $NODE ${MasterArray[$NODE]} ---"

ssh ${MasterArray[$NODE]} 'systemctl enable --now kube-proxy'

done

Then, from k8s-master1, start the kube-proxy service on the worker nodes:

for NODE in "${!NodeArray[@]}"; do

echo "--- $NODE ${NodeArray[$NODE]} ---"

ssh ${NodeArray[$NODE]} 'systemctl enable --now kube-proxy'

done

DaemonSet deployment

cd ~/k8s-manual-files

# Inject variables

sed -ri "/server:/s#(: ).+#\1${KUBE_APISERVER}#" addons/kube-proxy/kube-proxy.yml

sed -ri "/image:.+kube-proxy/s#:[^:]+\$#:$KUBE_VERSION#" addons/kube-proxy/kube-proxy.yml

kubectl apply -f addons/kube-proxy/kube-proxy.yml

# Output:

serviceaccount "kube-proxy" created

clusterrolebinding.rbac.authorization.k8s.io "system:kube-proxy" created

configmap "kube-proxy" created

daemonset.apps "kube-proxy" created
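The two sed commands above are dense, so here is a small sketch that runs them against a throwaway file to show what each one changes. The values of KUBE_APISERVER and KUBE_VERSION and the sample image name are assumptions made for this example; in the article the variables are set earlier in the series.

```shell
# Assumed values for the demo only.
KUBE_APISERVER="https://192.168.2.100:6443"   # hypothetical VIP
KUBE_VERSION="v1.12.3"

# A throwaway file standing in for addons/kube-proxy/kube-proxy.yml.
demo=$(mktemp)
cat > "$demo" <<'EOF'
    server: https://placeholder:6443
        image: k8s.gcr.io/kube-proxy-amd64:v1.11.0
EOF

# On lines containing "server:", replace everything after the first ": ".
sed -ri "/server:/s#(: ).+#\1${KUBE_APISERVER}#" "$demo"
# On the kube-proxy image line, replace the tag after the last colon.
sed -ri "/image:.+kube-proxy/s#:[^:]+\$#:${KUBE_VERSION}#" "$demo"

cat "$demo"
```

Using # as the sed delimiter avoids having to escape the slashes in the URL. Both commands rely on GNU sed (-r and -i without an argument), which is what CentOS ships.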

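From version 1.12 on, nodes carry one more taint than before: node.kubernetes.io/not-ready:NoSchedule

Pods must tolerate this taint (the YAML in my GitHub repository already includes the toleration). If you use kubeadm, or the manual staticPod approach from my earlier blog posts, add the following to the kube-proxy and flannel or calico YAML files under /etc/kubernetes/manifests/: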

- key: node.kubernetes.io/not-ready

operator: Exists

effect: NoSchedule

Normally the pods are in the state below. If something is wrong, check whether docker managed to pull the image and look at the kubelet logs:

kubectl -n kube-system get po -l k8s-app=kube-proxy

NAME READY STATUS RESTARTS AGE

kube-proxy-6dwvj 1/1 Running 13 25d

kube-proxy-85grm 1/1 Running 14 25d

kube-proxy-gmbcv 1/1 Running 14 25d

kube-proxy-m29vs 1/1 Running 15 25d

kube-proxy-nv7ct 1/1 Running 14 25d

kube-proxy-vqbvj 1/1 Running 20 25d

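Usually everything above works. If the kube-proxy pods still cannot be created, try my own unorthodox workaround below.

Create a CNI configuration file that tricks the kubelet into reporting Ready so you can get past this step, then delete the file afterwards: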

mkdir -p /etc/cni/net.d/

grep -Poz 'cni-conf.json: \|\s*\n\K[\s\S]+?}(?=\s*\n\s+net-conf.json)' \

addons/flannel/kube-flannel.yml > /etc/cni/net.d/10-flannel.conflist

# Distribute to the masters

for NODE in "${!MasterArray[@]}"; do

echo "--- $NODE ${MasterArray[$NODE]} ---"

ssh ${MasterArray[$NODE]} 'mkdir -p /etc/cni/net.d/'

scp /etc/cni/net.d/10-flannel.conflist ${MasterArray[$NODE]}:/etc/cni/net.d/

done

# Distribute to the nodes

for NODE in "${!NodeArray[@]}"; do

echo "--- $NODE ${NodeArray[$NODE]} ---"

ssh ${NodeArray[$NODE]} 'mkdir -p /etc/cni/net.d/'

scp /etc/cni/net.d/10-flannel.conflist ${NodeArray[$NODE]}:/etc/cni/net.d/

done
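The grep -Poz command above is the cryptic part of this workaround. The sketch below runs the same pattern against a trimmed sample so its effect is visible. The sample content is illustrative only and is not the real kube-flannel.yml, and extract_cni_conf is a name made up for this example. It needs GNU grep built with PCRE support (-P).

```shell
# A trimmed stand-in for the ConfigMap part of kube-flannel.yml.
sample=$(mktemp)
cat > "$sample" <<'EOF'
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "plugins": [
        { "type": "flannel" }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16"
    }
EOF

# -z reads the whole file as one record so the pattern can span lines,
# \K drops the "cni-conf.json: |" header from the match, and the lookahead
# stops at the closing brace that precedes net-conf.json.
# With -z grep ends its output with a NUL byte, which tr removes here.
extract_cni_conf() {
  grep -Poz 'cni-conf.json: \|\s*\n\K[\s\S]+?}(?=\s*\n\s+net-conf.json)' "$1" | tr -d '\0'
}

extract_cni_conf "$sample"
```

The result is just the JSON object under cni-conf.json, still carrying its YAML indentation, which is harmless for a JSON file.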

Check the proxy rules with ipvsadm (if everything is fine, skip ahead to the cluster networking part below):

ipvsadm -ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 10.96.0.1:443 rr

-> 192.168.88.110:5443 Masq 1 0 0

Confirm that ipvs mode is in use:

curl localhost:10249/proxyMode

ipvs

Delete the CNI file used to fool the kubelet from the masters:

for NODE in "${!MasterArray[@]}"; do

echo "--- $NODE ${MasterArray[$NODE]} ---"

ssh ${MasterArray[$NODE]} 'rm -f /etc/cni/net.d/10-flannel.conflist'

done

Delete the CNI file used to fool the kubelet from the nodes:

for NODE in "${!NodeArray[@]}"; do

echo "--- $NODE ${NodeArray[$NODE]} ---"

ssh ${NodeArray[$NODE]} 'rm -f /etc/cni/net.d/10-flannel.conflist'

done

Deploying flanneld

flannel uses vxlan to build a Pod network over which all nodes can reach each other. It uses UDP port 8472, which must be open.

#grep -Pom1 'image:\s+\K\S+' addons/flannel/kube-flannel.yml

quay.io/coreos/flannel:v0.10.0-amd64

#curl -s https://zhangguanzhang.github.io/bash/pull.sh | bash -s -- quay.io/coreos/flannel:v0.10.0-amd64

Create flannel; a DaemonSet is used here:

#sed -ri "s#\{\{ interface \}\}#${interface}#" addons/flannel/kube-flannel.yml

#kubectl apply -f addons/flannel/kube-fla

Check that it has started:

# kubectl -n kube-system get po -l k8s-app=flannel

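Deploying Calico

Calico provides Kubernetes resource YAML files for quickly deploying the network plugin as containers on every node, so you only need to run the commands below with kubectl on k8s-master1:

The images are hosted on quay.io, which can be very slow to pull from, so you may want to pre-pull them on all nodes; otherwise just wait. Use the image names printed by the command below, since the versions used in my blog may have been updated.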

#grep -Po 'image:\s+\K\S+' addons/calico/v3.1/calico.yml

quay.io/calico/typha:v0.7.4

quay.io/calico/node:v3.1.3

quay.io/calico/cni:v3.1.3

The list contains the three images above; pulling two of them is enough:

# curl -s https://zhangguanzhang.github.io/bash/pull.sh | bash -s -- quay.io/calico/node:v3.1.3

#curl -s https://zhangguanzhang.github.io/bash/pull.sh | bash -s -- quay.io/calico/cni:v3.1.3

#sed -ri "s#\{\{ interface \}\}#${interface}#" addons/calico/v3.1/calico.yml

#kubectl apply -f addons/calico/v3.1

kubectl get pod --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

default jenkins-demo-5789d6b9ff-8njk4 0/1 CrashLoopBackOff 811 2d20h

default nginx-c5b5c6f7c-pb7tk 1/1 Running 0 14d

default test1-ingress-6sdm8 1/1 Running 0 14d

ehaofang-jenkins jenkins2-d7fd6b749-jtrkn 1/1 Running 1 4d3h

ingress-nginx default-http-backend-86569b9d95-nrnt6 1/1 Running 2 17d

ingress-nginx nginx-ingress-controller-57fcbb657d-4j658 1/1 Running 4 17d

kube-system calico-node-2f7pb 2/2 Running 13 18d

kube-system calico-node-98x4m 2/2 Running 10 18d

kube-system calico-node-9tnhq 2/2 Running 14 18d

kube-system calico-node-g8rrt 2/2 Running 12 18d

kube-system calico-node-tfbrl 2/2 Running 12 18d

kube-system calico-node-v4wz4 2/2 Running 12 18d

kube-system calicoctl-66d787bc49-xpfv9 1/1 Running 6 18d

kube-system kube-dns-59c677cb95-5zf26 3/3 Running 6 17d

kube-system kube-dns-59c677cb95-gf9wc 3/3 Running 18 18d

kube-system kube-proxy-6dwvj 1/1 Running 13 25d

kube-system kube-proxy-85grm 1/1 Running 14 25d

kube-system kube-proxy-gmbcv 1/1 Running 14 25d

kube-system kube-proxy-m29vs 1/1 Running 15 25d

kube-system kube-proxy-nv7ct 1/1 Running 14 25d

kube-system kube-proxy-vqbvj 1/1 Running 20 25d

kube-system kubernetes-dashboard-6c4fb64bb7-44zm8 1/1 Running 10 18d

kube-system metrics-server-655c8b6669-zt526 1/1 Running 3 17d

kube-system tiller-deploy-74b78b6d55-r5lbt 1/1 Running 2 17d
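In a long listing like the one above it is easy to miss a pod that is not healthy (jenkins-demo is in CrashLoopBackOff here). As a sketch, the helper below filters an all-namespaces listing down to the pods that need attention. unhealthy_pods is a name made up for this example, and it assumes the default column order NAMESPACE NAME READY STATUS RESTARTS AGE.

```shell
# Reads `kubectl get pod --all-namespaces` output on stdin and prints
# "namespace/name status" for pods that are not Running or not fully ready.
unhealthy_pods() {
  awk 'NR > 1 {
    split($3, ready, "/")            # READY looks like "1/1"
    if ($4 != "Running" || ready[1] != ready[2])
      print $1 "/" $2, $4
  }'
}
```

It can be fed with kubectl get pod --all-namespaces | unhealthy_pods. Pods of finished Jobs (status Completed) would also be listed, so treat the output as a prompt to look closer rather than as a list of failures.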

When Calico is healthy, the calico-node pods look like this after deployment:

kube-system calico-node-2f7pb 2/2 Running 13 18d

kube-system calico-node-98x4m 2/2 Running 10 18d

kube-system calico-node-9tnhq 2/2 Running 14 18d

kube-system calico-node-g8rrt 2/2 Running 12 18d

kube-system calico-node-tfbrl 2/2 Running 12 18d

kube-system calico-node-v4wz4 2/2 Running 12 18d

Find the name of the calicoctl pod:

kubectl -n kube-system get po -l k8s-app=calicoctl

NAME READY STATUS RESTARTS AGE

calicoctl-66d787bc49-xpfv9 1/1 Running 6 18d

Run a command in the calicoctl pod with kubectl exec to check that it works:

kubectl -n kube-system exec calicoctl-66d787bc49-xpfv9 -- calicoctl get profiles -o wide

NAME LABELS

kns.default map[]

kns.ehaofang-jenkins map[]

kns.ingress-nginx map[]

kns.kube-jenkins map[]

kns.kube-public map[]

kns.kube-system map[]

kns.monitoring map[]

kns.weave map[]

CoreDNS

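Since 1.11, CoreDNS has replaced Kube DNS as the cluster's service-discovery component. Kubernetes needs Pods to be able to talk to each other, and to do that they must know each other's IPs. These are usually obtained through the Kubernetes API, but Pod IPs change over the Pod lifecycle, so that approach is inflexible and also adds load on the API Server. To address this, Kubernetes provides a DNS service for lookups, which lets Pods use Service names as domain names to resolve IP addresses. Users no longer need to care about actual Pod IPs, and the DNS updates its resource records as Pods change.

CoreDNS is an open-source DNS solution maintained under the CNCF and the successor to SkyDNS. It builds its server framework on part of Caddy, which makes for a fast and flexible DNS. Every CoreDNS feature is implemented as plugin-style middleware, such as Log, Cache, and Kubernetes, and it can even store resource records in Redis or Etcd.

Nodes here are addressed by hostname, so it is advisable to add the hosts mappings to CoreDNS resolution.

Write them in the format below, which uses the CoreDNS hosts plugin: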

data:

Corefile: |

.:53 {

errors

health

kubernetes cluster.local in-addr.arpa ip6.arpa {

pods insecure

upstream

fallthrough in-addr.arpa ip6.arpa

}

hosts {

192.168.2.111 k8s-master1

192.168.2.112 k8s-master2

192.168.2.113 k8s-master3

192.168.2.114 k8s-node1

192.168.2.115 k8s-node2

192.168.2.116 k8s-node

}

prometheus :9153

proxy . /etc/resolv.conf

cache 30

reload

loadbalance

}

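If you are feeling lazy, you can inject them into the file with my command below (the order may come out scrambled, which is harmless; tidy it by hand if that bothers you):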

sed -i '57r '<(echo ' hosts {';for NODE in "${!MasterArray[@]}";do echo " ${MasterArray[$NODE]} $NODE"; done;for NODE in "${!NodeArray[@]}";do echo " ${NodeArray[$NODE]} $NODE";done;echo ' }';) addons/coredns/coredns.yml
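The one-liner above packs two things together: sed's "57r FILE" command, which appends the contents of FILE after line 57, and a bash process substitution <(...) that supplies the generated text as that file. Below is a more readable sketch of the generator half. The array contents, the render_hosts_block name and the indentation widths are assumptions made for this example.

```shell
#!/usr/bin/env bash
# Assumed definitions (hostname -> IP); the article sets these arrays earlier in the series.
declare -A MasterArray=([k8s-master1]=192.168.2.101 [k8s-master2]=192.168.2.102 [k8s-master3]=192.168.2.103)
declare -A NodeArray=([k8s-node1]=192.168.2.104 [k8s-node2]=192.168.2.105 [k8s-node3]=192.168.2.106)

# Print a CoreDNS hosts block with one "IP hostname" line per machine.
# The 8 and 12 space indents are a guess at where the block sits in the Corefile.
render_hosts_block() {
  local name
  echo '        hosts {'
  for name in "${!MasterArray[@]}"; do
    echo "            ${MasterArray[$name]} ${name}"
  done
  for name in "${!NodeArray[@]}"; do
    echo "            ${NodeArray[$name]} ${name}"
  done
  echo '        }'
}

render_hosts_block
```

Inspecting the generated block before injecting it makes it easier to spot a wrong IP. One thing worth checking against the CoreDNS hosts plugin documentation: it describes a fallthrough option that passes names not found in the block on to the following plugins. The block in this article does not set it, and whether that matters for your Corefile is something to verify rather than assume.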

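To add similar records later, edit the ConfigMap (it is written in YAML, so use spaces, never tabs) and then send CoreDNS a signal to make it reload. The main process runs in the foreground as PID 1, so just run the command in the corresponding pod. Alternatively, use the Deployment's update mechanism to trigger a fake update that restarts it.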

kubectl -n kube-system exec coredns-xxxxxx -- kill -SIGUSR1 1
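The "fake update" route can be sketched as a patch that changes nothing but an annotation on the pod template, which is enough for the Deployment to roll its pods. The helper name restart_patch and the annotation key restarted-at are made up for this example.

```shell
# Build a patch that only changes a pod-template annotation.
# "restarted-at" is a hypothetical annotation key chosen for this sketch.
restart_patch() {
  printf '{"spec":{"template":{"metadata":{"annotations":{"restarted-at":"%s"}}}}}\n' "$1"
}

# Print an example patch stamped with the current Unix time.
restart_patch "$(date +%s)"
```

The JSON it prints can be passed to kubectl -n kube-system patch deployment coredns with the -p flag. Each run needs a new value (the timestamp here), otherwise the template does not change and nothing is restarted.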

On k8s-master1, create it with kubectl using the command below and check that the deployment succeeded:

kubectl apply -f addons/coredns/coredns.yml

# Output:

serviceaccount/coredns created

clusterrole.rbac.authorization.k8s.io/system:coredns created

clusterrolebinding.rbac.authorization.k8s.io/system:coredns created

configmap/coredns created

deployment.extensions/coredns created

service/kube-dns created

$ kubectl -n kube-system get po -l k8s-app=kube-dns

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-6975654877-jjqkg 1/1 Running 0 1m

kube-system coredns-6975654877-ztqjh 1/1 Running 0 1m

Once done, check that the nodes are no longer NotReady and the Pods are no longer Pending:

kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master1 Ready master 25d v1.12.3

k8s-master2 Ready master 25d v1.12.3

k8s-master3 Ready master 25d v1.12.3

k8s-node1 Ready node 25d v1.12.3

k8s-node2 Ready node 25d v1.12.3

k8s-node3 Ready node 25d v1.12.3
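Nodes usually take a little while to flip from NotReady to Ready after the network plugin starts. As a sketch, the check below turns the listing into an exit status that a script can wait on. all_nodes_ready is a name made up for this example, and it assumes STATUS is the second column as shown above.

```shell
# Succeeds only if every row of `kubectl get nodes` (read on stdin)
# has a STATUS of exactly "Ready".
all_nodes_ready() {
  awk 'NR > 1 && $2 != "Ready" { bad = 1 } END { exit bad }'
}
```

For example: until kubectl get nodes | all_nodes_ready; do sleep 10; done. A cordoned node shows Ready,SchedulingDisabled and would count as not ready under this strict comparison.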

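There appears to be an upstream bug here: https://github.com/coredns/coredns/issues/2289

Whether CoreDNS works is hit or miss; you can create a pod as shown below to test it.

First create a dnstool pod:

cat<

Then run nslookup to see whether it returns an address: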

kubectl exec -ti busybox -- nslookup kubernetes

Server: 10.96.0.10

Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local

Name: kubernetes

Address 1: 10.96.0.1 kubernetes.default.svc.cluster.local

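The output below is what you see when you hit the problem. It is an upstream bug; to see logs, add a line containing log to the Corefile to enable log output. The issue linked above has no fix from upstream so far.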

Server: 10.96.0.10

Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local

nslookup: can't resolve 'kubernetes'

command terminated with exit code 1
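The nslookup checks above run inside a pod named busybox, whose manifest is not shown in full. Here is a minimal sketch of one. Only the pod name is taken from the commands above; the image tag, the namespace and the sleep command are assumptions. The 1.28 tag is chosen because nslookup in newer busybox images is known to misbehave with cluster DNS.

```shell
# Write a minimal Pod manifest to a temporary file.
# Only the pod name "busybox" comes from the article; the rest is assumed.
manifest=$(mktemp)
cat > "$manifest" <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: busybox
  namespace: default
spec:
  containers:
  - name: busybox
    image: busybox:1.28
    command: ["sleep", "3600"]
  restartPolicy: Always
EOF

echo "manifest written to $manifest"
```

Applying it with kubectl apply -f "$manifest" and waiting for the pod to be Running gives the nslookup commands something to exec into.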

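KubeDNS (use it if you hit the CoreDNS bug above)

Kube DNS is an important addon that lets Pods inside a Kubernetes cluster communicate with each other by allowing them to reach Services through domain names. It consists mainly of Kube DNS and Sky DNS: Kube DNS watches Service and Endpoint changes and feeds that information to Sky DNS so that the resolved addresses stay up to date.

If CoreDNS is not working properly, delete it first, then make sure the coredns pods and svc no longer exist: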

kubectl delete -f addons/coredns/coredns.yml

kubectl -n kube-system get pod,svc -l k8s-app=kube-dns

No resources found.

Create KubeDNS:

kubectl apply -f addons/Kubedns/kubedns.yml

serviceaccount/kube-dns created

service/kube-dns created

deployment.extensions/kube-dns created

Check the pod status:

kubectl -n kube-system get pod,svc -l k8s-app=kube-dns

NAME READY STATUS RESTARTS AGE

pod/kube-dns-59c677cb95-5zf26 3/3 Running 6 17d

pod/kube-dns-59c677cb95-gf9wc 3/3 Running 18 18d

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kube-dns ClusterIP 10.96.0.10 53/UDP,53/TCP 25d

Check that cluster DNS works:

kubectl exec -ti busybox -- nslookup kubernetes

Server: 10.96.0.10

Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local

Name: kubernetes

Address 1: 10.96.0.1 kubernetes.default.svc.cluster.local

Deploying Metrics Server

kubectl create -f addons/metric-server/metrics-server-1.12+.yml

Check the pod status:

kubectl -n kube-system get po -l k8s-app=metrics-server

NAME READY STATUS RESTARTS AGE

metrics-server-655c8b6669-zt526 1/1 Running 3 17d

Once done, wait a while (about 30s to 1m) for metrics to be collected, then run kubectl top to view them:

kubectl get --raw /apis/metrics.k8s.io/v1beta1

kubectl get apiservice|grep metrics

kubectl top node

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

k8s-master1 346m 8% 1763Mi 22%

k8s-master2 579m 14% 1862Mi 23%

k8s-master3 382m 9% 1669Mi 21%

k8s-node1 661m 5% 4362Mi 18%

k8s-node2 440m 3% 3929Mi 16%

k8s-node3 425m 3% 1688Mi 7%

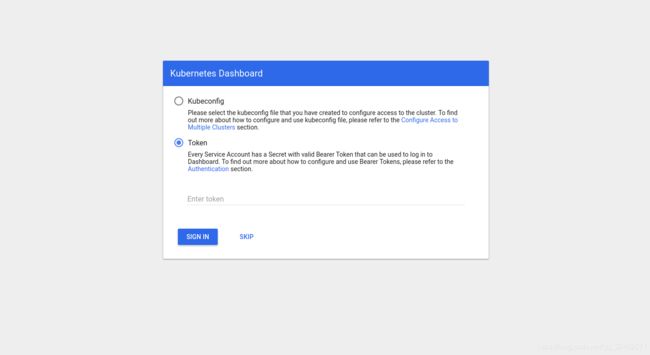

Deploying the Dashboard

kubectl apply -f ExtraAddons/dashboard

kubectl -n kube-system get po,svc -l k8s-app=kubernetes-dashboard

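This additionally creates a Cluster Role (and Binding) named anonymous-dashboard-proxy that lets the anonymous user system:anonymous proxy to the Kubernetes Dashboard through the API Server. This RBAC rule only grants access to the services/proxy resource, restricted to the resource name https:kubernetes-dashboard:. In addition, Dashboard versions after 1.7 no longer grant full permissions by default, so a service account bound to the cluster-admin role has to be created (all of this is already written in dashboard/anonymous-proxy-rbac.yml).

Once done, the Dashboard can be reached in a browser at https://{YOUR_VIP}:6443/api/v1/namespaces/kube-system/services/https:kubernetes-dashboard:/proxy/.

Get the token: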

#kubectl -n kube-system describe secrets | sed -rn '/\sdashboard-token-/,/^token/{/^token/s#\S+\s+##p}'

eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtdG9rZW4teHJ6bGciLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiZGFzaGJvYXJkIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiY2M4NDYwZjEtZmEzYi0xMWU4LWIxYTYtMDAwYzI5YzJmNTA5Iiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmUtc3lzdGVtOmRhc2hib2FyZCJ9.GQfWRhu_HCHct7xQitSAV6SM1wdGxei4Wjxf4gH5DthP5x6-GRSGapJ4fxNbJrVgtxH8GeZQcTqToGGP-pOeyoDsWauOI977E5sevHUUIh3hnDkqw1BHnk6v_i9vSJHRX1c0CseJHlEzB1CgWZiMw99vfxEEeTqM7FRq9FoNcQ2852pukJ_KMlqKEJ0kf-zyLgzuEEPAQMpVFRh7n9qbgs2sBn6-yHUx8I71iD2IYYDvdzQke4iNJofn7gmQT0sQr9045GOmCWV9hfz42W0ahTqvNMZJAL_75IlxIVH1PDJIAvveUpllR5q8RO8VnHbuM_Sl1YWdib2hSZMw-QVB2Q

Copy the token and paste it into the Kubernetes dashboard. Note that in general you should grant specific access permissions per user.
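The token is a JWT, so its middle segment can be decoded locally to see which service account it belongs to before pasting it anywhere. A minimal sketch follows; jwt_payload is a name made up for this example, and it relies on base64 -d as used earlier in this article.

```shell
# Decode the payload (second dot-separated segment) of a JWT passed as $1.
# JWT segments are base64url without padding, so map the alphabet back
# to standard base64 and re-pad before decoding.
jwt_payload() {
  jwt_seg=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # Pad to a multiple of 4 characters.
  while [ $(( ${#jwt_seg} % 4 )) -ne 0 ]; do jwt_seg="${jwt_seg}="; done
  printf '%s' "$jwt_seg" | base64 -d
}
```

Calling jwt_payload with the token as its argument prints the JSON claims, which for a service-account token include the namespace and the service-account name. This only decodes the payload and does not verify the signature.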

At this point the cluster is basically set up!

To be continued...