Background

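We recently started collecting customers' browsing records to lay the groundwork for user behavior analysis and user profiling. The data flow is shown in the diagram below:

This post covers the path from nginx to S3, which is only a small part of that diagram. It uses Logstash, version 5.4.3.

Note: when I first asked ops to install Logstash, the default install was version 1.4.5, and writing to S3 failed with all sorts of errors. It only worked after upgrading.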

Logstash

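A log collection tool. Installed on a server, it can parse logs and supports many kinds of pattern matching, so you can easily pull exactly the content you want out of complex log files. For installation and usage, see the official tutorial.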

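Nginx logs

The log format can be adjusted with help from ops and the front-end team: if you need cookies, ops can configure nginx to log them; if you need other parameters, the front end can append them to the URL. Here is a raw log line recorded by nginx: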

192.168.199.63 - - [07/Jul/2017:20:55:38 +0800] "GET /c.gif?ezspm=4.0.2.0.0&keyword=humidifiers&catalog=SG HTTP/1.1" 304 0 "http://m2.sg.65emall.net/search?keyword=humidifiers&ezspm=4.0.2.0.0&listing_origin=20000002" "Mozilla/5.0 (Linux; Android 5.0; SM-G900P Build/LRX21T) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/59.0.3071.115 Mobile Safari/537.36" "140.206.112.194" 0.001 _ga=GA1.3.1284908540.1465133186; tma=228981083.10548698.1465133185510.1465133185510.1465133185510.1; bfd_g=bffd842b2b4843320000156c000106ca575405eb; G_ENABLED_IDPS=google; __zlcmid=azfbRwlP4TTFyi; ez_cookie_id=dce5aaf7-6eef-4193-b9d2-dd65dd0a2de5; 65_customer=9C7E020D4493C5A9,DPS:1dSKhm:7XnxmT6xJXDmu5h3mYdecAMwgmg; _ga=GA1.2.1284908540.1465133186; _gid=GA1.2.1865898003.1499149209

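Split on spaces, the log line reads as follows:

The first field is the client IP. The second and third are "-"; these should hold user information and are replaced by "-" when none is available. The fourth is the content inside "[]", the server time at which the log entry was written. The long double-quoted string after that is the HTTP request line, in the fixed format "method URL HTTP-version". Next come the HTTP response code (a number) and the size of the response body, followed in order by the URL of the current page (the referer), the browser information (user agent), the server IP address, and the request duration. Anything after that is cookie information.

The cookie information at the end also follows a regular pattern. Taking my log as an example: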

_ga=GA1.3.1284908540.1465133186; tma=228981083.10548698.1465133185510.1465133185510.1465133185510.1; bfd_g=bffd842b2b4843320000156c000106ca575405eb; G_ENABLED_IDPS=google; __zlcmid=azfbRwlP4TTFyi; ez_cookie_id=dce5aaf7-6eef-4193-b9d2-dd65dd0a2de5; 65_customer=9C7E020D4493C5A9,DPS:1dSKhm:7XnxmT6xJXDmu5h3mYdecAMwgmg; _ga=GA1.2.1284908540.1465133186; _gid=GA1.2.1865898003.1499149209

I need two values from it: ez_cookie_id and 65_customer.

Logstash configuration

filter

Let's create a Logstash grok pattern:

grok{match => { "message" => "%{IP:client_ip} %{USER:ident} %{USER:auth} \[%{HTTPDATE:timestamp}\] \"%{WORD:method} /%{NOTSPACE:request_page} HTTP/%{NUMBER:http_version}\" %{NUMBER:server_response}" }}

This pattern only parses as far as the server's response code. If you need the later fields, extend it in the same way.
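To sanity-check which part of a line the pattern captures, it can help to try a rough equivalent outside Logstash. The following is a minimal Python sketch, assuming a hand-written regex that only approximates the grok pattern (it is not the exact expansion Logstash uses for IP, USER, or HTTPDATE). The names `LOG_RE` and `parse_line` are made up for this illustration.

```python
import re

# Rough regex equivalent of the grok pattern above (an approximation, not
# the exact expansion Logstash uses for IP, USER, HTTPDATE, and so on).
LOG_RE = re.compile(
    r'(?P<client_ip>\d{1,3}(?:\.\d{1,3}){3}) '
    r'(?P<ident>\S+) (?P<auth>\S+) '
    r'\[(?P<timestamp>[^\]]+)\] '
    r'"(?P<method>\w+) /(?P<request_page>\S+) HTTP/(?P<http_version>[\d.]+)" '
    r'(?P<server_response>\d+)'
)


def parse_line(line):
    """Return the captured fields as a dict, or None if the line does not match."""
    m = LOG_RE.match(line)
    return m.groupdict() if m else None


# Shortened version of the sample log line from this post.
sample = (
    '192.168.199.63 - - [07/Jul/2017:20:55:38 +0800] '
    '"GET /c.gif?ezspm=4.0.2.0.0&keyword=humidifiers&catalog=SG HTTP/1.1" 304 0'
)
fields = parse_line(sample)
print(fields["request_page"])
```

Note that request_page holds everything after the leading "/" of the URL, including the query string, which is why it still has to be split into individual parameters afterwards.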

Next, the URL parameters inside request_page need to be parsed out as well. The kv plugin does this:

kv {
    source => "request_page"
    field_split => "&?"
    value_split => "="
    trim_value => ";"
    include_keys => ["ezspm","keyword","info","catalog","referrer","categoryid","productid"]
}

A URL may carry many parameters; use include_keys to pick out only the ones you want.

In the same way, the kv plugin can extract the cookies from the log line:

kv {
    source => "message"
    field_split => " "
    value_split => "="
    trim_value => ";"
    include_keys => ["ez_cookie_id","65_customer"]
}
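The two kv filters above differ only in their source field and split characters. Here is a minimal Python sketch of the same idea, assuming a simplified reading of the options: field_split is a set of single characters, value_split is one separator, and a repeated key simply overwrites the earlier value. The function name `kv_extract` is hypothetical, and the real plugin handles more cases than this.

```python
import re


def kv_extract(source, field_split, include_keys, value_split="=", trim_value=";"):
    """Split `source` on any character in `field_split`, split each piece on
    the first `value_split`, strip `trim_value` characters from both ends of
    the value, and keep only the keys listed in `include_keys`."""
    result = {}
    pieces = re.split("[" + re.escape(field_split) + "]", source)
    for piece in pieces:
        key, sep, value = piece.partition(value_split)
        if sep and key in include_keys:
            result[key] = value.strip(trim_value)
    return result


# URL parameters, as in the first kv block.
url = "c.gif?ezspm=4.0.2.0.0&keyword=humidifiers&catalog=SG"
params = kv_extract(url, "&?", ["ezspm", "keyword", "catalog"])

# Cookies, as in the second kv block (shortened from the sample log line).
cookie_text = (
    "G_ENABLED_IDPS=google; ez_cookie_id=dce5aaf7-6eef-4193-b9d2-dd65dd0a2de5; "
    "65_customer=9C7E020D4493C5A9,DPS:1dSKhm:7XnxmT6xJXDmu5h3mYdecAMwgmg; "
    "_gid=GA1.2.1865898003.1499149209"
)
cookies = kv_extract(cookie_text, " ", ["ez_cookie_id", "65_customer"])

print(params)
print(cookies)
```

Splitting the cookies on spaces leaves a trailing ";" on each value, which is what the trim_value setting removes.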

output

s3 {
    access_key_id => "access-id"
    secret_access_key => "access-key"
    region => "ap-southeast-1"
    prefix => "nginxlog/%{+YYYY-MM-dd}"
    bucket => "bi-spm-test"
    time_file => 60
    codec => "json_lines"
}

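Note that bucket does not take the "s3://" prefix; give only the top-level bucket name. The prefix setting lets you split the logs into per-date folders.

With the configuration above, my output path ends up as: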

s3://bi-spm-test/nginxlog/2017-07-07/ls.s3.....txt

Folders are split by day, and each day's logs go into that day's folder.

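date configuration

Logstash works in UTC by default, which is 8 hours behind our local time (UTC+8). For example, a log entry produced at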

2017-07-07 06:00:00

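is stored by Logstash as 2017-07-06T22:00:00Z. If the date is left as it is, the entry is written to the 2017-07-06 folder, even though the log was produced on 7 July.

Likewise, when the data is later loaded from S3 with COPY, the time 2017-07-06T22:00:00Z is taken directly as:

2017-07-06 22:00:00, which makes the data inaccurate.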

Someone in the Logstash community raised the same question: the date timezone issue

Here we use the date filter to set the date:

date {
    locale => "en"
    match => ["timestamp", "dd/MMM/yyyy:HH:mm:ss +0800"]
    target => "@timestamp"
    timezone => "UTC"
}

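The timezone is set to UTC, and the "+0800" in the match pattern is literal text rather than a parsed offset. Logstash therefore treats the local wall-clock time as if it were UTC, which in effect adds 8 hours to the real instant.

The time 2017-07-07 06:00:00 is then stored as

2017-07-07T06:00:00Z, which is an ISO date format. In theory, anyone using this value would add another 8 hours to it. All I need, though, is for the log to land in the right folder and for the date to be correct when the data is copied, so this shortcut meets my needs.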
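The difference between parsing the offset properly and the workaround can be reproduced outside Logstash. This is a minimal Python sketch, assuming the default C/English locale so that "Jul" is recognized by `%b`. It only mirrors the behaviour described above and does not run any Logstash code.

```python
from datetime import datetime, timezone

raw = "07/Jul/2017:06:00:00 +0800"

# Correct parse: %z reads "+0800" as an offset, so the instant falls on
# 6 July when expressed in UTC.
true_instant = datetime.strptime(raw, "%d/%b/%Y:%H:%M:%S %z").astimezone(timezone.utc)

# Workaround: match "+0800" as literal text and label the wall-clock time as UTC.
shifted = datetime.strptime(raw, "%d/%b/%Y:%H:%M:%S +0800").replace(tzinfo=timezone.utc)

# The day used for the folder name follows from the UTC date of each value.
print(true_instant.strftime("%Y-%m-%d"))
print(shifted.strftime("%Y-%m-%d"))
print(shifted - true_instant)
```

The shifted value keeps the local calendar day, at the cost of no longer being the true UTC instant. Any consumer that treats the value as real UTC will be off by 8 hours.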

The complete Logstash configuration:

input {
    file {
        path => ["/etc/logstash/test.log"]
        type => "system"
        start_position => "beginning"
        # Do not persist the read position, so the file is re-read from the start on every run.
        sincedb_path => "/dev/null"
    }
}

filter {
    grok {
        match => { "message" => "%{IP:client_ip} %{USER:ident} %{USER:auth} \[%{HTTPDATE:timestamp}\] \"%{WORD:method} /%{NOTSPACE:request_page} HTTP/%{NUMBER:http_version}\" %{NUMBER:server_response}" }
    }

    # Extract the two cookies we need from the raw log line.
    kv {
        source => "message"
        field_split => " "
        value_split => "="
        trim_value => ";"
        include_keys => ["ez_cookie_id","65_customer"]
    }

    # Extract the URL parameters from the request path.
    kv {
        source => "request_page"
        field_split => "&?"
        value_split => "="
        trim_value => ";"
        include_keys => ["ezspm","keyword","info","catalog","referrer","categoryid","productid"]
    }

    urldecode {
        all_fields => true
    }

    # Remove fields that are not needed in the output.
    mutate {
        remove_field => ["message","request_page","host","path","method","type","server_response","ident","auth","@version"]
    }

    # Drop events that carry any tag (for example a grok parse failure).
    if [tags] { drop {} }

    # Drop events that have no ezspm parameter.
    if ![ezspm] { drop {} }

    # Fill in defaults so that every event has the same set of fields.
    if ![65_customer] { mutate { add_field => {"65_customer" => ""} } }
    if ![categoryid] { mutate { add_field => {"categoryid" => 0} } }
    if ![productid] { mutate { add_field => {"productid" => 0} } }
    if ![keyword] { mutate { add_field => {"keyword" => ""} } }
    if ![referrer] { mutate { add_field => {"referrer" => ""} } }
    if ![info] { mutate { add_field => {"info" => ""} } }

    # "+0800" is matched as literal text, so the local time is stored as if it were UTC.
    date {
        locale => "en"
        match => ["timestamp", "dd/MMM/yyyy:HH:mm:ss +0800"]
        target => "@timestamp"
        timezone => "UTC"
    }

    mutate { remove_field => ["timestamp"] }
}

output {
    s3 {
        access_key_id => "access_id"
        secret_access_key => "access_key"
        region => "ap-southeast-1"
        prefix => "nginxlog/%{+YYYY-MM-dd}"
        bucket => "bi-spm-test"
        # Rotate the output file every 60 minutes.
        time_file => 60
        codec => "json_lines"
    }
}

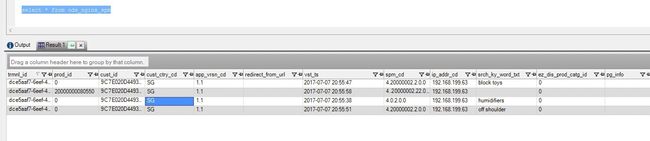

The log as finally collected:

{"ez_cookie_id":"dce5aaf7-6eef-4193-b9d2-dd65dd0a2de5","productid":"20000000080550","65_customer":"9C7E020D4493C5A9,DPS:1dSKhm:7XnxmT6xJXDmu5h3mYdecAMwgmg","catalog":"SG","http_version":"1.1","referrer":"","@timestamp":"2017-07-07T20:55:58.000Z","ezspm":"4.20000002.22.0.0","client_ip":"192.168.199.63","keyword":"","categoryid":"0","info":""}
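The empty strings and the "0" in the record above come from the conditional steps in the filter block. Here is a minimal Python sketch of that logic, assuming events are plain dicts and that "field is present" is a close enough stand-in for Logstash's `[field]` test. The names `DEFAULTS` and `normalize` are hypothetical.

```python
# Default values mirroring the add_field steps; they are strings here
# because that is how they appear in the collected record above.
DEFAULTS = {
    "65_customer": "",
    "categoryid": "0",
    "productid": "0",
    "keyword": "",
    "referrer": "",
    "info": "",
}


def normalize(event):
    """Return the event with defaults filled in, or None if it would be dropped."""
    # Tagged events (e.g. parse failures) and events without ezspm are dropped.
    if "tags" in event or "ezspm" not in event:
        return None
    # Values already present in the event take precedence over the defaults.
    return {**DEFAULTS, **event}


kept = normalize({"ezspm": "4.20000002.22.0.0", "productid": "20000000080550"})
dropped = normalize({"keyword": "humidifiers"})
print(kept)
print(dropped)
```

Filling in defaults gives every record the same set of keys, which tends to make a later load into a fixed-schema table simpler.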

COPY from S3

copy dw.ods_nginx_spm
from 's3://bi-spm-test/nginxlog/2017-07-07-20/ls.s3'
REGION 'ap-southeast-1'
access_key_id 'access-id'
secret_access_key 'access-key'
timeformat 'auto'
FORMAT AS JSON 's3://bi-spm-test/test1.txt';

Let's look at the result: