
Logical address: Included in the machine language instructions to specify the address of an operand or of an instruction. This type of address embodies the well-known Intel segmented architecture that forces MS-DOS and Windows programmers to divide their programs into segments. Each logical address consists of a segment and an offset (or displacement) that denotes the distance from the start of the segment to the actual address.

Segmentation has been included in Intel microprocessors to encourage programmers to split their applications into logically related entities, such as subroutines or global and local data areas. However, Linux uses segmentation in a very limited way. In fact, segmentation and paging are somewhat redundant, since both can be used to separate the physical address spaces of processes: segmentation can assign a different linear address space to each process, while paging can map the same linear address space into different physical address spaces.

Linear address: A single 32-bit unsigned integer that can be used to address up to 4 GB, that is, up to 4,294,967,296 memory cells. Linear addresses are usually represented in hexadecimal notation; their values range from 0x00000000 to 0xffffffff.

Physical address: Used to address memory cells included in memory chips. They correspond to the electrical signals sent along the address pins of the microprocessor to the memory bus. Physical addresses are represented as 32-bit unsigned integers.

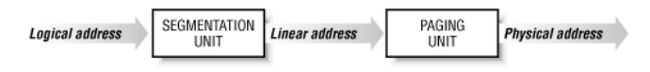

The CPU control unit transforms a logical address into a linear address by means of a hardware circuit called a segmentation unit; subsequently, a second hardware circuit called a paging unit transforms the linear address into a physical address (see below).
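The two-stage translation described above can be sketched as a pair of functions. This is only an illustration under simplifying assumptions: the segment table, the page table, their contents, and the 4 KB page size are hypothetical values chosen for the example, and real segment descriptors also carry limits and access rights that are omitted here.

```python
PAGE_SIZE = 4096  # assumed 4 KB pages

# Hypothetical segment table: segment selector -> base linear address.
SEGMENT_BASE = {0x10: 0x00000000, 0x18: 0x08000000}

# Hypothetical page table: linear page number -> physical frame number.
PAGE_TABLE = {0x08000: 0x00123, 0x08001: 0x00456}


def segmentation_unit(selector, offset):
    """Logical address (segment, offset) -> 32-bit linear address."""
    return (SEGMENT_BASE[selector] + offset) & 0xFFFFFFFF


def paging_unit(linear):
    """Linear address -> physical address via the page table."""
    page, offset = divmod(linear, PAGE_SIZE)
    frame = PAGE_TABLE[page]  # a missing key stands in for a page fault
    return frame * PAGE_SIZE + offset


def translate(selector, offset):
    """Run both units in sequence, as the CPU would."""
    return paging_unit(segmentation_unit(selector, offset))
```

With these made-up tables, `translate(0x18, 0x1234)` first yields the linear address 0x08001234 and then the physical address 0x00456234.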

Process Descriptor: In order to manage processes, the kernel must have a clear picture of what each process is doing. It must know, for instance, the process's priority, whether it is running on the CPU or blocked on some event, what address space has been assigned to it, which files it is allowed to address, and so on. This is the role of the process descriptor, that is, of a task_struct type structure whose fields contain all the information related to a single process.

Tip: Process Context & Interrupt Context

Virtual Memory

Conceptually, a virtual memory is organized as an array of N contiguous byte-sized cells stored on disk. Each byte has a unique virtual address that serves as an index into the array. The contents of the array on disk are cached in main memory. As with any other cache in the memory hierarchy, the data on disk (the lower level) is partitioned into blocks that serve as the transfer units between the disk and the main memory (the upper level). VM systems handle this by partitioning the virtual memory into fixed-sized blocks called virtual pages (VPs). Each virtual page is P = 2^p bytes in size. Similarly, physical memory is partitioned into physical pages (PPs), also P bytes in size. (Physical pages are also referred to as page frames.)
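Because each page holds P = 2^p bytes, a virtual address splits cleanly into a virtual page number (the high bits) and an offset within the page (the low p bits). The sketch below shows that arithmetic; the function name is made up for this example, and p = 12 (4 KB pages) in the usage note is just an assumed value.

```python
def split_virtual_address(va, p):
    """Split a virtual address into (virtual page number, page offset).

    p is the number of offset bits, so the page size is P = 2**p bytes.
    """
    page_size = 1 << p
    vpn = va >> p                   # which virtual page the byte lives in
    offset = va & (page_size - 1)   # position of the byte inside that page
    return vpn, offset
```

For instance, with p = 12 the address 0x12345 falls in virtual page 0x12 at offset 0x345.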

At any point in time, the set of virtual pages is partitioned into three disjoint subsets:

- Unallocated: Pages that have not yet been allocated (or created) by the VM system. Unallocated blocks do not have any data associated with them, and thus do not occupy any space on disk.

- Cached: Allocated pages that are currently cached in physical memory.

- Uncached: Allocated pages that are not cached in physical memory.

To help us keep the different caches in the memory hierarchy straight, we will use the term SRAM cache to denote the L1, L2, and L3 cache memories between the CPU and main memory, and the term DRAM cache to denote the VM system’s cache that caches virtual pages in main memory.

Page Table

A page table is an array of page table entries (PTEs). Each page in the virtual address space has a PTE at a fixed offset in the page table. For our purposes, we will assume that each PTE consists of a valid bit and an n-bit address field. The valid bit indicates whether the virtual page is currently cached in DRAM. If the valid bit is set, the address field indicates the start of the corresponding physical page in DRAM where the virtual page is cached. If the valid bit is not set, then a null address indicates that the virtual page has not yet been allocated. Otherwise, the address points to the start of the virtual page on disk.
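The simplified PTE described above (a valid bit plus an address field) is enough to tell the three page states apart. Here is a minimal sketch of that rule; the `PTE` class and `page_state` function are hypothetical names for this illustration, and `None` plays the role of the null address.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PTE:
    valid: bool = False            # True if the page is cached in DRAM
    address: Optional[int] = None  # None stands in for the null address


def page_state(pte):
    """Classify a virtual page from its page table entry."""
    if pte.valid:
        return "cached"        # address is the physical page in DRAM
    if pte.address is None:
        return "unallocated"   # no data on disk or in memory
    return "uncached"          # address is the page's location on disk


# A tiny example page table indexed by virtual page number.
page_table = [
    PTE(),                             # VP 0: unallocated
    PTE(valid=True, address=0x4000),   # VP 1: cached in DRAM
    PTE(valid=False, address=0x9000),  # VP 2: allocated, on disk only
]
```

A reference to VP 2 in this table would be a DRAM cache miss, that is, a page fault that the operating system must service before the access can complete.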

(Tip: Multi-level page tables are used to save memory.)

Allocating Pages

Figure 9.8 shows the effect on our example page table when the operating system allocates a new page of virtual memory, for example, as a result of calling malloc. In the example, VP 5 is allocated by creating room on disk and updating PTE 5 to point to the newly created page on disk.
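The allocation step can be pictured as a single state change in the page table: the entry moves from "null address" to "address on disk", while the valid bit stays clear because nothing has been brought into DRAM yet. The sketch below assumes a page table stored as a list of dictionaries and a caller-supplied disk address; both are simplifications invented for this example.

```python
def new_page_table(num_pages):
    """Create a page table in which every virtual page is unallocated."""
    return [{"valid": False, "address": None} for _ in range(num_pages)]


def allocate_page(page_table, vp, disk_address):
    """Allocate virtual page vp by pointing its PTE at space on disk.

    The page is not loaded into DRAM here, so the valid bit stays False.
    """
    pte = page_table[vp]
    if pte["valid"] or pte["address"] is not None:
        raise ValueError(f"VP {vp} is already allocated")
    pte["address"] = disk_address


# Mirror the example in the text: allocate VP 5 in an 8-entry table.
table = new_page_table(8)
allocate_page(table, 5, disk_address=0x5000)
```

Only PTE 5 changes; the page becomes resident in memory later, when it is first referenced.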

Executable Object Files

Loading Executable Object Files

This process of copying the program into memory and then running it is known as loading. When the loader runs, it creates the memory image shown in Figure 7.13. Guided by the segment header table in the executable, it copies chunks of the executable into the code and data segments. Next, the loader jumps to the program’s entry point, which is always the address of the _start symbol (the startup code that eventually calls main).

How do loaders really work?

Each program in a Unix system runs in the context of a process with its own virtual address space. When the shell runs a program, the parent shell process forks a child process that is a duplicate of the parent. The child process invokes the loader via the execve system call. The loader deletes the child’s existing virtual memory segments and creates a new set of code, data, heap, and stack segments. The new stack and heap segments are initialized to zero. The new code and data segments are initialized to the contents of the executable file by mapping pages in the virtual address space to page-sized chunks of the executable file. Finally, the loader jumps to the _start address, which eventually calls the application’s main routine.

The interesting point is that, aside from some header information, the loader never actually copies any data from disk into memory. The copying is deferred until the CPU references a mapped virtual page, at which point the operating system automatically transfers the page from disk to memory using its paging mechanism. In other words, the data is paged in on demand (through page faults) by the virtual memory system the first time each page is referenced, either by the CPU when it fetches an instruction, or by an executing instruction when it references a memory location.
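The fork-then-execve sequence a shell performs can be imitated from user space. The following is a rough sketch, assuming a Unix-like system; `run_program` and `EXIT_CODE_DEMO` are names invented for this example, and Python's `os.fork`, `os.execv`, and `os.waitpid` are thin wrappers over the corresponding system calls.

```python
import os
import sys


def run_program(argv):
    """Fork a child, replace its image with argv[0], return its exit code."""
    pid = os.fork()
    if pid == 0:
        # Child: execv discards the current address space and loads the
        # new program. It only returns (by raising) if loading fails.
        try:
            os.execv(argv[0], argv)
        finally:
            os._exit(127)
    # Parent: wait for the child, as a shell does for a foreground job.
    _, status = os.waitpid(pid, 0)
    return os.WEXITSTATUS(status) if os.WIFEXITED(status) else -1


# Hypothetical demo command: a child interpreter that exits with code 7.
EXIT_CODE_DEMO = [sys.executable, "-c", "raise SystemExit(7)"]
```

Calling `run_program(EXIT_CODE_DEMO)` returns the child's exit code. Nothing in this code reads the executable's contents; the kernel maps the file and pages it in as the child touches it.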

Linux Virtual Memory Areas

Linux organizes the virtual memory as a collection of areas (also called segments). An area is a contiguous chunk of existing (allocated) virtual memory whose pages are related in some way. For example, the code segment, data segment, heap, shared library segment, and user stack are all distinct areas.

Figure 9.27 highlights the kernel data structures that keep track of the virtual memory areas in a process. The kernel maintains a distinct task structure (task_struct in the source code) for each process in the system.
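On Linux, a process's areas can be inspected from user space through the procfs file /proc/<pid>/maps, where each line describes one area as `start-end perms offset dev inode [path]`. The parser below is a small sketch built on that format; the `Area` type and function names are made up for this example, and `read_areas` works only on systems that provide procfs.

```python
from typing import NamedTuple


class Area(NamedTuple):
    start: int   # first address of the area
    end: int     # one past the last address of the area
    perms: str   # e.g. "r-xp": read, execute, private
    path: str    # backing file, or "" for anonymous memory


def parse_maps_line(line):
    """Parse one line of /proc/<pid>/maps into an Area."""
    fields = line.split(None, 5)
    start, end = (int(part, 16) for part in fields[0].split("-"))
    path = fields[5].strip() if len(fields) > 5 else ""
    return Area(start, end, fields[1], path)


def read_areas(pid="self"):
    """Return the areas of a process (Linux only, requires procfs)."""
    with open(f"/proc/{pid}/maps") as maps:
        return [parse_maps_line(line) for line in maps if line.strip()]
```

Running `read_areas()` in a Python process on Linux typically shows separate areas for the interpreter's code and data, the heap, shared libraries, and the stack.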

Shared Objects Revisited

For example, every C program requires functions from the standard C library such as printf. It would be extremely wasteful for each process to keep duplicate copies of this commonly used code in physical memory. Fortunately, memory mapping provides us with a clean mechanism for controlling how objects are shared by multiple processes.

A virtual memory area into which a shared object is mapped is often called a shared area. Similarly, an area into which a private object is mapped is called a private area.

Since each object has a unique file name, the kernel can quickly determine that process 1 has already mapped this object and can point the page table entries in process 2 to the appropriate physical pages. The key point is that only a single copy of the shared object needs to be stored in physical memory, even though the object is mapped into multiple shared areas. For convenience, we have shown the physical pages as being contiguous, but of course this is not true in general.

User-level Memory Mapping with the mmap Function

Unix processes can use the mmap function to create new areas of virtual memory and to map objects into these areas.
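The difference between shared and private mappings can be observed with Python's `mmap` module, which wraps the mmap system call. This is a small sketch, not a full treatment: `mmap_demo` is a name invented for this example, `ACCESS_WRITE` gives a shared, write-through mapping, and `ACCESS_COPY` gives a private, copy-on-write mapping.

```python
import mmap
import os
import tempfile


def mmap_demo():
    """Map one file privately and then shared, writing through each."""
    fd, path = tempfile.mkstemp()
    try:
        os.write(fd, b"hello world")

        # Private area: writes go to copy-on-write pages, not the file.
        with mmap.mmap(fd, 0, access=mmap.ACCESS_COPY) as private:
            private[0:5] = b"HELLO"
            private_view = bytes(private[:])
        with open(path, "rb") as f:
            after_private = f.read()

        # Shared area: writes are carried through to the underlying file.
        with mmap.mmap(fd, 0, access=mmap.ACCESS_WRITE) as shared:
            shared[0:5] = b"HELLO"
            shared.flush()
        with open(path, "rb") as f:
            after_shared = f.read()

        return private_view, after_private, after_shared
    finally:
        os.close(fd)
        os.unlink(path)
```

The private mapping sees its own modification while the file stays unchanged; the same write through the shared mapping changes the file itself.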

![CSAPP Virtual Memory, image 1](http://img.e-com-net.com/image/info10/b474baaea6c04531b7684ec9381b21f8.jpg)

![CSAPP Virtual Memory, image 2](http://img.e-com-net.com/image/info10/e547e253838246dba748bc73895b464a.jpg)

![CSAPP Virtual Memory, image 3](http://img.e-com-net.com/image/info10/f3683a97beb14b289acffde9a3a0418b.jpg)

![CSAPP Virtual Memory, image 4](http://img.e-com-net.com/image/info10/2374d2b51a584807b273da78fa70102e.jpg)

![CSAPP Virtual Memory, image 5](http://img.e-com-net.com/image/info10/8d225cc5f43448829145d0a794ee8151.jpg)

![CSAPP Virtual Memory, image 6](http://img.e-com-net.com/image/info10/f3f670c3f1c6467cb7bdd95e166f1615.jpg)

![CSAPP Virtual Memory, image 7](http://img.e-com-net.com/image/info10/52cf3c26b18a48c088ca72c3ed7c12c5.jpg)