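1. Project Goal

Build a highly available web cluster website.

2. Project Planning

2.1 IP address plan

2.2 Topology diagram

2.3 Notes

2.3.1 The database tier uses MySQL master-master replication, with MMM for high availability

2.3.2 The web cluster uses LVS in DR mode, with Keepalived for high availability

2.3.3 NFS keeps the site data in sync between the two web servers

2.3.4 Nagios monitors the status of every server

3. Environment Setup

Configure a local yum repository on every host

(A self-hosted intranet yum repository can also be used here.)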

mount -t iso9660 /dev/sr0 /media

echo '/dev/sr0 /media iso9660 defaults 0 0'>>/etc/fstab
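Running the fstab line above a second time appends a duplicate entry. The same applies to the later appends to /etc/rc.local, /etc/services and root's crontab. Below is a minimal idempotent alternative, assuming bash and a grep that supports `-x` and `-F`. `append_once` is a hypothetical helper name, not a system command.

```shell
#!/bin/bash
# append_once LINE FILE
# Appends LINE to FILE only if an identical line is not already present,
# so a setup step can be re-run without creating duplicate entries.
append_once() {
    local line=$1 file=$2
    # -x: match the whole line, -F: treat LINE as a fixed string (no regex)
    if ! grep -qxF -- "$line" "$file" 2>/dev/null; then
        printf '%s\n' "$line" >> "$file"
    fi
}
```

For example: `append_once '/dev/sr0 /media iso9660 defaults 0 0' /etc/fstab`.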

[root@web02 ~]# cat /etc/yum.repos.d/centos.repo

[centos6-iso]

name=centos

baseurl=file:///media

enabled=1

gpgcheck=0

Disable iptables and SELinux

service iptables stop;chkconfig iptables off

sed -i "s/SELINUX=enforcing/SELINUX=disabled/" /etc/selinux/config;setenforce 0

Time synchronization: set up an NTP server

[root@nagios ~]# rpm -qa|grep ntp

If it is not installed, install it with yum install -y ntp

chkconfig ntpd on

vim /etc/ntp.conf

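#restrict default kod nomodify notrap nopeer noquery # comment out this line

restrict 192.168.2.0 mask 255.255.255.0 nomodify notrap # add this line (allows clients on 192.168.2.0/24)

server s1d.time.edu.cn # upstream time server

server s2g.time.edu.cn # upstream time server

[root@nagios ~]# service ntpd restart # restart the NTP service

Then add a cron job on every other host so that it syncs with this time server: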

echo '*/5 * * * * /usr/sbin/ntpdate 192.168.2.11 >/dev/null 2>&1'>>/var/spool/cron/root

Edit the hosts file on every host

[root@nagios ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.2.62 mysql-m44

192.168.2.64 mysql-m45

192.168.2.40 mysql-mon40

192.168.2.65 web-lnmp01

192.168.2.66 web-lnmp02

192.168.2.60 nfs

192.168.2.50 lb-01

192.168.2.51 lb-02

192.168.2.11 nagios

3.1 Setting up the Nagios server

Required packages

nagios-3.5.1.tar.gz

nagios-plugins-2.1.1.tar.gz

nrpe-2.15.tar.gz

[root@nagios ~]# yum install -y httpd

[root@nagios ~]# chkconfig httpd on

Install Nagios

[root@nagios nagios]# groupadd nagios;useradd nagios -g nagios

[root@nagios ~]# tar xzvf nagios-3.5.1.tar.gz ;cd nagios

[root@nagios nagios]# yum install -y gcc gcc-c++ glibc glibc-common gd gd-devel mysql-server httpd php php-gd

[root@nagios nagios]# ./configure --prefix=/usr/local/nagios --with-command-group=nagios

[root@nagios nagios]#make all && make install

[root@nagios nagios]# make install-init;make install-commandmode;make install-config

[root@nagios nagios]# make install-webconf ## installs the Nagios web (Apache) configuration file

[root@nagios nagios]# htpasswd -cb /usr/local/nagios/etc/htpasswd.users nagiosadmin 123456

service httpd restart && service nagios restart

Install nagios-plugins

[root@nagios ~]# tar xf nagios-plugins-2.1.1.tar.gz

[root@nagios ~]# cd nagios-plugins-2.1.1

[root@nagios nagios-plugins-2.1.1]# ./configure --prefix=/usr/local/nagios/ --with-nagios-user=nagios --with-nagios-group=nagios && make && make install

[root@nagios ~]# /usr/local/nagios/bin/nagios -d /usr/local/nagios/etc/nagios.cfg

[root@nagios nagios-plugins-2.1.1]# chkconfig nagios on

[root@nagios nagios-plugins-2.1.1]# service nagios restart

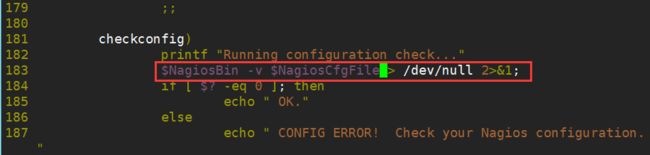

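Command to validate the Nagios configuration: /etc/init.d/nagios checkconfig

To see the detailed errors without typing /usr/local/nagios/bin/nagios -v /usr/local/nagios/etc/nagios.cfg yourself,

edit /etc/init.d/nagios (vim /etc/init.d/nagios) and, in the checkconfig section, delete the redirection of the output to /dev/null

so that the line reads: $NagiosBin -v $NagiosCfgFile;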

[root@nagios ~]# vim /usr/local/nagios/etc/nagios.cfg

cfg_file=/usr/local/nagios/etc/objects/hosts.cfg

cfg_file=/usr/local/nagios/etc/objects/services.cfg

#cfg_file=/usr/local/nagios/etc/objects/localhost.cfg

Monitoring the Nagios host itself

[root@nagios ~]# vim /usr/local/nagios/etc/objects/hosts.cfg

[root@nagios etc]# head -51 objects/localhost.cfg | grep -Ev '#|^$' > objects/hosts.cfg

[root@nagios etc]# vim objects/hosts.cfg

define host{

use linux-server

host_name 2.11-nagios

alias 2.11-nagios

address 127.0.0.1

}

define hostgroup{

hostgroup_name linux-servers

alias Linux Servers

members 2.11-nagios

}

[root@nagios etc]# grep -Ev '#|^$' objects/services.cfg

define service{

use local-service ; Name of service template to use

host_name 2.11-nagios

service_description PING

check_command check_ping!100.0,20%!500.0,60%

}

define service{

use local-service ; Name of service template to use

host_name 2.11-nagios

service_description Root Partition

check_command check_local_disk!20%!10%!/

}

define service{

use local-service ; Name of service template to use

host_name 2.11-nagios

service_description Current Users

check_command check_local_users!20!50

}

define service{

use local-service ; Name of service template to use

host_name 2.11-nagios

service_description Total Processes

check_command check_local_procs!250!400!RSZDT

}

define service{

use local-service ; Name of service template to use

host_name 2.11-nagios

service_description Current Load

check_command check_local_load!5.0,4.0,3.0!10.0,6.0,4.0

}

define service{

use local-service ; Name of service template to use

host_name 2.11-nagios

service_description Swap Usage

check_command check_local_swap!20!10

}

define service{

use local-service ; Name of service template to use

host_name 2.11-nagios

service_description SSH

check_command check_ssh

notifications_enabled 0

}

define service{

use local-service ; Name of service template to use

host_name 2.11-nagios

service_description HTTP

check_command check_http

notifications_enabled 0

}

[root@nagios etc]#chown nagios.nagios /usr/local/nagios/etc/objects/hosts.cfg

[root@nagios etc]#chown nagios.nagios /usr/local/nagios/etc/objects/services.cfg

[root@nagios etc]# /etc/init.d/nagios checkconfig

Total Warnings: 0

Total Errors: 0

Things look okay - No serious problems were detected during the pre-flight check

OK.

[root@nagios etc]# /etc/init.d/nagios restart

From a client, browse to http://192.168.2.11/nagios

3.2 Setting up the MySQL servers mysql-m62 and mysql-m64

mysql-5.5.52.tar.gz # installed from source

Install the dependencies

[root@xuegod62 mysql-5.5.52]# yum install -y ncurses-devel libaio-devel cmake

Unpack and build

[root@xuegod62 ~]# tar xf mysql-5.5.52.tar.gz;cd mysql-5.5.52

[root@xuegod64 mysql-5.5.52]# cmake -DCMAKE_INSTALL_PREFIX=/application/mysql -DMYSQL_DATADIR=/application/mysql/data -DMYSQL_UNIX_ADDR=/application/mysql/tmp/mysql.sock -DDEFAULT_CHARSET=utf8 -DDEFAULT_COLLATION=utf8_general_ci -DENABLED_LOCAL_INFILE=1 -DWITH_PARTITION_STORAGE_ENGINE=1 -DWITH_MYISAM_STORAGE_ENGINE=1 -DWITH_INNOBASE_STORAGE_ENGINE=1 -DWITH_MEMORY_STORAGE_ENGINE=1 -DWITH_READLINE=1

[root@xuegod62 mysql-5.5.52]# make -j 4 && make install

[root@xuegod62 mysql-5.5.52]# mysql -V

mysql Ver 14.14 Distrib 5.5.52, for Linux (x86_64) using readline 5.1

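Give the mysql user ownership of the MySQL installation directory (if the mysql user does not exist yet, create it first with useradd -s /sbin/nologin -M mysql),

then initialize the MySQL data files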

[root@mysql-m62 mysql-5.5.52]# chown -R mysql.mysql /application/mysql/

[root@mysql-m62 mysql-5.5.52]# /application/mysql/scripts/mysql_install_db --basedir=/application/mysql/ --datadir=/application/mysql/data/ --user=mysql

[root@mysql-m62 mysql]# cp support-files/mysql.server /etc/init.d/mysqld

[root@mysql-m62 mysql]# chmod +x /etc/init.d/mysqld

Startup error:

Enable MySQL at boot

[root@mysql-m62 mysql]# chkconfig mysqld on

[root@mysql-m62 mysql]# chkconfig --add mysqld

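Restart MySQL: service mysqld restart

[root@mysql-m62 mysql]# mysqladmin -uroot password '123456'

Drop the unused default databases

mysql-m64 is installed and configured the same way

Master-master replication configuration

mysql> create database web; # the database the website will use later (create it on both MySQL servers)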

On mysql-m62, edit the configuration: [root@mysql-m62 mysql]# vim /etc/my.cnf
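The edits made to /etc/my.cnf on each node are not shown. A typical set of [mysqld] settings for a two-node master-master pair is sketched below as a small bash generator. The values are assumptions inferred from the rest of this section:

- server-id 1 on m62 matches Master_Server_Id: 1 in the slave status.
- The binary log base names mysql_bin (m62) and mysql-bin (m64) match the change master to statements.
- replicate-do-db = web matches Replicate_Do_DB: web.

auto_increment_increment and auto_increment_offset stop the two masters from generating the same AUTO_INCREMENT values. The function name `gen_repl_cnf` is hypothetical.

```shell
#!/bin/bash
# gen_repl_cnf SERVER_ID OFFSET BINLOG_BASENAME
# Prints [mysqld] settings for one node of a two-node master-master pair.
# All values are assumptions; merge the output into the existing [mysqld] section.
gen_repl_cnf() {
    local id=$1 offset=$2 binlog=$3
    cat <<EOF
[mysqld]
server-id = $id
log-bin = $binlog
replicate-do-db = web
# Two masters: step AUTO_INCREMENT by 2, starting at 1 on one node and 2 on the other
auto_increment_increment = 2
auto_increment_offset = $offset
EOF
}
```

For example, run `gen_repl_cnf 1 1 mysql_bin` on m62 and `gen_repl_cnf 2 2 mysql-bin` on m64. Review the output, merge it into /etc/my.cnf, then restart mysqld.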

mysql> grant replication slave on *.* to 'slave'@'192.168.2.64' identified by '123456';

mysql>show master status;

/etc/my.cnf configuration on mysql-m64

mysql> change master to master_host='192.168.2.62',master_user='slave',master_password='123456',master_log_file='mysql_bin.000012',master_log_pos=107;

mysql> start slave;

1 mysql> show slave status \G;

2 *************************** 1. row ***************************

3 Slave_IO_State:

4 Master_Host: 192.168.2.62

5 Master_User: slave

6 Master_Port: 3306

7 Connect_Retry: 60

8 Master_Log_File: mysql_bin.000011

9 Read_Master_Log_Pos: 330

10 Relay_Log_File: mysql-m64-relay-bin.000002

11 Relay_Log_Pos: 4

12 Relay_Master_Log_File: mysql_bin.000011

13 Slave_IO_Running: No

14 Slave_SQL_Running: Yes

15 Replicate_Do_DB: web

16 Replicate_Ignore_DB:

17 Replicate_Do_Table:

18 Replicate_Ignore_Table:

19 Replicate_Wild_Do_Table:

20 Replicate_Wild_Ignore_Table:

21 Last_Errno: 0

22 Last_Error:

23 Skip_Counter: 0

24 Exec_Master_Log_Pos: 107

25 Relay_Log_Space: 107

26 Until_Condition: None

27 Until_Log_File:

28 Until_Log_Pos: 0

29 Master_SSL_Allowed: No

30 Master_SSL_CA_File:

31 Master_SSL_CA_Path:

32 Master_SSL_Cert:

33 Master_SSL_Cipher:

34 Master_SSL_Key:

35 Seconds_Behind_Master: NULL

36 Master_SSL_Verify_Server_Cert: No

37 Last_IO_Errno: 1236

38 Last_IO_Error: Got fatal error 1236 from master when reading data from binary log: 'Could not find first log file name in binary log index file'

39 Last_SQL_Errno: 0

40 Last_SQL_Error:

41 Replicate_Ignore_Server_Ids:

42 Master_Server_Id: 1

43 1 row in set (0.00 sec)

44

45 ERROR:

46 No query specified

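The output above shows an error. (The final "ERROR: No query specified" only comes from the ; typed after \G and can be ignored.)

Fixing the master-master replication error

Line 13, Slave_IO_Running: No, shows that replication is not running. Line 38 gives the reason: Last_IO_Error: Got fatal error 1236 from master when reading data from binary log: 'Could not find first log file name in binary log index file'. This means the binary log file named in change master to does not exist in the master's binary log index.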
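Checking lines 13, 14 and 38 by eye works for one server, but the check can also be scripted. Below is a minimal sketch, assuming bash and awk. `slave_ok` is a hypothetical helper that:

- reads the `\G` output on stdin;
- prints the two thread states, plus Last_IO_Error when it is non-empty;
- succeeds only when both threads report Yes.

```shell
#!/bin/bash
# slave_ok: reads "SHOW SLAVE STATUS\G" output on stdin and succeeds only
# when both the IO thread and the SQL thread are running.
slave_ok() {
    awk -F': ' '
        $1 ~ /Slave_IO_Running$/  { io = $2 }
        $1 ~ /Slave_SQL_Running$/ { sql = $2 }
        # Keep everything after the first ": " (the error text itself contains ": ")
        $1 ~ /Last_IO_Error$/ && NF > 1 { e = $0; sub(/^[^:]*: /, "", e); err = e }
        END {
            printf "IO=%s SQL=%s\n", io, sql
            if (err != "") print "Last_IO_Error: " err
            exit !(io == "Yes" && sql == "Yes")
        }'
}
```

Usage: `mysql -uroot -p123456 -e 'SHOW SLAVE STATUS\G' | slave_ok`.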

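Fix: on the master (2.62), run reset master (note that this deletes all existing binary logs on the master). On the slave (2.64), run stop slave and then reset slave. Then rerun change master to master_host='192.168.2.62',master_user='slave',master_password='123456',master_log_file='mysql_bin.000001',master_log_pos=107;

and then start slave.

Check the slave status with show slave status\G and confirm that the slave is communicating with the master.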
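Error 1236 here means the file name given as master_log_file did not exist on the master. Instead of typing the coordinates by hand, they can be copied from SHOW MASTER STATUS. Below is a minimal sketch; `change_master_sql` is a hypothetical helper that only prints the statement for review. The user and password are the ones used in this section.

```shell
#!/bin/bash
# change_master_sql HOST USER PASS LOG_FILE LOG_POS
# Prints a CHANGE MASTER TO statement. LOG_FILE and LOG_POS must be copied
# exactly from "SHOW MASTER STATUS" on the master: a log file name that the
# master does not have makes the slave fail with error 1236.
change_master_sql() {
    local host=$1 user=$2 pass=$3 file=$4 pos=$5
    printf "CHANGE MASTER TO master_host='%s',master_user='%s',master_password='%s',master_log_file='%s',master_log_pos=%d;\n" \
        "$host" "$user" "$pass" "$file" "$pos"
}
```

To capture the coordinates, run this on the master: `read -r file pos _ < <(mysql -uroot -p123456 -N -e 'SHOW MASTER STATUS')`. `-N` drops the column headers, and the File and Position columns arrive tab-separated. Then `change_master_sql 192.168.2.62 slave 123456 "$file" "$pos"` prints the statement to run on the slave.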

Next, configure 2.62 as a slave, with 2.64 as its master

On 2.64:

mysql> grant replication slave on *.* to 'slave'@'192.168.2.62' identified by '123456';

Query OK, 0 rows affected (0.00 sec)

On 192.168.2.62 (mysql-m62):

mysql> change master to master_host='192.168.2.64',master_user='slave',master_password='123456',master_log_file='mysql-bin.000001',master_log_pos=264;

Query OK, 0 rows affected (0.03 sec)

mysql> start slave;

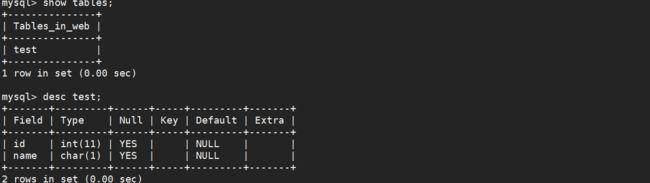

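Testing master-master replication

On m62, create a test table in the web database

mysql> use web;

mysql> create table test(id int,name char);

Check on m64 that the table exists, then drop it there and confirm on m62 that the drop was replicated too

This confirms that master-master replication is working.

3.3 Installing and configuring MMM and the agents

3.3.1 Install MMM

(mon40 runs the MMM monitor; m62 and m64 run mysql-mmm-agent)

Install the EPEL repository (epel-release-latest-6.noarch.rpm) on mon40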

rpm -ivh https://dl.fedoraproject.org/pub/epel/epel-release-latest-6.noarch.rpm

[root@mysql-mon40 mm]# yum install -y mysql-mmm*

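This fails with dependency errors. Resolve them by installing the required RPMs locally, then run the installation again: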

[root@mysql-mon40 mm]# yum install -y mysql-mmm*

Loaded plugins: fastestmirror, security

Setting up Install Process

Loading mirror speeds from cached hostfile

* epel: mirrors.tuna.tsinghua.edu.cn

Resolving Dependencies

......... (part of the installation output omitted) .........

Installed:

mysql-mmm.noarch 0:2.2.1-2.el6 mysql-mmm-agent.noarch 0:2.2.1-2.el6

mysql-mmm-monitor.noarch 0:2.2.1-2.el6 mysql-mmm-tools.noarch 0:2.2.1-2.el6

Dependency Installed:

perl-Class-Singleton.noarch 0:1.4-6.el6

perl-DBD-MySQL.x86_64 0:4.013-3.el6

perl-Date-Manip.noarch 0:6.24-1.el6

perl-Log-Dispatch.noarch 0:2.27-1.el6

perl-Log-Dispatch-FileRotate.noarch 0:1.19-4.el6

perl-Log-Log4perl.noarch 0:1.30-1.el6

perl-Mail-Sender.noarch 0:0.8.16-3.el6

perl-Mail-Sendmail.noarch 0:0.79-12.el6

perl-Net-ARP.x86_64 0:1.0.6-2.1.el6

perl-Params-Validate.x86_64 0:0.92-3.el6

perl-Path-Class.noarch 0:0.25-1.el6

perl-Proc-Daemon.noarch 0:0.19-1.el6

perl-Proc-ProcessTable.x86_64 0:0.48-1.el6

perl-XML-DOM.noarch 0:1.44-7.el6

perl-XML-RegExp.noarch 0:0.03-7.el6

perl-YAML-Syck.x86_64 0:1.07-4.el6

Complete!

Install mysql-mmm-agent on m62 and m64

[root@mysql-m62 ~]# rpm -ivh https://dl.fedoraproject.org/pub/epel/epel-release-latest-6.noarch.rpm

[root@mysql-mon40 mm]# ls

perl-Algorithm-Diff-1.1902-9.el6.noarch.rpm perl-Email-Date-Format-1.002-5.el6.noarch.rpm perl-MIME-Types-1.28-2.el6.noarch_(1).rpm rrdtool-1.4.7-1.el6.rfx.x86_64.rpm

perl-Email-Date-1.102-2.el6.noarch.rpm perl-MIME-Lite-3.027-2.el6.noarch.rpm perl-rrdtool-1.4.7-1.el6.rfx.x86_64.rpm

[root@mysql-mon40 ~]# scp mm/* 192.168.2.62:/root/

[root@mysql-mon40 ~]# scp mm/* 192.168.2.64:/root/

[root@mysql-m62 ~]# yum remove -y gd

[root@mysql-m62 ~]# yum localinstall libgd2-2.0.33-2_11.el6.x86_64.rpm gd-devel-2.0.33-2_11.el6.x86_64.rpm

[root@mysql-m62 ~]# yum localinstall -y perl-rrdtool-1.4.7-1.el6.rfx.x86_64.rpm rrdtool-1.4.7-1.el6.rfx.x86_64.rpm

[root@mysql-m62 ~]# yum localinstall -y perl-Algorithm-Diff-1.1902-9.el6.noarch.rpm perl-Email* perl-MIME*

[root@mysql-m62 ~]# yum install mysql-mmm-agent -y

On both m62 and m64, create the MMM users and grant their privileges

mysql> grant replication client on *.* to 'monitor'@'192.168.2.%' identified by '123456';

mysql> grant super,replication client,process on *.* to 'agentuser'@'192.168.2.%' identified by '123456';

mysql> flush privileges;

Configuration on mysql-mon40

[root@mysql-mon40 ~]# vim /etc/mysql-mmm/mmm_mon.conf

### Set the monitor IP address and the monitoring user name and password

include mmm_common.conf

<monitor>

ip 127.0.0.1

pid_path /var/run/mmm_mond.pid

bin_path /usr/lib/mysql-mmm/

status_path /var/lib/misc/mmm_mond.status

ping_ips 192.168.2.62, 192.168.2.64, 192.168.2.1

</monitor>

<host default>

monitor_user monitor

monitor_password 123456

</host>

debug 0

Database node configuration on m62 and m64

[root@mysql-m62 ~]# vim /etc/mysql-mmm/mmm_agent.conf

this mysql-m62

[root@mysql-m64 ~]# vim /etc/mysql-mmm/mmm_agent.conf

this mysql-m64

[root@mysql-mon40 ~]# scp /etc/mysql-mmm/mmm_common.conf 192.168.2.62:/etc/mysql-mmm/

[root@mysql-mon40 ~]# scp /etc/mysql-mmm/mmm_common.conf 192.168.2.64:/etc/mysql-mmm/

Start mysql-mmm-agent on both nodes and enable it at boot

[root@mysql-m62 ~]# /etc/init.d/mysql-mmm-agent start

[root@mysql-m62 ~]# echo "/etc/init.d/mysql-mmm-agent start">>/etc/rc.local

[root@mysql-m64 ~]# /etc/init.d/mysql-mmm-agent start

[root@mysql-m64 ~]# echo "/etc/init.d/mysql-mmm-agent start">>/etc/rc.local

Start the monitor and enable it at boot

[root@mysql-mon40 ~]# /etc/init.d/mysql-mmm-monitor start

[root@mysql-mon40 ~]# echo '/etc/init.d/mysql-mmm-monitor start'>>/etc/rc.local

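The check reports an error: 2.64 cannot be reached.

I could not find a fix after searching in many places; this will be retested after a reinstall.

3.4 Installing and configuring the NFS server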

[root@nfs ~]# yum install -y nfs-utils

[root@xuegod64 ~]# vim /etc/exports

/www *(rw,sync,root_squash)

[root@nfs ~]# service rpcbind restart;service nfs restart

Enable at boot: chkconfig nfs on; chkconfig rpcbind on

[root@nfs ~]#cd /www

[root@nfs www]# unzip Discuz_X3.2_SC_UTF8.zip

[root@nfs www]# mv upload/* .

[root@nfs www]# chmod 777 /www/ -R

3.5 Web server configuration

3.5.1 Mount the NFS share on both web servers

[root@xuegod65 ~]# yum install -y httpd php php-mysql

[root@xuegod65 ~]# service httpd restart;chkconfig httpd on

[root@xuegod65 ~]# showmount -e 192.168.2.60

[root@xuegod65 ~]# mount 192.168.2.60:/www /var/www/html/

Mount automatically at boot: [root@xuegod65 ~]# echo "192.168.2.60:/www /var/www/html nfs _netdev 0 0">>/etc/fstab

3.6 Cluster high-availability configuration

Install Keepalived on lb01 (lb02 is the same)

tar xf keepalived-1.2.13.tar.gz

[root@lb01 ~]# cd keepalived-1.2.13

[root@lb01 keepalived-1.2.13]# ./configure --prefix=/usr/local/keepalived/

[root@lb01 keepalived-1.2.13]# make && make install

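At startup, Keepalived looks for its configuration file in /etc/keepalived, so copy the files into place (create that directory first with mkdir -p /etc/keepalived):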

[root@lb01 ~]# cp /usr/local/keepalived/etc/rc.d/init.d/keepalived /etc/init.d/

[root@lb01 ~]# cp /usr/local/keepalived/etc/sysconfig/keepalived /etc/sysconfig/

[root@lb01 ~]# cp /usr/local/keepalived/etc/keepalived/keepalived.conf /etc/keepalived/

[root@lb01 ~]# cp /usr/local/keepalived/sbin/keepalived /usr/sbin/

[root@lb01 ~]# chkconfig keepalived on

[root@lb01 ~]# chmod +x /etc/init.d/keepalived

Install LVS (lb02 is the same)

First install the following packages

[root@lb01 ~]# yum install -y libnl* popt*

Check whether the LVS (IPVS) kernel module is available: [root@lb01 ~]# modprobe -l|grep ipvs

[root@lb01 ~]# rpm -ivh /media/Packages/ipvsadm-1.26-4.el6.x86_64.rpm

[root@lb01 ~]# ipvsadm -L -n # show the current LVS cluster

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

LVS + Keepalived configuration

Configuration on the lb01 node

[root@lb01 ~]# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

root@localhost

}

notification_email_from root@localhost

smtp_server localhost

smtp_connect_timeout 30

router_id lb01

}

vrrp_instance VI_1 {

state MASTER

interface eth0

virtual_router_id 51

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.2.69

}

}

virtual_server 192.168.2.69 80 {

delay_loop 6

lb_algo wrr

lb_kind DR

nat_mask 255.255.255.0

persistence_timeout 50

protocol TCP

real_server 192.168.2.65 80 {

weight 3

TCP_CHECK {

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

connect_port 80

}

}

real_server 192.168.2.66 80 {

weight 3

TCP_CHECK {

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

connect_port 80

}

}

}

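Note:

TCP_CHECK { # there must be a space between TCP_CHECK and {, otherwise only the first real server is recognized

Configuration on the lb02 node

First copy lb01's Keepalived configuration file to lb02, adjust it slightly, and save it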

[root@lb01 ~]#scp /etc/keepalived/keepalived.conf 192.168.2.52:/etc/keepalived/keepalived.conf
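On lb02, the "slight adjustments" are typically the VRRP role and priority. lb02 runs as BACKUP with a priority lower than lb01's 100, so lb01 holds the VIP while it is healthy. The router_id can also be renamed. virtual_router_id, authentication and the virtual_server section must stay identical on both nodes. Below is a minimal sed-based sketch; the function name `to_backup_conf` and the default priority of 90 are assumptions.

```shell
#!/bin/bash
# to_backup_conf [PRIORITY] < master.conf > backup.conf
# Derives the BACKUP node's keepalived.conf from the MASTER's by changing
# only the VRRP state, the priority and the router_id.
to_backup_conf() {
    local prio=${1:-90}
    sed -e 's/^\([[:space:]]*state[[:space:]]\{1,\}\)MASTER/\1BACKUP/' \
        -e "s/^\([[:space:]]*priority[[:space:]]\{1,\}\)[0-9]\{1,\}/\1$prio/" \
        -e 's/^\([[:space:]]*router_id[[:space:]]\{1,\}\)lb01/\1lb02/'
}
```

For example, on lb02 run `to_backup_conf 90 < /etc/keepalived/keepalived.conf > /etc/keepalived/keepalived.conf.new`. Review the new file, move it into place, then restart keepalived. The `^[[:space:]]*router_id` anchor leaves `virtual_router_id` untouched.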

[root@lb02 ~]# service keepalived restart

[root@lb02 ~]# ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.2.69:80 rr persistent 50

-> 192.168.2.65:80 Route 1 0 0

-> 192.168.2.66:80 Route 1 0 0

Configure the two real servers

Change the VIP in /etc/init.d/lvsrsdr to 192.168.2.69
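The lvsrsdr script itself is not included in this document. Below is a minimal sketch of what an LVS-DR real-server script usually does, assuming bash and the net-tools commands (ifconfig, route) shipped with CentOS 6:

- bind the VIP to lo:1 with a /32 mask, matching the ifconfig lo:1 output below;
- set arp_ignore=1 and arp_announce=2, so that only the director answers ARP requests for the VIP.

The function names and the DRY_RUN switch are hypothetical; with DRY_RUN=1 the commands are printed instead of executed.

```shell
#!/bin/bash
# Sketch of /etc/init.d/lvsrsdr for an LVS-DR real server (assumed content;
# the original script is not shown). VIP matches the keepalived virtual_server.
VIP=${VIP:-192.168.2.69}

# Run a command, or only print it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# Write a kernel parameter under /proc/sys (printed instead when DRY_RUN=1).
set_proc() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "echo $2 > /proc/sys/$1"
    else
        echo "$2" > "/proc/sys/$1"
    fi
}

# ARP settings that keep the real server from answering ARP for the VIP.
set_arp() {
    local ignore=$1 announce=$2 dev
    for dev in lo all; do
        set_proc "net/ipv4/conf/$dev/arp_ignore" "$ignore"
        set_proc "net/ipv4/conf/$dev/arp_announce" "$announce"
    done
}

lvs_rs() {
    case "$1" in
    start)
        # VIP on loopback with a /32 mask, so it is never announced on eth0
        run ifconfig lo:1 "$VIP" netmask 255.255.255.255 broadcast "$VIP" up
        run route add -host "$VIP" dev lo:1
        set_arp 1 2
        ;;
    stop)
        run ifconfig lo:1 down
        run route del -host "$VIP" dev lo:1
        set_arp 0 0
        ;;
    *)
        echo "Usage: $0 {start|stop}" >&2
        return 1
        ;;
    esac
}

# When executed with an argument (e.g. "service lvsrsdr start"), act on it.
if [ "${BASH_SOURCE[0]}" = "$0" ] && [ $# -gt 0 ]; then
    lvs_rs "$@"
    exit $?
fi
```

Installed as /etc/init.d/lvsrsdr, `service lvsrsdr start` applies the settings. `DRY_RUN=1 bash /etc/init.d/lvsrsdr start` only prints them for review.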

[root@xuegod65 ~]# chmod +x /etc/init.d/lvsrsdr

[root@xuegod65 ~]# echo "/etc/init.d/lvsrsdr start">>/etc/rc.d/rc.local

[root@xuegod65 ~]# service lvsrsdr start

[root@xuegod66 ~]# chmod +x /etc/init.d/lvsrsdr

[root@xuegod66 ~]# echo "/etc/init.d/lvsrsdr start">>/etc/rc.d/rc.local

[root@xuegod66 ~]# service lvsrsdr start

[root@xuegod65 ~]# ifconfig lo:1

lo:1 Link encap:Local Loopback

inet addr:192.168.2.69 Mask:255.255.255.255

UP LOOPBACK RUNNING MTU:65536 Metric:1

[root@xuegod66 ~]# ifconfig lo:1

lo:1 Link encap:Local Loopback

inet addr:192.168.2.69 Mask:255.255.255.255

UP LOOPBACK RUNNING MTU:65536 Metric:1

Database grants

[root@mysql-m62 ~]# mysql -uroot -p123456

mysql> grant all on web.* to web@'%' identified by '123456';

[root@mysql-m64 ~]# mysql -uroot -p123456

mysql> grant all on web.* to web@'%' identified by '123456';

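The NFS share was set up earlier and is already mounted on the web servers,

so install Discuz on the website.

The site can now be accessed directly.

[root@web01 ~]# yum install php-mysql # fixes the mysql_connect() error

On the NFS server, check the permissions: chmod -R 777 /www

On the NFS server, configure the config_global.php file

Configure read/write splitting for the website

Automated deployment script for the Nagios plugins

First, set up a temporary FTP service on the Nagios server (2.11), so that the deployment script created in the next step can download the packages

yum install -y vsftpd; service vsftpd start

Use rz to upload nagios-plugins-2.1.1.tar.gz and nrpe-2.15.tar.gz to /var/ftp/pub/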

[root@nagios yum]# cd /var/ftp/pub/

[root@nagios pub]# ls

nagios-plugins-2.1.1.tar.gz nrpe-2.15.tar.gz

Create the script that deploys nagios-plugins and NRPE automatically

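vim nagios.sh

#!/bin/bash

# Download the packages from the temporary FTP server on 2.11

wget ftp://192.168.2.11/pub/nrpe-2.15.tar.gz

wget ftp://192.168.2.11/pub/nagios-plugins-2.1.1.tar.gz

# Create the nagios group and user unless they already exist

getent group nagios >/dev/null || groupadd nagios

id nagios >/dev/null 2>&1 || useradd -u 8001 -s /sbin/nologin -g nagios nagios

tar xf nagios-plugins-2.1.1.tar.gz && cd nagios-plugins-2.1.1 && ./configure && make && make install && cd

sleep 5

# NRPE: daemon, check_nrpe plugin, sample nrpe.cfg and the xinetd entry

tar xf nrpe-2.15.tar.gz && cd nrpe-2.15 && ./configure && make all && make install && make install-plugin && make install-daemon-config && make install-xinetd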

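Once the deployment script is ready, copy it to every server.

To simplify this, we use sshpass to copy the script to all servers in batch:

[root@nagios scp]# wget http://sourceforge.net/projects/sshpass/files/sshpass/1.05/sshpass-1.05.tar.gz

After unpacking, compiling and installing it,

create a host list file (one line per server: IP, user, password)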

[root@nagios scp]# vim host

192.168.2.62 root 123456

192.168.2.64 root 123456

192.168.2.40 root 123456

192.168.2.65 root 123456

192.168.2.66 root 123456

192.168.2.60 root 123456

192.168.2.51 root 123456

192.168.2.52 root 123456

Create the transfer script t.sh

[root@nagios scp]# vim t.sh

#!/bin/bash

# host: one "IP user password" entry per line

while read -r ip user pass; do

[ -z "$ip" ] && continue

# StrictHostKeyChecking=no: sshpass cannot answer the first-connection host key prompt

sshpass -p "$pass" scp -o StrictHostKeyChecking=no /root/nagios.sh "$user@$ip":/root/ </dev/null

done < host

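Then use the send bar at the bottom of Xshell to send the following commands to all open sessions. This runs the script and completes the deployment on every host.

chmod +x /root/nagios.sh;sh nagios.sh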

yum install -y xinetd;service xinetd start;chkconfig xinetd on
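The Xshell broadcast step can also be scripted from the Nagios server by reusing the host file and sshpass. Below is a minimal sketch, assuming the host file format above; `remote_deploy` is a hypothetical name.

- xinetd is installed first, so that /etc/xinetd.d should exist when nagios.sh runs make install-xinetd.
- Each remote command reads stdin from /dev/null. Inside a while read loop, ssh would otherwise read the rest of the host file and only the first host would be processed.

```shell
#!/bin/bash
# remote_deploy HOSTFILE
# For each "IP user password" line: copy nagios.sh, then run the deployment remotely.
remote_deploy() {
    local hostfile=$1 ip user pass
    local cmd='yum install -y xinetd && chkconfig xinetd on && chmod +x /root/nagios.sh && sh /root/nagios.sh && service xinetd restart'
    while read -r ip user pass; do
        # Skip blank lines and comments in the host file
        case "$ip" in ''|'#'*) continue ;; esac
        # </dev/null: keep sshpass/ssh from consuming the rest of the host file
        sshpass -p "$pass" scp -o StrictHostKeyChecking=no /root/nagios.sh "$user@$ip":/root/ </dev/null
        sshpass -p "$pass" ssh -o StrictHostKeyChecking=no "$user@$ip" "$cmd" </dev/null
    done < "$hostfile"
}
```

Usage, from the directory that holds the host file: `remote_deploy host`.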

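Monitoring the servers from Nagios

Settings for all hosts:

Run the following in the Xshell session group, so that each command is sent to every host: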

echo "nrpe 5666/tcp #nrpe">>/etc/services

sed -i 's#127.0.0.1#127.0.0.1 192.168.2.11#g' /etc/xinetd.d/nrpe # xinetd's only_from takes a space-separated address list

Database server monitoring

Configuration on 2.40-mysql-mon, mysql-m62 and mysql-m64

vim /usr/local/nagios/etc/nrpe.cfg

allowed_hosts=127.0.0.1,192.168.2.11

command[check_users]=/usr/local/nagios/libexec/check_users -w 5 -c 10

command[check_load]=/usr/local/nagios/libexec/check_load -w 15,10,5 -c 30,25,20

command[check_sda1]=/usr/local/nagios/libexec/check_disk -w 20% -c 10% -p /dev/sda1 # change to the disk used on this host

command[check_zombie_procs]=/usr/local/nagios/libexec/check_procs -w 5 -c 10 -s Z

command[check_total_procs]=/usr/local/nagios/libexec/check_procs -w 200 -c 250

command[check_host_alive]=/usr/local/nagios/libexec/check_ping -H 192.168.2.40 -w 1000.0,80% -c 2000.0,100% -p 5 ## new; use each server's own IP

#command[check_mysql_status]=/usr/local/nagios/libexec/check_mysql -umonitor -P3306 -Hlocalhost --password='123456' -d discuz -w 60 -c 100 # not used here; MySQL is monitored through port 3306 instead
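The same nrpe.cfg edits, allowed_hosts and the per-host check_host_alive line, have to be made on every monitored host. Below is a minimal sketch of a non-interactive editor, assuming bash and awk; `set_kv` is hypothetical. It replaces the first line that begins with KEY=, or appends the setting if no such line exists. The key is matched literally, so names such as command[check_sda1] need no escaping.

```shell
#!/bin/bash
# set_kv KEY VALUE FILE
# Replaces the first line starting with "KEY=" in FILE, or appends "KEY=VALUE".
# VALUE should not contain backslashes (awk -v interprets escape sequences).
set_kv() {
    local key=$1 value=$2 file=$3 tmp
    tmp=$(mktemp) || return 1
    awk -v k="$key" -v v="$value" '
        !done && index($0, k "=") == 1 { print k "=" v; done = 1; next }
        { print }
        END { if (!done) print k "=" v }
    ' "$file" > "$tmp" && cat "$tmp" > "$file"   # cat keeps FILE's owner and mode
    local rc=$?
    rm -f "$tmp"
    return $rc
}
```

For example: `set_kv allowed_hosts 127.0.0.1,192.168.2.11 /usr/local/nagios/etc/nrpe.cfg`. Restart NRPE afterwards.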

Start NRPE

[root@mysql-mon40 ~]# /usr/local/nagios/bin/nrpe -c /usr/local/nagios/etc/nrpe.cfg -d

[root@mysql-nagios ~]#/usr/local/nagios/libexec/check_nrpe -H 192.168.2.40

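Result: CHECK_NRPE: Error - Could not complete SSL handshake.

This happens because nrpe.cfg (allowed_hosts) was changed earlier, and NRPE has to be restarted to load the change: run pkill nrpe, then

/usr/local/nagios/bin/nrpe -c /usr/local/nagios/etc/nrpe.cfg -d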

[root@nagios ~]# /usr/local/nagios/libexec/check_nrpe -H 192.168.2.40

NRPE v2.15 # success

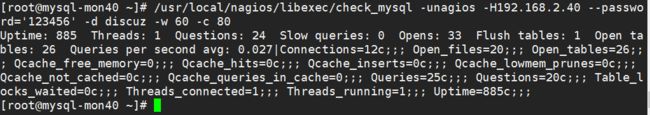

Running check_mysql locally fails with an error

[root@mysql-mon40 nrpe-2.15]# /usr/local/nagios/libexec/check_mysql -h

/usr/local/nagios/libexec/check_mysql: error while loading shared libraries: libmysqlclient.so.18: cannot open shared object file: No such file or directory

Fix: add the line /usr/local/mysql/lib to /etc/ld.so.conf

vim /etc/ld.so.conf

include ld.so.conf.d/*.conf

/usr/local/mysql/lib

Then run ldconfig to apply it

Add an account: mysql> GRANT PROCESS, SUPER, REPLICATION CLIENT ON *.* TO 'nagios'@'192.168.2.%' IDENTIFIED BY '123456' with grant option;

mysql> flush privileges;

Verify NRPE locally

Verify from the Nagios server

Then complete the configuration files

commands.cfg

[root@nagios ~]# vim /usr/local/nagios/etc/objects/commands.cfg

define command{

command_name check_nrpe

command_line $USER1$/check_nrpe -H $HOSTADDRESS$ -c $ARG1$

}

define command{

command_name check_host_alive

command_line $USER1$/check_ping -H $HOSTADDRESS$ -w 3000.0,80% -c 5000.0,100% -p 5

}

Create the configuration files 192.168.2.40.cfg, 192.168.2.62.cfg and 192.168.2.64.cfg (see attachment)
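The per-host .cfg attachments are not included here. Below is a minimal sketch that generates an equivalent file. It assumes:

- the linux-server host template and generic-service service template from the Nagios sample templates.cfg;
- the check_nrpe command defined above;
- that nagios.cfg loads the output directory, for example with cfg_dir.

`gen_host_cfg` is hypothetical, and the service names simply reuse the NRPE command names.

```shell
#!/bin/bash
# gen_host_cfg HOST_NAME ADDRESS [NRPE_COMMAND...]
# Prints a Nagios host definition plus one service per NRPE command.
# Each NRPE_COMMAND must match a command[...] entry in that host's nrpe.cfg.
gen_host_cfg() {
    local name=$1 addr=$2 check
    shift 2
    cat <<EOF
define host{
    use                 linux-server
    host_name           $name
    alias               $name
    address             $addr
}
EOF
    for check in "$@"; do
        cat <<EOF

define service{
    use                 generic-service
    host_name           $name
    service_description $check
    check_command       check_nrpe!$check
}
EOF
    done
}
```

For example, the following writes the file (the servers directory is an assumption):

`gen_host_cfg 2.40-mysql-mon 192.168.2.40 check_users check_load check_sda1 check_zombie_procs check_total_procs check_host_alive > /usr/local/nagios/etc/servers/192.168.2.40.cfg`

Then run the nagios -v check. The port 3306 check mentioned earlier would be an extra service using the check_tcp command from the sample commands.cfg, e.g. `check_command check_tcp!3306`.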

Restart NRPE and xinetd on each of these three hosts

Command: pkill nrpe; /usr/local/nagios/bin/nrpe -c /usr/local/nagios/etc/nrpe.cfg -d;service xinetd restart

On the Nagios server: service nagios restart

Web server monitoring

Monitoring hosts 2.65-web01 and 2.66-web02

On the Nagios server, create the configuration files 192.168.2.65.cfg and 192.168.2.66.cfg (see the separate attachment)

Modify nrpe.cfg on 2.65-web01 and 2.66-web02

command[check_sda1]=/usr/local/nagios/libexec/check_disk -w 20% -c 10% -p /dev/sda1 ## modified

command[check_host_alive]=/usr/local/nagios/libexec/check_ping -H 192.168.2.65 -w 1000.0,80% -c 2000.0,100% -p 5 ## new

Restart NRPE and xinetd

Command: pkill nrpe; /usr/local/nagios/bin/nrpe -c /usr/local/nagios/etc/nrpe.cfg -d;service xinetd restart

Verify the configuration on the Nagios server

[root@nagios servers]# /usr/local/nagios/bin/nagios -v /usr/local/nagios/etc/nagios.cfg

Total Warnings: 0

Total Errors: 0

[root@nagios servers]# service nagios restart

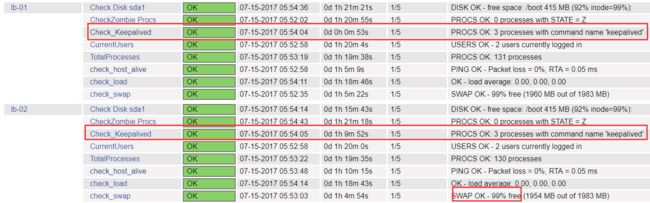

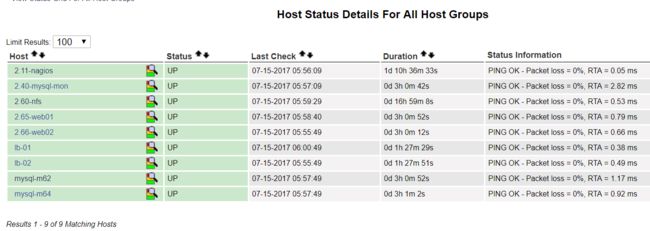

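Open http://192.168.2.11/nagios in a browser to view the result

The Total Processes check on 2.66-web02 shows WARNING because the default thresholds are too low:

command[check_total_procs]=/usr/local/nagios/libexec/check_procs -w 150 -c 200

Change it to command[check_total_procs]=/usr/local/nagios/libexec/check_procs -w 300 -c 500

After restarting NRPE, the check shows OK

The HTTP WARNING on the 2.11 Nagios host itself is caused by the missing home page in /var/www/html/

echo "2.11">/var/www/html/index.html; after restarting httpd, the check shows OK

That leaves the two LVS/Keepalived machines without monitoring

Keepalived server monitoring

Check method: /usr/local/nagios/libexec/check_procs -w:2 -c:4 -C keepalived

check_procs is a plugin that checks the processes running on the operating system. Its arguments can match whether a given process exists, how many instances are running, and so on.

Here it counts the keepalived processes: more than 2 gives WARNING, and more than 4 gives CRITICAL.

On the lb01 server, 192.168.2.51 (lb02 is the same)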

[root@lb01 ~]# /usr/local/nagios/libexec/check_procs -w:2 -c:4 -C keepalived

PROCS WARNING: 3 processes with command name 'keepalived' | procs=3;:2;:4;0;

[root@lb01 ~]# ps -ef|grep keepalived|grep -v grep

root 36304 1 0 01:12 ? 00:00:00 keepalived -D

root 36306 36304 0 01:12 ? 00:00:00 keepalived -D

root 36307 36304 0 01:12 ? 00:00:00 keepalived -D
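The WARNING above follows from Nagios threshold-range syntax. In -w:2 the argument is the range ":2", whose start defaults to 0, so 0 to 2 processes is OK. The three keepalived processes listed by ps (one parent, two children) exceed that range. Below is a small bash model of how a plugin evaluates such ranges, assuming integer values; `range_alert` is hypothetical and only mirrors the range rules from the Nagios plugin guidelines.

```shell
#!/bin/bash
# range_alert VALUE RANGE
# Succeeds (meaning "alert") when integer VALUE is outside RANGE, or inside it
# when RANGE starts with "@". Accepted forms: "N" (0..N), "N:" (N..inf),
# "~:N" (-inf..N), ":N" (empty start counts as 0), "M:N" and "@M:N".
range_alert() {
    local value=$1 range=$2 inside=0 start end below=0 above=0
    if [[ $range == @* ]]; then
        inside=1
        range=${range#@}
    fi
    if [[ $range == *:* ]]; then
        start=${range%%:*}
        end=${range#*:}
    else
        start=0
        end=$range
    fi
    if [[ -z $start ]]; then
        start=0
    fi
    # "~" means no lower bound; an empty end means no upper bound
    if [[ $start != "~" ]] && (( value < start )); then
        below=1
    fi
    if [[ -n $end ]] && (( value > end )); then
        above=1
    fi
    if (( inside )); then
        (( !(below || above) ))
    else
        (( below || above ))
    fi
}
```

Under these rules, `-w 3:3 -c 1:` would warn unless exactly three keepalived processes are running, and go critical when none are running.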

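Modify its NRPE configuration file

[root@lb01 ~]# vim /usr/local/nagios/etc/nrpe.cfg

## allow the Nagios server to query NRPE

allowed_hosts=127.0.0.1,192.168.2.11

On the Nagios server, create the monitoring configuration files for lb01 and lb02:

192.168.2.51.cfg and 192.168.2.52.cfg (see the separate attachment)

Restart NRPE and xinetd on lb01 and lb02

Command: pkill nrpe; /usr/local/nagios/bin/nrpe -c /usr/local/nagios/etc/nrpe.cfg -d;service xinetd restart

Check the monitoring status:

All monitoring is now deployed; here is the overall picture

One step was forgotten: create a host group as well (optional, it only makes grouped viewing easier)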

[root@nagios nagios]# pwd

/usr/local/nagios

[root@nagios nagios]# vim etc/servers/group.cfg

define hostgroup{

hostgroup_name linux-server

alias Linux Server

members 2.60-nfs,2.65-web01,2.66-web02,2.40-mysql-mon,mysql-m62,mysql-m64,lb-01,lb-02

}

Restart Nagios

Performance tuning

MySQL server tuning (query optimization)

vim /etc/my.cnf

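# MySQL 5.5 no longer accepts the old set-variable= syntax; set options directly under [mysqld]

[mysqld]

wait_timeout = 10

max_connect_errors = 100

max_connections = 500

max_user_connections = 100

slow_query_log = 1 # enable the slow query log (default threshold 10 seconds)

long_query_time = 5 # log queries taking longer than 5 seconds

log-queries-not-using-indexes

key_buffer_size = 128M

Web server tuning (kernel network parameters: add them to /etc/sysctl.conf and apply them with sysctl -p)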

net.core.somaxconn = 32768

net.core.wmem_default = 8388608

net.core.rmem_default = 8388608

net.core.rmem_max = 16777216

net.core.wmem_max = 16777216

net.ipv4.tcp_timestamps = 0

net.ipv4.tcp_synack_retries = 2

net.ipv4.tcp_syn_retries = 2

net.ipv4.tcp_tw_recycle = 1

#net.ipv4.tcp_tw_len = 1

net.ipv4.tcp_tw_reuse = 1

net.ipv4.tcp_mem = 94500000 915000000 927000000

net.ipv4.tcp_max_orphans = 3276800

net.ipv4.ip_local_port_range = 1024 65535

# end of network parameter tuning

Raising the maximum number of open files

[root@web01 ~]# ulimit -n

1024

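Temporary change: ulimit -SHn 51201

This is lost on reboot. To reapply it at boot, add the command to /etc/rc.local (it then applies only to processes started from rc.local).

To change it permanently, edit the file as follows: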

vi /etc/security/limits.conf

# add

* soft nofile 51200

* hard nofile 51200

Database backup script

[root@mysql-m62 scripts]# vim mysqlbackup.sh

#!/bin/bash

#time 2017-08-16

DATE=$(date +%Y-%m-%d)

username=root

password=123456

database=web

backdir=/data/backup/db

mkdir -p "$backdir"

# no -d (--no-data), so the dump contains the data as well as the table definitions

if mysqldump -u"$username" -p"$password" "$database" > "$backdir/mysql-$DATE.sql" && cd "$backdir" && tar czf "mysql-$DATE.tar.gz" "mysql-$DATE.sql"; then

rm -f "$backdir/mysql-$DATE.sql"

echo "mysql-$DATE.sql was backed up successfully"|mail -s "mysql-$DATE backup-success" [email protected]

else

echo "mysql-$DATE.sql backup failed"|mail -s "mysql-$DATE failed-backup" [email protected]

fi

# delete archives older than 30 days

find "$backdir" -type f -mtime +30 -exec rm -f {} \;

Add it to cron so that it runs every night at 1:00 AM

[root@mysql-m62 scripts]# crontab -e

*/5 * * * * /usr/sbin/ntpdate 192.168.2.11 >/dev/null 2>&1

0 1 * * * sh /scripts/mysqlbackup.sh