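Introduction: Hadoop is a platform for big data, and HBase is a non-relational (NoSQL) database that runs on top of Hadoop. Phoenix lets you work with HBase using SQL, just as you would with a relational database.

1. Environment

On Ubuntu, install the JDK and SSH first.

1.1 Installing the JDK (Java)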

Install vim: sudo apt-get install vim

Extract the archive: tar -zxvf jdk-8u181-linux-x64.tar.gz

Create the target directory: sudo mkdir /usr/local/java

Move the JDK into /usr/local/java/: sudo mv ~/jdk1.8.0_181 /usr/local/java/

Configure the environment variables: open /etc/profile and append the lines below.

sudo vim /etc/profile

export JAVA_HOME=/usr/local/java/jdk1.8.0_181

export JRE_HOME=${JAVA_HOME}/jre

export CLASSPATH=.:${JAVA_HOME}/lib:${JRE_HOME}/lib

export PATH=${JAVA_HOME}/bin:$PATH

Apply the configuration:

source /etc/profile

Check the Java version:

java -version
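If `java -version` is not found after `source /etc/profile`, the usual culprit is a typo in the export lines, such as a missing `$` before `{JAVA_HOME}`. The function below is a minimal bash sketch that checks the three things those lines are meant to set up; the name `check_java_home` is hypothetical and not part of any JDK tooling.

```bash
#!/usr/bin/env bash
# Hypothetical helper: verify that JAVA_HOME points at a usable JDK.
# Run it in the shell where /etc/profile was sourced.
check_java_home() {
  # 1. JAVA_HOME must be set and non-empty
  if [ -z "${JAVA_HOME:-}" ]; then
    echo "JAVA_HOME is not set" >&2
    return 1
  fi
  # 2. the java launcher must exist and be executable
  if [ ! -x "$JAVA_HOME/bin/java" ]; then
    echo "no executable java under $JAVA_HOME/bin" >&2
    return 1
  fi
  # 3. PATH must contain $JAVA_HOME/bin (colons added so only whole entries match)
  case ":$PATH:" in
    *":$JAVA_HOME/bin:"*) ;;
    *) echo "$JAVA_HOME/bin is not on PATH" >&2; return 1 ;;
  esac
  echo "JAVA_HOME OK: $JAVA_HOME"
}
```

Usage: `check_java_home && java -version`.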

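1.2 Passwordless SSH login

Install the SSH server:

sudo apt-get install openssh-server

cd ~/.ssh/ # if this directory does not exist, run ssh localhost once first

ssh-keygen -t rsa # press Enter at every prompt

cat id_rsa.pub >> authorized_keys # authorize the key

Then run ssh localhost to check that you can log in without a password.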

Example:

cc@cc-fibric:~/.ssh$ ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/home/cc/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /home/cc/.ssh/id_rsa.

Your public key has been saved in /home/cc/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:DXbPG+Gxf/Ot+Q94bBWxWlWCp0MvH9Ql+zhq97YRx14 cc@cc-fibric

The key's randomart image is:

+---[RSA 2048]----+

| .oo*|

| o o+=|

| o ..o=.+ |

| . + +++=+.|

| S . *=ooE|

| B.++|

| = Boo|

| . + =*|

| o=O|

+----[SHA256]-----+

Trying ssh localhost to see whether the login works:

cc@cc-fibric:~$ ssh localhost

The authenticity of host 'localhost (127.0.0.1)' can't be established.

ECDSA key fingerprint is SHA256:TpzOW91Qn9qGniVI1ZzuVcvwccerM1U9xskPi7b0ZQw.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'localhost' (ECDSA) to the list of known hosts.

cc@localhost's password:

Welcome to Ubuntu 16.04.4 LTS (GNU/Linux 4.13.0-36-generic x86_64)

* Documentation: https://help.ubuntu.com

* Management: https://landscape.canonical.com

* Support: https://ubuntu.com/advantage

174 packages can be updated.

11 updates are security updates.

*** System restart required ***

The programs included with the Ubuntu system are free software;

the exact distribution terms for each program are described in the

individual files in /usr/share/doc/*/copyright.

Ubuntu comes with ABSOLUTELY NO WARRANTY, to the extent permitted by

applicable law.
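The steps in 1.2 prompt for input. Below is a minimal non-interactive sketch of the same steps, assuming OpenSSH's `ssh-keygen` is installed. `setup_local_ssh_key` is a hypothetical helper name, and it also tightens file permissions, which the steps above do not mention.

```bash
#!/usr/bin/env bash
# Hypothetical helper: non-interactive version of the passwordless-SSH steps.
# $1 = ssh directory (defaults to ~/.ssh).
setup_local_ssh_key() {
  local dir="${1:-$HOME/.ssh}"
  mkdir -p "$dir"
  # with StrictModes (the default) sshd rejects keys if ~/.ssh is writable by others
  chmod 700 "$dir"
  # -N "" = empty passphrase (same as pressing Enter), -q = quiet
  if [ ! -f "$dir/id_rsa" ]; then
    ssh-keygen -t rsa -N "" -f "$dir/id_rsa" -q || return 1
  fi
  touch "$dir/authorized_keys"
  # append the public key only if that exact line is not already authorized
  if ! grep -qxF "$(cat "$dir/id_rsa.pub")" "$dir/authorized_keys"; then
    cat "$dir/id_rsa.pub" >> "$dir/authorized_keys"
  fi
  chmod 600 "$dir/authorized_keys"
}
```

After `setup_local_ssh_key`, `ssh localhost` should no longer ask for a password. Running it twice is safe: the existing key is reused and the public key is not appended a second time.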

2 Pseudo-distributed deployment of Hadoop

2.1 Installing hadoop-2.6.0

First download hadoop-2.6.0.tar.gz from the mirror below:

http://mirrors.hust.edu.cn/apache/hadoop/common/

Then install it:

$ sudo tar -zxvf hadoop-2.6.0.tar.gz -C /usr/local # extract into /usr/local

$ cd /usr/local

$ sudo mv hadoop-2.6.0 hadoop # rename the directory to hadoop

$ sudo chown -R hadoop ./hadoop # make the hadoop user the owner of the files

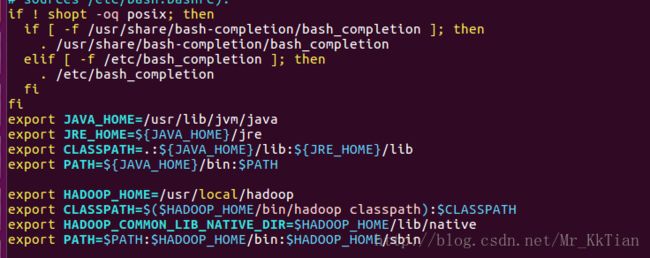

Configure the Hadoop environment variables by adding the following lines to ~/.bashrc:

export HADOOP_HOME=/usr/local/hadoop

export CLASSPATH=$($HADOOP_HOME/bin/hadoop classpath):$CLASSPATH

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

As before, run source ~/.bashrc to apply the settings, then check whether Hadoop was installed successfully.
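Adding the export lines above to ~/.bashrc by hand (or with `cat >>`) twice leaves duplicate entries. A small bash sketch that adds each line only if it is missing; `append_once` and `add_hadoop_env` are hypothetical names, and the rc file path is a parameter so it can be tried on a scratch file first.

```bash
#!/usr/bin/env bash
# Hypothetical helper: append a line to a file only if that exact line is absent.
append_once() {
  local file="$1" line="$2"
  touch "$file"
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# The Hadoop variables from the step above. Single quotes keep $HADOOP_HOME etc.
# unexpanded, so they are evaluated later, when ~/.bashrc is sourced.
add_hadoop_env() {
  local rc="${1:-$HOME/.bashrc}"
  append_once "$rc" 'export HADOOP_HOME=/usr/local/hadoop'
  append_once "$rc" 'export CLASSPATH=$($HADOOP_HOME/bin/hadoop classpath):$CLASSPATH'
  append_once "$rc" 'export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native'
  append_once "$rc" 'export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin'
}
```

Usage: `add_hadoop_env && source ~/.bashrc`.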

Check the Hadoop version (the sample output below, like the formatting log later on, comes from Hadoop 2.7.6):

cc@cc-fibric:/usr/local$ hadoop version

Hadoop 2.7.6

Subversion https://[email protected]/repos/asf/hadoop.git -r 085099c66cf28be31604560c376fa282e69282b8

Compiled by kshvachk on 2018-04-18T01:33Z

Compiled with protoc 2.5.0

From source with checksum 71e2695531cb3360ab74598755d036

This command was run using /usr/local/hadoop/share/hadoop/common/hadoop-common-2.7.6.jar

2.2 Pseudo-distributed deployment of Hadoop

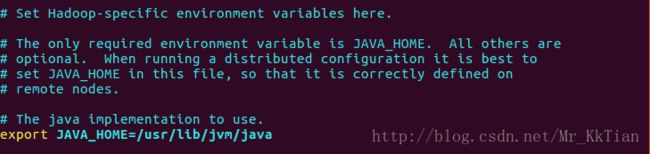

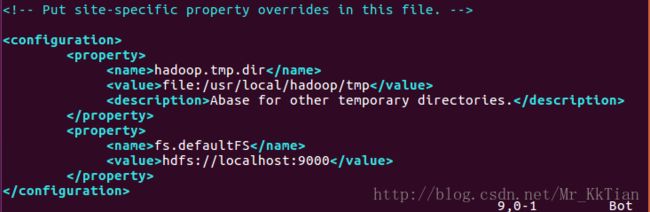

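Hadoop can run on a single node in pseudo-distributed mode: each Hadoop daemon runs as a separate Java process, the node acts as both NameNode and DataNode, and files are read from HDFS. The configuration files are in /usr/local/hadoop/etc/hadoop/, and pseudo-distributed mode requires changes to two of them, core-site.xml and hdfs-site.xml. Hadoop configuration files are XML; each setting is declared as a property with a name and a value. First, add the JDK path to hadoop-env.sh (export JAVA_HOME=/usr/local/java/jdk1.8.0_181, the JDK installed in section 1.1), since the daemons are launched over SSH and may not pick up the JAVA_HOME set in /etc/profile.

Next, edit core-site.xml: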

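    <configuration>
        <property>
            <name>hadoop.tmp.dir</name>
            <value>file:/usr/local/hadoop/tmp</value>
            <description>Abase for other temporary directories.</description>
        </property>
        <property>
            <name>fs.defaultFS</name>
            <value>hdfs://localhost:9000</value>
        </property>
    </configuration>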

Next, edit hdfs-site.xml:

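    <configuration>
        <property>
            <name>dfs.replication</name>
            <value>1</value>
        </property>
        <property>
            <name>dfs.namenode.name.dir</name>
            <value>file:/usr/local/hadoop/tmp/dfs/name</value>
        </property>
        <property>
            <name>dfs.datanode.data.dir</name>
            <value>file:/usr/local/hadoop/tmp/dfs/data</value>
        </property>
    </configuration>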

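A note on these settings: Hadoop will run with only fs.defaultFS and dfs.replication configured (that is what the official tutorial does). However, if hadoop.tmp.dir is not set, the default temporary directory is /tmp/hadoop-hadoop (that is, /tmp/hadoop-${user.name}), which the system may clean up on reboot, forcing you to run format again. That is why it is set here, together with dfs.namenode.name.dir and dfs.datanode.data.dir; without them, the following steps may fail.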
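The two files can also be written in one go. A minimal sketch assuming bash and the /usr/local/hadoop layout used above; `write_hdfs_conf` is a hypothetical helper, and it overwrites any existing core-site.xml and hdfs-site.xml in the target directory.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write core-site.xml and hdfs-site.xml with the values above.
# $1 = Hadoop config directory (defaults to /usr/local/hadoop/etc/hadoop).
write_hdfs_conf() {
  local conf="${1:-/usr/local/hadoop/etc/hadoop}"
  local base="/usr/local/hadoop/tmp"   # hadoop.tmp.dir from the text
  # unquoted EOF: $base is expanded when the file is written
  cat > "$conf/core-site.xml" <<EOF
<?xml version="1.0"?>
<configuration>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>file:$base</value>
    <description>Abase for other temporary directories.</description>
  </property>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://localhost:9000</value>
  </property>
</configuration>
EOF
  cat > "$conf/hdfs-site.xml" <<EOF
<?xml version="1.0"?>
<configuration>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>file:$base/dfs/name</value>
  </property>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>file:$base/dfs/data</value>
  </property>
</configuration>
EOF
}
```

Run it as the user that owns /usr/local/hadoop, then continue with the formatting step.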

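- Configure mapred-site.xml

Create it by copying the template: cp mapred-site.xml.template mapred-site.xml

Run: vim mapred-site.xml

and change the file to:

    <configuration>
        <property>
            <name>mapreduce.framework.name</name>
            <value>yarn</value>
        </property>
    </configuration>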

Or:

    <configuration>
        <property>
            <name>mapred.job.tracker</name>
            <value>localhost:9001</value>
        </property>
    </configuration>

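Note: YARN is the resource-management module that was split out of MapReduce. Whether to run it in a single-machine pseudo-distributed setup depends on the situation, because YARN slows jobs down considerably, so this tutorial records both options, with and without YARN. For more about YARN, see the related reading later in the post.

With the second option (mapred.job.tracker), you can skip yarn-site.xml, because the YARN module is never used; this also avoids the problem described in the last part.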

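- Configure yarn-site.xml: run

vim yarn-site.xml and change it to:

    <configuration>
        <property>
            <name>yarn.nodemanager.aux-services</name>
            <value>mapreduce_shuffle</value>
        </property>
        <property>
            <name>yarn.nodemanager.aux-services.mapreduce.shuffle.class</name>
            <value>org.apache.hadoop.mapred.ShuffleHandler</value>
        </property>
    </configuration>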
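The YARN variant can be written the same way. This sketch writes the `mapreduce.framework.name = yarn` version of mapred-site.xml (not the `mapred.job.tracker` alternative) plus the yarn-site.xml above; `write_yarn_conf` is a hypothetical name, and existing files are overwritten.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write the YARN version of mapred-site.xml and yarn-site.xml.
# $1 = Hadoop config directory (defaults to /usr/local/hadoop/etc/hadoop).
write_yarn_conf() {
  local conf="${1:-/usr/local/hadoop/etc/hadoop}"
  # quoted 'EOF': nothing inside the documents is expanded
  cat > "$conf/mapred-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
</configuration>
EOF
  cat > "$conf/yarn-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
  <property>
    <name>yarn.nodemanager.aux-services.mapreduce.shuffle.class</name>
    <value>org.apache.hadoop.mapred.ShuffleHandler</value>
  </property>
</configuration>
EOF
}
```

If you choose the non-YARN option instead, write only mapred-site.xml with the mapred.job.tracker property and leave yarn-site.xml alone.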

Once the configuration is done, format the NameNode:

$ ./bin/hdfs namenode -format

If the fifth line from the end of the output reads Exiting with status 0, formatting succeeded; Exiting with status 1 means it failed.

Start the NameNode and DataNode daemons, then check the result:

$ ./sbin/start-dfs.sh

$ jps

Example:

cc@cc-fibric:~$ cd /usr/local/hadoop/

cc@cc-fibric:/usr/local/hadoop$ ls

bin include libexec NOTICE.txt sbin tmp

etc lib LICENSE.txt README.txt share

cc@cc-fibric:/usr/local/hadoop$ sudo ./bin/hdfs namenode -format

18/09/28 11:21:42 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = cc-fibric/127.0.1.1

STARTUP_MSG: args = [-format]

STARTUP_MSG: version = 2.7.6

STARTUP_MSG: classpath = /usr/local/hadoop/etc/hadoop:/usr/local/hadoop/share/hadoop/common/lib/commons-configuration-1.6.jar:/usr/local/hadoop/share/hadoop/common/lib/zookeeper-3.4.6.jar:/usr/local/hadoop/share/hadoop/common/lib/slf4j-log4j12-

..........(omitted)..........

2.7.6.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-2.7.6-tests.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-2.7.6.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-common-2.7.6.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-core-2.7.6.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-app-2.7.6.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-plugins-2.7.6.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-2.7.6.jar:/contrib/capacity-scheduler/*.jar

STARTUP_MSG: build = https://[email protected]/repos/asf/hadoop.git -r 085099c66cf28be31604560c376fa282e69282b8; compiled by 'kshvachk' on 2018-04-18T01:33Z

STARTUP_MSG: java = 1.8.0_181

************************************************************/

18/09/28 11:21:42 INFO namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT]

18/09/28 11:21:42 INFO namenode.NameNode: createNameNode [-format]

Formatting using clusterid: CID-e25c92b2-bce2-414f-a11d-3d06a5283d6a

............(omitted)............

18/09/28 11:21:46 INFO common.Storage: Storage directory /usr/local/hadoop/tmp/dfs/name has been successfully formatted.

18/09/28 11:21:46 INFO namenode.FSImageFormatProtobuf: Saving image file /usr/local/hadoop/tmp/dfs/name/current/fsimage.ckpt_0000000000000000000 using no compression

18/09/28 11:21:47 INFO namenode.FSImageFormatProtobuf: Image file /usr/local/hadoop/tmp/dfs/name/current/fsimage.ckpt_0000000000000000000 of size 320 bytes saved in 0 seconds.

18/09/28 11:21:47 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0

18/09/28 11:21:47 INFO util.ExitUtil: Exiting with status 0

18/09/28 11:21:47 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at cc-fibric/127.0.1.1

************************************************************/

root@cc-fibric:~# jps

6881 Jps

6359 NameNode

6733 SecondaryNameNode

6527 DataNode
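The two checks in this step (the Exiting with status 0 line and the three daemons in jps) can be scripted. A minimal sketch; both function names are hypothetical, and the jps check compares the class-name column exactly so that NameNode is not satisfied by SecondaryNameNode.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for the two checks described above.

# Succeeds if a saved `hdfs namenode -format` log reports a clean exit.
format_succeeded() {
  grep -q 'Exiting with status 0' "$1"
}

# Reads `jps` output on stdin and prints each expected HDFS daemon that is missing.
# Returns 0 only when none are missing.
missing_hdfs_daemons() {
  local out d missing=0
  out=$(cat)
  for d in NameNode DataNode SecondaryNameNode; do
    # exact match on the second column (the main class name)
    if ! printf '%s\n' "$out" | awk -v d="$d" '$2 == d { found = 1 } END { exit !found }'; then
      echo "$d"
      missing=1
    fi
  done
  return "$missing"
}
```

For example, `./bin/hdfs namenode -format 2>&1 | tee format.log` followed by `format_succeeded format.log`, and after start-dfs.sh, `jps | missing_hdfs_daemons && echo "HDFS daemons running"`. The `2>&1` captures log messages as well as regular output.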

2.3 Notes on errors encountered

Error (1)

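After installing Hadoop and the JDK, running start-all.sh produced:

root@localhost's password:localhost:permission denied,please try again

I was sure the password I had set was correct, but no matter how I typed it, it was rejected.

Solution: when this happens, run

sudo passwd

which sets a new password for root (on Ubuntu the root account is locked by default and has no usable password). After setting it, format the NameNode again and run start-all.sh; it then works.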

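There is another fix online, although it did not solve the problem for me: if you are asked for the localhost password and even the correct password is rejected, the reason is that ssh logs in as the current user when no user name is given, so when the scripts run as root the login is attempted as root, and for security the SSH service does not allow root to log in with a password by default.

Open the configuration:

$ sudo vim /etc/ssh/sshd_config

Check whether PermitRootLogin is followed by yes. If not, replace whatever follows PermitRootLogin with yes and save. Then restart the SSH service (on Ubuntu the service is named ssh, not sshd):

$ sudo /etc/init.d/ssh restart

After that, the login works.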
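The PermitRootLogin edit can also be made with sed instead of vim. A minimal sketch assuming GNU sed (as on Ubuntu); `enable_root_ssh_login` is a hypothetical name, and the config path is a parameter so it can be tried on a copy before touching /etc/ssh/sshd_config.

```bash
#!/usr/bin/env bash
# Hypothetical helper: make sure sshd_config contains "PermitRootLogin yes".
# $1 = path to sshd_config (defaults to /etc/ssh/sshd_config; needs root there).
enable_root_ssh_login() {
  local cfg="${1:-/etc/ssh/sshd_config}"
  if grep -qE '^[#[:space:]]*PermitRootLogin' "$cfg"; then
    # rewrite the existing line, even if it is commented out
    sed -i -E 's/^[#[:space:]]*PermitRootLogin.*/PermitRootLogin yes/' "$cfg"
  else
    # no such line yet: append one
    echo 'PermitRootLogin yes' >> "$cfg"
  fi
}
```

Run it as root, then restart the service with `sudo /etc/init.d/ssh restart` as above.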

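Error (2)

After starting Hadoop, even though NameNode and DataNode had definitely started, jps only showed:

6881 Jps

The cause turned out to be a permissions issue: jps only lists the Java processes the current user has access to, and here the daemons were running as root.

Switch users, preferably to root:

cc@cc-fibric: su - hadoop

(The existing users can be seen under /home.)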

cc@cc-fibric:/usr/local/hadoop$ jps

6853 Jps

cc@cc-fibric:/usr/local/hadoop$ sudo -i

root@cc-fibric:~# jps

6881 Jps

6359 NameNode

6733 SecondaryNameNode

6527 DataNode

2.4 Checking the result

Once everything has started, open the web UI at http://localhost:50070 to view NameNode and DataNode information; you can also browse the files in HDFS online.
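Instead of opening a browser, the web UI can be probed from the shell. A minimal sketch assuming `curl` is installed; `namenode_ui_up` is a hypothetical name, and it only checks that the page answers with HTTP 200 after following redirects.

```bash
#!/usr/bin/env bash
# Hypothetical helper: check that the NameNode web UI answers with HTTP 200.
# $1 = URL (defaults to the address given above).
namenode_ui_up() {
  local url="${1:-http://localhost:50070}"
  local code
  # -s silent, -L follow redirects, -o discard the body,
  # -w print only the status code, --max-time avoid hanging
  code=$(curl -s -L -o /dev/null -w '%{http_code}' --max-time 5 "$url") || return 1
  [ "$code" = "200" ]
}
```

Usage: `namenode_ui_up && echo "NameNode web UI is up"`.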