ELK consists of three open-source tools: Elasticsearch, Logstash, and Kibana. Official website: https://www.elastic.co/products

Elasticsearch

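- Elasticsearch is an open-source distributed search engine. Its features include distributed operation, near-zero configuration, automatic node discovery, automatic index sharding, index replication, a RESTful API, support for multiple data sources, and automatic search load balancing.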

Logstash

- Logstash is a fully open-source tool that collects and parses your logs and stores them for later use (for example, searching).

Kibana

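- Kibana is a free, open-source tool that provides a friendly web interface for analyzing the logs handled by Logstash and Elasticsearch. It helps you aggregate, analyze, and search important log data.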


How it works:

Installing Logstash

- Install the JDK

[root@h001 jdk1.8.0_45]# java -version

java version "1.8.0_45"

Java(TM) SE Runtime Environment (build 1.8.0_45-b14)

Java HotSpot(TM) 64-Bit Server VM (build 25.45-b02, mixed mode)

- Install Logstash and add environment variables

[elk@h001 soft]$ tar -xzvf logstash-6.6.0.tar.gz -C ~/app/

[elk@h001 soft]$ cd ~/app

[elk@h001 app]$ ll

total 4

drwxrwxr-x 12 elk elk 4096 May 5 11:35 logstash-6.6.0

[elk@h001 soft]$ vi ~/.bash_profile

export LOGSTASH_HOME=/home/elk/app/logstash-6.6.0

export PATH=$LOGSTASH_HOME/bin:$PATH

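- After installation, reload the profile with source ~/.bash_profile so the new PATH takes effect, then run the following command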

[elk@h001 logstash-6.6.0]$ logstash -e 'input { stdin { } } output { stdout {} }'

Sending Logstash logs to /home/elk/app/logstash-6.6.0/logs which is now configured via log4j2.properties

[2019-05-05T11:39:10,547][WARN ][logstash.config.source.multilocal] Ignoring the 'pipelines.yml' file because modules or command line options are specified

[2019-05-05T11:39:10,564][INFO ][logstash.runner ] Starting Logstash {"logstash.version"=>"6.6.0"}

[2019-05-05T11:39:17,173][INFO ][logstash.pipeline ] Starting pipeline {:pipeline_id=>"main", "pipeline.workers"=>2, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>50}

[2019-05-05T11:39:17,275][INFO ][logstash.pipeline ] Pipeline started successfully {:pipeline_id=>"main", :thread=>"#"}

[2019-05-05T11:39:17,337][INFO ][logstash.agent ] Pipelines running {:count=>1, :running_pipelines=>[:main], :non_running_pipelines=>[]}

The stdin plugin is now waiting for input:

[2019-05-05T11:39:17,601][INFO ][logstash.agent ] Successfully started Logstash API endpoint {:port=>9600}

- Once Logstash has started, type a line into the console

hello!!!

{

"@timestamp" => 2019-05-06T01:54:24.393Z,

"message" => "hello!!!",

"@version" => "1",

"host" => "h001"

}

- Create a configuration file

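Specifying the configuration on the command line with -e, as above, is common, but the command becomes very long once more settings are needed. In that case, create a configuration file and tell Logstash to use it. Write a simple configuration file named test.conf in the config directory.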

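Logstash uses input and output sections to define where log events are read from and where they are sent. In this example, input declares the stdin plugin and output declares the stdout plugin, so whatever we type, Logstash echoes it back as a structured event. Use the -f option to load the configuration file, and run the following to test it: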

[elk@h001 config]$ vi test.conf

input { stdin { } }

output { stdout { }}

[elk@h001 config]$ logstash -f test.conf

Sending Logstash logs to /home/elk/app/logstash-6.6.0/logs which is now configured via log4j2.properties

[2019-05-06T10:28:01,611][WARN ][logstash.config.source.multilocal] Ignoring the 'pipelines.yml' file because modules or command line options are specified

[2019-05-06T10:28:01,625][INFO ][logstash.runner ] Starting Logstash {"logstash.version"=>"6.6.0"}

[2019-05-06T10:28:07,493][INFO ][logstash.pipeline ] Starting pipeline {:pipeline_id=>"main", "pipeline.workers"=>2, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>50}

[2019-05-06T10:28:07,627][INFO ][logstash.pipeline ] Pipeline started successfully {:pipeline_id=>"main", :thread=>"#"}

The stdin plugin is now waiting for input:

[2019-05-06T10:28:07,687][INFO ][logstash.agent ] Pipelines running {:count=>1, :running_pipelines=>[:main], :non_running_pipelines=>[]}

[2019-05-06T10:28:07,875][INFO ][logstash.agent ] Successfully started Logstash API endpoint {:port=>9600}

hello!!!++++

{

"host" => "h001",

"@version" => "1",

"@timestamp" => 2019-05-06T02:28:20.898Z,

"message" => "hello!!!++++"

}
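The configuration file can also be checked for syntax errors before the pipeline is started. The following is a minimal sketch, assuming Logstash 6.x is on the PATH and that its --config.test_and_exit option is available. The scratch directory and the conf_dir variable are illustrative and not part of the original setup.

```shell
#!/bin/sh
# Write the same minimal pipeline as test.conf into a scratch directory
# (conf_dir is a hypothetical name used only for this sketch).
conf_dir="$(mktemp -d)"
cat > "$conf_dir/test.conf" <<'EOF'
input { stdin { } }
output { stdout { } }
EOF

# Validate the syntax without starting the pipeline, if logstash is available.
if command -v logstash >/dev/null 2>&1; then
  logstash -f "$conf_dir/test.conf" --config.test_and_exit
else
  echo "logstash not found on PATH; skipping validation"
fi
```

Checking the file first avoids waiting for a full Logstash startup just to discover a typo in the configuration.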

Installing Elasticsearch

- Download Elasticsearch, extract it, and add environment variables

[elk@h001 soft]$ tar -xzvf elasticsearch-6.6.0.tar.gz -C ~/app

[elk@h001 soft]$ vi ~/.bash_profile

export ES_HOME=/home/elk/app/elasticsearch-6.6.0

export PATH=$ES_HOME/bin:$PATH

[elk@h001 soft]$ source ~/.bash_profile

[elk@h001 soft]$ echo $ES_HOME

/home/elk/app/elasticsearch-6.6.0

- Start Elasticsearch

[elk@h001 ~]$ nohup elasticsearch &

Then check the log. Once entries like the following appear, ES has started and can be reached from a browser

[elk@h001 ~]$ more nohup.out

[2019-05-06T11:33:53,769][INFO ][o.e.h.n.Netty4HttpServerTransport] [3bWHi_8] publish_address {172.26.121.32:9200}, bound_addresses {0.0.0.0:9200}

[2019-05-06T11:33:53,770][INFO ][o.e.n.Node ] [3bWHi_8] started
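Instead of reading nohup.out by hand, startup can be confirmed by polling the HTTP port shown in the log. This is a rough sketch, not part of the original setup: retry is a hypothetical helper, and the attempt count and delay are arbitrary assumptions.

```shell
#!/bin/sh
# retry ATTEMPTS DELAY_SECONDS COMMAND...
# Runs COMMAND until it succeeds or ATTEMPTS is exhausted.
# (Hypothetical helper for illustration; not an Elasticsearch tool.)
retry() {
  attempts="$1"
  delay="$2"
  shift 2
  i=1
  while [ "$i" -le "$attempts" ]; do
    "$@" && return 0
    i=$((i + 1))
    sleep "$delay"
  done
  return 1
}

# Usage on the Elasticsearch host (left commented out so the sketch has no side effects):
# retry 30 2 curl -sf http://localhost:9200 >/dev/null && echo "ES is up"
```

The curl options -s and -f keep the output quiet and make curl return a non-zero status on HTTP errors, which is what lets retry detect failure.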

- Problems encountered while starting ES

ERROR: [2] bootstrap checks failed

[1]: max file descriptors [65535] for elasticsearch process is too low, increase to at least [65536]

[2]: max virtual memory areas vm.max_map_count [65530] is too low, increase to at least [262144]

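Fixes:

For [1] (max file descriptors): sudo vi /etc/security/limits.conf

Add or change the following setting: * soft nofile 65536

If the hard limit is also below 65536, raise it as well with * hard nofile 65536. The new limit applies to new login sessions, so log out and back in (or reboot the system).

For [2] (vm.max_map_count): sudo vi /etc/sysctl.conf

Add or change the following setting: vm.max_map_count=655360

Then run sudo sysctl -p to apply it.

Also note that ES cannot be started as the root user.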
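Before restarting ES, the two limits can be compared against the thresholds quoted in the bootstrap check messages. The sketch below assumes a Linux host. check_min is a hypothetical helper name, and the thresholds are copied from the error output above.

```shell
#!/bin/sh
# check_min NAME CURRENT REQUIRED
# Prints whether CURRENT meets REQUIRED; returns 1 when it is too low.
# (Hypothetical helper for illustration; not part of Elasticsearch.)
check_min() {
  name="$1"
  current="$2"
  required="$3"
  if [ "$current" = "unlimited" ] || [ "$current" -ge "$required" ]; then
    echo "OK: $name = $current (needs >= $required)"
  else
    echo "TOO LOW: $name = $current (needs >= $required)"
    return 1
  fi
}

# Read the live values; fall back to 0 if the proc entry is unavailable.
check_min "max file descriptors" "$(ulimit -n)" 65536 || true
check_min "vm.max_map_count" "$(cat /proc/sys/vm/max_map_count 2>/dev/null || echo 0)" 262144 || true
```

Run it as the same user that starts ES, since the file descriptor limit is per user session.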

- Test sending data to ES

[elk@h001 config]$ vi logstash-es.conf

input { stdin { } }

output {

elasticsearch {hosts => "172.26.121.32:9200" }

stdout { }

}

[elk@h001 config]$ logstash -f logstash-es.conf

.....

[2019-05-06T14:01:07,003][INFO ][logstash.agent ] Successfully started Logstash API endpoint {:port=>9600}

hellohello

{

"@timestamp" => 2019-05-06T06:01:13.669Z,

"message" => "hellohello",

"@version" => "1",

"host" => "h001"

}

Check whether the data reached ES

[elk@h001 ~]$ curl 'http://localhost:9200/_search?pretty'

{

"took" : 52,

"timed_out" : false,

"_shards" : {

"total" : 5,

"successful" : 5,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : 1,

"max_score" : 1.0,

"hits" : [

{

"_index" : "logstash-2019.05.06",

"_type" : "doc",

"_id" : "g267i2oBSTfxCsN6YJri",

"_score" : 1.0,

"_source" : {

"@timestamp" : "2019-05-06T06:01:13.669Z",

"message" : "hellohello",

"@version" : "1",

"host" : "h001"

}

}

]

}

}
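The query above searches every index. To narrow it down, the request can target the Logstash indices and filter on a field with the q URI parameter. This is a sketch only: es_search_url is a hypothetical helper, and the logstash-* pattern is an assumption based on the index name logstash-2019.05.06 in the response.

```shell
#!/bin/sh
# es_search_url HOST INDEX QUERY
# Builds a URI-search URL for the given index pattern and query string.
# (Hypothetical helper for illustration; the query is not URL-encoded.)
es_search_url() {
  printf 'http://%s/%s/_search?q=%s&pretty\n' "$1" "$2" "$3"
}

url="$(es_search_url localhost:9200 'logstash-*' 'message:hellohello')"
echo "$url"

# On the Elasticsearch host, run the query (left commented out here):
# curl -s "$url"
```

Keep the URL quoted when passing it to curl, otherwise the shell interprets the * and & characters.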

- Install ES plugins

Reference: https://blog.csdn.net/u012332735/article/details/54946355

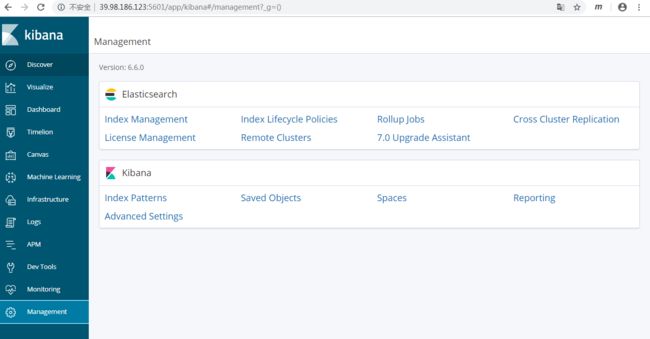

Installing Kibana

- Download Kibana, extract it, and configure environment variables

[elk@h001 soft]$ tar -xzvf kibana-6.6.0-linux-x86_64.tar.gz -C ~/app

[elk@h001 soft]$ vi ~/.bash_profile

export KIBANA_HOME=/home/elk/app/kibana-6.6.0-linux-x86_64

export PATH=$KIBANA_HOME/bin:$PATH


[elk@h001 soft]$ source ~/.bash_profile

- Start Kibana

[elk@h001 ~]$ kibana

log [08:56:44.834] [info][listening] Server running at http://0.0.0.0:5601

log [08:56:45.125] [info][status][plugin:[email protected]] Status changed from yellow to green - Ready

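Open the Kibana page in a browser (port 5601, as shown in the log above)

On first use, add an index pattern

Select the default @timestamp as the time field

Then select the corresponding index in Discover

The collected logs are then displayed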