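Outline

I. Environment Preparation

II. Topology

III. Prerequisites

IV. Installing the Software

V. Configuring heartbeat

VI. Testing the Web Cluster

VII. Known Problems

VIII. Shared Storage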

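I. Environment Preparation

1. Operating system

CentOS 5.5 x86_64, minimal installation

Note: Heartbeat v2.x is generally installed on the CentOS 5.x series, while CentOS 6.x generally uses Heartbeat v3.x.

2. Software

Heartbeat 2.1.4

Apache 2.2.3

3. Configure the EPEL yum repository (on both nodes)

node1, node2: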

[root@node src]# wget http://download.fedoraproject.org/pub/epel/5/x86_64/epel-release-5-4.noarch.rpm
[root@node src]# rpm -ivh epel-release-5-4.noarch.rpm
warning: epel-release-5-4.noarch.rpm: Header V3 DSA signature: NOKEY, key ID 217521f6
Preparing... ########################################### [100%]
1:epel-release ########################################### [100%]
[root@node src]# rpm --import /etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-5
[root@node src]# yum list

4. Disable the firewall and SELinux (on both nodes)

node1, node2:

[root@node ~]# service iptables stop
[root@node ~]# vim /etc/selinux/config
# This file controls the state of SELinux on the system.
# SELINUX= can take one of these three values:
# enforcing - SELinux security policy is enforced.
# permissive - SELinux prints warnings instead of enforcing.
# disabled - SELinux is fully disabled.
SELINUX=disabled
# SELINUXTYPE= type of policy in use. Possible values are:
# targeted - Only targeted network daemons are protected.
# strict - Full SELinux protection.
SELINUXTYPE=targeted
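The same change can be made without opening an editor. The sketch below is only an illustration: the function name `set_selinux_mode` is made up for this post, and it assumes GNU sed (which CentOS ships). Keep in mind that `SELINUX=disabled` only takes effect after a reboot, and that `service iptables stop` does not survive a reboot either.

```shell
#!/bin/sh
# Hypothetical helper: set SELINUX=<mode> in a config file non-interactively.
set_selinux_mode() {
    conf="$1"
    mode="$2"
    # Only the line that starts with "SELINUX=" is rewritten;
    # "SELINUXTYPE=" does not match this pattern and is left alone.
    sed -i "s/^SELINUX=.*/SELINUX=${mode}/" "$conf"
}

# Usage on each node (assumed to run as root):
#   set_selinux_mode /etc/selinux/config disabled
#   setenforce 0            # permissive for the running system until the next reboot
#   chkconfig iptables off  # keep the firewall from starting again at boot
```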

II. Topology

Note: There are two nodes, node1 and node2. The VIP is 192.168.18.200, the test client is a Windows host, and the NFS server is 192.168.18.208.

III. Prerequisites (on both nodes)

1. Nodes must resolve each other's hostnames

node1, node2:

[root@node ~]# vim /etc/hosts
# Do not remove the following line, or various programs
# that require network functionality will fail.
127.0.0.1 localhost.localdomain localhost
::1 localhost6.localdomain6 localhost6
192.168.18.201 node1.test.com node1
192.168.18.202 node2.test.com node2
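If you would rather script the hosts entries than edit the file by hand, something like the following could work. It is a sketch: `add_host_entry` is a name invented here, and it only checks whether the IP already has a line. Heartbeat also expects the names in ha.cf to match what `uname -n` prints on each node, so it is worth checking that as well.

```shell
#!/bin/sh
# Hypothetical helper: append "IP FQDN SHORTNAME" to a hosts file only once.
add_host_entry() {
    file="$1"; ip="$2"; fqdn="$3"; short="$4"
    # awk exits 0 when some line already has this IP in its first field.
    if ! awk -v ip="$ip" '$1 == ip { found = 1 } END { exit !found }' "$file"; then
        printf '%s %s %s\n' "$ip" "$fqdn" "$short" >> "$file"
    fi
}

# Usage on each node (assumed to run as root):
#   add_host_entry /etc/hosts 192.168.18.201 node1.test.com node1
#   add_host_entry /etc/hosts 192.168.18.202 node2.test.com node2
#   uname -n    # should print node1.test.com or node2.test.com
```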

2. Node clocks must be synchronized

node1, node2:

[root@node ~]# yum -y install ntp
[root@node ~]# ntpdate 210.72.145.44
[root@node ~]# date
Wed Aug  7 16:06:30 CST 2013

3. Set up SSH trust between the nodes

node1:

[root@node1 ~]# ssh-keygen -t rsa -f ~/.ssh/id_rsa -P ''
[root@node1 ~]# ssh-copy-id -i .ssh/id_rsa.pub root@node2.test.com
[root@node1 ~]# ssh node2
[root@node2 ~]# ifconfig
eth0 Link encap:Ethernet HWaddr 00:0C:29:EA:CE:79
inet addr:192.168.18.202 Bcast:192.168.18.255 Mask:255.255.255.0
inet6 addr: fe80::20c:29ff:feea:ce79/64 Scope:Link
UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1
RX packets:41736 errors:0 dropped:0 overruns:0 frame:0
TX packets:36201 errors:0 dropped:0 overruns:0 carrier:0
collisions:0 txqueuelen:1000
RX bytes:35756480 (34.1 MiB) TX bytes:5698609 (5.4 MiB)
lo Link encap:Local Loopback
inet addr:127.0.0.1 Mask:255.0.0.0
inet6 addr: ::1/128 Scope:Host
UP LOOPBACK RUNNING MTU:16436 Metric:1
RX packets:8 errors:0 dropped:0 overruns:0 frame:0
TX packets:8 errors:0 dropped:0 overruns:0 carrier:0
collisions:0 txqueuelen:0
RX bytes:560 (560.0 b) TX bytes:560 (560.0 b)

node2:

[root@node2 ~]# ssh-keygen -t rsa -f ~/.ssh/id_rsa -P ''
[root@node2 ~]# ssh-copy-id -i .ssh/id_rsa.pub root@node1.test.com
[root@node2 ~]# ssh node1
[root@node1 ~]# ifconfig
eth0 Link encap:Ethernet HWaddr 00:0C:29:23:76:4D
inet addr:192.168.18.201 Bcast:192.168.18.255 Mask:255.255.255.0
inet6 addr: fe80::20c:29ff:fe23:764d/64 Scope:Link
UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1
RX packets:35226 errors:0 dropped:0 overruns:0 frame:0
TX packets:30546 errors:0 dropped:0 overruns:0 carrier:0
collisions:0 txqueuelen:1000
RX bytes:32941174 (31.4 MiB) TX bytes:4670062 (4.4 MiB)
lo Link encap:Local Loopback
inet addr:127.0.0.1 Mask:255.0.0.0
inet6 addr: ::1/128 Scope:Host
UP LOOPBACK RUNNING MTU:16436 Metric:1
RX packets:8 errors:0 dropped:0 overruns:0 frame:0
TX packets:8 errors:0 dropped:0 overruns:0 carrier:0
collisions:0 txqueuelen:0
RX bytes:560 (560.0 b) TX bytes:560 (560.0 b)
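Logging in by hand proves the trust works, but a scripted check is handy before running the `scp` and `ssh node2 "..."` commands later in this post. Here is a small sketch; `check_ssh_trust` is a name made up for this post, and it assumes the root account is used as in the steps above.

```shell
#!/bin/sh
# Hypothetical helper: confirm that key-based login to a peer works.
check_ssh_trust() {
    peer="$1"
    # BatchMode=yes makes ssh fail instead of prompting for a password,
    # so a missing key shows up as an error rather than a hang.
    if ssh -o BatchMode=yes -o ConnectTimeout=5 "root@${peer}" true; then
        echo "ok: ${peer}"
    else
        echo "FAILED: ${peer}"
        return 1
    fi
}

# Usage:
#   on node1: check_ssh_trust node2
#   on node2: check_ssh_trust node1
```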

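IV. Installing the Software (on both nodes)

1. heartbeat package overview

heartbeat: core component *

heartbeat-devel: development package

heartbeat-gui: graphical management interface *

heartbeat-ldirectord: generates LVS rules automatically and health-checks the back-end real servers, providing high availability for LVS

heartbeat-pils: plugin and interface loading library *

heartbeat-stonith: STONITH ("Shoot The Other Node In The Head") fencing interface *

Note: packages marked with * must be installed.

2. Install heartbeat

node1, node2: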

[root@node ~]# yum -y install heartbeat*

3. Install httpd

node1:

[root@node1 ~]# yum install -y httpd
[root@node1 ~]# service httpd start
Starting httpd: [ OK ]
[root@node1 ~]# echo "node1.test.com" > /var/www/html/index.html

Test

[root@node1 ha.d]# service httpd stop
Stopping httpd: [ OK ]
[root@node1 ha.d]# chkconfig httpd off
[root@node1 ha.d]# chkconfig httpd --list
httpd 0:off 1:off 2:off 3:off 4:off 5:off 6:off

Note: once the test is done, stop the service and disable it at boot, because httpd will be managed by heartbeat.

node2:

[root@node2 ~]# yum install -y httpd
[root@node2 ~]# service httpd start
Starting httpd: [ OK ]
[root@node2 ~]# echo "node2.test.com" > /var/www/html/index.html

Test

[root@node2 ha.d]# service httpd stop
Stopping httpd: [ OK ]
[root@node2 ha.d]# chkconfig httpd off
[root@node2 ha.d]# chkconfig httpd --list
httpd 0:off 1:off 2:off 3:off 4:off 5:off 6:off

Note: once the test is done, stop the service and disable it at boot, because httpd will be managed by heartbeat.

V. Configuring heartbeat

1. The configuration files

[root@node1 ~]# cd /etc/ha.d/
[root@node1 ha.d]# ls
harc rc.d README.config resource.d shellfuncs shellfuncs.rpmsave

Note: a fresh heartbeat installation has no configuration files, but sample files are provided.

[root@node1 ha.d]# cd /usr/share/doc/heartbeat-2.1.4/
[root@node1 heartbeat-2.1.4]# ls
apphbd.cf COPYING faqntips.txt HardwareGuide.html hb_report.txt README rsync.txt
authkeys COPYING.LGPL GettingStarted.html HardwareGuide.txt heartbeat_api.html Requirements.html startstop
AUTHORS DirectoryMap.txt GettingStarted.txt haresources heartbeat_api.txt Requirements.txt
ChangeLog faqntips.html ha.cf hb_report.html logd.cf rsync.html
[root@node1 heartbeat-2.1.4]# cp authkeys ha.cf haresources /etc/ha.d/

Note: we need three of these files: authkeys, ha.cf, and haresources.

authkeys # the authentication key file shared by the nodes. Not just any server may join the cluster; every node that joins must authenticate.

ha.cf # heartbeat's main configuration file

haresources # the cluster resource configuration file (every heartbeat version supports configuring cluster resources through haresources)

2. Configure the authkeys file

[root@node1 ha.d]# dd if=/dev/random bs=512 count=1 | openssl md5 # generate a random key
0+1 records in
0+1 records out
128 bytes (128 B) copied, 0.000214 seconds, 598 kB/s
a4d20b0dd3d5e35e0f87ce4266d1dd64

[root@node1 ha.d]# vim authkeys
#auth 1
#1 crc
#2 sha1 HI!
#3 md5 Hello!
auth 1
1 md5 a4d20b0dd3d5e35e0f87ce4266d1dd64

[root@node1 ha.d]# chmod 600 authkeys # set the key file's permissions to 600
[root@node1 ha.d]# ll
total 56
-rw------- 1 root root 691 08-07 16:45 authkeys
-rw-r--r-- 1 root root 10539 08-07 16:42 ha.cf
-rwxr-xr-x 1 root root 745 2010-03-21 harc
-rw-r--r-- 1 root root 5905 08-07 16:42 haresources
drwxr-xr-x 2 root root 4096 08-07 16:21 rc.d
-rw-r--r-- 1 root root 692 2010-03-21 README.config
drwxr-xr-x 2 root root 4096 08-07 16:21 resource.d
-rw-r--r-- 1 root root 7862 2010-03-21 shellfuncs
-rw-r--r-- 1 root root 7862 2010-03-21 shellfuncs.rpmsave
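The key generation and the file edit can be folded into one step. The sketch below is a variation, not the exact steps above: it reads from /dev/urandom (which does not block and always returns the full 512 bytes, whereas the /dev/random read above came back with only 128), and it swaps md5 for sha1, the other hash listed in the sample authkeys file. The function name `write_authkeys` is invented for this post, and `sha1sum` is assumed to be available from coreutils.

```shell
#!/bin/sh
# Hypothetical helper: write a two-line authkeys file with a random sha1 key.
write_authkeys() {
    path="$1"
    # Hash 512 random bytes down to a 40-character hex string.
    key=$(dd if=/dev/urandom bs=512 count=1 2>/dev/null | sha1sum | awk '{print $1}')
    # umask 077 in a subshell so the file is never world-readable, even briefly.
    ( umask 077; printf 'auth 1\n1 sha1 %s\n' "$key" > "$path" )
    chmod 600 "$path"
}

# Usage (as root on node1; the file is copied to node2 in a later step):
#   write_authkeys /etc/ha.d/authkeys
```

Whichever hash you pick, both nodes need the identical file, and its mode must stay 600.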

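3. Configure the ha.cf file

[root@node1 ha.d]# vim ha.cf

Only two settings need to change (the rest can stay at their defaults):

(1). Choose how heartbeat messages are sent (multicast here)

mcast eth0 225.100.100.100 694 1 0

(2). List the nodes in the cluster

node node1.test.com
node node2.test.com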
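For reference, here is roughly what a stripped-down ha.cf could look like once the sample's comments are removed. Treat it as a sketch: the `mcast` and `node` lines come from this post, while the timing values (`keepalive`, `deadtime`, `warntime`, `initdead`) and `auto_failback on` are illustrative assumptions that you should tune for your own network. The function name `write_ha_cf` is made up. The five fields after `mcast` are the device, the multicast group, the UDP port, the TTL, and the loopback flag.

```shell
#!/bin/sh
# Hypothetical helper: write a minimal ha.cf to the given path.
write_ha_cf() {
    path="$1"
    cat > "$path" <<'EOF'
# Log through syslog.
logfacility local0
# Seconds between heartbeats (assumed value).
keepalive 2
# Seconds of silence before a node is declared dead (assumed value).
deadtime 30
# Seconds of silence before a late-heartbeat warning (assumed value).
warntime 10
# Extra grace period right after boot (assumed value).
initdead 120
# mcast <device> <group> <port> <ttl> <loop>
mcast eth0 225.100.100.100 694 1 0
# Give resources back to the primary when it returns (assumed setting).
auto_failback on
# Node names must match `uname -n` on each node.
node node1.test.com
node node2.test.com
EOF
}

# Usage (as root): write_ha_cf /etc/ha.d/ha.cf
```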

4. Configure the haresources file

[root@node1 ha.d]# vim haresources
node1.test.com IPaddr::192.168.18.200/24/eth0 httpd
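The haresources line reads left to right: the preferred (primary) node first, then the resources in start order. Arguments to a resource script are separated by `::`, so `IPaddr::192.168.18.200/24/eth0` calls the IPaddr script with the VIP, netmask, and interface, and `httpd` is the ordinary init script. On takeover the resources are started from left to right, and they are stopped in the reverse order. The tiny sketch below just assembles such a line; `haresources_line` is a name invented for illustration.

```shell
#!/bin/sh
# Hypothetical helper: build one haresources line from a node name and resources.
haresources_line() {
    primary="$1"; shift
    printf '%s' "$primary"
    # Resources are listed in the order heartbeat should start them.
    for res in "$@"; do
        printf ' %s' "$res"
    done
    printf '\n'
}

haresources_line node1.test.com IPaddr::192.168.18.200/24/eth0 httpd
```

The file has to be identical on both nodes, which is why it is copied over in the next step.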

5. Copy the three configuration files to node2

[root@node1 ha.d]# scp authkeys ha.cf haresources node2:/etc/ha.d/
authkeys 100% 691 0.7KB/s 00:00
ha.cf 100% 10KB 10.4KB/s 00:00
haresources 100% 5957 5.8KB/s 00:00
[root@node1 ha.d]# ssh node2
Last login: Wed Aug 7 16:13:44 2013 from node1.test.com
[root@node2 ~]# ll /etc/ha.d/
total 56
-rw------- 1 root root 691 08-07 17:12 authkeys
-rw-r--r-- 1 root root 10614 08-07 17:12 ha.cf
-rwxr-xr-x 1 root root 745 2010-03-21 harc
-rw-r--r-- 1 root root 5957 08-07 17:12 haresources
drwxr-xr-x 2 root root 4096 08-07 16:24 rc.d
-rw-r--r-- 1 root root 692 2010-03-21 README.config
drwxr-xr-x 2 root root 4096 08-07 16:24 resource.d
-rw-r--r-- 1 root root 7862 2010-03-21 shellfuncs
-rw-r--r-- 1 root root 7862 2010-03-21 shellfuncs.rpmsave

6. Start heartbeat on node1 and node2

[root@node1 ha.d]# ssh node2 "service heartbeat start"

Starting High-Availability services:

[ OK ]

logd is already stopped

[root@node1 ha.d]# service heartbeat start

Starting High-Availability services:

2013/08/07_17:19:22 INFO: Resource is stopped

[ OK ]

VI. Testing the Web Cluster

1. Check the running services

[root@node1 ha.d]# netstat -ntulp
Active Internet connections (only servers)
Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name
tcp 0 0 0.0.0.0:612 0.0.0.0:* LISTEN 2550/rpc.statd
tcp 0 0 0.0.0.0:111 0.0.0.0:* LISTEN 2511/portmap
tcp 0 0 127.0.0.1:631 0.0.0.0:* LISTEN 2845/cupsd
tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN 2889/sendmail: acce
tcp 0 0 :::80 :::* LISTEN 8693/httpd
tcp 0 0 :::22 :::* LISTEN 2832/sshd
udp 0 0 0.0.0.0:43528 0.0.0.0:* 8278/heartbeat: wri
udp 0 0 225.100.100.100:694 0.0.0.0:* 8278/heartbeat: wri
udp 0 0 0.0.0.0:606 0.0.0.0:* 2550/rpc.statd
udp 0 0 0.0.0.0:609 0.0.0.0:* 2550/rpc.statd
udp 0 0 0.0.0.0:5353 0.0.0.0:* 3002/avahi-daemon:
udp 0 0 0.0.0.0:111 0.0.0.0:* 2511/portmap
udp 0 0 0.0.0.0:40439 0.0.0.0:* 3002/avahi-daemon:
udp 0 0 0.0.0.0:631 0.0.0.0:* 2845/cupsd
udp 0 0 :::37782 :::* 3002/avahi-daemon:
udp 0 0 :::5353 :::* 3002/avahi-daemon:

2. Check the IP addresses

[root@node1 ha.d]# ifconfig

eth0 Link encap:Ethernet HWaddr 00:0C:29:23:76:4D

inet addr:192.168.18.201 Bcast:192.168.18.255 Mask:255.255.255.0

inet6 addr: fe80::20c:29ff:fe23:764d/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:59888 errors:0 dropped:0 overruns:0 frame:0

TX packets:38388 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:41693748 (39.7 MiB) TX bytes:5689144 (5.4 MiB)

eth0:0 Link encap:Ethernet HWaddr 00:0C:29:23:76:4D

inet addr:192.168.18.200 Bcast:192.168.18.255 Mask:255.255.255.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:16436 Metric:1

RX packets:34 errors:0 dropped:0 overruns:0 frame:0

TX packets:34 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:3887 (3.7 KiB) TX bytes:3887 (3.7 KiB)

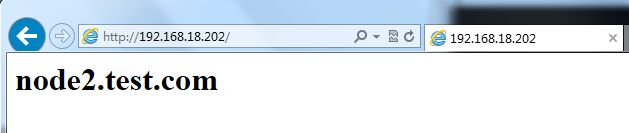

3. Test

4. Failure demonstration

1). Stop heartbeat on node1

[root@node1 ha.d]# service heartbeat stop # stop heartbeat on node1

Stopping High-Availability services:

[ OK ]

[root@node1 ha.d]#

2). Check the IP addresses on node2

[root@node2 ~]# ifconfig # the VIP has moved to node2 right away

eth0 Link encap:Ethernet HWaddr 00:0C:29:EA:CE:79

inet addr:192.168.18.202 Bcast:192.168.18.255 Mask:255.255.255.0

inet6 addr: fe80::20c:29ff:feea:ce79/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:69701 errors:0 dropped:0 overruns:0 frame:0

TX packets:44269 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:46270836 (44.1 MiB) TX bytes:6735723 (6.4 MiB)

eth0:0 Link encap:Ethernet HWaddr 00:0C:29:EA:CE:79

inet addr:192.168.18.200 Bcast:192.168.18.255 Mask:255.255.255.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:16436 Metric:1

RX packets:34 errors:0 dropped:0 overruns:0 frame:0

TX packets:34 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:3887 (3.7 KiB) TX bytes:3887 (3.7 KiB)

3). Test

As you can see, the cluster resources have moved to node2, which gives us a highly available Web service.
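Refreshing a browser works, but a small polling loop makes the switchover easier to watch from a Linux client. This is only a sketch: `probe_vip` is a made-up name, it assumes `curl` is installed on the client, and the one-second interval is arbitrary. Since each node serves its own hostname in index.html at this stage, the output also shows which node is answering.

```shell
#!/bin/sh
# Hypothetical helper: request a URL <count> times, one second apart,
# and print a timestamped OK/FAIL line for each attempt.
probe_vip() {
    url="$1"; count="$2"
    i=0
    while [ "$i" -lt "$count" ]; do
        # --max-time bounds each request so a dead node shows up as FAIL quickly.
        if body=$(curl -s --max-time 2 "$url"); then
            echo "$(date +%T) OK ${body}"
        else
            echo "$(date +%T) FAIL"
        fi
        i=$((i + 1))
        sleep 1
    done
}

# Usage (from a client on the 192.168.18.0/24 network):
#   probe_vip http://192.168.18.200/ 60
# Then stop heartbeat on node1 in another terminal and watch the output change.
```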

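VII. Known Problems

As the experiment above shows, this cluster design has one serious problem. When the primary server fails or goes down, the service (port 80 here) and the VIP switch over to the standby server at once, and that part works fine. But suppose the cluster hosts a forum: a user has just uploaded an attachment to the primary server when the primary goes down, and after a refresh the attachment is gone. That is a poor user experience. Is there a way around this? Yes. There are basically two approaches: synchronize files between the nodes, or have the nodes use shared storage. Let's look at each.

The first approach is file synchronization between the nodes, usually with an Rsync+Inotify combination. It has weak spots, though. Take the forum attachment again: if the primary goes down right after the upload and before the file has been synchronized, the user cannot see the attachment. And if the attachment is large and the primary goes down in the middle of the transfer, the user sees an incomplete file. Neither gives a good experience, and synchronization between nodes also consumes a lot of bandwidth. To summarize: the advantage is that it saves one or more servers and so reduces cost, and synchronization works reasonably well in small clusters. The disadvantage is that synchronizing files between nodes takes a lot of network bandwidth and lowers overall cluster performance, so it is not recommended for large or busy clusters.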
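To make the Rsync+Inotify idea concrete, a bare-bones version might look like the sketch below. It is not part of the setup in this post: the function names are invented, it assumes `rsync` plus the `inotifywait` tool from the inotify-tools package are installed, and it relies on the SSH trust configured earlier. It also shows the weakness just described, since nothing reaches the peer until a change event fires and the rsync run finishes.

```shell
#!/bin/sh
# Hypothetical helper: mirror a directory to a peer node once.
sync_once() {
    src="$1"; peer="$2"; dest="$3"
    # -a keeps permissions and timestamps, -z compresses,
    # --delete removes files on the peer that no longer exist locally.
    rsync -az --delete "${src}/" "root@${peer}:${dest}/"
}

# Hypothetical helper: re-run the mirror whenever something changes.
watch_and_sync() {
    src="$1"; peer="$2"; dest="$3"
    # -m keeps inotifywait running, -r watches subdirectories,
    # -q suppresses the startup messages; one line is printed per event.
    inotifywait -m -r -q -e close_write,create,delete,move "$src" |
    while read -r _event; do
        sync_once "$src" "$peer" "$dest"
    done
}

# Usage (assumed paths, run on the active node):
#   watch_and_sync /var/www/html node2.test.com /var/www/html
```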

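The second approach is shared storage, which itself comes in two forms: file-level shared storage (for example, an NFS file server) and block-level shared storage (for example, iSCSI). This approach solves the problem described above. The cluster has two nodes, and the primary and standby share one file server. While the primary is serving, the file server is mounted on the primary; when the primary goes down, the standby takes over and mounts the same file server. An attachment is no longer lost when the primary fails, because both nodes use the same storage: once the attachment has been uploaded to the file server it stays visible, and there is nothing to synchronize. For high-availability clusters I recommend shared storage. Below we use NFS to demonstrate mounting shared storage on the nodes.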

VIII. Shared Storage

1. Configure the NFS server

[root@nfs ~]# mkdir -pv /web
mkdir: created directory `/web'
[root@nfs ~]# vim /etc/exports
/web/ 192.168.18.0/24(ro,async)
[root@nfs /]# echo 'Cluster NFS Server' > /web/index.html
[root@nfs /]# /etc/init.d/portmap start
Starting portmap: [ OK ]
[root@nfs /]# /etc/init.d/nfs start
Starting NFS services: [ OK ]
Starting NFS quotas: [ OK ]
Starting NFS daemon: [ OK ]
Starting NFS mountd: [ OK ]
[root@nfs /]# showmount -e 192.168.18.208
Export list for 192.168.18.208:
/web 192.168.18.0/24
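One thing to keep in mind: the export above is read-only (`ro`), which is enough for serving a static test page. In the forum scenario from the previous section the Web nodes would have to write uploads to the share, so the export would need `rw` (and `sync` is the safer choice for data you care about). The sketch below just prints such a line; `exports_line` is a made-up helper and the option set is an assumption, not what this post's NFS server uses.

```shell
#!/bin/sh
# Hypothetical helper: format one /etc/exports entry.
exports_line() {
    dir="$1"; net="$2"; opts="$3"
    printf '%s %s(%s)\n' "$dir" "$net" "$opts"
}

# A writable variant of the export used in this post (assumed options).
exports_line /web 192.168.18.0/24 rw,sync

# After editing /etc/exports on the NFS server, `exportfs -ra`
# re-reads the file without restarting the NFS service.
```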

2. Test-mount on the nodes

node1:

[root@node1 ~]# mount -t nfs 192.168.18.208:/web /mnt
[root@node1 ~]# cd /mnt/
[root@node1 mnt]# ll
total 4
-rw-r--r-- 1 root root 28 08-07 17:41 index.html
[root@node1 mnt]# mount
/dev/sda2 on / type ext3 (rw)
proc on /proc type proc (rw)
sysfs on /sys type sysfs (rw)
devpts on /dev/pts type devpts (rw,gid=5,mode=620)
/dev/sda3 on /data type ext3 (rw)
/dev/sda1 on /boot type ext3 (rw)
tmpfs on /dev/shm type tmpfs (rw)
none on /proc/sys/fs/binfmt_misc type binfmt_misc (rw)
sunrpc on /var/lib/nfs/rpc_pipefs type rpc_pipefs (rw)
192.168.18.208:/web on /mnt type nfs (rw,addr=192.168.18.208)
[root@node1 ~]# umount /mnt
[root@node1 ~]# mount
/dev/sda2 on / type ext3 (rw)
proc on /proc type proc (rw)
sysfs on /sys type sysfs (rw)
devpts on /dev/pts type devpts (rw,gid=5,mode=620)
/dev/sda3 on /data type ext3 (rw)
/dev/sda1 on /boot type ext3 (rw)
tmpfs on /dev/shm type tmpfs (rw)
none on /proc/sys/fs/binfmt_misc type binfmt_misc (rw)
sunrpc on /var/lib/nfs/rpc_pipefs type rpc_pipefs (rw)

node2:

[root@node2 ~]# mount -t nfs 192.168.18.208:/web /mnt
[root@node2 ~]# cd /mnt
[root@node2 mnt]# ll
total 4
-rw-r--r-- 1 root root 28 08-07 17:41 index.html
[root@node2 mnt]# mount
/dev/sda2 on / type ext3 (rw)
proc on /proc type proc (rw)
sysfs on /sys type sysfs (rw)
devpts on /dev/pts type devpts (rw,gid=5,mode=620)
/dev/sda3 on /data type ext3 (rw)
/dev/sda1 on /boot type ext3 (rw)
tmpfs on /dev/shm type tmpfs (rw)
none on /proc/sys/fs/binfmt_misc type binfmt_misc (rw)
sunrpc on /var/lib/nfs/rpc_pipefs type rpc_pipefs (rw)
192.168.18.208:/web on /mnt type nfs (rw,addr=192.168.18.208)
[root@node2 ~]# umount /mnt
[root@node2 ~]# mount
/dev/sda2 on / type ext3 (rw)
proc on /proc type proc (rw)
sysfs on /sys type sysfs (rw)
devpts on /dev/pts type devpts (rw,gid=5,mode=620)
/dev/sda3 on /data type ext3 (rw)
/dev/sda1 on /boot type ext3 (rw)
tmpfs on /dev/shm type tmpfs (rw)
none on /proc/sys/fs/binfmt_misc type binfmt_misc (rw)
sunrpc on /var/lib/nfs/rpc_pipefs type rpc_pipefs (rw)

3. Edit the haresources file

[root@node1 ~]# vim /etc/ha.d/haresources
node1.test.com IPaddr::192.168.18.200/24/eth0 Filesystem::192.168.18.208:/web::/var/www/html::nfs httpd

4. Sync the haresources file to node2

[root@node1 ~]# cd /etc/ha.d/
[root@node1 ha.d]# scp haresources node2:/etc/ha.d/
haresources 100% 5964 5.8KB/s 00:00

5. Restart heartbeat

[root@node1 ha.d]# ssh node2 "service heartbeat restart"

Stopping High-Availability services:

[ OK ]

Waiting to allow resource takeover to complete:

[ OK ]

Starting High-Availability services:

2013/08/07_17:59:26 INFO: Running OK

2013/08/07_17:59:26 CRITICAL: Resource IPaddr::192.168.18.200/24/eth0 is active, and should not be!

2013/08/07_17:59:26 CRITICAL: Non-idle resources can affect data integrity!

2013/08/07_17:59:26 info: If you don't know what this means, then get help!

2013/08/07_17:59:26 info: Read the docs and/or source to /usr/share/heartbeat/ResourceManager for more details.

CRITICAL: Resource IPaddr::192.168.18.200/24/eth0 is active, and should not be!

CRITICAL: Non-idle resources can affect data integrity!

info: If you don't know what this means, then get help!

info: Read the docs and/or the source to /usr/share/heartbeat/ResourceManager for more details.

2013/08/07_17:59:26 CRITICAL: Non-idle resources will affect resource takeback!

2013/08/07_17:59:26 CRITICAL: Non-idle resources may affect data integrity!

[ OK ]

[root@node1 ha.d]# service heartbeat restart

Stopping High-Availability services:

[ OK ]

Waiting to allow resource takeover to complete:

[ OK ]

Starting High-Availability services:

2013/08/07_17:59:56 INFO: Resource is stopped

[ OK ]

[root@node1 ha.d]#

6. Check the listening ports

[root@node1 ha.d]# netstat -ntulp
Active Internet connections (only servers)
Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name
tcp 0 0 0.0.0.0:56610 0.0.0.0:* LISTEN -
tcp 0 0 0.0.0.0:612 0.0.0.0:* LISTEN 2550/rpc.statd
tcp 0 0 0.0.0.0:111 0.0.0.0:* LISTEN 2511/portmap
tcp 0 0 127.0.0.1:631 0.0.0.0:* LISTEN 2845/cupsd
tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN 2889/sendmail: acce
tcp 0 0 :::80 :::* LISTEN 11205/httpd
tcp 0 0 :::22 :::* LISTEN 2832/sshd
udp 0 0 0.0.0.0:43027 0.0.0.0:* -
udp 0 0 225.100.100.100:694 0.0.0.0:* 10659/heartbeat: wr
udp 0 0 0.0.0.0:606 0.0.0.0:* 2550/rpc.statd
udp 0 0 0.0.0.0:609 0.0.0.0:* 2550/rpc.statd
udp 0 0 0.0.0.0:5353 0.0.0.0:* 3002/avahi-daemon:
udp 0 0 0.0.0.0:57068 0.0.0.0:* 10659/heartbeat: wr
udp 0 0 0.0.0.0:111 0.0.0.0:* 2511/portmap
udp 0 0 0.0.0.0:40439 0.0.0.0:* 3002/avahi-daemon:
udp 0 0 0.0.0.0:631 0.0.0.0:* 2845/cupsd
udp 0 0 :::37782 :::* 3002/avahi-daemon:
udp 0 0 :::5353 :::* 3002/avahi-daemon:
[root@node1 ha.d]#

7. Test the Web service

[root@node1 ha.d]# mount
/dev/sda2 on / type ext3 (rw)
proc on /proc type proc (rw)
sysfs on /sys type sysfs (rw)
devpts on /dev/pts type devpts (rw,gid=5,mode=620)
/dev/sda3 on /data type ext3 (rw)
/dev/sda1 on /boot type ext3 (rw)
tmpfs on /dev/shm type tmpfs (rw)
none on /proc/sys/fs/binfmt_misc type binfmt_misc (rw)
sunrpc on /var/lib/nfs/rpc_pipefs type rpc_pipefs (rw)
192.168.18.208:/web on /var/www/html type nfs (rw,addr=192.168.18.208)

As you can see, the share is mounted and the test succeeds!

8. Simulate a failure and test

(1). Stop heartbeat on node1

[root@node1 ~]# service heartbeat stop

Stopping High-Availability services:

[ OK ]

logd is already stopped

(2). Check the IP addresses and services on node2

[root@node2 ~]# ifconfig

eth0 Link encap:Ethernet HWaddr 00:0C:29:EA:CE:79

inet addr:192.168.18.202 Bcast:192.168.18.255 Mask:255.255.255.0

inet6 addr: fe80::20c:29ff:feea:ce79/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:77896 errors:0 dropped:0 overruns:0 frame:0

TX packets:46970 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:47233291 (45.0 MiB) TX bytes:7348076 (7.0 MiB)

eth0:0 Link encap:Ethernet HWaddr 00:0C:29:EA:CE:79

inet addr:192.168.18.200 Bcast:192.168.18.255 Mask:255.255.255.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:16436 Metric:1

RX packets:76 errors:0 dropped:0 overruns:0 frame:0

TX packets:76 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:8171 (7.9 KiB) TX bytes:8171 (7.9 KiB)

[root@node2 ~]# netstat -ntulp

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 0.0.0.0:34050 0.0.0.0:* LISTEN -

tcp 0 0 0.0.0.0:111 0.0.0.0:* LISTEN 2537/portmap

tcp 0 0 127.0.0.1:631 0.0.0.0:* LISTEN 2871/cupsd

tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN 2915/sendmail: acce

tcp 0 0 0.0.0.0:638 0.0.0.0:* LISTEN 2576/rpc.statd

tcp 0 0 :::80 :::* LISTEN 9291/httpd

tcp 0 0 :::22 :::* LISTEN 2858/sshd

udp 0 0 0.0.0.0:57488 0.0.0.0:* 8902/heartbeat: wri

udp 0 0 0.0.0.0:48555 0.0.0.0:* 3028/avahi-daemon:

udp 0 0 0.0.0.0:56756 0.0.0.0:* -

udp 0 0 225.100.100.100:694 0.0.0.0:* 8902/heartbeat: wri

udp 0 0 0.0.0.0:5353 0.0.0.0:* 3028/avahi-daemon:

udp 0 0 0.0.0.0:111 0.0.0.0:* 2537/portmap

udp 0 0 0.0.0.0:631 0.0.0.0:* 2871/cupsd

udp 0 0 0.0.0.0:632 0.0.0.0:* 2576/rpc.statd

udp 0 0 0.0.0.0:635 0.0.0.0:* 2576/rpc.statd

udp 0 0 :::59997 :::* 3028/avahi-daemon:

udp 0 0 :::5353 :::* 3028/avahi-daemon:

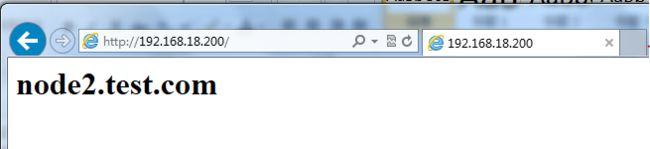

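(3). Test the service

Note: As you can see, the site is still reachable, so we now have a highly available Web cluster built from heartbeat + httpd + NFS. I hope this post has given you a basic picture of how a high-availability cluster works. ^_^

(4). Start heartbeat on node1 again

[root@node1 ~]# service heartbeat start

Starting High-Availability services:

2013/08/07_19:24:41 INFO: Resource is stopped

[ OK ]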

(5). Check the log

[root@node1 ~]# tail -f /var/log/messages
Aug 7 19:24:45 node1 IPaddr[11796]: INFO: Success
Aug 7 19:24:45 node1 Filesystem[11932]: INFO: Resource is stopped
Aug 7 19:24:45 node1 ResourceManager[11700]: info: Running /etc/ha.d/resource.d/Filesystem 192.168.18.208:/web /var/www/html nfs start
Aug 7 19:24:45 node1 Filesystem[12013]: INFO: Running start for 192.168.18.208:/web on /var/www/html
Aug 7 19:24:45 node1 Filesystem[12002]: INFO: Success
Aug 7 19:24:45 node1 ResourceManager[11700]: info: Running /etc/init.d/httpd start
Aug 7 19:24:45 node1 heartbeat: [11687]: info: local HA resource acquisition completed (standby).
Aug 7 19:24:45 node1 heartbeat: [11661]: info: Standby resource acquisition done [foreign].
Aug 7 19:24:45 node1 heartbeat: [11661]: info: Initial resource acquisition complete (auto_failback)
Aug 7 19:24:45 node1 heartbeat: [11661]: info: remote resource transition completed.

(6). Check the IP addresses

[root@node1 ~]# ifconfig

eth0 Link encap:Ethernet HWaddr 00:0C:29:23:76:4D

inet addr:192.168.18.201 Bcast:192.168.18.255 Mask:255.255.255.0

inet6 addr: fe80::20c:29ff:fe23:764d/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:86048 errors:0 dropped:0 overruns:0 frame:0

TX packets:42794 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:46010055 (43.8 MiB) TX bytes:6400751 (6.1 MiB)

eth0:0 Link encap:Ethernet HWaddr 00:0C:29:23:76:4D

inet addr:192.168.18.200 Bcast:192.168.18.255 Mask:255.255.255.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:16436 Metric:1

RX packets:100 errors:0 dropped:0 overruns:0 frame:0

TX packets:100 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:10619 (10.3 KiB) TX bytes:10619 (10.3 KiB)

(7). Check the services

[root@node1 ~]# netstat -ntulp
Active Internet connections (only servers)
Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name
tcp 0 0 0.0.0.0:612 0.0.0.0:* LISTEN 2550/rpc.statd
tcp 0 0 0.0.0.0:111 0.0.0.0:* LISTEN 2511/portmap
tcp 0 0 0.0.0.0:51217 0.0.0.0:* LISTEN -
tcp 0 0 127.0.0.1:631 0.0.0.0:* LISTEN 2845/cupsd
tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN 2889/sendmail: acce
tcp 0 0 :::80 :::* LISTEN 12100/httpd
tcp 0 0 :::22 :::* LISTEN 2832/sshd
udp 0 0 225.100.100.100:694 0.0.0.0:* 11665/heartbeat: wr
udp 0 0 0.0.0.0:36944 0.0.0.0:* 11665/heartbeat: wr
udp 0 0 0.0.0.0:606 0.0.0.0:* 2550/rpc.statd
udp 0 0 0.0.0.0:609 0.0.0.0:* 2550/rpc.statd
udp 0 0 0.0.0.0:5353 0.0.0.0:* 3002/avahi-daemon:
udp 0 0 0.0.0.0:111 0.0.0.0:* 2511/portmap
udp 0 0 0.0.0.0:40439 0.0.0.0:* 3002/avahi-daemon:
udp 0 0 0.0.0.0:631 0.0.0.0:* 2845/cupsd
udp 0 0 0.0.0.0:45693 0.0.0.0:* -
udp 0 0 :::37782 :::* 3002/avahi-daemon:
udp 0 0 :::5353 :::* 3002/avahi-daemon:

(8). Test again

As you can see, the test still succeeds, and the Web service is highly available. That is the end of this post. Comments and suggestions are welcome, and let's keep learning from each other.