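Recently I had a feature to build: from the main camera's point of view, render which parts of another camera's field of view are visible and which are hidden, roughly like the figure below: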

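Put simply, wherever the main camera's view overlaps the observer camera's view, mark what the observer camera can and cannot see. The principle is the same as a ShadowMap: depth textures, world-space coordinate transforms and the like. Every feature of this kind turns out to be painful; the logic is simple, but doing it in Unity3D is a hassle...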

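How it works: render a depth texture from the observer camera and store it in a RenderTexture. Then, in the main camera, reconstruct the world position of every fragment and transform it into the observer camera's viewport. If it falls inside the observer's view, convert the viewport coordinates to UV coordinates, read the corresponding depth from the RenderTexture, convert that depth back to a real depth value, and compare: if the fragment's depth is less than or equal to the depth-map value, the observer can see it; otherwise it is hidden.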
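The per-fragment decision is the same comparison a shadow map makes. Below is a minimal engine-free C# sketch of it, assuming the fragment has already been projected into the observer camera (viewport coordinates plus distance along the observer's forward axis) and that the stored observer depth has already been decoded to eye depth. The names `Visibility`, `ObserverTest` and `Classify` are illustrative only; the bias mirrors the `_FieldBias` tolerance used by the shader later on.

```csharp
using System;

// Outcome of testing one main-camera fragment against the observer camera (illustrative names).
public enum Visibility { OutsideObserverView, Visible, Hidden }

public static class ObserverTest
{
    // vx, vy: fragment position in the observer's viewport, inside [0, 1] when on screen.
    // viewZ: fragment distance along the observer's forward axis (eye depth).
    // storedEyeDepth: nearest eye depth the observer recorded at (vx, vy).
    // bias: tolerance that absorbs depth-map precision errors, like shadow-map bias.
    public static Visibility Classify(float vx, float vy, float viewZ,
                                      float storedEyeDepth, float near, float far, float bias)
    {
        // Outside the observer frustum: the pixel is left as it is.
        if (vx < 0f || vx > 1f || vy < 0f || vy > 1f) return Visibility.OutsideObserverView;
        if (viewZ < near || viewZ > far) return Visibility.OutsideObserverView;

        // Not farther than the nearest surface the observer saw (within bias): visible.
        return viewZ <= storedEyeDepth + bias ? Visibility.Visible : Visibility.Hidden;
    }
}
```

Folding the bias into a single comparison is equivalent to the shader's two-step "depth check, then bias check", since the bias branch is only reached when the fragment is behind the stored depth.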

First, getting the depth texture. Whatever the rendering path, just add the depth texture flag to the camera:

```csharp
_cam = GetComponent<Camera>();
_cam.depthTextureMode |= DepthTextureMode.Depth;
```

The depth texture needs reasonably high precision, and a single channel is enough:

```csharp
renderTexture = RenderTexture.GetTemporary(Screen.width, Screen.height, 0, RenderTextureFormat.RFloat);
renderTexture.hideFlags = HideFlags.DontSave;
```

Then render the depth texture out of the camera's image in a post-process:

```csharp
private void OnRenderImage(RenderTexture source, RenderTexture destination)
{
    if (_material && _cam)
    {
        Shader.SetGlobalFloat("_cameraNear", _cam.nearClipPlane);
        Shader.SetGlobalFloat("_cameraFar", _cam.farClipPlane);
        Graphics.Blit(source, renderTexture, _material);
    }
    Graphics.Blit(source, destination);
}
```

The material uses a simple shader that reads the depth:

```hlsl
sampler2D _CameraDepthTexture;
uniform float _cameraFar;
uniform float _cameraNear;

float DistanceToLinearDepth(float d, float near, float far)
{
    float z = (d - near) / (far - near);
    return z;
}

// float4 rather than fixed4, so the depth keeps full precision on mobile GPUs
float4 frag(v2f i) : SV_Target
{
    float depth = SAMPLE_DEPTH_TEXTURE(_CameraDepthTexture, i.uv);
    depth = LinearEyeDepth(depth);
    depth = DistanceToLinearDepth(depth, _cameraNear, _cameraFar);
    return float4(depth, depth, depth, 1);
}
```

This looks a bit odd: why return neither the raw depth-texture value nor the normalized depth from Linear01Depth(depth), but a depth computed by hand?

Because the official documentation is missing... The API does have a method that hands RenderBuffers directly to a camera:

```csharp
_cam.SetTargetBuffers(renderTexture.colorBuffer, renderTexture.depthBuffer);
```

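But there is no documentation and no example, so who knows how to use what it renders? And how exactly is renderTexture.depthBuffer supposed to be passed to a shader as a Texture? I tried converting between them via IntPtr before, and every attempt failed...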

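So the only option left is the safest one: render a depth texture with a shader. The value returned by SAMPLE_DEPTH_TEXTURE(_CameraDepthTexture, i.uv) should be the NDC depth (the raw depth-buffer value), presumably in [0, 1] and non-linear. To get the real depth while a different camera is rendering, you have to implement LinearEyeDepth(float z) yourself, because the built-in version reads _ZBufferParams, which holds the parameters of whichever camera is currently rendering: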

```hlsl
inline float LinearEyeDepth(float z)
{
    return 1.0 / (_ZBufferParams.z * z + _ZBufferParams.w);
}
```

_ZBufferParams holds different values on different platforms, so implementing this yourself is a hassle, and in my tests the result was wrong anyway...

```
double x, y;
// OpenGL would be this:
x = (1.0 - m_FarClip / m_NearClip) / 2.0;
y = (1.0 + m_FarClip / m_NearClip) / 2.0;
// D3D is this:
x = 1.0 - m_FarClip / m_NearClip;
y = m_FarClip / m_NearClip;
_ZBufferParams = float4(x, y, x / m_FarClip, y / m_FarClip);
```

PS: depth computed with the observer camera's nearClipPlane and farClipPlane is also wrong, and I don't know why...
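To check these formulas numerically, the C# sketch below builds `_ZBufferParams` with the layout described in Unity's built-in shader variable documentation (x = 1 - far/near, y = far/near, z = x/far, w = y/far), plus the variant Unity documents for a reversed depth buffer (x = -1 + far/near, y = 1, z = x/far, w = 1/far), which Unity has used on Direct3D 11/12, Metal and some other platforms since 5.5. It then recovers eye depth with the same expression as `LinearEyeDepth`. `RawDepth` is a test helper that assumes a standard perspective mapping to [0, 1]; it is not an engine function. One plausible reason a hand-written version disagrees with the engine is applying the non-reversed constants to a reversed-Z depth texture, as the last assertion illustrates; that is a hypothesis, not something the tests above established.

```csharp
using System;

public static class ZBufferParamsSketch
{
    // (x, y, z, w) laid out as documented for _ZBufferParams; with reversed Z, y / far == 1 / far.
    public static (double X, double Y, double Z, double W) Make(double near, double far, bool reversedZ)
    {
        double x = reversedZ ? -1.0 + far / near : 1.0 - far / near;
        double y = reversedZ ? 1.0 : far / near;
        return (x, y, x / far, y / far);
    }

    // Same expression as LinearEyeDepth in UnityCG.cginc.
    public static double LinearEyeDepth(double raw, (double X, double Y, double Z, double W) p)
    {
        return 1.0 / (p.Z * raw + p.W);
    }

    // Test helper (assumption): the [0, 1] value a perspective projection stores for an eye depth.
    // Conventional depth is 0 at near and 1 at far; reversed depth is 1 at near and 0 at far.
    public static double RawDepth(double eyeDepth, double near, double far, bool reversedZ)
    {
        double d = far * (eyeDepth - near) / (eyeDepth * (far - near));
        return reversedZ ? 1.0 - d : d;
    }
}
```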

Hence my own way of computing a normalized depth:

```hlsl
float DistanceToLinearDepth(float d, float near, float far)
{
    float z = (d - near) / (far - near);
    return z;
}
```

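Simple and easy to understand: it linearly maps an eye depth in [near, far] to [0, 1]. This gives a depth texture of my own, which needs only the observer camera's far/near clip plane values. As you can see, because official documentation is missing, even a simple feature often takes a lot of time; honestly, I searched through many examples, and shader code copied straight from them simply didn't work.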
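Because the encoding is a plain linear remap, it round-trips exactly (up to float rounding) as long as the writer and the reader use the same observer near/far values. A small C# sketch of the two functions as they appear in the shaders (`DistanceToLinearDepth` here and `LinearDepthToDistance` in the main-camera shader); the class name is illustrative. Note the difference from Unity's Linear01Depth, which returns eye depth divided by far, so its 0 sits at the camera position rather than at the near plane.

```csharp
using System;

public static class ObserverDepthCodec
{
    // Eye depth in [near, far] -> [0, 1], as the observer's depth shader writes it.
    public static float DistanceToLinearDepth(float d, float near, float far) => (d - near) / (far - near);

    // [0, 1] -> eye depth, as the main-camera shader decodes it.
    public static float LinearDepthToDistance(float z, float near, float far) => z * (far - near) + near;
}
```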

Next comes the hard part, the main camera's rendering, which has to do the following:

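1. Use the main camera's depth texture to reconstruct the current fragment's world position.

2. Transform that world position into the observer camera's viewport space and check whether it lies inside the observer's viewport.

3. Parts outside the observer camera's viewport are displayed normally.

4. For parts inside the observer camera's viewport, convert the viewport coordinates to the observer camera's UV coordinates, sample the depth texture rendered by the observer camera, convert the sample to a real depth value and compare: if the fragment's depth in the observer camera is less than or equal to the depth-map value, it is visible; otherwise it is hidden.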

The main camera also needs depth texture rendering enabled:

```csharp
_cam = GetComponent<Camera>();
_cam.depthTextureMode |= DepthTextureMode.Depth;
```

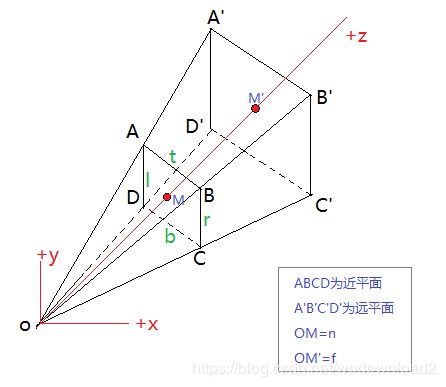

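So how do we get a fragment's world position? The most reliable reference is Unity's official fog shader. Because this is a post-process, we cannot compute the world position in the vertex stage and let interpolation deliver it to the fragment stage; instead, we reconstruct it from the four rays of the view frustum combined with the depth texture. The following figure shows the view frustum: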

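The vertex stage of a post-process can be treated as rendering the near plane ABCD, which has only 4 vertices. If we store the four rays OA, OB, OC and OD in the vertex program, the fragment stage automatically receives the interpolated ray from the camera to the point on the near plane that corresponds to the fragment. This works because all four rays end on the same plane perpendicular to the view direction: their depth component z is identical, so interpolation leaves it unchanged (the perspective matrix keeps view-space depth in w, so z is effectively not perspective-transformed), while x and y vary linearly across that plane. Plain GPU interpolation of the four ray directions across the screen-aligned quad therefore yields exactly the ray through each pixel, i.e. it performs the inverse perspective transform for us.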

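Suppose ABCD is not the near plane but the plane at distance 1 from O. Then the ray OM from O to the center M of ABCD has length 1, and the position represented by the fragment at M is easy to compute: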

```hlsl
// interpolatedRay: the OM vector in world space (length 1 along the camera's forward axis)
float linearDepth = LinearEyeDepth(SAMPLE_DEPTH_TEXTURE(_CameraDepthTexture, float2(0.5, 0.5))); // (0.5, 0.5) is the screen center
float3 wpos = _WorldSpaceCameraPos + linearDepth * interpolatedRay.xyz;
```

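That is: camera position + OM ray direction × depth. What about the other fragments? Let the GPU interpolate OA, OB, OC and OD; OM is itself just their interpolated value at the center. There are several ways to compute these four vectors. I tried computing them in the vertex stage from the vertex index, but the result seemed wrong, so I computed them in C# instead (the older fog shader did it this way):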

```csharp
Matrix4x4 frustumCorners = Matrix4x4.identity;
// First compute the vectors to the four corners of the near clip plane
float fov = _cam.fieldOfView;
float near = _cam.nearClipPlane;
float aspect = _cam.aspect;
var cameraTransform = _cam.transform;

float halfHeight = near * Mathf.Tan(fov * 0.5f * Mathf.Deg2Rad);
Vector3 toRight = cameraTransform.right * halfHeight * aspect;
Vector3 toTop = cameraTransform.up * halfHeight;

Vector3 topLeft = cameraTransform.forward * near + toTop - toRight;
float scale = topLeft.magnitude / near;
topLeft.Normalize();
topLeft *= scale;

Vector3 topRight = cameraTransform.forward * near + toRight + toTop;
topRight.Normalize();
topRight *= scale;

Vector3 bottomLeft = cameraTransform.forward * near - toTop - toRight;
bottomLeft.Normalize();
bottomLeft *= scale;

Vector3 bottomRight = cameraTransform.forward * near + toRight - toTop;
bottomRight.Normalize();
bottomRight *= scale;

// Store the 4 vectors in the frustumCorners matrix
frustumCorners.SetRow(0, bottomLeft);
frustumCorners.SetRow(1, bottomRight);
frustumCorners.SetRow(2, topRight);
frustumCorners.SetRow(3, topLeft);
Shader.SetGlobalMatrix("_FrustumCornersRay", frustumCorners);
```
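To check the claim that the interpolated ray has unit length along the view direction, here is an engine-free C# sketch of the same corner computation using `System.Numerics` instead of Unity types (the class and method names are illustrative). Normalizing a corner and scaling it by |corner| / near is the same as dividing it by near, because all four corners of a symmetric frustum are equally long. `RayAt` blends the corners bilinearly; for four rays arranged as a parallelogram this matches the per-triangle interpolation the GPU performs across the full-screen quad.

```csharp
using System;
using System.Numerics;

public static class FrustumRaySketch
{
    // forward/right/up: the camera's orthonormal basis; fovDeg: vertical field of view in degrees.
    public static Vector3[] CornerRays(Vector3 forward, Vector3 right, Vector3 up, float fovDeg, float near, float aspect)
    {
        float halfHeight = near * (float)Math.Tan(fovDeg * 0.5 * Math.PI / 180.0);
        Vector3 toRight = right * (halfHeight * aspect);
        Vector3 toTop = up * halfHeight;
        Vector3 center = forward * near;
        // Order matches the SetRow calls: bottomLeft, bottomRight, topRight, topLeft.
        return new[]
        {
            (center - toTop - toRight) / near,
            (center - toTop + toRight) / near,
            (center + toTop + toRight) / near,
            (center + toTop - toRight) / near,
        };
    }

    // Interpolated ray at screen uv (0..1), bottom-left origin.
    public static Vector3 RayAt(Vector3[] rays, float u, float v)
    {
        Vector3 bottom = Vector3.Lerp(rays[0], rays[1], u);
        Vector3 top = Vector3.Lerp(rays[3], rays[2], u);
        return Vector3.Lerp(bottom, top, v);
    }

    // wpos = camera position + eye depth * ray, as in the fragment shader.
    public static Vector3 WorldPos(Vector3 cameraPos, float eyeDepth, Vector3 ray) => cameraPos + eyeDepth * ray;
}
```

Every ray, including any interpolated one, has a forward component of exactly 1, which is why multiplying by eye depth (distance along the forward axis, not along the ray) lands on the right point.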

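With that, OA, OB, OC and OD are passed to the shader through _FrustumCornersRay, and the vertex stage only has to pick the vector that matches the quadrant of its UV. Once we have the fragment's world position, we transform it into the observer camera's viewport to see whether it lies within the observer's view: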

Pass the observer camera's matrices and parameters to the shader:

```csharp
Shader.SetGlobalFloat("_cameraNear", _cam.nearClipPlane);
Shader.SetGlobalFloat("_cameraFar", _cam.farClipPlane);
Shader.SetGlobalMatrix("_DepthCameraWorldToLocalMatrix", _cam.worldToCameraMatrix);
Shader.SetGlobalMatrix("_DepthCameraProjectionMatrix", _cam.projectionMatrix);
```

Transform the position into the observer's viewport (wpos is the world position computed above):

```hlsl
// Convert a position after perspective projection to NDC, then to viewport coordinates
float2 ProjPosToViewPortPos(float4 projPos)
{
    float3 ndcPos = projPos.xyz / projPos.w;
    float2 viewPortPos = float2(0.5f * ndcPos.x + 0.5f, 0.5f * ndcPos.y + 0.5f);
    return viewPortPos;
}
```

```hlsl
float3 viewPosInDepthCamera = mul(_DepthCameraWorldToLocalMatrix, float4(wpos, 1)).xyz;
float4 projPosInDepthCamera = mul(_DepthCameraProjectionMatrix, float4(viewPosInDepthCamera, 1));
float2 viewPortPosInDepthCamera = ProjPosToViewPortPos(projPosInDepthCamera);
float depthCameraViewZ = -viewPosInDepthCamera.z;
```
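The same view-to-viewport chain can be checked outside the engine. The C# sketch below assumes a symmetric perspective frustum and writes out the OpenGL-style projection that Unity's `Camera.projectionMatrix` follows (view space looks down -Z), then applies the perspective divide and the [-1, 1] to [0, 1] remap of `ProjPosToViewPortPos`. The class name is illustrative. Clip-space w equals -z in view space, which is why the shader takes `depthCameraViewZ = -viewPosInDepthCamera.z`.

```csharp
using System;
using System.Numerics;

public static class ObserverProjection
{
    // clip = P * view for an OpenGL-style symmetric perspective matrix.
    public static Vector4 ViewToClip(Vector3 view, float fovDeg, float aspect, float near, float far)
    {
        float f = 1f / (float)Math.Tan(fovDeg * 0.5 * Math.PI / 180.0);
        return new Vector4(
            f / aspect * view.X,
            f * view.Y,
            -(far + near) / (far - near) * view.Z - 2f * far * near / (far - near),
            -view.Z);
    }

    // Perspective divide to NDC, then [-1, 1] -> [0, 1], as ProjPosToViewPortPos does.
    public static Vector2 ClipToViewport(Vector4 clip)
    {
        float x = clip.X / clip.W, y = clip.Y / clip.W;
        return new Vector2(0.5f * x + 0.5f, 0.5f * y + 0.5f);
    }
}
```

A point straight ahead lands at viewport (0.5, 0.5), and a point on the frustum's right edge lands at x = 1, which is exactly where the bounds check that follows starts rejecting fragments.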

Check whether the fragment is inside the observer's view; if it is not, return the original color directly:

```hlsl
if (viewPortPosInDepthCamera.x < 0 || viewPortPosInDepthCamera.x > 1) { return col; }
if (viewPortPosInDepthCamera.y < 0 || viewPortPosInDepthCamera.y > 1) { return col; }
if (depthCameraViewZ < _cameraNear || depthCameraViewZ > _cameraFar) { return col; }
```

For fragments inside the view, read the depth from the observer camera's depth texture and compare:

```csharp
// Pass the observer's depth texture to the main camera's shader
Shader.SetGlobalTexture("_ObserverDepthTexture", getDepthTexture.renderTexture);
```

```hlsl
float2 depthCameraUV = viewPortPosInDepthCamera.xy;
#if UNITY_UV_STARTS_AT_TOP
if (_MainTex_TexelSize.y < 0)
{
    depthCameraUV.y = 1 - depthCameraUV.y;
}
#endif
float observer01Depth = tex2D(_ObserverDepthTexture, depthCameraUV).r;
float observerEyeDepth = LinearDepthToDistance(observer01Depth, _cameraNear, _cameraFar);
float4 finalCol = col * 0.8;
float4 visibleColor = _visibleColor * 0.4f;
float4 unVisibleColor = _unVisibleColor * 0.4f;
// depth check
if (depthCameraViewZ <= observerEyeDepth)
{
    return finalCol + visibleColor;
}
else
{
    // bias check
    if (abs(depthCameraViewZ - observerEyeDepth) <= _FieldBias)
    {
        return finalCol + visibleColor;
    }
    else
    {
        return finalCol + unVisibleColor;
    }
}
```

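The depth texture holds our own 0-1 linear depth, and here it is decoded directly with the observer camera's nearClipPlane and farClipPlane. With that, the basic feature is complete. As for that pile of if/else, so be it; I'll never get used to the way shading languages are written.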

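So where are the headaches? They have all appeared above: C# APIs with no documentation that I can't figure out how to use; a depth-decode function that goes wrong when implemented by hand (written exactly as an official staff member described on the forum); frustum rays computed in the vertex stage coming out wrong (hard to test and hard to find information on); and macros like UNITY_UV_STARTS_AT_TOP above, where I have no idea when they are needed and when they aren't. On the C# side there is even a function like GL.GetGPUProjectionMatrix().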

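What I'd most like is for the engine to let me declare which coordinate convention or standard I am programming against, so that I can write code the way I'm used to. For example, I'm used to screen (0, 0) being at the top left, and you're used to the bottom left. Then not everyone would need to know these macros by heart just to write a shader. I think most people are more used to the D3D convention.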

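```csharp
// Wished-for API; Unity has no such method
Shader.SetGlobalStandard("D3D");    // D3D convention
Shader.SetGlobalStandard("OPENGL"); // OpenGL convention
// ...ideally with both a global setting and a per-shader setting
```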

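What I ended up with is only the basic feature. There is no filtering over depth samples, so, like a hard shadow, it has jagged edges, and it also looks smeared, like a texture without anisotropic filtering. The fixes are exactly the same as for a ShadowMap.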

I already tried writing a ShadowMap back in Unity 4.x, using VSM for soft shadows. Writing this now feels just the same as it did then; nothing has become more convenient...

```csharp
using System.Collections;
using System.Collections.Generic;
using UnityEngine;

[RequireComponent(typeof(Camera))]
public class GetDepthTexture : MonoBehaviour
{
    public RenderTexture renderTexture;
    public Color visibleCol = Color.green;
    public Color unVisibleCol = Color.red;
    private Material _material;
    public Camera _cam { get; private set; }
    public LineRenderer lineRendererTemplate;

    void Start()
    {
        _cam = GetComponent<Camera>();
        _cam.depthTextureMode |= DepthTextureMode.Depth;
        renderTexture = RenderTexture.GetTemporary(Screen.width, Screen.height, 0, RenderTextureFormat.RFloat);
        renderTexture.hideFlags = HideFlags.DontSave;
        _material = new Material(Shader.Find("Custom/GetDepthTexture"));
        _cam.SetTargetBuffers(renderTexture.colorBuffer, renderTexture.depthBuffer);
        if (lineRendererTemplate)
        {
            // Debug lines from the camera to the four far-plane corners
            var dirs = VisualFieldRenderer.GetFrustumCorners(_cam);
            for (int i = 0; i < 4; i++)
            {
                Vector3 dir = dirs.GetRow(i);
                var tagPoint = _cam.transform.position + (dir * _cam.farClipPlane);
                var newLine = GameObject.Instantiate(lineRendererTemplate.gameObject).GetComponent<LineRenderer>();
                newLine.useWorldSpace = false;
                newLine.transform.SetParent(_cam.transform, false);
                newLine.transform.localPosition = Vector3.zero;
                newLine.transform.localRotation = Quaternion.identity;
                newLine.SetPositions(new Vector3[2] { Vector3.zero, _cam.transform.worldToLocalMatrix.MultiplyPoint(tagPoint) });
            }
        }
    }

    private void OnDestroy()
    {
        if (renderTexture)
        {
            RenderTexture.ReleaseTemporary(renderTexture);
        }
        if (_cam)
        {
            _cam.targetTexture = null;
        }
    }

    private void OnRenderImage(RenderTexture source, RenderTexture destination)
    {
        if (_material && _cam)
        {
            Shader.SetGlobalColor("_visibleColor", visibleCol);
            Shader.SetGlobalColor("_unVisibleColor", unVisibleCol);
            Shader.SetGlobalFloat("_cameraNear", _cam.nearClipPlane);
            Shader.SetGlobalFloat("_cameraFar", _cam.farClipPlane);
            Shader.SetGlobalMatrix("_DepthCameraWorldToLocalMatrix", _cam.worldToCameraMatrix);
            Shader.SetGlobalMatrix("_DepthCameraProjectionMatrix", _cam.projectionMatrix);
            Graphics.Blit(source, renderTexture, _material);
        }
        Graphics.Blit(source, destination);
    }
}
```

```shaderlab
Shader "Custom/GetDepthTexture"
{
    Properties
    {
        _MainTex("Texture", 2D) = "white" {}
    }
    CGINCLUDE
    #include "UnityCG.cginc"

    struct appdata
    {
        float4 vertex : POSITION;
        float2 uv : TEXCOORD0;
    };

    struct v2f
    {
        float2 uv : TEXCOORD0;
        float4 vertex : SV_POSITION;
    };

    sampler2D _CameraDepthTexture;
    uniform float _cameraFar;
    uniform float _cameraNear;

    // Eye depth in [near, far] -> linear [0, 1]
    float DistanceToLinearDepth(float d, float near, float far)
    {
        float z = (d - near) / (far - near);
        return z;
    }

    v2f vert(appdata v)
    {
        v2f o;
        o.vertex = UnityObjectToClipPos(v.vertex);
        o.uv = v.uv;
        return o;
    }

    // float4 rather than fixed4, so the depth keeps full precision on mobile GPUs
    float4 frag(v2f i) : SV_Target
    {
        float depth = SAMPLE_DEPTH_TEXTURE(_CameraDepthTexture, i.uv);
        depth = LinearEyeDepth(depth);
        depth = DistanceToLinearDepth(depth, _cameraNear, _cameraFar);
        return float4(depth, depth, depth, 1);
    }
    ENDCG

    SubShader
    {
        // No culling or depth
        Cull Off ZWrite Off ZTest Always

        // Pass 0: write linear 0-1 depth
        Pass
        {
            CGPROGRAM
            #pragma vertex vert
            #pragma fragment frag
            ENDCG
        }
    }
}
```

```csharp
using System.Collections;
using System.Collections.Generic;
using UnityEngine;

[RequireComponent(typeof(Camera))]
public class VisualFieldRenderer : MonoBehaviour
{
    public GetDepthTexture getDepthTexture;
    [SerializeField]
    [Range(0.001f, 1f)]
    public float fieldCheckBias = 0.1f;
    private Material _material;
    private Camera _cam;

    void Start()
    {
        _cam = GetComponent<Camera>();
        _cam.depthTextureMode |= DepthTextureMode.Depth;
        _material = new Material(Shader.Find("Custom/VisualFieldRenderer"));
    }

    private void OnRenderImage(RenderTexture source, RenderTexture destination)
    {
        if (_cam && getDepthTexture && getDepthTexture.renderTexture && _material)
        {
            // Keep the main camera's far plane well beyond the observer's
            if (getDepthTexture._cam)
            {
                if (getDepthTexture._cam.farClipPlane > _cam.farClipPlane - 500)
                {
                    _cam.farClipPlane = getDepthTexture._cam.farClipPlane + 500;
                }
            }
            Matrix4x4 frustumCorners = GetFrustumCorners(_cam);
            Shader.SetGlobalMatrix("_FrustumCornersRay", frustumCorners);
            Shader.SetGlobalTexture("_ObserverDepthTexture", getDepthTexture.renderTexture);
            Shader.SetGlobalFloat("_FieldBias", fieldCheckBias);
            Graphics.Blit(source, destination, _material);
        }
        else
        {
            Graphics.Blit(source, destination);
        }
    }

    public static Matrix4x4 GetFrustumCorners(Camera cam)
    {
        Matrix4x4 frustumCorners = Matrix4x4.identity;
        float fov = cam.fieldOfView;
        float near = cam.nearClipPlane;
        float aspect = cam.aspect;
        var cameraTransform = cam.transform;

        float halfHeight = near * Mathf.Tan(fov * 0.5f * Mathf.Deg2Rad);
        Vector3 toRight = cameraTransform.right * halfHeight * aspect;
        Vector3 toTop = cameraTransform.up * halfHeight;

        Vector3 topLeft = cameraTransform.forward * near + toTop - toRight;
        float scale = topLeft.magnitude / near;
        topLeft.Normalize();
        topLeft *= scale;

        Vector3 topRight = cameraTransform.forward * near + toRight + toTop;
        topRight.Normalize();
        topRight *= scale;

        Vector3 bottomLeft = cameraTransform.forward * near - toTop - toRight;
        bottomLeft.Normalize();
        bottomLeft *= scale;

        Vector3 bottomRight = cameraTransform.forward * near + toRight - toTop;
        bottomRight.Normalize();
        bottomRight *= scale;

        frustumCorners.SetRow(0, bottomLeft);
        frustumCorners.SetRow(1, bottomRight);
        frustumCorners.SetRow(2, topRight);
        frustumCorners.SetRow(3, topLeft);
        return frustumCorners;
    }
}
```

```shaderlab
// Upgrade NOTE: replaced '_CameraToWorld' with 'unity_CameraToWorld'
Shader "Custom/VisualFieldRenderer"
{
    Properties
    {
        _MainTex("Texture", 2D) = "white" {}
    }
    CGINCLUDE
    #include "UnityCG.cginc"

    struct appdata
    {
        float4 vertex : POSITION;
        float2 uv : TEXCOORD0;
    };

    struct v2f
    {
        float2 uv : TEXCOORD0;
        float4 vertex : SV_POSITION;
        float2 uv_depth : TEXCOORD1;
        float4 interpolatedRay : TEXCOORD2;
    };

    sampler2D _MainTex;
    half4 _MainTex_TexelSize;
    sampler2D _CameraDepthTexture;
    uniform float _FieldBias;
    uniform sampler2D _ObserverDepthTexture;
    uniform float4x4 _DepthCameraWorldToLocalMatrix;
    uniform float4x4 _DepthCameraProjectionMatrix;
    uniform float _cameraFar;
    uniform float _cameraNear;
    uniform float4x4 _FrustumCornersRay;
    float4 _unVisibleColor;
    float4 _visibleColor;

    // Clip-space position -> NDC -> viewport [0, 1]
    float2 ProjPosToViewPortPos(float4 projPos)
    {
        float3 ndcPos = projPos.xyz / projPos.w;
        float2 viewPortPos = float2(0.5f * ndcPos.x + 0.5f, 0.5f * ndcPos.y + 0.5f);
        return viewPortPos;
    }

    // Linear [0, 1] depth -> eye depth (inverse of DistanceToLinearDepth)
    float LinearDepthToDistance(float z, float near, float far)
    {
        float d = z * (far - near) + near;
        return d;
    }

    // Vertex stage
    v2f vert(appdata v)
    {
        v2f o;
        o.vertex = UnityObjectToClipPos(v.vertex); // MVP matrix screen pos
        o.uv = v.uv;
        o.uv_depth = v.uv;
        #if UNITY_UV_STARTS_AT_TOP
        if (_MainTex_TexelSize.y < 0)
        {
            o.uv_depth.y = 1 - o.uv_depth.y;
        }
        #endif
        // Pick the frustum corner ray that matches this vertex's UV quadrant
        int index = 0;
        if (v.uv.x < 0.5 && v.uv.y < 0.5) { index = 0; }
        else if (v.uv.x > 0.5 && v.uv.y < 0.5) { index = 1; }
        else if (v.uv.x > 0.5 && v.uv.y > 0.5) { index = 2; }
        else { index = 3; }
        o.interpolatedRay = _FrustumCornersRay[index];
        return o;
    }

    // Fragment stage
    fixed4 frag(v2f i) : SV_Target
    {
        float4 col = tex2D(_MainTex, i.uv);
        float z = SAMPLE_DEPTH_TEXTURE(_CameraDepthTexture, i.uv);
        float depth = Linear01Depth(z);
        if (depth >= 1) { return col; }

        // correct in Main Camera
        float linearDepth = LinearEyeDepth(SAMPLE_DEPTH_TEXTURE(_CameraDepthTexture, i.uv_depth));
        float3 wpos = _WorldSpaceCameraPos + linearDepth * i.interpolatedRay.xyz;

        // correct in Depth Camera
        float3 viewPosInDepthCamera = mul(_DepthCameraWorldToLocalMatrix, float4(wpos, 1)).xyz;
        float4 projPosInDepthCamera = mul(_DepthCameraProjectionMatrix, float4(viewPosInDepthCamera, 1));
        float2 viewPortPosInDepthCamera = ProjPosToViewPortPos(projPosInDepthCamera);
        float depthCameraViewZ = -viewPosInDepthCamera.z;
        if (viewPortPosInDepthCamera.x < 0 || viewPortPosInDepthCamera.x > 1) { return col; }
        if (viewPortPosInDepthCamera.y < 0 || viewPortPosInDepthCamera.y > 1) { return col; }
        if (depthCameraViewZ <= _cameraNear || depthCameraViewZ >= _cameraFar) { return col; }

        // correct in depth camera
        float2 depthCameraUV = viewPortPosInDepthCamera.xy;
        #if UNITY_UV_STARTS_AT_TOP
        if (_MainTex_TexelSize.y < 0)
        {
            depthCameraUV.y = 1 - depthCameraUV.y;
        }
        #endif
        float observer01Depth = tex2D(_ObserverDepthTexture, depthCameraUV).r;
        float observerEyeDepth = LinearDepthToDistance(observer01Depth, _cameraNear, _cameraFar);
        float4 finalCol = col * 0.8;
        float4 visibleColor = _visibleColor * 0.4f;
        float4 unVisibleColor = _unVisibleColor * 0.4f;
        // depth check
        if (depthCameraViewZ <= observerEyeDepth)
        {
            return finalCol + visibleColor;
        }
        else
        {
            // bias check
            if (abs(depthCameraViewZ - observerEyeDepth) <= _FieldBias)
            {
                return finalCol + visibleColor;
            }
            else
            {
                return finalCol + unVisibleColor;
            }
        }
    }
    ENDCG

    SubShader
    {
        // No culling or depth
        Cull Off ZWrite Off ZTest Always

        // Pass 0: visibility overlay
        Pass
        {
            CGPROGRAM
            #pragma vertex vert
            #pragma fragment frag
            ENDCG
        }
    }
}
```

2019.12.02: Added VSM filtering

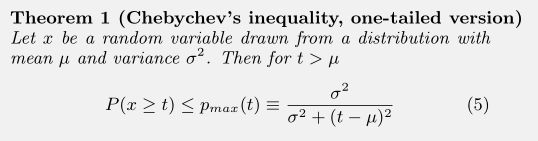

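Since VSM is the easiest to implement, and Chebyshev's inequality is probably the easiest to understand, let's test whether it improves the result. Some of the utility code is at https://www.cnblogs.com/tiancaiwrk/p/11957545.html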

The one-sided form of the inequality:
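The formula images did not survive here. The one-sided Chebyshev inequality (Cantelli's inequality) that VSM relies on, in the form the shader's `ChebychevVal` evaluates, is the following, where x is the observer's depth over a pixel's neighborhood, μ its mean, σ² its variance, and t the depth being tested:

$$
P(x \ge t) \;\le\; p_{\max}(t) = \frac{\sigma^2}{\sigma^2 + (t-\mu)^2}, \qquad t > \mu
$$

$$
\mu = E(x), \qquad \sigma^2 = E(x^2) - E(x)^2
$$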

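1. In the one-sided form, when t > μ, the probability that a sample x satisfies x ≥ t is at most the right-hand side. Here x is the observer's depth, so x ≥ t means the surface the observer recorded is not in front of the fragment: the bound estimates the probability that the current pixel is visible to the observer camera, and 1 minus it is the probability that the pixel is occluded.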

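2. Treat depth as the data and depth squared as an intermediate quantity, and store both in a two-channel texture. One Blur pass then serves as the averaging step, producing the data the following computation needs.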

This works because the variance can be computed as the mean of the squares minus the square of the mean, σ² = E(x²) - E(x)²:

3. Substitute this into the one-sided inequality, using the fragment's actual depth in the observer camera directly as t.
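Putting the three steps together, here is an engine-free C# sketch of what the VSM shader evaluates per pixel; the class and method names are illustrative. `Moments` stands in for what blurring the (d, d²) texture approximates. Because the stored depth is a linear remap of eye depth, the variance converts to eye units exactly by multiplying by (far - near)²; the shader instead maps √E(d²) back through `LinearDepthToDistance` and squares it, which overestimates E(D²) slightly (by 2·near·(far - near)·(√E(d²) - E(d))). The `minVariance` floor is an assumed safeguard against dividing by zero that the original shader does not have.

```csharp
using System;

public static class VsmSketch
{
    // First and second moments of a set of depths.
    public static (double Mean, double MeanSq) Moments(double[] depths)
    {
        double m1 = 0, m2 = 0;
        foreach (double d in depths) { m1 += d; m2 += d * d; }
        return (m1 / depths.Length, m2 / depths.Length);
    }

    // Variance of linear 0-1 depth -> variance of eye depth (the remap is linear).
    public static double EyeVariance(double variance01, double near, double far)
    {
        return variance01 * (far - near) * (far - near);
    }

    // Cantelli bound on P(x >= t), read as the probability that the fragment is visible.
    public static double VisibleUpperBound(double mean, double meanSq, double t, double minVariance = 1e-6)
    {
        if (t <= mean) return 1.0; // not behind the mean occluder depth: fully visible
        double variance = Math.Max(meanSq - mean * mean, minVariance);
        double dist = t - mean;
        return variance / (variance + dist * dist);
    }
}
```

In the shader, 1 minus this value is the weight that blends toward the hidden color.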

Full code:

```csharp
using UnityEngine;

public static class GLHelper
{
    public static void Blit(Texture source, Material material, RenderTexture destination, int materialPass = 0)
    {
        // Assign the texture before SetPass: SetPass applies the material's current properties,
        // so a change made after it would not affect this draw.
        material.mainTexture = source;
        if (material.SetPass(materialPass))
        {
            Graphics.SetRenderTarget(destination);
            GL.PushMatrix();
            GL.LoadOrtho();
            GL.Begin(GL.QUADS);
            {
                Vector3 coords = new Vector3(0, 0, 0);
                GL.TexCoord(coords);
                GL.Vertex(coords);
                coords = new Vector3(1, 0, 0);
                GL.TexCoord(coords);
                GL.Vertex(coords);
                coords = new Vector3(1, 1, 0);
                GL.TexCoord(coords);
                GL.Vertex(coords);
                coords = new Vector3(0, 1, 0);
                GL.TexCoord(coords);
                GL.Vertex(coords);
            }
            GL.End();
            GL.PopMatrix();
        }
    }

    public static void CopyTexture(RenderTexture from, Texture2D to)
    {
        if (from && to)
        {
            if ((SystemInfo.copyTextureSupport & UnityEngine.Rendering.CopyTextureSupport.RTToTexture) != 0)
            {
                Graphics.CopyTexture(from, to);
            }
            else
            {
                var current = RenderTexture.active;
                RenderTexture.active = from;
                to.ReadPixels(new Rect(0, 0, from.width, from.height), 0, 0);
                to.Apply();
                RenderTexture.active = current;
            }
        }
    }

    #region Blur Imp
    public static Texture2D DoBlur(Texture tex)
    {
        if (tex)
        {
            var material = new Material(Shader.Find("Custom/SimpleBlur"));
            material.mainTexture = tex;
            var tempRenderTexture1 = RenderTexture.GetTemporary(tex.width, tex.height, 0, RenderTextureFormat.ARGB32);
            material.SetVector("_offset", Vector2.up);
            GLHelper.Blit(tex, material, tempRenderTexture1, 0);
            var tempRenderTexture2 = RenderTexture.GetTemporary(tex.width, tex.height, 0, RenderTextureFormat.ARGB32);
            material.SetVector("_offset", Vector2.right);
            GLHelper.Blit(tempRenderTexture1, material, tempRenderTexture2, 0);
            var retTexture = new Texture2D(tex.width, tex.height, TextureFormat.ARGB32, false, true);
            GLHelper.CopyTexture(tempRenderTexture2, retTexture);
            RenderTexture.ReleaseTemporary(tempRenderTexture1);
            RenderTexture.ReleaseTemporary(tempRenderTexture2);
            return retTexture;
        }
        return null;
    }

    public static bool DoBlur(Texture tex, RenderTexture renderTexture)
    {
        if (tex)
        {
            var material = new Material(Shader.Find("Custom/SimpleBlur"));
            material.mainTexture = tex;
            // Keep the source format for the intermediate target (e.g. RGFloat depth moments);
            // an ARGB32 intermediate would quantize them to 8 bits per channel.
            var sourceRT = tex as RenderTexture;
            var format = sourceRT != null ? sourceRT.format : RenderTextureFormat.ARGB32;
            var tempRenderTexture = RenderTexture.GetTemporary(tex.width, tex.height, 0, format);
            material.SetVector("_offset", Vector2.up);
            GLHelper.Blit(tex, material, tempRenderTexture, 0);
            material.SetVector("_offset", Vector2.right);
            GLHelper.Blit(tempRenderTexture, material, renderTexture, 0);
            RenderTexture.ReleaseTemporary(tempRenderTexture);
            return true;
        }
        return false;
    }
    #endregion
}
```

```shaderlab
Shader "Custom/SimpleBlur"
{
    Properties
    {
        _MainTex ("Texture", 2D) = "white" {}
    }
    SubShader
    {
        // No culling or depth
        Cull Off ZWrite Off ZTest Always

        Pass
        {
            CGPROGRAM
            #pragma vertex vert
            #pragma fragment frag
            #include "UnityCG.cginc"

            struct appdata
            {
                float4 vertex : POSITION;
                float2 uv : TEXCOORD0;
            };

            struct v2f
            {
                float2 uv : TEXCOORD0;
                float4 vertex : SV_POSITION;
            };

            sampler2D _MainTex;
            float4 _MainTex_TexelSize;
            uniform float2 _offset;

            v2f vert (appdata v)
            {
                v2f o;
                o.vertex = UnityObjectToClipPos(v.vertex);
                o.uv = v.uv;
                #if UNITY_UV_STARTS_AT_TOP
                if (_MainTex_TexelSize.y < 0)
                {
                    o.uv.y = 1 - o.uv.y;
                }
                #endif
                return o;
            }

            fixed4 frag (v2f i) : SV_Target
            {
                float4 col = half4(0, 0, 0, 0);
                // Macro to keep the 9 taps short; the weights sum to 1
                #define PIXELBLUR(weight, kernel_offset) tex2D(_MainTex, float2(i.uv.xy + _offset * _MainTex_TexelSize.xy * kernel_offset)) * weight
                col += PIXELBLUR(0.05, -4.0);
                col += PIXELBLUR(0.09, -3.0);
                col += PIXELBLUR(0.12, -2.0);
                col += PIXELBLUR(0.15, -1.0);
                col += PIXELBLUR(0.18, 0.0);
                col += PIXELBLUR(0.15, +1.0);
                col += PIXELBLUR(0.12, +2.0);
                col += PIXELBLUR(0.09, +3.0);
                col += PIXELBLUR(0.05, +4.0);
                return col;
            }
            ENDCG
        }
    }
}
```

```csharp
using System.Collections;
using System.Collections.Generic;
using UnityEngine;

[RequireComponent(typeof(Camera))]
public class GetDepthTexture_VSM : MonoBehaviour
{
    public RenderTexture depthRenderTexture_RG;
    public RenderTexture depthRenderTexture_VSM;
    public Color visibleCol = Color.green;
    public Color unVisibleCol = Color.red;
    private Material _material;
    public Camera _cam { get; private set; }
    public LineRenderer lineRendererTemplate;

    void Start()
    {
        _cam = GetComponent<Camera>();
        _cam.depthTextureMode |= DepthTextureMode.Depth;
        // R = linear 0-1 depth, G = depth squared
        depthRenderTexture_RG = RenderTexture.GetTemporary(Screen.width, Screen.height, 0, RenderTextureFormat.RGFloat, RenderTextureReadWrite.Default, 2);
        depthRenderTexture_RG.hideFlags = HideFlags.DontSave;
        // Blurred copy: the averaged moments read by the VSM test
        depthRenderTexture_VSM = RenderTexture.GetTemporary(Screen.width, Screen.height, 0, RenderTextureFormat.RGFloat, RenderTextureReadWrite.Default, 2);
        depthRenderTexture_VSM.hideFlags = HideFlags.DontSave;
        _material = new Material(Shader.Find("Custom/GetDepthTexture_VSM"));
        if (lineRendererTemplate)
        {
            // Debug lines from the camera to the four far-plane corners
            var dirs = VisualFieldRenderer.GetFrustumCorners(_cam);
            for (int i = 0; i < 4; i++)
            {
                Vector3 dir = dirs.GetRow(i);
                var tagPoint = _cam.transform.position + (dir * _cam.farClipPlane);
                var newLine = GameObject.Instantiate(lineRendererTemplate.gameObject).GetComponent<LineRenderer>();
                newLine.useWorldSpace = false;
                newLine.transform.SetParent(_cam.transform, false);
                newLine.transform.localPosition = Vector3.zero;
                newLine.transform.localRotation = Quaternion.identity;
                newLine.SetPositions(new Vector3[2] { Vector3.zero, _cam.transform.worldToLocalMatrix.MultiplyPoint(tagPoint) });
            }
        }
    }

    private void OnDestroy()
    {
        // Release both temporary textures
        if (depthRenderTexture_RG)
        {
            RenderTexture.ReleaseTemporary(depthRenderTexture_RG);
        }
        if (depthRenderTexture_VSM)
        {
            RenderTexture.ReleaseTemporary(depthRenderTexture_VSM);
        }
        if (_cam)
        {
            _cam.targetTexture = null;
        }
    }

    private void OnRenderImage(RenderTexture source, RenderTexture destination)
    {
        if (_material && _cam)
        {
            Shader.SetGlobalColor("_visibleColor", visibleCol);
            Shader.SetGlobalColor("_unVisibleColor", unVisibleCol);
            Shader.SetGlobalFloat("_cameraNear", _cam.nearClipPlane);
            Shader.SetGlobalFloat("_cameraFar", _cam.farClipPlane);
            Shader.SetGlobalMatrix("_DepthCameraWorldToLocalMatrix", _cam.worldToCameraMatrix);
            Shader.SetGlobalMatrix("_DepthCameraProjectionMatrix", _cam.projectionMatrix);
            Graphics.Blit(source, depthRenderTexture_RG, _material);
            GLHelper.DoBlur(depthRenderTexture_RG, depthRenderTexture_VSM);
        }
        Graphics.Blit(source, destination);
    }
}
```

```shaderlab
Shader "Custom/GetDepthTexture_VSM"
{
    Properties
    {
        _MainTex("Texture", 2D) = "white" {}
    }
    CGINCLUDE
    #include "UnityCG.cginc"

    struct appdata
    {
        float4 vertex : POSITION;
        float2 uv : TEXCOORD0;
    };

    struct v2f
    {
        float2 uv : TEXCOORD0;
        float4 vertex : SV_POSITION;
    };

    sampler2D _CameraDepthTexture;
    uniform float _cameraFar;
    uniform float _cameraNear;

    // Eye depth in [near, far] -> linear [0, 1]
    float DistanceToLinearDepth(float d, float near, float far)
    {
        float z = (d - near) / (far - near);
        return z;
    }

    v2f vert(appdata v)
    {
        v2f o;
        o.vertex = UnityObjectToClipPos(v.vertex);
        o.uv = v.uv;
        return o;
    }

    // Writes (depth, depth^2); float4 keeps full precision on mobile GPUs
    float4 frag(v2f i) : SV_Target
    {
        float depth = SAMPLE_DEPTH_TEXTURE(_CameraDepthTexture, i.uv);
        depth = DistanceToLinearDepth(LinearEyeDepth(depth), _cameraNear, _cameraFar);
        return float4(depth, depth * depth, 0, 1);
    }
    ENDCG

    SubShader
    {
        // No culling or depth
        Cull Off ZWrite Off ZTest Always

        // Pass 0: write depth moments
        Pass
        {
            CGPROGRAM
            #pragma vertex vert
            #pragma fragment frag
            ENDCG
        }
    }
}
```

```csharp
using System.Collections;
using System.Collections.Generic;
using UnityEngine;

[RequireComponent(typeof(Camera))]
public class VisualFieldRenderer_VSM : MonoBehaviour
{
    [SerializeField]
    public GetDepthTexture_VSM getDepthTexture;
    [SerializeField]
    [Range(0.001f, 1f)]
    public float fieldCheckBias = 0.1f;
    private Material _material;
    private Camera _cam;

    void Start()
    {
        _cam = GetComponent<Camera>();
        _cam.depthTextureMode |= DepthTextureMode.Depth;
        _material = new Material(Shader.Find("Custom/VisualFieldRenderer_VSM"));
    }

    private void OnRenderImage(RenderTexture source, RenderTexture destination)
    {
        if (_cam && getDepthTexture && getDepthTexture.depthRenderTexture_VSM && _material)
        {
            // Keep the main camera's far plane well beyond the observer's
            if (getDepthTexture._cam)
            {
                if (getDepthTexture._cam.farClipPlane > _cam.farClipPlane - 500)
                {
                    _cam.farClipPlane = getDepthTexture._cam.farClipPlane + 500;
                }
            }
            Matrix4x4 frustumCorners = GetFrustumCorners(_cam);
            Shader.SetGlobalMatrix("_FrustumCornersRay", frustumCorners);
            Shader.SetGlobalTexture("_ObserverDepthTexture", getDepthTexture.depthRenderTexture_VSM);
            Shader.SetGlobalFloat("_FieldBias", fieldCheckBias);
            Graphics.Blit(source, destination, _material);
        }
        else
        {
            Graphics.Blit(source, destination);
        }
    }

    public static Matrix4x4 GetFrustumCorners(Camera cam)
    {
        Matrix4x4 frustumCorners = Matrix4x4.identity;
        float fov = cam.fieldOfView;
        float near = cam.nearClipPlane;
        float aspect = cam.aspect;
        var cameraTransform = cam.transform;

        float halfHeight = near * Mathf.Tan(fov * 0.5f * Mathf.Deg2Rad);
        Vector3 toRight = cameraTransform.right * halfHeight * aspect;
        Vector3 toTop = cameraTransform.up * halfHeight;

        Vector3 topLeft = cameraTransform.forward * near + toTop - toRight;
        float scale = topLeft.magnitude / near;
        topLeft.Normalize();
        topLeft *= scale;

        Vector3 topRight = cameraTransform.forward * near + toRight + toTop;
        topRight.Normalize();
        topRight *= scale;

        Vector3 bottomLeft = cameraTransform.forward * near - toTop - toRight;
        bottomLeft.Normalize();
        bottomLeft *= scale;

        Vector3 bottomRight = cameraTransform.forward * near + toRight - toTop;
        bottomRight.Normalize();
        bottomRight *= scale;

        frustumCorners.SetRow(0, bottomLeft);
        frustumCorners.SetRow(1, bottomRight);
        frustumCorners.SetRow(2, topRight);
        frustumCorners.SetRow(3, topLeft);
        return frustumCorners;
    }
}
```

```shaderlab
// Upgrade NOTE: replaced '_CameraToWorld' with 'unity_CameraToWorld'
Shader "Custom/VisualFieldRenderer_VSM"
{
    Properties
    {
        _MainTex("Texture", 2D) = "white" {}
    }
    CGINCLUDE
    #include "UnityCG.cginc"

    struct appdata
    {
        float4 vertex : POSITION;
        float2 uv : TEXCOORD0;
    };

    struct v2f
    {
        float2 uv : TEXCOORD0;
        float4 vertex : SV_POSITION;
        float2 uv_depth : TEXCOORD1;
        float4 interpolatedRay : TEXCOORD2;
    };

    sampler2D _MainTex;
    half4 _MainTex_TexelSize;
    sampler2D _CameraDepthTexture;
    uniform float _FieldBias;
    uniform sampler2D _ObserverDepthTexture;
    uniform float4x4 _DepthCameraWorldToLocalMatrix;
    uniform float4x4 _DepthCameraProjectionMatrix;
    uniform float _cameraFar;
    uniform float _cameraNear;
    uniform float4x4 _FrustumCornersRay;
    float4 _unVisibleColor;
    float4 _visibleColor;

    // Clip-space position -> NDC -> viewport [0, 1]
    float2 ProjPosToViewPortPos(float4 projPos)
    {
        float3 ndcPos = projPos.xyz / projPos.w;
        float2 viewPortPos = float2(0.5f * ndcPos.x + 0.5f, 0.5f * ndcPos.y + 0.5f);
        return viewPortPos;
    }

    // Linear [0, 1] depth -> eye depth
    float LinearDepthToDistance(float z, float near, float far)
    {
        float d = z * (far - near) + near;
        return d;
    }

    // Maps sqrt(E[d^2]) back to eye units and squares it: an approximation of E[D^2]
    float LinearDepthToDistanceSqrt(float z, float near, float far)
    {
        float d = sqrt(z) * (far - near) + near;
        return d * d;
    }

    // Vertex stage
    v2f vert(appdata v)
    {
        v2f o;
        o.vertex = UnityObjectToClipPos(v.vertex); // MVP matrix screen pos
        o.uv = v.uv;
        o.uv_depth = v.uv;
        #if UNITY_UV_STARTS_AT_TOP
        if (_MainTex_TexelSize.y < 0)
        {
            o.uv_depth.y = 1 - o.uv_depth.y;
        }
        #endif
        // Pick the frustum corner ray that matches this vertex's UV quadrant
        int index = 0;
        if (v.uv.x < 0.5 && v.uv.y < 0.5) { index = 0; }
        else if (v.uv.x > 0.5 && v.uv.y < 0.5) { index = 1; }
        else if (v.uv.x > 0.5 && v.uv.y > 0.5) { index = 2; }
        else { index = 3; }
        o.interpolatedRay = _FrustumCornersRay[index];
        return o;
    }

    // Fragment stage
    fixed4 frag(v2f i) : SV_Target
    {
        float4 col = tex2D(_MainTex, i.uv);
        float z = SAMPLE_DEPTH_TEXTURE(_CameraDepthTexture, i.uv);
        float depth = Linear01Depth(z);
        if (depth >= 1) { return col; }

        // correct in Main Camera
        float linearDepth = LinearEyeDepth(SAMPLE_DEPTH_TEXTURE(_CameraDepthTexture, i.uv_depth));
        float3 wpos = _WorldSpaceCameraPos + linearDepth * i.interpolatedRay.xyz;

        // correct in Depth Camera
        float3 viewPosInDepthCamera = mul(_DepthCameraWorldToLocalMatrix, float4(wpos, 1)).xyz;
        float4 projPosInDepthCamera = mul(_DepthCameraProjectionMatrix, float4(viewPosInDepthCamera, 1));
        float2 viewPortPosInDepthCamera = ProjPosToViewPortPos(projPosInDepthCamera);
        float depthCameraViewZ = -viewPosInDepthCamera.z;
        if (viewPortPosInDepthCamera.x < 0 || viewPortPosInDepthCamera.x > 1) { return col; }
        if (viewPortPosInDepthCamera.y < 0 || viewPortPosInDepthCamera.y > 1) { return col; }
        if (depthCameraViewZ <= _cameraNear || depthCameraViewZ >= _cameraFar) { return col; }

        // correct in depth camera
        float2 depthCameraUV = viewPortPosInDepthCamera.xy;
        #if UNITY_UV_STARTS_AT_TOP
        if (_MainTex_TexelSize.y < 0)
        {
            depthCameraUV.y = 1 - depthCameraUV.y;
        }
        #endif
        float2 depthInfo = tex2D(_ObserverDepthTexture, depthCameraUV).rg;
        float observerEyeDepth = LinearDepthToDistance(depthInfo.r, _cameraNear, _cameraFar);
        float4 finalCol = col * 0.8;
        float4 visibleColor = _visibleColor * 0.4f;
        float4 unVisibleColor = _unVisibleColor * 0.4f;
        // Not behind the mean observer depth: fully visible
        if (depthCameraViewZ <= observerEyeDepth) { return finalCol + visibleColor; }
        // Variance = E[x^2] - E[x]^2, in eye-depth units
        float var = LinearDepthToDistanceSqrt(depthInfo.g, _cameraNear, _cameraFar) - (observerEyeDepth * observerEyeDepth);
        float dis = depthCameraViewZ - observerEyeDepth;
        // One-sided Chebyshev bound: upper bound on the probability of being visible
        float ChebychevVal = var / (var + (dis * dis));
        return finalCol + lerp(visibleColor, unVisibleColor, 1 - ChebychevVal);
    }
    ENDCG

    SubShader
    {
        // No culling or depth
        Cull Off ZWrite Off ZTest Always

        // Pass 0: VSM visibility overlay
        Pass
        {
            CGPROGRAM
            #pragma vertex vert
            #pragma fragment frag
            ENDCG
        }
    }
}
```

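Using the real depth as the variable t like this is actually not a good fit for a visual-field feature: unlike a shadow, it should not have a soft transition at the edges; the result should be binary. Compare the results: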

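You can see that VSM blurs the edges, and there are also obvious gaps along the cube's edges. This is because, when the average depth is computed (the Blur), these points get averaged with points at infinite distance, which inevitably produces a large mean and distorts the calculation.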
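To see that effect in numbers, the sketch below runs one pass of the `Custom/SimpleBlur` kernel over a single made-up row in which an object at linear depth 0.2 (pixels 0 to 9) meets background stored as 1.0, i.e. nothing hit before the observer's far plane (pixels 10 to 19). Clamping reads at the borders is an assumption about the texture's wrap mode; the class name is illustrative.

```csharp
using System;

public static class EdgeBlurDemo
{
    // The 9-tap weights from Custom/SimpleBlur (they sum to 1).
    static readonly double[] Weights = { 0.05, 0.09, 0.12, 0.15, 0.18, 0.15, 0.12, 0.09, 0.05 };

    // One pass of the separable blur over a single row, clamping reads at the borders.
    public static double[] BlurRow(double[] row)
    {
        var result = new double[row.Length];
        for (int i = 0; i < row.Length; i++)
        {
            for (int k = -4; k <= 4; k++)
            {
                int j = Math.Min(Math.Max(i + k, 0), row.Length - 1);
                result[i] += Weights[k + 4] * row[j];
            }
        }
        return result;
    }
}
```

Pixel 8, the second-to-last object pixel before the edge, ends up with a mean depth of 0.148 + 0.26 = 0.408 instead of 0.2, and its variance jumps from 0 to about 0.12, so the Chebyshev test near silhouettes is driven by the background rather than by the object.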