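Kubernetes provides an Elasticsearch add-on for cluster-level log management. It combines Elasticsearch, Fluentd, and Kibana. Elasticsearch is a search engine that stores the logs and provides a query interface. Fluentd collects logs from Kubernetes and sends them to Elasticsearch. Kibana provides a web GUI in which users can browse and search the logs stored in Elasticsearch, as shown in the figure below: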

1. Deployment

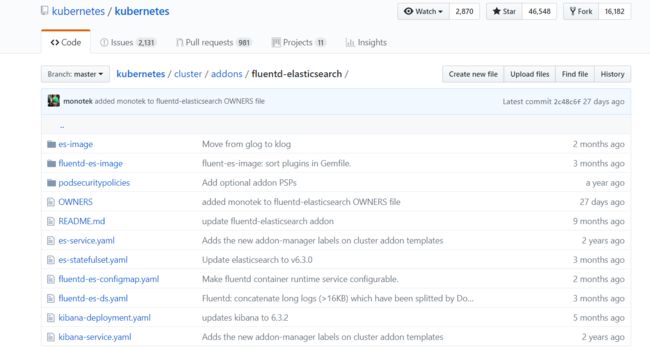

The Elasticsearch add-on itself runs inside the cluster as a Kubernetes application. Its YAML configuration files are available at https://github.com/kubernetes/kubernetes/tree/master/cluster/addons/fluentd-elasticsearch.

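Download the files and switch to the matching branch (our Kubernetes version is 1.12).

Cloning from GitHub always uses the same repository URL and checks out master by default. The manifests for 1.12 differ from those on master, so we need to switch to the 1.12 release branch: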

git clone https://github.com/kubernetes/kubernetes.git

cd kubernetes

#List all branches

git branch -a

#Check out the remote release-1.12 branch

git checkout remotes/origin/release-1.12
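Checking out `remotes/origin/release-1.12` directly leaves the repository in a detached-HEAD state. A lighter alternative, sketched below under the assumption that only the 1.12 manifests are needed, is to clone just that branch with shallow history. `clone_release` is a hypothetical helper name; `--branch`, `--single-branch` and `--depth` are standard `git clone` options.

```shell
#!/usr/bin/env bash
# Hypothetical helper: clone a single branch of a repository with shallow history.
# Usage: clone_release REPO_URL BRANCH DEST
clone_release() {
  local repo=$1 branch=$2 dest=$3
  # --branch checks BRANCH out as a local branch (no detached HEAD);
  # --single-branch and --depth 1 skip the other branches and the old history.
  git clone --branch "$branch" --single-branch --depth 1 "$repo" "$dest"
}
```

For example, `clone_release https://github.com/kubernetes/kubernetes.git release-1.12 /root/kubernetes` produces the `/root/kubernetes` directory that the later `cd` command expects.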

Pitfalls and fixes

Pitfall 1: every node that should run Fluentd must carry the label beta.kubernetes.io/fluentd-ds-ready=true.

#Show node labels

kubectl get node --show-labels

#Add the label to a node

kubectl label node kube-node-1 beta.kubernetes.io/fluentd-ds-ready=true
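When several nodes need the label, a small loop saves typing. This is a sketch: `label_fluentd_nodes` is a hypothetical helper, and `--overwrite` (a standard `kubectl label` flag) only makes re-runs on already-labeled nodes harmless.

```shell
#!/usr/bin/env bash
# Hypothetical helper: add the label the fluentd-es DaemonSet requires to each given node.
# --overwrite keeps the command from failing on nodes that already carry the label.
label_fluentd_nodes() {
  local node
  for node in "$@"; do
    kubectl label node "$node" beta.kubernetes.io/fluentd-ds-ready=true --overwrite
  done
}
```

For example `label_fluentd_nodes kube-node-1 kube-node-2`, or, to label every node, `label_fluentd_nodes $(kubectl get nodes -o jsonpath='{.items[*].metadata.name}')`.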

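Pitfall 2: change the default image registry, and keep the Elasticsearch and Kibana versions matched.

First, replace the image registry k8s.gcr.io with the gcrxio mirror: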

cd /root/kubernetes/cluster/addons/fluentd-elasticsearch

sed -i 's#k8s.gcr.io#gcrxio#g' es-statefulset.yaml

sed -i 's#k8s.gcr.io#gcrxio#g' fluentd-es-ds.yaml

With the Kubernetes 1.12 manifests, the Elasticsearch image version must also be changed to match Kibana:

sed -i 's/v6.2.5/v6.3.0/g' es-statefulset.yaml
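The substitutions above can also be combined into one hypothetical helper, `rewrite_addon_images`. This is a sketch assuming GNU sed; the tag substitution only changes lines that contain `v6.2.5`, so running it on both manifests is harmless.

```shell
#!/usr/bin/env bash
# Hypothetical helper: apply the registry and version substitutions to the given manifests.
# Assumes GNU sed (-i without a backup suffix).
rewrite_addon_images() {
  local f
  for f in "$@"; do
    # 1) pull images from the gcrxio mirror instead of k8s.gcr.io
    # 2) bump the Elasticsearch tag v6.2.5 -> v6.3.0; other lines are left untouched
    sed -i -e 's#k8s\.gcr\.io#gcrxio#g' -e 's/v6\.2\.5/v6.3.0/g' "$f"
  done
}
```

Run `rewrite_addon_images es-statefulset.yaml fluentd-es-ds.yaml` inside the fluentd-elasticsearch directory, then `grep -n 'image:' es-statefulset.yaml fluentd-es-ds.yaml` to check the result.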

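Pitfall 3: Docker's logging driver must be changed to json-file; otherwise no container log files are written to disk, and the logs cannot be found on the Kubernetes side.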

sed -i 's/journald/json-file/g' /etc/sysconfig/docker

Or write the OPTIONS line in /etc/sysconfig/docker directly:

OPTIONS='--selinux-enabled --log-driver=json-file --signature-verification=false'
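A sketch of the same change that rewrites only the `--log-driver` flag instead of every occurrence of `journald`. `use_json_file_log_driver` is a hypothetical helper name; the default path assumes the distribution's docker package, as in the commands above, and GNU sed is assumed.

```shell
#!/usr/bin/env bash
# Hypothetical helper: switch Docker's logging driver from journald to json-file.
# The config path defaults to /etc/sysconfig/docker (assumption); pass another path as $1.
use_json_file_log_driver() {
  local cfg=${1:-/etc/sysconfig/docker}
  # only the --log-driver flag is rewritten; other mentions of "journald" stay as they are
  sed -i 's/--log-driver=journald/--log-driver=json-file/' "$cfg"
}
```

After running it, restart the daemon with `systemctl restart docker` and check with `docker info --format '{{.LoggingDriver}}'`, which should print `json-file`. Docker applies the new default driver only to containers created afterwards, so existing pods have to be recreated before their logs are written as files.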

Pitfall 4: fluentd-es fails to start and reports the following errors:

failed to read data from plugin storage file path="/var/log/journald-docker.pos/worker0/storage.json"

error_class=Fluent::ConfigError error="Invalid contents (not object) in plugin storage file: '/var/log/journald-docker.pos/worker0/storage.json'"

config error file="/etc/fluent/fluent.conf" error_class=Fluent::ConfigError error="Unexpected error: failed to read data from plugin storage file: '/var/log/journald-docker.pos/worker0/storage.json'"

Fix: delete the leftover Fluentd position files on the affected node:

[root@kube-node-2 ~]# rm -rf /var/log/journald-*

[root@kube-node-2 ~]# rm -rf /var/log/kernel.pos/*

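The likely cause is that Fluentd was first started while Docker was still using the journald driver, and these files were not removed after the driver was switched.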
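The cleanup can be wrapped in a function whose log directory is a parameter, so it can be tried on a scratch directory first. This is a sketch: `clean_fluentd_pos_files` is a hypothetical name, and the file patterns are exactly those from the commands above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: remove the stale Fluentd position/storage files.
# The log directory defaults to /var/log; pass another directory as $1 to test safely.
clean_fluentd_pos_files() {
  local logdir=${1:-/var/log}
  rm -rf "$logdir"/journald-*      # e.g. journald-docker.pos/worker0/storage.json
  rm -rf "$logdir"/kernel.pos/*    # contents only; the kernel.pos directory itself is kept
}
```

Run it on the affected node (kube-node-2 above), then recreate the Fluentd pods from the master with `kubectl -n kube-system delete pod -l k8s-app=fluentd-es`; the DaemonSet starts replacement pods with fresh position files.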

Deploy

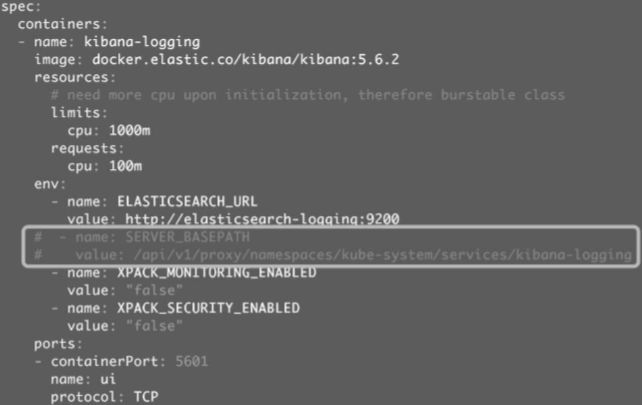

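One thing to note: because we will access Kibana through a NodePort later, the SERVER_BASEPATH environment variable in kibana-deployment.yaml must be commented out; otherwise Kibana cannot be reached.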
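The edit can be scripted. This is a sketch that assumes the variable appears as a `- name: SERVER_BASEPATH` line directly followed by its `value:` line (in the 1.12 manifest the value appears to be the API server proxy path, which does not match NodePort access). `comment_out_basepath` is a hypothetical helper and requires GNU sed.

```shell
#!/usr/bin/env bash
# Hypothetical helper: comment out the SERVER_BASEPATH env entry in a Kibana manifest.
# Assumes "- name: SERVER_BASEPATH" is immediately followed by its "value:" line. GNU sed.
comment_out_basepath() {
  local file=${1:-kibana-deployment.yaml}
  # On the matching line: prefix "#", then "n" prints it and loads the next line
  # (the value: line), which is prefixed with "#" as well.
  sed -i '/- name: SERVER_BASEPATH/{s/^/#/;n;s/^/#/}' "$file"
}
```

Run `comment_out_basepath kibana-deployment.yaml` before `kubectl apply`.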

[root@kube-master fluentd-elasticsearch]# kubectl apply -f ./

service/elasticsearch-logging created

serviceaccount/elasticsearch-logging created

clusterrole.rbac.authorization.k8s.io/elasticsearch-logging created

clusterrolebinding.rbac.authorization.k8s.io/elasticsearch-logging created

statefulset.apps/elasticsearch-logging created

configmap/fluentd-es-config-v0.1.6 created

serviceaccount/fluentd-es created

clusterrole.rbac.authorization.k8s.io/fluentd-es created

clusterrolebinding.rbac.authorization.k8s.io/fluentd-es created

daemonset.apps/fluentd-es-v2.2.1 created

deployment.apps/kibana-logging created

service/kibana-logging created

[root@kube-master fluentd-elasticsearch]# kubectl -n kube-system get pod -l "k8s-app=fluentd-es"

NAME READY STATUS RESTARTS AGE

fluentd-es-v2.2.0-96t57 1/1 Running 0 14m

fluentd-es-v2.2.0-kcknt 1/1 Running 0 14m

The fluentd-es DaemonSet collects logs from every node and sends them to Elasticsearch.
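Instead of re-running `kubectl get pod` until everything shows Running, one can block until the pods report Ready. A sketch using `kubectl wait`, which should be available from kubectl 1.11 onward; `wait_for_pods` is a hypothetical helper name and `k8s-app=fluentd-es` is the selector used above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: wait until all kube-system pods matching a label selector are Ready.
# Usage: wait_for_pods SELECTOR [TIMEOUT]   (timeout defaults to 300s)
wait_for_pods() {
  local selector=$1 timeout=${2:-300s}
  kubectl -n kube-system wait --for=condition=Ready pod -l "$selector" --timeout="$timeout"
}
```

For example `wait_for_pods k8s-app=fluentd-es`. For the Elasticsearch and Kibana pods, look up their labels first with `kubectl -n kube-system get pod --show-labels`.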

[root@kube-master fluentd-elasticsearch]# kubectl -n kube-system get statefulset

NAME DESIRED CURRENT AGE

elasticsearch-logging 2 2 16m

[root@kube-master fluentd-elasticsearch]# kubectl -n kube-system get pod

NAME READY STATUS RESTARTS AGE

elasticsearch-logging-0 1/1 Running 0 16m

elasticsearch-logging-1 1/1 Running 0 15m

fluentd-es-v2.2.0-96t57 1/1 Running 0 16m

fluentd-es-v2.2.0-kcknt 1/1 Running 0 16m

kibana-logging-76ff7dbb49-s5mbt 1/1 Running 0 16m

[root@kube-master fluentd-elasticsearch]# kubectl -n kube-system get service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

elasticsearch-logging NodePort 10.98.113.35 9200:32607/TCP 19m

kibana-logging NodePort 10.107.158.36 5601:30319/TCP 19m

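To make the services reachable from outside the cluster, either change their type to NodePort (as in the service list above) or expose them through kubectl proxy: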

nohup kubectl proxy --address='10.0.0.146' --port=8080 --accept-hosts='^*$' &
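For the NodePort route, services created with the default ClusterIP type can be switched without editing the YAML files. A sketch: `expose_as_nodeport` is a hypothetical helper that patches each service and prints its name together with the node port Kubernetes assigned to its first port.

```shell
#!/usr/bin/env bash
# Hypothetical helper: switch kube-system services to type NodePort and report the ports.
expose_as_nodeport() {
  local svc port
  for svc in "$@"; do
    kubectl -n kube-system patch service "$svc" -p '{"spec":{"type":"NodePort"}}' >/dev/null
    # read back the node port assigned to the service's first port
    port=$(kubectl -n kube-system get service "$svc" -o jsonpath='{.spec.ports[0].nodePort}')
    printf '%s %s\n' "$svc" "$port"
  done
}
```

For example `expose_as_nodeport elasticsearch-logging kibana-logging`.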

Using the NodePorts from the service list above, open http://10.0.0.146:32607/ to verify that Elasticsearch is working,

and open http://10.0.0.146:30319/ to access Kibana.
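A quick scripted check can use the Elasticsearch `_cluster/health` API. A sketch: `es_status` is a hypothetical helper, and its default URL is the example address and NodePort from above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print only the "status" field (green, yellow or red)
# of the Elasticsearch cluster health response.
es_status() {
  local base=${1:-http://10.0.0.146:32607}
  curl -s "$base/_cluster/health" | sed -n 's/.*"status":"\([a-z]*\)".*/\1/p'
}
```

`es_status` should print `green` (or `yellow`). `curl -s 'http://10.0.0.146:32607/_cat/indices?v'` lists the indices; with the add-on's default Fluentd configuration we would expect daily `logstash-*` indices, which is the index pattern to enter in Kibana.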