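corosync and openais can each provide cluster functionality on their own, but only in a basic form; a full-featured, complex cluster needs the two combined. Both mainly provide heartbeat detection and have no resource management capability.

pacemaker provides the resource management capability. It is a project that was split out of heartbeat as of heartbeat v3.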

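Requirements for a high-availability cluster:

Consistent hardware

Consistent software (operating system)

Synchronized time

Node names that resolve on every node

Case 1: corosync + openais + pacemaker + web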

1. Configure the two nodes according to the topology

Node 1:

IP: 192.168.2.10/24

Change the hostname

# vim /etc/sysconfig/network

NETWORKING=yes

NETWORKING_IPV6=no

HOSTNAME=node1.a.com

# hostname node1.a.com

Make the two nodes resolve each other's names

# vim /etc/hosts

127.0.0.1 localhost.localdomain localhost

::1 localhost6.localdomain6 localhost6

192.168.2.10 node1.a.com node1

192.168.2.20 node2.a.com node2

Node 2:

IP: 192.168.2.20/24

Change the hostname

# vim /etc/sysconfig/network

NETWORKING=yes

NETWORKING_IPV6=no

HOSTNAME=node2.a.com

# hostname node2.a.com

Make the two nodes resolve each other's names

# vim /etc/hosts

127.0.0.1 localhost.localdomain localhost

::1 localhost6.localdomain6 localhost6

192.168.2.10 node1.a.com node1

192.168.2.20 node2.a.com node2
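The same two entries have to be present in /etc/hosts on both nodes. A minimal sketch, assuming the addresses and names used in this case; `add_host_entry` is a hypothetical helper that appends a line only when the FQDN is not already listed, so it is safe to run more than once:

```sh
# add_host_entry FILE IP FQDN SHORT
# Appends "IP FQDN SHORT" to FILE unless FQDN already appears there as a whole word.
add_host_entry() {
    local file="$1" ip="$2" fqdn="$3" short="$4"
    # -F: treat the dots in the name literally; -w: match whole words only
    if grep -qwF -- "$fqdn" "$file" 2>/dev/null; then
        return 0    # already present: leave the file untouched
    fi
    printf '%s %s %s\n' "$ip" "$fqdn" "$short" >> "$file"
}

# Usage on each node (as root):
#   add_host_entry /etc/hosts 192.168.2.10 node1.a.com node1
#   add_host_entry /etc/hosts 192.168.2.20 node2.a.com node2
```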

2. On node 1 (node1), configure yum, then create a mount point and mount the installation DVD

# vim /etc/yum.repos.d/rhel-debuginfo.repo

[rhel-server]

name=Red Hat Enterprise Linux server

baseurl=file:///mnt/cdrom/Server

enabled=1

gpgcheck=1

gpgkey=file:///mnt/cdrom/RPM-GPG-KEY-redhat-release

[rhel-cluster]

name=Red Hat Enterprise Linux cluster

baseurl=file:///mnt/cdrom/Cluster

enabled=1

gpgcheck=1

gpgkey=file:///mnt/cdrom/RPM-GPG-KEY-redhat-release
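The repository file can also be written non-interactively. A sketch assuming the DVD is mounted at /mnt/cdrom as in this step; `write_repo` is a hypothetical helper, and the target path is a parameter so it can be tried on a scratch file first:

```sh
# write_repo FILE [MOUNTPOINT]
# Writes the two local-media repositories used in this case.
write_repo() {
    local file="$1" mnt="${2:-/mnt/cdrom}"
    # Unquoted EOF so that $mnt is expanded inside the here-document
    cat > "$file" <<EOF
[rhel-server]
name=Red Hat Enterprise Linux server
baseurl=file://$mnt/Server
enabled=1
gpgcheck=1
gpgkey=file://$mnt/RPM-GPG-KEY-redhat-release

[rhel-cluster]
name=Red Hat Enterprise Linux cluster
baseurl=file://$mnt/Cluster
enabled=1
gpgcheck=1
gpgkey=file://$mnt/RPM-GPG-KEY-redhat-release
EOF
}

# Usage: write_repo /etc/yum.repos.d/rhel-debuginfo.repo
```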

Mount the DVD

# mkdir /mnt/cdrom

# mount /dev/cdrom /mnt/cdrom/

3. On node 2, create the mount point and mount the DVD

# mkdir /mnt/cdrom

# mount /dev/cdrom /mnt/cdrom/

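4. Synchronize the clocks of the two nodes by running the following command on both. hwclock -s only sets each node's system clock from its own hardware clock, so this works only if the hardware clocks already agree; syncing both nodes against an NTP server is more reliable.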

# hwclock -s
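Because `hwclock -s` does not compare the two nodes, it is worth confirming that their clocks actually agree. A minimal sketch; `clock_skew_ok` and the 2-second tolerance are assumptions, and the remote time is read over ssh, so run it after the key exchange in step 7:

```sh
# clock_skew_ok LOCAL_EPOCH REMOTE_EPOCH MAX_SECONDS
# Succeeds when the two Unix timestamps differ by at most MAX_SECONDS.
clock_skew_ok() {
    local diff=$(( $1 - $2 ))
    if [ "$diff" -lt 0 ]; then
        diff=$(( -diff ))    # absolute difference
    fi
    [ "$diff" -le "$3" ]
}

# Usage on node1 (the ssh round trip itself adds a little delay):
#   clock_skew_ok "$(date +%s)" "$(ssh node2.a.com 'date +%s')" 2 || echo "clocks differ"
```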

5. Use public keys so the two nodes can log in to each other without a password

node1 generates its key pair:

# ssh-keygen -t rsa  // generate an RSA key pair

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):  // where the key is saved

Created directory '/root/.ssh'.

Enter passphrase (empty for no passphrase):  // passphrase protecting the private key (leave it empty for unattended logins)

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.  // private key

Your public key has been saved in /root/.ssh/id_rsa.pub.  // public key

The key fingerprint is:

be:35:46:8f:72:a8:88:1e:62:44:c0:a1:c2:0d:07:da root@node1.a.com

node2 generates its key pair:

# ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Created directory '/root/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

5e:4a:1e:db:69:21:4c:79:fa:59:08:83:61:6d:2e:4c root@node2.a.com

6. The public and private key files are now in /root/.ssh

# ll ~/.ssh/

-rw------- 1 root root 1675 10-20 10:37 id_rsa

-rw-r--r-- 1 root root 398 10-20 10:37 id_rsa.pub

7. Copy each node's public key to the other node; this asks for the other node's root password

On node1:

# ssh-copy-id -i /root/.ssh/id_rsa.pub node2.a.com

On node2:

# ssh-copy-id -i /root/.ssh/id_rsa.pub node1.a.com
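Since each node copies its key to the other, one command can be run unchanged on both nodes if the peer's name is derived from the local node name. A sketch assuming exactly the two names used here; `peer_of` is a hypothetical helper:

```sh
# peer_of NODENAME -> prints the other node of this two-node cluster
peer_of() {
    case "$1" in
        node1.a.com) echo node2.a.com ;;
        node2.a.com) echo node1.a.com ;;
        *) echo "unknown node: $1" >&2; return 1 ;;
    esac
}

# Usage, identical on both nodes:
#   ssh-copy-id -i /root/.ssh/id_rsa.pub "$(peer_of "$(uname -n)")"
#   # BatchMode makes ssh fail instead of prompting, so this proves key login works
#   ssh -o BatchMode=yes "$(peer_of "$(uname -n)")" true && echo "passwordless login works"
```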

8. Copy node1's yum configuration file to node2; this now works without a password prompt

# scp /etc/yum.repos.d/rhel-debuginfo.repo node2.a.com:/etc/yum.repos.d/

rhel-debuginfo.repo 100% 317 0.3KB/s 00:00

9. node 1 can now run commands on node 2 directly, for example to view node 2's IP settings

# ssh node2.a.com 'ifconfig'

10. Upload the required packages to node 1 and node 2 and install them on each node

cluster-glue-1.0.6-1.6.el5.i386.rpm

cluster-glue-libs-1.0.6-1.6.el5.i386.rpm

corosync-1.2.7-1.1.el5.i386.rpm

corosynclib-1.2.7-1.1.el5.i386.rpm

heartbeat-3.0.3-2.3.el5.i386.rpm

heartbeat-libs-3.0.3-2.3.el5.i386.rpm

libesmtp-1.0.4-5.el5.i386.rpm

openais-1.1.3-1.6.el5.i386.rpm

openaislib-1.1.3-1.6.el5.i386.rpm

pacemaker-1.1.5-1.1.el5.i386.rpm

pacemaker-cts-1.1.5-1.1.el5.i386.rpm

pacemaker-libs-1.1.5-1.1.el5.i386.rpm

perl-TimeDate-1.16-5.el5.noarch.rpm

resource-agents-1.0.4-1.1.el5.i386.rpm

# yum localinstall *.rpm -y --nogpgcheck  // install
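`yum localinstall *.rpm` silently installs whatever happens to be in the directory, so a missing upload only shows up later as a dependency error. A sketch that checks for the fourteen files listed above before installing; `missing_rpms` is a hypothetical helper:

```sh
# missing_rpms DIR -> prints every required package file absent from DIR;
# returns 0 only when all of them are present.
missing_rpms() {
    local dir="$1" rc=0 pkg
    for pkg in \
        cluster-glue-1.0.6-1.6.el5.i386.rpm \
        cluster-glue-libs-1.0.6-1.6.el5.i386.rpm \
        corosync-1.2.7-1.1.el5.i386.rpm \
        corosynclib-1.2.7-1.1.el5.i386.rpm \
        heartbeat-3.0.3-2.3.el5.i386.rpm \
        heartbeat-libs-3.0.3-2.3.el5.i386.rpm \
        libesmtp-1.0.4-5.el5.i386.rpm \
        openais-1.1.3-1.6.el5.i386.rpm \
        openaislib-1.1.3-1.6.el5.i386.rpm \
        pacemaker-1.1.5-1.1.el5.i386.rpm \
        pacemaker-cts-1.1.5-1.1.el5.i386.rpm \
        pacemaker-libs-1.1.5-1.1.el5.i386.rpm \
        perl-TimeDate-1.16-5.el5.noarch.rpm \
        resource-agents-1.0.4-1.1.el5.i386.rpm
    do
        if [ ! -f "$dir/$pkg" ]; then
            echo "$pkg"
            rc=1
        fi
    done
    return $rc
}

# Usage in the upload directory:
#   missing_rpms . && yum localinstall *.rpm -y --nogpgcheck
```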

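11. On node 1, go to corosync's configuration directory and create the configuration file from the sample

# cd /etc/corosync/

# ll

-rw-r--r-- 1 root root 5384 2010-07-28 amf.conf.example  // openais configuration sample

-rw-r--r-- 1 root root 436 2010-07-28 corosync.conf.example  // corosync configuration sample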

drwxr-xr-x 2 root root 4096 2010-07-28 service.d

drwxr-xr-x 2 root root 4096 2010-07-28 uidgid.d

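# cp corosync.conf.example corosync.conf  // create the main configuration file

12. Edit corosync.conf

# vim corosync.conf

compatibility: whitetank  // backward compatibility with openais whitetank

totem {  // heartbeat (totem protocol) settings

version: 2  // protocol version

secauth: off  // whether heartbeat messages are authenticated

threads: 0  // number of threads used to encrypt and send heartbeat messages; 0 means no threaded sending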

interface {

ringnumber: 0

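bindnetaddr: 192.168.2.10  // address of the heartbeat interface (corosync also accepts the network address, e.g. 192.168.2.0)

mcastaddr: 226.94.1.1  // multicast address

mcastport: 5405  // multicast port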

}

}

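logging {  // logging options

fileline: off

to_stderr: no  // whether to print log messages to standard error (the screen)

to_logfile: yes  // write log messages to the log file

to_syslog: yes  // also send log messages to syslog

logfile: /var/log/cluster/corosync.log  // log file path; the directory must be created manually (step 14)

debug: off

timestamp: on  // add timestamps to log messages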

logger_subsys {

subsys: AMF

debug: off

}

}

amf {  // openais AMF options

mode: disabled

}

In addition to the above, add the following sections to the file:

service {

ver: 0

name: pacemaker  // use pacemaker (with ver: 0, corosync starts it as a plugin)

}

aisexec {  // user and group that openais (aisexec) runs as

user: root

group: root

}

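13. Make the same change on node 2, except that bindnetaddr in the totem section must be 192.168.2.20 instead of 192.168.2.10; everything else matches node 1

Copy node1's /etc/corosync/corosync.conf directly to node2

# scp /etc/corosync/corosync.conf node2.a.com:/etc/corosync/

then edit the file on node2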
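Instead of editing node2's copy by hand, it can be derived from node1's file by rewriting only the bindnetaddr value. A sketch using sed; `derive_peer_conf` and the temporary path in the usage are hypothetical:

```sh
# derive_peer_conf SRC DST NEW_BINDNETADDR
# Copies a corosync.conf, replacing only the value on the bindnetaddr line
# (indentation and every other line are kept as they are).
derive_peer_conf() {
    sed "s/^\([[:space:]]*bindnetaddr:[[:space:]]*\)[0-9.]*/\1$3/" "$1" > "$2"
}

# Usage on node1:
#   derive_peer_conf /etc/corosync/corosync.conf /tmp/corosync.conf.node2 192.168.2.20
#   scp /tmp/corosync.conf.node2 node2.a.com:/etc/corosync/corosync.conf
```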

14. On both nodes, create the directory /var/log/cluster to hold corosync's logs

# mkdir /var/log/cluster

15. On one of the nodes, go to /etc/corosync/ and generate the authentication file authkey

# corosync-keygen

Corosync Cluster Engine Authentication key generator.

Gathering 1024 bits for key from /dev/random.

Press keys on your keyboard to generate entropy.

Press keys on your keyboard to generate entropy (bits = 936).

Press keys on your keyboard to generate entropy (bits = 1000).

Writing corosync key to /etc/corosync/authkey.

16. Copy the authentication file to the other node so that both nodes use the same key

# scp -p /etc/corosync/authkey node2.a.com:/etc/corosync/

17. Start the corosync service on node 1

# service corosync start

Starting Corosync Cluster Engine (corosync): [  OK  ]

From node 1, start the corosync service on node 2

# ssh node2.a.com 'service corosync start'

Starting Corosync Cluster Engine (corosync): [  OK  ]

18. Next, check for errors

Run the following commands on both nodes:

Check that corosync started normally

# grep -i -e "corosync cluster engine" -e "configuration file" /var/log/messages

Oct 20 14:01:58 localhost corosync[2069]: [MAIN ] Corosync Cluster Engine ('1.2.7'): started and ready to provide service.

Oct 20 14:01:58 localhost corosync[2069]: [MAIN ] Successfully read main configuration file '/etc/corosync/corosync.conf'.

Check that the heartbeat (totem) interface is up

# grep -i totem /var/log/messages

Oct 20 14:01:58 localhost corosync[2069]: [TOTEM ] The network interface [192.168.2.10] is now up.

Check for other errors

# grep -i error: /var/log/messages  // node 1 shows many STONITH-related errors; node 2 shows none

Oct 20 14:03:02 localhost pengine: [2079]: ERROR: unpack_resources: Resource start-up disabled since no STONITH resources have been defined

Oct 20 14:03:02 localhost pengine: [2079]: ERROR: unpack_resources: Either configure some or disable STONITH with the stonith-enabled option

Oct 20 14:03:02 localhost pengine: [2079]: ERROR: unpack_resources: NOTE: Clusters with shared data need STONITH to ensure data integrity

Oct 20 14:04:37 localhost pengine: [2079]: ERROR: unpack_resources: Resource start-up disabled since no STONITH resources have been defined

Oct 20 14:04:37 localhost pengine: [2079]: ERROR: unpack_resources: Either configure some or disable STONITH with the stonith-enabled option

Oct 20 14:04:37 localhost pengine: [2079]: ERROR: unpack_resources: NOTE: Clusters with shared data need STONITH to ensure data integrity

Check that pacemaker has started

# grep -i pcmk_startup /var/log/messages

Oct 20 14:01:59 localhost corosync[2069]: [pcmk ] info: pcmk_startup: CRM: Initialized

Oct 20 14:01:59 localhost corosync[2069]: [pcmk ] Logging: Initialized pcmk_startup
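The four greps above can be bundled into one check that runs on each node. A sketch; `check_cluster_log` is a hypothetical helper, and the patterns are taken from the log lines shown above. It only counts errors instead of failing on them, because the STONITH errors are expected until STONITH is disabled in step 19:

```sh
# check_cluster_log LOGFILE
# Prints one verdict per check; returns non-zero if engine, totem or pacemaker
# did not report a successful start.
check_cluster_log() {
    local log="$1" rc=0
    if grep -qi 'corosync cluster engine.*started and ready' "$log"; then
        echo "engine: started"
    else
        echo "engine: NOT started"; rc=1
    fi
    if grep -qi 'TOTEM.*is now up' "$log"; then
        echo "totem: interface up"
    else
        echo "totem: interface NOT up"; rc=1
    fi
    if grep -qi 'pcmk_startup' "$log"; then
        echo "pacemaker: started"
    else
        echo "pacemaker: NOT started"; rc=1
    fi
    # grep -c prints 0 when nothing matches
    echo "errors: $(grep -ci 'error:' "$log")"
    return $rc
}

# Usage: check_cluster_log /var/log/messages
```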

Finally, check the cluster status

# crm status

============

Last updated: Sat Oct 20 14:24:26 2012

Stack: openais

Current DC: node1.a.com - partition with quorum

Version: 1.1.5-1.1.el5-01e86afaaa6d4a8c4836f68df80ababd6ca3902f

2 Nodes configured, 2 expected votes

0 Resources configured.

============

Online: [ node1.a.com node2.a.com ]  // both nodes are online
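When scripting this check, the online nodes can be pulled out of the `Online: [ ... ]` line of `crm status`. A sketch assuming that exact line format (as printed by the Pacemaker 1.1.5 shown here); `nodes_online` is a hypothetical helper:

```sh
# nodes_online  (reads `crm status` output on stdin)
# Prints the node names from the "Online: [ ... ]" line, one per line.
nodes_online() {
    sed -n 's/^Online: \[ \(.*\) ][[:space:]]*$/\1/p' | tr ' ' '\n' | sed '/^$/d'
}

# Usage: crm status | nodes_online
#        [ "$(crm status | nodes_online | wc -l)" -eq 2 ] && echo "both nodes online"
```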

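19. On node 1, disable STONITH (no STONITH device is configured in this case, which is what the errors above complain about)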

# crm

crm(live)# configure

crm(live)configure# property stonith-enabled=false

crm(live)configure# commit

crm(live)configure# show

node node1.a.com

node node2.a.com

property $id="cib-bootstrap-options" \

dc-version="1.1.5-1.1.el5-01e86afaaa6d4a8c4836f68df80ababd6ca3902f" \

cluster-infrastructure="openais" \

expected-quorum-votes="2" \

stonith-enabled="false"

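20. Define resources on node 1

There are four resource types:

primitive  a local primary resource (runs on only one node at a time)

group  a group resource: resources added to a group always run together on the same node (for example an IP address and a service)

clone  a resource that runs on several nodes at the same time with no master/slave distinction (for example OCFS or STONITH)

master  a resource with master/slave roles, such as DRBD

RA (resource agent) classes: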

crm(live)ra# classes

heartbeat

lsb

ocf / heartbeat pacemaker  // OCF agents have two providers: heartbeat and pacemaker

stonith

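Resources:

Each RA class provides a different set of agents; in crm's ra mode, "list <class>" shows the agents a class supports

The syntax is:

<resource type> <resource name> <RA class>:[<provider>:]<agent> params <parameters>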

crm(live)configure# primitive webip ocf:heartbeat:IPaddr params ip=192.168.2.100

crm(live)configure# commit  // commit

21. Check the cluster status on node 1

# crm

crm(live)# status

Stack: openais

Current DC: node1.a.com - partition with quorum

Version: 1.1.5-1.1.el5-01e86afaaa6d4a8c4836f68df80ababd6ca3902f

2 Nodes configured, 2 expected votes

1 Resources configured.

Online: [ node1.a.com node2.a.com ]

webip (ocf::heartbeat:IPaddr): Started node1.a.com  // the webip resource runs on node 1

Check the IP addresses:

[root@node1 ~]# ifconfig

eth0:0 inet addr:192.168.2.100  // the virtual IP address is on node 1

On node 2:

crm(live)# status

Stack: openais

Current DC: node1.a.com - partition with quorum

Version: 1.1.5-1.1.el5-01e86afaaa6d4a8c4836f68df80ababd6ca3902f

2 Nodes configured, 2 expected votes

1 Resources configured.

Online: [ node1.a.com node2.a.com ]

webip (ocf::heartbeat:IPaddr): Started node1.a.com
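The node a resource runs on can also be read from `crm status` in a script, which is handy for the failover tests later on. A sketch assuming the `name (agent): Started node` line format shown above; `resource_node` is a hypothetical helper:

```sh
# resource_node NAME  (reads `crm status` output on stdin)
# Prints the node on which resource NAME is reported as Started (nothing otherwise).
resource_node() {
    # Fields: NAME (class:agent): Started NODE ; leading indentation is ignored by awk
    awk -v r="$1" '$1 == r && $(NF-1) == "Started" { print $NF }'
}

# Usage: crm status | resource_node webip
```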

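22. Define the service. Install httpd on both nodes, and make sure httpd is stopped and not set to start at boot, because only the cluster should start it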

# yum install httpd -y
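The stop and disable steps asked for above can be done for both nodes from node1. A sketch assuming RHEL 5's `service` and `chkconfig` tools; `prepare_httpd` is hypothetical, and the `RUN` variable is an assumed dry-run hook (set `RUN=echo` to print the commands instead of running them). The local node is handled without ssh because step 7 only installs each key on the peer:

```sh
# prepare_httpd HOST...
# Installs httpd on each host, stops it and disables it at boot.
prepare_httpd() {
    local cmd='yum install -y httpd; service httpd stop; chkconfig httpd off'
    local host
    for host in "$@"; do
        if [ "$host" = "$(uname -n)" ]; then
            $RUN sh -c "$cmd"          # local node: no ssh needed
        else
            $RUN ssh "$host" "$cmd"    # peer: uses the key from step 7
        fi
    done
}

# Usage on node1: prepare_httpd node1.a.com node2.a.com
```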

Because httpd may run on only one node at a time, its resource type is primitive

crm(live)configure# primitive webserver lsb:httpd

crm(live)configure# show

node node1.a.com

node node2.a.com

primitive webip ocf:heartbeat:IPaddr \

params ip="192.168.2.100"  // IP resource

primitive webserver lsb:httpd  // httpd resource

property $id="cib-bootstrap-options" \

dc-version="1.1.5-1.1.el5-01e86afaaa6d4a8c4836f68df80ababd6ca3902f" \

cluster-infrastructure="openais" \

expected-quorum-votes="2" \

stonith-enabled="false"

Commit:

crm(live)configure# commit

23. The cluster status now shows webip on node 1 and httpd on node 2

crm(live)# status

Stack: openais

Current DC: node1.a.com - partition with quorum

Version: 1.1.5-1.1.el5-01e86afaaa6d4a8c4836f68df80ababd6ca3902f

2 Nodes configured, 2 expected votes

2 Resources configured.  // two resources are defined

Online: [ node1.a.com node2.a.com ]

webip (ocf::heartbeat:IPaddr): Started node1.a.com  // webip is on node 1

webserver (lsb:httpd): Started node2.a.com  // httpd is on node 2

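24. At this point node1 holds the virtual IP address while node2 runs httpd, so clients cannot reach the web service through the virtual IP. Create a group resource and add webip and webserver to it; resources in the same group are placed on the same node

group <group name> <resource 1> <resource 2>

crm(live)configure# group web webip webserver

crm(live)configure# commit  // commit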

crm(live)configure# show

node node1.a.com

node node2.a.com

primitive webip ocf:heartbeat:IPaddr \

params ip="192.168.2.100"

primitive webserver lsb:httpd

group web webip webserver

property $id="cib-bootstrap-options" \

dc-version="1.1.5-1.1.el5-01e86afaaa6d4a8c4836f68df80ababd6ca3902f" \

cluster-infrastructure="openais" \

expected-quorum-votes="2" \

stonith-enabled="false"

25. Check the cluster status again: both resources are on node 1

crm(live)# status

Last updated: Sat Oct 20 16:39:37 2012

Stack: openais

Current DC: node1.a.com - partition with quorum

Version: 1.1.5-1.1.el5-01e86afaaa6d4a8c4836f68df80ababd6ca3902f

2 Nodes configured, 2 expected votes

1 Resources configured.

Online: [ node1.a.com node2.a.com ]

Resource Group: web

webip (ocf::heartbeat:IPaddr): Started node1.a.com

webserver (lsb:httpd): Started node1.a.com

26. The IP address and the httpd service are now both on node 1

[root@node1 ~]# service httpd status

httpd (pid 2800) is running...

[root@node1 ~]# ifconfig eth0:0

eth0:0 Link encap:Ethernet HWaddr 00:0C:29:37:3F:E6

inet addr:192.168.2.100 Bcast:192.168.2.255 Mask:255.255.255.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

Interrupt:67 Base address:0x2024

27. Create a web page on each node

node1:

# echo "node1" > /var/www/html/index.html

From node1, create node2's page directly

# ssh node2.a.com 'echo "node2" > /var/www/html/index.html'

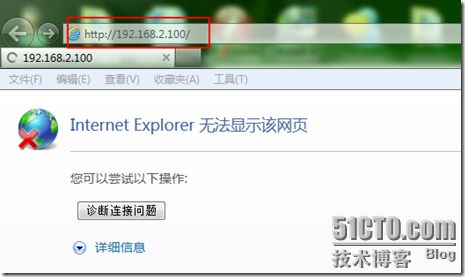

28. Open http://192.168.2.100 in a browser

29. node1's page is shown. Now simulate a failure of node1

[root@node1 ~]# service corosync stop

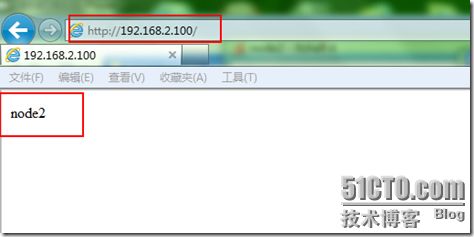

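Signaling Corosync Cluster Engine (corosync) to terminate: [  OK  ]

Waiting for corosync services to unload:........ [  OK  ]

Access the IP address again: the page can no longer be reached

30. Check the cluster status on node 2: webip and webserver are no longer shown as running on any node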

[root@node2 ~]# crm

crm(live)# status

Last updated: Sat Oct 20 16:55:16 2012

Stack: openais

Current DC: node2.a.com - partition WITHOUT quorum  // node2 is now the DC (designated coordinator), but its partition has no quorum

Version: 1.1.5-1.1.el5-01e86afaaa6d4a8c4836f68df80ababd6ca3902f

2 Nodes configured, 2 expected votes

1 Resources configured.

Online: [ node2.a.com ]

OFFLINE: [ node1.a.com ]

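31. A two-node cluster loses quorum as soon as one node fails, so change the no-quorum policy; here we choose ignore

Possible values of no-quorum-policy when a partition does not hold more than half of the votes:

ignore  ignore the loss of quorum and keep managing resources

freeze  resources that are already running keep running, but stopped resources cannot be started

stop  stop all resources in the partition (the default)

suicide  fence all nodes in the partition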
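The "partition WITHOUT quorum" message in step 30 follows from simple arithmetic: quorum requires a strict majority of the expected votes, and the surviving node holds 1 of 2, which is only half. A sketch of that rule; `has_quorum` is a hypothetical name, since corosync and pacemaker compute this internally:

```sh
# has_quorum VOTES EXPECTED_VOTES
# A partition has quorum only if it holds more than half of the expected votes.
has_quorum() {
    [ $(( $1 * 2 )) -gt "$2" ]
}

# Two-node case from step 30:
#   has_quorum 1 2 || echo "WITHOUT quorum"
# With three nodes, two survivors would still have quorum:
#   has_quorum 2 3 && echo "with quorum"
```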

32. Start node1's corosync service again, then change the quorum policy

# service corosync start

crm(live)configure# property no-quorum-policy=ignore

crm(live)configure# commit

33. Stop node1's corosync service again and check the status on node2

# service corosync stop  // stop the service on node1

Signaling Corosync Cluster Engine (corosync) to terminate: [  OK  ]

Waiting for corosync services to unload:....... [  OK  ]

Cluster status on node2:

[root@node2 ~]# crm status

Stack: openais

Current DC: node2.a.com - partition WITHOUT quorum

Version: 1.1.5-1.1.el5-01e86afaaa6d4a8c4836f68df80ababd6ca3902f

2 Nodes configured, 2 expected votes

1 Resources configured.

Online: [ node2.a.com ]

OFFLINE: [ node1.a.com ]

Resource Group: web

webip (ocf::heartbeat:IPaddr): Started node2.a.com

webserver (lsb:httpd): Started node2.a.com

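34. Browsing to 192.168.2.100 now shows node 2's page

35. If node 1's corosync service is then started again

[root@node1 ~]# service corosync start

node 1 does not take the resources back; they stay on node 2 until node 2 fails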

[root@node1 ~]# crm status

Last updated: Sat Oct 20 17:17:24 2012

Stack: openais

Current DC: node2.a.com - partition with quorum

Version: 1.1.5-1.1.el5-01e86afaaa6d4a8c4836f68df80ababd6ca3902f

2 Nodes configured, 2 expected votes

1 Resources configured.

Online: [ node1.a.com node2.a.com ]

Resource Group: web

webip (ocf::heartbeat:IPaddr): Started node2.a.com

webserver (lsb:httpd): Started node2.a.com

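DRBD configuration

36. Partition the disk on both nodes; the new partitions on the two nodes must be exactly the same size.

Perform the following on both nodes

# fdisk /dev/sda

Command (m for help): p  // print the current partition table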

Disk /dev/sda: 21.4 GB, 21474836480 bytes

255 heads, 63 sectors/track, 2610 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/sda1 * 1 13 104391 83 Linux

/dev/sda2 14 1288 10241437+ 83 Linux

/dev/sda3 1289 1415 1020127+ 82 Linux swap / Solaris

Command (m for help): n  // add a partition

Command action

e extended

p primary partition (1-4)

e  // create an extended partition

Selected partition 4

First cylinder (1416-2610, default 1416):  // first cylinder

Using default value 1416

Last cylinder or +size or +sizeM or +sizeK (1416-2610, default 2610):  // last cylinder

Using default value 2610

Command (m for help): n  // add another partition (now a logical partition by default)

First cylinder (1416-2610, default 1416):  // first cylinder

Using default value 1416

Last cylinder or +size or +sizeM or +sizeK (1416-2610, default 2610): +1G  // size 1 GB

Command (m for help): p  // print the partition table again

Disk /dev/sda: 21.4 GB, 21474836480 bytes

255 heads, 63 sectors/track, 2610 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/sda1 * 1 13 104391 83 Linux

/dev/sda2 14 1288 10241437+ 83 Linux

/dev/sda3 1289 1415 1020127+ 82 Linux swap / Solaris

/dev/sda4 1416 2610 9598837+ 5 Extended

/dev/sda5 1416 1538 987966 83 Linux

Command (m for help): w  // write the partition table and exit

The partition table has been altered!

Calling ioctl() to re-read partition table.

WARNING: Re-reading the partition table failed with error 16: Device or resource busy.

The kernel still uses the old table.

The new table will be used at the next reboot.

Syncing disks.

37. Make the kernel re-read the partition table (do the same on both nodes)

# partprobe /dev/sda

# cat /proc/partitions

major minor #blocks name

8 0 20971520 sda

8 1 104391 sda1

8 2 10241437 sda2

8 3 1020127 sda3

8 4 0 sda4

8 5 987966 sda5
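Since the partitions must be the same size on both nodes, the block counts in /proc/partitions can be compared directly. A sketch; `partition_blocks` is a hypothetical helper, and the usage assumes the ssh keys from step 7:

```sh
# partition_blocks NAME  (reads /proc/partitions on stdin)
# Prints the size in 1 KiB blocks of partition NAME, e.g. sda5.
partition_blocks() {
    # Columns: major minor #blocks name
    awk -v p="$1" '$4 == p { print $3 }'
}

# Usage on node1:
#   local_sz=$(partition_blocks sda5 < /proc/partitions)
#   peer_sz=$(ssh node2.a.com cat /proc/partitions | partition_blocks sda5)
#   [ "$local_sz" = "$peer_sz" ] && echo "sda5 sizes match: $local_sz blocks"
```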

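38. Upload the DRBD userland package and the kernel module package. The current kernel is 2.6.18, and DRBD's kernel code was only merged into the mainline kernel in 2.6.33, but DRBD can be loaded into this kernel as a module. Install both packages

drbd83-8.3.8-1.el5.centos.i386.rpm  // DRBD userland tools

kmod-drbd83-8.3.8-1.el5.centos.i686.rpm  // kernel module

# yum localinstall drbd83-8.3.8-1.el5.centos.i386.rpm kmod-drbd83-8.3.8-1.el5.centos.i686.rpm -y --nogpgcheck

39. Run the following commands on both nodes

# modprobe drbd  // load the kernel module

# lsmod | grep drbd  // check that the module is loaded

40. On both nodes, edit the DRBD configuration file /etc/drbd.conf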

#

# You can find an example in /usr/share/doc/drbd.../drbd.conf.example

include "drbd.d/global_common.conf";  // include the global and common settings

include "drbd.d/*.res";  // include the resource files

# please have a a look at the example configuration file in

# /usr/share/doc/drbd83/drbd.conf

41. On both nodes, edit global_common.conf; back it up first

# cd /etc/drbd.d/

# cp -p global_common.conf global_common.conf.bak

# vim global_common.conf

global {

usage-count no;  // do not take part in LINBIT's online usage counter

# minor-count dialog-refresh disable-ip-verification

}

common {

protocol C;  // synchronous replication: a write completes only once it is on the peer's disk

handlers {

# fence-peer "/usr/lib/drbd/crm-fence-peer.sh";

# split-brain "/usr/lib/drbd/notify-split-brain.sh root";

# out-of-sync "/usr/lib/drbd/notify-out-of-sync.sh root";

# before-resync-target "/usr/lib/drbd/snapshot-resync-target-lvm.sh -p 15 -- -c 16k";

# after-resync-target /usr/lib/drbd/unsnapshot-resync-target-lvm.sh;

}

startup {  // startup timeouts: how long to wait for the peer

wfc-timeout 120;

degr-wfc-timeout 120;

}

disk {

on-io-error detach;  // detach the backing disk on an I/O error

fencing resource-only;

}

net {

cram-hmac-alg "sha1";  // authenticate the peer with HMAC-SHA1

shared-secret "abc";  // shared secret; must be identical on both nodes

}

syncer {

rate 100M;  // resynchronization rate

}

}

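42. On both nodes, edit the resource file. The file name is up to you, but it must not contain spaces and must end in .res, because drbd.conf includes drbd.d/*.res

# vim /etc/drbd.d/web.res

resource web {  // resource name

on node1.a.com {  // settings for node1.a.com (must match its uname -n)

device /dev/drbd0;  // DRBD device name, under /dev/

disk /dev/sda5;  // backing device: the local partition replicated between the nodes

address 192.168.2.10:7789;  // node 1's IP address and port

meta-disk internal;  // keep DRBD metadata on the backing device itself

}

on node2.a.com {  // settings for node2.a.com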

device /dev/drbd0;

disk /dev/sda5;

address 192.168.2.20:7789;

meta-disk internal;

}

}
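Because the same resource file must exist on both nodes, it can be written from a script and then syntax-checked with `drbdadm dump web`, which parses the configuration and prints it back. A sketch using the device, disk, addresses and port from this step; `write_drbd_res` is a hypothetical helper, and the block is only valid with the final closing brace of `resource web`:

```sh
# write_drbd_res FILE
# Writes the "web" resource used in this case. The names after "on" must match
# `uname -n` on each node.
write_drbd_res() {
    # Quoted 'EOF': the here-document is written verbatim
    cat > "$1" <<'EOF'
resource web {
    on node1.a.com {
        device    /dev/drbd0;
        disk      /dev/sda5;
        address   192.168.2.10:7789;
        meta-disk internal;
    }
    on node2.a.com {
        device    /dev/drbd0;
        disk      /dev/sda5;
        address   192.168.2.20:7789;
        meta-disk internal;
    }
}
EOF
}

# Usage on both nodes:
#   write_drbd_res /etc/drbd.d/web.res && drbdadm dump web
```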

43. Initialize the resource web on both nodes

# drbdadm create-md web  // create the DRBD metadata for resource web

Writing meta data...

initializing activity log

NOT initialized bitmap

New drbd meta data block successfully created.

44. Start the drbd service on both nodes

# service drbd start

Starting DRBD resources: [

web

Found valid meta data in the expected location, 1011671040 bytes into /dev/sda5.

d(web) n(web) ]...

45. Check which node is currently active (primary)

# cat /proc/drbd

version: 8.3.8 (api:88/proto:86-94)

GIT-hash: d78846e52224fd00562f7c225bcc25b2d422321d build by [email protected], 2010-06-04 08:04:16

0: cs:Connected ro:Secondary/Secondary ds:Inconsistent/Inconsistent C r----

ns:0 nr:0 dw:0 dr:0 al:0 bm:0 lo:0 pe:0 ua:0 ap:0 ep:1 wo:b oos:987896

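The ro field shows <local role>/<peer role>: both nodes are Secondary, so neither can use the device yet

Alternatively, use drbd-overview to view the current state

# drbd-overview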

0:web Connected Secondary/Secondary Inconsistent/Inconsistent C r----
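The role and disk-state fields can also be read from /proc/drbd in a script, for example to wait until the initial synchronization started in the next step has finished. A sketch assuming the DRBD 8.3 /proc/drbd format shown above and minor number 0; `drbd_field` and `drbd_synced` are hypothetical helpers:

```sh
# drbd_field KEY  (reads /proc/drbd on stdin)
# Prints the value of a status field such as cs, ro or ds for minor 0.
drbd_field() {
    awk -v k="$1" '/^ *0:/ {
        for (i = 1; i <= NF; i++)
            if (index($i, k ":") == 1) { print substr($i, length(k) + 2); exit }
    }'
}

# drbd_synced: succeeds once both sides report UpToDate
drbd_synced() {
    [ "$(drbd_field ds)" = "UpToDate/UpToDate" ]
}

# Usage:
#   drbd_field ro < /proc/drbd                      # e.g. Secondary/Secondary
#   until drbd_synced < /proc/drbd; do sleep 10; done
```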

46. On node 1, make this node the primary

# drbdadm -- --overwrite-data-of-peer primary web

# drbd-overview  // this node is now primary and 3.4% synchronized

0:web SyncSource Primary/Secondary UpToDate/Inconsistent C r----

[>....................] sync'ed: 3.4% (960376/987896)K delay_probe: 87263

On node 2:

# drbd-overview

0:web SyncTarget Secondary/Primary Inconsistent/UpToDate C r----

[=>..................] sync'ed: 10.0% (630552/692984)K queue_delay: 0.0 ms

47. On node 1, create a filesystem on the DRBD device

# mkfs -t ext3 -L drbdweb /dev/drbd0

48. On node 1, create a mount point and mount /dev/drbd0 on it

# mkdir /mnt/web

# mount /dev/drbd0 /mnt/web

49. Make node1 secondary and node2 primary; on node1 run

# drbdadm secondary web

0: State change failed: (-12) Device is held open by someone  // the device is still in use (mounted)

Command 'drbdsetup 0 secondary' terminated with exit code 11

Unmount it first, then run the command again

# umount /mnt/web/

# drbdadm secondary web

50. Check node1's state: both nodes are now secondary

# drbd-overview

0:web Connected Secondary/Secondary UpToDate/UpToDate C r----

51. On node 2, make this node the primary

# drbdadm primary web

# drbd-overview  // this node is now primary

0:web Connected Primary/Secondary UpToDate/UpToDate C r----

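52. On node 2, format /dev/drbd0 (optional: the filesystem created on node1 has already been replicated to node2, so this only recreates it)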

# mkfs -t ext3 -L drbdweb /dev/drbd0

53. On node 2, create the mount point and mount /dev/drbd0

# mkdir /mnt/web

# mount /dev/drbd0 /mnt/web  // this fails unless node 2 is primary

54. On node 1, set the default resource stickiness

crm(live)configure# rsc_defaults resource-stickiness=100

crm(live)configure# commit

55. On node 1, define the DRBD resource

crm(live)configure# primitive webdrbd ocf:heartbeat:drbd params drbd_resource=web op monitor role=Master interval=50s timeout=30s op monitor role=Slave interval=60s timeout=30s

56. Create a master/slave resource and add webdrbd to it

crm(live)configure# master MS_Webdrbd webdrbd meta master-max="1" master-node-max="1" clone-max="2" clone-node-max="1" notify="true"

57. Create a cluster resource that automatically mounts the web DRBD device on the Primary node

crm(live)configure# primitive WebFS ocf:heartbeat:Filesystem params device="/dev/drbd0" directory="/mnt/web" fstype="ext3"

58. Require WebFS to run on the DRBD master and to start only after the promotion

crm(live)configure# colocation WebFS_on_MS_webdrbd inf: WebFS MS_Webdrbd:Master

crm(live)configure# order WebFS_after_MS_Webdrbd inf: MS_Webdrbd:promote WebFS:start

crm(live)configure# verify

crm(live)configure# commit

59. Make node 1 the primary (drbdadm primary web), then mount /dev/drbd0 on /mnt/web. Change into /mnt/web and create the directory html

60. On node1, edit httpd.conf

# vim /etc/httpd/conf/httpd.conf

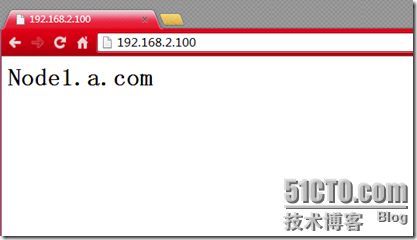

DocumentRoot "/mnt/web/html"

# echo "Node1.a.org" > /mnt/web/html/index.html

# crm configure primitive WebSite lsb:httpd  // add httpd as a resource

# crm configure colocation website-with-ip INFINITY: WebSite webip  // keep the IP address and the web service on the same host

# crm configure order httpd-after-ip mandatory: webip WebSite  // define the resource start order