Web Crawler Final Project

Scraping Xici Proxy (西刺代理)

Generating request headers

# -*- coding: utf-8 -*-

__all__ = ("Header",)

import random


class Header(object):
    '''Builds request headers with a randomly chosen User-Agent.'''

    # Shared singleton instance. A single leading underscore avoids name
    # mangling, which made hasattr(cls, "__instance") always return False.
    _instance = None

    def __init__(self):
        self.__user_agent = [
            "Mozilla/4.0 (compatible; MSIE 8.0; Windows NT 6.0)",  # IE
            "Mozilla/5.0 (Windows NT 6.1; WOW64; rv:34.0) Gecko/20100101 Firefox/34.0",  # Firefox
            "Mozilla/5.0 (Windows; U; Windows NT 6.1; zh-CN) AppleWebKit/534.16 (KHTML, like Gecko) Chrome/10.0.648.133 Safari/534.16",  # Chrome
            "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.11 (KHTML, like Gecko) Chrome/20.0.1132.11 TaoBrowser/2.0 Safari/536.11",  # Taobao Browser
            "Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; QQDownload 732; .NET4.0C; .NET4.0E; LBBROWSER)",  # Liebao (Cheetah) Browser
            "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/30.0.1599.101 Safari/537.36",  # 360
            "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/534.57.2 (KHTML, like Gecko) Version/5.1.7 Safari/534.57.2",  # Safari
            "Mozilla/5.0 (Windows NT 5.1) AppleWebKit/535.11 (KHTML, like Gecko) Chrome/17.0.963.84 Safari/535.11 SE 2.X MetaSr 1.0",  # Sogou
            "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) ",  # Maxthon
            "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/38.0.2125.122 UBrowser/4.0.3214.0 Safari/537.36",  # UC
        ]

    @property
    def headers(self):
        '''Return a spoofed request header dict.'''
        return {"User-agent": self.user_agent}

    @property
    def user_agent(self):
        '''Return one User-Agent string picked at random.'''
        return random.choice(self.__user_agent)

    def __new__(cls):
        '''Create this class as a singleton.'''
        if cls._instance is None:
            cls._instance = super().__new__(cls)
        return cls._instance
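
One subtlety of a `__new__`-based singleton is worth spelling out. Inside a class body, an attribute written as `cls.__instance` is name-mangled to `_ClassName__instance`, but the string passed to `hasattr` is not mangled, so a check such as `hasattr(cls, "__instance")` never finds it and a new object is created on every call. The sketch below uses two hypothetical classes, `Mangled` and `Single`, which are not part of the original code, to show the difference:

```python
class Mangled(object):
    """Hypothetical class that uses the double-underscore check."""

    def __new__(cls):
        # cls.__instance is stored as cls._Mangled__instance, while the
        # string "__instance" is looked up literally, so this is always True.
        if not hasattr(cls, "__instance"):
            cls.__instance = super().__new__(cls)
        return cls.__instance


class Single(object):
    """Hypothetical class that uses a single-underscore attribute."""

    _instance = None

    def __new__(cls):
        if cls._instance is None:
            cls._instance = super().__new__(cls)
        return cls._instance


if __name__ == "__main__":
    a, b = Mangled(), Mangled()
    c, d = Single(), Single()
    print(a is b)  # False: two separate objects were created
    print(c is d)  # True: the same object is returned both times
```

Note that `__init__` still runs on every call even when `__new__` returns the cached object, which is harmless for a class that only rebuilds a constant list.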

IP model classes

# -*- coding: utf-8 -*-

__all__ = ("IP_Model", "IP_List")


class IP_Model(object):
    '''Holds every field scraped for one proxy IP.'''

    def __init__(self):
        # Initialise every field so that reading a property before it is
        # set returns None instead of raising AttributeError.
        self._country = None
        self._ip = None
        self._port = None
        self._addres = None
        self._http_type = None
        self._velocity = None
        self._anonymous = None

    @property
    def country(self):
        '''Country where the proxy server is located.'''
        return self._country

    @country.setter
    def country(self, ip_country):
        self._country = ip_country

    @property
    def ip(self):
        '''IP address of the proxy server.'''
        return self._ip

    @ip.setter
    def ip(self, new_ip):
        self._ip = new_ip

    @property
    def port(self):
        '''Port number to connect to.'''
        return self._port

    @port.setter
    def port(self, new_port):
        self._port = new_port

    @property
    def addres(self):
        '''Province where the server is located.'''
        return self._addres

    @addres.setter
    def addres(self, new_addres):
        self._addres = new_addres

    @property
    def http_type(self):
        '''Request type (HTTP or HTTPS).'''
        return self._http_type

    @http_type.setter
    def http_type(self, new_type):
        self._http_type = new_type

    @property
    def velocity(self):
        '''Server speed.'''
        return self._velocity

    @velocity.setter
    def velocity(self, http_velocity):
        self._velocity = http_velocity

    @property
    def anonymous(self):
        '''True if the proxy is listed as high-anonymity.'''
        return self._anonymous

    @anonymous.setter
    def anonymous(self, anonymous_text):
        # "高匿" is the site's label for a high-anonymity proxy.
        self._anonymous = (anonymous_text == "高匿")

    def __str__(self):
        '''
        Override __str__.
        :return: a formatted string built from the IP_Model attributes
        '''
        return (
            "| country: {} |\n"
            "| ip: {} |\n"
            "| port: {} |\n"
            "| address: {} |\n"
            "| http_type: {} |\n"
            "| velocity: {}|\n"
            .format(self.country, self.ip, self.port, self.addres, self.http_type, self.velocity)
        )

    def to_dict(self):
        '''Return the fields as a plain dict.'''
        return {
            "country": self.country,
            "ip": self.ip,
            "port": self.port,
            "addres": self.addres,
            "http_type": self.http_type,
            "velocity": self.velocity,
        }

    def from_dict(self, data):
        '''Fill the fields from a dict produced by to_dict.'''
        self.country = data.get("country")
        self.ip = data.get("ip")
        # The original called dict("port"), which raises; .get() was intended.
        self.port = data.get("port")
        self.addres = data.get("addres")
        self.http_type = data.get("http_type")
        self.velocity = data.get("velocity")

    def get_ip_proxies(self):
        '''Return a proxies dict in the form that requests expects.'''
        # Compare case-insensitively, since the scraped value may be upper case.
        if str(self.http_type).lower() == "https":
            return {"https": "{}:{}".format(self.ip, self.port)}
        return {"http": "{}:{}".format(self.ip, self.port)}

class IP_List(object):

    def __init__(self):
        self.http_list = None
        self.https_list = None

Saving to CSV

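# -*- coding: utf-8 -*-

import os

import pandas

'''
Data-saving helpers used by simple_proxy.
'''


def to_pandas_DataFrame(ips_list):
    '''
    Adapt the data for pandas by converting a list of IP_Model objects
    into a DataFrame.
    :param ips_list: list of IP_Model objects
    :return: a pandas DataFrame with one row per proxy
    '''
    return pandas.DataFrame([ip_model.to_dict() for ip_model in ips_list])


def to_csv(dicts):
    path = "./ips_info.csv"
    # "ANSI" is not a portable codec name, so utf-8-sig is used here instead
    # (an assumption; choose whatever encoding your CSV consumer expects).
    # mode="a" appends on every call, so the header row is written only
    # when the file does not exist yet.
    to_pandas_DataFrame(dicts).to_csv(
        path, mode="a", encoding="utf-8-sig",
        header=not os.path.exists(path),
    )


def read_csv(path, start, step):
    '''
    Read the given number of IP rows from the CSV, starting at the given row.
    :param path: path of the CSV file
    :param start: first row to read
    :param step: number of rows to read per call
    :return: the corresponding ip_list
    '''
    pass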
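
`read_csv` is left as a stub in this module. One possible way to fill it in, shown here as a sketch with the standard `csv` module rather than pandas, is the hypothetical helper `read_csv_rows` below. It assumes the file is UTF-8 encoded, has a single header row, and that `start` counts data rows from 0; none of these are stated in the original.

```python
import csv
from itertools import islice


def read_csv_rows(path, start, step):
    """Return up to `step` data rows as dicts, skipping the first `start` rows.

    Hypothetical helper: assumes one header row and 0-based `start`.
    """
    # utf-8-sig also reads plain UTF-8 files that have no byte-order mark.
    with open(path, newline="", encoding="utf-8-sig") as handle:
        reader = csv.DictReader(handle)
        # islice avoids loading the whole file when only a window is needed.
        return list(islice(reader, start, start + step))
```

Each returned row is a dict keyed by the header names, so it could be passed to something like `IP_Model.from_dict` to rebuild the model objects.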

Main Xici scraping code

# -*- coding: utf-8 -*-

__all__ = ("html_to_dom", "ProxyIPWorm")

import re
import time

import requests
from bs4 import BeautifulSoup

import save
from header import Header
from ip_model import IP_Model, IP_List


def simple_proxy(read_out):
    '''
    Build a simple proxy IP (not implemented yet).
    :param read_out:
    :return:
    '''
    pass

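def html_to_dom(url, header, proxies=None):
    '''
    Thin wrapper around requests.get.
    :param url: URL to request
    :param header: spoofed request headers
    :param proxies: proxies dict to use, or None to connect directly
    :return: a BeautifulSoup DOM on HTTP 200, otherwise None
    '''
    try:
        # requests treats proxies=None as "no proxy", so one call covers both
        # cases. The 10-second timeout is an arbitrary choice that keeps a
        # dead proxy from hanging the crawler.
        response = requests.get(url, headers=header, proxies=proxies, verify=True, timeout=10)
    except requests.RequestException:
        # A dead proxy or a network error counts as a failed fetch.
        return None
    if response.status_code == 200:
        response.encoding = "utf-8"
        return BeautifulSoup(response.text, "html.parser")
    return None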

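def proxy(url, ips, log):
    '''
    Visit the given server through the proxy IPs, trying them one by one.
    :param url: URL of the server to visit
    :param ips: IP_List carrying http_list and https_list
    :param log: whether to print log output
    :return: the bs4 DOM from the first proxy that succeeds, or None if all fail
    '''
    if ips is None:
        raise RuntimeError("proxy list is empty")
    # Take everything before the first colon as the scheme. The original
    # greedy pattern (.*): would match up to the last colon instead.
    match = re.match(r"([^:]+):", url)
    scheme = match.group(1) if match else None
    if scheme == "http":
        ip_list = ips.http_list
    elif scheme == "https":
        ip_list = ips.https_list
    else:
        raise RuntimeError("unsupported request type")
    if log:
        print("request type: {}\n".format(scheme))
    for ip in ip_list:
        proxies = {scheme: "{}:{}".format(ip.ip, ip.port)}
        print(proxies)
        dom = html_to_dom(url, Header().headers, proxies)
        if log:
            print("current ip:\n{}\n".format(ip))
        if dom is not None:
            return dom
    return None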
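
The scheme that `proxy` extracts with a regular expression can also be obtained from the standard library's URL parser, which handles ports and upper-case schemes without extra work. A minimal sketch, assuming two hypothetical helpers `proxy_scheme` and `build_proxies` that are not part of the original code:

```python
from urllib.parse import urlsplit


def proxy_scheme(url):
    """Return "http" or "https" for a URL, or raise for anything else."""
    # urlsplit already lower-cases the scheme; lower() is a harmless safeguard.
    scheme = urlsplit(url).scheme.lower()
    if scheme not in ("http", "https"):
        raise ValueError("unsupported scheme: {!r}".format(scheme))
    return scheme


def build_proxies(scheme, ip, port):
    """Build the single-entry proxies dict in the same shape the crawler uses."""
    return {scheme: "{}:{}".format(ip, port)}


if __name__ == "__main__":
    scheme = proxy_scheme("http://news.gzcc.cn/html/xiaoyuanxinwen/")
    # 10.0.0.1:8080 is a placeholder address, not a real proxy.
    print(build_proxies(scheme, "10.0.0.1", 8080))
```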

class ProxyIPWorm(object):
    '''Scrapes proxy IPs from Xici.'''

    def __init__(self):
        self.proxy_ip_html = "https://www.xicidaili.com/nn/"
        self.dom_tree = html_to_dom(self.proxy_ip_html, Header().headers)

    @property
    def start_page(self):
        '''
        First page.
        :return: always 1
        '''
        return 1

    @property
    def end_page(self):
        '''
        Total number of pages of public high-anonymity IPs.
        :return: the page count
        '''
        page_dom = self.dom_tree.select(".pagination a")
        # The second-to-last pagination link is taken as the final page number.
        self._end_page = page_dom[-2]
        return int(self._end_page.text)

    def page_url(self, type, page):
        '''
        Build the Xici URL for the given page number.
        :param type: "http" or "https"
        :param page: page number
        :return: the generated URL
        '''
        if page < 1 or page > self.end_page:
            raise RuntimeError("page number is out of range")
        elif page == 1:
            return "https://www.xicidaili.com/{}/".format(self.http_type(type))
        else:
            return "https://www.xicidaili.com/{}/{}".format(self.http_type(type), page)

    def http_type(self, type):
        '''
        Map http or https to the matching Xici URL segment.
        :param type: "http" or "https"
        :return: the matching Xici URL segment
        '''
        if type == "http":
            return "wt"
        elif type == "https":
            return "wn"
        else:
            raise RuntimeError("type must be http or https")

    def get_page_ips(self, type, page):
        '''
        Get every IP on the given page.
        :param type: IP type, "http" or "https"
        :param page: page to scrape
        :return: list of IP_Model objects for every IP on that page
        '''
        url = self.page_url(type, page)
        print(url)
        page_dom = html_to_dom(url, Header().headers)
        if page_dom is None:
            # The request failed, so there is nothing to parse.
            return []
        # Skip the first row, which is the table header.
        page_ips_dom = page_dom.select("table tr")[1:]
        return [self.get_ip_info(ip_dom) for ip_dom in page_ips_dom]

    def get_ip_info(self, ip_dom):
        '''
        Extract the details of one IP.
        :param ip_dom: HTML node holding the IP information
        :return: an IP_Model wrapping the IP's fields
        '''
        ip_info = IP_Model()
        ip_td = ip_dom.select("td")
        country = ip_td[0].img
        ip_info.http_type = ip_td[5].text
        if country is not None:
            ip_info.country = str(country.get("alt"))
        # Guard against rows with an empty address cell, where split()[0]
        # would raise IndexError.
        address_parts = ip_td[3].text.split()
        ip_info.addres = address_parts[0] if address_parts else None
        ip_info.ip = ip_td[1].text
        ip_info.port = ip_td[2].text
        ip_info.anonymous = ip_td[4].text
        ip_info.velocity = ip_td[6].div.get("title")
        return ip_info

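    def get_pages_ips(self, type, start_page, end_page, save_in=save.to_csv):
        '''
        Get every IP from the start page through the end page (inclusive).
        :param type: whether the request is "http" or "https"
        :param start_page: first page
        :param end_page: last page
        :param save_in: callback that saves one page's list; saves to CSV by default
        :return: the IP list of the last page scraped
        '''
        if start_page >= end_page:
            raise RuntimeError("start page is greater than or equal to end page")
        elif start_page < 1:
            raise RuntimeError("start page is less than 1")
        elif end_page > self.end_page:
            raise RuntimeError("end page is greater than the total page count")
        page_list = []
        # end_page + 1 so that the end page itself is scraped, as documented.
        for page in range(start_page, end_page + 1):
            print("current page: {}".format(page))
            # The original omitted the type argument here, which raised TypeError.
            page_list = self.get_page_ips(type, page)
            save_in(page_list)
            # Pause between pages to avoid hammering the site.
            time.sleep(10)
        return page_list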

Test code

if __name__ == "__main__":
    test = ProxyIPWorm()
    https_list = test.get_page_ips("https", 1)
    http_list = test.get_page_ips("http", 1)
    ips = IP_List()
    ips.https_list = https_list
    ips.http_list = http_list
    dom = proxy("http://news.gzcc.cn/html/xiaoyuanxinwen/", ips, True)
    print(dom)

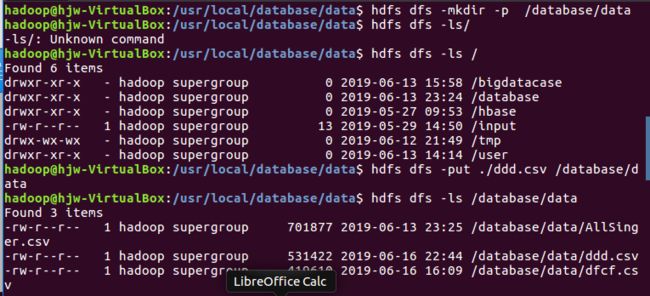

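1. Upload the CSV file produced by the crawler project to HDFS

Step 1: Create the directories database and data for running this example, and check that they were created successfully.

Step 2: Upload the local CSV file to HDFS and check that the upload succeeded.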
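
The two steps above can be sketched with the standard `hdfs dfs` subcommands `-mkdir -p`, `-put`, and `-ls`. The helper names `hdfs_upload_commands` and `run_all` and the HDFS path `/database/data` are assumptions made for illustration; the original does not state the exact paths, so substitute your own.

```python
import subprocess


def hdfs_upload_commands(local_csv, hdfs_dir):
    """Build the commands that create a directory, upload a file, and list it."""
    return [
        ["hdfs", "dfs", "-mkdir", "-p", hdfs_dir],     # create the directory
        ["hdfs", "dfs", "-put", local_csv, hdfs_dir],  # upload the local CSV
        ["hdfs", "dfs", "-ls", hdfs_dir],              # check the result
    ]


def run_all(commands):
    """Run each command in order and stop at the first failure."""
    for argv in commands:
        subprocess.run(argv, check=True)


if __name__ == "__main__":
    # Hypothetical paths; replace them with the ones you actually use.
    for argv in hdfs_upload_commands("./ips_info.csv", "/database/data"):
        print(" ".join(argv))
```

Printing the commands first makes it easy to review them; calling `run_all` on the same list executes them on a machine where the `hdfs` client is installed.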

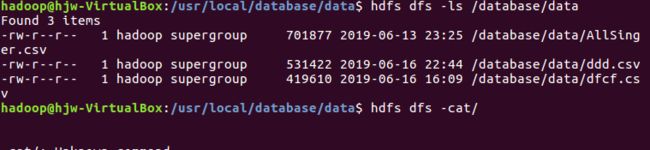

View the result

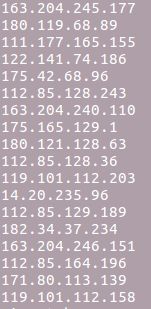

View the most recently fetched IP addresses

This way, the crawler uses IPs that are as likely as possible to still be usable.