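1. Introduction

Any enterprise taking OpenStack into production has to think through and solve three problems: 1. high availability and load balancing of the control cluster, so that the cluster has no single point of failure and stays available; 2. network planning, plus high availability and load balancing for neutron L3; 3. high availability and performance of storage. Storage is one of the pain points of OpenStack and deserves careful planning both at rollout and in day-to-day operations. OpenStack supports many kinds of storage, including distributed storage systems, commonly Ceph, GlusterFS and Sheepdog, as well as commercial FC storage such as dedicated arrays from IBM, EMC, NetApp and Huawei, which lets an enterprise reuse existing equipment and manage its resources in one place.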

2. Ceph overview

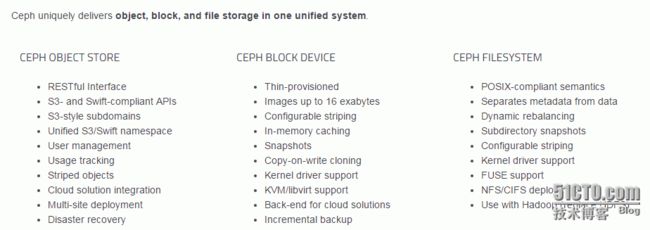

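Ceph has been the most talked-about unified storage system of recent years. It grew up with the cloud: Ceph helped make open-source platforms such as OpenStack and CloudStack viable, and the rapid growth of OpenStack in turn drew more and more people into working on Ceph. Through 2015 the Ceph community became steadily more active, and more and more enterprises now use Ceph as the storage backend for OpenStack's glance, nova and cinder.

Ceph is a unified distributed storage system that exposes three commonly used interfaces: 1. An object storage interface, compatible with S3, used for unstructured data such as images, video and audio files; other object stores include S3, Swift and FastDFS. 2. A file system interface, provided by CephFS, which can be mounted much like NFS and requires an MDS; comparable file storage includes NFS, Samba and GlusterFS. 3. Block storage, provided by RBD, designed for block devices in cloud environments such as OpenStack cinder volumes; this is currently where Ceph is most widely used.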

Ceph architecture:

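3. Installing Ceph

1. Environment. Ceph is deployed with ceph-deploy on three machines with the roles listed below. Set each hostname as listed, then format the data disk as xfs and mount it.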

| IP | Hostname | Role | Disk |
| --- | --- | --- | --- |
| 10.1.2.230 | controller_10_1_2_230 | Monitor/admin-deploy | |
| 10.1.2.231 | network_10_1_2_231 | OSD | /data1/osd0, formatted as xfs |
| 10.1.2.232 | compute1_10_1_2_232 | OSD | /data1/osd1, formatted as xfs |
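The SSH output later in this post shows each hostname resolving to the address in the table. How name resolution was set up is not stated; one common option, assumed here purely for illustration, is to add matching entries to /etc/hosts on every node. The helper name `hosts_entries` is hypothetical.

```shell
# Print /etc/hosts entries for the three nodes in the environment table.
# Assumption: name resolution is done through /etc/hosts rather than DNS.
hosts_entries() {
    cat <<'EOF'
10.1.2.230 controller_10_1_2_230
10.1.2.231 network_10_1_2_231
10.1.2.232 compute1_10_1_2_232
EOF
}

# Review the output first; appending it to /etc/hosts is left to the operator.
hosts_entries
```

Printing the entries rather than writing them keeps the sketch safe to run on any machine.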

2. Prepare the yum repositories. Both the EPEL repository and the Ceph repository need to be configured.

vim /etc/yum.repos.d/ceph.repo

[ceph-noarch]

name=Ceph noarch packages

baseurl=http://download.ceph.com/rpm-{ceph-release}/{distro}/noarch

enabled=1

gpgcheck=1

type=rpm-md

gpgkey=https://download.ceph.com/keys/release.asc
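The baseurl above still carries the `{ceph-release}` and `{distro}` placeholders from the upstream template, and yum does not expand them, so they have to be replaced by hand. Below is a minimal sketch of that substitution. The values `hammer` and `el6` are assumptions chosen only for illustration (this post does not say which Ceph release was installed), and `render_baseurl` is a hypothetical helper.

```shell
# Fill the {ceph-release} and {distro} placeholders of the baseurl template.
# $1 = Ceph release name, $2 = distro tag.
render_baseurl() {
    printf 'http://download.ceph.com/rpm-%s/%s/noarch\n' "$1" "$2"
}

# "hammer" and "el6" are assumed example values, not taken from this post.
render_baseurl hammer el6
```

The printed URL is what would go on the `baseurl=` line once the real release and distro are known.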

[root@controller_10_1_2_230 ~]# yum repolist

Loaded plugins: fastestmirror, priorities

Repository epel is listed more than once in the configuration

Repository epel-debuginfo is listed more than once in the configuration

Repository epel-source is listed more than once in the configuration

Determining fastest mirrors

base | 3.7 kB 00:00

base/primary_db | 4.4 MB 00:01

ceph | 2.9 kB 00:00

ceph/primary_db | 24 kB 00:00

epel | 4.4 kB 00:00

epel/primary_db | 6.3 MB 00:00

extras | 3.3 kB 00:00

extras/primary_db | 19 kB 00:00

openstack-icehouse | 2.9 kB 00:00

openstack-icehouse/primary_db | 902 kB 00:00

updates | 3.4 kB 00:00

updates/primary_db | 5.3 MB 00:00

211 packages excluded due to repository priority protections

repo id repo name status

base CentOS-6 - Base 6,311+56

ceph ceph 21+1

epel Extra Packages for Enterprise Linux 6 - x86_64 11,112+36

extras CentOS-6 - Extras 15

openstack-icehouse OpenStack Icehouse Repository 1,353+309

updates CentOS-6 - Updates 1,397+118

repolist: 20,209

3. Configure NTP. Every machine needs NTP configured and synchronized to the same internal NTP server, for example:

[root@compute1_10_1_2_232 ~]# vim /etc/ntp.conf

server 10.1.2.230

[root@compute1_10_1_2_232 ~]# /etc/init.d/ntpd restart

Shutting down ntpd: [ OK ]

Starting ntpd: [ OK ]

[root@compute1_10_1_2_232 ~]# chkconfig ntpd on

[root@compute1_10_1_2_232 ~]# chkconfig --list ntpd

ntpd 0:off 1:off 2:on 3:on 4:on 5:on 6:off

4. Install Ceph. The ceph package is needed on every node; the admin node additionally needs ceph-deploy, which drives the deployment.

[root@controller_10_1_2_230 ~]# yum install ceph ceph-deploy # admin node

[root@network_10_1_2_231 ~]# yum install ceph -y # OSD node

[root@compute1_10_1_2_232 ~]# yum install ceph -y # OSD node

5. Generate an SSH key and copy it to the remote nodes

a. Generate the key

[root@controller_10_1_2_230 ~]# ssh-keygen

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

75:b4:24:cd:24:a6:a4:a4:a5:c7:da:6f:e7:29:ce:0f root@controller_10_1_2_230

The key's randomart image is:

+--[ RSA 2048]----+

| o . +++ |

| * o o =o. |

| o + . . o |

| + . . |

| . . S |

| . |

| E . |

| o.+ . |

| .oo+ |

+-----------------+

b. Copy the key to the remote nodes

[root@controller_10_1_2_230 ~]# ssh-copy-id -i /root/.ssh/id_rsa.pub root@controller_10_1_2_230

The authenticity of host '[controller_10_1_2_230]:32200 ([10.1.2.230]:32200)' can't be established.

RSA key fingerprint is 7c:6b:e6:d5:b9:cc:09:d2:b7:bb:db:a4:41:aa:5e:34.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '[controller_10_1_2_230]:32200,[10.1.2.230]:32200' (RSA) to the list of known hosts.

root@controller_10_1_2_230's password:

Now try logging into the machine, with "ssh 'root@controller_10_1_2_230'", and check in:

.ssh/authorized_keys

to make sure we haven't added extra keys that you weren't expecting.

[root@controller_10_1_2_230 ~]# ssh-copy-id -i /root/.ssh/id_rsa.pub root@network_10_1_2_231

The authenticity of host '[network_10_1_2_231]:32200 ([10.1.2.231]:32200)' can't be established.

RSA key fingerprint is de:27:84:74:d3:9c:cf:d5:d8:3e:c4:65:d5:9d:dc:9a.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '[network_10_1_2_231]:32200,[10.1.2.231]:32200' (RSA) to the list of known hosts.

root@network_10_1_2_231's password:

Now try logging into the machine, with "ssh 'root@network_10_1_2_231'", and check in:

.ssh/authorized_keys

to make sure we haven't added extra keys that you weren't expecting.

[root@controller_10_1_2_230 ~]# ssh-copy-id -i /root/.ssh/id_rsa.pub root@compute1_10_1_2_232

The authenticity of host '[compute1_10_1_2_232]:32200 ([10.1.2.232]:32200)' can't be established.

RSA key fingerprint is d7:3a:1a:3d:b5:26:78:6a:39:5e:bd:5d:d4:96:29:0f.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '[compute1_10_1_2_232]:32200,[10.1.2.232]:32200' (RSA) to the list of known hosts.

root@compute1_10_1_2_232's password:

Now try logging into the machine, with "ssh 'root@compute1_10_1_2_232'", and check in:

.ssh/authorized_keys

to make sure we haven't added extra keys that you weren't expecting.

c. Test password-less login

[root@controller_10_1_2_230 ~]# ssh root@compute1_10_1_2_232 'df -h'

Filesystem Size Used Avail Use% Mounted on

/dev/sda2 9.9G 3.3G 6.1G 35% /

tmpfs 3.9G 8.0K 3.9G 1% /dev/shm

/dev/sda1 1008M 82M 876M 9% /boot

/dev/sda4 913G 33M 913G 1% /data1
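The three `ssh-copy-id` invocations in step b differ only in the target host, so they can be generated from a host list. The sketch below prints the commands instead of running them, since each one still prompts for a password; the variable and function names are hypothetical, while the hostnames and key path are the ones used in this post.

```shell
# Hostnames from the environment table; key path as generated in step a.
NODES="controller_10_1_2_230 network_10_1_2_231 compute1_10_1_2_232"
PUBKEY=/root/.ssh/id_rsa.pub

# Print one ssh-copy-id command per node (dry run, nothing is executed).
copy_id_commands() {
    for node in $NODES; do
        printf 'ssh-copy-id -i %s root@%s\n' "$PUBKEY" "$node"
    done
}

copy_id_commands
```

Once the printed commands look right, they can be run one by one from the admin node.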

6. Create the initial Monitor node

a. Create a configuration directory; all later operations are run from this directory

[root@controller_10_1_2_230 ~]# mkdir ceph-deploy

[root@controller_10_1_2_230 ~]# cd ceph-deploy/

b. Create a new cluster

[root@controller_10_1_2_230 ceph-deploy]# ceph-deploy new --cluster-network 10.1.2.0/24 --public-network 10.1.2.0/24 controller_10_1_2_230

[ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (1.5.19): /usr/bin/ceph-deploy new --cluster-network 10.1.2.0/24 --public-network 10.1.2.0/24 controller_10_1_2_230

[ceph_deploy.new][DEBUG ] Creating new cluster named ceph

[ceph_deploy.new][INFO ] making sure passwordless SSH succeeds

[controller_10_1_2_230][DEBUG ] connected to host: controller_10_1_2_230

[controller_10_1_2_230][DEBUG ] detect platform information from remote host

[controller_10_1_2_230][DEBUG ] detect machine type

[controller_10_1_2_230][DEBUG ] find the location of an executable

[controller_10_1_2_230][INFO ] Running command: /sbin/ip link show

[controller_10_1_2_230][INFO ] Running command: /sbin/ip addr show

[controller_10_1_2_230][DEBUG ] IP addresses found: ['10.1.2.230']

[ceph_deploy.new][DEBUG ] Resolving host controller_10_1_2_230

[ceph_deploy.new][DEBUG ] Monitor controller_10_1_2_230 at 10.1.2.230

[ceph_deploy.new][DEBUG ] Monitor initial members are ['controller_10_1_2_230']

[ceph_deploy.new][DEBUG ] Monitor addrs are ['10.1.2.230']

[ceph_deploy.new][DEBUG ] Creating a random mon key...

[ceph_deploy.new][DEBUG ] Writing monitor keyring to ceph.mon.keyring...

[ceph_deploy.new][DEBUG ] Writing initial config to ceph.conf...

Error in sys.exitfunc:

c. Change the number of replicas

[root@controller_10_1_2_230 ceph-deploy]# vim ceph.conf

[global]

auth_service_required = cephx

filestore_xattr_use_omap = true

auth_client_required = cephx

auth_cluster_required = cephx # enable cephx authentication

mon_host = 10.1.2.230 # monitor IP address

public_network = 10.1.2.0/24 # Ceph public network, i.e. the network clients use

mon_initial_members = controller_10_1_2_230 # initial monitors; an odd number is recommended to avoid split-brain

cluster_network = 10.1.2.0/24 # Ceph cluster (internal) network

fsid = 07462638-a00f-476f-8257-3f4c9ec12d6e # cluster fsid

osd pool default size = 2 # number of replicas
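The comment on `mon_initial_members` recommends an odd number of monitors. A small sketch of the arithmetic behind that advice, assuming only that monitors need a strict majority to form quorum; the function names are hypothetical.

```shell
# Smallest number of monitors that forms a strict majority of $1 monitors.
quorum_size() {
    echo $(( $1 / 2 + 1 ))
}

# Number of monitors that can fail while a majority still survives.
tolerated_failures() {
    echo $(( ($1 - 1) / 2 ))
}

for n in 1 2 3 4 5; do
    echo "mons=$n quorum=$(quorum_size "$n") tolerated_failures=$(tolerated_failures "$n")"
done
```

Four monitors tolerate no more failures than three, which is why an even count adds cost without adding resilience.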

d. Initialize the monitor

[root@controller_10_1_2_230 ceph-deploy]# ceph-deploy mon create-initial

[ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (1.5.19): /usr/bin/ceph-deploy mon create-initial

[ceph_deploy.mon][DEBUG ] Deploying mon, cluster ceph hosts controller_10_1_2_230

[ceph_deploy.mon][DEBUG ] detecting platform for host controller_10_1_2_230 ...

[controller_10_1_2_230][DEBUG ] connected to host: controller_10_1_2_230

[controller_10_1_2_230][DEBUG ] detect platform information from remote host

[controller_10_1_2_230][DEBUG ] detect machine type

[ceph_deploy.mon][INFO ] distro info: CentOS 6.5 Final

[controller_10_1_2_230][DEBUG ] determining if provided host has same hostname in remote

[controller_10_1_2_230][DEBUG ] get remote short hostname

[controller_10_1_2_230][DEBUG ] deploying mon to controller_10_1_2_230

[controller_10_1_2_230][DEBUG ] get remote short hostname

[controller_10_1_2_230][DEBUG ] remote hostname: controller_10_1_2_230

[controller_10_1_2_230][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[controller_10_1_2_230][DEBUG ] create the mon path if it does not exist

[controller_10_1_2_230][DEBUG ] checking for done path: /var/lib/ceph/mon/ceph-controller_10_1_2_230/done

[controller_10_1_2_230][DEBUG ] done path does not exist: /var/lib/ceph/mon/ceph-controller_10_1_2_230/done

[controller_10_1_2_230][INFO ] creating keyring file: /var/lib/ceph/tmp/ceph-controller_10_1_2_230.mon.keyring

[controller_10_1_2_230][DEBUG ] create the monitor keyring file

[controller_10_1_2_230][INFO ] Running command: ceph-mon --cluster ceph --mkfs -i controller_10_1_2_230 --keyring /var/lib/ceph/tmp/ceph-controller_10_1_2_230.mon.keyring

[controller_10_1_2_230][DEBUG ] ceph-mon: renaming mon.noname-a 10.1.2.230:6789/0 to mon.controller_10_1_2_230

[controller_10_1_2_230][DEBUG ] ceph-mon: set fsid to 07462638-a00f-476f-8257-3f4c9ec12d6e

[controller_10_1_2_230][DEBUG ] ceph-mon: created monfs at /var/lib/ceph/mon/ceph-controller_10_1_2_230 for mon.controller_10_1_2_230

[controller_10_1_2_230][INFO ] unlinking keyring file /var/lib/ceph/tmp/ceph-controller_10_1_2_230.mon.keyring

[controller_10_1_2_230][DEBUG ] create a done file to avoid re-doing the mon deployment

[controller_10_1_2_230][DEBUG ] create the init path if it does not exist

[controller_10_1_2_230][DEBUG ] locating the `service` executable...

[controller_10_1_2_230][INFO ] Running command: /sbin/service ceph -c /etc/ceph/ceph.conf start mon.controller_10_1_2_230

[controller_10_1_2_230][DEBUG ] === mon.controller_10_1_2_230 ===

[controller_10_1_2_230][DEBUG ] Starting Ceph mon.controller_10_1_2_230 on controller_10_1_2_230...

[controller_10_1_2_230][DEBUG ] Starting ceph-create-keys on controller_10_1_2_230...

[controller_10_1_2_230][INFO ] Running command: chkconfig ceph on

[controller_10_1_2_230][INFO ] Running command: ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.controller_10_1_2_230.asok mon_status

[controller_10_1_2_230][DEBUG ] ********************************************************************************

[controller_10_1_2_230][DEBUG ] status for monitor: mon.controller_10_1_2_230

[controller_10_1_2_230][DEBUG ] {

[controller_10_1_2_230][DEBUG ] "election_epoch": 2,

[controller_10_1_2_230][DEBUG ] "extra_probe_peers": [],

[controller_10_1_2_230][DEBUG ] "monmap": {

[controller_10_1_2_230][DEBUG ] "created": "0.000000",

[controller_10_1_2_230][DEBUG ] "epoch": 1,

[controller_10_1_2_230][DEBUG ] "fsid": "07462638-a00f-476f-8257-3f4c9ec12d6e",

[controller_10_1_2_230][DEBUG ] "modified": "0.000000",

[controller_10_1_2_230][DEBUG ] "mons": [

[controller_10_1_2_230][DEBUG ] {

[controller_10_1_2_230][DEBUG ] "addr": "10.1.2.230:6789/0",

[controller_10_1_2_230][DEBUG ] "name": "controller_10_1_2_230",

[controller_10_1_2_230][DEBUG ] "rank": 0

[controller_10_1_2_230][DEBUG ] }

[controller_10_1_2_230][DEBUG ] ]

[controller_10_1_2_230][DEBUG ] },

[controller_10_1_2_230][DEBUG ] "name": "controller_10_1_2_230",

[controller_10_1_2_230][DEBUG ] "outside_quorum": [],

[controller_10_1_2_230][DEBUG ] "quorum": [

[controller_10_1_2_230][DEBUG ] 0

[controller_10_1_2_230][DEBUG ] ],

[controller_10_1_2_230][DEBUG ] "rank": 0,

[controller_10_1_2_230][DEBUG ] "state": "leader",

[controller_10_1_2_230][DEBUG ] "sync_provider": []

[controller_10_1_2_230][DEBUG ] }

[controller_10_1_2_230][DEBUG ] ********************************************************************************

[controller_10_1_2_230][INFO ] monitor: mon.controller_10_1_2_230 is running

[controller_10_1_2_230][INFO ] Running command: ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.controller_10_1_2_230.asok mon_status

[ceph_deploy.mon][INFO ] processing monitor mon.controller_10_1_2_230

[controller_10_1_2_230][DEBUG ] connected to host: controller_10_1_2_230

[controller_10_1_2_230][INFO ] Running command: ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.controller_10_1_2_230.asok mon_status

[ceph_deploy.mon][INFO ] mon.controller_10_1_2_230 monitor has reached quorum!

[ceph_deploy.mon][INFO ] all initial monitors are running and have formed quorum

[ceph_deploy.mon][INFO ] Running gatherkeys...

[ceph_deploy.gatherkeys][DEBUG ] Checking controller_10_1_2_230 for /etc/ceph/ceph.client.admin.keyring

[controller_10_1_2_230][DEBUG ] connected to host: controller_10_1_2_230

[controller_10_1_2_230][DEBUG ] detect platform information from remote host

[controller_10_1_2_230][DEBUG ] detect machine type

[controller_10_1_2_230][DEBUG ] fetch remote file

[ceph_deploy.gatherkeys][DEBUG ] Got ceph.client.admin.keyring key from controller_10_1_2_230.

[ceph_deploy.gatherkeys][DEBUG ] Have ceph.mon.keyring

[ceph_deploy.gatherkeys][DEBUG ] Checking controller_10_1_2_230 for /var/lib/ceph/bootstrap-osd/ceph.keyring

[controller_10_1_2_230][DEBUG ] connected to host: controller_10_1_2_230

[controller_10_1_2_230][DEBUG ] detect platform information from remote host

[controller_10_1_2_230][DEBUG ] detect machine type

[controller_10_1_2_230][DEBUG ] fetch remote file

[ceph_deploy.gatherkeys][DEBUG ] Got ceph.bootstrap-osd.keyring key from controller_10_1_2_230.

[ceph_deploy.gatherkeys][DEBUG ] Checking controller_10_1_2_230 for /var/lib/ceph/bootstrap-mds/ceph.keyring

[controller_10_1_2_230][DEBUG ] connected to host: controller_10_1_2_230

[controller_10_1_2_230][DEBUG ] detect platform information from remote host

[controller_10_1_2_230][DEBUG ] detect machine type

[controller_10_1_2_230][DEBUG ] fetch remote file

[ceph_deploy.gatherkeys][DEBUG ] Got ceph.bootstrap-mds.keyring key from controller_10_1_2_230.

Error in sys.exitfunc:

e. Check the Ceph status

[root@controller_10_1_2_230 ceph-deploy]# ceph -s

cluster 07462638-a00f-476f-8257-3f4c9ec12d6e

health HEALTH_ERR 64 pgs stuck inactive; 64 pgs stuck unclean; no osds

monmap e1: 1 mons at {controller_10_1_2_230=10.1.2.230:6789/0}, election epoch 2, quorum 0 controller_10_1_2_230

osdmap e1: 0 osds: 0 up, 0 in

pgmap v2: 64 pgs, 1 pools, 0 bytes data, 0 objects

0 kB used, 0 kB / 0 kB avail

64 creating

Note: there are no OSDs yet, so the cluster health is currently HEALTH_ERR. The error clears once OSDs are added.
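When scripting the deployment, it helps to gate later steps on the health string rather than reading it by eye. A minimal sketch, assuming the health text is passed in as an argument; `is_healthy` is a hypothetical helper, and the sample strings are copied from the outputs in this post.

```shell
# Succeed only when the given health text starts with HEALTH_OK.
is_healthy() {
    case "$1" in
        HEALTH_OK*) return 0 ;;
        *) return 1 ;;
    esac
}

# Sample strings taken from the `ceph -s` and `ceph health` outputs in this post.
for status in "HEALTH_ERR 64 pgs stuck inactive; 64 pgs stuck unclean; no osds" "HEALTH_OK"; do
    if is_healthy "$status"; then
        echo "ok: $status"
    else
        echo "not ready: $status"
    fi
done
```

On a live cluster the same check could be written as `is_healthy "$(ceph health)"`.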

7. Add the OSDs. As noted in the prerequisites, format the disks as xfs and mount them first.

a. Create the directories

[root@controller_10_1_2_230 ceph-deploy]# ssh root@network_10_1_2_231 'mkdir -pv /data1/osd0'

mkdir: created directory `/data1/osd0'

[root@controller_10_1_2_230 ceph-deploy]# ssh compute1_10_1_2_232 'mkdir -pv /data1/osd1'

mkdir: created directory `/data1/osd1'

b. Prepare the OSDs

[root@controller_10_1_2_230 ceph-deploy]# ceph-deploy osd prepare network_10_1_2_231:/data1/osd0 compute1_10_1_2_232:/data1/osd1

[ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (1.5.19): /usr/bin/ceph-deploy osd prepare network_10_1_2_231:/data1/osd0 compute1_10_1_2_232:/data1/osd1

[ceph_deploy.osd][DEBUG ] Preparing cluster ceph disks network_10_1_2_231:/data1/osd0: compute1_10_1_2_232:/data1/osd1:

[network_10_1_2_231][DEBUG ] connected to host: network_10_1_2_231

[network_10_1_2_231][DEBUG ] detect platform information from remote host

[network_10_1_2_231][DEBUG ] detect machine type

[ceph_deploy.osd][INFO ] Distro info: CentOS 6.5 Final

[ceph_deploy.osd][DEBUG ] Deploying osd to network_10_1_2_231

[network_10_1_2_231][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[network_10_1_2_231][WARNIN] osd keyring does not exist yet, creating one

[network_10_1_2_231][DEBUG ] create a keyring file

[network_10_1_2_231][INFO ] Running command: udevadm trigger --subsystem-match=block --action=add

[ceph_deploy.osd][DEBUG ] Preparing host network_10_1_2_231 disk /data1/osd0 journal None activate False

[network_10_1_2_231][INFO ] Running command: ceph-disk -v prepare --fs-type xfs --cluster ceph -- /data1/osd0

[network_10_1_2_231][WARNIN] INFO:ceph-disk:Running command: /usr/bin/ceph-osd --cluster=ceph --show-config-value=fsid

[network_10_1_2_231][WARNIN] INFO:ceph-disk:Running command: /usr/bin/ceph-conf --cluster=ceph --name=osd. --lookup osd_mkfs_options_xfs

[network_10_1_2_231][WARNIN] INFO:ceph-disk:Running command: /usr/bin/ceph-conf --cluster=ceph --name=osd. --lookup osd_fs_mkfs_options_xfs

[network_10_1_2_231][WARNIN] INFO:ceph-disk:Running command: /usr/bin/ceph-conf --cluster=ceph --name=osd. --lookup osd_mount_options_xfs

[network_10_1_2_231][WARNIN] INFO:ceph-disk:Running command: /usr/bin/ceph-conf --cluster=ceph --name=osd. --lookup osd_fs_mount_options_xfs

[network_10_1_2_231][WARNIN] INFO:ceph-disk:Running command: /usr/bin/ceph-osd --cluster=ceph --show-config-value=osd_journal_size

[network_10_1_2_231][WARNIN] DEBUG:ceph-disk:Preparing osd data dir /data1/osd0

[network_10_1_2_231][INFO ] checking OSD status...

[network_10_1_2_231][INFO ] Running command: ceph --cluster=ceph osd stat --format=json

[ceph_deploy.osd][DEBUG ] Host network_10_1_2_231 is now ready for osd use.

[compute1_10_1_2_232][DEBUG ] connected to host: compute1_10_1_2_232

[compute1_10_1_2_232][DEBUG ] detect platform information from remote host

[compute1_10_1_2_232][DEBUG ] detect machine type

[ceph_deploy.osd][INFO ] Distro info: CentOS 6.5 Final

[ceph_deploy.osd][DEBUG ] Deploying osd to compute1_10_1_2_232

[compute1_10_1_2_232][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[compute1_10_1_2_232][WARNIN] osd keyring does not exist yet, creating one

[compute1_10_1_2_232][DEBUG ] create a keyring file

[compute1_10_1_2_232][INFO ] Running command: udevadm trigger --subsystem-match=block --action=add

[ceph_deploy.osd][DEBUG ] Preparing host compute1_10_1_2_232 disk /data1/osd1 journal None activate False

[compute1_10_1_2_232][INFO ] Running command: ceph-disk -v prepare --fs-type xfs --cluster ceph -- /data1/osd1

[compute1_10_1_2_232][WARNIN] INFO:ceph-disk:Running command: /usr/bin/ceph-osd --cluster=ceph --show-config-value=fsid

[compute1_10_1_2_232][WARNIN] INFO:ceph-disk:Running command: /usr/bin/ceph-conf --cluster=ceph --name=osd. --lookup osd_mkfs_options_xfs

[compute1_10_1_2_232][WARNIN] INFO:ceph-disk:Running command: /usr/bin/ceph-conf --cluster=ceph --name=osd. --lookup osd_fs_mkfs_options_xfs

[compute1_10_1_2_232][WARNIN] INFO:ceph-disk:Running command: /usr/bin/ceph-conf --cluster=ceph --name=osd. --lookup osd_mount_options_xfs

[compute1_10_1_2_232][WARNIN] INFO:ceph-disk:Running command: /usr/bin/ceph-conf --cluster=ceph --name=osd. --lookup osd_fs_mount_options_xfs

[compute1_10_1_2_232][WARNIN] INFO:ceph-disk:Running command: /usr/bin/ceph-osd --cluster=ceph --show-config-value=osd_journal_size

[compute1_10_1_2_232][WARNIN] DEBUG:ceph-disk:Preparing osd data dir /data1/osd1

[compute1_10_1_2_232][INFO ] checking OSD status...

[compute1_10_1_2_232][INFO ] Running command: ceph --cluster=ceph osd stat --format=json

[ceph_deploy.osd][DEBUG ] Host compute1_10_1_2_232 is now ready for osd use.

Error in sys.exitfunc:

c. Activate the OSDs

[root@controller_10_1_2_230 ceph-deploy]# ceph-deploy osd activate network_10_1_2_231:/data1/osd0 compute1_10_1_2_232:/data1/osd1

[ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (1.5.19): /usr/bin/ceph-deploy osd activate network_10_1_2_231:/data1/osd0 compute1_10_1_2_232:/data1/osd1

[ceph_deploy.osd][DEBUG ] Activating cluster ceph disks network_10_1_2_231:/data1/osd0: compute1_10_1_2_232:/data1/osd1:

[network_10_1_2_231][DEBUG ] connected to host: network_10_1_2_231

[network_10_1_2_231][DEBUG ] detect platform information from remote host

[network_10_1_2_231][DEBUG ] detect machine type

[ceph_deploy.osd][INFO ] Distro info: CentOS 6.5 Final

[ceph_deploy.osd][DEBUG ] activating host network_10_1_2_231 disk /data1/osd0

[ceph_deploy.osd][DEBUG ] will use init type: sysvinit

[network_10_1_2_231][INFO ] Running command: ceph-disk -v activate --mark-init sysvinit --mount /data1/osd0

[network_10_1_2_231][DEBUG ] === osd.0 ===

[network_10_1_2_231][DEBUG ] Starting Ceph osd.0 on network_10_1_2_231...

[network_10_1_2_231][DEBUG ] starting osd.0 at :/0 osd_data /var/lib/ceph/osd/ceph-0 /var/lib/ceph/osd/ceph-0/journal

[network_10_1_2_231][WARNIN] DEBUG:ceph-disk:Cluster uuid is 07462638-a00f-476f-8257-3f4c9ec12d6e

[network_10_1_2_231][WARNIN] INFO:ceph-disk:Running command: /usr/bin/ceph-osd --cluster=ceph --show-config-value=fsid

[network_10_1_2_231][WARNIN] DEBUG:ceph-disk:Cluster name is ceph

[network_10_1_2_231][WARNIN] DEBUG:ceph-disk:OSD uuid is 6d298aa5-b33b-4d93-8ac4-efaa671200e5

[network_10_1_2_231][WARNIN] DEBUG:ceph-disk:Allocating OSD id...

[network_10_1_2_231][WARNIN] INFO:ceph-disk:Running command: /usr/bin/ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring osd create --concise 6d298aa5-b33b-4d93-8ac4-efaa671200e5

[network_10_1_2_231][WARNIN] DEBUG:ceph-disk:OSD id is 0

[network_10_1_2_231][WARNIN] DEBUG:ceph-disk:Initializing OSD...

[network_10_1_2_231][WARNIN] INFO:ceph-disk:Running command: /usr/bin/ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring mon getmap -o /data1/osd0/activate.monmap

[network_10_1_2_231][WARNIN] got monmap epoch 1

[network_10_1_2_231][WARNIN] INFO:ceph-disk:Running command: /usr/bin/ceph-osd --cluster ceph --mkfs --mkkey -i 0 --monmap /data1/osd0/activate.monmap --osd-data /data1/osd0 --osd-journal /data1/osd0/journal --osd-uuid 6d298aa5-b33b-4d93-8ac4-efaa671200e5 --keyring /data1/osd0/keyring

[network_10_1_2_231][WARNIN] 2016-01-28 15:48:59.836760 7f2ec01337a0 -1 journal FileJournal::_open: disabling aio for non-block journal. Use journal_force_aio to force use of aio anyway

[network_10_1_2_231][WARNIN] 2016-01-28 15:49:00.053509 7f2ec01337a0 -1 journal FileJournal::_open: disabling aio for non-block journal. Use journal_force_aio to force use of aio anyway

[network_10_1_2_231][WARNIN] 2016-01-28 15:49:00.053971 7f2ec01337a0 -1 filestore(/data1/osd0) could not find 23c2fcde/osd_superblock/0//-1 in index: (2) No such file or directory

[network_10_1_2_231][WARNIN] 2016-01-28 15:49:00.262211 7f2ec01337a0 -1 created object store /data1/osd0 journal /data1/osd0/journal for osd.0 fsid 07462638-a00f-476f-8257-3f4c9ec12d6e

[network_10_1_2_231][WARNIN] 2016-01-28 15:49:00.262234 7f2ec01337a0 -1 auth: error reading file: /data1/osd0/keyring: can't open /data1/osd0/keyring: (2) No such file or directory

[network_10_1_2_231][WARNIN] 2016-01-28 15:49:00.262280 7f2ec01337a0 -1 created new key in keyring /data1/osd0/keyring

[network_10_1_2_231][WARNIN] DEBUG:ceph-disk:Marking with init system sysvinit

[network_10_1_2_231][WARNIN] DEBUG:ceph-disk:Authorizing OSD key...

[network_10_1_2_231][WARNIN] INFO:ceph-disk:Running command: /usr/bin/ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring auth add osd.0 -i /data1/osd0/keyring osd allow * mon allow profile osd

[network_10_1_2_231][WARNIN] added key for osd.0

[network_10_1_2_231][WARNIN] DEBUG:ceph-disk:ceph osd.0 data dir is ready at /data1/osd0

[network_10_1_2_231][WARNIN] DEBUG:ceph-disk:Creating symlink /var/lib/ceph/osd/ceph-0 -> /data1/osd0

[network_10_1_2_231][WARNIN] DEBUG:ceph-disk:Starting ceph osd.0...

[network_10_1_2_231][WARNIN] INFO:ceph-disk:Running command: /sbin/service ceph start osd.0

[network_10_1_2_231][WARNIN] create-or-move updating item name 'osd.0' weight 0.89 at location {host=network_10_1_2_231,root=default} to crush map

[network_10_1_2_231][INFO ] checking OSD status...

[network_10_1_2_231][INFO ] Running command: ceph --cluster=ceph osd stat --format=json

[network_10_1_2_231][INFO ] Running command: chkconfig ceph on

[compute1_10_1_2_232][DEBUG ] connected to host: compute1_10_1_2_232

[compute1_10_1_2_232][DEBUG ] detect platform information from remote host

[compute1_10_1_2_232][DEBUG ] detect machine type

[ceph_deploy.osd][INFO ] Distro info: CentOS 6.5 Final

[ceph_deploy.osd][DEBUG ] activating host compute1_10_1_2_232 disk /data1/osd1

[ceph_deploy.osd][DEBUG ] will use init type: sysvinit

[compute1_10_1_2_232][INFO ] Running command: ceph-disk -v activate --mark-init sysvinit --mount /data1/osd1

[compute1_10_1_2_232][DEBUG ] === osd.1 ===

[compute1_10_1_2_232][DEBUG ] Starting Ceph osd.1 on compute1_10_1_2_232...

[compute1_10_1_2_232][DEBUG ] starting osd.1 at :/0 osd_data /var/lib/ceph/osd/ceph-1 /var/lib/ceph/osd/ceph-1/journal

[compute1_10_1_2_232][WARNIN] DEBUG:ceph-disk:Cluster uuid is 07462638-a00f-476f-8257-3f4c9ec12d6e

[compute1_10_1_2_232][WARNIN] INFO:ceph-disk:Running command: /usr/bin/ceph-osd --cluster=ceph --show-config-value=fsid

[compute1_10_1_2_232][WARNIN] DEBUG:ceph-disk:Cluster name is ceph

[compute1_10_1_2_232][WARNIN] DEBUG:ceph-disk:OSD uuid is 770582a6-e408-4fb8-b59b-8b781d5e226b

[compute1_10_1_2_232][WARNIN] DEBUG:ceph-disk:Allocating OSD id...

[compute1_10_1_2_232][WARNIN] INFO:ceph-disk:Running command: /usr/bin/ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring osd create --concise 770582a6-e408-4fb8-b59b-8b781d5e226b

[compute1_10_1_2_232][WARNIN] DEBUG:ceph-disk:OSD id is 1

[compute1_10_1_2_232][WARNIN] DEBUG:ceph-disk:Initializing OSD...

[compute1_10_1_2_232][WARNIN] INFO:ceph-disk:Running command: /usr/bin/ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring mon getmap -o /data1/osd1/activate.monmap

[compute1_10_1_2_232][WARNIN] got monmap epoch 1

[compute1_10_1_2_232][WARNIN] INFO:ceph-disk:Running command: /usr/bin/ceph-osd --cluster ceph --mkfs --mkkey -i 1 --monmap /data1/osd1/activate.monmap --osd-data /data1/osd1 --osd-journal /data1/osd1/journal --osd-uuid 770582a6-e408-4fb8-b59b-8b781d5e226b --keyring /data1/osd1/keyring

[compute1_10_1_2_232][WARNIN] 2016-01-28 15:49:08.734889 7fad7e2a3800 -1 journal FileJournal::_open: disabling aio for non-block journal. Use journal_force_aio to force use of aio anyway

[compute1_10_1_2_232][WARNIN] 2016-01-28 15:49:08.976634 7fad7e2a3800 -1 journal FileJournal::_open: disabling aio for non-block journal. Use journal_force_aio to force use of aio anyway

[compute1_10_1_2_232][WARNIN] 2016-01-28 15:49:08.976965 7fad7e2a3800 -1 filestore(/data1/osd1) could not find 23c2fcde/osd_superblock/0//-1 in index: (2) No such file or directory

[compute1_10_1_2_232][WARNIN] 2016-01-28 15:49:09.285254 7fad7e2a3800 -1 created object store /data1/osd1 journal /data1/osd1/journal for osd.1 fsid 07462638-a00f-476f-8257-3f4c9ec12d6e

[compute1_10_1_2_232][WARNIN] 2016-01-28 15:49:09.285287 7fad7e2a3800 -1 auth: error reading file: /data1/osd1/keyring: can't open /data1/osd1/keyring: (2) No such file or directory

[compute1_10_1_2_232][WARNIN] 2016-01-28 15:49:09.285358 7fad7e2a3800 -1 created new key in keyring /data1/osd1/keyring

[compute1_10_1_2_232][WARNIN] DEBUG:ceph-disk:Marking with init system sysvinit

[compute1_10_1_2_232][WARNIN] DEBUG:ceph-disk:Authorizing OSD key...

[compute1_10_1_2_232][WARNIN] INFO:ceph-disk:Running command: /usr/bin/ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring auth add osd.1 -i /data1/osd1/keyring osd allow * mon allow profile osd

[compute1_10_1_2_232][WARNIN] added key for osd.1

[compute1_10_1_2_232][WARNIN] DEBUG:ceph-disk:ceph osd.1 data dir is ready at /data1/osd1

[compute1_10_1_2_232][WARNIN] DEBUG:ceph-disk:Creating symlink /var/lib/ceph/osd/ceph-1 -> /data1/osd1

[compute1_10_1_2_232][WARNIN] DEBUG:ceph-disk:Starting ceph osd.1...

[compute1_10_1_2_232][WARNIN] INFO:ceph-disk:Running command: /sbin/service ceph --cluster ceph start osd.1

[compute1_10_1_2_232][WARNIN] libust[36314/36314]: Warning: HOME environment variable not set. Disabling LTTng-UST per-user tracing. (in setup_local_apps() at lttng-ust-comm.c:305)

[compute1_10_1_2_232][WARNIN] create-or-move updating item name 'osd.1' weight 0.89 at location {host=compute1_10_1_2_232,root=default} to crush map

[compute1_10_1_2_232][WARNIN] libust[36363/36363]: Warning: HOME environment variable not set. Disabling LTTng-UST per-user tracing. (in setup_local_apps() at lttng-ust-comm.c:305)

[compute1_10_1_2_232][INFO ] checking OSD status...

[compute1_10_1_2_232][INFO ] Running command: ceph --cluster=ceph osd stat --format=json

[compute1_10_1_2_232][INFO ] Running command: chkconfig ceph on

Error in sys.exitfunc:
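The prepare and activate steps take the same list of host:path pairs, so keeping that list in one place avoids the two commands drifting apart. The sketch below only prints the commands (a dry run); `OSDS` and `osd_command` are hypothetical names, and the subcommands are the ones shown in the transcripts above.

```shell
# host:path pairs from the environment table.
OSDS="network_10_1_2_231:/data1/osd0 compute1_10_1_2_232:/data1/osd1"

# Print the ceph-deploy command for one OSD step: "prepare" or "activate".
osd_command() {
    echo "ceph-deploy osd $1 $OSDS"
}

osd_command prepare
osd_command activate
```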

d. Check the Ceph status again

[root@controller_10_1_2_230 ceph-deploy]# ceph -s

cluster 07462638-a00f-476f-8257-3f4c9ec12d6e

health HEALTH_OK # status is now OK

monmap e1: 1 mons at {controller_10_1_2_230=10.1.2.230:6789/0}, election epoch 2, quorum 0 controller_10_1_2_230 # monitor information

osdmap e8: 2 osds: 2 up, 2 in # OSD information: 2 OSDs, both up

pgmap v14: 64 pgs, 1 pools, 0 bytes data, 0 objects

10305 MB used, 1814 GB / 1824 GB avail

64 active+clean
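The 1824 GB reported above is raw capacity. With `osd pool default size = 2` every object is stored twice, so the space available for data is roughly half of that. A back-of-the-envelope sketch, which ignores journals, filesystem overhead and full-ratio limits; `usable_gb` is a hypothetical helper.

```shell
# Rough upper bound on usable capacity: raw capacity divided by replica count.
# $1 = raw capacity in GB, $2 = number of replicas.
usable_gb() {
    echo $(( $1 / $2 ))
}

RAW_GB=1824    # total reported by `ceph -s` above
REPLICAS=2     # osd pool default size from ceph.conf
echo "approx usable: $(usable_gb "$RAW_GB" "$REPLICAS") GB"
```

Treat the result as a ceiling for planning, not as a figure the cluster will report.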

8. Copy the admin key to the other nodes

[root@controller_10_1_2_230 ceph-deploy]# ceph-deploy admin controller_10_1_2_230 network_10_1_2_231 compute1_10_1_2_232

[ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (1.5.19): /usr/bin/ceph-deploy admin controller_10_1_2_230 network_10_1_2_231 compute1_10_1_2_232

[ceph_deploy.admin][DEBUG ] Pushing admin keys and conf to controller_10_1_2_230

[controller_10_1_2_230][DEBUG ] connected to host: controller_10_1_2_230

[controller_10_1_2_230][DEBUG ] detect platform information from remote host

[controller_10_1_2_230][DEBUG ] detect machine type

[controller_10_1_2_230][DEBUG ] get remote short hostname

[controller_10_1_2_230][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph_deploy.admin][DEBUG ] Pushing admin keys and conf to network_10_1_2_231

[network_10_1_2_231][DEBUG ] connected to host: network_10_1_2_231

[network_10_1_2_231][DEBUG ] detect platform information from remote host

[network_10_1_2_231][DEBUG ] detect machine type

[network_10_1_2_231][DEBUG ] get remote short hostname

[network_10_1_2_231][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph_deploy.admin][DEBUG ] Pushing admin keys and conf to compute1_10_1_2_232

[compute1_10_1_2_232][DEBUG ] connected to host: compute1_10_1_2_232

[compute1_10_1_2_232][DEBUG ] detect platform information from remote host

[compute1_10_1_2_232][DEBUG ] detect machine type

[compute1_10_1_2_232][DEBUG ] get remote short hostname

[compute1_10_1_2_232][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

Error in sys.exitfunc:

The other nodes can now manage the Ceph cluster as well

[root@network_10_1_2_231 ~]# ll /etc/ceph/ceph.client.admin.keyring

-rw-r--r-- 1 root root 63 Jan 28 15:52 /etc/ceph/ceph.client.admin.keyring

[root@network_10_1_2_231 ~]# ceph health

HEALTH_OK

[root@network_10_1_2_231 ~]# ceph -s

cluster 07462638-a00f-476f-8257-3f4c9ec12d6e

health HEALTH_OK

monmap e1: 1 mons at {controller_10_1_2_230=10.1.2.230:6789/0}, election epoch 2, quorum 0 controller_10_1_2_230

osdmap e8: 2 osds: 2 up, 2 in

pgmap v15: 64 pgs, 1 pools, 0 bytes data, 0 objects

10305 MB used, 1814 GB / 1824 GB avail

64 active+clean

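At this point the basic Ceph configuration is complete.

4. Summary

The setup above is the most basic Ceph configuration and is meant only as an exercise. Running Ceph in production involves many more considerations, such as deploying three monitors, planning the Ceph cluster and public networks, and tuning OSD policies. This post is only a simple demonstration; later posts in this Ceph series will cover integrating Ceph with glance, nova and cinder, so stay tuned to my blog.

5. References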

http://docs.ceph.com/docs/master/start/quick-ceph-deploy