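I ran into this problem in Ctrip's written test and didn't think much about it at the time. The Bayes approach feels rather dated by now... oh well, Ctrip...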

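Recap of the approach

- Compute the prior probabilities

- Compute the conditional probabilities

- Estimate the probability of each class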
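
Written out, these three steps correspond to the usual naive Bayes decision rule. This is the textbook form, given here only as a reference and under the assumption that the words of a post are conditionally independent given the class; the symbols $c$, $w_i$, $n$ and $\hat{c}$ are notation introduced for this note, not names taken from the code.

```latex
\hat{c} = \arg\max_{c \in \{0, 1\}} \; P(c) \prod_{i=1}^{n} P(w_i \mid c)
```

Here $P(c)$ is the prior of class $c$, and $P(w_i \mid c)$ is the conditional probability of the $i$-th word of the post given that class.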

原始数据集

代码

加载数据集

import numpy as np

def loadDataSet():

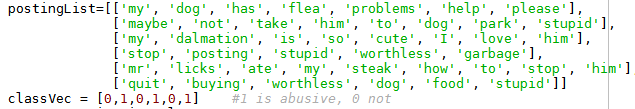

postingList=[['my', 'dog', 'has', 'flea', 'problems', 'help', 'please'],

['maybe', 'not', 'take', 'him', 'to', 'dog', 'park', 'stupid'],

['my', 'dalmation', 'is', 'so', 'cute', 'I', 'love', 'him'],

['stop', 'posting', 'stupid', 'worthless', 'garbage'],

['mr', 'licks', 'ate', 'my', 'steak', 'how', 'to', 'stop', 'him'],

['quit', 'buying', 'worthless', 'dog', 'food', 'stupid']]

classVec = [0,1,0,1,0,1] #1 is abusive, 0 not

return postingList,classVec

There are two classes here: 1 for abusive posts and 0 for non-abusive posts.

vocab

def getVocabList(dataSet):
    vocab = {}          # word -> index
    vocab_reverse = {}  # index -> word
    index = 0
    for line in dataSet:
        for word in line:
            if word not in vocab:
                vocab[word] = index
                vocab_reverse[index] = word
                index += 1
    return vocab, vocab_reverse
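
To make the two mappings concrete, here is a small sketch of how a tokenized post could be turned into a word-count vector. The helper `to_count_vector` and the two-post toy corpus are hypothetical, introduced only for illustration; `getVocabList` is repeated in compact form so the snippet runs on its own.

```python
def getVocabList(dataSet):
    # Same word -> index and index -> word mappings as the function above
    vocab, vocab_reverse = {}, {}
    for line in dataSet:
        for word in line:
            if word not in vocab:
                index = len(vocab)
                vocab[word] = index
                vocab_reverse[index] = word
    return vocab, vocab_reverse

def to_count_vector(words, vocab):
    # Hypothetical helper: count occurrences of each vocabulary word,
    # skipping words the vocabulary has never seen
    vec = [0] * len(vocab)
    for word in words:
        if word in vocab:
            vec[vocab[word]] += 1
    return vec

toy_posts = [['my', 'dog', 'my', 'park'], ['stupid', 'dog']]
vocab, vocab_reverse = getVocabList(toy_posts)
print(vocab)  # {'my': 0, 'dog': 1, 'park': 2, 'stupid': 3}
print(to_count_vector(['stupid', 'stupid', 'cat'], vocab))  # [0, 0, 0, 2]
```

Indices are assigned in order of first appearance, so the mapping is deterministic for a given dataset.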

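Prior and conditional probabilities

def native_bayes(vocab, postingList, classVec):
    label = [0, 1]
    label_num = len(label)
    vocab_len = len(vocab)
    # Word counts start at 1 so a word unseen in a class never gets probability 0
    conditional_probability = np.ones((label_num, vocab_len))
    postingList_ids = [[vocab[word] for word in line] for line in postingList]
    # Per-class denominators start at 2 by default
    p_n = np.array([2.0, 2.0])
    for i in range(len(postingList_ids)):
        for word in postingList_ids[i]:
            conditional_probability[classVec[i]][word] += 1
            p_n[classVec[i]] += 1
    # Conditional probabilities: smoothed word count / smoothed word total of the class
    conditional_probability[0] /= p_n[0]
    conditional_probability[1] /= p_n[1]
    # Prior probabilities: fraction of posts in each class
    # (p_n holds word totals, which are only the denominators above)
    prior_probability = np.bincount(classVec, minlength=label_num) / len(classVec)
    return prior_probability, conditional_probability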
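
As a worked check of the smoothing used here (word counts start at 1, per-class totals start at 2), the conditional probabilities of 'stupid' can be recomputed by plain counting. This is only an illustrative sketch; the names `posts`, `labels`, `word_counts` and `smoothed` are made up for this example, and exact fractions are used so the arithmetic stays visible.

```python
from collections import Counter
from fractions import Fraction

posts = [['my', 'dog', 'has', 'flea', 'problems', 'help', 'please'],
         ['maybe', 'not', 'take', 'him', 'to', 'dog', 'park', 'stupid'],
         ['my', 'dalmation', 'is', 'so', 'cute', 'I', 'love', 'him'],
         ['stop', 'posting', 'stupid', 'worthless', 'garbage'],
         ['mr', 'licks', 'ate', 'my', 'steak', 'how', 'to', 'stop', 'him'],
         ['quit', 'buying', 'worthless', 'dog', 'food', 'stupid']]
labels = [0, 1, 0, 1, 0, 1]

# Count word occurrences separately for each class
word_counts = {0: Counter(), 1: Counter()}
for words, label in zip(posts, labels):
    word_counts[label].update(words)

def smoothed(word, label):
    # (count of word in class + 1) / (total words in class + 2)
    total = sum(word_counts[label].values())
    return Fraction(word_counts[label][word] + 1, total + 2)

print(sum(word_counts[0].values()), sum(word_counts[1].values()))  # 24 19
print(smoothed('stupid', 0))  # 1/26
print(smoothed('stupid', 1))  # 4/21
```

'stupid' never appears in the non-abusive posts but appears three times in the abusive ones, which is why its probability is so much higher under class 1.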

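Classifying with argmax

def judge(testEntry):
    postingList, classVec = loadDataSet()
    vocab, vocab_reverse = getVocabList(postingList)
    p_n, conditional_probability = native_bayes(vocab, postingList, classVec)
    # Work on a float copy so the in-place products do not overwrite the priors
    Ans_p = np.array(p_n, dtype=float)
    # Skip words that never appeared in the training data instead of raising KeyError
    testEntry_ids = [vocab[word] for word in testEntry if word in vocab]
    for num in testEntry_ids:
        Ans_p[0] *= conditional_probability[0][num]
        Ans_p[1] *= conditional_probability[1][num]
    return np.argmax(Ans_p)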

Usage

judge(testEntry = ['stupid', 'garbage'])

The output is 1, as expected.
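
Multiplying many probabilities below 1 can underflow to 0 for longer posts. A common remedy, not part of the original solution, is to sum logarithms instead. The sketch below shows the idea with a hypothetical `judge_log` function and small made-up probability tables standing in for the trained ones.

```python
import math

def judge_log(word_ids, prior, conditional):
    # Hypothetical variant: score(c) = log P(c) + sum_i log P(w_i | c)
    scores = []
    for c in range(len(prior)):
        score = math.log(prior[c])
        for i in word_ids:
            score += math.log(conditional[c][i])
        scores.append(score)
    # Index of the class with the largest log score
    return max(range(len(scores)), key=scores.__getitem__)

# Made-up numbers for illustration: 2 classes, 3 vocabulary words
prior = [0.5, 0.5]
conditional = [[0.6, 0.3, 0.1],
               [0.1, 0.3, 0.6]]

print(judge_log([2, 2, 1], prior, conditional))  # 1
print(judge_log([0, 1], prior, conditional))     # 0
```

Because the logarithm is monotonic, the argmax is the same as with plain products, while the scores stay in a safe numeric range.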