I. Introduction

Heartbeat

See the blog post "Heartbeat高可用性和LVS的综合应用" (Heartbeat high availability combined with LVS): http://caoruijun.blog.51cto.com/5544226/1021330

DRBD

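Distributed Replicated Block Device (DRBD) is a software-based, shared-nothing storage replication solution that mirrors the content of block devices between servers.

Data is mirrored in real time and transparently to applications, either synchronously (a write returns after every server has completed it) or asynchronously (a write returns after the local server has completed it).

DRBD's core functionality is implemented in the Linux kernel, close to the bottom of the system's I/O stack. It sits below the file system, so it cannot magically add upper-layer features such as detecting corruption of an EXT3 file system.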

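DRBD features

Single-primary mode: the typical high-availability cluster setup.

Dual-primary mode: requires a shared cluster file system such as GFS or OCFS2. It is used when data must be accessed concurrently from both nodes, and needs special configuration.

Replication modes: there are three protocols:

Protocol A: asynchronous replication. A write returns as soon as the local write succeeds; the data is still in the send buffer and may be lost.

Protocol B: memory-synchronous (semi-synchronous) replication. A write returns once the local write succeeds and the data has reached the peer. If both nodes lose power at the same time, data may be lost.

Protocol C: synchronous replication. A write returns only after both the local and the remote write are confirmed. Data may be lost only if both nodes lose power or both disks fail at the same time.

Protocol C is the usual choice. The choice of protocol is a trade-off between data protection and write latency.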
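To see which protocol a node is actually configured with, the setting can be read back from the DRBD configuration. The following is a minimal sketch, assuming the protocol is set by a `protocol X;` line in a file such as /etc/drbd.d/global_common.conf (the file edited later in this article). The function names `drbd_protocol` and `describe_protocol` are made up for illustration and are not part of DRBD.

```shell
# Hypothetical helper: print the letter from the first uncommented
# "protocol X;" line of a DRBD config file.
drbd_protocol() {
    sed -n 's/^[[:space:]]*protocol[[:space:]]\{1,\}\([ABC]\)[[:space:]]*;.*/\1/p' \
        "${1:-/etc/drbd.d/global_common.conf}" | head -n 1
}

# Hypothetical helper: say when a write is acknowledged under each protocol,
# following the descriptions given above.
describe_protocol() {
    case $1 in
        A) echo "asynchronous: acknowledged after the local write" ;;
        B) echo "semi-synchronous: acknowledged once the peer has received the data" ;;
        C) echo "synchronous: acknowledged after both nodes have written the data" ;;
        *) echo "unknown protocol: $1" >&2; return 1 ;;
    esac
}
```

On a node configured as in this article, `describe_protocol "$(drbd_protocol)"` should report the synchronous case.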

NFS

See the blog post "NFS简介及自动挂载配置案例" (NFS introduction and an automount configuration example): http://caoruijun.blog.51cto.com/5544226/998848

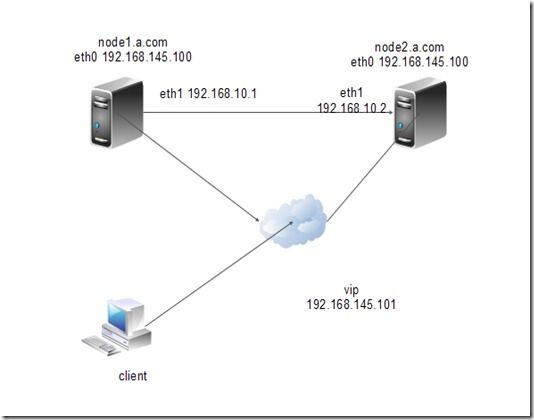

II. Case study

Topology:

Configuration:

Heartbeat:

node1:

Resulting address configuration:

[root@localhost ~]# ifconfig

eth0 Link encap:Ethernet HWaddr 00:0C:29:3C:C1:80

inet addr:192.168.145.99 Bcast:192.168.145.255 Mask:255.255.255.0

eth1 Link encap:Ethernet HWaddr 00:0C:29:3C:C1:8A

inet addr:192.168.10.1 Bcast:192.168.10.255 Mask:255.255.255.0

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

Change the hostname:

[root@localhost ~]# vim /etc/sysconfig/network

Edited result:

HOSTNAME=node1.a.com

Reboot:

[root@localhost ~]# reboot

Edit the hosts file:

[root@node1 ~]# vim /etc/hosts

Edited result:

192.168.145.99 node1.a.com
192.168.145.100 node2.a.com
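Heartbeat and DRBD both refer to the nodes by name, so it can help to confirm that both names are present before going further. Below is a small sketch of such a check; the function name `check_cluster_hosts` is hypothetical, and the node names are the ones used in this article.

```shell
# Hypothetical helper: verify that both cluster node names appear in a
# hosts-format file (default /etc/hosts).
check_cluster_hosts() {
    hosts_file="${1:-/etc/hosts}"
    missing=0
    for name in node1.a.com node2.a.com; do
        # Look for the name as a whole field on a line that is not a comment.
        if ! awk -v n="$name" '
                !/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                END { exit found ? 0 : 1 }' "$hosts_file"; then
            echo "missing: $name"
            missing=1
        fi
    done
    return "$missing"
}
```

Running `check_cluster_hosts` on each node prints nothing and returns success when both entries exist.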

Configure the local yum repository:

[root@node1 ~]# vim /etc/yum.repos.d/rhel-debuginfo.repo

Edited result:

[rhel-server]
name=Red Hat Enterprise Linux server
baseurl=file:///mnt/cdrom/Server
enabled=1
gpgcheck=1
gpgkey=file:///mnt/cdrom/RPM-GPG-KEY-redhat-release

[rhel-cluster]
name=Red Hat Enterprise Linux cluster
baseurl=file:///mnt/cdrom/Cluster
enabled=1
gpgcheck=1
gpgkey=file:///mnt/cdrom/RPM-GPG-KEY-redhat-release

Mount the installation disc:

[root@node1 ~]# mkdir /mnt/cdrom

[root@node1 ~]# mount /dev/cdrom /mnt/cdrom
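Since the repository file points at directories on the mounted disc, a quick sanity check before running yum is to confirm that those directories exist. This is only a sketch: the function name `check_repo_paths` is hypothetical, and it only understands `baseurl=file://...` lines like the ones shown above.

```shell
# Hypothetical helper: report whether each local (file://) baseurl directory
# named in a .repo file exists.
check_repo_paths() {
    sed -n 's|^baseurl=file://||p' "$1" | while read -r dir; do
        if [ -d "$dir" ]; then
            echo "ok: $dir"
        else
            echo "missing: $dir"
        fi
    done
}
```

For example, `check_repo_paths /etc/yum.repos.d/rhel-debuginfo.repo` prints one line per repository path.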

Install the heartbeat packages:

[root@node1 ~]# yum localinstall -y heartbeat-2.1.4-9.el5.i386.rpm heartbeat-pils-2.1.4-10.el5.i386.rpm heartbeat-stonith-2.1.4-10.el5.i386.rpm libnet-1.1.4-3.el5.i386.rpm perl-MailTools-1.77-1.el5.noarch.rpm --nogpgcheck

node2:

Resulting address configuration:

[root@localhost ~]# ifconfig

eth0 Link encap:Ethernet HWaddr 00:0C:29:93:53:D7

inet addr:192.168.145.100 Bcast:192.168.145.255 Mask:255.255.255.0

eth1 Link encap:Ethernet HWaddr 00:0C:29:93:53:E1

inet addr:192.168.10.2 Bcast:192.168.10.255 Mask:255.255.255.0

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

Change the hostname:

[root@localhost ~]# vim /etc/sysconfig/network

Edited result:

HOSTNAME=node2.a.com

Reboot:

[root@localhost ~]# reboot

Edit the hosts file:

[root@node2 ~]# vim /etc/hosts

Edited result:

192.168.145.99 node1.a.com
192.168.145.100 node2.a.com

Configure the local yum repository:

[root@node2 ~]# vim /etc/yum.repos.d/rhel-debuginfo.repo

Edited result:

[rhel-server]
name=Red Hat Enterprise Linux server
baseurl=file:///mnt/cdrom/Server
enabled=1
gpgcheck=1
gpgkey=file:///mnt/cdrom/RPM-GPG-KEY-redhat-release

[rhel-cluster]
name=Red Hat Enterprise Linux cluster
baseurl=file:///mnt/cdrom/Cluster
enabled=1
gpgcheck=1
gpgkey=file:///mnt/cdrom/RPM-GPG-KEY-redhat-release

Mount the installation disc:

[root@node2 ~]# mkdir /mnt/cdrom

[root@node2 ~]# mount /dev/cdrom /mnt/cdrom

Install the heartbeat packages:

[root@node2 ~]# yum localinstall -y heartbeat-2.1.4-9.el5.i386.rpm heartbeat-pils-2.1.4-10.el5.i386.rpm heartbeat-stonith-2.1.4-10.el5.i386.rpm libnet-1.1.4-3.el5.i386.rpm perl-MailTools-1.77-1.el5.noarch.rpm --nogpgcheck

Perform the following on both node1 and node2:

1. Copy the template files

# cd /usr/share/doc/heartbeat-2.1.4/

# cp authkeys ha.cf haresources /etc/ha.d/

2. Edit the configuration

# cd /etc/ha.d/    # switch to the configuration directory

# vim ha.cf

Set the following directives (the bcast line can be added anywhere in the file):

debugfile /var/log/ha-debug
logfile /var/log/ha-log
logfacility local0
keepalive 2
deadtime 10
udpport 694
bcast eth0
auto_failback off
node node1.a.com
node node2.a.com
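Heartbeat expects each `node` directive to match what `uname -n` prints on that machine, which is why the hostnames were changed earlier. A minimal sketch of a check follows; the function names `ha_cf_nodes` and `ha_cf_lists_this_host` are hypothetical.

```shell
# Hypothetical helper: print every host named by an uncommented "node"
# directive in an ha.cf-style file.
ha_cf_nodes() {
    awk '$1 == "node" { for (i = 2; i <= NF; i++) print $i }' "${1:-/etc/ha.d/ha.cf}"
}

# Hypothetical helper: succeed only if the given name (default: `uname -n`)
# is among the configured nodes.
ha_cf_lists_this_host() {
    ha_cf_nodes "$1" | grep -Fqx "${2:-$(uname -n)}"
}
```

On either node, `ha_cf_lists_this_host /etc/ha.d/ha.cf || echo "hostname mismatch"` flags a machine whose name is not listed.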

3. Edit the resource file

# echo "node1.a.com IPaddr::192.168.1.1/24/eth0 drbddisk::web Filesystem::/dev/drbd0::/web::ext3 killnfsd">>/etc/ha.d/haresources
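The haresources line reads left to right: the first field is the preferred node, and the remaining fields are resources that Heartbeat starts in that order, with `::` separating a resource script from its arguments. The sketch below rebuilds the same line from its parts to make the structure visible; the function name `haresources_line` and its parameter order are made up for illustration.

```shell
# Hypothetical helper: assemble the haresources line used in this article.
# Arguments: preferred node, virtual IP spec (address/prefix/interface),
# DRBD resource name, DRBD device, mount point, file system type.
haresources_line() {
    node=$1 vip=$2 drbd_res=$3 dev=$4 mnt=$5 fstype=$6
    printf '%s IPaddr::%s drbddisk::%s Filesystem::%s::%s::%s killnfsd\n' \
        "$node" "$vip" "$drbd_res" "$dev" "$mnt" "$fstype"
}

# Reproduces the line appended to /etc/ha.d/haresources above.
haresources_line node1.a.com 192.168.1.1/24/eth0 web /dev/drbd0 /web ext3
```

Writing the line this way makes it easier to keep the file identical on both nodes, which Heartbeat requires.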

4. Edit the key file

# vim authkeys

auth 1
1 crc
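The `crc` method only guards against corrupted packets and provides no authentication, so it is best kept to a physically secure heartbeat link. As an alternative to what this article uses, the sketch below writes an authkeys file with the `sha1` method and a random key. The function name `gen_authkeys` and the way the key is generated are assumptions for illustration.

```shell
# Hypothetical helper: write an authkeys-style file that uses sha1 with a
# random key. NOTE: the article itself uses "1 crc"; sha1 is an alternative.
gen_authkeys() {
    out=$1
    # 20 random bytes rendered as 40 hexadecimal characters.
    key=$(head -c 20 /dev/urandom | od -An -tx1 | tr -d ' \n')
    # Restrictive umask so a newly created file is readable by its owner only.
    ( umask 077; printf 'auth 1\n1 sha1 %s\n' "$key" > "$out" )
}
```

The generated file has to be identical on both nodes, so it would be created once and then copied to the other node rather than generated twice.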

5. Create the killnfsd script by hand

# cd /etc/ha.d/resource.d/

# echo "killall -9 nfsd ; /etc/init.d/nfs restart ; exit 0" >>/etc/ha.d/resource.d/killnfsd

6. Set permissions on the files

# chmod 600 /etc/ha.d/authkeys

# chmod 755 /etc/ha.d/resource.d/killnfsd
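Steps 5 and 6 can also be combined for the killnfsd script. The sketch below writes the same three commands with a here-document, adds a `#!/bin/sh` line, and overwrites rather than appends, so running it twice does not duplicate the content. Those three choices are mine, not the article's, and the function name `write_killnfsd` is hypothetical.

```shell
# Hypothetical helper: create the killnfsd resource script in the given
# directory (default /etc/ha.d/resource.d) and make it executable.
write_killnfsd() {
    dest="${1:-/etc/ha.d/resource.d}/killnfsd"
    cat > "$dest" <<'EOF'
#!/bin/sh
# Force-stop nfsd, restart NFS, and always report success to Heartbeat.
killall -9 nfsd
/etc/init.d/nfs restart
exit 0
EOF
    chmod 755 "$dest"
}
```

Calling `write_killnfsd` with no argument on each node would leave the script in the location that haresources refers to.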

7. Start the service

# service heartbeat start

DRBD:

node1:

Partitioning:

[root@node1 ~]# fdisk /dev/sda

Command (m for help): p

Disk /dev/sda: 21.4 GB, 21474836480 bytes

255 heads, 63 sectors/track, 2610 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/sda1 * 1 13 104391 83 Linux

/dev/sda2 14 1288 10241437+ 83 Linux

/dev/sda3 1289 1543 2048287+ 82 Linux swap / Solaris

Command (m for help): n

Command action

e extended

p primary partition (1-4)

e

Selected partition 4

First cylinder (1544-2610, default 1544):

Using default value 1544

Last cylinder or +size or +sizeM or +sizeK (1544-2610, default 2610):

Using default value 2610

Command (m for help): p

Disk /dev/sda: 21.4 GB, 21474836480 bytes

255 heads, 63 sectors/track, 2610 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/sda1 * 1 13 104391 83 Linux

/dev/sda2 14 1288 10241437+ 83 Linux

/dev/sda3 1289 1543 2048287+ 82 Linux swap / Solaris

/dev/sda4 1544 2610 8570677+ 5 Extended

Command (m for help): n

First cylinder (1544-2610, default 1544):

Using default value 1544

Last cylinder or +size or +sizeM or +sizeK (1544-2610, default 2610): +1g

Command (m for help): p

Disk /dev/sda: 21.4 GB, 21474836480 bytes

255 heads, 63 sectors/track, 2610 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/sda1 * 1 13 104391 83 Linux

/dev/sda2 14 1288 10241437+ 83 Linux

/dev/sda3 1289 1543 2048287+ 82 Linux swap / Solaris

/dev/sda4 1544 2610 8570677+ 5 Extended

/dev/sda5 1544 1666 987966 83 Linux

Command (m for help): w

The partition table has been altered!

[root@node1 ~]# partprobe /dev/sda

[root@node1 ~]# cat /proc/partitions

major minor #blocks name

8 0 20971520 sda

8 1 104391 sda1

8 2 10241437 sda2

8 3 2048287 sda3

8 4 0 sda4

8 5 987966 sda5

Install the software:

[root@node1 ~]# rpm -ivh drbd83-8.3.8-1.el5.centos.i386.rpm

[root@node1 ~]# rpm -ivh kmod-drbd83-8.3.8-1.el5.centos.i686.rpm

[root@node1 ~]# cd /etc/drbd.d

[root@node1 drbd.d]# ll

total 4

-rwxr-xr-x 1 root root 1418 2010-06-04 global_common.conf

[root@node1 drbd.d]# vim /etc/drbd.conf

In vim's last-line (command) mode, run:

:r /usr/share/doc/drbd83-8.3.8/drbd.conf

This reads in the following:

include "drbd.d/global_common.conf";
include "drbd.d/*.res";

Save and exit.

[root@node1 drbd.d]# vim global_common.conf

Edited result:

common {
    protocol C;

    startup {
        wfc-timeout 120;
        degr-wfc-timeout 120;
    }
    disk {
        on-io-error detach;
        fencing resource-only;
    }
    net {
        cram-hmac-alg "sha1";
        shared-secret "mydrbdlab";
    }
    syncer {
        rate 100M;
    }
}

Configure the resource:

[root@node1 drbd.d]# vim web.res

resource web {
    on node1.a.com {
        device /dev/drbd0;
        disk /dev/sda5;
        address 192.168.145.99:7789;
        meta-disk internal;
    }

    on node2.a.com {
        device /dev/drbd0;
        disk /dev/sda5;
        address 192.168.145.100:7789;
        meta-disk internal;
    }
}

Copy the files to node2:

[root@node1 drbd.d]# scp * node2.a.com:/etc/drbd.d

root@node2.a.com's password:

global_common.conf 100% 504 0.5KB/s 00:00
web.res 100% 350 0.3KB/s 00:00

[root@node1 drbd.d]# scp /etc/drbd.conf node2.a.com:/etc

root@node2.a.com's password:

drbd.conf 100% 100 0.1KB/s 00:00

Initialize the metadata:

[root@node1 drbd.d]# drbdadm create-md web

Writing meta data...

initializing activity log

NOT initialized bitmap

New drbd meta data block successfully created.

Start the service:

[root@node1 drbd.d]# service drbd start

Promote this node to primary:

[root@node1 drbd.d]# drbdadm -- --overwrite-data-of-peer primary web

Format the device:

[root@node1 drbd.d]# mkfs -t ext3 -L drbdweb /dev/drbd0

[root@node1 drbd.d]# mkdir /web

Mount it and create a test file:

[root@node1 drbd.d]# mount /dev/drbd0 /web

[root@node1 drbd.d]# echo "hello" > /web/index.html

node2:

Partition the disk as on node1 (the partition sizes must be identical).
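Because the two backing partitions must be the same size, it is worth comparing the block counts reported by /proc/partitions on both nodes. A small sketch, with the hypothetical function name `part_blocks`:

```shell
# Hypothetical helper: print the #blocks column for one partition from
# /proc/partitions-style input. The second argument is a file, or "-" for
# standard input; it defaults to /proc/partitions.
part_blocks() {
    awk -v name="$1" '$4 == name { print $3 }' "${2:-/proc/partitions}"
}
```

On node1, the local value from `part_blocks sda5` can then be compared with the remote one from `ssh node2.a.com cat /proc/partitions | part_blocks sda5 -`.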

Install the software:

[root@node2 ~]# rpm -ivh drbd83-8.3.8-1.el5.centos.i386.rpm

[root@node2 ~]# rpm -ivh kmod-drbd83-8.3.8-1.el5.centos.i686.rpm

Initialize the metadata:

[root@node2 drbd.d]# drbdadm create-md web

Writing meta data...

initializing activity log

NOT initialized bitmap

New drbd meta data block successfully created.

Start the service:

[root@node2 drbd.d]# service drbd start
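Once DRBD is running on both nodes, /proc/drbd shows the connection state (`cs:`), the local and peer roles (`ro:`) and the disk states (`ds:`). The sketch below pulls just those three fields out of each resource line; the function name `drbd_summary` is hypothetical, and it assumes the DRBD 8.3 layout in which all three fields sit on the same line.

```shell
# Hypothetical helper: print the cs:, ro: and ds: fields of every resource
# line in /proc/drbd-style text (default input: /proc/drbd).
drbd_summary() {
    awk '/ cs:/ {
        out = ""
        for (i = 1; i <= NF; i++)
            if ($i ~ /^(cs|ro|ds):/) out = out (out == "" ? "" : " ") $i
        print out
    }' "${1:-/proc/drbd}"
}
```

Running `drbd_summary` on either node gives a one-line view per resource, which is convenient for watching the initial synchronization finish.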

NFS:

node1:

[root@node1 ~]# vim /etc/exports

Edited result:

/web *(rw,sync,insecure,no_root_squash,no_wdelay)

Start the related services:

[root@node1 ~]# service portmap start

Starting portmap: [  OK  ]

[root@node1 ~]# chkconfig portmap on

[root@node1 ~]# service nfs start

Starting NFS services: [  OK  ]
Starting NFS quotas: [  OK  ]
Starting NFS daemon: [  OK  ]
Starting NFS mountd: [  OK  ]

[root@node1 ~]# chkconfig nfs on

Modify the NFS init script:

[root@node1 ~]# vim /etc/init.d/nfs

Edited result (line 122):

killproc nfsd -9
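The same change can be scripted instead of made in vim. The sketch below assumes that the stock line reads `killproc nfsd -2`, which should be checked against the actual file first; the function name `patch_nfs_kill` is hypothetical.

```shell
# Hypothetical helper: switch the signal used to stop nfsd from -2 to -9.
# ASSUMPTION: the unmodified script contains "killproc nfsd -2".
patch_nfs_kill() {
    script=$1
    grep -q 'killproc nfsd -2' "$script" || {
        echo "pattern not found in $script" >&2
        return 1
    }
    # Keep a backup with the same mode, then rewrite the file in place so
    # the original permissions are preserved.
    cp -p "$script" "$script.bak"
    sed 's/killproc nfsd -2/killproc nfsd -9/' "$script.bak" > "$script"
}
```

It would be called as `patch_nfs_kill /etc/init.d/nfs` on each node, and it refuses to touch a file that does not contain the expected line.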

Perform the same steps on node2.