Motivation

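Yesterday another email went around about a colleague being rccd over a data (code) issue, and management has repeatedly reminded us about data security. So I went through the files on my disk to avoid any unintentional violation. Along the way I found some logs and process-state dumps left over from troubleshooting. None of it is sensitive, but I decided to clean it up to avoid unnecessary trouble. Some of it seemed a pity to throw away, though, such as the jmap and jstack output collected while investigating the Yarn RM YGC problem. That problem was eventually resolved by moving to higher-performance machines, so the investigation never went deeper and this hard-won information was never put to use. Before deleting it, I therefore decided to use it to go back over the Yarn RM YGC problem and analyze it again.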

Revisiting how the problem was originally solved

// The original scene was not saved; the lines below are excerpted from chat history

2018-01-29T19:09:58.294+0800: 15752.474: [GC (Allocation Failure) [PSYoungGen: 40755358K->2823487K(50331648K)] 56773140K->18863096K(71303168K), 12.4035418 secs] [Times: user=222.88 sys=0.03, real=12.40 secs]

2018-01-29T19:10:38.945+0800: 15793.125: [GC (Allocation Failure) [PSYoungGen: 40572223K->2694856K(50331648K)] 56611832K->18751652K(71303168K), 11.5971746 secs] [Times: user=208.62 sys=0.03, real=11.60 secs]

2018-01-29T19:11:23.713+0800: 15837.893: [GC (Allocation Failure) [PSYoungGen: 40443592K->2834225K(50331648K)] 56500388K->18915647K(71303168K), 12.2938357 secs] [Times: user=221.24 sys=0.00, real=12.29 secs]

2018-01-29T19:12:05.063+0800: 15879.243: [GC (Allocation Failure) [PSYoungGen: 40582961K->2755339K(50331648K)] 56664383K->18858245K(71303168K), 11.9166865 secs] [Times: user=214.42 sys=0.02, real=11.91 secs]

In addition, the RM log showed events piling up in the event-queue.

As shown above, a single Yarn RM YGC took more than 10s, and at peak times it could exceed 20s. The performance problem speaks for itself.
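To read lines like these without doing the arithmetic by hand, here is a minimal sketch that parses one PSYoungGen entry and divides `user` by `real`. The class name `GcLineStats` and the regular expression are my own and only cover the log format shown above. For the first entry the ratio is about 18, which suggests the GC threads were busy for the whole pause rather than waiting on something.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper, written only for the PSYoungGen line format shown above.
class GcLineStats {
    private static final Pattern LINE = Pattern.compile(
        "PSYoungGen: (\\d+)K->(\\d+)K\\((\\d+)K\\)\\].*?"
        + "user=([\\d.]+) sys=([\\d.]+), real=([\\d.]+) secs");

    final long youngBeforeK;  // Young occupancy before the collection
    final long youngAfterK;   // Young occupancy after the collection (survivors)
    final double userSecs;    // CPU time summed over all GC threads
    final double realSecs;    // wall-clock pause

    GcLineStats(long youngBeforeK, long youngAfterK, double userSecs, double realSecs) {
        this.youngBeforeK = youngBeforeK;
        this.youngAfterK = youngAfterK;
        this.userSecs = userSecs;
        this.realSecs = realSecs;
    }

    static GcLineStats parse(String line) {
        Matcher m = LINE.matcher(line);
        if (!m.find()) {
            throw new IllegalArgumentException("not a PSYoungGen line: " + line);
        }
        return new GcLineStats(Long.parseLong(m.group(1)), Long.parseLong(m.group(2)),
            Double.parseDouble(m.group(4)), Double.parseDouble(m.group(6)));
    }

    // CPU-seconds burned per wall-clock second, a rough count of busy GC threads.
    double busyGcThreads() {
        return userSecs / realSecs;
    }

    public static void main(String[] args) {
        GcLineStats s = parse("2018-01-29T19:09:58.294+0800: 15752.474: [GC (Allocation Failure) "
            + "[PSYoungGen: 40755358K->2823487K(50331648K)] 56773140K->18863096K(71303168K), "
            + "12.4035418 secs] [Times: user=222.88 sys=0.03, real=12.40 secs]");
        System.out.printf("survived=%dK pause=%.2fs busy threads=%.1f%n",
            s.youngAfterK, s.realSecs, s.busyGcThreads());
    }
}
```

The ratio only describes how the pause was spent; it says nothing yet about why so much work was needed.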

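The earlier fix was approached mainly as a GC tuning exercise. The JVM heap went from 40G to 80G, and to keep as many objects as possible from surviving past YGC, the Young generation (Young Gen) was raised in several jumps: 10g, 20g, 40g, 60g. GC parameters were swapped round after round, yet none of this addressed the root cause. Because we had concluded that poor CPU performance was making GC inefficient, hyper-threading was brought in as well, and the problem remained. In the end our hard-working OP colleagues moved the RM to higher-performance servers, which brought GC pauses down to the millisecond level.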

The current GC configuration is as follows:

[#9#xg-ResouceManager2#yarn@rmserver ~/cluster-data/logs]$ jcmd 27736 VM.flags

27736:

-XX:CICompilerCount=15 -XX:GCLogFileSize=536870912

-XX:InitialHeapSize=85899345920

-XX:MaxHeapSize=85899345920

-XX:MaxNewSize=64424509440 // Young generation: 60g

-XX:MinHeapDeltaBytes=524288 -XX:NewSize=64424509440

-XX:NumberOfGCLogFiles=2 -XX:OldSize=21474836480

-XX:ParGCCardsPerStrideChunk=4096 -XX:+PrintGC

-XX:+PrintGCDateStamps -XX:+PrintGCDetails

-XX:+PrintGCTimeStamps

-XX:SurvivorRatio=3 // each survivor space: 12g

-XX:+UnlockDiagnosticVMOptions

-XX:-UseAdaptiveSizePolicy

-XX:+UseFastUnorderedTimeStamps

-XX:+UseGCLogFileRotation

-XX:+UseParallelGC // Parallel Scavenge + Serial Old (single-threaded)

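That such a configuration works at all was an eye-opener, and it overturned my long-held assumption that the Old generation should be larger than the Young generation. All I can say is: better to have no books at all than to believe everything in them.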
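The sizes in the flag comments can be derived from the flags themselves. The sketch below assumes the usual meaning of SurvivorRatio (eden size divided by the size of one survivor space) and ignores any alignment the JVM applies; the class and method names are made up for illustration.

```java
// Hypothetical helper: derives eden/survivor/old sizes from the flag values above,
// assuming SurvivorRatio = eden / one survivor space and no alignment adjustments.
class YoungGenLayout {
    static final long GIB = 1024L * 1024 * 1024;

    static long survivorBytes(long newSize, int survivorRatio) {
        // young = eden + 2 survivors = (ratio + 2) * survivor
        return newSize / (survivorRatio + 2);
    }

    static long edenBytes(long newSize, int survivorRatio) {
        return newSize - 2 * survivorBytes(newSize, survivorRatio);
    }

    public static void main(String[] args) {
        long maxHeap = 85899345920L;  // -XX:MaxHeapSize
        long newSize = 64424509440L;  // -XX:NewSize / -XX:MaxNewSize
        int survivorRatio = 3;        // -XX:SurvivorRatio

        System.out.println("young    GiB: " + newSize / GIB);
        System.out.println("eden     GiB: " + edenBytes(newSize, survivorRatio) / GIB);
        System.out.println("survivor GiB: " + survivorBytes(newSize, survivorRatio) / GIB);
        System.out.println("old      GiB: " + (maxHeap - newSize) / GIB);
    }
}
```

Under these assumptions eden is 36g and each survivor is 12g. Eden plus one survivor gives 48g, which agrees with the PSYoungGen capacity of 50331648K in the 2018-01-29 log lines if that figure counts eden and a single survivor space.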

Re-examining the problem

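Once the problem had eased, I moved on to other things and never dug into it. The data-security check brought it back into view. I had always felt that swapping machines was not a real fix, so I decided to go over the problem again. Fortunately, while helping with the original investigation I had pulled some logs to my local machine to make analysis easier, so they survived. Unfortunately, what was saved is incomplete and does not form a full record of a single day. Even so, the visible symptoms were the same throughout and belong to the same problem, so the data can still tell us something.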

Relationship between Young generation size and YGC duration

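Analyzing the GC logs that survived, I found that YGC duration is roughly proportional to the amount of data left in the Young generation after the YGC: the more that remains, the longer the YGC takes. To show this more directly, I took a continuous stretch of the GC log in which the values fluctuate.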

2018-01-24T15:05:12.055+0800: 2780.121: [GC (Allocation Failure) [PSYoungGen: 8344459K->484310K(9175040K)] 13672674K->5830336K(40632320K), 0.6421833 secs] [Times: user=6.34 sys=0.03, real=0.64 secs]

2018-01-24T15:05:18.403+0800: 2786.468: [GC (Allocation Failure) [PSYoungGen: 8348630K->493586K(9175040K)] 13694656K->5852127K(40632320K), 0.6354490 secs] [Times: user=6.30 sys=0.01, real=0.64 secs]

2018-01-24T15:05:24.810+0800: 2792.876: [GC (Allocation Failure) [PSYoungGen: 8357906K->507046K(9175040K)] 13716447K->5876773K(40632320K), 0.7877401 secs] [Times: user=7.85 sys=0.01, real=0.79 secs]

2018-01-24T15:05:31.743+0800: 2799.808: [GC (Allocation Failure) [PSYoungGen: 8371366K->537442K(9175040K)] 13741093K->5919236K(40632320K), 0.8498489 secs] [Times: user=8.47 sys=0.01, real=0.85 secs]

2018-01-24T15:05:38.765+0800: 2806.831: [GC (Allocation Failure) [PSYoungGen: 8401762K->567214K(9175040K)] 13783556K->5962118K(40632320K), 0.9487537 secs] [Times: user=9.44 sys=0.00, real=0.95 secs]

2018-01-24T15:05:45.791+0800: 2813.856: [GC (Allocation Failure) [PSYoungGen: 8431534K->583819K(9175040K)] 13826438K->5988521K(40632320K), 1.0380007 secs] [Times: user=10.35 sys=0.00, real=1.03 secs]

2018-01-24T15:05:52.966+0800: 2821.031: [GC (Allocation Failure) [PSYoungGen: 8448139K->556379K(9175040K)] 13852841K->5976631K(40632320K), 1.1442949 secs] [Times: user=11.43 sys=0.00, real=1.14 secs]

2018-01-24T15:06:00.030+0800: 2828.095: [GC (Allocation Failure) [PSYoungGen: 8420699K->555026K(9175040K)] 13840951K->5984419K(40632320K), 1.1077380 secs] [Times: user=11.06 sys=0.00, real=1.11 secs]

2018-01-24T15:06:06.335+0800: 2834.400: [GC (Allocation Failure) [PSYoungGen: 8419346K->590867K(9175040K)] 13848739K->6037725K(40632320K), 1.2386294 secs] [Times: user=12.38 sys=0.00, real=1.23 secs]

2018-01-24T15:06:13.638+0800: 2841.703: [GC (Allocation Failure) [PSYoungGen: 8455187K->563957K(9175040K)] 13902045K->6021894K(40632320K), 1.1187463 secs] [Times: user=11.17 sys=0.00, real=1.12 secs]

2018-01-24T15:06:21.030+0800: 2849.095: [GC (Allocation Failure) [PSYoungGen: 8428277K->462155K(9175040K)] 13886214K->5928377K(40632320K), 0.6891712 secs] [Times: user=6.86 sys=0.02, real=0.69 secs]

2018-01-24T15:06:27.777+0800: 2855.842: [GC (Allocation Failure) [PSYoungGen: 8326475K->494792K(9175040K)] 13792697K->5968171K(40632320K), 0.7356112 secs] [Times: user=7.33 sys=0.00, real=0.73 secs]

2018-01-24T15:06:34.361+0800: 2862.426: [GC (Allocation Failure) [PSYoungGen: 8359112K->472731K(9175040K)] 13832491K->5956080K(40632320K), 0.6683025 secs] [Times: user=6.64 sys=0.00, real=0.67 secs]

2018-01-24T15:06:41.069+0800: 2869.134: [GC (Allocation Failure) [PSYoungGen: 8337051K->483344K(9175040K)] 13820400K->5978220K(40632320K), 0.6570753 secs] [Times: user=6.51 sys=0.02, real=0.65 secs]

2018-01-24T15:06:47.772+0800: 2875.837: [GC (Allocation Failure) [PSYoungGen: 8347664K->465064K(9175040K)] 13842540K->5974059K(40632320K), 0.5663590 secs] [Times: user=5.63 sys=0.01, real=0.56 secs]

2018-01-24T15:06:54.389+0800: 2882.455: [GC (Allocation Failure) [PSYoungGen: 8329384K->454076K(9175040K)] 13838379K->5973293K(40632320K), 0.5559584 secs] [Times: user=5.52 sys=0.03, real=0.56 secs]

2018-01-24T15:07:01.635+0800: 2889.700: [GC (Allocation Failure) [PSYoungGen: 8318396K->521880K(9175040K)] 13837613K->6056761K(40632320K), 1.0041698 secs] [Times: user=10.02 sys=0.00, real=1.00 secs]

2018-01-24T15:07:08.663+0800: 2896.729: [GC (Allocation Failure) [PSYoungGen: 8386200K->520605K(9175040K)] 13921081K->6065095K(40632320K), 1.0463873 secs] [Times: user=10.45 sys=0.00, real=1.04 secs]

2018-01-24T15:07:15.796+0800: 2903.861: [GC (Allocation Failure) [PSYoungGen: 8384925K->529044K(9175040K)] 13929415K->6087207K(40632320K), 1.0115040 secs] [Times: user=10.01 sys=0.00, real=1.01 secs]

2018-01-24T15:07:23.222+0800: 2911.288: [GC (Allocation Failure) [PSYoungGen: 8393364K->534805K(9175040K)] 13951527K->6113778K(40632320K), 1.0747669 secs] [Times: user=10.73 sys=0.01, real=1.08 secs]

2018-01-24T15:07:30.332+0800: 2918.397: [GC (Allocation Failure) [PSYoungGen: 8399125K->489447K(9175040K)] 13978098K->6079306K(40632320K), 0.9554071 secs] [Times: user=9.54 sys=0.00, real=0.96 secs]

2018-01-24T15:07:37.296+0800: 2925.361: [GC (Allocation Failure) [PSYoungGen: 8353767K->442958K(9175040K)] 13943626K->6041227K(40632320K), 0.6691608 secs] [Times: user=6.68 sys=0.00, real=0.67 secs]

2018-01-24T15:07:44.914+0800: 2932.979: [GC (Allocation Failure) [PSYoungGen: 8307278K->437538K(9175040K)] 13905547K->6050795K(40632320K), 0.7124929 secs] [Times: user=7.07 sys=0.01, real=0.71 secs]

2018-01-24T15:07:51.793+0800: 2939.858: [GC (Allocation Failure) [PSYoungGen: 8301858K->473921K(9175040K)] 13915115K->6098001K(40632320K), 0.7684869 secs] [Times: user=7.64 sys=0.00, real=0.77 secs]

2018-01-24T15:07:58.598+0800: 2946.663: [GC (Allocation Failure) [PSYoungGen: 8338241K->478071K(9175040K)] 13962321K->6110603K(40632320K), 0.7639746 secs] [Times: user=7.63 sys=0.00, real=0.77 secs]

2018-01-24T15:08:04.382+0800: 2952.448: [GC (Allocation Failure) [PSYoungGen: 8342391K->479205K(9175040K)] 13974923K->6122021K(40632320K), 0.8109380 secs] [Times: user=8.09 sys=0.00, real=0.81 secs]

2018-01-24T15:08:11.056+0800: 2959.121: [GC (Allocation Failure) [PSYoungGen: 8343525K->457732K(9175040K)] 13986341K->6113836K(40632320K), 0.7351115 secs] [Times: user=7.33 sys=0.01, real=0.73 secs]

2018-01-24T15:08:17.686+0800: 2965.751: [GC (Allocation Failure) [PSYoungGen: 8322052K->452644K(9175040K)] 13978156K->6117093K(40632320K), 0.6676740 secs] [Times: user=6.65 sys=0.01, real=0.67 secs]

2018-01-24T15:08:24.360+0800: 2972.425: [GC (Allocation Failure) [PSYoungGen: 8316964K->448014K(9175040K)] 13981413K->6125855K(40632320K), 0.6466176 secs] [Times: user=6.45 sys=0.01, real=0.65 secs]

2018-01-24T15:08:30.842+0800: 2978.907: [GC (Allocation Failure) [PSYoungGen: 8312334K->443836K(9175040K)] 13990175K->6132684K(40632320K), 0.6180497 secs] [Times: user=6.12 sys=0.01, real=0.62 secs]

2018-01-24T15:08:37.439+0800: 2985.504: [GC (Allocation Failure) [PSYoungGen: 8308156K->428507K(9175040K)] 13997004K->6126109K(40632320K), 0.5974813 secs] [Times: user=5.94 sys=0.02, real=0.60 secs]

2018-01-24T15:08:44.154+0800: 2992.219: [GC (Allocation Failure) [PSYoungGen: 8292827K->447938K(9175040K)] 13990429K->6152131K(40632320K), 0.6153070 secs] [Times: user=6.12 sys=0.01, real=0.62 secs]

2018-01-24T15:08:50.816+0800: 2998.882: [GC (Allocation Failure) [PSYoungGen: 8312258K->464488K(9175040K)] 14016451K->6183309K(40632320K), 0.6873898 secs] [Times: user=6.85 sys=0.02, real=0.69 secs]

2018-01-24T15:08:57.834+0800: 3005.900: [GC (Allocation Failure) [PSYoungGen: 8328808K->503555K(9175040K)] 14047629K->6232096K(40632320K), 1.0393343 secs] [Times: user=10.52 sys=0.00, real=1.04 secs]

2018-01-24T15:09:06.484+0800: 3014.549: [GC (Allocation Failure) [PSYoungGen: 8367875K->653313K(9175040K)] 14096416K->6390015K(40632320K), 1.8326990 secs] [Times: user=18.32 sys=0.00, real=1.83 secs]

2018-01-24T15:09:14.517+0800: 3022.582: [GC (Allocation Failure) [PSYoungGen: 8517633K->694205K(9175040K)] 14254335K->6439862K(40632320K), 2.0075142 secs] [Times: user=20.07 sys=0.00, real=2.01 secs]

2018-01-24T15:09:22.442+0800: 3030.508: [GC (Allocation Failure) [PSYoungGen: 8558525K->680568K(9175040K)] 14304182K->6432288K(40632320K), 1.9823928 secs] [Times: user=19.76 sys=0.01, real=1.99 secs]

2018-01-24T15:09:30.425+0800: 3038.490: [GC (Allocation Failure) [PSYoungGen: 8544888K->682694K(9175040K)] 14296608K->6442548K(40632320K), 1.9901893 secs] [Times: user=19.89 sys=0.00, real=1.99 secs]

2018-01-24T15:09:38.166+0800: 3046.232: [GC (Allocation Failure) [PSYoungGen: 8547014K->641436K(9175040K)] 14306868K->6413962K(40632320K), 1.7414826 secs] [Times: user=17.53 sys=0.01, real=1.74 secs]

2018-01-24T15:09:45.767+0800: 3053.833: [GC (Allocation Failure) [PSYoungGen: 8505756K->661152K(9175040K)] 14278282K->6453992K(40632320K), 1.8809221 secs] [Times: user=18.77 sys=0.00, real=1.88 secs]

2018-01-24T15:09:53.823+0800: 3061.888: [GC (Allocation Failure) [PSYoungGen: 8525472K->701454K(9175040K)] 14318312K->6504832K(40632320K), 2.0521165 secs] [Times: user=20.50 sys=0.00, real=2.05 secs]

2018-01-24T15:10:01.415+0800: 3069.480: [GC (Allocation Failure) [PSYoungGen: 8565774K->739864K(9175040K)] 14369152K->6553519K(40632320K), 2.4033559 secs] [Times: user=24.03 sys=0.00, real=2.40 secs]

2018-01-24T15:10:09.770+0800: 3077.835: [GC (Allocation Failure) [PSYoungGen: 8604184K->773748K(9175040K)] 14417839K->6599545K(40632320K), 2.5234427 secs] [Times: user=25.22 sys=0.00, real=2.53 secs]

2018-01-24T15:10:18.510+0800: 3086.576: [GC (Allocation Failure) [PSYoungGen: 8638068K->793254K(9175040K)] 14463865K->6629059K(40632320K), 2.6823239 secs] [Times: user=26.90 sys=0.00, real=2.69 secs]

2018-01-24T15:10:28.140+0800: 3096.205: [GC (Allocation Failure) [PSYoungGen: 8657574K->860322K(9175040K)] 14493379K->6706843K(40632320K), 3.0401675 secs] [Times: user=30.12 sys=0.00, real=3.04 secs]

2018-01-24T15:10:37.600+0800: 3105.665: [GC (Allocation Failure) [PSYoungGen: 8724642K->915220K(9175040K)] 14571163K->6770802K(40632320K), 3.2758327 secs] [Times: user=32.77 sys=0.00, real=3.28 secs]

2018-01-24T15:10:46.836+0800: 3114.901: [GC (Allocation Failure) [PSYoungGen: 8779540K->904150K(9175040K)] 14635122K->6769113K(40632320K), 3.1770298 secs] [Times: user=31.78 sys=0.00, real=3.17 secs]
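As a rough check of the trend in this excerpt, the sketch below computes the Pearson correlation between the Young occupancy after GC and the `real` pause time for eight entries taken from across the excerpt (15:05:12, 15:05:31, 15:05:45, 15:09:06, 15:09:14, 15:10:09, 15:10:28 and 15:10:37). The class name and the choice of samples are mine; this is a sanity check on a subset, not a fit over the whole log.

```java
// Hypothetical check: correlation between surviving Young data and pause time,
// using eight (survivedK, realSecs) pairs copied from the log excerpt above.
class YgcCorrelation {
    static final double[] SURVIVED_KB =
        {484310, 537442, 583819, 653313, 694205, 773748, 860322, 915220};
    static final double[] PAUSE_SECS =
        {0.64, 0.85, 1.03, 1.83, 2.01, 2.53, 3.04, 3.28};

    static double pearson(double[] x, double[] y) {
        if (x.length != y.length || x.length < 2) {
            throw new IllegalArgumentException("need two equally sized samples");
        }
        int n = x.length;
        double meanX = 0, meanY = 0;
        for (int i = 0; i < n; i++) {
            meanX += x[i];
            meanY += y[i];
        }
        meanX /= n;
        meanY /= n;
        double sxy = 0, sxx = 0, syy = 0;
        for (int i = 0; i < n; i++) {
            double dx = x[i] - meanX;
            double dy = y[i] - meanY;
            sxy += dx * dy;
            sxx += dx * dx;
            syy += dy * dy;
        }
        return sxy / Math.sqrt(sxx * syy);
    }

    public static void main(String[] args) {
        System.out.printf("r = %.3f%n", pearson(SURVIVED_KB, PAUSE_SECS));
    }
}
```

For these samples the coefficient comes out close to 1. Some neighbouring entries in the log deviate from the trend, so the relationship should be read as a strong correlation rather than an exact proportionality.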

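To explain this, I looked into what makes up a YGC pause. The pause time satisfies the following formula:

Tyoung = Tstack_scan + Tcard_scan + Told_scan + Tcopy

where:

Tyoung is the total YGC pause;

Tstack_scan is the time to scan root objects on the stacks, which can be treated as an application-specific constant;

Tcard_scan is the time to scan the card table (dirty cards), which depends on the size of the Old generation. In addition, after objects in the Young generation are moved, the references pointing to them must be updated and the card table written, so that at the next Minor GC the card table covers the full set of references into the Young generation;

Told_scan is the time to scan root objects in the Old generation, which depends on the amount of data recorded in the card table;

Tcopy is the time to copy live objects, which is proportional to the amount of live data in the heap.

Therefore, with the other variables held constant, the YGC pause is positively correlated with the number of live objects in the heap (Tcard_scan) and with the volume of live data (Tcopy).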

The main objects affecting YGC

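The previous section noted that, with the other variables held constant, the YGC pause is positively correlated with the number of live objects and the volume of live data in the heap. So which objects in a running RM are likely to weigh most on GC? To answer that, before the machine swap I asked for permission to run jmap once against the production RM (jmap -histo can pause the process, and with the live option it also triggers a Full GC, so it is a fairly risky operation; I had wanted to run it a couple more times to watch how the objects changed, but in the end...). The 20 largest entries are: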

1: 118404863 15622861752 [C

2: 16268603 2992477680 [B

3: 69146586 2765863440 java.util.ArrayList

4: 33872192 2283061128 [Ljava.lang.Object;

5: 45116321 1443722272 java.lang.String

6: 7607286 1377877792 [I

7: 8787512 1195101632 org.apache.hadoop.yarn.proto.YarnProtos$ResourceRequestProto$Builder

8: 22939461 1101094128 org.apache.hadoop.yarn.server.resourcemanager.rmnode.RMNodeFinishedContainersPulledByAMEvent

9: 8728662 907780848 org.apache.hadoop.yarn.proto.YarnProtos$ResourceRequestProto

10: 23550270 753608640 java.util.concurrent.LinkedBlockingQueue$Node

11: 22774040 728769280 java.lang.StringBuilder

12: 10450545 668834880 org.apache.hadoop.yarn.proto.YarnProtos$ResourceProto$Builder

13: 8787512 562400768 org.apache.hadoop.yarn.api.records.impl.pb.ResourceRequestPBImpl

14: 11461810 550166880 java.util.concurrent.ConcurrentHashMap$Node

15: 9652655 540548680 org.apache.hadoop.yarn.proto.YarnProtos$ResourceProto

16: 8780794 491724464 org.apache.hadoop.yarn.proto.YarnProtos$PriorityProto

17: 10946511 437860440 java.util.ArrayList$Itr

18: 10598259 423930360 org.apache.hadoop.yarn.api.records.impl.pb.ResourcePBImpl

19: 8780666 351226640 org.apache.hadoop.yarn.api.records.impl.pb.PriorityPBImpl

20: 3672732 264436704 org.apache.hadoop.yarn.proto.YarnProtos$ContainerIdProto

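From the jmap -histo output, the entries worth looking at that are large in both instance count and bytes include No. 3 and No. 8. Basic Java types such as Object are not very informative, and ArrayList is also a built-in Java class, so it is hard to pin down where in the Yarn source it matters most. Since the RM log also showed events piling up in the event-queue, I started the investigation from the Yarn-specific class that is large in both count and bytes: RMNodeFinishedContainersPulledByAMEvent.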
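A small sketch for reading the histogram rows: it parses a row into instance count, bytes and class name and reports bytes per instance. The class name `HistoEntry` is hypothetical, and the parser assumes the four-column row layout shown above.

```java
// Hypothetical parser for one row of the histogram above:
// "<rank>: <instances> <bytes> <class name>".
class HistoEntry {
    final long instances;
    final long bytes;
    final String className;

    HistoEntry(long instances, long bytes, String className) {
        this.instances = instances;
        this.bytes = bytes;
        this.className = className;
    }

    static HistoEntry parse(String row) {
        String[] fields = row.trim().split("\\s+");
        if (fields.length != 4) {
            throw new IllegalArgumentException("unexpected histogram row: " + row);
        }
        return new HistoEntry(Long.parseLong(fields[1]), Long.parseLong(fields[2]), fields[3]);
    }

    long bytesPerInstance() {
        return bytes / instances;
    }

    public static void main(String[] args) {
        String[] rows = {
            "3: 69146586 2765863440 java.util.ArrayList",
            "8: 22939461 1101094128 org.apache.hadoop.yarn.server.resourcemanager.rmnode.RMNodeFinishedContainersPulledByAMEvent",
            "10: 23550270 753608640 java.util.concurrent.LinkedBlockingQueue$Node",
        };
        for (String row : rows) {
            HistoEntry e = parse(row);
            System.out.println(e.instances + " x " + e.bytesPerInstance() + " B  " + e.className);
        }
    }
}
```

Each event is small (48 bytes by this division), so the cost comes from the roughly 22.9 million instances. The count of LinkedBlockingQueue$Node (about 23.6 million) is close to the event count, which would be consistent with most of these events sitting in a queue, although the histogram alone cannot prove that.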

Where the RMNodeFinishedContainersPulledByAMEvent objects come from

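Knowing nothing about this class, I first searched for it and found a similar issue in the community (issue: RM is flooded with RMNodeFinishedContainersPulledByAMEvents in the async dispatcher event queue). Its description largely matches our situation (what it describes around an RM failover is also basically consistent with what we saw).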

This strengthened my belief that RMNodeFinishedContainersPulledByAMEvent objects are the main source of the YGC problem.

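Searching for the class in the IDE shows that it is only created in RMAppAttemptImpl, and the code that creates it also explains why there are so many ArrayList objects.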

// Ack NM to remove finished containers from context.
private void sendFinishedContainersToNM() {
  for (NodeId nodeId : finishedContainersSentToAM.keySet()) {

    // Clear and get current values
    List<ContainerStatus> currentSentContainers =
        finishedContainersSentToAM.put(nodeId,
          new ArrayList<ContainerStatus>());
    List<ContainerId> containerIdList =
        new ArrayList<ContainerId>(currentSentContainers.size());
    for (ContainerStatus containerStatus : currentSentContainers) {
      containerIdList.add(containerStatus.getContainerId());
    }
    eventHandler.handle(new RMNodeFinishedContainersPulledByAMEvent(nodeId,
      containerIdList));
  }
}

The call hierarchy of this method (IDEA shortcut: Command + Shift + H):

One branch of the call hierarchy is shown below:

RMAppAttemptImpl.sendFinishedContainersToNM()

RMAppAttemptImpl.pullJustFinishedContainers()

ApplicationMasterService.allocate()

ApplicationMasterService runs on the RM side and handles requests from AMs (ApplicationMaster); its allocate method handles an AM's resource requests.

@Override
public AllocateResponse allocate(AllocateRequest request)
    throws YarnException, IOException {
  ......
  allocateResponse.setAllocatedContainers(allocation.getContainers());
  allocateResponse.setCompletedContainersStatuses(appAttempt
      .pullJustFinishedContainers());
  allocateResponse.setResponseId(lastResponse.getResponseId() + 1);
  ......
}

As the implementation shows, every time an AM requests resources through allocate(), pullJustFinishedContainers is called.

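pullJustFinishedContainers pulls the containers that have finished. Reading its logic turned up something interesting. Each RMAppAttemptImpl maintains two collections, justFinishedContainers and finishedContainersSentToAM (keyed by NodeId, as the code shows), which record the containers that finished since the previous request so that they can receive their final handling. However, the code only ever adds entries to these collections and never removes them: a node's list is replaced with a new empty ArrayList, but the entry for the node stays. As a result, the number of entries in justFinishedContainers and finishedContainersSentToAM keeps growing for as long as the AM is alive. Every time the AM requests resources, sendFinishedContainersToNM creates an RMNodeFinishedContainersPulledByAMEvent for every entry in finishedContainersSentToAM, so entries that were already handled long ago may have the event created for them many more times before the application finishes (useless objects). This is why more and more RMNodeFinishedContainersPulledByAMEvent objects are created while the AM is alive.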

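When a very large number of applications are running at the same time, so many RMNodeFinishedContainersPulledByAMEvent objects are created that they cannot be processed in time and the event-queue backs up. YGC then faces too many live objects and spends too long updating the card table and copying data, which makes the YGC as a whole too long. The longer a large number of AMs stay alive, the worse the problem becomes.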

@Override
public List<ContainerStatus> pullJustFinishedContainers() {
  this.writeLock.lock();

  try {
    List<ContainerStatus> returnList = new ArrayList<ContainerStatus>();

    // A new allocate means the AM received the previously sent
    // finishedContainers. We can ack this to NM now
    sendFinishedContainersToNM();

    // Mark every containerStatus as being sent to AM though we may return
    // only the ones that belong to the current attempt
    boolean keepContainersAcressAttempts = this.submissionContext
        .getKeepContainersAcrossApplicationAttempts();
    for (NodeId nodeId:justFinishedContainers.keySet()) {

      // Clear and get current values
      List<ContainerStatus> finishedContainers = justFinishedContainers.put
          (nodeId, new ArrayList<ContainerStatus>());

      if (keepContainersAcressAttempts) {
        returnList.addAll(finishedContainers);
      } else {
        // Filter out containers from previous attempt
        for (ContainerStatus containerStatus: finishedContainers) {
          if (containerStatus.getContainerId().getApplicationAttemptId()
              .equals(this.getAppAttemptId())) {
            returnList.add(containerStatus);
          }
        }
      }

      finishedContainersSentToAM.putIfAbsent(nodeId, new ArrayList
          <ContainerStatus>());
      finishedContainersSentToAM.get(nodeId).addAll(finishedContainers);
    }

    return returnList;
  } finally {
    this.writeLock.unlock();
  }
}
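The effect of never dropping node entries can be reproduced with a toy model. The sketch below is not YARN code: strings stand in for NodeId and ContainerStatus, a counter stands in for `eventHandler.handle(...)`, and the `pruneDrainedNodes` switch is a hypothetical mitigation of my own for comparison, not the content of any upstream patch.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.Iterator;
import java.util.List;
import java.util.Map;

// Toy model only: mirrors the two per-node maps and counts the events one
// attempt would create over a series of allocate() heartbeats.
class AckFloodModel {
    private final Map<String, List<String>> justFinished = new HashMap<>();
    private final Map<String, List<String>> sentToAm = new HashMap<>();
    private final boolean pruneDrainedNodes; // hypothetical switch, not a YARN option
    long eventsCreated = 0;

    AckFloodModel(boolean pruneDrainedNodes) {
        this.pruneDrainedNodes = pruneDrainedNodes;
    }

    void containerFinished(String nodeId, String containerId) {
        justFinished.computeIfAbsent(nodeId, k -> new ArrayList<>()).add(containerId);
    }

    // One AM heartbeat: ack what was sent last time, then hand over new completions.
    void allocate() {
        Iterator<Map.Entry<String, List<String>>> sent = sentToAm.entrySet().iterator();
        while (sent.hasNext()) {
            Map.Entry<String, List<String>> entry = sent.next();
            boolean hadContainers = !entry.getValue().isEmpty();
            if (pruneDrainedNodes) {
                sent.remove();                     // forget the node once acked
                if (!hadContainers) {
                    continue;                      // nothing to ack, no event
                }
            } else {
                entry.setValue(new ArrayList<>()); // as in the listing: the key stays
            }
            eventsCreated++;                       // one event per visited node key
        }
        Iterator<Map.Entry<String, List<String>>> fin = justFinished.entrySet().iterator();
        while (fin.hasNext()) {
            Map.Entry<String, List<String>> entry = fin.next();
            String nodeId = entry.getKey();
            List<String> finished = entry.getValue();
            if (pruneDrainedNodes) {
                fin.remove();
            } else {
                entry.setValue(new ArrayList<>());
            }
            sentToAm.computeIfAbsent(nodeId, k -> new ArrayList<>()).addAll(finished);
        }
    }

    static long simulate(boolean prune, int nodes, int heartbeats) {
        AckFloodModel model = new AckFloodModel(prune);
        for (int n = 0; n < nodes; n++) {
            model.containerFinished("node-" + n, "container-" + n);
        }
        for (int h = 0; h < heartbeats; h++) {
            model.allocate();
        }
        return model.eventsCreated;
    }

    public static void main(String[] args) {
        System.out.println("keys kept:   " + simulate(false, 100, 1000));
        System.out.println("keys pruned: " + simulate(true, 100, 1000));
    }
}
```

With 100 nodes that each finish one container and 1000 heartbeats, the model that keeps the keys creates 99900 events, while the pruned variant creates 100. Multiplied across many long-lived AMs, this is the kind of growth that could fill the event-queue.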

Solution

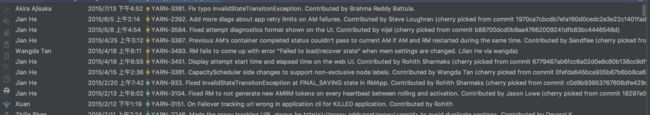

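For a bug this big, and one that had been reported before, the community ought to have a fix. So I pulled the latest community code, went to RMAppAttemptImpl on the master branch, and compared the version history with git.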

fuck!

The most recent change was dated 2015/7/13. Had nobody touched this area in all these years? Or had something gone wrong with my master branch?

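I remembered that Hadoop 3.0 shipped with a lot of new features, so I switched to branch 3.0 and ran the git comparison again. There turned out to be quite a few changes.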

Coming from a Spark background, I really cannot understand how Hadoop manages its branches. (The issue "RM is flooded with RMNodeFinishedContainersPulledByAMEvents in the async dispatcher event queue" has been fixed, yet its status is still Open...)

The comparison shows that upstream fixed the problem described above with two patches, YARN-5262 and YARN-5483.