Cluster environment

| hostname | ip | vip | role |
|---|---|---|---|
| master1 | 192.168.6.101 | 192.168.6.110 | etcd cluster, k8s-master, keepalived |
| master2 | 192.168.6.102 | 192.168.6.110 | etcd cluster, k8s-master, keepalived |
| master3 | 192.168.6.103 | 192.168.6.110 | etcd cluster, k8s-master, keepalived |
| node1 | 192.168.6.104 | | k8s-node |
| node2 | 192.168.6.105 | | k8s-node |

Software versions

OS version: CentOS 7.4.1708

Kernel version: 3.10.0-693.17.1.el7.x86_64

etcd version: 3.2.11

docker version: 1.12.6

kubernetes version: 1.9.2

Preparation

1. Update the package sources

yum -y install epel-release

yum -y update

yum -y install wget net-tools

2. Stop the firewall

systemctl stop firewalld

systemctl disable firewalld

3. Synchronize the system clock

/usr/sbin/ntpdate asia.pool.ntp.org

4. Disable swap
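The fstab change is what keeps swap off after the reboot in step 9, and `sed` only writes its result back to the file when it is given `-i`. As a minimal sketch, assuming GNU sed as shipped with CentOS 7, the hypothetical helper below comments out only uncommented fstab entries whose filesystem type (third field) is `swap`, so running it a second time changes nothing.

```shell
# Hypothetical helper: comment out active swap entries in an fstab-style file.
# Assumes GNU sed; lines that are already comments are left untouched.
disable_swap_in_fstab() {
    fstab="${1:-/etc/fstab}"
    # On non-comment lines whose third field is "swap", prefix the line with '#'
    sed -i -E '/^[[:space:]]*#/!s/^([[:space:]]*[^[:space:]]+[[:space:]]+[^[:space:]]+[[:space:]]+swap([[:space:]].*)?)$/#\1/' "$fstab"
}

# On a real node (as root):
#   swapoff -a && disable_swap_in_fstab /etc/fstab
```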

swapoff -a

sed -i 's/.*swap.*/#&/' /etc/fstab

PS: If you skip this step, kubeadm init fails with the following error:

kubelet: error: failed to run Kubelet: Running with swap on is not supported, please disable swap! or set --fail-swap-on

5. Let iptables process bridged traffic
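The sysctl drop-in and a check of the live values can be wrapped in two small functions. This is a sketch under assumptions: the `/proc/sys/net/bridge/*` entries only exist once the `br_netfilter` module is loaded (as on CentOS 7), and the function names plus the `SYSCTL_DIR`/`PROC_ROOT` overrides are hypothetical, there only so the logic can be tried away from a real node.

```shell
# Hypothetical helpers for the bridge-netfilter sysctls that kubeadm checks.
# SYSCTL_DIR and PROC_ROOT default to the real locations; override them to test.
write_k8s_sysctl() {
    dir="${SYSCTL_DIR:-/etc/sysctl.d}"
    cat > "$dir/k8s.conf" <<EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
}

# Return 0 only if both bridge-nf-call sysctls currently read 1.
bridge_sysctls_ok() {
    root="${PROC_ROOT:-/proc}"
    for key in bridge-nf-call-iptables bridge-nf-call-ip6tables; do
        f="$root/sys/net/bridge/$key"
        [ -r "$f" ] || return 1            # entry missing: br_netfilter not loaded
        [ "$(cat "$f")" = "1" ] || return 1
    done
    return 0
}

# On a real node (as root):
#   modprobe br_netfilter
#   write_k8s_sysctl && sysctl -p /etc/sysctl.d/k8s.conf
#   bridge_sysctls_ok && echo "bridge sysctls OK"
```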

cat <<EOF > /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

modprobe br_netfilter

sysctl -p /etc/sysctl.d/k8s.conf

PS: If you skip this step, kubeadm init fails with the following error:

[ERROR FileContent--proc-sys-net-bridge-bridge-nf-call-iptables]: /proc/sys/net/bridge/bridge-nf-call-iptables

6. Edit the hosts file
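Re-running the `cat >> /etc/hosts` heredoc in this step appends a second copy of every entry. A sketch of an idempotent alternative, using the addresses from the cluster table; the function name and the `HOSTS_FILE` override are hypothetical.

```shell
# Hypothetical helper: append each "IP hostname" entry only if it is missing.
add_cluster_hosts() {
    hosts_file="${HOSTS_FILE:-/etc/hosts}"
    while read -r ip name; do
        [ -n "$ip" ] || continue
        # -x: whole-line match, -F: treat the entry as a fixed string
        grep -qxF "$ip $name" "$hosts_file" || echo "$ip $name" >> "$hosts_file"
    done <<EOF
192.168.6.101 master1
192.168.6.102 master2
192.168.6.103 master3
192.168.6.104 node1
192.168.6.105 node2
EOF
}
```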

cat >> /etc/hosts << EOF

192.168.6.101 master1

192.168.6.102 master2

192.168.6.103 master3

192.168.6.104 node1

192.168.6.105 node2

EOF

7. Set up passwordless SSH login
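Step 7 can also be scripted as one loop. A sketch, assuming every node accepts `root` logins and resolves through the hostnames from step 6; `-N ""` creates a key without a passphrase, which is convenient in a lab but weaker than protecting the key and using `ssh-agent`. The function name and the `SSH_COPY_ID`/`SSH_KEY` overrides are hypothetical; setting `SSH_COPY_ID=echo` turns the loop into a dry run.

```shell
# Hypothetical helper: create a key once, then push it to every cluster host.
# Set SSH_COPY_ID=echo to print the commands instead of running them.
distribute_ssh_key() {
    copy="${SSH_COPY_ID:-ssh-copy-id}"
    key="${SSH_KEY:-$HOME/.ssh/id_rsa}"
    # Only generate a key if none exists yet (non-interactive, empty passphrase)
    [ -f "$key" ] || ssh-keygen -t rsa -N "" -f "$key"
    for host in master1 master2 master3 node1 node2; do
        $copy -i "$key.pub" "root@$host" || return 1
    done
}
```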

ssh-keygen -t rsa

ssh-copy-id root@192.168.6.101

ssh-copy-id root@192.168.6.102

ssh-copy-id root@192.168.6.103

ssh-copy-id root@192.168.6.104

ssh-copy-id root@192.168.6.105

8. Disable SELinux
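On CentOS 7, `/etc/sysconfig/selinux` is a symlink to `/etc/selinux/config`, and a plain `sed -i` on the symlink replaces the link with an edited regular file, leaving the real configuration unchanged. A sketch of a helper that edits through the link with GNU sed's `--follow-symlinks`; the function name is hypothetical, and `setenforce 0` (shown in the comment) only switches to permissive mode until the reboot in step 9 applies `disabled`.

```shell
# Hypothetical helper: set SELINUX=disabled in the config file or in a symlink to it.
# --follow-symlinks (GNU sed) edits the link target instead of replacing the link.
disable_selinux_config() {
    conf="${1:-/etc/selinux/config}"
    sed -i --follow-symlinks 's/^SELINUX=.*/SELINUX=disabled/' "$conf"
}

# On a real node (as root):
#   setenforce 0     # permissive right away; fails harmlessly if already disabled
#   disable_selinux_config /etc/sysconfig/selinux
```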

sed -i '/^SELINUX=/s/SELINUX=.*/SELINUX=disabled/g' /etc/selinux/config

9. Reboot the servers

reboot

Installing the etcd cluster

1. Download cfssl, cfssljson and cfssl-certinfo

wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64

chmod +x cfssl_linux-amd64

mv cfssl_linux-amd64 /usr/local/bin/cfssl

wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64

chmod +x cfssljson_linux-amd64

mv cfssljson_linux-amd64 /usr/local/bin/cfssljson

wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64

chmod +x cfssl-certinfo_linux-amd64

mv cfssl-certinfo_linux-amd64 /usr/local/bin/cfssl-certinfo

2. Create the files needed to generate the keys
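The contents of `ca-csr.json`, `ca-config.json` and `etcd-csr.json` are not preserved in this copy of the article. As a sketch of what such cfssl inputs commonly look like, assuming RSA 2048 keys, a 10-year (`87600h`) expiry and placeholder subject fields (the `C`, `ST`, `L`, `O`, `OU` values are hypothetical): the signing profile is named `kubernetes` because the `cfssl gencert ... -profile=kubernetes` command in step 3 refers to it, and the etcd certificate's `hosts` list covers `127.0.0.1` and the three master IPs so one certificate serves client and peer TLS on every member.

```shell
# Writes ca-csr.json, ca-config.json and etcd-csr.json into the current directory.
# Subject fields and expiry values are placeholders; adjust them to your environment.
write_cfssl_configs() {
    cat > ca-csr.json <<'EOF'
{
  "CN": "kubernetes",
  "key": { "algo": "rsa", "size": 2048 },
  "names": [{ "C": "CN", "ST": "BeiJing", "L": "BeiJing", "O": "k8s", "OU": "System" }]
}
EOF
    cat > ca-config.json <<'EOF'
{
  "signing": {
    "default": { "expiry": "87600h" },
    "profiles": {
      "kubernetes": {
        "usages": ["signing", "key encipherment", "server auth", "client auth"],
        "expiry": "87600h"
      }
    }
  }
}
EOF
    cat > etcd-csr.json <<'EOF'
{
  "CN": "etcd",
  "hosts": ["127.0.0.1", "192.168.6.101", "192.168.6.102", "192.168.6.103"],
  "key": { "algo": "rsa", "size": 2048 },
  "names": [{ "C": "CN", "ST": "BeiJing", "L": "BeiJing", "O": "k8s", "OU": "System" }]
}
EOF
}
```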

cat > ca-csr.json <<EOF … EOF

cat > ca-config.json <<EOF … EOF

cat > etcd-csr.json <<EOF … EOF

3. Generate the keys

cfssl gencert -initca ca-csr.json | cfssljson -bare ca

cfssl gencert -ca=ca.pem \

-ca-key=ca-key.pem \

-config=ca-config.json \

-profile=kubernetes etcd-csr.json | cfssljson -bare etcd

mkdir -p /etc/etcd/ssl

cp etcd.pem etcd-key.pem ca.pem /etc/etcd/ssl/

4. Download etcd

wget https://github.com/coreos/etcd/releases/download/v3.2.11/etcd-v3.2.11-linux-amd64.tar.gz

tar -xvf etcd-v3.2.11-linux-amd64.tar.gz

cp etcd-v3.2.11-linux-amd64/etcd* /usr/local/bin/

5. Create the etcd systemd unit file
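The unit file body is also missing from this copy. A sketch of a generator, assuming etcd 3.2 command-line flags, the certificate paths from step 3, `/var/lib/etcd` as the data directory and `master1` to `master3` as member names; it fills in `--name` and the four URL flags that step 6 asks you to edit by hand, so each master can render its own file. The function name and the `etcd-cluster-0` cluster token are hypothetical.

```shell
# Hypothetical generator: print an etcd.service for one member.
# Usage: etcd_unit <member-name> <member-ip> > /etc/systemd/system/etcd.service
etcd_unit() {
    name="$1"; ip="$2"
    cluster="master1=https://192.168.6.101:2380,master2=https://192.168.6.102:2380,master3=https://192.168.6.103:2380"
    # "\\" in this unquoted heredoc emits a single "\" for systemd line continuation
    cat <<EOF
[Unit]
Description=etcd server
After=network.target

[Service]
Type=notify
WorkingDirectory=/var/lib/etcd/
ExecStart=/usr/local/bin/etcd \\
  --name=${name} \\
  --cert-file=/etc/etcd/ssl/etcd.pem \\
  --key-file=/etc/etcd/ssl/etcd-key.pem \\
  --peer-cert-file=/etc/etcd/ssl/etcd.pem \\
  --peer-key-file=/etc/etcd/ssl/etcd-key.pem \\
  --trusted-ca-file=/etc/etcd/ssl/ca.pem \\
  --peer-trusted-ca-file=/etc/etcd/ssl/ca.pem \\
  --client-cert-auth \\
  --peer-client-cert-auth \\
  --initial-advertise-peer-urls=https://${ip}:2380 \\
  --listen-peer-urls=https://${ip}:2380 \\
  --listen-client-urls=https://${ip}:2379,https://127.0.0.1:2379 \\
  --advertise-client-urls=https://${ip}:2379 \\
  --initial-cluster-token=etcd-cluster-0 \\
  --initial-cluster=${cluster} \\
  --initial-cluster-state=new \\
  --data-dir=/var/lib/etcd
Restart=on-failure
RestartSec=5
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF
}
```

For example, on master2: `etcd_unit master2 192.168.6.102 > /etc/systemd/system/etcd.service`.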

cat > /etc/systemd/system/etcd.service <<EOF … EOF

6. Sync the files to the other etcd nodes

scp -r /etc/etcd/ master2:/etc/

scp -r /etc/etcd/ master3:/etc/

scp /usr/local/bin/etcd* master2:/usr/local/bin/

scp /usr/local/bin/etcd* master3:/usr/local/bin/

scp /etc/systemd/system/etcd.service master2:/etc/systemd/system/

scp /etc/systemd/system/etcd.service master3:/etc/systemd/system/

PS: On master2 and master3, change the following options in etcd.service to match the local node:

--name

--initial-advertise-peer-urls

--listen-peer-urls

--listen-client-urls

--advertise-client-urls

7. Start the etcd cluster (on all three masters)

mkdir -pv /var/lib/etcd

systemctl daemon-reload

systemctl enable etcd

systemctl start etcd

8. Verify the etcd cluster status
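The `cluster-health` check in this step uses the v2 `etcdctl` syntax. With `ETCDCTL_API=3`, as the note in this step recommends, the TLS flags become `--cacert`, `--cert` and `--key` and the subcommand is `endpoint health`. A sketch that checks all three members in one call; the function names are hypothetical.

```shell
# Build "https://IP:2379,..." for the given member IPs.
etcd_endpoints() {
    list=""
    for ip in "$@"; do
        list="${list:+$list,}https://$ip:2379"
    done
    printf '%s\n' "$list"
}

# Query every member's health with the v3 API (needs etcdctl and the certs from step 3).
etcd_health() {
    ETCDCTL_API=3 etcdctl \
        --endpoints="$(etcd_endpoints 192.168.6.101 192.168.6.102 192.168.6.103)" \
        --cacert=/etc/etcd/ssl/ca.pem \
        --cert=/etc/etcd/ssl/etcd.pem \
        --key=/etc/etcd/ssl/etcd-key.pem \
        endpoint health
}
```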

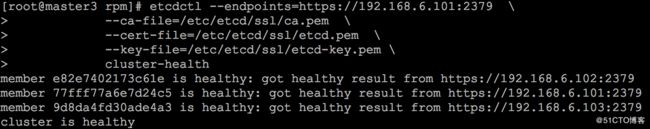

etcdctl --endpoints=https://192.168.6.101:2379 \

--ca-file=/etc/etcd/ssl/ca.pem \

--cert-file=/etc/etcd/ssl/etcd.pem \

--key-file=/etc/etcd/ssl/etcd-key.pem \

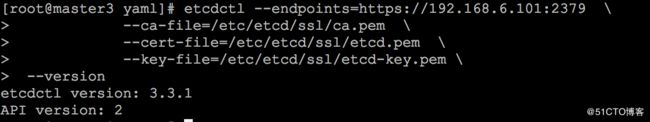

cluster-health说明:目前ETCDCTL_API版本号为2,需要更改版本号3,否则无法操作集群信息

To switch the API version, run:

export ETCDCTL_API=3

Installing keepalived

1. Install keepalived on each of the three master servers

yum -y install keepalived

2. Generate the configuration file and sync it to the other masters, adjusting the commented fields on each node
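Instead of copying the file and hand-editing the commented fields, each master can render its own copy. A sketch, assuming every master uses the NIC `ens160` and hypothetical priorities such as 120/110/100; it fills in `mcast_src_ip` and `priority` and builds `unicast_peer` from the other two masters automatically. It uses `state BACKUP` on every node because keepalived's `nopreempt` only takes effect when the initial state is `BACKUP`; the node with the highest priority still wins the first election. The function name is hypothetical.

```shell
# Hypothetical generator: print keepalived.conf for one master.
# Usage: keepalived_conf <local-ip> <priority> [interface] > /etc/keepalived/keepalived.conf
keepalived_conf() {
    local_ip="$1"; prio="$2"; iface="${3:-ens160}"
    peers=""
    for ip in 192.168.6.101 192.168.6.102 192.168.6.103; do
        # Every master except this one becomes a unicast peer
        [ "$ip" = "$local_ip" ] || peers="${peers}        ${ip}
"
    done
    cat <<EOF
global_defs {
    router_id LVS_k8s
}
vrrp_script CheckK8sMaster {
    script "curl -k https://192.168.6.110:6443"
    interval 3
    timeout 9
    fall 2
    rise 2
}
vrrp_instance VI_1 {
    state BACKUP
    interface ${iface}
    virtual_router_id 61
    priority ${prio}
    advert_int 1
    mcast_src_ip ${local_ip}
    nopreempt
    authentication {
        auth_type PASS
        auth_pass sqP05dQgMSlzrxHj
    }
    unicast_peer {
${peers}    }
    virtual_ipaddress {
        192.168.6.110/24
    }
    track_script {
        CheckK8sMaster
    }
}
EOF
}
```

For example, on master1: `keepalived_conf 192.168.6.101 120 > /etc/keepalived/keepalived.conf`.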

cat > /etc/keepalived/keepalived.conf << EOF

global_defs {

router_id LVS_k8s

}

vrrp_script CheckK8sMaster {

script "curl -k https://192.168.6.110:6443" # vip

interval 3

timeout 9

fall 2

rise 2

}

vrrp_instance VI_1 {

state BACKUP # nopreempt only takes effect when the initial state is BACKUP

interface ens160 # local NIC name

virtual_router_id 61

priority 120 # priority, must be unique on each node

advert_int 1

mcast_src_ip 192.168.6.101 # local IP

nopreempt

authentication {

auth_type PASS

auth_pass sqP05dQgMSlzrxHj

}

unicast_peer {

#192.168.6.101 # comment out the local IP

192.168.6.102

192.168.6.103

}

virtual_ipaddress {

192.168.6.110/24 # VIP

}

track_script {

CheckK8sMaster

}

}

EOF

3. Start keepalived on each of the three masters

systemctl enable keepalived

systemctl start keepalived

systemctl status keepalived

Installing docker

1. Install docker on all five servers

yum -y install docker

systemctl enable docker

systemctl start docker

Installing the kubernetes cluster

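1. Download the docker images

Link: https://pan.baidu.com/s/1rahyOrU Password: kw12

2. Download the kubernetes rpm packages

Link: https://pan.baidu.com/s/1dgVjWU Password: 1ejn

3. Download the yaml files

Link: https://pan.baidu.com/s/1gfTnLJ1 Password: 9sbl

4. Move the downloaded files to /root/k8s and sync them to the other 4 servers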

scp -r /root/k8s master2:

scp -r /root/k8s master3:

scp -r /root/k8s node1:

scp -r /root/k8s node2:

5. Run the following commands on all 5 servers

cd /root/k8s/rpm

yum -y install *.rpm

cd /root/k8s/docker-image

for i in *; do docker load < "$i"; done

systemctl daemon-reload

systemctl enable kubelet

systemctl start kubelet

Note: the kubelet and docker cgroup drivers must match:
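The comparison can be automated. A sketch that pulls the driver name out of the two files grepped below and reports a mismatch; it assumes the drivers are set exactly as in those files (`--cgroup-driver=` for the kubelet, `native.cgroupdriver=` for docker), and the function name is hypothetical. `docker info` also reports the driver docker is actually using on its `Cgroup Driver:` line.

```shell
# Hypothetical check: compare kubelet's --cgroup-driver with docker's native.cgroupdriver.
cgroup_drivers_match() {
    kubelet_conf="${1:-/etc/systemd/system/kubelet.service.d/10-kubeadm.conf}"
    docker_unit="${2:-/usr/lib/systemd/system/docker.service}"
    # Take the first value found in each file
    kubelet_drv=$(sed -n 's/.*--cgroup-driver=\([a-z]*\).*/\1/p' "$kubelet_conf" | head -n 1)
    docker_drv=$(sed -n 's/.*native\.cgroupdriver=\([a-z]*\).*/\1/p' "$docker_unit" | head -n 1)
    if [ -n "$kubelet_drv" ] && [ "$kubelet_drv" = "$docker_drv" ]; then
        echo "cgroup driver OK: $kubelet_drv"
    else
        echo "cgroup driver mismatch: kubelet='$kubelet_drv' docker='$docker_drv'" >&2
        return 1
    fi
}
```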

$ grep driver /etc/systemd/system/kubelet.service.d/10-kubeadm.conf

Environment="KUBELET_CGROUP_ARGS=--cgroup-driver=systemd"

$ grep driver /usr/lib/systemd/system/docker.service

--exec-opt native.cgroupdriver=systemd \

6. Initialize kubernetes
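The `kubeadm-config.yaml` comes from the downloaded archive and its contents are not shown here. Purely as a sketch, a kubeadm 1.9 `v1alpha1` `MasterConfiguration` for this layout would point kubeadm at the external etcd cluster with the certificates from the etcd section, add the VIP and master addresses to the API server certificate SANs (the join command uses `192.168.6.110:6443`), and set `podSubnet`. The `10.244.0.0/16` value (flannel's usual default) and passing each master's own IP as `advertiseAddress` are assumptions, and the function name is hypothetical.

```shell
# Hypothetical generator for a kubeadm v1alpha1 MasterConfiguration (kubeadm 1.9).
# Usage: kubeadm_config <this-master-ip> > kubeadm-config.yaml
kubeadm_config() {
    cat <<EOF
apiVersion: kubeadm.k8s.io/v1alpha1
kind: MasterConfiguration
kubernetesVersion: v1.9.2
api:
  advertiseAddress: ${1}
etcd:
  endpoints:
  - https://192.168.6.101:2379
  - https://192.168.6.102:2379
  - https://192.168.6.103:2379
  caFile: /etc/etcd/ssl/ca.pem
  certFile: /etc/etcd/ssl/etcd.pem
  keyFile: /etc/etcd/ssl/etcd-key.pem
networking:
  podSubnet: 10.244.0.0/16
apiServerCertSANs:
- master1
- master2
- master3
- 192.168.6.101
- 192.168.6.102
- 192.168.6.103
- 192.168.6.110
EOF
}
```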

cd /root/k8s/yaml

kubeadm init --config kubeadm-config.yaml --ignore-preflight-errors=swap

PS: Save the line that starts with kubeadm join --token from the output; you need it to join the worker nodes.

If initialization fails or you want to reset the cluster, use the following commands:

$ kubeadm reset

$ ETCDCTL_API=3 etcdctl \

--endpoints="https://192.168.6.101:2379,https://192.168.6.102:2379,https://192.168.6.103:2379" \

--cacert=/etc/etcd/ssl/ca.pem \

--cert=/etc/etcd/ssl/etcd.pem \

--key=/etc/etcd/ssl/etcd-key.pem \

del /registry --prefix

7. Give kubectl administrator access

echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile

source ~/.bash_profile

8. Install the flannel network add-on

The Network value in kube-flannel.yaml must be identical to the podSubnet value in kubeadm-config.yaml.
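A quick way to confirm that the two values agree before creating the flannel resources. A sketch, assuming the usual file layouts (a `"Network": "<cidr>"` entry in the `net-conf.json` section of kube-flannel.yaml and a `podSubnet: <cidr>` line in kubeadm-config.yaml); the function name is hypothetical.

```shell
# Hypothetical check: flannel's "Network" must equal kubeadm's podSubnet.
pod_cidrs_match() {
    flannel_yaml="${1:-kube-flannel.yaml}"
    kubeadm_yaml="${2:-kubeadm-config.yaml}"
    net=$(sed -n 's/.*"Network":[[:space:]]*"\([^"]*\)".*/\1/p' "$flannel_yaml" | head -n 1)
    # podSubnet may or may not be quoted
    sub=$(sed -n 's/^[[:space:]]*podSubnet:[[:space:]]*"\{0,1\}\([^"[:space:]]*\)"\{0,1\}.*/\1/p' "$kubeadm_yaml" | head -n 1)
    if [ -n "$net" ] && [ "$net" = "$sub" ]; then
        echo "pod CIDR OK: $net"
    else
        echo "pod CIDR mismatch: flannel='$net' kubeadm='$sub'" >&2
        return 1
    fi
}
```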

kubectl create -f kube-flannel.yaml

9. Deploy the other master nodes

Copy the kubernetes pki directory to the other two master nodes:

scp -r /etc/kubernetes/pki master2:/etc/kubernetes/

scp -r /etc/kubernetes/pki master3:/etc/kubernetes/

Then run the following commands on master2 and master3:

cd /root/k8s/yaml

kubeadm init --config kubeadm-config.yaml --ignore-preflight-errors=swap

echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile

source ~/.bash_profile

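PS: To make testing easier, you can also remove the taint from the master nodes so that pods can be scheduled on them. In this test environment I added two separate worker nodes instead.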

kubectl taint nodes --all node-role.kubernetes.io/master-

To add the taint back:

kubectl taint nodes master1 master2 master3 node-role.kubernetes.io/master=:NoSchedule

10. Add the kubernetes worker nodes by running the following command on node1 and node2

kubeadm join --token be0204.4f256def3933a7d6 192.168.6.110:6443 --discovery-token-ca-cert-hash sha256:9b1677f2a9121e89341daa5ce0dad0da2214cf1210857e1369033c43ad60b559上述命令,在kubeadm init初始化成功后会提示,如果忘记,可以使用以下命令获取

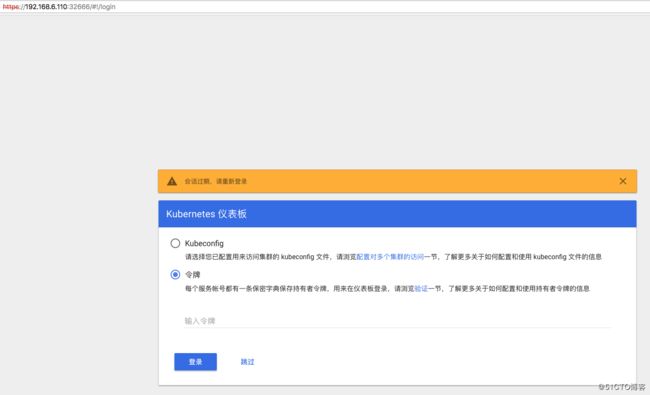

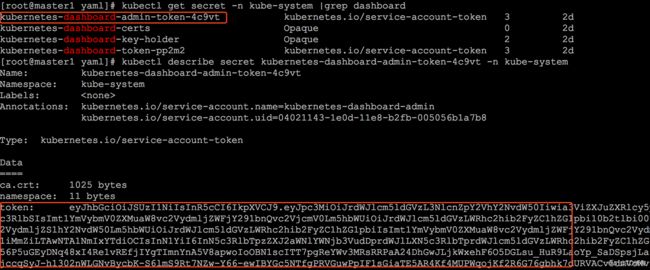

kubeadm token create --print-join-command安装dashboard

1. Install the dashboard, heapster and related components

cd /root/k8s/yaml

kubectl create -f dashboard.yaml \

-f dashboard-rbac-admin.yml \

-f admin-user.yaml \

-f grafana.yaml \

-f heapster-rbac.yaml \

-f heapster.yaml \

-f influxdb.yaml

PS: To make logging in easier, change the dashboard Service type to NodePort so its port is exposed on the hosts:

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  type: NodePort
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 32666
  selector:
    k8s-app: kubernetes-dashboard