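Introduction to MongoDB sharding

A sharded cluster is a system built from replica sets.

Sharding splits a database by distributing a large collection across several servers. For example, 100 GB of data split into 10 parts and stored on different servers leaves each server holding only 10 GB.

MongoDB uses a routing process called mongos to store and access sharded data, which makes mongos the core of the sharding architecture. Front-end programs do not know whether the data is sharded; clients simply send their reads and writes to mongos.

Although sharding spreads data over many servers, every node still needs a backup member so that the data stays highly available.

When the system needs more space or resources, sharding lets us scale on demand: just add a machine running MongoDB to the sharded cluster.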

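mongos sharding architecture

MongoDB sharding concepts

mongos: the entry point for requests to the cluster. All requests are coordinated through mongos, so the application needs no routing logic of its own; mongos is itself the request dispatcher and forwards each request to the appropriate shard server. Production deployments usually run several mongos instances as entry points, so that losing one of them does not make the whole MongoDB service unreachable.

config server: stores the cluster metadata (routing and shard configuration). mongos does not persist shard and routing information on disk; it only caches it in memory, while the config servers actually store it. mongos loads the configuration from the config servers when it first starts or restarts, and when the configuration changes later, all mongos instances update their state so that they keep routing accurately. Production deployments usually run several config servers, because they hold the routing metadata for the shards and an unexpected outage must not lose it.

shard: a MongoDB instance that stores part of a collection's data. Each shard is either a standalone mongod or a replica set; in production, every shard should be a replica set.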
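To make the routing role of mongos more concrete, here is a minimal sketch of range-based routing on a shard key. The chunk boundaries and the `routeToShard` helper are invented for illustration; they are not MongoDB's internal API, only a model of the lookup that the cached routing metadata makes possible.

```javascript
// Hypothetical chunk table: each chunk covers the half-open range [min, max) of the shard key.
// In a real cluster this metadata is stored on the config servers and cached by mongos.
const chunks = [
  { min: -Infinity, max: 1000, shard: "shard1" },
  { min: 1000, max: 5000, shard: "shard2" },
  { min: 5000, max: Infinity, shard: "shard3" },
];

// Return the name of the shard whose chunk range contains the given shard-key value.
function routeToShard(key, chunkTable) {
  const chunk = chunkTable.find((c) => key >= c.min && key < c.max);
  if (!chunk) {
    throw new Error("no chunk covers key " + key);
  }
  return chunk.shard;
}

console.log(routeToShard(42, chunks));   // shard1
console.log(routeToShard(1000, chunks)); // shard2
console.log(routeToShard(9999, chunks)); // shard3
```

The client never sees this table; it only talks to mongos, which is why the application needs no routing code of its own.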

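Building a MongoDB sharded cluster

Hosts used for the cluster

Test plan. This layout only serves the experiment: each of the three servers hosts one member of every replica set and therefore plays three roles at once, so a single server looks as if it carried a whole replica set. In a real deployment, a replica set is spread across several dedicated servers. Keep this difference in mind.

Three servers: 1.115, 1.223 and 1.234 (that is, 192.168.1.115, 192.168.1.223 and 192.168.1.234)

The commands below create the three replica sets. Pay attention to the replica set name and port in each one!

1.234 hosts: mongos, config server, primary of replica set 1, arbiter of replica set 2, secondary of replica set 3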

config={_id:"shard1",members:[{_id:0,host:"192.168.1.234:27001"},{_id:1,host:"192.168.1.115:27001"},{_id:2,host:"192.168.1.223:27001",arbiterOnly:true}]}

rs.initiate(config)

Set the priorities; the arbiter gets the lowest priority

cfg = rs.conf()

cfg.members[0].priority = 3

cfg.members[1].priority = 2

cfg.members[2].priority = 1 // arbiter

shard1:PRIMARY> rs.reconfig(cfg)

1.115 hosts: mongos, config server, secondary of replica set 1, primary of replica set 2, arbiter of replica set 3

config={_id:"shard2",members:[{_id:0,host:"192.168.1.115:27002"},{_id:1,host:"192.168.1.234:27002",arbiterOnly:true},{_id:2,host:"192.168.1.223:27002"}]}

rs.initiate(config)

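Set the priorities; the arbiter gets the lowest priority

cfg = rs.conf()

cfg.members[0].priority = 3 // primary

cfg.members[1].priority = 1 // arbiter

cfg.members[2].priority = 2

shard2:PRIMARY> rs.reconfig(cfg)

1.223 hosts: mongos, config server, arbiter of replica set 1, secondary of replica set 2, primary of replica set 3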

config={_id:"shard3",members:[{_id:0,host:"192.168.1.223:27003"},{_id:1,host:"192.168.1.115:27003",arbiterOnly:true},{_id:2,host:"192.168.1.234:27003"}]}

rs.initiate(config)

Set the priorities; the arbiter gets the lowest priority

cfg = rs.conf()

cfg.members[0].priority = 3 // primary

cfg.members[1].priority = 1 // arbiter

cfg.members[2].priority = 2

shard3:PRIMARY> rs.reconfig(cfg)

Ports: mongos listens on 20000, the config servers on 21000, replica set 1 on 27001, replica set 2 on 27002 and replica set 3 on 27003
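Because the three configuration documents differ only in name, port and role assignment, they can be derived from one description of the layout. The following is a sketch under that assumption; the `layout` table and the `buildReplSetConfig` helper are hypothetical names, and the arbiter is simply placed last in every set, which differs from the member order used above for shard2 and shard3.

```javascript
// Hosts and roles follow the server plan above; the table structure itself is illustrative.
const layout = {
  shard1: { port: 27001, primary: "192.168.1.234", secondary: "192.168.1.115", arbiter: "192.168.1.223" },
  shard2: { port: 27002, primary: "192.168.1.115", secondary: "192.168.1.223", arbiter: "192.168.1.234" },
  shard3: { port: 27003, primary: "192.168.1.223", secondary: "192.168.1.234", arbiter: "192.168.1.115" },
};

// Build the document that would be passed to rs.initiate() for one replica set.
// Priorities are set directly in the document instead of in a later rs.reconfig().
function buildReplSetConfig(name, plan) {
  return {
    _id: name,
    members: [
      { _id: 0, host: plan.primary + ":" + plan.port, priority: 3 },
      { _id: 1, host: plan.secondary + ":" + plan.port, priority: 2 },
      { _id: 2, host: plan.arbiter + ":" + plan.port, arbiterOnly: true },
    ],
  };
}

// Print one JSON document per replica set.
for (const name of Object.keys(layout)) {
  console.log(JSON.stringify(buildReplSetConfig(name, layout[name])));
}
```

Each printed document could be pasted into the mongo shell on the intended primary of that set and handed to `rs.initiate()`.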

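On all three servers, disable SELinux and firewalld, or add rules that open the ports listed above

Create the storage directories for mongos, the config server and the replica sets on all three servers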

[root@localhost ~]# mkdir -p /data/mongodb/mongos/log

[root@localhost ~]# mkdir -p /data/mongodb/config/{data,log}

[root@localhost ~]# mkdir -p /data/mongodb/shard1/{data,log}

[root@localhost ~]# mkdir -p /data/mongodb/shard2/{data,log}

[root@localhost ~]# mkdir -p /data/mongodb/shard3/{data,log}

Create the config server configuration file on each machine. Apart from the listening IP, the files are identical

[root@localhost ~]# mkdir /etc/mongod

[root@localhost ~]# vim /etc/mongod/config.conf

pidfilepath = /var/run/mongodb/configsrv.pid

dbpath = /data/mongodb/config/data

logpath = /data/mongodb/config/log/configsrv.log

logappend = true

bind_ip = 192.168.1.115

port = 21000

fork = true

configsvr = true #declare this is a config db of a cluster

replSet=configs #replica set name

maxConns=20000 #maximum number of connections

Start the config server and check the port it listens on

[root@localhost ~]# mongod -f /etc/mongod/config.conf

2018-11-21T04:04:46.882+0800 I CONTROL [main] Automatically disabling TLS 1.0, to force-enable TLS 1.0 specify --sslDisabledProtocols 'none'

about to fork child process, waiting until server is ready for connections.

forked process: 3474

child process started successfully, parent exiting

[root@localhost ~]# netstat -ntlp

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 1750/sshd

tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN 2757/master

tcp 0 0 192.168.1.115:21000 0.0.0.0:* LISTEN 3474/mongod

tcp 0 0 192.168.1.115:27017 0.0.0.0:* LISTEN 2797/mongod

tcp 0 0 127.0.0.1:27017 0.0.0.0:* LISTEN 2797/mongod

Log in to the config server and prepare the replica set configuration

[root@localhost ~]# mongo --host 192.168.1.115 --port 21000

MongoDB shell version v4.0.4

connecting to: mongodb://192.168.1.115:21000/

Implicit session: session { "id" : UUID("cb007228-2291-4164-9528-c68884a00cee") }

MongoDB server version: 4.0.4

Server has startup warnings:

> config = {_id:"configs",members:[{_id:0,host:"192.168.1.234:21000"},{_id:1,host:"192.168.1.223:21000"},{_id:2,host:"192.168.1.115:21000"}]}

{

"_id" : "configs",

"members" : [

{

"_id" : 0,

"host" : "192.168.1.234:21000"

},

{

"_id" : 1,

"host" : "192.168.1.223:21000"

},

{

"_id" : 2,

"host" : "192.168.1.115:21000"

}

]

}

Initialize the config server replica set

> rs.initiate(config)

{

"ok" : 1,

"operationTime" : Timestamp(1542786565, 1),

"$gleStats" : {

"lastOpTime" : Timestamp(1542786565, 1),

"electionId" : ObjectId("000000000000000000000000")

},

"lastCommittedOpTime" : Timestamp(0, 0),

"$clusterTime" : {

"clusterTime" : Timestamp(1542786565, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

Create three separate replica sets for the shards

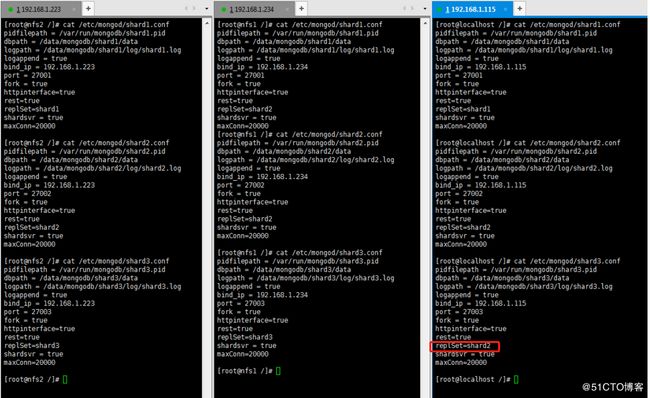

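Add the replica set configuration files on all three servers. Every server runs one member of each replica set, so each file needs its own listening port and the machine's own IP

Replica set 1 uses port 27001, replica set 2 port 27002 and replica set 3 port 27003

This is the configuration on the shard1 primary. The other files are set up the same way; they differ in the shard name, bind_ip and the listening port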

[root@nfs1 ~]# cat /etc/mongod/shard1.conf

pidfilepath = /var/run/mongodb/shard1.pid

dbpath = /data/mongodb/shard1/data

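logpath = /data/mongodb/shard1/log/shard1.log # data directory, log and config file are named after the replica set, e.g. shard1, shard2

logappend = true

bind_ip = 192.168.1.234 # differs per machine: the machine's own IP

port = 27001 # differs per replica set: 27001 for shard1, 27002 for shard2, 27003 for shard3

fork = true

httpinterface=true # enable the web monitoring interface

rest=true

replSet=shard1

shardsvr = true

maxConn=20000

On the other machines, sed can batch-replace the names in a copied configuration file. The port still has to be changed separately, because the substitution only touches the shard name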

[root@nfs2 log]# sed -i 's/shard1/shard2/g' /etc/mongod/shard2.conf
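As an alternative to copying and patching files by hand, the whole file can be rendered from the shard number, which keeps name and port in step. This is only a sketch: `renderShardConf` is a hypothetical helper, the port rule `27000 + n` is inferred from the port plan above, and the output leaves out httpinterface, rest and maxConn, which the article reports as not recognized at startup.

```javascript
// Render an INI-style mongod configuration for shard number n on the machine with IP bindIp.
// Keys mirror the shard1.conf listing above.
function renderShardConf(n, bindIp) {
  const name = "shard" + n;
  return [
    "pidfilepath = /var/run/mongodb/" + name + ".pid",
    "dbpath = /data/mongodb/" + name + "/data",
    "logpath = /data/mongodb/" + name + "/log/" + name + ".log",
    "logappend = true",
    "bind_ip = " + bindIp,
    "port = " + (27000 + n), // 27001, 27002, 27003
    "fork = true",
    "replSet = " + name,
    "shardsvr = true",
  ].join("\n");
}

// Example: the shard2 file for the machine 192.168.1.223.
console.log(renderShardConf(2, "192.168.1.223"));
```

The printed text would be saved as /etc/mongod/shard2.conf on that machine; running it for n = 1, 2, 3 per host gives all nine files.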

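Start the replica set members and check that the listening ports are correct (only the output captured on one host, nfs2, is shown; the other hosts look the same). The captured command starts the instance defined in config.conf; each shard instance is started the same way from its own file, for example mongod -f /etc/mongod/shard2.conf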

[root@nfs2 log]# mongod -f /etc/mongod/config.conf

2018-11-21T15:36:46.642+0800 I CONTROL [main] Automatically disabling TLS 1.0, to force-enable TLS 1.0 specify --sslDisabledProtocols 'none'

about to fork child process, waiting until server is ready for connections.

forked process: 13109

child process started successfully, parent exiting

[root@nfs2 log]# netstat -ntlp

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN 1641/master

tcp 0 0 0.0.0.0:443 0.0.0.0:* LISTEN 9267/nginx: master

tcp 0 0 192.168.1.223:21000 0.0.0.0:* LISTEN 13109/mongod

tcp 0 0 192.168.1.223:27017 0.0.0.0:* LISTEN 13040/mongod

tcp 0 0 127.0.0.1:27017 0.0.0.0:* LISTEN 13040/mongod

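Finish the replica set configuration on every machine. All machines must listen on the same set of replica set ports: if machine 1 listens on 27001 and 27002, machine 2 must listen on 27001 and 27002 as well. Port 27001 belongs to replica set 1 and 27002 to replica set 2

The configuration files are shown below via cat (see the replica set layout earlier in the article). Note: in the original capture, the shard3 configuration on server 1.115 contained a careless mistake. Check your own configuration files carefully to avoid problems that are hard to track down; when something does go wrong, the log written by mongod is the place to look

Set bind_ip in each file to the IP of the machine's network interface

(About these configuration options:

httpinterface=true

rest=true

maxConn=20000

mongod did not recognize these parameters at startup, so I left them out when actually starting the instances. The HTTP interface and REST API options were removed in MongoDB 3.6, and maxConn is a misspelling of maxConns):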

[root@localhost /]# cat /etc/mongod/shard1.conf

pidfilepath = /var/run/mongodb/shard1.pid

dbpath = /data/mongodb/shard1/data

logpath = /data/mongodb/shard1/log/shard1.log

logappend = true

bind_ip = 192.168.1.115 # change to each machine's own IP; this is the address the service listens on

port = 27001 # listening port of replica set 1

fork = true

httpinterface=true

rest=true

replSet=shard1

shardsvr = true

maxConn=20000

[root@localhost /]# cat /etc/mongod/shard2.conf

pidfilepath = /var/run/mongodb/shard2.pid

dbpath = /data/mongodb/shard2/data

logpath = /data/mongodb/shard2/log/shard2.log

logappend = true

bind_ip = 192.168.1.115

port = 27002 # listening port of replica set 2

fork = true

httpinterface=true

rest=true

replSet=shard2

shardsvr = true

maxConn=20000

[root@localhost /]# cat /etc/mongod/shard3.conf

pidfilepath = /var/run/mongodb/shard3.pid

dbpath = /data/mongodb/shard3/data

logpath = /data/mongodb/shard3/log/shard3.log

logappend = true

bind_ip = 192.168.1.115

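port = 27003 # listening port of replica set 3

fork = true

httpinterface=true

rest=true

replSet=shard3 # must match the replica set name (the original capture had shard2 here by mistake)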

shardsvr = true

maxConn=20000

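Start the shard1 replica set first

The replica set can be initiated from 1.115 or 1.234, but not from 1.223: 1.223 is the arbiter of shard1, so it has to be ruled out as the node that initiates the set. This is also why the test needs at least three machines: with only two, the layout would be one data-bearing member plus one arbiter, which does not work.

Once the instances are running, log in to the replica set and assign the primary, secondary and arbiter roles

Add the other nodes to the replica set configuration and run the initiate statement:

[root@nfs1 /]# mongo --host 192.168.1.234 --port 27001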

MongoDB shell version v4.0.4

connecting to: mongodb://192.168.1.234:27001/

Implicit session: session { "id" : UUID("0cbd0b1e-db62-4fe2-b20f-a5d23a60fdf5") }

MongoDB server version: 4.0.4


> use admin

switched to db admin

> config={_id:"shard1",members:[{_id:0,host:"192.168.1.234:27001"},{_id:1,host:"192.168.1.115:27001"},{_id:2,host:"192.168.1.223:27001", arbiterOnly:true}]}

{

"_id" : "shard1",

"members" : [

{

"_id" : 0,

"host" : "192.168.1.234:27001"

},

{

"_id" : 1,

"host" : "192.168.1.115:27001"

},

{

"_id" : 2,

"host" : "192.168.1.223:27001",

"arbiterOnly" : true

}

]

}

Initiate the replica set; "ok" : 1 means the configuration was accepted and the initialization succeeded

> rs.initiate(config)

{

"ok" : 1,

"operationTime" : Timestamp(1542796014, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1542796014, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

Check the member status

shard1:SECONDARY> rs.status()

{

"set" : "shard1",

"date" : ISODate("2018-11-21T10:27:01.259Z"),

"myState" : 2,

"term" : NumberLong(0),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"heartbeatIntervalMillis" : NumberLong(2000),

"optimes" : {

"lastCommittedOpTime" : {

"ts" : Timestamp(0, 0),

"t" : NumberLong(-1)

},

"appliedOpTime" : {

"ts" : Timestamp(1542796014, 1),

"t" : NumberLong(-1)

},

"durableOpTime" : {

"ts" : Timestamp(1542796014, 1),

"t" : NumberLong(-1)

}

},

"lastStableCheckpointTimestamp" : Timestamp(0, 0),

"members" : [

{

"_id" : 0,

"name" : "192.168.1.234:27001",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 606,

"optime" : {

"ts" : Timestamp(1542796014, 1),

"t" : NumberLong(-1)

},

"optimeDate" : ISODate("2018-11-21T10:26:54Z"),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"infoMessage" : "could not find member to sync from",

"configVersion" : 1,

"self" : true,

"lastHeartbeatMessage" : ""

},

{

"_id" : 1,

"name" : "192.168.1.115:27001",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 7,

"optime" : {

"ts" : Timestamp(1542796014, 1),

"t" : NumberLong(-1)

},

"optimeDurable" : {

"ts" : Timestamp(1542796014, 1),

"t" : NumberLong(-1)

},

"optimeDate" : ISODate("2018-11-21T10:26:54Z"),

"optimeDurableDate" : ISODate("2018-11-21T10:26:54Z"),

"lastHeartbeat" : ISODate("2018-11-21T10:27:01.231Z"),

"lastHeartbeatRecv" : ISODate("2018-11-21T10:27:00.799Z"),

"pingMs" : NumberLong(0),

"lastHeartbeatMessage" : "",

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"infoMessage" : "",

"configVersion" : 1

},

{

"_id" : 2,

"name" : "192.168.1.223:27001",

"health" : 1,

"state" : 7,

"stateStr" : "ARBITER",

"uptime" : 7,

"lastHeartbeat" : ISODate("2018-11-21T10:27:01.231Z"),

"lastHeartbeatRecv" : ISODate("2018-11-21T10:27:00.210Z"),

"pingMs" : NumberLong(0),

"lastHeartbeatMessage" : "",

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"infoMessage" : "",

"configVersion" : 1

}

],

"ok" : 1,

"operationTime" : Timestamp(1542796014, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1542796014, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

The other replica sets are set up according to the layout at the top of the article. The statements to run for shard2 and shard3 are the ones listed there under the server plan: initiate each set with its own name and port, then set the priorities

At this point all three replica sets have been created, with priorities assigned to the primary, secondary and arbiter roles

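MongoDB sharding: configuring the routing service

mongos configuration

mongos is configured last because it needs the replica set and config server information

Create the mongos configuration file on all three machines, adjusting the listening IP for each machine

The configdb option lists the IP and listening port of the config server on every machine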

[root@nfs2 log]# cat /etc/mongod/mongos.conf

pidfilepath = /var/run/mongodb/mongos.pid

logpath = /data/mongodb/mongos/log/mongos.log

logappend = true

bind_ip = 192.168.1.223

port = 20000

fork = true

configdb = configs/192.168.1.234:21000,192.168.1.115:21000,192.168.1.223:21000

maxConns=20000

Start mongos on all three machines (for example with mongos -f /etc/mongod/mongos.conf) and check the listening port

[root@nfs2 log]# netstat -ntlp |grep mongos

tcp 0 0 192.168.1.223:20000 0.0.0.0:* LISTEN 14327/mongos

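After mongos is running on every machine, log in to any one of them (listening port 20000)

Connect the replica set shards to the router, that is, register them as shards of the cluster

When adding each replica set to mongos, check that the replica set name and the members' IPs and listening ports are written correctly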

mongos> sh.addShard("shard1/192.168.1.234:27001,192.168.1.115:27001,192.168.1.223:27001")

{

"shardAdded" : "shard1",

"ok" : 1,

"operationTime" : Timestamp(1542893389, 7),

"$clusterTime" : {

"clusterTime" : Timestamp(1542893389, 7),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

mongos> sh.addShard("shard2/192.168.1.234:27002,192.168.1.115:27002,192.168.1.223:27002")

{

"shardAdded" : "shard2",

"ok" : 1,

"operationTime" : Timestamp(1542893390, 15),

"$clusterTime" : {

"clusterTime" : Timestamp(1542893390, 15),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

mongos> sh.addShard("shard3/192.168.1.234:27003,192.168.1.115:27003,192.168.1.223:27003")

{

"shardAdded" : "shard3",

"ok" : 1,

"operationTime" : Timestamp(1542893415, 11),

"$clusterTime" : {

"clusterTime" : Timestamp(1542893415, 11),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}
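The argument of `sh.addShard()` follows a fixed pattern: the replica set name, a slash, then a comma-separated list of host:port pairs. A small sketch that builds the three strings used above; `shardSeedString` is a hypothetical helper name, and the port rule `27000 + n` is taken from the port plan of this article.

```javascript
// The three machines of this test setup, in the order used in the sh.addShard() calls above.
const hosts = ["192.168.1.234", "192.168.1.115", "192.168.1.223"];

// Build "<replSetName>/<host:port>,<host:port>,..." for shard number n.
function shardSeedString(n, hostList) {
  const port = 27000 + n;
  return "shard" + n + "/" + hostList.map((h) => h + ":" + port).join(",");
}

// Print the three commands as they would be typed in the mongos shell.
for (let n = 1; n <= 3; n++) {
  console.log('sh.addShard("' + shardSeedString(n, hosts) + '")');
}
```

Generating the strings avoids the most likely mistake at this step, a set name that does not match the port of the members listed after it.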

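Check the cluster status with sh.status(). Pay particular attention to the "Currently enabled: yes" lines under autosplit and under balancer.

"Currently running: no" under balancer means that no balancing round is in progress: the cluster does not hold any sharded databases or collections yet, so there is nothing to migrate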

mongos> sh.status()

--- Sharding Status ---

sharding version: {

"_id" : 1,

"minCompatibleVersion" : 5,

"currentVersion" : 6,

"clusterId" : ObjectId("5bf50e12a32cd4af8077b386")

}

shards:

{ "_id" : "shard1", "host" : "shard1/192.168.1.115:27001,192.168.1.234:27001", "state" : 1 }

{ "_id" : "shard2", "host" : "shard2/192.168.1.115:27002,192.168.1.223:27002", "state" : 1 }

{ "_id" : "shard3", "host" : "shard3/192.168.1.223:27003,192.168.1.234:27003", "state" : 1 }

active mongoses:

"4.0.4" : 1

autosplit:

Currently enabled: yes

balancer:

Currently enabled: yes

Currently running: no

Failed balancer rounds in last 5 attempts: 0

Migration Results for the last 24 hours:

No recent migrations

databases:

{ "_id" : "config", "primary" : "config", "partitioned" : true }

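With the shards added, databases can be created for testing

MongoDB sharding test

Log in to mongos on port 20000 on any machine

Create databases and collections, insert data into a collection, and check the cluster status

Create two databases, named testdb and db2

Use db.runCommand({enablesharding:"<database>"}), issued against the admin database, or sh.enableSharding("<database>") to create them with sharding enabled

A result of "ok" : 1 means the command succeeded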

mongos> db.runCommand({enablesharding:"testdb"})

{

"ok" : 1,

"operationTime" : Timestamp(1542894725, 5),

"$clusterTime" : {

"clusterTime" : Timestamp(1542894725, 5),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

mongos> sh.enableSharding("db2")

{

"ok" : 1,

"operationTime" : Timestamp(1542894795, 11),

"$clusterTime" : {

"clusterTime" : Timestamp(1542894795, 11),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

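Specify the collection to shard and its shard key

Run db.runCommand({shardcollection:"<database>.<collection>",key:{id:1}}) or sh.shardCollection("<database>.<collection>",{"id":1}), where id is the shard key field and 1 selects ranged sharding on it

The results look like this: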

mongos> db.runCommand({shardcollection:"testdb.table1",key:{id:1}})

{

"collectionsharded" : "testdb.table1",

"collectionUUID" : UUID("79968692-36d4-4ea4-b095-15ba3e4c8d87"),

"ok" : 1,

"operationTime" : Timestamp(1542895010, 17),

"$clusterTime" : {

"clusterTime" : Timestamp(1542895010, 17),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

mongos> sh.shardCollection("db2.tl2",{"id":1})

{

"collectionsharded" : "db2.tl2",

"collectionUUID" : UUID("9a194657-8d47-48c9-885d-6888d0625f91"),

"ok" : 1,

"operationTime" : Timestamp(1542895065, 16),

"$clusterTime" : {

"clusterTime" : Timestamp(1542895065, 16),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

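Switch to the database and insert test documents into the collection. The loop runs for i from 1 to 9999, so it inserts 9,999 documents rather than 10,000. Note that it writes to teble1, a misspelling of table1: the documents end up in a separate, unsharded collection testdb.teble1, and the sharded collection testdb.table1 stays empty, as the backup output later in the article confirms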

mongos> use testdb

switched to db testdb

mongos> for (var i=1;i<10000;i++)db.teble1.save({id:1,"test1":"testval1"})

WriteResult({ "nInserted" : 1 })

mongos> db.table1.stats() // collection statistics; the output is long and is omitted here
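The loop above gives every document the same shard-key value (id: 1). A chunk cannot be split inside a single shard-key value, so data like this could never spread over several shards, however much of it there is. A variant for experiments is sketched below; `makeTestDocs` is a hypothetical helper and the field values are arbitrary test data, not part of the original session.

```javascript
// Build `count` test documents whose shard-key field `id` takes the distinct values 1..count.
function makeTestDocs(count) {
  const docs = [];
  for (let i = 1; i <= count; i++) {
    docs.push({ id: i, test1: "testval" + i });
  }
  return docs;
}

const docs = makeTestDocs(10000);
console.log(docs.length, docs[0], docs[docs.length - 1]);

// In the mongo shell, connected to mongos and after `use testdb`, the array could be inserted with:
// db.table1.insertMany(docs)
```

With distinct key values the collection at least has split points available; whether chunks actually move still depends on the data volume and on the balancer.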

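Check the sharding status

Look at which replica set each database was placed on. In the entries that begin with "_id", the chunks section lists shard3 or shard2, which shows that the created databases are spread over different replica sets. That completes the simple test

The db2 database is stored on the shard3 replica set, and db3 on the shard2 replica set

Data is only spread evenly across the shards once there is enough of it. The test data set is far too small, so this test cannot show how a large data set would be distributed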

databases:

{ "_id" : "config", "primary" : "config", "partitioned" : true }

config.system.sessions

shard key: { "_id" : 1 }

unique: false

balancing: true

chunks:

shard1 1

{ "_id" : { "$minKey" : 1 } } -->> { "_id" : { "$maxKey" : 1 } } on : shard1 Timestamp(1, 0)

{ "_id" : "db2", "primary" : "shard3", "partitioned" : true, "version" : { "uuid" : UUID("35e3bf1c-cd35-4b43-89fb-04b16c54d00e"), "lastMod" : 1 } }

db2.tl2

shard key: { "id" : 1 }

unique: false

balancing: true

chunks:

shard3 1

{ "id" : { "$minKey" : 1 } } -->> { "id" : { "$maxKey" : 1 } } on : shard3 Timestamp(1, 0)

{ "_id" : "db3", "primary" : "shard2", "partitioned" : true, "version" : { "uuid" : UUID("a3d2c30b-0842-4d27-9de3-ac4a7710789a"), "lastMod" : 1 } }

db3.tl3

shard key: { "id" : 1 }

unique: false

balancing: true

chunks:

shard2 1

{ "id" : { "$minKey" : 1 } } -->> { "id" : { "$maxKey" : 1 } } on : shard2 Timestamp(1, 0)

{ "_id" : "db4", "primary" : "shard2", "partitioned" : true, "version" : { "uuid" : UUID("25479d1b-b8b9-48bc-a162-e4da117c021e"), "lastMod" : 1 } }

db4.tl4

shard key: { "id" : 1 }

unique: false

balancing: true

chunks:

shard2 1

{ "id" : { "$minKey" : 1 } } -->> { "id" : { "$maxKey" : 1 } } on : shard2 Timestamp(1, 0)

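MongoDB backup

Backing up a single database

The backup here is taken through the sharded cluster. The mongodump syntax is

mongodump --host <IP of the standalone instance, replica set or sharded cluster> --port <listening port> -d <database> -o <backup directory>

Create the directory for the backup first, then run mongodump. Here the testdb database is backed up as an example: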

[root@nfs2 log]# mkdir /tmp/mongobak

[root@nfs2 log]# mongodump --host 192.168.1.223 --port 20000 -d testdb -o /tmp/mongobak/

2018-11-22T14:59:40.035+0800 writing testdb.teble1 to

2018-11-22T14:59:40.035+0800 writing testdb.table1 to

2018-11-22T14:59:40.037+0800 done dumping testdb.table1 (0 documents)

2018-11-22T14:59:40.095+0800 done dumping testdb.teble1 (9999 documents)

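Go to the backup path and look at the files. The .bson files hold the data in binary form and are not human-readable; the .metadata.json files describe each collection (its options and indexes)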

[root@nfs2 testdb]# pwd

/tmp/mongobak/testdb

[root@nfs2 testdb]# du -h *

0 table1.bson

4.0K table1.metadata.json

528K teble1.bson

4.0K teble1.metadata.json

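Backing up all databases with mongodump

Syntax for backing up all databases:

mongodump --host <IP of the instance or sharded cluster> --port <listening port> -o <backup path>

Run the backup and list what it produced; one directory is created per database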

[root@nfs2 testdb]# mongodump --host 192.168.1.223 --port 20000 -o /tmp/mongobak/alldatabase

2018-11-22T15:15:01.570+0800 writing admin.system.version to

2018-11-22T15:15:01.573+0800 done dumping admin.system.version (1 document)

2018-11-22T15:15:01.573+0800 writing testdb.teble1 to

2018-11-22T15:15:01.573+0800 writing config.changelog to

2018-11-22T15:15:01.573+0800 writing config.locks to

2018-11-22T15:15:01.574+0800 writing config.lockpings to

2018-11-22T15:15:01.582+0800 done dumping config.changelog (13 documents)

2018-11-22T15:15:01.582+0800 writing config.chunks to

(remaining output omitted)

[root@nfs2 alldatabase]# ls

admin config db2 db3 db4 testdb

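Backing up a single collection

-d names the database, -c the collection and -o the output path

Back up the collection under /tmp/mongobak/teble1 (the files land in /tmp/mongobak/teble1/testdb), then look at the backup files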

[root@nfs2 log]# mongodump --host 192.168.1.223 --port 20000 -d testdb -c teble1 -o /tmp/mongobak/teble1

2018-11-22T15:29:34.175+0800 writing testdb.teble1 to

2018-11-22T15:29:34.245+0800 done dumping testdb.teble1 (9999 documents)

[root@nfs2 testdb]# cd /tmp/mongobak/teble1/testdb

[root@nfs2 testdb]# pwd

/tmp/mongobak/teble1/testdb

[root@nfs2 testdb]# du -h *

528K teble1.bson

4.0K teble1.metadata.json
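The three backup variants above differ only in whether -d and -c are present. The sketch below assembles the argument list from those same flags; `mongodumpArgs` is a hypothetical helper, it only builds the command line and does not run mongodump, and the check that a collection needs a database reflects how the article always pairs -c with -d.

```javascript
// Assemble the mongodump argument list from the flags used in this article.
// `db` and `collection` are optional; omitting both dumps every database.
function mongodumpArgs({ host, port, db, collection, out }) {
  if (collection && !db) {
    throw new Error("-c requires -d");
  }
  const args = ["--host", host, "--port", String(port)];
  if (db) args.push("-d", db);
  if (collection) args.push("-c", collection);
  args.push("-o", out);
  return args;
}

// Reproduce the single-collection backup command shown above.
const args = mongodumpArgs({
  host: "192.168.1.223",
  port: 20000,
  db: "testdb",
  collection: "teble1",
  out: "/tmp/mongobak/teble1",
});
console.log("mongodump " + args.join(" "));
```

Leaving out `collection` gives the per-database form, and leaving out both `db` and `collection` gives the full backup.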

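Exporting a collection to a JSON file

Once a collection has been exported to a .json file, it can be viewed or edited in the terminal with tools such as cat. Here head -n 10 shows the first 10 lines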

[root@nfs2 testdb]# mongoexport --host 192.168.1.223 --port 20000 -d testdb -c teble1 -o /tmp/mongobak/testdb/1.json

2018-11-22T15:37:10.508+0800 connected to: 192.168.1.223:20000

2018-11-22T15:37:10.743+0800 exported 9999 records

[root@nfs2 testdb]# du -h 1.json

704K 1.json

[root@nfs2 testdb]# head -n 10 1.json

{"_id":{"$oid":"5bf646a042feeb2b916e3b52"},"id":1.0,"test1":"testval1"}

{"_id":{"$oid":"5bf646a042feeb2b916e3b53"},"id":1.0,"test1":"testval1"}

{"_id":{"$oid":"5bf646a042feeb2b916e3b54"},"id":1.0,"test1":"testval1"}

{"_id":{"$oid":"5bf646a042feeb2b916e3b55"},"id":1.0,"test1":"testval1"}

{"_id":{"$oid":"5bf646a042feeb2b916e3b56"},"id":1.0,"test1":"testval1"}

{"_id":{"$oid":"5bf646a042feeb2b916e3b57"},"id":1.0,"test1":"testval1"}

{"_id":{"$oid":"5bf646a042feeb2b916e3b58"},"id":1.0,"test1":"testval1"}

{"_id":{"$oid":"5bf646a042feeb2b916e3b59"},"id":1.0,"test1":"testval1"}

{"_id":{"$oid":"5bf646a042feeb2b916e3b5a"},"id":1.0,"test1":"testval1"}

{"_id":{"$oid":"5bf646a042feeb2b916e3b5b"},"id":1.0,"test1":"testval1"}

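MongoDB data recovery

Note: all of the following tests were run against a sharded cluster; other setups (standalone instance, single replica set) have not been tested

Restoring all databases

mongorestore --host <ip> --port <port> --drop <path>

The path is the directory that holds the backup of all databases. --drop is optional: it deletes the existing data before restoring, and using it is not recommended

For example: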

[root@nfs2 mongobak]# mongorestore --host 192.168.1.223 --port 20000 --drop alldatabase/

2018-11-22T16:05:55.153+0800 preparing collections to restore from

2018-11-22T16:05:55.844+0800 reading metadata for testdb.teble1 from alldatabase/testdb/teble1.metadata.json

2018-11-22T16:05:56.093+0800 reading metadata for db2.tl2 from alldatabase/db2/tl2.metadata.json

2018-11-22T16:05:56.130+0800 reading metadata for db4.tl4 from alldatabase/db4/tl4.metadata.json

If you see

2018-11-22T15:57:24.747+0800 preparing collections to restore from

2018-11-22T15:57:24.748+0800 Failed: cannot do a full restore on a sharded system - remove the 'config' directory from the dump directory first

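it means that the config and admin databases cannot be overwritten by a restore. Before restoring, delete the backups of these two databases from the alldatabase backup directory, so that the restore does not import them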
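Instead of deleting directories from the backup, the list of databases to restore can be filtered first and each remaining database restored on its own. A minimal sketch of that filter; `restorableDatabases` and `SKIP` are hypothetical names, and the directory list is the one shown by ls in the backup section.

```javascript
// Databases the article excludes when restoring a full dump into a sharded cluster.
const SKIP = new Set(["config", "admin"]);

// Given the database directories found in a dump, return the ones that remain to be restored.
function restorableDatabases(dumpDirs) {
  return dumpDirs.filter((name) => !SKIP.has(name));
}

console.log(restorableDatabases(["admin", "config", "db2", "db3", "db4", "testdb"]));
```

This leaves the backup itself untouched, so the config and admin dumps stay available for inspection.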

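Restoring a single database

Restore the database to the state of the backup; the backup path is the database's directory inside the backup

mongorestore -d <database> <backup path>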

[root@nfs2 mongobak]# mongorestore --host 192.168.1.223 --port 20000 -d db2 alldatabase/db2/

2018-11-22T16:09:09.127+0800 the --db and --collection args should only be used when restoring from a BSON file. Other uses are deprecated and will not exist in the future; use --nsInclude instead

2018-11-22T16:09:09.127+0800 building a list of collections to restore from alldatabase/db2 dir

2018-11-22T16:09:09.129+0800 reading metadata for db2.tl2 from alldatabase/db2/tl2.metadata.json

2018-11-22T16:09:09.129+0800 restoring db2.tl2 from alldatabase/db2/tl2.bson

2018-11-22T16:09:09.131+0800 restoring indexes for collection db2.tl2 from metadata

2018-11-22T16:09:09.131+0800 finished restoring db2.tl2 (0 documents)

2018-11-22T16:09:09.131+0800 done

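Restoring a collection

mongorestore --host <ip> --port <port> -d <database> -c <collection> <backup path>

-c is followed by the name of the collection to restore. The backup path is the .bson file that the backup produced for that collection; giving only the path of that .bson file is enough. For example: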

[root@nfs2 mongobak]# mongorestore --host 192.168.1.223 --port 20000 -d testdb -c table1 testdb/table1.bson

2018-11-22T16:14:38.027+0800 checking for collection data in testdb/table1.bson

2018-11-22T16:14:38.031+0800 reading metadata for testdb.table1 from testdb/table1.metadata.json

2018-11-22T16:14:38.039+0800 restoring testdb.table1 from testdb/table1.bson

2018-11-22T16:14:38.103+0800 restoring indexes for collection testdb.table1 from metadata

2018-11-22T16:14:38.105+0800 finished restoring testdb.table1 (0 documents)

2018-11-22T16:14:38.105+0800 done

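Importing a collection

-d names the database, -c the collection to import into, and --file the path of the JSON backup file to import. The command looks like this: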

[root@nfs2 mongobak]# mongoimport --host 192.168.1.223 --port 20000 -d testdb -c table1 --file /tmp/mongobak/testdb/1.json

2018-11-22T16:17:41.465+0800 connected to: 192.168.1.223:20000

2018-11-22T16:17:42.226+0800 imported 9999 documents