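The AVFoundation framework provides a feature-rich set of classes for editing audiovisual assets. At the heart of AVFoundation's editing API are compositions. A composition is simply a collection of tracks from one or more different media assets. The AVMutableComposition class provides an interface for inserting and removing tracks, as well as managing their temporal ordering. Figure 3-1 shows how a new composition is pieced together from existing assets to form a new asset. If all you want to do is merge multiple assets sequentially into a single file, that is as much detail as you need. If you want to perform custom audio or video processing on the tracks in your composition, you need to incorporate an audio mix or a video composition, respectively.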

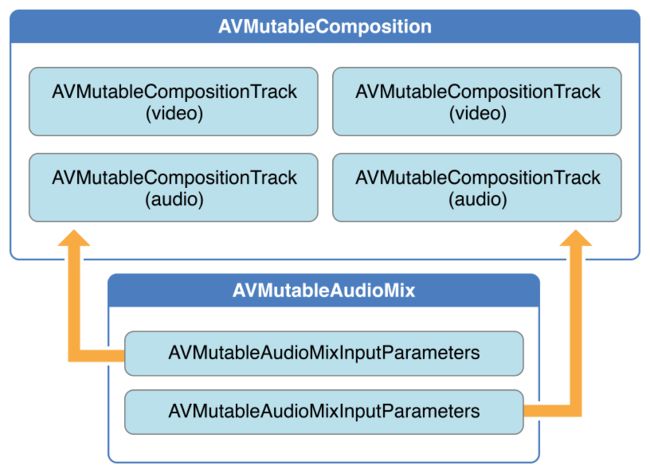

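Using the AVMutableAudioMix class, you can perform custom audio processing on the audio tracks in your composition, as shown in Figure 3-2. Currently, you can specify a maximum volume or set a volume ramp for an audio track.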

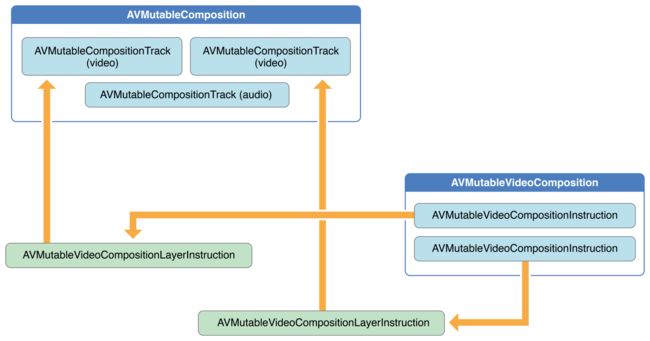

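You can use the AVMutableVideoComposition class to work directly with the video tracks in your composition for editing, as shown in Figure 3-3. With a single video composition, you can specify the desired render size and scale, as well as the frame duration, for the output video. Through a video composition's instructions (represented by the AVMutableVideoCompositionInstruction class), you can modify the background color of your video and apply layer instructions. These layer instructions (represented by the AVMutableVideoCompositionLayerInstruction class) can be used to apply transforms, transform ramps, opacity, and opacity ramps to the video tracks within your composition. The video composition class also lets you introduce effects from the Core Animation framework into your video through the animationTool property.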

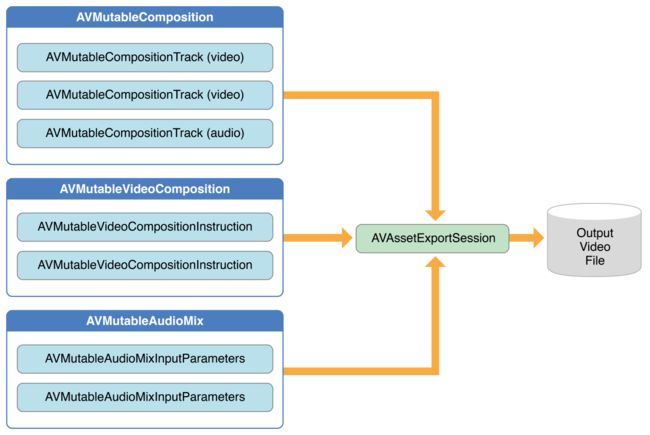

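To combine your composition with an audio mix and a video composition, you use an AVAssetExportSession object, as shown in Figure 3-4. You initialize the export session with your composition and then assign your audio mix and video composition to the audioMix and videoComposition properties, respectively.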

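Creating a Composition

To create your own composition, you use the AVMutableComposition class. To add media data to your composition, you must add one or more composition tracks, represented by the AVMutableCompositionTrack class. The simplest case is a mutable composition with one video track and one audio track: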

AVMutableComposition *mutableComposition = [AVMutableComposition composition];

// Create the video composition track.

AVMutableCompositionTrack *mutableCompositionVideoTrack = [mutableComposition addMutableTrackWithMediaType:AVMediaTypeVideo preferredTrackID:kCMPersistentTrackID_Invalid];

// Create the audio composition track.

AVMutableCompositionTrack *mutableCompositionAudioTrack = [mutableComposition addMutableTrackWithMediaType:AVMediaTypeAudio preferredTrackID:kCMPersistentTrackID_Invalid];

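Options for Initializing a Composition Track

When adding new tracks to a composition, you must provide both a media type and a track ID. Although audio and video are the most commonly used media types, you can specify other media types as well, such as AVMediaTypeSubtitle or AVMediaTypeText.

Every track associated with some audiovisual data has a unique identifier referred to as a track ID. If you specify kCMPersistentTrackID_Invalid as the preferred track ID, a unique identifier is automatically generated for you and associated with the track.

Adding Audiovisual Data to a Composition

Once you have a composition with one or more tracks, you can begin adding your media data to the appropriate tracks. To add media data to a composition track, you need access to the AVAsset object where the media data is located. You can use the mutable composition track interface to place multiple tracks with the same underlying media type together on the same track. The following example illustrates how to add two different video asset tracks in sequence to the same composition track: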

// You can retrieve AVAssets from a number of places, like the camera roll for example.

AVAsset *videoAsset = <#AVAsset with at least one video track#>;

AVAsset *anotherVideoAsset = <#another AVAsset with at least one video track#>;

// Get the first video track from each asset.

AVAssetTrack *videoAssetTrack = [[videoAsset tracksWithMediaType:AVMediaTypeVideo] objectAtIndex:0];

AVAssetTrack *anotherVideoAssetTrack = [[anotherVideoAsset tracksWithMediaType:AVMediaTypeVideo] objectAtIndex:0];

// Add them both to the composition.

[mutableCompositionVideoTrack insertTimeRange:CMTimeRangeMake(kCMTimeZero,videoAssetTrack.timeRange.duration) ofTrack:videoAssetTrack atTime:kCMTimeZero error:nil];

[mutableCompositionVideoTrack insertTimeRange:CMTimeRangeMake(kCMTimeZero,anotherVideoAssetTrack.timeRange.duration) ofTrack:anotherVideoAssetTrack atTime:videoAssetTrack.timeRange.duration error:nil];
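The calls above pass error:nil, so a failed insertion goes unnoticed. As a minimal sketch of how the result could be checked, the helpers below append a source track at the current end of a composition track and report failure. NextInsertionTime and AppendTrack are hypothetical names introduced here for illustration; they are not part of AVFoundation.

```objc
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper (not an AVFoundation API): the time at which the next
// segment should be inserted, i.e. the current end of the composition track.
static CMTime NextInsertionTime(AVCompositionTrack *compositionTrack) {
    CMTime end = CMTimeRangeGetEnd(compositionTrack.timeRange);
    // Fall back to zero if the track does not yet report a numeric end time.
    return CMTIME_IS_NUMERIC(end) ? end : kCMTimeZero;
}

// Hypothetical helper (not an AVFoundation API): appends the full duration of
// sourceTrack to compositionTrack. Returns NO and fills outError on failure.
static BOOL AppendTrack(AVMutableCompositionTrack *compositionTrack,
                        AVAssetTrack *sourceTrack,
                        NSError **outError) {
    CMTimeRange sourceRange =
        CMTimeRangeMake(kCMTimeZero, sourceTrack.timeRange.duration);
    return [compositionTrack insertTimeRange:sourceRange
                                     ofTrack:sourceTrack
                                      atTime:NextInsertionTime(compositionTrack)
                                       error:outError];
}
```

A caller might then write `NSError *error = nil; if (!AppendTrack(mutableCompositionVideoTrack, videoAssetTrack, &error)) { NSLog(@"%@", error); }` for each segment in turn.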

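Retrieving Compatible Composition Tracks

Where possible, you should have only one composition track for each media type. Unifying compatible asset tracks in this way keeps resource usage to a minimum. When presenting media data serially, you should place any media data of the same type on the same composition track. You can query a mutable composition to find out whether it has a composition track compatible with the asset track you want to insert: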

AVMutableCompositionTrack *compatibleCompositionTrack = [mutableComposition mutableTrackCompatibleWithTrack:<#the AVAssetTrack you want to insert#>];

if (compatibleCompositionTrack) {

// Implementation continues.

}

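Note: Placing multiple video segments on the same composition track can lead to dropped frames at the transitions between segments, especially on embedded devices. How many composition tracks you use for your video segments depends entirely on the design of your app and its intended platform.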
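One way to act on the compatibility query is to reuse a compatible track when there is one and create a new track otherwise. The following is a sketch under that assumption; CompositionTrackForAssetTrack is a hypothetical helper name, not an AVFoundation API.

```objc
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper (not an AVFoundation API): returns a composition track
// that can receive assetTrack, creating one only if no compatible track exists.
static AVMutableCompositionTrack *CompositionTrackForAssetTrack(
        AVMutableComposition *composition, AVAssetTrack *assetTrack) {
    AVMutableCompositionTrack *track =
        [composition mutableTrackCompatibleWithTrack:assetTrack];
    if (track == nil) {
        // No compatible track yet: add one with the same media type and let
        // the composition generate the track ID.
        track = [composition addMutableTrackWithMediaType:assetTrack.mediaType
                                         preferredTrackID:kCMPersistentTrackID_Invalid];
    }
    return track;
}
```

This keeps the number of composition tracks low by default; an app that sees dropped frames at transitions could instead alternate between two video tracks.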

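Generating a Volume Ramp

A single AVMutableAudioMix object can perform custom audio processing on each of the audio tracks in your composition individually. You create an audio mix using the audioMix class method, and you use instances of the AVMutableAudioMixInputParameters class to associate the audio mix with specific tracks within your composition. An audio mix can be used to vary the volume of an audio track. The following example shows how to set a volume ramp on a specific audio track so that the audio slowly fades out over the duration of the composition: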

AVMutableAudioMix *mutableAudioMix = [AVMutableAudioMix audioMix];

// Create the audio mix input parameters object.

AVMutableAudioMixInputParameters *mixParameters = [AVMutableAudioMixInputParameters audioMixInputParametersWithTrack:mutableCompositionAudioTrack];

// Set the volume ramp to slowly fade the audio out over the duration of the composition.

[mixParameters setVolumeRampFromStartVolume:1.f toEndVolume:0.f timeRange:CMTimeRangeMake(kCMTimeZero, mutableComposition.duration)];

// Attach the input parameters to the audio mix.

mutableAudioMix.inputParameters = @[mixParameters];
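The ramp above spans the whole composition. If the fade should cover only the final few seconds, the time range can be computed from the total duration. The sketch below shows one way to do that; FadeOutRange is a hypothetical helper name, and the two-second fade in the usage note is an arbitrary choice.

```objc
#import <CoreMedia/CoreMedia.h>

// Hypothetical helper (not a Core Media API): the time range covering the
// final fadeDuration of a timeline that is totalDuration long. If the timeline
// is shorter than the requested fade, the whole timeline is returned.
static CMTimeRange FadeOutRange(CMTime totalDuration, CMTime fadeDuration) {
    if (CMTimeCompare(fadeDuration, totalDuration) >= 0) {
        return CMTimeRangeMake(kCMTimeZero, totalDuration);
    }
    CMTime start = CMTimeSubtract(totalDuration, fadeDuration);
    return CMTimeRangeMake(start, fadeDuration);
}
```

With this helper, the ramp call could become `[mixParameters setVolumeRampFromStartVolume:1.f toEndVolume:0.f timeRange:FadeOutRange(mutableComposition.duration, CMTimeMake(2, 1))];`.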

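Performing Custom Video Processing

As with an audio mix, you only need one AVMutableVideoComposition object to perform all of your custom video processing on your composition's video tracks. Using a video composition, you can directly set the render size, render scale, and frame duration for your composition's video tracks. For a detailed example of setting appropriate values for these properties, see Setting the Render Size and Frame Duration.

Changing the Composition's Background Color

Every video composition must also have an array of AVVideoCompositionInstruction objects containing at least one video composition instruction. You use the AVMutableVideoCompositionInstruction class to create your own video composition instructions. Using video composition instructions, you can modify the composition's background color, specify whether post processing is needed, or apply layer instructions.

The following example illustrates how to create a video composition instruction that changes the background color to red for the entire composition.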

AVMutableVideoCompositionInstruction *mutableVideoCompositionInstruction = [AVMutableVideoCompositionInstruction videoCompositionInstruction];

mutableVideoCompositionInstruction.timeRange = CMTimeRangeMake(kCMTimeZero, mutableComposition.duration);

mutableVideoCompositionInstruction.backgroundColor = [[UIColor redColor] CGColor];

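Applying Opacity Ramps

Video composition instructions can also be used to apply video composition layer instructions. An AVMutableVideoCompositionLayerInstruction object can apply transforms, transform ramps, opacity, and opacity ramps to a particular video track within a composition. The order of the layer instructions in a video composition instruction's layerInstructions array determines how video frames from the source tracks are layered and composed for the duration of that composition instruction. The following code fragment shows how to set an opacity ramp to slowly fade out the first video in a composition before transitioning to the second video:

AVAssetTrack *firstVideoAssetTrack = <#AVAssetTrack representing the first video segment played in the composition#>;

AVAssetTrack *secondVideoAssetTrack = <#AVAssetTrack representing the second video segment played in the composition#>;

// Create the first video composition instruction.

AVMutableVideoCompositionInstruction *firstVideoCompositionInstruction = [AVMutableVideoCompositionInstruction videoCompositionInstruction];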

// Set its time range to span the duration of the first video track.

firstVideoCompositionInstruction.timeRange = CMTimeRangeMake(kCMTimeZero, firstVideoAssetTrack.timeRange.duration);

// Create the layer instruction and associate it with the composition video track.

AVMutableVideoCompositionLayerInstruction *firstVideoLayerInstruction = [AVMutableVideoCompositionLayerInstruction videoCompositionLayerInstructionWithAssetTrack:mutableCompositionVideoTrack];

// Create the opacity ramp to fade out the first video track over its entire duration.

[firstVideoLayerInstruction setOpacityRampFromStartOpacity:1.f toEndOpacity:0.f timeRange:CMTimeRangeMake(kCMTimeZero, firstVideoAssetTrack.timeRange.duration)];

// Create the second video composition instruction so that the second video track isn't transparent.

AVMutableVideoCompositionInstruction *secondVideoCompositionInstruction = [AVMutableVideoCompositionInstruction videoCompositionInstruction];

// Set its time range to span the duration of the second video track.

// CMTimeRangeMake takes a start time and a duration, so the second argument is the second track's own duration.

secondVideoCompositionInstruction.timeRange = CMTimeRangeMake(firstVideoAssetTrack.timeRange.duration, secondVideoAssetTrack.timeRange.duration);

// Create the second layer instruction and associate it with the composition video track.

AVMutableVideoCompositionLayerInstruction *secondVideoLayerInstruction = [AVMutableVideoCompositionLayerInstruction videoCompositionLayerInstructionWithAssetTrack:mutableCompositionVideoTrack];

// Attach the first layer instruction to the first video composition instruction.

firstVideoCompositionInstruction.layerInstructions = @[firstVideoLayerInstruction];

// Attach the second layer instruction to the second video composition instruction.

secondVideoCompositionInstruction.layerInstructions = @[secondVideoLayerInstruction];

// Attach both of the video composition instructions to the video composition.

AVMutableVideoComposition *mutableVideoComposition = [AVMutableVideoComposition videoComposition];

mutableVideoComposition.instructions = @[firstVideoCompositionInstruction, secondVideoCompositionInstruction];
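Because CMTimeRangeMake takes a start time and a duration (not a start time and an end time), the second instruction's range starts where the first segment ends and lasts only as long as the second segment. The sketch below spells this out; SecondSegmentRange is a hypothetical helper name used only for illustration.

```objc
#import <CoreMedia/CoreMedia.h>

// Hypothetical helper (not a Core Media API): the time range occupied by the
// second of two segments that play back to back from time zero.
static CMTimeRange SecondSegmentRange(CMTime firstDuration, CMTime secondDuration) {
    // Start at the end of the first segment; last for the second segment only.
    return CMTimeRangeMake(firstDuration, secondDuration);
}
```

The same range can be written with explicit end points as `CMTimeRangeFromTimeToTime(firstDuration, CMTimeAdd(firstDuration, secondDuration))`, which may read more naturally when the end time is what you have at hand.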

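Incorporating Core Animation Effects

A video composition can add the power of Core Animation to your composition through the animationTool property. Through this animation tool, you can accomplish tasks such as watermarking video and adding titles or animated overlays. Core Animation can be used with video compositions in two different ways: you can add a Core Animation layer as its own individual composition track, or you can render Core Animation effects (using a Core Animation layer) directly into the video frames of your composition. The following code demonstrates the latter option by adding a watermark that is centered horizontally and positioned at one quarter of the render height: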

CALayer *watermarkLayer = <#CALayer representing your desired watermark image#>;

CALayer *parentLayer = [CALayer layer];

CALayer *videoLayer = [CALayer layer];

parentLayer.frame = CGRectMake(0, 0, mutableVideoComposition.renderSize.width, mutableVideoComposition.renderSize.height);

videoLayer.frame = CGRectMake(0, 0, mutableVideoComposition.renderSize.width, mutableVideoComposition.renderSize.height);

[parentLayer addSublayer:videoLayer];

watermarkLayer.position = CGPointMake(mutableVideoComposition.renderSize.width/2, mutableVideoComposition.renderSize.height/4);

[parentLayer addSublayer:watermarkLayer];

mutableVideoComposition.animationTool = [AVVideoCompositionCoreAnimationTool videoCompositionCoreAnimationToolWithPostProcessingAsVideoLayer:videoLayer inLayer:parentLayer];

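Putting It All Together: Combining Multiple Assets and Saving the Result to the Camera Roll

This brief code example illustrates how you can combine two video asset tracks and an audio asset track to create a single video file. It shows how to:

Create an AVMutableComposition object and add multiple AVMutableCompositionTrack objects.

Add time ranges of AVAssetTrack objects to compatible composition tracks.

Check the preferredTransform property of a video asset track to determine the video's orientation.

Use AVMutableVideoCompositionLayerInstruction objects to apply transforms to the video tracks within a composition.

Set appropriate values for the renderSize and frameDuration properties of a video composition.

Use a composition in conjunction with a video composition when exporting to a video file.

Save a video file to the Camera Roll.

Note: To focus on the most relevant code, this example omits several aspects of a complete app, such as memory management and error handling. To use AVFoundation, you are expected to have enough experience with Cocoa to infer the missing pieces.

Creating the Composition

To piece together tracks from separate assets, you use an AVMutableComposition object. Create the composition and add one audio track and one video track.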

AVMutableComposition *mutableComposition = [AVMutableComposition composition];

AVMutableCompositionTrack *videoCompositionTrack = [mutableComposition addMutableTrackWithMediaType:AVMediaTypeVideo preferredTrackID:kCMPersistentTrackID_Invalid];

AVMutableCompositionTrack *audioCompositionTrack = [mutableComposition addMutableTrackWithMediaType:AVMediaTypeAudio preferredTrackID:kCMPersistentTrackID_Invalid];

Adding the Assets

An empty composition does you no good. Add the two video asset tracks and the audio asset track to the composition.

AVAssetTrack *firstVideoAssetTrack = [[firstVideoAsset tracksWithMediaType:AVMediaTypeVideo] objectAtIndex:0];

AVAssetTrack *secondVideoAssetTrack = [[secondVideoAsset tracksWithMediaType:AVMediaTypeVideo] objectAtIndex:0];

[videoCompositionTrack insertTimeRange:CMTimeRangeMake(kCMTimeZero, firstVideoAssetTrack.timeRange.duration) ofTrack:firstVideoAssetTrack atTime:kCMTimeZero error:nil];

[videoCompositionTrack insertTimeRange:CMTimeRangeMake(kCMTimeZero, secondVideoAssetTrack.timeRange.duration) ofTrack:secondVideoAssetTrack atTime:firstVideoAssetTrack.timeRange.duration error:nil];

[audioCompositionTrack insertTimeRange:CMTimeRangeMake(kCMTimeZero, CMTimeAdd(firstVideoAssetTrack.timeRange.duration, secondVideoAssetTrack.timeRange.duration)) ofTrack:[[audioAsset tracksWithMediaType:AVMediaTypeAudio] objectAtIndex:0] atTime:kCMTimeZero error:nil];

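Note: This assumes that you have two assets that each contain at least one video track and a third asset that contains at least one audio track. The videos can be retrieved from the Camera Roll, and the audio track can be retrieved from the music library or from the videos themselves.

Checking the Video Orientations

Once you add your video and audio tracks to the composition, you need to ensure that the orientations of both video tracks are correct. By default, all video tracks are assumed to be in landscape mode. If your video track was shot in portrait mode, the video will not be oriented properly when it is exported. Likewise, if you try to combine a video shot in portrait mode with a video shot in landscape mode, the export session will fail to complete.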

BOOL isFirstVideoAssetPortrait = NO;

CGAffineTransform firstTransform = firstVideoAssetTrack.preferredTransform;

// Check the first video track's preferred transform to determine if it was recorded in portrait mode.

if (firstTransform.a == 0 && firstTransform.d == 0 && (firstTransform.b == 1.0 || firstTransform.b == -1.0) && (firstTransform.c == 1.0 || firstTransform.c == -1.0)) {

isFirstVideoAssetPortrait = YES;

}

BOOL isSecondVideoAssetPortrait = NO;

CGAffineTransform secondTransform = secondVideoAssetTrack.preferredTransform;

// Check the second video track's preferred transform to determine if it was recorded in portrait mode.

if (secondTransform.a == 0 && secondTransform.d == 0 && (secondTransform.b == 1.0 || secondTransform.b == -1.0) && (secondTransform.c == 1.0 || secondTransform.c == -1.0)) {

isSecondVideoAssetPortrait = YES;

}

if ((isFirstVideoAssetPortrait && !isSecondVideoAssetPortrait) || (!isFirstVideoAssetPortrait && isSecondVideoAssetPortrait)) {

UIAlertView *incompatibleVideoOrientationAlert = [[UIAlertView alloc] initWithTitle:@"Error!" message:@"Cannot combine a video shot in portrait mode with a video shot in landscape mode." delegate:self cancelButtonTitle:@"Dismiss" otherButtonTitles:nil];

[incompatibleVideoOrientationAlert show];

return;

}
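The portrait test is repeated for each track and relies on exact floating-point comparisons such as `a == 0`. Those hold for the transforms a camera typically records, but a transform built with CGAffineTransformMakeRotation(M_PI_2) has components that are only approximately 0 and 1. The sketch below factors the test into one function with a small tolerance; IsPortraitTransform, NearlyEqual, and the 1e-6 tolerance are assumptions made for illustration, not part of any framework.

```objc
#import <Foundation/Foundation.h>
#import <CoreGraphics/CoreGraphics.h>
#include <math.h>

// Hypothetical helper: compares two values with a small absolute tolerance.
static BOOL NearlyEqual(CGFloat x, CGFloat y) {
    return fabs(x - y) < 1e-6;
}

// Hypothetical helper: YES when the transform is a quarter-turn rotation,
// which is how the code above recognizes video recorded in portrait mode.
static BOOL IsPortraitTransform(CGAffineTransform t) {
    return NearlyEqual(t.a, 0) && NearlyEqual(t.d, 0) &&
           NearlyEqual(fabs(t.b), 1) && NearlyEqual(fabs(t.c), 1);
}
```

With such a helper, the orientation mismatch reduces to comparing `IsPortraitTransform(firstVideoAssetTrack.preferredTransform)` with `IsPortraitTransform(secondVideoAssetTrack.preferredTransform)` and bailing out when the two results differ.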

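Applying the Video Composition Layer Instructions

Once you know the video segments have compatible orientations, you can apply the necessary layer instructions to each one and add these layer instructions to the video composition.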

AVMutableVideoCompositionInstruction *firstVideoCompositionInstruction = [AVMutableVideoCompositionInstruction videoCompositionInstruction];

// Set the time range of the first instruction to span the duration of the first video track.

firstVideoCompositionInstruction.timeRange = CMTimeRangeMake(kCMTimeZero, firstVideoAssetTrack.timeRange.duration);

AVMutableVideoCompositionInstruction * secondVideoCompositionInstruction = [AVMutableVideoCompositionInstruction videoCompositionInstruction];

// Set the time range of the second instruction to span the duration of the second video track.

// CMTimeRangeMake takes a start time and a duration, so the second argument is the second track's own duration.

secondVideoCompositionInstruction.timeRange = CMTimeRangeMake(firstVideoAssetTrack.timeRange.duration, secondVideoAssetTrack.timeRange.duration);

AVMutableVideoCompositionLayerInstruction *firstVideoLayerInstruction = [AVMutableVideoCompositionLayerInstruction videoCompositionLayerInstructionWithAssetTrack:videoCompositionTrack];

// Set the transform of the first layer instruction to the preferred transform of the first video track.

[firstVideoLayerInstruction setTransform:firstTransform atTime:kCMTimeZero];

AVMutableVideoCompositionLayerInstruction *secondVideoLayerInstruction = [AVMutableVideoCompositionLayerInstruction videoCompositionLayerInstructionWithAssetTrack:videoCompositionTrack];

// Set the transform of the second layer instruction to the preferred transform of the second video track.

[secondVideoLayerInstruction setTransform:secondTransform atTime:firstVideoAssetTrack.timeRange.duration];

firstVideoCompositionInstruction.layerInstructions = @[firstVideoLayerInstruction];

secondVideoCompositionInstruction.layerInstructions = @[secondVideoLayerInstruction];

AVMutableVideoComposition *mutableVideoComposition = [AVMutableVideoComposition videoComposition];

mutableVideoComposition.instructions = @[firstVideoCompositionInstruction, secondVideoCompositionInstruction];

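All AVAssetTrack objects have a preferredTransform property that contains the orientation information for that asset track. This transform is applied whenever the asset track is displayed onscreen. In the previous code, the layer instruction's transform is set to the asset track's transform so that the video in the new composition displays properly once you adjust its render size.

Setting the Render Size and Frame Duration

To complete the video orientation fix, you must adjust the renderSize property accordingly. You should also pick a suitable value for the frameDuration property, such as 1/30th of a second (30 frames per second). By default, the renderScale property is set to 1.0, which is appropriate for this composition.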

CGSize naturalSizeFirst, naturalSizeSecond;

// If the first video asset was shot in portrait mode, then so was the second one if we made it here.

if (isFirstVideoAssetPortrait) {

// Invert the width and height for the video tracks to ensure that they display properly.

naturalSizeFirst = CGSizeMake(firstVideoAssetTrack.naturalSize.height, firstVideoAssetTrack.naturalSize.width);

naturalSizeSecond = CGSizeMake(secondVideoAssetTrack.naturalSize.height, secondVideoAssetTrack.naturalSize.width);

}

else {

// If the videos weren't shot in portrait mode, we can just use their natural sizes.

naturalSizeFirst = firstVideoAssetTrack.naturalSize;

naturalSizeSecond = secondVideoAssetTrack.naturalSize;

}

float renderWidth, renderHeight;

// Set the renderWidth and renderHeight to the max of the two videos widths and heights.

if (naturalSizeFirst.width > naturalSizeSecond.width) {

renderWidth = naturalSizeFirst.width;

}

else {

renderWidth = naturalSizeSecond.width;

}

if (naturalSizeFirst.height > naturalSizeSecond.height) {

renderHeight = naturalSizeFirst.height;

}

else {

renderHeight = naturalSizeSecond.height;

}

mutableVideoComposition.renderSize = CGSizeMake(renderWidth, renderHeight);

// Set the frame duration to an appropriate value (i.e. 30 frames per second for video).

mutableVideoComposition.frameDuration = CMTimeMake(1,30);
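The width and height swap and the two maximum computations can also be expressed as small functions. The sketch below applies the preferred transform to the natural size to get the displayed size, then takes the larger width and height of the two videos. OrientedSize and RenderSizeForSizes are hypothetical helper names, not framework APIs.

```objc
#import <Foundation/Foundation.h>
#import <CoreGraphics/CoreGraphics.h>
#include <math.h>

// Hypothetical helper: the size a track occupies once its preferred transform
// is applied (for a quarter-turn rotation, width and height are swapped).
static CGSize OrientedSize(CGSize naturalSize, CGAffineTransform preferredTransform) {
    CGRect rect = CGRectMake(0, 0, naturalSize.width, naturalSize.height);
    CGRect transformed = CGRectApplyAffineTransform(rect, preferredTransform);
    return CGSizeMake(fabs(transformed.size.width), fabs(transformed.size.height));
}

// Hypothetical helper: a render size large enough to hold both videos.
static CGSize RenderSizeForSizes(CGSize first, CGSize second) {
    return CGSizeMake(MAX(first.width, second.width),
                      MAX(first.height, second.height));
}
```

For example, the render size could be set with `RenderSizeForSizes(OrientedSize(firstVideoAssetTrack.naturalSize, firstTransform), OrientedSize(secondVideoAssetTrack.naturalSize, secondTransform))`.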

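Exporting the Composition and Saving It to the Camera Roll

The final step in this process is to export the entire composition into a single video file and save that video to the Camera Roll. You use an AVAssetExportSession object to create the new video file, and you pass it the desired URL for the output file. You can then use the ALAssetsLibrary class to save the resulting video file to the Camera Roll.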

// Create a static date formatter so we only have to initialize it once.

static NSDateFormatter *kDateFormatter;

if (!kDateFormatter) {

kDateFormatter = [[NSDateFormatter alloc] init];

kDateFormatter.dateStyle = NSDateFormatterMediumStyle;

kDateFormatter.timeStyle = NSDateFormatterShortStyle;

}

// Create the export session with the composition and set the preset to the highest quality.

AVAssetExportSession *exporter = [[AVAssetExportSession alloc] initWithAsset:mutableComposition presetName:AVAssetExportPresetHighestQuality];

// Set the desired output URL for the file created by the export process.

exporter.outputURL = [[[[NSFileManager defaultManager] URLForDirectory:NSDocumentDirectory inDomain:NSUserDomainMask appropriateForURL:nil create:YES error:nil] URLByAppendingPathComponent:[kDateFormatter stringFromDate:[NSDate date]]] URLByAppendingPathExtension:CFBridgingRelease(UTTypeCopyPreferredTagWithClass((CFStringRef)AVFileTypeQuickTimeMovie, kUTTagClassFilenameExtension))];

// Set the output file type to be a QuickTime movie.

exporter.outputFileType = AVFileTypeQuickTimeMovie;

exporter.shouldOptimizeForNetworkUse = YES;

exporter.videoComposition = mutableVideoComposition;

// Asynchronously export the composition to a video file and save this file to the camera roll once export completes.

[exporter exportAsynchronouslyWithCompletionHandler:^{

dispatch_async(dispatch_get_main_queue(), ^{

if (exporter.status == AVAssetExportSessionStatusCompleted) {

ALAssetsLibrary *assetsLibrary = [[ALAssetsLibrary alloc] init];

if ([assetsLibrary videoAtPathIsCompatibleWithSavedPhotosAlbum:exporter.outputURL]) {

[assetsLibrary writeVideoAtPathToSavedPhotosAlbum:exporter.outputURL completionBlock:NULL];

}

}

});

}];
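ALAssetsLibrary has been deprecated since iOS 9 in favor of the Photos framework. As a hedged alternative for newer deployment targets, the sketch below saves an exported file with PHPhotoLibrary. SaveVideoToPhotoLibrary is a hypothetical helper name, and the sketch assumes the app has photo library add permission (including the NSPhotoLibraryAddUsageDescription entry in Info.plist).

```objc
#import <Foundation/Foundation.h>
#import <Photos/Photos.h>

// Hypothetical helper (not a Photos API): asks the photo library to create a
// new video asset from the file at fileURL, then reports the result on the
// main queue. Assumes the user has granted permission to add to the library.
static void SaveVideoToPhotoLibrary(NSURL *fileURL,
                                    void (^completion)(BOOL success, NSError *error)) {
    [[PHPhotoLibrary sharedPhotoLibrary] performChanges:^{
        // Change requests must be created inside the change block.
        [PHAssetChangeRequest creationRequestForAssetFromVideoAtFileURL:fileURL];
    } completionHandler:^(BOOL success, NSError *error) {
        dispatch_async(dispatch_get_main_queue(), ^{
            if (completion) {
                completion(success, error);
            }
        });
    }];
}
```

In the export completion handler, `SaveVideoToPhotoLibrary(exporter.outputURL, ^(BOOL success, NSError *error) { /* update the UI */ });` could take the place of the ALAssetsLibrary calls once the export status is AVAssetExportSessionStatusCompleted.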
