Building a single-node OpenStack on CentOS 7 with KVM

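Overview

This article combines the official OpenStack documentation with a blog post, tested in practice (it mostly follows the blog post). If you want a faster way to set up an OpenStack environment, look at automated tools such as DevStack. For more detailed material on OpenStack, see the official OpenStack website.

This article walks through building an OpenStack cloud platform on a single node with as few components as possible.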

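Environment

- Node: a KVM virtual machine running CentOS 7.3.1611, hosted on a CentOS 7 physical machine

- Network: a single NIC, eth0, with IP 192.168.150.145

OpenStack

I use the Liberty release (because it has official documentation in Chinese).

The following components will be installed and configured: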

| Nova | Neutron | Keystone | Glance | Horizon |
|---|---|---|---|---|

Where:

- Nova: To implement services and associated libraries to provide massively scalable, on demand, self service access to compute resources, including bare metal, virtual machines, and containers.

- Neutron: OpenStack Neutron is an SDN networking project focused on delivering networking-as-a-service (NaaS) in virtual compute environments.

- Keystone: Keystone is an OpenStack service that provides API client authentication, service discovery, and distributed multi-tenant authorization by implementing OpenStack’s Identity API. It supports LDAP, OAuth, OpenID Connect, SAML and SQL.

- Glance: Glance image services include discovering, registering, and retrieving virtual machine images. Glance has a RESTful API that allows querying of VM image metadata as well as retrieval of the actual image. VM images made available through Glance can be stored in a variety of locations from simple filesystems to object-storage systems like the OpenStack Swift project.

- Horizon: Horizon is the canonical implementation of OpenStack's dashboard, which is extensible and provides a web based user interface to OpenStack services.


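Other notes

All operations are performed as root.

Preparing the environment

Prepare the base environment for installing and configuring OpenStack.

Disable the firewall

Disable SELinux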

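sed -i 's#SELINUX=enforcing#SELINUX=disabled#g' /etc/selinux/config #edit the real file: /etc/sysconfig/selinux is a symlink to it, and sed -i would replace the symlink with a regular file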

setenforce 0
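The sed call above only changes a file that currently says enforcing. A minimal sketch of a slightly more defensive variant follows. The name set_selinux_disabled is a hypothetical helper of my own, and --follow-symlinks assumes GNU sed (the default on CentOS 7).

```shell
# Hypothetical helper (not part of any package): switch SELinux to disabled
# in the given config file, whether it currently says enforcing or permissive.
set_selinux_disabled() {
    local cfg="${1:-/etc/selinux/config}"
    # --follow-symlinks (GNU sed) edits the link target instead of replacing the link
    sed -i --follow-symlinks -E 's/^SELINUX=(enforcing|permissive)[[:space:]]*$/SELINUX=disabled/' "$cfg"
    # Print the resulting setting so the change can be checked by eye
    grep -E '^SELINUX=' "$cfg"
}
```

Called without an argument it edits /etc/selinux/config. The file is only read at boot, which is why setenforce 0 is still needed for the running system.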

Disable firewalld

systemctl stop firewalld.service

systemctl disable firewalld.service

Install packages

base

yum install -y http://dl.fedoraproject.org/pub/epel/7/x86_64/e/epel-release-7-8.noarch.rpm #this may fail; that should be harmless

yum install -y centos-release-openstack-liberty

yum install -y python-openstackclient

MySQL

yum install -y mariadb mariadb-server MySQL-python

RabbitMQ

yum install -y rabbitmq-server

Keystone

yum install -y openstack-keystone httpd mod_wsgi memcached python-memcached

Glance

yum install -y openstack-glance python-glance python-glanceclient

Nova Control

yum install -y openstack-nova-api openstack-nova-cert openstack-nova-conductor openstack-nova-console openstack-nova-novncproxy openstack-nova-scheduler python-novaclient

Nova compute

yum install -y openstack-nova-compute sysfsutils

Neutron

yum install -y openstack-neutron openstack-neutron-ml2 openstack-neutron-linuxbridge python-neutronclient ebtables ipset

Dashboard

yum install -y openstack-dashboard

Configure MySQL

For common MySQL operations, see this blog post.

cp /usr/share/mariadb/my-medium.cnf /etc/my.cnf #or /usr/share/mysql/my-medium.cnf

Add the following to /etc/my.cnf:

[mysqld]

default-storage-engine = innodb

innodb_file_per_table

collation-server = utf8_general_ci

init-connect = 'SET NAMES utf8'

character-set-server = utf8

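systemctl enable mariadb.service #on CentOS 7, MySQL is provided by MariaDB

ln -s '/usr/lib/systemd/system/mariadb.service' '/etc/systemd/system/multi-user.target.wants/mariadb.service' #printed by systemctl enable, not a command to type

mysql_install_db --datadir="/var/lib/mysql" --user="mysql" #initialize the database

systemctl start mariadb.service

mysql_secure_installation #set the root password to 123456, then answer y to every prompt

At this point the MySQL configuration file is in place and the database root password is 123456 (the mysql passed to --user above is the system account that owns the data directory).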

Create the databases

[root@localhost ~]# mysql -p123456 #log in as root to create the databases

Welcome to the MariaDB monitor. Commands end with ; or \g.

Your MariaDB connection id is 5579

Server version: 5.5.50-MariaDB MariaDB Server

Copyright (c) 2000, 2016, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MariaDB [(none)]> CREATE DATABASE keystone;

MariaDB [(none)]> GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'localhost' IDENTIFIED BY 'keystone';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'%' IDENTIFIED BY 'keystone';

MariaDB [(none)]> CREATE DATABASE glance;

MariaDB [(none)]> GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'localhost' IDENTIFIED BY 'glance';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'%' IDENTIFIED BY 'glance';

MariaDB [(none)]> CREATE DATABASE nova;

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'localhost' IDENTIFIED BY 'nova';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%' IDENTIFIED BY 'nova';

MariaDB [(none)]> CREATE DATABASE neutron;

MariaDB [(none)]> GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'localhost' IDENTIFIED BY 'neutron';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%' IDENTIFIED BY 'neutron';

MariaDB [(none)]> flush privileges;

MariaDB [(none)]> show databases;

+--------------------+

| Database |

+--------------------+

| information_schema |

| glance |

| keystone |

| mysql |

| neutron |

| nova |

| performance_schema |

+--------------------+

7 rows in set (0.00 sec)

MariaDB [(none)]>\q
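The twelve statements above follow one pattern per service, so they can also be generated. The sketch below is one way to do that, assuming (as in this article) that each database, user and password share the service name. service_db_sql and all_service_db_sql are hypothetical helper names, not existing tools.

```shell
# Hypothetical helper: print the SQL that creates one service database and
# grants the matching user access from localhost and from any host.
service_db_sql() {
    local name="$1" password="$2"
    cat <<EOF
CREATE DATABASE IF NOT EXISTS ${name};
GRANT ALL PRIVILEGES ON ${name}.* TO '${name}'@'localhost' IDENTIFIED BY '${password}';
GRANT ALL PRIVILEGES ON ${name}.* TO '${name}'@'%' IDENTIFIED BY '${password}';
EOF
}

# Print the statements for all four services used in this article
all_service_db_sql() {
    local svc
    for svc in keystone glance nova neutron; do
        service_db_sql "$svc" "$svc"   # password equals the name, as above
    done
    echo "FLUSH PRIVILEGES;"
}
```

After reviewing the output, it can be piped into the client, for example all_service_db_sql | mysql -uroot -p. For anything beyond a test setup, pass a real password instead of the service name.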

Following another blog post, raise MySQL's connection limit; otherwise later OpenStack operations fail with "ERROR 1040 (08004): Too many connections".

Edit /etc/my.cnf

Add the following line under [mysqld]:

max_connections=1000

Edit /usr/lib/systemd/system/mariadb.service

Add the following two lines under [Service]:

LimitNOFILE=10000

LimitNPROC=10000

Reload the systemd unit files and restart the mariadb service

systemctl --system daemon-reload

systemctl restart mariadb.service
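Files under /usr/lib/systemd/system belong to the package and may be overwritten by an update. A possible alternative, sketched here under that assumption, is to put the same two limits into a systemd drop-in file. write_mariadb_limits and the file name limits.conf are hypothetical choices of mine.

```shell
# Hypothetical helper: write the two limits into a systemd drop-in file.
# The default directory is the standard per-unit override location.
write_mariadb_limits() {
    local dir="${1:-/etc/systemd/system/mariadb.service.d}"
    mkdir -p "$dir"
    cat > "$dir/limits.conf" <<'EOF'
[Service]
LimitNOFILE=10000
LimitNPROC=10000
EOF
}
```

As with the direct edit, run systemctl daemon-reload and restart mariadb.service afterwards for the limits to apply.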

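Configure RabbitMQ

For background on RabbitMQ, see this article.

MQ stands for Message Queue. A message queue is a method of application-to-application communication: applications communicate by writing messages (application-specific data) to queues and reading them back, without needing a dedicated connection between them. Message passing means that programs communicate by sending data in messages rather than by calling each other directly, which is what techniques such as remote procedure calls do. Queuing means that applications communicate through queues, which removes the requirement that the sending and receiving applications run at the same time.

RabbitMQ is a complete, reusable enterprise messaging system built on AMQP. It is released under the Mozilla Public License.

Start RabbitMQ (port 5672) and add the openstack user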

systemctl enable rabbitmq-server.service

ln -s '/usr/lib/systemd/system/rabbitmq-server.service' '/etc/systemd/system/multi-user.target.wants/rabbitmq-server.service' #printed by systemctl enable, not a command to type

systemctl start rabbitmq-server.service

rabbitmqctl add_user openstack openstack #add the user and its password

rabbitmqctl set_permissions openstack ".*" ".*" ".*" #grant the openstack user configure, write and read permissions

rabbitmq-plugins list #list the available plugins

.........

[ ] rabbitmq_management 3.6.2 #this plugin provides the web management UI

.........

rabbitmq-plugins enable rabbitmq_management #enable the plugin

The following plugins have been enabled:

mochiweb

webmachine

rabbitmq_web_dispatch

amqp_client

rabbitmq_management_agent

rabbitmq_management

Plugin configuration has changed. Restart RabbitMQ for changes to take effect.

systemctl restart rabbitmq-server.service

lsof -i:15672

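Open the RabbitMQ management UI at http://localhost:15672.

The default username and password are both guest. Log in with the default user and, on the Admin tab, set the password (openstack) and tag (administrator) for the openstack user.

Then log out and log back in as openstack.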

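Install and configure KVM

This procedure follows the guide linked here.

Check CPU virtualization support

grep -E 'svm|vmx' /proc/cpuinfo #any output means virtualization is supported; otherwise it has to be enabled separately

If the host is itself a KVM guest, see here for how to enable CPU virtualization support inside it.

Install packages

yum install qemu-kvm libvirt virt-install virt-manager #virt-manager is the GUI and is optional

Enable and start the libvirtd service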

systemctl enable libvirtd

systemctl start libvirtd

Verify the kernel modules

lsmod |grep kvm

kvm_intel 170181 6

kvm 554609 1 kvm_intel

irqbypass 13503 5 kvm

virsh list
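The CPU check earlier in this section prints every matching line of /proc/cpuinfo, which is noisy on a multi-core machine. As a small sketch, the hypothetical helper cpu_virt_flag below reduces that to a single word. It takes the file path as a parameter only so that it can be tried against a sample file.

```shell
# Hypothetical helper: print vmx (Intel) or svm (AMD) if the CPU flags
# contain one of them, and print nothing otherwise.
cpu_virt_flag() {
    local cpuinfo="${1:-/proc/cpuinfo}"
    # -w matches whole words only, so flags such as svm_lock are not counted
    grep -o -w -E 'vmx|svm' "$cpuinfo" | head -n 1
}
```

An empty result means the flag is missing, in which case virt_type=kvm in nova.conf is unlikely to work on this node.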

That completes the base environment. Next, install the OpenStack components.

Configure the Keystone identity service

Configure Keystone

Edit /etc/keystone/keystone.conf

Generate a random value

openssl rand -hex 10

bc0aa2b6eae6c007fcbf

cat /etc/keystone/keystone.conf|grep -v "^#"|grep -v "^$"

[DEFAULT]

#set the token to the random value generated above

admin_token = bc0aa2b6eae6c007fcbf

verbose = true

log_dir = /var/log/keystone

[assignment]

[auth]

[cache]

[catalog]

[cors]

[cors.subdomain]

[credential]

[database]

connection = mysql://keystone:keystone@192.168.150.145/keystone

[domain_config]

[endpoint_filter]

[endpoint_policy]

[eventlet_server]

[eventlet_server_ssl]

[federation]

[fernet_tokens]

[identity]

[identity_mapping]

[kvs]

[ldap]

[matchmaker_redis]

[matchmaker_ring]

[memcache]

#or possibly 192.168.150.145?

servers = localhost:11211

[oauth1]

[os_inherit]

[oslo_messaging_amqp]

[oslo_messaging_qpid]

[oslo_messaging_rabbit]

[oslo_middleware]

[oslo_policy]

[paste_deploy]

[policy]

[resource]

[revoke]

driver = sql

[role]

[saml]

[signing]

[ssl]

[token]

provider = uuid

driver = memcache

[tokenless_auth]

[trust]
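The cat ... | grep -v "^#" | grep -v "^$" pipeline used for this listing is repeated for every configuration file in this article. A minimal sketch of the same filter as a single grep call follows. show_conf is a hypothetical helper name, and unlike the original pipeline it also drops comment lines that are indented.

```shell
# Hypothetical helper: print only the effective lines of a config file,
# dropping comment lines and blank lines in a single grep call.
show_conf() {
    grep -Ev '^[[:space:]]*(#|$)' "$1"
}
```

For example, show_conf /etc/keystone/keystone.conf prints the same kind of listing as above.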

Create the database tables by running the sync command

su -s /bin/sh -c "keystone-manage db_sync" keystone

No handlers could be found for logger "oslo_config.cfg" #this message does not affect later steps and can be ignored

ll /var/log/keystone/keystone.log

-rw-r--r--. 1 keystone keystone 298370 Aug 26 11:36 /var/log/keystone/keystone.log #the su above is needed because the log file is owned by keystone

mysql -h 192.168.150.145 -u keystone -p #check the tables; in production, use a stronger password than keystone

Start memcached and Apache

Start memcached

systemctl enable memcached

ln -s '/usr/lib/systemd/system/memcached.service' '/etc/systemd/system/multi-user.target.wants/memcached.service' #printed by systemctl enable, not a command to type

systemctl start memcached

Configure httpd

vim /etc/httpd/conf/httpd.conf

ServerName 192.168.150.145:80

cat /etc/httpd/conf.d/wsgi-keystone.conf

Listen 5000

Listen 35357

<VirtualHost *:5000>

    WSGIDaemonProcess keystone-public processes=5 threads=1 user=keystone group=keystone display-name=%{GROUP}

    WSGIProcessGroup keystone-public

    WSGIScriptAlias / /usr/bin/keystone-wsgi-public

    WSGIApplicationGroup %{GLOBAL}

    WSGIPassAuthorization On

    <IfVersion >= 2.4>

        ErrorLogFormat "%{cu}t %M"

    </IfVersion>

    ErrorLog /var/log/httpd/keystone-error.log

    CustomLog /var/log/httpd/keystone-access.log combined

    <Directory /usr/bin>

        <IfVersion >= 2.4>

            Require all granted

        </IfVersion>

        <IfVersion < 2.4>

            Order allow,deny

            Allow from all

        </IfVersion>

    </Directory>

</VirtualHost>

<VirtualHost *:35357>

    WSGIDaemonProcess keystone-admin processes=5 threads=1 user=keystone group=keystone display-name=%{GROUP}

    WSGIProcessGroup keystone-admin

    WSGIScriptAlias / /usr/bin/keystone-wsgi-admin

    WSGIApplicationGroup %{GLOBAL}

    WSGIPassAuthorization On

    <IfVersion >= 2.4>

        ErrorLogFormat "%{cu}t %M"

    </IfVersion>

    ErrorLog /var/log/httpd/keystone-error.log

    CustomLog /var/log/httpd/keystone-access.log combined

    <Directory /usr/bin>

        <IfVersion >= 2.4>

            Require all granted

        </IfVersion>

        <IfVersion < 2.4>

            Order allow,deny

            Allow from all

        </IfVersion>

    </Directory>

</VirtualHost>

Start httpd

systemctl enable httpd

ln -s '/usr/lib/systemd/system/httpd.service' '/etc/systemd/system/multi-user.target.wants/httpd.service' #printed by systemctl enable, not a command to type

systemctl start httpd

netstat -lntup|grep httpd

tcp6 0 0 :::80 :::* LISTEN 6191/httpd

tcp6 0 0 :::35357 :::* LISTEN 6191/httpd

tcp6 0 0 :::5000 :::* LISTEN 6191/httpd

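If httpd fails to start, disable SELinux or install openstack-selinux (yum install openstack-selinux).

Create the Keystone users

Temporarily set the admin_token environment variables, which are used to create the users

export OS_TOKEN=bc0aa2b6eae6c007fcbf #the random value generated above

export OS_URL=http://192.168.150.145:35357/v3

export OS_IDENTITY_API_VERSION=3

Create the admin project, create the admin user (password admin; do not do this in production), create the admin role, then add the admin user to the admin project with the admin role (admin appears in three places: project, user and role)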

openstack project create --domain default --description "Admin Project" admin

openstack user create --domain default --password-prompt admin

openstack role create admin

openstack role add --project admin --user admin admin

Create a regular user, demo

openstack project create --domain default --description "Demo Project" demo

openstack user create --domain default --password=demo demo

openstack role create user

openstack role add --project demo --user demo user

Create the service project, which the other services will use

openstack project create --domain default --description "Service Project" service

The names above are fixed and must not be changed

List the users and projects just created

openstack user list

+----------------------------------+-------+

| ID | Name |

+----------------------------------+-------+

| b1f164577a2d43b9a6393527f38e3f75 | demo |

| b694d8f0b70b41d883665f9524c77766 | admin |

+----------------------------------+-------+

openstack project list

+----------------------------------+---------+

| ID | Name |

+----------------------------------+---------+

| 604f9f78853847ac9ea3c31f2c7f677d | demo |

| 777f4f0108b1476eabc11e00dccaea9f | admin |

| aa087f62f1d44676834d43d0d902d473 | service |

+----------------------------------+---------+

Register the Keystone service. The three endpoint types below are public, internal and admin.

This step is error-prone; for the causes and fixes, see here.

openstack service create --name keystone --description "OpenStack Identity" identity

openstack endpoint create --region RegionOne identity public http://192.168.150.145:5000/v2.0

openstack endpoint create --region RegionOne identity internal http://192.168.150.145:5000/v2.0

openstack endpoint create --region RegionOne identity admin http://192.168.150.145:35357/v2.0

openstack endpoint list #list them

......

A table listing the three endpoints

......

Verify by requesting a token; Keystone is configured correctly only if a token is returned

unset OS_TOKEN

unset OS_URL

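openstack --os-auth-url http://192.168.150.145:35357/v3 --os-project-domain-id default --os-user-domain-id default --os-project-name admin --os-username admin --os-auth-type password token issue #press Enter

Password: admin

......

A table showing the token details

......

Use environment variables to obtain a token; the same variables are needed later when creating virtual machines.

Create two environment files and load them with source admin-openrc.sh or source demo-openrc.sh (from the directory that contains them)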

cat admin-openrc.sh

export OS_PROJECT_DOMAIN_ID=default

export OS_USER_DOMAIN_ID=default

export OS_PROJECT_NAME=admin

export OS_TENANT_NAME=admin

export OS_USERNAME=admin

export OS_PASSWORD=admin

export OS_AUTH_URL=http://192.168.150.145:35357/v3

export OS_IDENTITY_API_VERSION=3

cat demo-openrc.sh

export OS_PROJECT_DOMAIN_ID=default

export OS_USER_DOMAIN_ID=default

export OS_PROJECT_NAME=demo

export OS_TENANT_NAME=demo

export OS_USERNAME=demo

export OS_PASSWORD=demo

export OS_AUTH_URL=http://192.168.150.145:5000/v3

export OS_IDENTITY_API_VERSION=3
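The two files differ only in project, user, password and URL, so they can be generated from one template. The sketch below assumes simple passwords without spaces or shell metacharacters. write_openrc is a hypothetical helper name, and the chmod is my own addition rather than part of the original steps.

```shell
# Hypothetical helper: write an openrc file for one project/user pair.
# Arguments: project name, user name, password, Keystone URL, output file.
write_openrc() {
    local project="$1" user="$2" password="$3" auth_url="$4" out="$5"
    cat > "$out" <<EOF
export OS_PROJECT_DOMAIN_ID=default
export OS_USER_DOMAIN_ID=default
export OS_PROJECT_NAME=${project}
export OS_TENANT_NAME=${project}
export OS_USERNAME=${user}
export OS_PASSWORD=${password}
export OS_AUTH_URL=${auth_url}
export OS_IDENTITY_API_VERSION=3
EOF
    chmod 600 "$out"   # the file holds a password in clear text
}
```

For example, write_openrc admin admin admin http://192.168.150.145:35357/v3 admin-openrc.sh reproduces the first file shown above.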

source admin-openrc.sh #load the environment variables above

openstack token issue #show the token details

......

A table showing the token details

......

Configure the Glance image service

Glance configuration

Edit /etc/glance/glance-api.conf and /etc/glance/glance-registry.conf

cat /etc/glance/glance-api.conf|grep -v "^#"|grep -v "^$"

[DEFAULT]

verbose=True

notification_driver = noop

[database]

connection=mysql://glance:glance@192.168.150.145/glance

[glance_store]

default_store=file

filesystem_store_datadir=/var/lib/glance/images/

[image_format]

[keystone_authtoken]

auth_uri = http://192.168.150.145:5000

auth_url = http://192.168.150.145:35357

auth_plugin = password

project_domain_id = default

user_domain_id = default

project_name = service

username = glance

password = glance

[matchmaker_redis]

[matchmaker_ring]

[oslo_concurrency]

[oslo_messaging_amqp]

[oslo_messaging_qpid]

[oslo_messaging_rabbit]

[oslo_policy]

[paste_deploy]

flavor=keystone

[store_type_location_strategy]

[task]

[taskflow_executor]

cat /etc/glance/glance-registry.conf|grep -v "^#"|grep -v "^$"

[DEFAULT]

verbose=True

notification_driver = noop

[database]

connection=mysql://glance:glance@192.168.150.145/glance

[glance_store]

[keystone_authtoken]

auth_uri = http://192.168.150.145:5000

auth_url = http://192.168.150.145:35357

auth_plugin = password

project_domain_id = default

user_domain_id = default

project_name = service

username = glance

password = glance

[matchmaker_redis]

[matchmaker_ring]

[oslo_messaging_amqp]

[oslo_messaging_qpid]

[oslo_messaging_rabbit]

[oslo_policy]

[paste_deploy]

flavor=keystone

Create the database tables by syncing the database

su -s /bin/sh -c "glance-manage db_sync" glance

mysql -h 192.168.150.145 -uglance -p

Create the Keystone user for Glance

source admin-openrc.sh

openstack user create --domain default --password=glance glance

openstack role add --project service --user glance admin

Start Glance

systemctl enable openstack-glance-api

systemctl enable openstack-glance-registry

systemctl start openstack-glance-api

systemctl start openstack-glance-registry

netstat -lnutp |grep 9191 #registry

tcp 0 0 0.0.0.0:9191 0.0.0.0:* LISTEN 1333/python2

netstat -lnutp |grep 9292 #api

tcp 0 0 0.0.0.0:9292 0.0.0.0:* LISTEN 1329/python2

Register with Keystone

source admin-openrc.sh

openstack service create --name glance --description "OpenStack Image service" image

openstack endpoint create --region RegionOne image public http://192.168.150.145:9292

openstack endpoint create --region RegionOne image internal http://192.168.150.145:9292

openstack endpoint create --region RegionOne image admin http://192.168.150.145:9292
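Every service in this article is registered with the same three endpoint-create calls. As a sketch, the hypothetical helper endpoint_commands below prints those three commands for a given service type so they can be reviewed before being run. It only builds text and does not call the openstack client itself.

```shell
# Hypothetical helper: print (not run) the three endpoint-create commands
# for one service type. The admin URL defaults to the public/internal URL.
endpoint_commands() {
    local service_type="$1" url="$2" admin_url="${3:-$2}"
    local iface target
    for iface in public internal admin; do
        if [ "$iface" = admin ]; then
            target="$admin_url"
        else
            target="$url"
        fi
        # Single quotes keep characters such as %(tenant_id)s away from the shell
        echo "openstack endpoint create --region RegionOne $service_type $iface '$target'"
    done
}
```

For Glance, endpoint_commands image http://192.168.150.145:9292 prints the three commands used above; once checked, the output can be piped to sh. The optional third argument covers Keystone, whose admin endpoint uses port 35357.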

Add the Glance environment variable and test

echo "export OS_IMAGE_API_VERSION=2" | tee -a admin-openrc.sh demo-openrc.sh

glance image-list

+----+------+

| ID | Name |

+----+------+

+----+------+

Download an image and upload it to Glance (the CirrOS image used here is built for testing and is very small)

wget -q http://download.cirros-cloud.net/0.3.4/cirros-0.3.4-x86_64-disk.img

glance image-create --name "cirros" --file cirros-0.3.4-x86_64-disk.img --disk-format qcow2 --container-format bare --visibility public --progress

[=============================>] 100%

+------------------+--------------------------------------+

| Property | Value |

+------------------+--------------------------------------+

......

......

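You can also upload official distribution images, but their account passwords are usually unknown, so another option is to build your own image:

For building an OpenStack image from an ISO image, see http://www.cnblogs.com/kevingrace/p/5821823.html

List the images: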

glance image-list

+--------------------------------------+-----------------+

| ID | Name |

+--------------------------------------+-----------------+

| 2fa1b84f-51c0-49c6-af78-b121205eba08 | CentOS-7-x86_64 |

| 722e10fb-9a0b-4c56-9075-f6a3c5bbba66 | cirros |

+--------------------------------------+-----------------+

Configure the Nova compute service

Nova configuration

Edit /etc/nova/nova.conf

cat /etc/nova/nova.conf|grep -v "^#"|grep -v "^$"

[DEFAULT]

my_ip=192.168.150.145

enabled_apis=osapi_compute,metadata

auth_strategy=keystone

network_api_class=nova.network.neutronv2.api.API

linuxnet_interface_driver=nova.network.linux_net.NeutronLinuxBridgeInterfaceDriver

security_group_api=neutron

firewall_driver = nova.virt.firewall.NoopFirewallDriver

debug=true

verbose=true

rpc_backend=rabbit

allow_resize_to_same_host=True

scheduler_default_filters=RetryFilter,AvailabilityZoneFilter,RamFilter,ComputeFilter,ComputeCapabilitiesFilter,ImagePropertiesFilter,ServerGroupAntiAffinityFilter,ServerGroupAffinityFilter

vif_plugging_is_fatal=false

vif_plugging_timeout=0

log_dir=/var/log/nova

[api_database]

[barbican]

[cells]

[cinder]

[conductor]

[cors]

[cors.subdomain]

[database]

connection=mysql://nova:nova@192.168.150.145/nova

[ephemeral_storage_encryption]

[glance]

host=$my_ip

[guestfs]

[hyperv]

[image_file_url]

[ironic]

[keymgr]

[keystone_authtoken]

auth_uri = http://192.168.150.145:5000

auth_url = http://192.168.150.145:35357

auth_plugin = password

project_domain_id = default

user_domain_id = default

project_name = service

username = nova

password = nova

[libvirt]

virt_type=kvm

[matchmaker_redis]

[matchmaker_ring]

[metrics]

[neutron]

url = http://192.168.150.145:9696

auth_url = http://192.168.150.145:35357

auth_plugin = password

project_domain_id = default

user_domain_id = default

region_name = RegionOne

project_name = service

username = neutron

password = neutron

service_metadata_proxy = True

metadata_proxy_shared_secret = neutron

[osapi_v21]

[oslo_concurrency]

lock_path=/var/lib/nova/tmp

[oslo_messaging_amqp]

[oslo_messaging_qpid]

[oslo_messaging_rabbit]

rabbit_host=192.168.150.145

rabbit_port=5672

rabbit_userid=openstack

rabbit_password=openstack

[oslo_middleware]

[rdp]

[serial_console]

[spice]

[ssl]

[trusted_computing]

[upgrade_levels]

[vmware]

[vnc]

novncproxy_base_url=http://192.168.150.145:6080/vnc_auto.html

vncserver_listen= $my_ip

vncserver_proxyclient_address= $my_ip

keymap=en-us

[workarounds]

[xenserver]

[zookeeper]

Why the network setting is written as network_api_class=nova.network.neutronv2.api.API:

ls /usr/lib/python2.7/site-packages/nova/network/neutronv2/api.py

/usr/lib/python2.7/site-packages/nova/network/neutronv2/api.py

That module defines a class named API; the other options follow the same pattern

Sync the database

su -s /bin/sh -c "nova-manage db sync" nova

mysql -h 192.168.150.145 -unova -p #check

Create the Keystone user for Nova

openstack user create --domain default --password=nova nova

openstack role add --project service --user nova admin

Start the Nova services

systemctl enable openstack-nova-api.service openstack-nova-cert.service openstack-nova-consoleauth.service openstack-nova-scheduler.service openstack-nova-conductor.service openstack-nova-novncproxy.service

systemctl start openstack-nova-api.service openstack-nova-cert.service openstack-nova-consoleauth.service openstack-nova-scheduler.service openstack-nova-conductor.service openstack-nova-novncproxy.service

systemctl enable libvirtd openstack-nova-compute

systemctl start libvirtd openstack-nova-compute

source admin-openrc.sh

openstack service create --name nova --description "OpenStack Compute" compute

openstack endpoint create --region RegionOne compute public http://192.168.150.145:8774/v2/%\(tenant_id\)s

openstack endpoint create --region RegionOne compute internal http://192.168.150.145:8774/v2/%\(tenant_id\)s

openstack endpoint create --region RegionOne compute admin http://192.168.150.145:8774/v2/%\(tenant_id\)s

Check

openstack host list

+-----------------------+-------------+----------+

| Host Name | Service | Zone |

+-----------------------+-------------+----------+

| localhost.localdomain | cert | internal |

| localhost.localdomain | conductor | internal |

| localhost.localdomain | consoleauth | internal |

| localhost.localdomain | scheduler | internal |

| localhost.localdomain | compute | nova |

+-----------------------+-------------+----------+

nova image-list #check that Glance works

+--------------------------------------+-----------------+--------+--------+

| ID | Name | Status | Server |

+--------------------------------------+-----------------+--------+--------+

| 2fa1b84f-51c0-49c6-af78-b121205eba08 | CentOS-7-x86_64 | ACTIVE | |

| 722e10fb-9a0b-4c56-9075-f6a3c5bbba66 | cirros | ACTIVE | |

+--------------------------------------+-----------------+--------+--------+

nova endpoints #check Keystone

WARNING: keystone has no endpoint in ! Available endpoints for this service:

+-----------+----------------------------------+

| keystone | Value |

+-----------+----------------------------------+

| id | 33f1d5ddb5a14d9fa4bff2e4f047cc02 |

| interface | public |

| region | RegionOne |

| region_id | RegionOne |

| url | http://192.168.150.145:5000/v2.0 |

+-----------+----------------------------------+

......

......

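Neutron networking service

Neutron is the hardest part to get right.

Introduction to Neutron

From the official documentation (linked here):

Networking provides networks, subnets and routers as object abstractions. Each abstraction has functionality that mimics its physical counterpart: networks contain subnets, and routers route traffic between different subnets and networks.

Each router has one gateway that connects to a network, and many interfaces connected to subnets. Subnets can access machines on other subnets connected to the same router.

Any given Networking setup has at least one external network. Unlike the other networks, the external network is not merely a virtually defined network. Instead, it represents a view into a slice of the physical, external network accessible outside the OpenStack installation. IP addresses on the external network are accessible by anybody physically on the outside network. Because the external network merely represents a view into the outside network, DHCP is disabled on this network.

In addition to external networks, any Networking setup has one or more internal networks. These software-defined networks connect directly to the VMs. Only the VMs on a given internal network, or those on subnets connected through interfaces to the same router, can directly access VMs connected to that network.

For the outside network to access VMs, and vice versa, routers between the networks are needed. Each router has one gateway that is connected to a network and many interfaces that are connected to subnets. Like a physical router, subnets can access machines on other subnets that are connected to the same router, and machines can access the outside network through the router's gateway.

Additionally, you can allocate IP addresses on external networks to ports on the internal network. Whenever something is connected to a subnet, that connection is called a port. You can associate external network IP addresses with ports to VMs.

Networking also supports security groups. Security groups enable administrators to define firewall rules in groups. A VM can belong to one or more security groups, and Networking applies the rules in those security groups to block or unblock ports, port ranges or traffic types for that VM.

Neutron has many concepts and they are complex; see here for the details.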

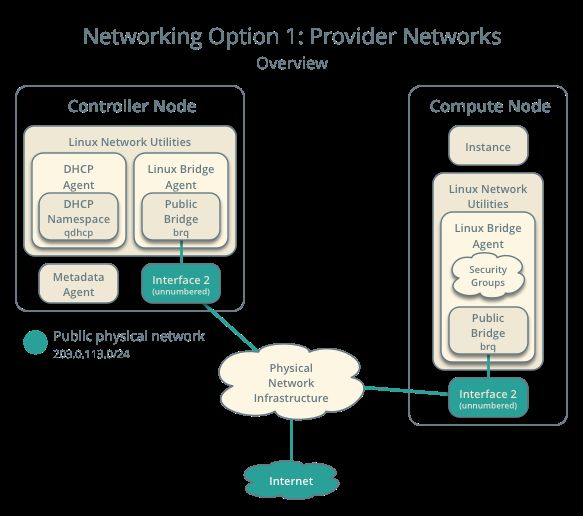

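Neutron offers two networking options:

- Provider networks

- Self-service networks

Provider networks are structurally simpler, so that is the option used here.

NIC configuration

As I understand it, Neutron builds its network by creating a Linux bridge on top of the physical NIC and attaching the network's ingress and egress ports to that bridge. On CentOS 7, bridges on a NIC are normally configured through files in /etc/sysconfig/network-scripts (see here for details), but the bridge that Neutron creates has no such configuration file (or perhaps it does?). This makes the setup fragile: either the host loses its network connection or the instances cannot reach the outside network.

My host's network setup: a single NIC, eth0, with IP 192.168.150.145.

After many attempts, the following approach worked:

1. Change the NIC configuration file to: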

DEVICE=eth0

ONBOOT=yes

TYPE=Ethernet

BOOTPROTO=none #the key change: with DHCP the addresses conflict and the host loses its network

DEFROUTE=yes

PEERDNS=yes

PEERROUTES=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_PEERDNS=yes

IPV6_PEERROUTES=yes

IPV6_FAILURE_FATAL=no

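As shown above, in my tests with DHCP the bridge and the NIC ended up with the same IP, and the conflict took the host offline. With none, the NIC has no IP address, the bridge holds the address, and both the host and the instances can reach the network. (I have not tried static, so I do not know how it behaves.)

2. Configure Neutron as described below; after creating the network and subnet, restart networking with systemctl restart network and check the result.

The method above assumes a single NIC. Another approach that should work is to add a second network interface and build the bridge on it, so that the host's own connectivity is never at risk; this remains to be tested.

Neutron configuration (5 configuration files)

The structure should be:

- neutron-->ml2(Modular Layer 2)-->linuxbridge_agent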

- ----------------------------------------->dhcp_agent

- ----------------------------------------->metadata_agent

Edit /etc/neutron/neutron.conf

cat /etc/neutron/neutron.conf|grep -v "^#"|grep -v "^$"

[DEFAULT]

state_path = /var/lib/neutron

core_plugin = ml2

service_plugins = router

auth_strategy = keystone

notify_nova_on_port_status_changes = True

notify_nova_on_port_data_changes = True

nova_url = http://192.168.150.145:8774/v2

rpc_backend=rabbit

[matchmaker_redis]

[matchmaker_ring]

[quotas]

[agent]

[keystone_authtoken]

auth_uri = http://192.168.150.145:5000

auth_url = http://192.168.150.145:35357

auth_plugin = password

project_domain_id = default

user_domain_id = default

project_name = service

username = neutron

password = neutron

admin_tenant_name = %SERVICE_TENANT_NAME%

admin_user = %SERVICE_USER%

admin_password = %SERVICE_PASSWORD%

[database]

connection = mysql://neutron:neutron@192.168.150.145:3306/neutron

[nova]

auth_url = http://192.168.150.145:35357

auth_plugin = password

project_domain_id = default

user_domain_id = default

region_name = RegionOne

project_name = service

username = nova

password = nova

[oslo_concurrency]

lock_path = $state_path/lock

[oslo_policy]

[oslo_messaging_amqp]

[oslo_messaging_qpid]

[oslo_messaging_rabbit]

rabbit_host = 192.168.150.145

rabbit_port = 5672

rabbit_userid = openstack

rabbit_password = openstack

[qos]

Configure /etc/neutron/plugins/ml2/ml2_conf.ini

cat /etc/neutron/plugins/ml2/ml2_conf.ini|grep -v "^#"|grep -v "^$"

[ml2]

type_drivers = flat,vlan,gre,vxlan,geneve

tenant_network_types = vlan,gre,vxlan,geneve

mechanism_drivers = openvswitch,linuxbridge

extension_drivers = port_security

[ml2_type_flat]

flat_networks = physnet1

[ml2_type_vlan]

[ml2_type_gre]

[ml2_type_vxlan]

[ml2_type_geneve]

[securitygroup]

enable_ipset = True

Configure /etc/neutron/plugins/ml2/linuxbridge_agent.ini, setting the physical interface to eth0

cat /etc/neutron/plugins/ml2/linuxbridge_agent.ini|grep -v "^#"|grep -v "^$"

[linux_bridge]

physical_interface_mappings = physnet1:eth0

[vxlan]

enable_vxlan = false

[agent]

prevent_arp_spoofing = True

[securitygroup]

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

enable_security_group = True

Edit /etc/neutron/dhcp_agent.ini

cat /etc/neutron/dhcp_agent.ini|grep -v "^#"|grep -v "^$"

[DEFAULT]

interface_driver = neutron.agent.linux.interface.BridgeInterfaceDriver

dhcp_driver = neutron.agent.linux.dhcp.Dnsmasq

enable_isolated_metadata = true

[AGENT]

Edit /etc/neutron/metadata_agent.ini

cat /etc/neutron/metadata_agent.ini|grep -v "^#"|grep -v "^$"

[DEFAULT]

auth_uri = http://192.168.150.145:5000

auth_url = http://192.168.150.145:35357

auth_region = RegionOne

auth_plugin = password

project_domain_id = default

user_domain_id = default

project_name = service

username = neutron

password = neutron

nova_metadata_ip = 192.168.150.145

metadata_proxy_shared_secret = neutron

admin_tenant_name = %SERVICE_TENANT_NAME%

admin_user = %SERVICE_USER%

admin_password = %SERVICE_PASSWORD%

[AGENT]

Create the symlink and the Keystone user

ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini

openstack user create --domain default --password=neutron neutron

openstack role add --project service --user neutron admin

Upgrade the database

su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf --config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron

Register with Keystone

source admin-openrc.sh

openstack service create --name neutron --description "OpenStack Networking" network

openstack endpoint create --region RegionOne network public http://192.168.150.145:9696

openstack endpoint create --region RegionOne network internal http://192.168.150.145:9696

openstack endpoint create --region RegionOne network admin http://192.168.150.145:9696

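Start the services and check

Neutron and Nova are linked: setting up Neutron means changing Nova's configuration, and nova.conf above already contains the Neutron settings, so the openstack-nova-api service must be restarted.

Here I restart all the related Nova services together:

systemctl restart openstack-nova-api.service openstack-nova-cert.service openstack-nova-consoleauth.service openstack-nova-scheduler.service openstack-nova-conductor.service openstack-nova-novncproxy.service

Start the Neutron services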

systemctl enable neutron-server.service neutron-linuxbridge-agent.service neutron-dhcp-agent.service neutron-metadata-agent.service

systemctl start neutron-server.service neutron-linuxbridge-agent.service neutron-dhcp-agent.service neutron-metadata-agent.service

Check

neutron agent-list

+--------------------------------------+--------------------+-----------------------+-------+----------------+---------------------------+

| id | agent_type | host | alive | admin_state_up | binary |

+--------------------------------------+--------------------+-----------------------+-------+----------------+---------------------------+

| 36f8e03d-eb99-4161-a5c5-fb96bc1b1bc6 | Metadata agent | localhost.localdomain | :-) | True | neutron-metadata-agent |

| 836ccf30-d057-41e6-8da1-d32c2a8bd0c5 | DHCP agent | localhost.localdomain | :-) | True | neutron-dhcp-agent |

| c58ccbab-1200-4f6c-af25-277b7b147dcb | Linux bridge agent | localhost.localdomain | :-) | True | neutron-linuxbridge-agent |

+--------------------------------------+--------------------+-----------------------+-------+----------------+---------------------------+
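Reading the alive column by eye works for three agents. As a sketch, the same check can be scripted by parsing the table that neutron agent-list prints. dead_agents is a hypothetical helper name, and it assumes the column layout shown above. It treats any alive value other than :-) as not alive, so it does not depend on the exact marker the client prints for a dead agent.

```shell
# Hypothetical helper: read the table printed by "neutron agent-list" on
# stdin and print the agent_type of every agent whose alive column is not :-)
dead_agents() {
    awk -F'|' '
        /^\|/ {
            for (i = 2; i <= 5; i++) gsub(/^ +| +$/, "", $i)  # trim cell padding
            if ($2 == "id") next                              # skip the header row
            if ($5 != ":-)") print $3
        }'
}
```

Usage would be neutron agent-list | dead_agents; empty output means every listed agent reported as alive.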

openstack endpoint list

+----------------------------------+-----------+--------------+--------------+---------+-----------+----------------------------------------------+

| ID | Region | Service Name | Service Type | Enabled | Interface | URL |

+----------------------------------+-----------+--------------+--------------+---------+-----------+----------------------------------------------+

| 272008321250483ea17950359cf20941 | RegionOne | glance | image | True | admin | http://192.168.150.145:9292 |

| 2b9d38fccb274ffc8e17146e316e7828 | RegionOne | glance | image | True | public | http://192.168.150.145:9292 |

| 33f1d5ddb5a14d9fa4bff2e4f047cc02 | RegionOne | keystone | identity | True | public | http://192.168.150.145:5000/v2.0 |

| 38118c8cdd0448d292b0fc23c2d51bf4 | RegionOne | nova | compute | True | public | http://192.168.150.145:8774/v2/%(tenant_id)s |

| 4cde31f433754b6b972fd53a92622ebe | RegionOne | glance | image | True | internal | http://192.168.150.145:9292 |

| 66b0311e804148acb0c66c091daaa250 | RegionOne | nova | compute | True | admin | http://192.168.150.145:8774/v2/%(tenant_id)s |

| 7a5e79cf7dbb44038925397634d3f2e2 | RegionOne | nova | compute | True | internal | http://192.168.150.145:8774/v2/%(tenant_id)s |

| 8cdd3675482e40228549d323ca856bfc | RegionOne | keystone | identity | True | internal | http://192.168.150.145:5000/v2.0 |

| 99da7b1de15543e7a423d1b58cb2ebc7 | RegionOne | keystone | identity | True | admin | http://192.168.150.145:35357/v2.0 |

| a6c8cb68cef24a10b1f1d3517c33e830 | RegionOne | neutron | network | True | public | http://192.168.150.145:9696 |

| a78485b8a5ac444a8497a571817d3a01 | RegionOne | neutron | network | True | internal | http://192.168.150.145:9696 |

| fb12238385d54ea1b04f47ddbbc8d3e9 | RegionOne | neutron | network | True | admin | http://192.168.150.145:9696 |

+----------------------------------+-----------+--------------+--------------+---------+-----------+----------------------------------------------+

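Neutron configuration is now complete.

Create a virtual machine instance

Time to put the configuration above to the test.

Create the bridged network

Create the network (named flat, physical interface physnet1:eth0, network type flat)

source admin-openrc.sh #the shared provider network is created with the admin credentials; the instance itself can later be created under demo or under admin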

neutron net-create flat --shared --provider:physical_network physnet1 --provider:network_type flat

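Create the subnet. This step is error-prone (one of the hard parts of Neutron), because this is where the bridge gets attached to the NIC.

The parameters are:

- Subnet CIDR: it should match the host's network. The host IP is 192.168.150.145, so it should be 192.168.150.0/24;

- Subnet IP pool: it needs addresses that are not yet assigned on the network. I did not know which addresses on the campus network were already taken, so I picked a small range, [192.168.150.190, 192.168.150.200];

- DNS server: I looked up the DNS server on my PC and used 192.168.247.6;

- Gateway: route -n showed 192.168.150.33.

In summary: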

neutron subnet-create flat 192.168.150.0/24 --name flat-subnet --allocation-pool start=192.168.150.190,end=192.168.150.200 --dns-nameserver 192.168.247.6 --gateway 192.168.150.33
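The allocation pool and the gateway both have to lie inside the subnet's CIDR, while the DNS server may sit on any reachable network. A minimal sketch for checking that before running subnet-create follows. ip_to_int and in_cidr are hypothetical helpers written for this article, IPv4 only.

```shell
# Hypothetical helpers for checking the addresses before creating the subnet.

# Convert a dotted-quad IPv4 address to an integer
ip_to_int() {
    local IFS=.
    set -- $1            # split the address into its four octets
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Succeed if address $1 lies inside CIDR block $2 (for example 192.168.150.0/24)
in_cidr() {
    local net="${2%/*}" bits="${2#*/}"
    local mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
    [ $(( $(ip_to_int "$1") & mask )) -eq $(( $(ip_to_int "$net") & mask )) ]
}
```

With the values used here, in_cidr 192.168.150.190 192.168.150.0/24 and in_cidr 192.168.150.33 192.168.150.0/24 both succeed, while the DNS server 192.168.247.6 is outside the block, which is fine for a DNS server.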

List the network and subnet

neutron net-list

+--------------------------------------+------+-------------------------------------------------------+

| id | name | subnets |

+--------------------------------------+------+-------------------------------------------------------+

| 9f42c0f9-56bb-47ab-839e-59bf71276dd5 | flat | c3c8e599-4d36-4997-b9d9-d194710e27ac 192.168.150.0/24 |

+--------------------------------------+------+-------------------------------------------------------+

neutron subnet-list

+--------------------------------------+-------------+------------------+--------------------------------------------------------+

| id | name | cidr | allocation_pools |

+--------------------------------------+-------------+------------------+--------------------------------------------------------+

| c3c8e599-4d36-4997-b9d9-d194710e27ac | flat-subnet | 192.168.150.0/24 | {"start": "192.168.150.190", "end": "192.168.150.200"} |

+--------------------------------------+-------------+------------------+--------------------------------------------------------+

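Create the virtual machine

Create a key

source demo-openrc.sh # create the VM under the demo account; to create it under admin instead, use source admin-openrc.sh

ssh-keygen -q -N "" # saved to /root/.ssh by default: public key id_rsa.pub and private key id_rsa

Register the public key with Nova as the keypair mykey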

nova keypair-add --pub-key /root/.ssh/id_rsa.pub mykey

nova keypair-list

+-------+-------------------------------------------------+

| Name | Fingerprint |

+-------+-------------------------------------------------+

| mykey | cd:7a:1e:cd:c0:43:9b:b1:f4:3b:cf:cd:5e:95:f8:00 |

+-------+-------------------------------------------------+

Add rules to the default security group (allow ICMP and SSH)

nova secgroup-add-rule default icmp -1 -1 0.0.0.0/0

nova secgroup-add-rule default tcp 22 22 0.0.0.0/0

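Creating the virtual machine requires these parameters:

- flavor name;

- image name;

- network ID;

- security group name;

- key name;

- instance name.

The following steps gather them:

View the available flavors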

nova flavor-list

+----+-----------+-----------+------+-----------+------+-------+-------------+-----------+

| ID | Name | Memory_MB | Disk | Ephemeral | Swap | VCPUs | RXTX_Factor | Is_Public |

+----+-----------+-----------+------+-----------+------+-------+-------------+-----------+

| 1 | m1.tiny | 512 | 1 | 0 | | 1 | 1.0 | True |

| 2 | m1.small | 2048 | 20 | 0 | | 1 | 1.0 | True |

| 3 | m1.medium | 4096 | 40 | 0 | | 2 | 1.0 | True |

| 4 | m1.large | 8192 | 80 | 0 | | 4 | 1.0 | True |

| 5 | m1.xlarge | 16384 | 160 | 0 | | 8 | 1.0 | True |

+----+-----------+-----------+------+-----------+------+-------+-------------+-----------+

View the images

nova image-list

+--------------------------------------+-----------------+--------+--------+

| ID | Name | Status | Server |

+--------------------------------------+-----------------+--------+--------+

| 2fa1b84f-51c0-49c6-af78-b121205eba08 | CentOS-7-x86_64 | ACTIVE | |

| 722e10fb-9a0b-4c56-9075-f6a3c5bbba66 | cirros | ACTIVE | |

+--------------------------------------+-----------------+--------+--------+

View the networks

neutron net-list

+--------------------------------------+------+-------------------------------------------------------+

| id | name | subnets |

+--------------------------------------+------+-------------------------------------------------------+

| 9f42c0f9-56bb-47ab-839e-59bf71276dd5 | flat | c3c8e599-4d36-4997-b9d9-d194710e27ac 192.168.150.0/24 |

+--------------------------------------+------+-------------------------------------------------------+

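Suppose the instance is named hello-instance and we want the smallest possible instance for testing. From the output above, the parameters are:

- flavor name: m1.tiny;

- image name: cirros;

- network ID: 9f42c0f9-56bb-47ab-839e-59bf71276dd5;

- security group name: default;

- key name: mykey.

Putting it together (this step also goes wrong easily; see below for details):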

nova boot --flavor m1.tiny --image cirros --nic net-id=9f42c0f9-56bb-47ab-839e-59bf71276dd5 --security-group default --key-name mykey hello-instance
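Copying the network ID by hand is error-prone. One way to avoid that is to extract it from the neutron net-list table by network name. This is a sketch only: net_id_by_name is a hypothetical helper, and it assumes the table layout shown earlier (id in the first column, name in the second).

```shell
#!/bin/sh
# Hypothetical helper: print the id of the network whose name equals $1,
# reading `neutron net-list` table output on stdin.
net_id_by_name() {
    awk -F'|' -v name="$1" '
        NF >= 4 {
            id = $2; n = $3
            gsub(/^[ \t]+|[ \t]+$/, "", id)   # trim the padding around each cell
            gsub(/^[ \t]+|[ \t]+$/, "", n)
            if (n == name) print id
        }'
}

# Demonstration on the table output shown earlier in this post.
sample_table='+--------------------------------------+------+-------------------------------------------------------+
| id | name | subnets |
+--------------------------------------+------+-------------------------------------------------------+
| 9f42c0f9-56bb-47ab-839e-59bf71276dd5 | flat | c3c8e599-4d36-4997-b9d9-d194710e27ac 192.168.150.0/24 |
+--------------------------------------+------+-------------------------------------------------------+'
printf '%s\n' "$sample_table" | net_id_by_name flat
# prints 9f42c0f9-56bb-47ab-839e-59bf71276dd5

# On the real node this would be used as:
#   NET_ID=$(neutron net-list | net_id_by_name flat)
#   nova boot --flavor m1.tiny --image cirros --nic net-id="$NET_ID" \
#       --security-group default --key-name mykey hello-instance
```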

View the virtual machine

nova list

+--------------------------------------+----------------+--------+------------+-------------+----------------------+

| ID | Name | Status | Task State | Power State | Networks |

+--------------------------------------+----------------+--------+------------+-------------+----------------------+

| 3ae1e9cd-5309-4f0e-bcad-f9211da2df12 | hello-instance | ACTIVE | - | Running | flat=192.168.150.191 |

+--------------------------------------+----------------+--------+------------+-------------+----------------------+

As shown above, the instance is in good shape, so the creation should have succeeded.
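The instance does not become ACTIVE immediately, so nova list may have to be run several times. Below is a rough sketch of a polling loop. Both instance_status and wait_for_instance are hypothetical helper names, and the 5-second interval and the default of 30 attempts are arbitrary choices, not values from any documentation.

```shell
#!/bin/sh
# Hypothetical helper: print the Status column for the instance named $1,
# reading `nova list` table output on stdin.
instance_status() {
    awk -F'|' -v name="$1" '
        NF >= 7 {
            n = $3; s = $4
            gsub(/^[ \t]+|[ \t]+$/, "", n)
            gsub(/^[ \t]+|[ \t]+$/, "", s)
            if (n == name) print s
        }'
}

# Hypothetical helper: poll `nova list` until instance $1 is ACTIVE (return 0)
# or ERROR (return 1); give up after $2 attempts, 5 seconds apart (return 2).
wait_for_instance() {
    name=$1
    tries=${2:-30}
    while [ "$tries" -gt 0 ]; do
        status=$(nova list | instance_status "$name")
        case "$status" in
            ACTIVE) echo "$name is ACTIVE"; return 0 ;;
            ERROR)  echo "$name is in ERROR state" >&2; return 1 ;;
        esac
        tries=$((tries - 1))
        sleep 5
    done
    echo "timed out waiting for $name" >&2
    return 2
}

# Demonstration on the row shown above (no OpenStack needed for this line).
printf '%s\n' '| 3ae1e9cd-5309-4f0e-bcad-f9211da2df12 | hello-instance | ACTIVE | - | Running | flat=192.168.150.191 |' \
    | instance_status hello-instance
# prints ACTIVE
```

On the node itself, wait_for_instance hello-instance would be called right after nova boot.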

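If you are unlucky and the instance status is ERROR, you need to track down the cause. The dashboard's detail page for the instance shows the fault, but the full picture comes from the log files, mainly under /var/log/nova and /var/log/neutron: nova-compute.log, nova-conductor.log, server.log, dhcp-agent.log, linuxbridge-agent.log and so on. The other log files there are worth a look too.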
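Rather than opening each log by hand, the ERROR lines can be pulled out of both directories in one go. A minimal sketch, assuming GNU grep as shipped with CentOS 7; scan_errors is a hypothetical helper name, and limiting the output to the last 20 lines is an arbitrary choice.

```shell
#!/bin/sh
# Hypothetical helper: print every line containing ERROR from the *.log files
# in the given directories, prefixed with file name and line number.
scan_errors() {
    for dir in "$@"; do
        [ -d "$dir" ] || continue                  # skip directories that do not exist
        grep -Hn 'ERROR' "$dir"/*.log 2>/dev/null  # -H: always show the file name
    done
}

# Show the 20 most recent matches from the two log directories named above.
scan_errors /var/log/nova /var/log/neutron | tail -n 20
```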

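Some failure cases have already been analyzed here and there (the latter specifically about instance errors); both are worth consulting.

Here is what I ran into myself:

Creating the instance seemed to go fine at first: it entered the spawning state, but after spawning for a while it failed with: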

Failed to allocate the network(s), not rescheduling.

A similar error message can be found in the logs (nova-compute.log or nova-conductor.log; I am not sure which):

ERROR : Build of instance 5ea8c935-ee07-4788-823f-10e2b003ca89 aborted: Failed to allocate the network(s), not rescheduling.

The fix I eventually found is described here, and in more detail (but in English) there; both are worth a look.