DRBD

DRBD consists of a kernel module and associated scripts and tools, and is used to build highly available clusters.

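How DRBD works: DRBD is a block device that can be used for high availability (HA). It works much like a network-based RAID-1.

When you write data to the local file system, the data is also sent over the network to another host and recorded there in the same form in a file system. The data on the local host (primary node) and on the remote host (secondary node) is kept synchronized in real time, so if the local system fails, the remote host still holds an identical copy that can continue to be used. In an HA setup, DRBD can therefore replace a shared disk array: because the data exists on both the local and the remote host, a failover only requires the remote host to start using its own copy to continue serving.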

Main function of DRBD: DRBD receives data, writes it to the local disk, and then sends the same data over the network to another host, which stores it on its own disk.

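DRBD replication modes:

Protocol A: asynchronous replication. A write is reported complete as soon as it has reached the local disk and the replication packet has been placed in the local send buffer; data still sitting in that buffer can be lost.

Protocol B: memory-synchronous (semi-synchronous) replication. A write is reported complete once it has reached the local disk and the replication packet has been received by the peer. If both nodes lose power at the same time, data can be lost.

Protocol C: synchronous replication. A write is reported complete only after both the local and the remote disk have confirmed it. Data can be lost only if both nodes (or their storage) are destroyed at the same time.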

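Protocol C is the usual choice. Because every write has to wait for the peer's confirmation, it adds network latency to each write and can reduce throughput, but for the sake of data reliability we still use protocol C in production.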
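To confirm which protocol a running resource actually uses, you can read it from /proc/drbd: in the DRBD 8.4 status format shown later in this article, the letter right after the ds: field (the C in "ds:UpToDate/UpToDate C") is the replication protocol. A minimal sketch under that assumption; the helper name drbd_protocol is hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the replication protocol letter (A, B or C)
# from one DRBD 8.4 /proc/drbd resource line. In that format the protocol
# is the field that directly follows the "ds:" field.
# Prints nothing if no "ds:" field is found.
drbd_protocol() {
    printf '%s\n' "$1" |
        awk '{ for (i = 1; i < NF; i++) if ($i ~ /^ds:/) { print $(i + 1); exit } }'
}
```

For example, drbd_protocol "$(grep '1: cs:' /proc/drbd)" should print C for the mfs resource configured in section 3.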

corosync

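Corosync is an open cluster engine project that grew out of OpenAIS after OpenAIS reached its Wilson release. Corosync started out merely as an application demonstrating the OpenAIS cluster framework's interface specification, so it can be regarded as part of OpenAIS, but it has since grown well beyond what was originally intended, and more and more vendors use it as the basis of their cluster solutions; Red Hat's RHCS cluster suite, for example, is built on corosync. Corosync provides only the message layer and no CRM (cluster resource manager); Pacemaker is generally used for resource management.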

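Corosync is one component of the cluster management stack. A simple configuration file defines how it passes messages, including the transport and protocol to use.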

pacemaker

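Pacemaker is the cluster resource manager (CRM) that was split out of Heartbeat from version 3 on, and it acts as the control center of the whole HA cluster. To configure Pacemaker you need a front-end interface; one such interface is crmsh (the crm shell), which in newer Pacemaker releases has been split out into a separate project and is no longer part of Pacemaker.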

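Pacemaker is an open-source high-availability cluster resource manager (CRM). It sits at the resource-management and resource-agent (RA) layer of the HA cluster architecture and does not provide low-level heartbeat messaging itself; to communicate with the other nodes, it relies on an underlying messaging service to deliver its information.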

pacemaker+corosync

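Corosync implements the cluster's message layer: it carries the cluster heartbeat and transaction messages.

Pacemaker manages the cluster's resources (CRM). The component that actually starts and stops services in the cluster is the RA (resource agent). RAs come in the following classes: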

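LSB: scripts in /etc/rc.d/init.d/* that support at least start, stop, restart, status, reload and force-reload. Note: they must not be started automatically at boot; the CRM starts them. (CentOS 6 uses this class.)

OCF: scripts in /usr/lib/ocf/resource.d/provider/, similar to LSB scripts but supporting start, stop, status, monitor and meta-data;

STONITH: invokes the functions of a STONITH (fencing) device;

systemd: unit files in /usr/lib/systemd/system/. Note: the service must be enabled (set to start at boot). (Supported on CentOS 7.)

service: a generic alias with which Pacemaker picks whichever system service type (LSB, systemd, and so on) applies to the named service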
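For the LSB class, Pacemaker judges whether a resource is running from the exit code of the script's status action, so a script that does not return the LSB codes can be misjudged. A minimal sketch of an LSB-style status check for a daemon that writes a pidfile; the function name and the pidfile argument are hypothetical, and the codes are the LSB ones for status (0 running, 1 dead but pidfile exists, 3 not running):

```bash
#!/usr/bin/env bash
# Hypothetical LSB-style "status" action for a daemon that records its PID
# in a pidfile. LSB status exit codes used here:
#   0 = running, 1 = dead but pidfile exists, 3 = not running.
lsb_status() {
    local pidfile="$1" pid
    if [ ! -f "$pidfile" ]; then
        return 3                          # no pidfile: not running
    fi
    pid=$(cat "$pidfile")
    if [ -n "$pid" ] && kill -0 "$pid" 2>/dev/null; then
        return 0                          # process exists: running
    fi
    return 1                              # stale pidfile: process is dead
}
```

kill -0 only tests whether a signal could be delivered, so the check should run as root or as the daemon's owner; a real init script would call lsb_status from its status) case and exit with its return code.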

mfs

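MooseFS (hereafter MFS) is a distributed file system that offers strong scalability, high reliability and durability. It stores files spread across different physical machines while presenting clients with a single, transparent storage pool. It also supports online expansion, chunked file storage, no single point of failure among the storage nodes, and efficient reads and writes.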

An MFS distributed file system consists of a metadata server (Master Server), metadata log servers (Metalogger Servers), data storage servers (Chunk Servers) and clients (Client).

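Metadata server: the core component of MFS. It stores the metadata of every file and is responsible for scheduling reads and writes, reclaiming space, and copying data between chunk servers. MFS currently supports only one metadata server, which makes it a potential single point of failure; we address this by combining it with DRBD for high availability.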

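Metadata log server: the backup node of the metadata server. At a configured interval it downloads the files holding the metadata, the change logs and the session information from the metadata server to a local directory. If the metadata server fails, the files on this server provide the information needed to recover the whole system.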

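Data storage server: connects to the metadata server, follows its scheduling, provides storage space, and transfers data to and from clients. MooseFS lets you set the number of copies for each directory manually. If that number is n, every file written to the system is split into chunks and each chunk is copied n times onto different chunk servers. Raising the number of copies does not affect write performance but improves read performance and availability; in other words, it trades storage capacity for read performance and availability.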
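The capacity cost of that trade-off can be estimated with simple arithmetic. A rough sketch, assuming usable space is raw space divided by the number of copies (metadata and chunk overhead ignored) and that each copy of a chunk goes to a different chunk server, so with the two chunk servers in this lab a goal above 2 cannot be fully met; the function names are hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical helpers for a rough MooseFS capacity estimate.
# Copies of one chunk are kept on different chunk servers, so the number
# of copies actually stored is min(goal, number of chunk servers).
mfs_effective_copies() {
    local goal="$1" servers="$2"
    if [ "$goal" -lt "$servers" ]; then
        echo "$goal"
    else
        echo "$servers"
    fi
}

# Usable capacity in the same unit as the raw capacity:
# raw / effective copies (integer division, overhead ignored).
mfs_usable_capacity() {
    local raw="$1" goal="$2" servers="$3" copies
    copies=$(mfs_effective_copies "$goal" "$servers")
    echo $(( raw / copies ))
}
```

With goal 2 and 100 GB of raw chunk-server space, mfs_usable_capacity 100 2 2 prints 50.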

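Client: uses mfsmount, through the FUSE kernel interface, to mount the data stored on the chunk servers managed by the remote metadata server onto a local directory, after which the MFS file system can be used just like local files.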

Environment setup

Topology

| Host | IP | Role | VIP |
| --- | --- | --- | --- |
| centos-5 | 10.0.0.15 | Master (drbd+corosync+pacemaker+mfs) | 10.0.0.150 |
| centos-6 | 10.0.0.16 | Backup (drbd+corosync+pacemaker+mfs) | |
| centos-7 | 10.0.0.17 | Metalogger | |
| centos-8 | 10.0.0.18 | Chunk Server | |
| centos-9 | 10.0.0.19 | Chunk Server | |
| centos-10 | 10.0.0.20 | Client | |

1. First configure the hosts and time synchronization:

## Synchronize time

[root@centos-5 ~]# yum install -y ntpdate
[root@centos-5 ~]# crontab -e
*/5 * * * * ntpdate cn.pool.ntp.org

## Set up SSH key trust

[root@centos-5 ~]# ssh-keygen
[root@centos-5 ~]# ssh-copy-id 10.0.0.16

## Edit /etc/hosts so that all hosts can reach each other by name

[root@centos-5 ~]# cat /etc/hosts
127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
10.0.0.15 centos-5
10.0.0.16 centos-6
10.0.0.17 centos-7
10.0.0.18 centos-8
10.0.0.19 centos-9
10.0.0.20 centos-10
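Because every node needs the same entries, it can help to add them with a small idempotent function that can be re-run on each host. A sketch, assuming the host list from the topology table above; add_hosts is a hypothetical helper, and it only checks for an exact hostname field, not for conflicting IP addresses:

```bash
#!/usr/bin/env bash
# Hypothetical helper: append this lab's host entries to a hosts file,
# skipping any hostname that already appears as a field on some line,
# so running it twice adds nothing the second time.
add_hosts() {
    local file="$1" ip name
    while read -r ip name; do
        [ -z "$ip" ] && continue
        # awk exits 0 if the hostname is already present, 1 otherwise
        if ! awk -v n="$name" '{ for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                               END { exit !found }' "$file"; then
            printf '%s %s\n' "$ip" "$name" >> "$file"
        fi
    done <<'EOF'
10.0.0.15 centos-5
10.0.0.16 centos-6
10.0.0.17 centos-7
10.0.0.18 centos-8
10.0.0.19 centos-9
10.0.0.20 centos-10
EOF
}
```

Run add_hosts /etc/hosts as root on each node.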

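2. Building the pcs cluster:

Prerequisites for configuring the cluster:

1. Time synchronization

2. The hosts can reach each other by host name

3. Decide whether to use a quorum device.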

Lifecycle management tools:

pcs: agent (pcsd)

crmsh: pssh

## Configure on centos-5 and centos-6

2.1. Run on both nodes:

[root@centos-5 ~]# yum install -y pacemaker pcs psmisc policycoreutils-python
[root@centos-6 ~]# yum install -y pacemaker pcs psmisc policycoreutils-python

2.2. Start pcsd and enable it at boot:

[root@centos-5 ~]# systemctl start pcsd
[root@centos-5 ~]# systemctl enable pcsd
[root@centos-6 ~]# systemctl start pcsd
[root@centos-6 ~]# systemctl enable pcsd

2.3. Set the password of the hacluster user:

[root@centos-5 ~]# echo 123456 | passwd --stdin hacluster
[root@centos-6 ~]# echo 123456 | passwd --stdin hacluster

2.4. Authenticate the cluster hosts with pcs (the hacluster user is used by default)

[root@centos-5 ~]# pcs cluster auth centos-5 centos-6
Username: hacluster
Password:
centos-6: Authorized
centos-5: Authorized

2.5. Create the cluster from the two nodes:

[root@centos-5 ~]# pcs cluster setup --name mycluster centos-5 centos-6 --force ## create the cluster

2.6. On either node, check the generated corosync configuration file

[root@centos-5 ~]# ls /etc/corosync/
corosync.conf corosync.conf.example.udpu uidgid.d corosync.conf.example corosync.xml.example
## corosync.conf has been generated

2.7. View the generated configuration file

[root@centos-5 ~]# cat /etc/corosync/corosync.conf

totem {

version: 2

secauth: off

cluster_name: mycluster

transport: udpu

}

nodelist {

node {

ring0_addr: centos-5

nodeid: 1

}

node {

ring0_addr: centos-6

nodeid: 2

}

}

quorum {

provider: corosync_votequorum

two_node: 1

}

logging {

to_logfile: yes

logfile: /var/log/cluster/corosync.log

to_syslog: yes

}

2.8. Start the cluster:

[root@centos-5 ~]# pcs cluster start --all ## starts both corosync and pacemaker
centos-5: Starting Cluster...
centos-6: Starting Cluster...

## Check that they started

[root@centos-5 ~]# ps -ef | grep corosync
root 2294 1 10 16:29 ? 00:00:01 corosync
root 2311 980 0 16:29 pts/0 00:00:00 grep --color=auto corosync
[root@centos-5 ~]# ps -ef | grep pacemaker
root 2301 1 0 16:29 ? 00:00:00 /usr/sbin/pacemakerd -f
haclust+ 2302 2301 1 16:29 ? 00:00:00 /usr/libexec/pacemaker/cib
root 2303 2301 0 16:29 ? 00:00:00 /usr/libexec/pacemaker/stonithd
root 2304 2301 0 16:29 ? 00:00:00 /usr/libexec/pacemaker/lrmd
haclust+ 2305 2301 0 16:29 ? 00:00:00 /usr/libexec/pacemaker/attrd
haclust+ 2306 2301 0 16:29 ? 00:00:00 /usr/libexec/pacemaker/pengine
haclust+ 2307 2301 0 16:29 ? 00:00:00 /usr/libexec/pacemaker/crmd
root 2317 980 0 16:29 pts/0 00:00:00 grep --color=auto pacemaker

2.9. Check the ring status ("no faults" means OK)

[root@centos-5 ~]# corosync-cfgtool -s
Printing ring status.
Local node ID 1
RING ID 0
id = 10.0.0.15
status = ring 0 active with no faults
[root@centos-6 ~]# corosync-cfgtool -s
Printing ring status.
Local node ID 2
RING ID 0
id = 10.0.0.16
status = ring 0 active with no faults
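When checking several nodes, the "no faults" test can be scripted. A minimal sketch that takes the output of corosync-cfgtool -s and succeeds only if there is at least one status line and every status line reports no faults; ring_ok is a hypothetical name, and matching on "no faults" assumes the corosync 2.x wording shown above:

```bash
#!/usr/bin/env bash
# Hypothetical helper: return 0 if every "status = ..." line in the given
# corosync-cfgtool -s output says "no faults", 1 otherwise (including when
# no status line is present at all).
ring_ok() {
    local status_lines
    # Match only "status = ..." lines, not the "Printing ring status." header
    status_lines=$(printf '%s\n' "$1" | grep -E 'status[[:space:]]*=') || return 1
    # Fail if any status line lacks "no faults"
    ! printf '%s\n' "$status_lines" | grep -vq 'no faults'
}
```

For example: ring_ok "$(corosync-cfgtool -s)" && echo "rings OK".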

2.10. View information about the cluster members

[root@centos-5 ~]# corosync-cmapctl | grep members
runtime.totem.pg.mrp.srp.members.1.config_version (u64) = 0
runtime.totem.pg.mrp.srp.members.1.ip (str) = r(0) ip(10.0.0.15)
runtime.totem.pg.mrp.srp.members.1.join_count (u32) = 1
runtime.totem.pg.mrp.srp.members.1.status (str) = joined
runtime.totem.pg.mrp.srp.members.2.config_version (u64) = 0
runtime.totem.pg.mrp.srp.members.2.ip (str) = r(0) ip(10.0.0.16)
runtime.totem.pg.mrp.srp.members.2.join_count (u32) = 1
runtime.totem.pg.mrp.srp.members.2.status (str) = joined
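Likewise, the member list can be reduced to a count of joined nodes, which should be 2 in this two-node cluster. A sketch assuming the runtime.totem.pg.mrp.srp.members.ID.status key format shown above; joined_members is a hypothetical name:

```bash
#!/usr/bin/env bash
# Hypothetical helper: count the members whose status key reads "joined"
# in corosync-cmapctl output. grep -c prints 0 when nothing matches.
joined_members() {
    printf '%s\n' "$1" | grep -c 'members\.[0-9]*\.status (str) = joined'
}
```

For example: [ "$(joined_members "$(corosync-cmapctl)")" -eq 2 ] && echo "both nodes joined".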

2.11. View the current cluster status

[root@centos-5 ~]# pcs status
Cluster name: mycluster
WARNING: no stonith devices and stonith-enabled is not false ## a fencing (STONITH) device is expected here
Stack: corosync ## the messaging layer underneath
Current DC: centos-6 (version 1.1.16-12.el7_4.4-94ff4df) - partition with quorum ## DC: the Designated Coordinator, elected by all nodes
Last updated: Fri Oct 27 16:33:29 2017
Last change: Fri Oct 27 16:29:48 2017 by hacluster via crmd on centos-6
2 nodes configured
0 resources configured
Online: [ centos-5 centos-6 ]
No resources ## no resources yet
Daemon Status: ## all daemons are running normally
corosync: active/disabled
pacemaker: active/disabled
pcsd: active/enabled

2.12. Configure global options

[root@centos-5 ~]# pcs property -h
Usage: pcs property [commands]...
Configure pacemaker properties

## List the options that can be configured

[root@centos-5 ~]# pcs property list --all

2.13. Check the cluster for errors

[root@centos-5 ~]# crm_verify -L -V
error: unpack_resources: Resource start-up disabled since no STONITH resources have been defined
error: unpack_resources: Either configure some or disable STONITH with the stonith-enabled option
error: unpack_resources: NOTE: Clusters with shared data need STONITH to ensure data integrity
Errors found during check: config not valid
## These errors appear because no STONITH device has been configured, so we disable STONITH
[root@centos-5 ~]# pcs property set stonith-enabled=false
[root@centos-5 ~]# crm_verify -L -V
[root@centos-5 ~]# pcs property list
Cluster Properties:
cluster-infrastructure: corosync
cluster-name: mycluster
dc-version: 1.1.16-12.el7_4.4-94ff4df
have-watchdog: false
stonith-enabled: false
## Now the check passes

2.14. We can also manage the cluster with crmsh. Download it from GitHub, unpack it and install it directly (I had already downloaded it to /usr/local/src). It only needs to be installed on one node; here I install crm on centos-5:

[root@centos-5 src]# ls
crmsh-2.3.2.tar
[root@centos-5 src]# tar -xf crmsh-2.3.2.tar
[root@centos-5 src]# cd crmsh-2.3.2
[root@centos-5 crmsh-2.3.2]# ls
AUTHORS crm.conf.in NEWS TODO autogen.sh crmsh README.md tox.ini ChangeLog crmsh.spec requirements.txt update-data-manifest.sh configure.ac data-manifest scripts utils contrib doc setup.py version.in COPYING hb_report templates crm Makefile.am test
[root@centos-5 crmsh-2.3.2]# python setup.py install

2.15. After installation, check the cluster status

[root@centos-5 ~]# crm status
Stack: corosync
Current DC: centos-6 (version 1.1.16-12.el7_4.4-94ff4df) - partition with quorum
Last updated: Fri Oct 27 16:47:30 2017
Last change: Fri Oct 27 16:40:34 2017 by root via cibadmin on centos-5
2 nodes configured
0 resources configured
Online: [ centos-5 centos-6 ]
No resources

The pcs cluster is now configured; next we configure DRBD and MFS.

3. Configure DRBD and MFS (Master and Backup):

3.1. Install DRBD

[root@centos-5 ~]# cd /etc/yum.repos.d/
[root@centos-5 yum.repos.d]# rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org
[root@centos-5 yum.repos.d]# rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-2.el7.elrepo.noarch.rpm
[root@centos-5 yum.repos.d]# yum install -y kmod-drbd84 drbd84-utils
[root@centos-6 ~]# cd /etc/yum.repos.d/
[root@centos-6 yum.repos.d]# rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org
[root@centos-6 yum.repos.d]# rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-2.el7.elrepo.noarch.rpm
[root@centos-6 yum.repos.d]# yum install -y kmod-drbd84 drbd84-utils

3.2. Review the configuration files

## The main configuration file

[root@centos-5 ~]# cat /etc/drbd.conf
# You can find an example in /usr/share/doc/drbd.../drbd.conf.example
include "drbd.d/global_common.conf";
include "drbd.d/*.res";

## Configure the global file

[root@centos-5 ~]# cd /etc/drbd.d/

[root@centos-5 drbd.d]# ls

global_common.conf

[root@centos-5 drbd.d]# cp global_common.conf global_common.conf.bak

[root@centos-5 drbd.d]# vim global_common.conf

global {

usage-count no; # whether to take part in DRBD's usage statistics (default yes); LINBIT uses them to count installations

# minor-count dialog-refresh disable-ip-verification

}

common {

protocol C; # use DRBD's synchronous replication protocol (C)

handlers {

pri-on-incon-degr "/usr/lib/drbd/notify-pri-on-incon-degr.sh; /usr/lib/drbd/notify-emergency-reboot.sh; echo b > /proc/sysrq-trigger ; reboot -f";

pri-lost-after-sb "/usr/lib/drbd/notify-pri-lost-after-sb.sh; /usr/lib/drbd/notify-emergency-reboot.sh; echo b > /proc/sysrq-trigger ; reboot -f";

local-io-error "/usr/lib/drbd/notify-io-error.sh; /usr/lib/drbd/notify-emergency-shutdown.sh; echo o > /proc/sysrq-trigger ; halt -f";

}

startup {

# wfc-timeout degr-wfc-timeout outdated-wfc-timeout wait-after-sb

}

options {

# cpu-mask on-no-data-accessible

}

disk {

on-io-error detach; # on an I/O error, detach the backing disk

# size max-bio-bvecs on-io-error fencing disk-barrier disk-flushes

# disk-drain md-flushes resync-rate resync-after al-extents

# c-plan-ahead c-delay-target c-fill-target c-max-rate

# c-min-rate disk-timeout

}

net {

# protocol timeout max-epoch-size max-buffers unplug-watermark

# connect-int ping-int sndbuf-size rcvbuf-size ko-count

# allow-two-primaries cram-hmac-alg shared-secret after-sb-0pri

# after-sb-1pri after-sb-2pri always-asbp rr-conflict

# ping-timeout data-integrity-alg tcp-cork on-congestion

# congestion-fill congestion-extents csums-alg verify-alg

# use-rle

}

syncer {

rate 1024M; # network rate used for resynchronization between the primary and secondary nodes

}

}

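Notes: on-io-error can be set to one of the following policies:

detach: the default and recommended option. If a lower-level disk I/O error occurs on a node, DRBD detaches the backing device and the node continues in Diskless mode.

pass_on: DRBD reports the I/O error to the upper layers. On the primary node it is reported to the mounted file system; on the secondary node it is usually ignored (because there is no upper layer there to report it to).

call-local-io-error: invokes the command defined as the local I/O error handler. This requires a local-io-error handler to be defined in the resource's handlers section, which gives the administrator full freedom to handle I/O errors with a command or script of their choice.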

3.3. Create the resource file:

[root@centos-5 ~]# cat /etc/drbd.d/mfs.res

resource mfs { # resource name

protocol C; # replication protocol

meta-disk internal;

device /dev/drbd1; # DRBD device name

syncer {

verify-alg sha1; # hash algorithm used for online verification

}

net {

allow-two-primaries;

}

on centos-5 {

disk /dev/sdb1; # backing partition used by drbd1

address 10.0.0.15:7789; # DRBD listen address and port

}

on centos-6 {

disk /dev/sdb1;

address 10.0.0.16:7789;

}

}

## Note: /dev/sdb1 is a partition on a disk that I added myself.

## Copy the configuration files to the peer

[root@centos-5 ~]# scp -rp /etc/drbd.d/* centos-6:/etc/drbd.d/
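DRBD uses the on HOST section whose name matches the local node name (uname -n), so a typo there means drbdadm will refuse to use the resource on that node. Before running create-md on each node, a quick check along these lines can catch it; res_hosts is a hypothetical helper, and it assumes the "on NAME {" layout used in mfs.res:

```bash
#!/usr/bin/env bash
# Hypothetical helper: list the host names declared in "on NAME {" lines
# of a DRBD resource file (one per line, in file order).
res_hosts() {
    awk '$1 == "on" && $3 == "{" { print $2 }' "$1"
}
```

On each node: res_hosts /etc/drbd.d/mfs.res | grep -qx "$(uname -n)" && echo "this node is listed".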

3.4. Start DRBD

## On centos-5

[root@centos-5 ~]# drbdadm create-md mfs
initializing activity log
initializing bitmap (96 KB) to all zero
Writing meta data...
New drbd meta data block successfully created.

## Check whether the kernel module is loaded

[root@centos-5 ~]# modprobe drbd
[root@centos-5 ~]# lsmod | grep drbd
drbd 396875 0
libcrc32c 12644 2 xfs,drbd

## Bring the resource up and check its status

[root@centos-5 ~]# drbdadm up mfs
[root@centos-5 ~]# drbdadm -- --force primary mfs
[root@centos-5 ~]# cat /proc/drbd
version: 8.4.10-1 (api:1/proto:86-101)
GIT-hash: a4d5de01fffd7e4cde48a080e2c686f9e8cebf4c build by mockbuild@, 2017-09-15 14:23:22
1: cs:WFConnection ro:Primary/Unknown ds:UpToDate/DUnknown C r----s
ns:0 nr:0 dw:0 dr:912 al:8 bm:0 lo:0 pe:0 ua:0 ap:0 ep:1 wo:f oos:3144572

## On the peer (centos-6)

[root@centos-6 ~]# drbdadm create-md mfs
initializing activity log
initializing bitmap (96 KB) to all zero
Writing meta data...
New drbd meta data block successfully created.
[root@centos-6 ~]# modprobe drbd
[root@centos-6 ~]# drbdadm up mfs

## The data is now being synchronized

[root@centos-5 ~]# cat /proc/drbd
version: 8.4.10-1 (api:1/proto:86-101)
GIT-hash: a4d5de01fffd7e4cde48a080e2c686f9e8cebf4c build by mockbuild@, 2017-09-15 14:23:22
1: cs:SyncSource ro:Primary/Secondary ds:UpToDate/Inconsistent C r---n-
ns:138948 nr:0 dw:0 dr:140176 al:8 bm:0 lo:0 pe:2 ua:1 ap:0 ep:1 wo:f oos:3007356
[>....................] sync'ed: 4.5% (3007356/3144572)K
finish: 0:01:27 speed: 34,304 (34,304) K/sec
## Synchronization complete
[root@centos-5 ~]# cat /proc/drbd
version: 8.4.10-1 (api:1/proto:86-101)
GIT-hash: a4d5de01fffd7e4cde48a080e2c686f9e8cebf4c build by mockbuild@, 2017-09-15 14:23:22
1: cs:Connected ro:Primary/Secondary ds:UpToDate/UpToDate C r-----
ns:3144572 nr:0 dw:0 dr:3145484 al:8 bm:0 lo:0 pe:0 ua:0 ap:0 ep:1 wo:f oos:0
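Instead of re-running cat /proc/drbd by hand, the two states above (SyncSource with a progress figure, then Connected with UpToDate/UpToDate on both sides) can be told apart in a script. A minimal sketch assuming the DRBD 8.4 /proc/drbd format; drbd_sync_state is a hypothetical name:

```bash
#!/usr/bin/env bash
# Hypothetical helper: classify DRBD 8.4 /proc/drbd text for one resource.
# Prints "synced", "syncing <percent>" or "other" (e.g. still WFConnection).
drbd_sync_state() {
    local status="$1"
    case "$status" in
        *cs:Connected*ds:UpToDate/UpToDate*)
            echo synced ;;
        *cs:SyncSource*|*cs:SyncTarget*)
            # Pull the percentage out of the "sync'ed: 4.5%" progress field
            printf 'syncing %s\n' \
                "$(printf '%s\n' "$status" | sed -n 's/.*sync.ed: *\([0-9.]*%\).*/\1/p')" ;;
        *)
            echo other ;;
    esac
}
```

It could be polled, for example with drbd_sync_state "$(cat /proc/drbd)" in a loop with sleep, until it prints synced.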

3.5. Format drbd1 and mount it

## First create the mfs user and make mfs the owner and group of the mount point

[root@centos-5 ~]# mkdir /usr/local/mfs
[root@centos-5 ~]# useradd mfs
[root@centos-5 ~]# id mfs ## the UID and GID must be identical on every node
uid=1000(mfs) gid=1000(mfs) groups=1000(mfs)
[root@centos-5 ~]# chown -R mfs:mfs /usr/local/mfs
[root@centos-5 ~]# ll /usr/local
total 0
drwxr-xr-x 2 mfs mfs 6 Oct 28 23:28 mfs
[root@centos-6 ~]# mkdir /usr/local/mfs
[root@centos-6 ~]# useradd mfs
[root@centos-6 ~]# id mfs
uid=1000(mfs) gid=1000(mfs) groups=1000(mfs)
[root@centos-6 ~]# chown -R mfs:mfs /usr/local/mfs
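Because the DRBD volume moves between centos-5 and centos-6 and ownership is stored as numbers, what matters is that the numeric UID and GID of mfs are identical on every node. A minimal sketch that compares the uid/gid part of id mfs output collected from several nodes; uid_gid and same_ids are hypothetical helpers:

```bash
#!/usr/bin/env bash
# Hypothetical helper: reduce `id` output such as
# "uid=1000(mfs) gid=1000(mfs) groups=1000(mfs)" to "1000:1000".
uid_gid() {
    printf '%s\n' "$1" | sed -n 's/^uid=\([0-9]*\)([^)]*) gid=\([0-9]*\).*/\1:\2/p'
}

# Return 0 if all given `id` outputs share the same numeric uid and gid.
same_ids() {
    local ref cur out
    ref=$(uid_gid "$1")
    [ -n "$ref" ] || return 1
    shift
    for out in "$@"; do
        cur=$(uid_gid "$out")
        [ "$cur" = "$ref" ] || return 1
    done
    return 0
}
```

For example: same_ids "$(ssh centos-5 id mfs)" "$(ssh centos-6 id mfs)" || echo "UID/GID mismatch". If they differ, the group and user can be recreated with explicit IDs via groupadd -g and useradd -u.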

## Format drbd1 and mount it

[root@centos-5 ~]# mkfs.ext4 /dev/drbd1
[root@centos-5 ~]# mount /dev/drbd1 /usr/local/mfs
[root@centos-5 ~]# df -h
Filesystem Size Used Avail Use% Mounted on
/dev/mapper/cl-root 50G 3.9G 47G 8% /
devtmpfs 478M 0 478M 0% /dev
tmpfs 489M 39M 450M 8% /dev/shm
tmpfs 489M 6.7M 482M 2% /run
tmpfs 489M 0 489M 0% /sys/fs/cgroup
/dev/mapper/cl-home 47G 33M 47G 1% /home
/dev/sda1 1014M 150M 865M 15% /boot
tmpfs 98M 0 98M 0% /run/user/0
/dev/drbd1 2.9G 9.0M 2.8G 1% /usr/local/mfs

## After mounting, the owner and group of /usr/local/mfs have changed (they now belong to the root of the new file system), so change both back to mfs
[root@centos-5 ~]# ll /usr/local/
total 4
drwxr-xr-x 3 root root 4096 Oct 28 23:38 mfs
[root@centos-5 ~]# chown -R mfs:mfs /usr/local/mfs
[root@centos-5 ~]# ll /usr/local/
total 4
drwxr-xr-x 3 mfs mfs 4096 Oct 28 23:38 mfs

3.6. Install the MFS Master on the DRBD volume

## Install the build dependency

[root@centos-5 src]# yum install zlib-devel -y
[root@centos-6 src]# yum install zlib-devel -y

## Download the MFS source package

[root@centos-5 src]# wget https://github.com/moosefs/moosefs/archive/v3.0.96.tar.gz

## Build and install the MFS Master

[root@centos-5 src]# tar -xf v3.0.96.tar.gz
[root@centos-5 src]# cd moosefs-3.0.96/
[root@centos-5 moosefs-3.0.96]# ./configure --prefix=/usr/local/mfs --with-default-user=mfs --with-default-group=mfs --disable-mfschunkserver --disable-mfsmount
[root@centos-5 moosefs-3.0.96]# make && make install
## After installation, check again that the files under /usr/local/mfs are owned by mfs:mfs; if not, change them
[root@centos-5 ~]# cd /usr/local/mfs/
[root@centos-5 mfs]# ll
total 36
drwxr-xr-x 2 root root 4096 Oct 28 23:47 bin
drwxr-xr-x 3 root root 4096 Oct 28 23:47 etc
drwx------ 2 mfs mfs 16384 Oct 28 23:38 lost+found
drwxr-xr-x 2 root root 4096 Oct 28 23:47 sbin
drwxr-xr-x 4 root root 4096 Oct 28 23:47 share
drwxr-xr-x 3 root root 4096 Oct 28 23:47 var
[root@centos-5 mfs]# chown -R mfs:mfs /usr/local/mfs
[root@centos-5 mfs]# ll
total 36
drwxr-xr-x 2 mfs mfs 4096 Oct 28 23:47 bin
drwxr-xr-x 3 mfs mfs 4096 Oct 28 23:47 etc
drwx------ 2 mfs mfs 16384 Oct 28 23:38 lost+found
drwxr-xr-x 2 mfs mfs 4096 Oct 28 23:47 sbin
drwxr-xr-x 4 mfs mfs 4096 Oct 28 23:47 share
drwxr-xr-x 3 mfs mfs 4096 Oct 28 23:47 var

3.7. Set up the primary Master

[root@centos-5 moosefs-3.0.96]# cd /usr/local/mfs/etc/mfs/
[root@centos-5 mfs]# ls
mfsexports.cfg.sample mfsmaster.cfg.sample mfsmetalogger.cfg.sample mfstopology.cfg.sample
[root@centos-5 mfs]# cp mfsexports.cfg.sample mfsexports.cfg
[root@centos-5 mfs]# cp mfsmaster.cfg.sample mfsmaster.cfg ## the official defaults work as they are
[root@centos-5 mfs]# cp /usr/local/mfs/var/mfs/metadata.mfs.empty /usr/local/mfs/var/mfs/metadata.mfs ## the initial metadata file ships as metadata.mfs.empty and has to be copied to metadata.mfs by hand
## Edit the exports (access control) file:
[root@centos-5 mfs]# vim mfsexports.cfg
* / rw,alldirs,mapall=mfs:mfs,password=123456
* . rw
## Each entry in mfsexports.cfg is one rule with three parts: the MFS client IP address or address range, the directory being mounted, and the access permissions granted to those clients.
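The sample mfsexports.cfg contains many comment lines, so it can be handy to list only the active rules, split into the three parts just described. A sketch, assuming whitespace-separated fields and # comments; list_exports is a hypothetical helper:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print each active rule of an mfsexports.cfg-style
# file as "client=... path=... options=...", skipping blank and # lines.
list_exports() {
    grep -Ev '^[[:space:]]*(#|$)' "$1" |
        awk '{ printf "client=%s path=%s options=%s\n", $1, $2, $3 }'
}
```

For example: list_exports /usr/local/mfs/etc/mfs/mfsexports.cfg.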

3.8. Start MFS

## Create a systemd unit

[root@centos-5 ~]# cat /etc/systemd/system/mfsmaster.service
[Unit]
Description=mfs
After=network.target
[Service]
Type=forking
ExecStart=/usr/local/mfs/sbin/mfsmaster start
ExecStop=/usr/local/mfs/sbin/mfsmaster stop
PrivateTmp=true
[Install]
WantedBy=multi-user.target
[root@centos-5 ~]# chmod 644 /etc/systemd/system/mfsmaster.service ## unit files only need to be readable; systemd warns if they are marked executable
## Copy the unit to the peer
[root@centos-5 ~]# scp /etc/systemd/system/mfsmaster.service centos-6:/etc/systemd/system/
[root@centos-5 ~]# ssh centos-6 chmod 644 /etc/systemd/system/mfsmaster.service

## Test starting it by hand; if that works, use the unit

[root@centos-5 ~]# /usr/local/mfs/sbin/mfsmaster start

## Enable it at boot

[root@centos-5 ~]# systemctl enable mfsmaster

3.9. Test whether DRBD primary/secondary switchover works

## Stop the service and demote this node

[root@centos-5 ~]# systemctl stop mfsmaster
[root@centos-5 ~]# umount /usr/local/mfs
[root@centos-5 ~]# drbdadm secondary mfs

## Promote and mount on the other node to test

[root@centos-6 ~]# drbdadm primary mfs
[root@centos-6 ~]# mount /dev/drbd1 /usr/local/mfs
[root@centos-6 ~]# df -h
Filesystem Size Used Avail Use% Mounted on
/dev/drbd1 2.9G 17M 2.8G 1% /usr/local/mfs
[root@centos-6 ~]# ll /usr/local/ ## check that /usr/local/mfs is owned by mfs:mfs
total 4
drwxr-xr-x 8 mfs mfs 4096 Oct 28 23:47 mfs
[root@centos-6 ~]# systemctl start mfsmaster
[root@centos-6 ~]# ps -ef | grep mfs
root 6520 2 0 08:21 ? 00:00:00 [drbd_w_mfs]
root 6523 2 0 08:21 ? 00:00:14 [drbd_r_mfs]
root 7439 2 0 08:59 ? 00:00:00 [drbd_a_mfs]
root 7440 2 0 08:59 ? 00:00:00 [drbd_as_mfs]
mfs 8242 1 41 09:32 ? 00:00:06 /usr/local/mfs/sbin/mfsmaster start
root 8244 7791 0 09:32 pts/1 00:00:00 grep --color=auto mfs

## The test succeeded; stop MFS and DRBD and enable them at boot

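## Stop MFS and unmount drbd1 before stopping DRBD; DRBD cannot be taken down while the device is still mounted
[root@centos-6 ~]# systemctl stop mfsmaster
[root@centos-6 ~]# umount /usr/local/mfs
[root@centos-6 ~]# systemctl stop drbd
[root@centos-6 ~]# systemctl enable drbd
[root@centos-6 ~]# systemctl enable mfsmaster
[root@centos-5 ~]# systemctl stop drbd
[root@centos-5 ~]# systemctl enable drbd
[root@centos-5 ~]# systemctl enable mfsmaster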

DRBD and MFS are now configured on the Master and the Backup; next, we configure the cluster resources with crm on the Master.

4. Configure crm

4.1. Start the cluster services

[root@centos-5 ~]# systemctl start corosync
[root@centos-5 ~]# systemctl enable corosync
[root@centos-5 ~]# systemctl start pacemaker
[root@centos-5 ~]# systemctl enable pacemaker
[root@centos-5 ~]# ssh centos-6 systemctl restart corosync
[root@centos-5 ~]# ssh centos-6 systemctl enable corosync
[root@centos-5 ~]# ssh centos-6 systemctl restart pacemaker
[root@centos-5 ~]# ssh centos-6 systemctl enable pacemaker

4.2. Configure the resources

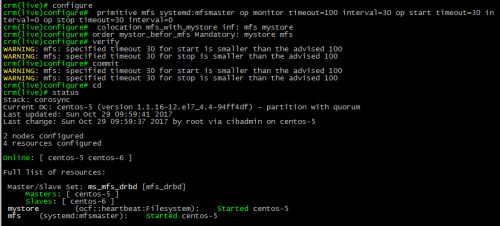

## The configuration
[root@centos-5 ~]# crm
crm(live)# configure
crm(live)configure# primitive mfs_drbd ocf:linbit:drbd params drbd_resource=mfs op monitor role=Master interval=10 timeout=20 op monitor role=Slave interval=20 timeout=20 op start timeout=240 op stop timeout=100
crm(live)configure# verify
crm(live)configure# ms ms_mfs_drbd mfs_drbd meta master-max="1" master-node-max="1" clone-max="2" clone-node-max="1" notify="true"
crm(live)configure# verify
crm(live)configure# commit
crm(live)configure# cd
crm(live)# status ## check the status after adding each resource to make sure nothing is wrong
Stack: corosync
Current DC: centos-5 (version 1.1.16-12.el7_4.4-94ff4df) - partition with quorum
Last updated: Sun Oct 29 09:54:00 2017
Last change: Sun Oct 29 09:53:53 2017 by root via cibadmin on centos-5
2 nodes configured
2 resources configured
Online: [ centos-5 centos-6 ]
Full list of resources:
Master/Slave Set: ms_mfs_drbd [mfs_drbd]
Masters: [ centos-5 ]
Slaves: [ centos-6 ]

4.3. Configure the file system (mount) resource

crm(live)# configure
crm(live)configure# primitive mystore ocf:heartbeat:Filesystem params device=/dev/drbd1 directory=/usr/local/mfs fstype=ext4 op start timeout=60 op stop timeout=60
crm(live)configure# verify
crm(live)configure# colocation ms_mfs_drbd_with_mystore inf: mystore ms_mfs_drbd:Master ## tie the mount to the DRBD Master; without :Master it may be placed with any instance, including the Slave
crm(live)configure# order ms_mfs_drbd_before_mystore Mandatory: ms_mfs_drbd:promote mystore:start
crm(live)configure# verify
crm(live)configure# commit
crm(live)configure# cd
crm(live)# status
Stack: corosync
Current DC: centos-5 (version 1.1.16-12.el7_4.4-94ff4df) - partition with quorum
Last updated: Sun Oct 29 09:57:10 2017
Last change: Sun Oct 29 09:57:06 2017 by root via cibadmin on centos-5
2 nodes configured
3 resources configured
Online: [ centos-5 centos-6 ]
Full list of resources:
Master/Slave Set: ms_mfs_drbd [mfs_drbd]
Masters: [ centos-5 ]
Slaves: [ centos-6 ]
mystore (ocf::heartbeat:Filesystem): Started centos-5

4.4. Configure the MFS resource

crm(live)# configure
crm(live)configure# primitive mfs systemd:mfsmaster op monitor timeout=100 interval=30 op start timeout=30 interval=0 op stop timeout=30 interval=0
crm(live)configure# colocation mfs_with_mystore inf: mfs mystore
crm(live)configure# order mystor_befor_mfs Mandatory: mystore mfs
crm(live)configure# verify
WARNING: mfs: specified timeout 30 for start is smaller than the advised 100 ## safe to ignore; it only means our timeouts are below the advised defaults
WARNING: mfs: specified timeout 30 for stop is smaller than the advised 100
crm(live)configure# commit
WARNING: mfs: specified timeout 30 for start is smaller than the advised 100
WARNING: mfs: specified timeout 30 for stop is smaller than the advised 100
crm(live)configure# cd
crm(live)# status
Stack: corosync
Current DC: centos-5 (version 1.1.16-12.el7_4.4-94ff4df) - partition with quorum
Last updated: Sun Oct 29 09:59:41 2017
Last change: Sun Oct 29 09:59:37 2017 by root via cibadmin on centos-5
2 nodes configured
4 resources configured
Online: [ centos-5 centos-6 ]
Full list of resources:
Master/Slave Set: ms_mfs_drbd [mfs_drbd]
Masters: [ centos-5 ]
Slaves: [ centos-6 ]
mystore (ocf::heartbeat:Filesystem): Started centos-5
mfs (systemd:mfsmaster): Started centos-5

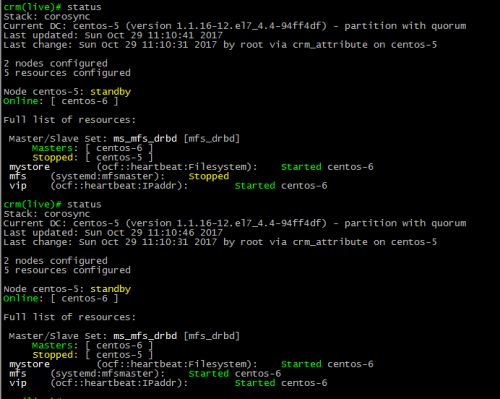

4.5. Configure the VIP

crm(live)# configure
crm(live)configure# primitive vip ocf:heartbeat:IPaddr params ip=10.0.0.150
crm(live)configure# colocation vip_with_msf inf: vip mfs
crm(live)configure# verify
WARNING: mfs: specified timeout 30 for start is smaller than the advised 100
WARNING: mfs: specified timeout 30 for stop is smaller than the advised 100
crm(live)configure# commit
crm(live)configure# cd
crm(live)# status
Stack: corosync
Current DC: centos-5 (version 1.1.16-12.el7_4.4-94ff4df) - partition with quorum
Last updated: Sun Oct 29 10:05:45 2017
Last change: Sun Oct 29 10:05:42 2017 by root via cibadmin on centos-5
2 nodes configured
5 resources configured
Online: [ centos-5 centos-6 ]
Full list of resources:
Master/Slave Set: ms_mfs_drbd [mfs_drbd]
Masters: [ centos-5 ]
Slaves: [ centos-6 ]
mystore (ocf::heartbeat:Filesystem): Started centos-5
mfs (systemd:mfsmaster): Started centos-5
vip (ocf::heartbeat:IPaddr): Stopped
## Check again: the VIP is now up
crm(live)# status
Stack: corosync
Current DC: centos-5 (version 1.1.16-12.el7_4.4-94ff4df) - partition with quorum
Last updated: Sun Oct 29 10:05:58 2017
Last change: Sun Oct 29 10:05:42 2017 by root via cibadmin on centos-5
2 nodes configured
5 resources configured
Online: [ centos-5 centos-6 ]
Full list of resources:
Master/Slave Set: ms_mfs_drbd [mfs_drbd]
Masters: [ centos-5 ]
Slaves: [ centos-6 ]
mystore (ocf::heartbeat:Filesystem): Started centos-5
mfs (systemd:mfsmaster): Started centos-5
vip (ocf::heartbeat:IPaddr): Started centos-5

4.6. View the configuration with show

The Master and Backup are now fully configured; next we set up the Metalogger, the chunk servers and the client.

5. Install the Metalogger Server

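As described earlier, the Metalogger Server is the backup server of the Master Server, so it is installed the same way as the Master Server, and it is best to use a machine with the same specification as the Master Server. That way, if the master fails, we only need to import the backed-up changelogs into the metadata file and the backup server can take over directly from the failed master. That is how a Metalogger protects against a master failure interrupting the service when there is no HA. Since we already have HA here, the Metalogger Server is optional. Planning for the worst case, however, if both Master and Backup fail, the Metalogger Server is what we fall back on; while Master and Backup are healthy, it simply acts as the MFS log server.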

5.1. Install the Metalogger Server

## Copy the source package over from the Master

[root@centos-5 ~]# scp /usr/local/src/v3.0.96.tar.gz centos-7:/usr/local/src/

## Install MFS

[root@centos-7 ~]# cd /usr/local/src/
[root@centos-7 src]# tar -xf v3.0.96.tar.gz
[root@centos-7 src]# useradd mfs
[root@centos-7 src]# id mfs ## make sure the UID and GID match the other nodes
uid=1000(mfs) gid=1000(mfs) groups=1000(mfs)
[root@centos-7 src]# cd moosefs-3.0.96/
[root@centos-7 moosefs-3.0.96]# yum install zlib-devel -y
[root@centos-7 moosefs-3.0.96]# ./configure --prefix=/usr/local/mfs --with-default-user=mfs --with-default-group=mfs --disable-mfschunkserver --disable-mfsmount
[root@centos-7 moosefs-3.0.96]# make && make install

## Configure the Metalogger Server

[root@centos-7 mfs]# chown -R mfs:mfs /usr/local/mfs ## make sure /usr/local/mfs is owned by mfs:mfs
[root@centos-7 mfs]# ll
total 0
drwxr-xr-x 2 mfs mfs 20 Oct 29 10:26 bin
drwxr-xr-x 3 mfs mfs 17 Oct 29 10:26 etc
drwxr-xr-x 2 mfs mfs 123 Oct 29 10:26 sbin
drwxr-xr-x 4 mfs mfs 31 Oct 29 10:26 share
drwxr-xr-x 3 mfs mfs 17 Oct 29 10:26 var
[root@centos-7 mfs]# cd /usr/local/mfs/etc/mfs/
[root@centos-7 mfs]# ls
mfsexports.cfg.sample mfsmaster.cfg.sample mfsmetalogger.cfg.sample mfstopology.cfg.sample
[root@centos-7 mfs]# cp mfsmetalogger.cfg.sample mfsmetalogger.cfg
[root@centos-7 mfs]# vim mfsmetalogger.cfg
MASTER_HOST = 10.0.0.150

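Note: #MASTER_PORT = 9419 is the port used to connect to the master. # META_DOWNLOAD_FREQ = 24 is how often, in hours, the metalogger requests the metadata backup file; with the default of 24 it downloads a metadata.mfs.back file from the metadata server once a day. If the metadata server shuts down or fails, its metadata.mfs.back file may be lost, and recovering the whole MFS then requires getting this file from the metalogger server. Take good care of this file: only together with the change log files can it restore a damaged distributed file system.

## Start the Metalogger Server

[root@centos-7 ~]# /usr/local/mfs/sbin/mfsmetalogger start
open files limit has been set to: 4096
working directory: /usr/local/mfs/var/mfs
lockfile created and locked
initializing mfsmetalogger modules ...
mfsmetalogger daemon initialized properly
[root@centos-7 ~]# netstat -lantp|grep metalogger
tcp 0 0 10.0.0.17:41208 10.0.0.150:9419 ESTABLISHED 6823/mfsmetalogger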
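The netstat check above can be turned into a pass/fail test; the same check applies to the chunk servers later, which connect to port 9420 on the VIP. A sketch assuming the netstat -ant column layout (foreign address in column 5, state in column 6; ss uses a different layout); has_established is hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical helper: return 0 if netstat -ant style output contains an
# ESTABLISHED TCP connection to the given remote ADDRESS:PORT.
has_established() {
    printf '%s\n' "$1" |
        awk -v r="$2" '$5 == r && $6 == "ESTABLISHED" { found = 1 } END { exit !found }'
}
```

For example, on centos-7: has_established "$(netstat -ant)" 10.0.0.150:9419 && echo "metalogger connected to the master".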

## View the Metalogger Server's log files

[root@centos-7 ~]# ls /usr/local/mfs/var/mfs/
changelog_ml_back.0.mfs changelog_ml_back.1.mfs metadata.mfs.empty metadata_ml.mfs.back

The Metalogger Server is now configured.

6. Configure the chunk servers

Both chunk servers (centos-8 and centos-9) are configured identically.

6.1. Install the chunk server

[root@centos-5 ~]# scp /usr/local/src/v3.0.96.tar.gz centos-8:/usr/local/src/
[root@centos-8 ~]# useradd mfs
[root@centos-8 ~]# id mfs
uid=1000(mfs) gid=1000(mfs) groups=1000(mfs)
[root@centos-8 ~]# yum install zlib-devel -y
[root@centos-8 ~]# cd /usr/local/src/
[root@centos-8 src]# tar -xf v3.0.96.tar.gz
[root@centos-8 src]# cd moosefs-3.0.96/
[root@centos-8 moosefs-3.0.96]# ./configure --prefix=/usr/local/mfs --with-default-user=mfs --with-default-group=mfs --disable-mfsmaster --disable-mfsmount
[root@centos-8 moosefs-3.0.96]# make && make install
[root@centos-8 ~]# chown -R mfs:mfs /usr/local/mfs

6.2. Configure the chunk server

[root@centos-8 ~]# cd /usr/local/mfs/etc/mfs/
[root@centos-8 mfs]# mv mfschunkserver.cfg.sample mfschunkserver.cfg
[root@centos-8 mfs]# vim mfschunkserver.cfg
MASTER_HOST = 10.0.0.150

6.3. Configure mfshdd.cfg (the list of storage directories)

[root@centos-8 mfs]# mkdir /mfsdata
[root@centos-8 mfs]# chown -R mfs:mfs /mfsdata
[root@centos-8 mfs]# cp /usr/local/mfs/etc/mfs/mfshdd.cfg.sample /usr/local/mfs/etc/mfs/mfshdd.cfg
[root@centos-8 mfs]# vim /usr/local/mfs/etc/mfs/mfshdd.cfg
/mfsdata
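Since the chunk server runs as mfs, each directory listed in mfshdd.cfg should exist and belong to that user. A minimal check, assuming one plain path per line (it does not understand any per-line options or prefixes the file format may support) and GNU stat; check_hdd_paths is hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical helper: verify that every path listed in an mfshdd.cfg-style
# file is an existing directory owned by the given user.
# Blank lines and lines starting with "#" are skipped.
check_hdd_paths() {
    local cfg="$1" user="$2" path rc=0
    while IFS= read -r path || [ -n "$path" ]; do
        case "$path" in
            ''|'#'*) continue ;;
        esac
        if [ ! -d "$path" ]; then
            echo "missing directory: $path" >&2
            rc=1
        elif [ "$(stat -c %U "$path")" != "$user" ]; then   # GNU stat
            echo "not owned by $user: $path" >&2
            rc=1
        fi
    done < "$cfg"
    return "$rc"
}
```

On centos-8 and centos-9: check_hdd_paths /usr/local/mfs/etc/mfs/mfshdd.cfg mfs.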

6.4. Start the chunk server

[root@centos-8 ~]# /usr/local/mfs/sbin/mfschunkserver start
open files limit has been set to: 16384
working directory: /usr/local/mfs/var/mfs
lockfile created and locked
setting glibc malloc arena max to 4
setting glibc malloc arena test to 4
initializing mfschunkserver modules ...
hdd space manager: path to scan: /mfsdata/
hdd space manager: start background hdd scanning (searching for available chunks)
main server module: listen on *:9422
no charts data file - initializing empty charts
mfschunkserver daemon initialized properly
[root@centos-8 ~]# netstat -lantp|grep 9420
tcp 0 0 10.0.0.18:58920 10.0.0.150:9420 ESTABLISHED 6713/mfschunkserver

The chunk servers are now configured; next we configure the client.

7. Configure the client

7.1. Install FUSE

[root@centos-10 ~]# yum install fuse fuse-devel
[root@centos-10 ~]# modprobe fuse
[root@centos-10 ~]# lsmod | grep fuse
fuse 87741 1

7.2. Build and install the mount client

[root@centos-5 ~]# scp /usr/local/src/v3.0.96.tar.gz centos-10:/usr/local/src/
[root@centos-10 ~]# yum install zlib-devel -y
[root@centos-10 ~]# useradd mfs
[root@centos-10 ~]# id mfs
uid=1000(mfs) gid=1000(mfs) groups=1000(mfs)
[root@centos-10 ~]# cd /usr/local/src/
[root@centos-10 src]# tar -xf v3.0.96.tar.gz
[root@centos-10 src]# cd moosefs-3.0.96/
[root@centos-10 moosefs-3.0.96]# ./configure --prefix=/usr/local/mfs --with-default-user=mfs --with-default-group=mfs --disable-mfsmaster --disable-mfschunkserver --enable-mfsmount
[root@centos-10 moosefs-3.0.96]# make && make install
[root@centos-10 ~]# chown -R mfs:mfs /usr/local/mfs

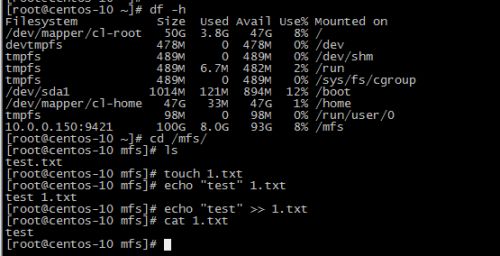

7.3. Mount the file system on the client

[root@centos-10 ~]# mkdir /mfs ## create the mount point
[root@centos-10 ~]# chown -R mfs:mfs /mfs
[root@centos-10 ~]# /usr/local/mfs/bin/mfsmount /mfs -H 10.0.0.150 -p
MFS Password:
mfsmaster accepted connection with parameters: read-write,restricted_ip,map_all ; root mapped to mfs:mfs ; users mapped to mfs:mfs
[root@centos-10 ~]# df -h
Filesystem Size Used Avail Use% Mounted on
/dev/mapper/cl-root 50G 3.8G 47G 8% /
devtmpfs 478M 0 478M 0% /dev
tmpfs 489M 0 489M 0% /dev/shm
tmpfs 489M 6.7M 482M 2% /run
tmpfs 489M 0 489M 0% /sys/fs/cgroup
/dev/sda1 1014M 121M 894M 12% /boot
/dev/mapper/cl-home 47G 33M 47G 1% /home
tmpfs 98M 0 98M 0% /run/user/0
10.0.0.150:9421 100G 8.0G 93G 8% /mfs

## The client now sees a lot of extra space: the capacity of the MFS mount

7.4. Write a local file to test

[root@centos-10 ~]# cd /mfs/
[root@centos-10 mfs]# ls
[root@centos-10 mfs]# touch test.txt
[root@centos-10 mfs]# echo "123456" > test.txt
[root@centos-10 mfs]# cat test.txt
123456

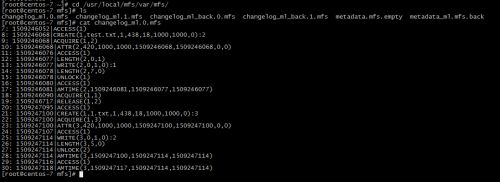

7.5. Look at the changes on the Master Server

[root@centos-5 ~]# cd /usr/local/mfs/var/mfs/
[root@centos-5 mfs]# ls
changelog.0.mfs changelog.4.mfs metadata.mfs.back.1 stats.mfs changelog.1.mfs metadata.mfs.back metadata.mfs.empty
[root@centos-5 mfs]# cat changelog.0.mfs
7: 1509246052|ACCESS(1)
8: 1509246068|CREATE(1,test.txt,1,438,18,1000,1000,0):2
9: 1509246068|ACQUIRE(1,2)
10: 1509246068|ATTR(2,420,1000,1000,1509246068,1509246068,0,0)
11: 1509246076|ACCESS(1)
12: 1509246077|LENGTH(2,0,1)
13: 1509246077|WRITE(2,0,1,0):1
14: 1509246078|LENGTH(2,7,0)
15: 1509246078|UNLOCK(1)
16: 1509246080|ACCESS(1)
17: 1509246081|AMTIME(2,1509246081,1509246077,1509246077)
18: 1509246090|ACQUIRE(1,1)

7.6. Look at the changes in the log data on the Metalogger

[root@centos-7 ~]# cd /usr/local/mfs/var/mfs/
[root@centos-7 mfs]# cat changelog_ml.0.mfs
7: 1509246052|ACCESS(1)
8: 1509246068|CREATE(1,test.txt,1,438,18,1000,1000,0):2
9: 1509246068|ACQUIRE(1,2)
10: 1509246068|ATTR(2,420,1000,1000,1509246068,1509246068,0,0)
11: 1509246076|ACCESS(1)
12: 1509246077|LENGTH(2,0,1)
13: 1509246077|WRITE(2,0,1,0):1
14: 1509246078|LENGTH(2,7,0)
15: 1509246078|UNLOCK(1)
16: 1509246080|ACCESS(1)
17: 1509246081|AMTIME(2,1509246081,1509246077,1509246077)
18: 1509246090|ACQUIRE(1,1)
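The two listings above can be compared mechanically: each changelog line starts with a change version number, so the metalogger has caught up once its highest version is at least the master's. A sketch under that assumption; last_change and metalogger_caught_up are hypothetical names:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the highest change version in MooseFS
# changelog text (lines of the form "<version>: <timestamp>|<OP>(...)").
# Prints 0 if no such line is found.
last_change() {
    printf '%s\n' "$1" |
        awk -F: '/^[0-9]+:/ { v = $1 + 0; if (v > max) max = v } END { print max + 0 }'
}

# Return 0 if the metalogger's changelog ($2) is at least as recent as
# the master's changelog ($1).
metalogger_caught_up() {
    [ "$(last_change "$2")" -ge "$(last_change "$1")" ]
}
```

For example, on centos-7: metalogger_caught_up "$(ssh centos-5 cat /usr/local/mfs/var/mfs/changelog.0.mfs)" "$(cat /usr/local/mfs/var/mfs/changelog_ml.0.mfs)" && echo "in sync".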

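At this point the drbd+corosync+pacemaker+mfs high-availability setup is complete. Next, we test whether the Backup automatically takes over the Master's services when the Master goes down. Then we test the case where both Master and Backup fail (normally very unlikely once an HA cluster is in place; here we deliberately simulate the most extreme case): on the Metalogger Server we import the backed-up changelogs into the metadata file, so that the Metalogger Server can take over directly from the failed master and backup and keep serving.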

8. Testing

8.1. Test 1

Before testing, check the cluster status.

The status is normal. Next, take the Master (centos-5) down and see whether the Backup (centos-6) automatically takes over the Master's services.

##After the Master goes down, the VIP moves to the Backup first, then the drbd1 (mystore) mount, and finally the mfs service.

Next, check whether the client can still write files to the mount point.

##Files can still be written to the mount point
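During the failover, the VIP, the drbd1 mount and mfs come up one after another, so the mount point can be unusable for a short while. When scripting this check, a generic retry loop helps. `wait_for` is a hypothetical helper, and the timeouts in the usage example are arbitrary assumptions.

```shell
#!/bin/sh
# Hypothetical helper: run a command once per second until it succeeds
# or TIMEOUT seconds have passed. Returns 0 on success, 1 on timeout.
wait_for() {
    local timeout start now
    timeout="$1"
    shift
    start=$(date +%s)
    until "$@"; do
        now=$(date +%s)
        if [ $((now - start)) -ge "$timeout" ]; then
            echo "timed out after ${timeout}s: $*" >&2
            return 1
        fi
        sleep 1
    done
    now=$(date +%s)
    echo "succeeded after $((now - start))s: $*"
}
```

On the client, `wait_for 120 timeout 5 touch /mfs/failover-check.txt` keeps retrying until the write succeeds. The coreutils `timeout` wrapper bounds each individual attempt, so a hung FUSE call cannot block the loop forever.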

Check the changes on the Backup

Check the changes in the log data

8.2. Test 2

Suspend both centos-5 and centos-6 to simulate both machines failing at once.

##Configuration on the Metalogger Server

```
[root@centos-7 ~]# cd /usr/local/mfs/var/mfs/
[root@centos-7 mfs]# ls
changelog_ml.0.mfs  changelog_ml_back.0.mfs  metadata.mfs.empty
changelog_ml.1.mfs  changelog_ml_back.1.mfs  metadata_ml.mfs.back
[root@centos-7 mfs]# mkdir /usr/local/mfs/mfs_backup        ##back up the log files
[root@centos-7 mfs]# cp * /usr/local/mfs/mfs_backup/
[root@centos-7 mfs]# cd /usr/local/mfs/etc/mfs/
[root@centos-7 mfs]# ls
mfsexports.cfg.sample  mfsmetalogger.cfg  mfstopology.cfg.sample  mfsmaster.cfg.sample  mfsmetalogger.cfg.sample
[root@centos-7 mfs]# cp mfsexports.cfg.sample mfsexports.cfg
[root@centos-7 mfs]# cp mfsmaster.cfg.sample mfsmaster.cfg
[root@centos-7 mfs]# vim mfsexports.cfg
*   /   rw,alldirs,mapall=mfs:mfs,password=123456
*   .   rw
[root@centos-7 mfs]# cp /usr/local/mfs/var/mfs/metadata.mfs.empty /usr/local/mfs/var/mfs/metadata.mfs
[root@centos-7 mfs]# /usr/local/mfs/sbin/mfsmaster -a        ##when Master and Backup have both failed, start with -a (automatically restore metadata from the changelogs)
open files limit has been set to: 16384
working directory: /usr/local/mfs/var/mfs
lockfile created and locked
initializing mfsmaster modules ...
exports file has been loaded
mfstopology configuration file (/usr/local/mfs/etc/mfstopology.cfg) not found - using defaults
loading metadata ...
loading sessions data ... ok (0.0000)
loading storage classes data ... ok (0.0000)
loading objects (files,directories,etc.) ... ok (0.6748)
loading names ... ok (0.0000)
loading deletion timestamps ... ok (0.0000)
loading quota definitions ... ok (0.0000)
loading xattr data ... ok (0.0000)
loading posix_acl data ... ok (0.0000)
loading open files data ... ok (0.0000)
loading flock_locks data ... ok (0.0000)
loading posix_locks data ... ok (0.0000)
loading chunkservers data ... ok (0.0000)
loading chunks data ... ok (0.0000)
checking filesystem consistency ... ok
connecting files and chunks ... ok
all inodes: 1
directory inodes: 1
file inodes: 0
chunks: 0
metadata file has been loaded
no charts data file - initializing empty charts
master <-> metaloggers module: listen on *:9419
master <-> chunkservers module: listen on *:9420
main master server module: listen on *:9421
mfsmaster daemon initialized properly
```
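The `mkdir` + `cp *` step above has two weaknesses. It overwrites the previous backup if the recovery is repeated. Also, `cp *` skips hidden files, and when run as root it does not keep the original file owners. The sketch below makes a timestamped variant instead. The function name is hypothetical, and it assumes a `cp` that supports `-a` (GNU coreutils does).

```shell
#!/bin/sh
# Hypothetical helper: copy the metalogger data directory SRC into a new
# timestamped folder under DEST_ROOT and print the folder path.
# cp -a keeps owners and modes, so mfs:mfs ownership survives the copy.
backup_mfs_dir() {
    local src dest_root dest
    src="$1"
    dest_root="$2"
    dest="$dest_root/mfs-$(date +%Y%m%d-%H%M%S)"
    mkdir -p "$dest" || return 1
    cp -a "$src"/. "$dest"/ || return 1   # "/." also copies dotfiles
    echo "$dest"
}
```

On centos-7 this would be `backup_mfs_dir /usr/local/mfs/var/mfs /usr/local/mfs/mfs_backup`, run before starting `mfsmaster -a`.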

##Configuration on the two chunk servers

```
[root@centos-8 ~]# vim /usr/local/mfs/etc/mfs/mfschunkserver.cfg        ##once the Metalogger Server is up, point the chunkserver at its IP
MASTER_HOST = 10.0.0.17
[root@centos-8 ~]# /usr/local/mfs/sbin/mfschunkserver restart
open files limit has been set to: 16384
working directory: /usr/local/mfs/var/mfs
sending SIGTERM to lock owner (pid:6713)
waiting for termination terminated
setting glibc malloc arena max to 4
setting glibc malloc arena test to 4
initializing mfschunkserver modules ...
hdd space manager: path to scan: /mfsdata/
hdd space manager: start background hdd scanning (searching for available chunks)
main server module: listen on *:9422
stats file has been loaded
mfschunkserver daemon initialized properly
```
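The same MASTER_HOST change has to be made on both chunk servers. Instead of editing the file in vim, you could use a sed-based sketch like the one below. It assumes GNU sed (`-i`, `-E`), and `set_master_host` is a hypothetical name. It keeps a `.bak` copy so the change is easy to revert once the original Master is back.

```shell
#!/bin/sh
# Hypothetical helper: point a chunkserver config at a new master.
# Replaces any "MASTER_HOST = ..." line, commented out or not, and
# appends one if none exists. Keeps the old file as CFG.bak.
set_master_host() {
    local cfg host pattern
    cfg="$1"
    host="$2"
    pattern='^[[:space:]]*#?[[:space:]]*MASTER_HOST[[:space:]]*='
    cp -p "$cfg" "$cfg.bak" || return 1
    if grep -Eq "$pattern" "$cfg"; then
        sed -E -i "s|$pattern.*|MASTER_HOST = $host|" "$cfg"
    else
        printf 'MASTER_HOST = %s\n' "$host" >> "$cfg"
    fi
}
```

For example: `set_master_host /usr/local/mfs/etc/mfs/mfschunkserver.cfg 10.0.0.17 && /usr/local/mfs/sbin/mfschunkserver restart`.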

##Configuration on the client

```
[root@centos-10 ~]# umount /mfs -l        ##the client was still using the mount when Master and Backup failed, so -l (lazy unmount) is required
[root@centos-10 ~]# df -h
Filesystem           Size  Used Avail Use% Mounted on
/dev/mapper/cl-root   50G  3.8G   47G   8% /
devtmpfs             478M     0  478M   0% /dev
tmpfs                489M     0  489M   0% /dev/shm
tmpfs                489M  6.7M  482M   2% /run
tmpfs                489M     0  489M   0% /sys/fs/cgroup
/dev/sda1           1014M  121M  894M  12% /boot
/dev/mapper/cl-home   47G   33M   47G   1% /home
tmpfs                 98M     0   98M   0% /run/user/0
```
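Before remounting, a script may want to know whether /mfs is still in the mount table. Commands that stat the mount point can themselves block on a dead FUSE mount. This sketch therefore only reads `/proc/mounts` and never touches the mount. The function name and the optional second argument, which exists only to make testing easy, are assumptions.

```shell
#!/bin/sh
# Hypothetical helper: succeed if MOUNTPOINT appears in the kernel mount
# table (field 2 of /proc/mounts), without accessing the mount itself.
is_mounted() {
    local mnt table
    mnt="$1"
    table="${2:-/proc/mounts}"
    awk -v m="$mnt" '$2 == m { found = 1 } END { exit !found }' "$table"
}
```

Usage on the client: `if is_mounted /mfs; then umount -l /mfs; fi`, followed by the `mfsmount` command against the new master.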

##Now verify that the client can still use the mounted directory

```
[root@centos-10 ~]# /usr/local/mfs/bin/mfsmount /mfs -H 10.0.0.17 -p
MFS Password:
mfsmaster accepted connection with parameters: read-write,restricted_ip,map_all ; root mapped to mfs:mfs ; users mapped to mfs:mfs
[root@centos-10 ~]# df -h        ##mounted successfully
Filesystem           Size  Used Avail Use% Mounted on
/dev/mapper/cl-root   50G  3.8G   47G   8% /
devtmpfs             478M     0  478M   0% /dev
tmpfs                489M     0  489M   0% /dev/shm
tmpfs                489M  6.7M  482M   2% /run
tmpfs                489M     0  489M   0% /sys/fs/cgroup
/dev/sda1           1014M  121M  894M  12% /boot
/dev/mapper/cl-home   47G   33M   47G   1% /home
tmpfs                 98M     0   98M   0% /run/user/0
10.0.0.17:9421       100G  8.0G   93G   8% /mfs
[root@centos-10 ~]# cd /mfs
[root@centos-10 mfs]# ls        ##the files written earlier are still there
1.txt  test.txt
[root@centos-10 mfs]# cat 1.txt
test
[root@centos-10 mfs]# cat test.txt
123456
[root@centos-10 mfs]# touch 2.txt        ##create a new file
[root@centos-10 mfs]# echo "12345" > 2.txt
[root@centos-10 mfs]# cat 2.txt
12345
```

##Check the changes on the Metalogger Server

```
[root@centos-7 ~]# cd /usr/local/mfs/var/mfs/
[root@centos-7 mfs]# ls
changelog.0.mfs     changelog_ml.1.mfs       changelog_ml_back.1.mfs  metadata.mfs.empty
changelog_ml.0.mfs  changelog_ml_back.0.mfs  metadata.mfs.back        metadata_ml.mfs.back
[root@centos-7 mfs]# cat changelog_ml.0.mfs        ##previous state
7: 1509246052|ACCESS(1)
8: 1509246068|CREATE(1,test.txt,1,438,18,1000,1000,0):2
9: 1509246068|ACQUIRE(1,2)
10: 1509246068|ATTR(2,420,1000,1000,1509246068,1509246068,0,0)
11: 1509246076|ACCESS(1)
12: 1509246077|LENGTH(2,0,1)
13: 1509246077|WRITE(2,0,1,0):1
14: 1509246078|LENGTH(2,7,0)
15: 1509246078|UNLOCK(1)
16: 1509246080|ACCESS(1)
17: 1509246081|AMTIME(2,1509246081,1509246077,1509246077)
18: 1509246090|ACQUIRE(1,1)
19: 1509246717|RELEASE(1,2)
20: 1509247095|ACCESS(1)
21: 1509247100|CREATE(1,1.txt,1,438,18,1000,1000,0):3
22: 1509247100|ACQUIRE(1,3)
23: 1509247100|ATTR(3,420,1000,1000,1509247100,1509247100,0,0)
24: 1509247107|ACCESS(1)
25: 1509247114|WRITE(3,0,1,0):2
26: 1509247114|LENGTH(3,5,0)
27: 1509247114|UNLOCK(2)
28: 1509247114|AMTIME(3,1509247100,1509247114,1509247114)
29: 1509247116|ACCESS(1)
30: 1509247118|AMTIME(3,1509247117,1509247114,1509247114)
31: 1509247755|RELEASE(1,3)
[root@centos-7 mfs]# cat changelog.0.mfs        ##current state
32: 1509248470|SESDEL(1)
33: 1509248536|SESADD(#17965257504845738375,1,16,0000,1000,1000,1000,1000,1,9,0,4294967295,167772180,/mfs):2
34: 1509248548|ACCESS(1)
35: 1509248588|ACCESS(1)
36: 1509248604|ACCESS(1)
37: 1509248611|CREATE(1,2.txt,1,438,18,1000,1000,0):4
38: 1509248611|ACQUIRE(2,4)
39: 1509248611|ATTR(4,420,1000,1000,1509248611,1509248611,0,0)
40: 1509248618|ACCESS(1)
41: 1509248619|LENGTH(4,0,1)
42: 1509248619|WRITE(4,0,1,0):3
43: 1509248619|LENGTH(4,6,0)
44: 1509248619|UNLOCK(3)
45: 1509248621|ACCESS(1)
46: 1509248623|AMTIME(4,1509248622,1509248619,1509248619)
47: 1509248780|ACQUIRE(2,1)
48: 1509249231|ACCESS(1)
49: 1509249232|ACQUIRE(2,3)
50: 1509249233|AMTIME(3,1509249232,1509247114,1509247114)
51: 1509249234|ACCESS(1)
52: 1509249234|ACQUIRE(2,2)
53: 1509249235|AMTIME(2,1509249234,1509246077,1509246077)
54: 1509249268|RELEASE(2,4)
```
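In the output above, changelog_ml.0.mfs ends at record 31 and the promoted master's changelog.0.mfs starts at record 32, so no metadata change was skipped during the takeover. The sketch below checks this automatically. The function name is hypothetical, and it relies on the `SEQ: TIMESTAMP|...` record layout seen in the output, which is an inference rather than a documented format.

```shell
#!/bin/sh
# Hypothetical helper: succeed if NEW_LOG ($2) starts exactly one record
# after OLD_LOG ($1) ends, i.e. the sequence numbers are continuous.
changelog_continuous() {
    local old_last new_first
    old_last=$(awk -F': ' 'NF > 1 { seq = $1 } END { print seq }' "$1")
    new_first=$(awk -F': ' 'NF > 1 { print $1; exit }' "$2")
    if [ -n "$old_last" ] && [ -n "$new_first" ] &&
       [ "$new_first" -eq $((old_last + 1)) ]; then
        echo "continuous: $old_last -> $new_first"
        return 0
    fi
    echo "gap or overlap: old ends at ${old_last:-?}, new starts at ${new_first:-?}" >&2
    return 1
}
```

Run in /usr/local/mfs/var/mfs on centos-7 as `changelog_continuous changelog_ml.0.mfs changelog.0.mfs`. With the files shown above it should print `continuous: 31 -> 32`.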

9. Notes

1. The UID and GID of the mfs user must be identical on every node;

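2. Check that every directory used by the mfs services is owned by user mfs and group mfs. If it is not, the services may fail to start or clients may be unable to mount the directory;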

3. When the master (or backup) host loses power or reboots, start mfsmaster with -a first to recover;

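4. In test 2, the client was still using the mount point when we unmounted it. A plain umount then hangs the terminal and still fails to unmount, so the -l (lazy unmount) option is needed.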
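Notes 1 and 2 can be checked with two small helpers. Both names are hypothetical. `user_ids` reads /etc/passwd directly, so if the mfs user comes from LDAP or NIS, use `getent passwd mfs` instead. `wrong_owner` relies on `find ! -user`.

```shell
#!/bin/sh
# Hypothetical helper: print "uid:gid" for USER from a passwd-format file
# (default /etc/passwd). Run it on every node and compare the results.
user_ids() {
    local user db
    user="$1"
    db="${2:-/etc/passwd}"
    awk -F: -v u="$user" '$1 == u { print $3 ":" $4; found = 1 } END { exit !found }' "$db"
}

# Hypothetical helper: list every path under the given directories that
# is NOT owned by OWNER. Empty output means ownership is consistent.
wrong_owner() {
    local owner
    owner="$1"
    shift
    find "$@" ! -user "$owner" -print
}
```

For example, run `user_ids mfs` on each node and check that every node reports the same value. Then run `wrong_owner mfs /usr/local/mfs /mfsdata` on the chunk servers, where it should print nothing.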

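That completes this drbd+corosync+pacemaker+mfs high-availability experiment. If anything here is wrong, experts are welcome to point it out and I will fix it. Please forgive any parts that are poorly written!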