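DWD layer: cleaning the ODS-layer data (removing null values, dirty data, and values outside valid ranges; switching from row-oriented to columnar storage; changing the compression format)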

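This continues the previous section. Once the base event table has been parsed, its data needs to be broken out in detail. The `en` field identifies the event name (stored as `event_name` in `dwd_base_event_log`), and each event type is then loaded into its own table.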
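The routing described above can be sketched in Python. This is a minimal, hypothetical illustration. It assumes each row of `dwd_base_event_log` already carries a parsed `event_name` and the raw `event_json`, and it only mimics what the per-table `where event_name='...'` filters do. The sample rows and the helper `route_events` are made up for the example.

```python
from collections import defaultdict

# Hypothetical rows shaped like dwd_base_event_log: event_name was parsed
# from the "en" field in the previous step; event_json is the raw event.
BASE_EVENT_ROWS = [
    {"mid_id": "m1", "event_name": "display",
     "event_json": '{"en":"display","kv":{"action":"1","goodsid":"7"}}'},
    {"mid_id": "m2", "event_name": "ad",
     "event_json": '{"en":"ad","kv":{"entry":"3","source":"2"}}'},
    {"mid_id": "m1", "event_name": "display",
     "event_json": '{"en":"display","kv":{"action":"2","goodsid":"9"}}'},
]

# Event name -> DWD table, mirroring the tables built in this section.
EVENT_TO_TABLE = {
    "display": "dwd_display_log",
    "newsdetail": "dwd_newsdetail_log",
    "loading": "dwd_loading_log",
    "ad": "dwd_ad_log",
    "notification": "dwd_notification_log",
    "active_foreground": "dwd_active_foreground_log",
    "active_background": "dwd_active_background_log",
    "comment": "dwd_comment_log",
    "favorites": "dwd_favorites_log",
    "praise": "dwd_praise_log",
    "error": "dwd_error_log",
}


def route_events(rows):
    """Group rows by target table; rows with an unknown event name are dropped,
    just as no insert statement's where-clause would select them."""
    tables = defaultdict(list)
    for row in rows:
        table = EVENT_TO_TABLE.get(row["event_name"])
        if table is not None:
            tables[table].append(row)
    return dict(tables)


routed = route_events(BASE_EVENT_ROWS)
print({t: len(r) for t, r in routed.items()})
# {'dwd_display_log': 2, 'dwd_ad_log': 1}
```

In Hive the same split is done by running one `insert overwrite ... where event_name='...'` statement per table, not by a single pass.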

Product display table

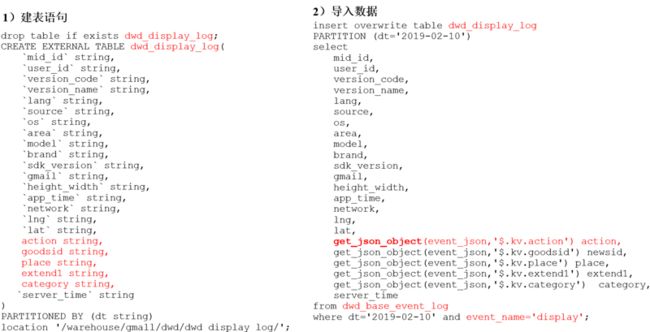

- Create the table

```sql
hive (gmall)>
drop table if exists dwd_display_log;
CREATE EXTERNAL TABLE dwd_display_log(
    `mid_id` string, `user_id` string, `version_code` string, `version_name` string,
    `lang` string, `source` string, `os` string, `area` string, `model` string,
    `brand` string, `sdk_version` string, `gmail` string, `height_width` string,
    `app_time` string, `network` string, `lng` string, `lat` string,
    `action` string,
    `goodsid` string,
    `place` string,
    `extend1` string,
    `category` string,
    `server_time` string
)
PARTITIONED BY (dt string)
location '/warehouse/gmall/dwd/dwd_display_log/';
```

- Load the data

```sql
hive (gmall)>
-- Only required for dynamic-partition inserts; this statement names a static
-- partition (dt='2020-02-03'), so the setting is harmless here.
set hive.exec.dynamic.partition.mode=nonstrict;
insert overwrite table dwd_display_log PARTITION (dt='2020-02-03')
select
    mid_id, user_id, version_code, version_name, lang, source, os, area, model,
    brand, sdk_version, gmail, height_width, app_time, network, lng, lat,
    -- Pull the event-specific fields out of the kv object of the event JSON
    get_json_object(event_json,'$.kv.action') action,
    get_json_object(event_json,'$.kv.goodsid') goodsid,
    get_json_object(event_json,'$.kv.place') place,
    get_json_object(event_json,'$.kv.extend1') extend1,
    get_json_object(event_json,'$.kv.category') category,
    server_time
from dwd_base_event_log
where dt='2020-02-03' and event_name='display';
```

- Test

```sql
hive (gmall)> select * from dwd_display_log limit 2;
```
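Each `get_json_object(event_json,'$.kv.<key>')` call in the load statement pulls one key out of the event's `kv` object and returns it as a string, or NULL when the key is missing. The Python sketch below roughly imitates that behaviour for the simple `$.kv.<key>` paths used here. It is not Hive's implementation, and Hive supports a richer path syntax. `get_kv`, `project_display_row`, and the sample row are hypothetical, and only a subset of the common columns is carried over.

```python
import json
from typing import Optional

# Common columns copied unchanged from dwd_base_event_log (subset only).
COMMON_COLUMNS = ["mid_id", "user_id", "server_time"]
# Event-specific keys that dwd_display_log reads from $.kv.
DISPLAY_KV_KEYS = ["action", "goodsid", "place", "extend1", "category"]


def get_kv(event_json: str, key: str) -> Optional[str]:
    """Rough stand-in for get_json_object(event_json, '$.kv.<key>')."""
    try:
        doc = json.loads(event_json)
    except ValueError:  # malformed JSON -> NULL
        return None
    kv = doc.get("kv") if isinstance(doc, dict) else None
    if not isinstance(kv, dict) or kv.get(key) is None:
        return None  # missing key or JSON null -> NULL
    value = kv[key]
    # The result is always a string; non-string values are serialized
    # compactly (formatting may differ from Hive in edge cases).
    return value if isinstance(value, str) else json.dumps(value, separators=(",", ":"))


def project_display_row(row: dict) -> dict:
    """Build one dwd_display_log-style row: common columns plus kv fields."""
    out = {c: row[c] for c in COMMON_COLUMNS}
    for key in DISPLAY_KV_KEYS:
        out[key] = get_kv(row["event_json"], key)
    return out


row = {"mid_id": "m1", "user_id": "u1", "server_time": "1580688000000",
       "event_json": '{"en":"display","kv":{"action":"1","goodsid":"7","place":3}}'}
print(project_display_row(row))
```

Keys that the event does not carry (`extend1`, `category` in the sample) come back as `None`, which corresponds to NULL columns in the DWD table.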

Product detail page table

- Create the table

```sql
hive (gmall)>
drop table if exists dwd_newsdetail_log;
CREATE EXTERNAL TABLE dwd_newsdetail_log(
    `mid_id` string, `user_id` string, `version_code` string, `version_name` string,
    `lang` string, `source` string, `os` string, `area` string, `model` string,
    `brand` string, `sdk_version` string, `gmail` string, `height_width` string,
    `app_time` string, `network` string, `lng` string, `lat` string,
    `entry` string,
    `action` string,
    `goodsid` string,
    `showtype` string,
    `news_staytime` string,
    `loading_time` string,
    `type1` string,
    `category` string,
    `server_time` string
)
PARTITIONED BY (dt string)
location '/warehouse/gmall/dwd/dwd_newsdetail_log/';
```

- Load the data

```sql
hive (gmall)>
set hive.exec.dynamic.partition.mode=nonstrict;
insert overwrite table dwd_newsdetail_log PARTITION (dt='2020-02-03')
select
    mid_id, user_id, version_code, version_name, lang, source, os, area, model,
    brand, sdk_version, gmail, height_width, app_time, network, lng, lat,
    get_json_object(event_json,'$.kv.entry') entry,
    get_json_object(event_json,'$.kv.action') action,
    get_json_object(event_json,'$.kv.goodsid') goodsid,
    get_json_object(event_json,'$.kv.showtype') showtype,
    get_json_object(event_json,'$.kv.news_staytime') news_staytime,
    get_json_object(event_json,'$.kv.loading_time') loading_time,
    get_json_object(event_json,'$.kv.type1') type1,
    get_json_object(event_json,'$.kv.category') category,
    server_time
from dwd_base_event_log
where dt='2020-02-03' and event_name='newsdetail';
```

- Test

```sql
hive (gmall)> select * from dwd_newsdetail_log limit 2;
```

Product list page table

- Create the table

```sql
hive (gmall)>
drop table if exists dwd_loading_log;
CREATE EXTERNAL TABLE dwd_loading_log(
    `mid_id` string, `user_id` string, `version_code` string, `version_name` string,
    `lang` string, `source` string, `os` string, `area` string, `model` string,
    `brand` string, `sdk_version` string, `gmail` string, `height_width` string,
    `app_time` string, `network` string, `lng` string, `lat` string,
    `action` string,
    `loading_time` string,
    `loading_way` string,
    `extend1` string,
    `extend2` string,
    `type` string,
    `type1` string,
    `server_time` string
)
PARTITIONED BY (dt string)
location '/warehouse/gmall/dwd/dwd_loading_log/';
```

- Load the data

```sql
hive (gmall)>
set hive.exec.dynamic.partition.mode=nonstrict;
insert overwrite table dwd_loading_log PARTITION (dt='2020-02-03')
select
    mid_id, user_id, version_code, version_name, lang, source, os, area, model,
    brand, sdk_version, gmail, height_width, app_time, network, lng, lat,
    get_json_object(event_json,'$.kv.action') action,
    get_json_object(event_json,'$.kv.loading_time') loading_time,
    get_json_object(event_json,'$.kv.loading_way') loading_way,
    get_json_object(event_json,'$.kv.extend1') extend1,
    get_json_object(event_json,'$.kv.extend2') extend2,
    get_json_object(event_json,'$.kv.type') type,
    get_json_object(event_json,'$.kv.type1') type1,
    server_time
from dwd_base_event_log
where dt='2020-02-03' and event_name='loading';
```

- Test

```sql
hive (gmall)> select * from dwd_loading_log limit 2;
```

Ad table

- Create the table

```sql
hive (gmall)>
drop table if exists dwd_ad_log;
CREATE EXTERNAL TABLE dwd_ad_log(
    `mid_id` string, `user_id` string, `version_code` string, `version_name` string,
    `lang` string, `source` string, `os` string, `area` string, `model` string,
    `brand` string, `sdk_version` string, `gmail` string, `height_width` string,
    `app_time` string, `network` string, `lng` string, `lat` string,
    `entry` string,
    `action` string,
    `content` string,
    `detail` string,
    `ad_source` string,
    `behavior` string,
    `newstype` string,
    `show_style` string,
    `server_time` string
)
PARTITIONED BY (dt string)
location '/warehouse/gmall/dwd/dwd_ad_log/';
```

- Insert the data

```sql
hive (gmall)>
set hive.exec.dynamic.partition.mode=nonstrict;
insert overwrite table dwd_ad_log PARTITION (dt='2020-02-03')
select
    mid_id, user_id, version_code, version_name, lang, source, os, area, model,
    brand, sdk_version, gmail, height_width, app_time, network, lng, lat,
    get_json_object(event_json,'$.kv.entry') entry,
    get_json_object(event_json,'$.kv.action') action,
    get_json_object(event_json,'$.kv.content') content,
    get_json_object(event_json,'$.kv.detail') detail,
    -- kv.source is renamed to avoid clashing with the common `source` column
    get_json_object(event_json,'$.kv.source') ad_source,
    get_json_object(event_json,'$.kv.behavior') behavior,
    get_json_object(event_json,'$.kv.newstype') newstype,
    get_json_object(event_json,'$.kv.show_style') show_style,
    server_time
from dwd_base_event_log
where dt='2020-02-03' and event_name='ad';
```

- Test

```sql
hive (gmall)> select * from dwd_ad_log limit 2;
```
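A Hive table cannot have two columns with the same name. For that reason the ad event's `$.kv.source` key is stored as `ad_source` next to the common `source` column. The Python sketch below uses dicts as stand-in rows to show the same collision. Flattening the `kv` keys straight into the row would silently replace the common `source` value, while renaming the colliding key keeps both. The sample values and the `RENAMES` mapping are hypothetical.

```python
# Hypothetical row: "source" is a common column, and the ad event's kv
# object also carries its own "source" key.
common = {"mid_id": "m2", "source": "app_store"}
kv = {"entry": "3", "source": "2", "behavior": "1"}

# Naive flattening: the kv "source" silently replaces the common one.
naive = {**common, **kv}

# Renaming colliding keys, the way dwd_ad_log maps kv.source to ad_source.
RENAMES = {"source": "ad_source"}
renamed = {**common, **{RENAMES.get(k, k): v for k, v in kv.items()}}

print(naive["source"])                          # 2
print(renamed["source"], renamed["ad_source"])  # app_store 2
```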

Notification table

- Create the table

```sql
hive (gmall)>
drop table if exists dwd_notification_log;
CREATE EXTERNAL TABLE dwd_notification_log(
    `mid_id` string, `user_id` string, `version_code` string, `version_name` string,
    `lang` string, `source` string, `os` string, `area` string, `model` string,
    `brand` string, `sdk_version` string, `gmail` string, `height_width` string,
    `app_time` string, `network` string, `lng` string, `lat` string,
    `action` string,
    `noti_type` string,
    `ap_time` string,
    `content` string,
    `server_time` string
)
PARTITIONED BY (dt string)
location '/warehouse/gmall/dwd/dwd_notification_log/';
```

- Insert the data

```sql
hive (gmall)>
set hive.exec.dynamic.partition.mode=nonstrict;
insert overwrite table dwd_notification_log PARTITION (dt='2020-02-03')
select
    mid_id, user_id, version_code, version_name, lang, source, os, area, model,
    brand, sdk_version, gmail, height_width, app_time, network, lng, lat,
    get_json_object(event_json,'$.kv.action') action,
    get_json_object(event_json,'$.kv.noti_type') noti_type,
    get_json_object(event_json,'$.kv.ap_time') ap_time,
    get_json_object(event_json,'$.kv.content') content,
    server_time
from dwd_base_event_log
where dt='2020-02-03' and event_name='notification';
```

- Test

```sql
hive (gmall)> select * from dwd_notification_log limit 2;
```

User foreground activity table

- Create the table

```sql
hive (gmall)>
drop table if exists dwd_active_foreground_log;
CREATE EXTERNAL TABLE dwd_active_foreground_log(
    `mid_id` string, `user_id` string, `version_code` string, `version_name` string,
    `lang` string, `source` string, `os` string, `area` string, `model` string,
    `brand` string, `sdk_version` string, `gmail` string, `height_width` string,
    `app_time` string, `network` string, `lng` string, `lat` string,
    `push_id` string,
    `access` string,
    `server_time` string
)
PARTITIONED BY (dt string)
location '/warehouse/gmall/dwd/dwd_foreground_log/';
```

- Insert the data

```sql
hive (gmall)>
set hive.exec.dynamic.partition.mode=nonstrict;
insert overwrite table dwd_active_foreground_log PARTITION (dt='2020-02-03')
select
    mid_id, user_id, version_code, version_name, lang, source, os, area, model,
    brand, sdk_version, gmail, height_width, app_time, network, lng, lat,
    get_json_object(event_json,'$.kv.push_id') push_id,
    get_json_object(event_json,'$.kv.access') access,
    server_time
from dwd_base_event_log
where dt='2020-02-03' and event_name='active_foreground';
```

- Test

```sql
hive (gmall)> select * from dwd_active_foreground_log limit 2;
```

User background activity table

- Create the table

```sql
hive (gmall)>
drop table if exists dwd_active_background_log;
CREATE EXTERNAL TABLE dwd_active_background_log(
    `mid_id` string, `user_id` string, `version_code` string, `version_name` string,
    `lang` string, `source` string, `os` string, `area` string, `model` string,
    `brand` string, `sdk_version` string, `gmail` string, `height_width` string,
    `app_time` string, `network` string, `lng` string, `lat` string,
    `active_source` string,
    `server_time` string
)
PARTITIONED BY (dt string)
location '/warehouse/gmall/dwd/dwd_background_log/';
```

- Insert the data

```sql
hive (gmall)>
set hive.exec.dynamic.partition.mode=nonstrict;
insert overwrite table dwd_active_background_log PARTITION (dt='2020-02-03')
select
    mid_id, user_id, version_code, version_name, lang, source, os, area, model,
    brand, sdk_version, gmail, height_width, app_time, network, lng, lat,
    get_json_object(event_json,'$.kv.active_source') active_source,
    server_time
from dwd_base_event_log
where dt='2020-02-03' and event_name='active_background';
```

- Test

```sql
hive (gmall)> select * from dwd_active_background_log limit 2;
```

Comment table

- Create the table

```sql
hive (gmall)>
drop table if exists dwd_comment_log;
CREATE EXTERNAL TABLE dwd_comment_log(
    `mid_id` string, `user_id` string, `version_code` string, `version_name` string,
    `lang` string, `source` string, `os` string, `area` string, `model` string,
    `brand` string, `sdk_version` string, `gmail` string, `height_width` string,
    `app_time` string, `network` string, `lng` string, `lat` string,
    `comment_id` int,
    `userid` int,
    `p_comment_id` int,
    `content` string,
    `addtime` string,
    `other_id` int,
    `praise_count` int,
    `reply_count` int,
    `server_time` string
)
PARTITIONED BY (dt string)
location '/warehouse/gmall/dwd/dwd_comment_log/';
```

- Insert the data

```sql
hive (gmall)>
set hive.exec.dynamic.partition.mode=nonstrict;
insert overwrite table dwd_comment_log PARTITION (dt='2020-02-03')
select
    mid_id, user_id, version_code, version_name, lang, source, os, area, model,
    brand, sdk_version, gmail, height_width, app_time, network, lng, lat,
    get_json_object(event_json,'$.kv.comment_id') comment_id,
    get_json_object(event_json,'$.kv.userid') userid,
    get_json_object(event_json,'$.kv.p_comment_id') p_comment_id,
    get_json_object(event_json,'$.kv.content') content,
    get_json_object(event_json,'$.kv.addtime') addtime,
    get_json_object(event_json,'$.kv.other_id') other_id,
    get_json_object(event_json,'$.kv.praise_count') praise_count,
    get_json_object(event_json,'$.kv.reply_count') reply_count,
    server_time
from dwd_base_event_log
where dt='2020-02-03' and event_name='comment';
```

- Test

```sql
hive (gmall)> select * from dwd_comment_log limit 2;
```
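Several `dwd_comment_log` columns (`comment_id`, `userid`, `other_id`, and so on) are declared `int`, but `get_json_object` always returns a string, so Hive has to convert the value on insert. This sketch assumes Hive's usual string-to-int cast semantics, where a value that does not parse as an integer ends up as NULL rather than failing the load. The hypothetical `to_hive_int` helper below approximates that behaviour to show what dirty values turn into. It treats anything other than a plain integer string as NULL, which may be stricter than Hive for inputs such as decimals.

```python
import re
from typing import Optional

# Plain integer strings only: optional leading minus, ASCII digits.
_INT_RE = re.compile(r"-?[0-9]+")
INT_MIN, INT_MAX = -2**31, 2**31 - 1


def to_hive_int(value: Optional[str]) -> Optional[int]:
    """Approximate cast(<string> as int): unparseable input becomes None (NULL)."""
    if value is None or not _INT_RE.fullmatch(value):
        return None
    n = int(value)
    # Assumption: values outside Hive's 32-bit int range also become NULL.
    return n if INT_MIN <= n <= INT_MAX else None


raw = {"comment_id": "12", "userid": "abc", "praise_count": "", "reply_count": None}
print({k: to_hive_int(v) for k, v in raw.items()})
# {'comment_id': 12, 'userid': None, 'praise_count': None, 'reply_count': None}
```

Counting NULLs in the `int` columns after each load is one way to spot such dirty records.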

Favorites table

- Create the table

```sql
hive (gmall)>
drop table if exists dwd_favorites_log;
CREATE EXTERNAL TABLE dwd_favorites_log(
    `mid_id` string, `user_id` string, `version_code` string, `version_name` string,
    `lang` string, `source` string, `os` string, `area` string, `model` string,
    `brand` string, `sdk_version` string, `gmail` string, `height_width` string,
    `app_time` string, `network` string, `lng` string, `lat` string,
    `id` int,
    `course_id` int,
    `userid` int,
    `add_time` string,
    `server_time` string
)
PARTITIONED BY (dt string)
location '/warehouse/gmall/dwd/dwd_favorites_log/';
```

- Insert the data

```sql
hive (gmall)>
set hive.exec.dynamic.partition.mode=nonstrict;
insert overwrite table dwd_favorites_log PARTITION (dt='2020-02-03')
select
    mid_id, user_id, version_code, version_name, lang, source, os, area, model,
    brand, sdk_version, gmail, height_width, app_time, network, lng, lat,
    get_json_object(event_json,'$.kv.id') id,
    get_json_object(event_json,'$.kv.course_id') course_id,
    get_json_object(event_json,'$.kv.userid') userid,
    get_json_object(event_json,'$.kv.add_time') add_time,
    server_time
from dwd_base_event_log
where dt='2020-02-03' and event_name='favorites';
```

- Test

```sql
hive (gmall)> select * from dwd_favorites_log limit 2;
```

Like (praise) table

- Create the table

```sql
hive (gmall)>
drop table if exists dwd_praise_log;
CREATE EXTERNAL TABLE dwd_praise_log(
    `mid_id` string, `user_id` string, `version_code` string, `version_name` string,
    `lang` string, `source` string, `os` string, `area` string, `model` string,
    `brand` string, `sdk_version` string, `gmail` string, `height_width` string,
    `app_time` string, `network` string, `lng` string, `lat` string,
    `id` string,
    `userid` string,
    `target_id` string,
    `type` string,
    `add_time` string,
    `server_time` string
)
PARTITIONED BY (dt string)
location '/warehouse/gmall/dwd/dwd_praise_log/';
```

- Insert the data

```sql
hive (gmall)>
set hive.exec.dynamic.partition.mode=nonstrict;
insert overwrite table dwd_praise_log PARTITION (dt='2020-02-03')
select
    mid_id, user_id, version_code, version_name, lang, source, os, area, model,
    brand, sdk_version, gmail, height_width, app_time, network, lng, lat,
    get_json_object(event_json,'$.kv.id') id,
    get_json_object(event_json,'$.kv.userid') userid,
    get_json_object(event_json,'$.kv.target_id') target_id,
    get_json_object(event_json,'$.kv.type') type,
    get_json_object(event_json,'$.kv.add_time') add_time,
    server_time
from dwd_base_event_log
where dt='2020-02-03' and event_name='praise';
```

- Test

```sql
hive (gmall)> select * from dwd_praise_log limit 2;
```

Error log table

- Create the table

```sql
hive (gmall)>
drop table if exists dwd_error_log;
CREATE EXTERNAL TABLE dwd_error_log(
    `mid_id` string, `user_id` string, `version_code` string, `version_name` string,
    `lang` string, `source` string, `os` string, `area` string, `model` string,
    `brand` string, `sdk_version` string, `gmail` string, `height_width` string,
    `app_time` string, `network` string, `lng` string, `lat` string,
    `errorBrief` string,
    `errorDetail` string,
    `server_time` string
)
PARTITIONED BY (dt string)
location '/warehouse/gmall/dwd/dwd_error_log/';
```

- Insert the data

```sql
hive (gmall)>
set hive.exec.dynamic.partition.mode=nonstrict;
insert overwrite table dwd_error_log PARTITION (dt='2020-02-03')
select
    mid_id, user_id, version_code, version_name, lang, source, os, area, model,
    brand, sdk_version, gmail, height_width, app_time, network, lng, lat,
    get_json_object(event_json,'$.kv.errorBrief') errorBrief,
    get_json_object(event_json,'$.kv.errorDetail') errorDetail,
    server_time
from dwd_base_event_log
where dt='2020-02-03' and event_name='error';
```

- Test

```sql
hive (gmall)> select * from dwd_error_log limit 2;
```
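The error table is the only one whose JSON keys are camelCase (`errorBrief`, `errorDetail`). Hive stores column names in lower case, but the key in the `get_json_object` path is matched exactly as written. The path must therefore keep the original casing, even though the column itself will show up as `errorbrief`. The hypothetical lookup below uses a plain dict lookup as a stand-in for that exact match; the sample event is made up.

```python
import json
from typing import Optional

# Hypothetical error event as it might appear in event_json.
event_json = ('{"en":"error","kv":{"errorBrief":"NullPointerException",'
              '"errorDetail":"at Main.java:12"}}')


def kv_lookup(doc: str, key: str) -> Optional[str]:
    """Exact (case-sensitive) key lookup, like '$.kv.<key>' in get_json_object."""
    return json.loads(doc).get("kv", {}).get(key)


print(kv_lookup(event_json, "errorBrief"))  # NullPointerException
print(kv_lookup(event_json, "errorbrief"))  # None
```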

Load all of the self-generated data into the warehouse

```shell
[hadoop@hadoop151 bin]$ dwd_event_log.sh 2020-01-01 2020-01-31
```

Test

```sql
hive (gmall)> select * from dwd_comment_log where dt='2020-01-11' limit 2;
```
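The contents of `dwd_event_log.sh` are not shown here. From the call above it presumably takes a start date and an end date and loads each day in that range. The Python below is a rough, hypothetical sketch of that loop, not the actual script, which would also have to submit the statements to Hive. It enumerates the days in an inclusive range and fills in the per-day `dt` values of one insert statement, with the column list elided as `...` in the `TEMPLATE` string.

```python
from datetime import date, timedelta

# Hypothetical template: only the parts of a DWD insert that vary per day
# and per table; the column list is elided.
TEMPLATE = ("insert overwrite table {table} PARTITION (dt='{dt}') "
            "select ... from dwd_base_event_log "
            "where dt='{dt}' and event_name='{event}';")


def days_between(start: str, end: str):
    """Yield every date from start to end inclusive, as YYYY-MM-DD strings."""
    day, last = date.fromisoformat(start), date.fromisoformat(end)
    while day <= last:
        yield day.isoformat()
        day += timedelta(days=1)


def build_statements(start: str, end: str, table: str, event: str):
    """One statement per day for a single event table."""
    return [TEMPLATE.format(table=table, dt=d, event=event)
            for d in days_between(start, end)]


stmts = build_statements("2020-01-01", "2020-01-31", "dwd_comment_log", "comment")
print(len(stmts))  # 31
print(stmts[10])   # the statement for dt='2020-01-11'
```

Because every statement uses `insert overwrite` on a single `dt` partition, rerunning the loop for a day replaces that day's data instead of duplicating it.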