References:

CS 224N: Assignment #1

CS224n Notes 2: Word Vector Representations: word2vec

CS224n Miscellany (Part 1)

Hung-yi Lee (李宏毅), Machine Learning, 25.14 Unsupervised Learning - Word Embe(Av10590361,P25).Flv

CS20 lecture4

CS224n lecture2-Word Vectors

CS224n lecture3-More Word Vectors

Distributed Representations of Words and Phrases and their Compositionality

-

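Why converting text to vectors matters

Data sets the upper bound on what a model can achieve: no matter how good your model is, feeding it a pile of garbage data will not work.

A model can only be trained after the text has been vectorized. If this first step throws away important information, everything downstream suffers and it is very hard to recover.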

-

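How word2vec works

Suppose the inputs 马英九 (Ma Ying-jeou) and 蔡英文 (Tsai Ing-wen) both have to predict the next word 宣誓就职 ("sworn into office"). Then the network's internal representation (the vectors for these two words) must end up very similar after training. This is somewhat like PCA.

Why not make the network deep? A shallow model is easy to train, and the resulting vectors are meant to be used as input to other networks anyway.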

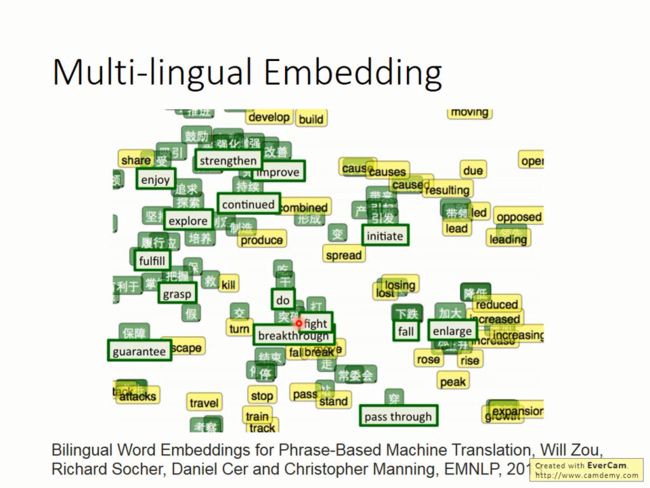

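Two separate models are trained to produce Chinese and English word vectors. A transformation matrix is then learned from a subset of word pairs whose translations are known. Transforming the remaining words with this matrix and plotting them produces the figure.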
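Below is a minimal sketch of the mapping step, assuming an ordinary least-squares fit. The lecture only says that a transformation matrix is learned. Least squares is one common choice and is not necessarily the method behind the figure. The embeddings are synthetic stand-ins: `zh_vecs` and `en_vecs` are hypothetical matrices whose rows are the vectors of known translation pairs.

```python
import numpy as np

def fit_translation_matrix(src, tgt):
    """Least-squares W minimizing ||src @ W - tgt||^2 (each row is one word pair)."""
    W, *_ = np.linalg.lstsq(src, tgt, rcond=None)
    return W

rng = np.random.default_rng(0)
d_zh, d_en, n_pairs = 4, 3, 50
true_W = rng.normal(size=(d_zh, d_en))

# Hypothetical embeddings of known Chinese-English word pairs (synthetic, noiseless).
zh_vecs = rng.normal(size=(n_pairs, d_zh))
en_vecs = zh_vecs @ true_W

W = fit_translation_matrix(zh_vecs, en_vecs)

# Map a "Chinese" vector that was not among the training pairs.
new_zh = rng.normal(size=(1, d_zh))
mapped = new_zh @ W
print(np.allclose(mapped, new_zh @ true_W))  # True
```

With real, noisy embeddings the fit is only approximate. A mapped vector would then typically be matched to its nearest English word vector.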

-

Implementing softmax

Some implementation details to watch out for:

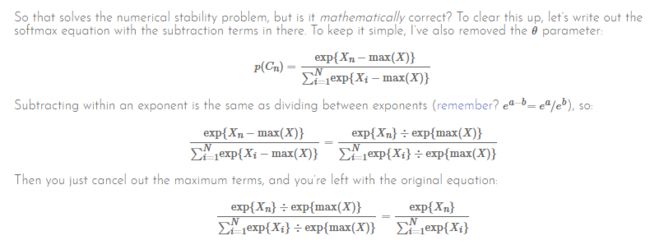

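1. `exp` overflows easily, so subtract each row's maximum before exponentiating.

This article gives the formula.

2. Subtracting a row vector or column vector from a matrix is broadcast automatically. The first item of my own article, *Common matrix operations with numpy*, explains this.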
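Here is a small sketch that makes both points concrete. The input values are borrowed from the test case below; `naive`, `stable` and `wrong` are illustrative names. Shifting is safe because softmax is unchanged when the same constant is subtracted from every entry of a row: $\frac{e^{x_i - c}}{\sum_j e^{x_j - c}} = \frac{e^{x_i}}{\sum_j e^{x_j}}$.

```python
import numpy as np

x = np.array([[1001.0, 1002.0], [3.0, 4.0]])

# Naive version: exp(1001) overflows to inf, and inf / inf gives nan.
with np.errstate(over="ignore", invalid="ignore"):
    naive = np.exp(x) / np.sum(np.exp(x), axis=1, keepdims=True)

# Stable version: subtract each row's max first.
# keepdims=True keeps the max as an (N, 1) column, so it broadcasts along each row.
shifted = x - np.max(x, axis=1, keepdims=True)
stable = np.exp(shifted) / np.sum(np.exp(shifted), axis=1, keepdims=True)

# Pitfall: without keepdims the max has shape (N,) and broadcasts as a row,
# so column j is shifted by the max of row j instead of each row by its own max.
wrong = x - np.max(x, axis=1)

print(np.isnan(naive[0]).all())  # True
print(stable)  # both rows are about [0.26894142, 0.73105858]
print(wrong)   # entries -1, 998, -999, 0: not a per-row shift
```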

```python
import numpy as np


def softmax(x):
    """Compute the softmax function for each row of the input x.

    It is crucial that this function is optimized for speed because
    it will be used frequently in later code. You might find numpy
    functions np.exp, np.sum, np.reshape, np.max, and numpy
    broadcasting useful for this task.

    Numpy broadcasting documentation:
    http://docs.scipy.org/doc/numpy/user/basics.broadcasting.html

    You should also make sure that your code works for a single
    D-dimensional vector (treat the vector as a single row) and
    for N x D matrices. This may be useful for testing later. Also,
    make sure that the dimensions of the output match the input.

    You must implement the optimization in problem 1(a) of the
    written assignment!

    Arguments:
    x -- A D dimensional vector or N x D dimensional numpy matrix.

    Return:
    x -- You are allowed to modify x in-place
    """
    orig_shape = x.shape

    if len(x.shape) > 1:
        # Matrix
        ### YOUR CODE HERE
        # Subtract each row's max (as an N x 1 column, so it broadcasts per row)
        # to keep exp from overflowing. Avoid the names max/sum: they shadow built-ins.
        row_max = np.max(x, axis=1).reshape(-1, 1)
        x = np.exp(x - row_max)
        row_sum = np.sum(x, axis=1).reshape(-1, 1)
        x = x / row_sum
        ### END YOUR CODE
    else:
        # Vector
        ### YOUR CODE HERE
        x = np.exp(x - np.max(x))
        x = x / np.sum(x)
        ### END YOUR CODE

    assert x.shape == orig_shape
    return x


def test_softmax_basic():
    """
    Some simple tests to get you started.
    Warning: these are not exhaustive.
    """
    print("Running basic tests...")
    test1 = softmax(np.array([1, 2]))
    print(test1)
    ans1 = np.array([0.26894142, 0.73105858])
    assert np.allclose(test1, ans1, rtol=1e-05, atol=1e-06)

    test2 = softmax(np.array([[1001, 1002], [3, 4]]))
    print(test2)
    ans2 = np.array([
        [0.26894142, 0.73105858],
        [0.26894142, 0.73105858]])
    assert np.allclose(test2, ans2, rtol=1e-05, atol=1e-06)

    test3 = softmax(np.array([[-1001, -1002]]))
    print(test3)
    ans3 = np.array([0.73105858, 0.26894142])
    assert np.allclose(test3, ans3, rtol=1e-05, atol=1e-06)

    print("You should be able to verify these results by hand!\n")


def test_softmax():
    """
    Use this space to test your softmax implementation by running:
        python q1_softmax.py
    This function will not be called by the autograder, nor will
    your tests be graded.
    """
    print("Running your tests...")
    ### YOUR CODE HERE
    ### END YOUR CODE


if __name__ == "__main__":
    test_softmax_basic()
    test_softmax()
```

-

Neural network basics

Deriving the sigmoid derivative

It is simple, so I won't write it out here.
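For reference, the derivation takes two lines. The result explains why `sigmoid_grad` below is written in terms of σ(x) rather than x:

$$
\sigma(x) = \frac{1}{1 + e^{-x}}, \qquad
\sigma'(x) = \frac{e^{-x}}{(1 + e^{-x})^2}
= \frac{1}{1 + e^{-x}} \cdot \frac{e^{-x}}{1 + e^{-x}}
= \sigma(x)\,\bigl(1 - \sigma(x)\bigr)
$$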

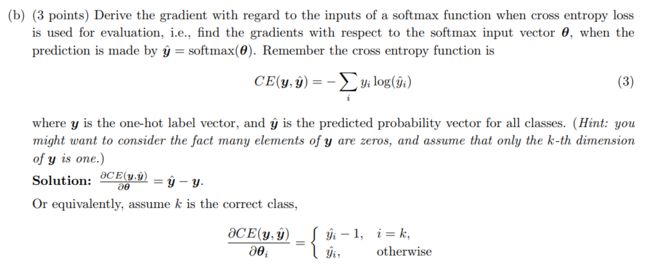

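θ is the output of the fully connected layer. It is passed through softmax, the loss is computed with cross-entropy, and we want the derivative of that loss with respect to θ.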
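The result is ∂CE/∂θ = ŷ − y, where ŷ = softmax(θ) and y is the one-hot label. Below is a quick numerical check of that formula using centered finite differences. `theta` and `y` are made-up illustrative values, not data from the assignment.

```python
import numpy as np

def softmax(v):
    e = np.exp(v - np.max(v))  # shift by the max for numerical stability
    return e / np.sum(e)

def cross_entropy(theta, y):
    # CE(y, y_hat) = -sum_i y_i * log(softmax(theta)_i)
    return -np.sum(y * np.log(softmax(theta)))

rng = np.random.default_rng(1)
theta = rng.normal(size=5)
y = np.zeros(5)
y[2] = 1.0  # one-hot label

analytic = softmax(theta) - y

# Centered finite differences, one coordinate at a time.
h = 1e-5
numeric = np.zeros_like(theta)
for i in range(theta.size):
    e_i = np.zeros_like(theta)
    e_i[i] = h
    numeric[i] = (cross_entropy(theta + e_i, y) - cross_entropy(theta - e_i, y)) / (2 * h)

print(np.max(np.abs(analytic - numeric)))  # a very small number
```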

Gradient of the three-layer network with respect to its input

This follows from the chain rule together with vectorization.
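Written out with the same row-vector convention as the `forward_backward_prop` code below (∘ is the elementwise product), the chain rule gives:

$$
z_1 = xW_1 + b_1,\quad h = \sigma(z_1),\quad z_2 = hW_2 + b_2,\quad \hat{y} = \mathrm{softmax}(z_2),\quad J = \mathrm{CE}(y, \hat{y})
$$

$$
\delta_2 = \frac{\partial J}{\partial z_2} = \hat{y} - y,\qquad
\delta_1 = \frac{\partial J}{\partial z_1} = \left(\delta_2 W_2^{\top}\right) \circ h \circ (1 - h),\qquad
\frac{\partial J}{\partial x} = \delta_1 W_1^{\top}
$$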

Number of parameters

Don't forget the biases.
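For an input size D_x, H hidden units and output size D_y, the count is (D_x + 1)·H + (H + 1)·D_y. This is the same length that `sanity_check` in q2_neural.py uses for `params`. Here is a tiny helper; `num_params` is a hypothetical name.

```python
def num_params(Dx, H, Dy):
    """Parameter count of a Dx -> H -> Dy network: W1, b1, W2, b2."""
    return Dx * H + H + H * Dy + Dy  # = (Dx + 1) * H + (H + 1) * Dy

print(num_params(10, 5, 10))  # 115
```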

Implementing sigmoid and its derivative

```python
#!/usr/bin/env python

import numpy as np


def sigmoid(x):
    """
    Compute the sigmoid function for the input here.

    Arguments:
    x -- A scalar or numpy array.

    Return:
    s -- sigmoid(x)
    """
    ### YOUR CODE HERE
    # exp(x) / (exp(x) + 1) becomes inf / inf = nan for large positive x.
    # 1 / (1 + exp(-x)) is equivalent and only saturates to 0 for very negative x
    # (numpy may warn about overflow there, but the result is still correct).
    s = 1.0 / (1.0 + np.exp(-x))
    ### END YOUR CODE

    return s


def sigmoid_grad(s):
    """
    Compute the gradient for the sigmoid function here. Note that
    for this implementation, the input s should be the sigmoid
    function value of your original input x.

    Arguments:
    s -- A scalar or numpy array.

    Return:
    ds -- Your computed gradient.
    """
    ### YOUR CODE HERE
    # sigma'(x) = sigma(x) * (1 - sigma(x)), written in terms of s = sigma(x)
    ds = s * (1 - s)
    ### END YOUR CODE

    return ds


def test_sigmoid_basic():
    """
    Some simple tests to get you started.
    Warning: these are not exhaustive.
    """
    print("Running basic tests...")
    x = np.array([[1, 2], [-1, -2]])
    f = sigmoid(x)
    g = sigmoid_grad(f)
    print(f)
    f_ans = np.array([
        [0.73105858, 0.88079708],
        [0.26894142, 0.11920292]])
    assert np.allclose(f, f_ans, rtol=1e-05, atol=1e-06)
    print(g)
    g_ans = np.array([
        [0.19661193, 0.10499359],
        [0.19661193, 0.10499359]])
    assert np.allclose(g, g_ans, rtol=1e-05, atol=1e-06)
    print("You should verify these results by hand!\n")


def test_sigmoid():
    """
    Use this space to test your sigmoid implementation by running:
        python q2_sigmoid.py
    This function will not be called by the autograder, nor will
    your tests be graded.
    """
    print("Running your tests...")
    ### YOUR CODE HERE
    ### END YOUR CODE


if __name__ == "__main__":
    test_sigmoid_basic()
    test_sigmoid()
```

Gradient checking

```python
#!/usr/bin/env python

import numpy as np
import random


# First implement a gradient checker by filling in the following functions
def gradcheck_naive(f, x):
    """ Gradient check for a function f.

    Arguments:
    f -- a function that takes a single argument and outputs the
         cost and its gradients
    x -- the point (numpy array) to check the gradient at
    """
    rndstate = random.getstate()
    random.setstate(rndstate)
    fx, grad = f(x)  # Evaluate function value at original point
    h = 1e-4         # Do not change this!

    # Iterate over all indexes ix in x to check the gradient.
    it = np.nditer(x, flags=['multi_index'], op_flags=['readwrite'])
    while not it.finished:
        ix = it.multi_index

        # Try modifying x[ix] with h defined above to compute numerical
        # gradients (numgrad).

        # Use the centered difference of the gradient.
        # It has smaller asymptotic error than forward / backward difference
        # methods. If you are curious, check out here:
        # https://math.stackexchange.com/questions/2326181/when-to-use-forward-or-central-difference-approximations

        # Make sure you call random.setstate(rndstate)
        # before calling f(x) each time. This will make it possible
        # to test cost functions with built in randomness later.

        ### YOUR CODE HERE:
        random.setstate(rndstate)
        x[ix] -= h
        a, _ = f(x)      # f(x - h)
        random.setstate(rndstate)
        x[ix] += 2 * h
        b, _ = f(x)      # f(x + h)
        numgrad = (b - a) / (2 * h)
        x[ix] -= h       # restore x[ix] to its original value
        ### END YOUR CODE

        # Compare gradients
        reldiff = abs(numgrad - grad[ix]) / max(1, abs(numgrad), abs(grad[ix]))
        if reldiff > 1e-5:
            print("Gradient check failed.")
            print("First gradient error found at index %s" % str(ix))
            print("Your gradient: %f \t Numerical gradient: %f" % (
                grad[ix], numgrad))
            return

        it.iternext()  # Step to next dimension

    print("Gradient check passed!")


def sanity_check():
    """
    Some basic sanity checks.
    """
    quad = lambda x: (np.sum(x ** 2), x * 2)

    print("Running sanity checks...")
    gradcheck_naive(quad, np.array(123.456))      # scalar test
    gradcheck_naive(quad, np.random.randn(3,))    # 1-D test
    gradcheck_naive(quad, np.random.randn(4, 5))  # 2-D test
    print("")


def your_sanity_checks():
    """
    Use this space add any additional sanity checks by running:
        python q2_gradcheck.py
    This function will not be called by the autograder, nor will
    your additional tests be graded.
    """
    print("Running your sanity checks...")
    ### YOUR CODE HERE
    ### END YOUR CODE


if __name__ == "__main__":
    sanity_check()
    your_sanity_checks()
```
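The comment in `gradcheck_naive` prefers the centered difference. The reason is that the forward difference has O(h) truncation error, while the centered difference has O(h²). Here is a quick comparison using the same h = 1e-4. The test function `np.sin` is an arbitrary choice for illustration.

```python
import numpy as np

def forward_diff(f, x, h):
    return (f(x + h) - f(x)) / h

def central_diff(f, x, h):
    # Same centered difference as in gradcheck_naive.
    return (f(x + h) - f(x - h)) / (2 * h)

x, h = 1.0, 1e-4
exact = np.cos(x)  # d/dx sin(x)
err_forward = abs(forward_diff(np.sin, x, h) - exact)
err_central = abs(central_diff(np.sin, x, h) - exact)
print(err_forward, err_central)  # the centered error is several orders of magnitude smaller
```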

-

Forward and backward propagation in the neural network

```python
#!/usr/bin/env python

import numpy as np
import random

from q1_softmax import softmax
from q2_sigmoid import sigmoid, sigmoid_grad
from q2_gradcheck import gradcheck_naive


def forward_backward_prop(X, labels, params, dimensions):
    """
    Forward and backward propagation for a two-layer sigmoidal network

    Compute the forward propagation and for the cross entropy cost,
    the backward propagation for the gradients for all parameters.

    Notice the gradients computed here are different from the gradients in
    the assignment sheet: they are w.r.t. weights, not inputs.

    Arguments:
    X -- M x Dx matrix, where each row is a training example x.
    labels -- M x Dy matrix, where each row is a one-hot vector.
    params -- Model parameters, these are unpacked for you.
    dimensions -- A tuple of input dimension, number of hidden units
                  and output dimension
    """
    ### Unpack network parameters (do not modify)
    ofs = 0
    Dx, H, Dy = (dimensions[0], dimensions[1], dimensions[2])

    W1 = np.reshape(params[ofs:ofs + Dx * H], (Dx, H))
    ofs += Dx * H
    b1 = np.reshape(params[ofs:ofs + H], (1, H))
    ofs += H
    W2 = np.reshape(params[ofs:ofs + H * Dy], (H, Dy))
    ofs += H * Dy
    b2 = np.reshape(params[ofs:ofs + Dy], (1, Dy))

    # Note: compute cost based on `sum` not `mean`.
    ### YOUR CODE HERE: forward propagation
    z1 = np.dot(X, W1) + b1
    a1 = sigmoid(z1)
    z2 = np.dot(a1, W2) + b2
    y_ = softmax(z2)
    # cross-entropy cost, summed over all examples
    cost = -np.sum(labels * np.log(y_))
    ### END YOUR CODE

    ### YOUR CODE HERE: backward propagation
    gradz2 = y_ - labels  # softmax + cross-entropy: dJ/dz2 = y_hat - y
    gradb2 = gradz2.sum(axis=0)
    gradW2 = np.dot(a1.T, gradz2)
    # The commented-out loop computes the same gradW2 as the line above
    # (shapes hard-coded for dimensions = [10, 5, 10]):
    # gradW2 = np.zeros((5, 10))
    # for i in range(gradz2.shape[0]):
    #     gradW2 += np.dot(a1[i].reshape((5, 1)), gradz2[i].reshape((1, 10)))
    grada1 = np.dot(gradz2, W2.T)
    # Pass a1, not z1: you may be tempted (as I was) to pass z1, but this
    # sigmoid_grad expects the sigmoid output, not its input.
    gradz1 = grada1 * sigmoid_grad(a1)
    gradb1 = gradz1.sum(axis=0)
    gradW1 = np.dot(X.T, gradz1)
    ### END YOUR CODE

    ### Stack gradients (do not modify)
    grad = np.concatenate((gradW1.flatten(), gradb1.flatten(),
                           gradW2.flatten(), gradb2.flatten()))

    return cost, grad


def sanity_check():
    """
    Set up fake data and parameters for the neural network, and test using
    gradcheck.
    """
    print("Running sanity check...")

    N = 20
    dimensions = [10, 5, 10]
    data = np.random.randn(N, dimensions[0])  # each row will be a datum
    labels = np.zeros((N, dimensions[2]))
    for i in range(N):
        labels[i, random.randint(0, dimensions[2] - 1)] = 1

    params = np.random.randn((dimensions[0] + 1) * dimensions[1] + (
        dimensions[1] + 1) * dimensions[2], )
    # params = np.zeros((dimensions[0] + 1) * dimensions[1] + (
    #     dimensions[1] + 1) * dimensions[2])

    gradcheck_naive(lambda params:
                    forward_backward_prop(data, labels, params, dimensions), params)


def your_sanity_checks():
    """
    Use this space add any additional sanity checks by running:
        python q2_neural.py
    This function will not be called by the autograder, nor will
    your additional tests be graded.
    """
    print("Running your sanity checks...")
    ### YOUR CODE HERE
    ### END YOUR CODE


if __name__ == "__main__":
    sanity_check()
    your_sanity_checks()
```

-

word2vec

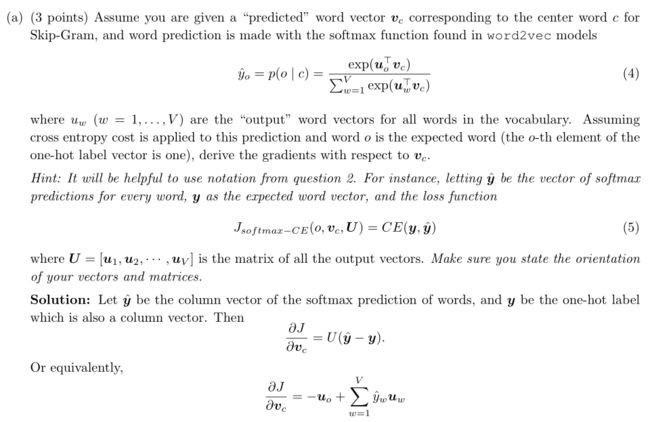

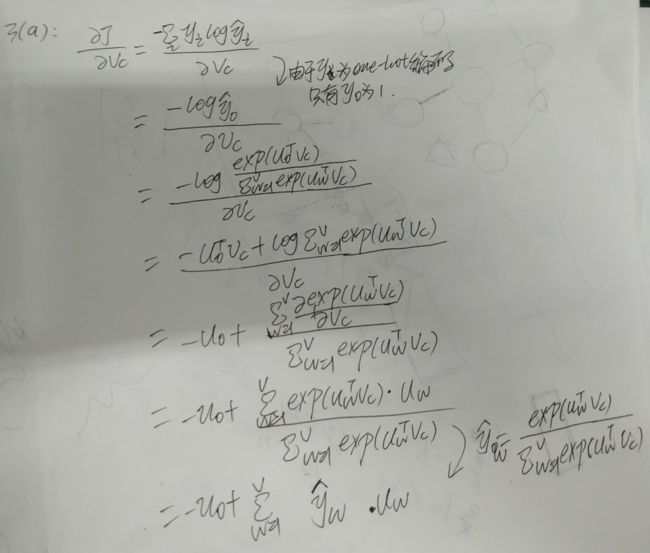

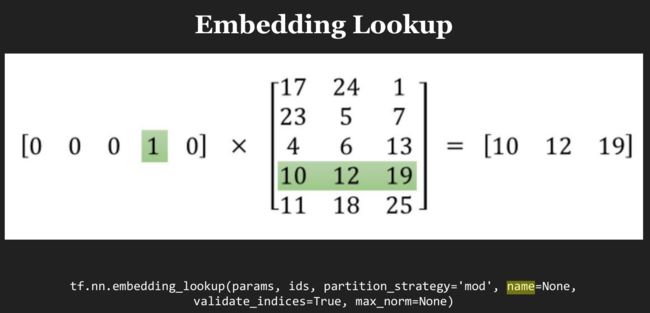

Gradient with respect to the center word vector v_c (which is extracted by multiplying the one-hot vector by W)
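In symbols, assuming the assignment's notation: U stacks the output vectors u_w as rows, like `outputVectors` in the code; ŷ = softmax(U v_c); and y is the one-hot vector of the outside word o. The resulting gradient is what `softmaxCostAndGradient` below returns as `gradPred`:

$$
J = -\log \hat{y}_o = -u_o^{\top} v_c + \log \sum_{w=1}^{V} \exp\left(u_w^{\top} v_c\right),
\qquad
\frac{\partial J}{\partial v_c} = -u_o + \sum_{w=1}^{V} \hat{y}_w\, u_w = U^{\top} (\hat{y} - y)
$$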

Gradient with respect to each vector in U

Much the same as above.
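Take the same softmax cross-entropy loss J, where U stacks the output vectors u_w as rows, ŷ = softmax(U v_c), and y is the one-hot vector of the outside word. Each row of the gradient is then a scaled copy of v_c. Together the rows form the outer product that `softmaxCostAndGradient` returns as `grad`:

$$
\frac{\partial J}{\partial u_w} = (\hat{y}_w - y_w)\, v_c
\quad\Longrightarrow\quad
\frac{\partial J}{\partial U} = (\hat{y} - y)\, v_c^{\top}
$$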

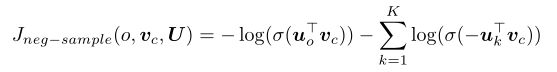

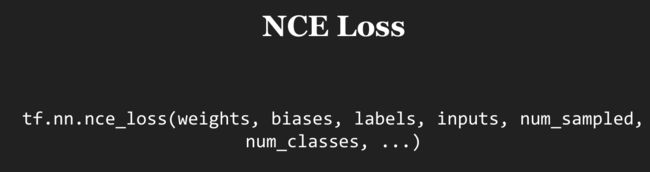

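Showing that the negative-sampling loss is cheaper to compute

Negative sampling draws K samples and computes the loss only over them. Since K is much smaller than the vocabulary size V, this is far more efficient.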
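For reference, below are the negative-sampling loss and its gradient with respect to v_c, as implemented in `negSamplingCostAndGradient` below. Here u_o is the outside word's output vector and u_1, ..., u_K are the sampled ones. Only K + 1 dot products of length d are involved, so the work is O(Kd). The full softmax needs all V scores for its normalizer, which costs O(Vd).

$$
J_{\text{neg}} = -\log \sigma\left(u_o^{\top} v_c\right) - \sum_{k=1}^{K} \log \sigma\left(-u_k^{\top} v_c\right)
$$

$$
\frac{\partial J_{\text{neg}}}{\partial v_c} = \left(\sigma(u_o^{\top} v_c) - 1\right) u_o - \sum_{k=1}^{K} \left(\sigma(-u_k^{\top} v_c) - 1\right) u_k
$$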

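q3_word2vec.py: the code and the reasoning behind it

This file mainly implements:

1. Row normalization to unit length. It is simple, so just read the code.

2. The skip-gram cost function and its gradients with respect to the input vectors and output vectors (both are model parameters), followed by a gradient check.

F denotes the model's loss for one particular input (a center word and one context word). Skip-gram simply adds F up over all neighboring words.

F itself can be computed in either of the two ways described above: full softmax or negative sampling.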
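Written as a formula, with m the context window size (`C` in the code) and F either the softmax or the negative-sampling loss:

$$
J_{\text{skip-gram}}(w_{c-m}, \dots, w_{c+m}) = \sum_{\substack{-m \le j \le m \\ j \ne 0}} F\left(w_{c+j}, v_c\right)
$$

The gradients with respect to v_c and U are summed over the context words in the same way.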

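test_word2vec first sets up the vocabulary and other scaffolding, then runs the tests.

word2vec_sgd_wrapper returns the cost cost(w) and its gradient cost'(w). It calls word2vecModel batchsize times on randomly sampled windows and averages the results. Every call works the same way, and the cost is accumulated across calls just like the gradient.

gradcheck_naive was covered earlier. It takes a function f and a point w0, where f returns cost(w) and cost'(w), and uses them to verify that the gradient is computed correctly.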

```python
#!/usr/bin/env python

import numpy as np
import random

from q1_softmax import softmax
from q2_gradcheck import gradcheck_naive
from q2_sigmoid import sigmoid, sigmoid_grad


def normalizeRows(x):
    """ Row normalization function

    Implement a function that normalizes each row of a matrix to have
    unit length.
    """
    ### YOUR CODE HERE
    # L2 norm of each row, kept as an N x 1 column so it broadcasts along the row
    row_norms = np.sqrt((x ** 2).sum(axis=1)).reshape((-1, 1))
    x = x / row_norms
    ### END YOUR CODE

    return x


def test_normalize_rows():
    print("Testing normalizeRows...")
    x = normalizeRows(np.array([[3.0, 4.0], [1, 2]]))
    print(x)
    ans = np.array([[0.6, 0.8], [0.4472136, 0.89442719]])
    assert np.allclose(x, ans, rtol=1e-05, atol=1e-06)
    print("Testing normalizeRows pass")


def softmaxCostAndGradient(predicted, target, outputVectors, dataset):
    r""" Softmax cost function for word2vec models

    Implement the cost and gradients for one predicted word vector
    and one target word vector as a building block for word2vec
    models, assuming the softmax prediction function and cross
    entropy loss.

    Arguments:
    predicted -- numpy ndarray, predicted word vector (\hat{v} in
                 the written component)
    target -- integer, the index of the target word
    outputVectors -- "output" vectors (as rows) for all tokens
    dataset -- needed for negative sampling, unused here.

    Return:
    cost -- cross entropy cost for the softmax word prediction
    gradPred -- the gradient with respect to the predicted word
                vector
    grad -- the gradient with respect to all the other word
            vectors

    We will not provide starter code for this function, but feel
    free to reference the code you previously wrote for this
    assignment!
    """
    ### YOUR CODE HERE
    # V is the vocabulary size (number of rows of outputVectors)
    V = outputVectors.shape[0]
    # predicted is v_c, the input vector of the center word
    output = softmax(np.dot(predicted, outputVectors.T))
    cost = -np.log(output[target])
    # Formula from 3(a): dJ/dv_c = U^T (y_hat - y)
    gradPred = np.sum(outputVectors * output.reshape((-1, 1)), axis=0) - outputVectors[target]
    # Formula from 3(b): dJ/dU = (y_hat - y) v_c^T
    onehot = np.zeros(V)
    onehot[target] = 1
    grad = np.dot((output - onehot).reshape(-1, 1), predicted.reshape(1, -1))
    ### END YOUR CODE

    return cost, gradPred, grad


def getNegativeSamples(target, dataset, K):
    """ Samples K indexes which are not the target """
    indices = [None] * K
    for k in range(K):
        newidx = dataset.sampleTokenIdx()
        while newidx == target:
            newidx = dataset.sampleTokenIdx()
        indices[k] = newidx
    return indices


def negSamplingCostAndGradient(predicted, target, outputVectors, dataset,
                               K=10):
    """ Negative sampling cost function for word2vec models

    Implement the cost and gradients for one predicted word vector
    and one target word vector as a building block for word2vec
    models, using the negative sampling technique. K is the sample
    size.

    Note: See test_word2vec below for dataset's initialization.

    Arguments/Return Specifications: same as softmaxCostAndGradient
    """
    # Sampling of indices is done for you. Do not modify this if you
    # wish to match the autograder and receive points!
    indices = [target]
    indices.extend(getNegativeSamples(target, dataset, K))

    ### YOUR CODE HERE
    vc = predicted
    U = outputVectors
    uo = U[target]
    # Formula (6) from 3(c): J = -log sigma(uo.vc) - sum_k log sigma(-uk.vc)
    cost = np.log(sigmoid(np.dot(uo, vc)))
    for i in range(1, len(indices)):
        uk = U[indices[i]]
        cost += np.log(sigmoid(-np.dot(uk, vc)))
    cost *= -1
    # Gradient w.r.t. the center vector v_c
    gradPred = (sigmoid(np.dot(uo, vc)) - 1) * uo
    for i in range(1, len(indices)):
        uk = U[indices[i]]
        gradPred -= (sigmoid(-np.dot(uk, vc)) - 1) * uk
    # Gradient w.r.t. the output vectors: only the target row and the sampled
    # rows are nonzero; += because a negative sample may be drawn twice
    grad = np.zeros(U.shape)
    for i in range(1, len(indices)):
        index = indices[i]
        uk = U[index]
        grad[index] += (1 - sigmoid(-np.dot(uk, vc))) * vc
    grad[target] += (sigmoid(np.dot(uo, vc)) - 1) * vc
    ### END YOUR CODE

    return cost, gradPred, grad


def skipgram(currentWord, C, contextWords, tokens, inputVectors, outputVectors,
             dataset, word2vecCostAndGradient=softmaxCostAndGradient):
    """ Skip-gram model in word2vec

    Implement the skip-gram model in this function.

    Arguments:
    currentWord -- a string of the current center word
    C -- integer, context size
    contextWords -- list of no more than 2*C strings, the context words
    tokens -- a dictionary that maps words to their indices in
              the word vector list
    inputVectors -- "input" word vectors (as rows) for all tokens
    outputVectors -- "output" word vectors (as rows) for all tokens
    word2vecCostAndGradient -- the cost and gradient function for
                               a prediction vector given the target
                               word vectors, could be one of the two
                               cost functions you implemented above.

    Return:
    cost -- the cost function value for the skip-gram model
    grad -- the gradient with respect to the word vectors
    """
    cost = 0.0
    gradIn = np.zeros(inputVectors.shape)
    gradOut = np.zeros(outputVectors.shape)

    ### YOUR CODE HERE
    # Getting predicted is easy: no need to build a one-hot vector and multiply
    # by a matrix, just look the row up in inputVectors.
    inputIndex = tokens[currentWord]
    predicted = inputVectors[inputIndex]
    # Normally len(contextWords) == 2*C; each context word is one target
    # predicted from the center word. Loop once only: a second pass would
    # double-count the cost and both gradients.
    for contextWord in contextWords:
        target = tokens[contextWord]
        costTemp, gradPred, grad = word2vecCostAndGradient(
            predicted, target, outputVectors, dataset)
        cost += costTemp
        gradOut += grad
        gradIn[inputIndex] += gradPred
    ### END YOUR CODE

    return cost, gradIn, gradOut


def cbow(currentWord, C, contextWords, tokens, inputVectors, outputVectors,
         dataset, word2vecCostAndGradient=softmaxCostAndGradient):
    """CBOW model in word2vec

    Implement the continuous bag-of-words model in this function.

    Arguments/Return specifications: same as the skip-gram model

    Extra credit: Implementing CBOW is optional, but the gradient
    derivations are not. If you decide not to implement CBOW, remove
    the NotImplementedError.
    """
    cost = 0.0
    gradIn = np.zeros(inputVectors.shape)
    gradOut = np.zeros(outputVectors.shape)

    ### YOUR CODE HERE
    target = tokens[currentWord]
    # CBOW predicts the center word from the sum of the context input vectors
    contextWordsSum = np.zeros(len(inputVectors[0]))
    for contextWord in contextWords:
        index = tokens[contextWord]
        contextWordsSum += inputVectors[index]
    cost, grad, gradOut = word2vecCostAndGradient(contextWordsSum, target, outputVectors, dataset)
    # Every context word is part of the sum, so each receives the same gradient
    # (accumulated if the word appears more than once)
    for contextWord in contextWords:
        index = tokens[contextWord]
        gradIn[index] += grad
    ### END YOUR CODE

    return cost, gradIn, gradOut


#############################################
# Testing functions below. DO NOT MODIFY!   #
#############################################

def word2vec_sgd_wrapper(word2vecModel, tokens, wordVectors, dataset, C,
                         word2vecCostAndGradient=softmaxCostAndGradient):
    batchsize = 50
    cost = 0.0
    grad = np.zeros(wordVectors.shape)
    N = wordVectors.shape[0]
    N_half = int(N / 2)
    inputVectors = wordVectors[:N_half, :]
    outputVectors = wordVectors[N_half:, :]
    for i in range(batchsize):
        # random context window size C1
        C1 = random.randint(1, C)
        # sample a random center word and a context of 2*C1 words
        centerword, context = dataset.getRandomContext(C1)

        if word2vecModel == skipgram:
            denom = 1
        else:
            denom = 1

        c, gin, gout = word2vecModel(
            centerword, C1, context, tokens, inputVectors, outputVectors,
            dataset, word2vecCostAndGradient)
        cost += c / batchsize / denom
        grad[:N_half, :] += gin / batchsize / denom
        grad[N_half:, :] += gout / batchsize / denom

    return cost, grad


def test_word2vec():
    """ Interface to the dataset for negative sampling """
    dataset = type('dummy', (), {})()

    def dummySampleTokenIdx():
        return random.randint(0, 4)

    def getRandomContext(C):
        tokens = ["a", "b", "c", "d", "e"]
        return tokens[random.randint(0, 4)], \
            [tokens[random.randint(0, 4)] for i in range(2 * C)]

    dataset.sampleTokenIdx = dummySampleTokenIdx
    dataset.getRandomContext = getRandomContext

    random.seed(31415)
    np.random.seed(9265)
    # the 10 vectors are split into 5 input vectors and 5 output vectors
    dummy_vectors = normalizeRows(np.random.randn(10, 3))
    dummy_tokens = dict([("a", 0), ("b", 1), ("c", 2), ("d", 3), ("e", 4)])

    print("==== Gradient check for skip-gram ====")
    gradcheck_naive(lambda vec: word2vec_sgd_wrapper(
        skipgram, dummy_tokens, vec, dataset, 5, softmaxCostAndGradient),
        dummy_vectors)
    gradcheck_naive(lambda vec: word2vec_sgd_wrapper(
        skipgram, dummy_tokens, vec, dataset, 5, negSamplingCostAndGradient),
        dummy_vectors)
    print("\n==== Gradient check for CBOW ====")
    gradcheck_naive(lambda vec: word2vec_sgd_wrapper(
        cbow, dummy_tokens, vec, dataset, 5, softmaxCostAndGradient),
        dummy_vectors)
    gradcheck_naive(lambda vec: word2vec_sgd_wrapper(
        cbow, dummy_tokens, vec, dataset, 5, negSamplingCostAndGradient),
        dummy_vectors)

    print("\n=== Results ===")
    print(skipgram("c", 3, ["a", "b", "e", "d", "b", "c"],
                   dummy_tokens, dummy_vectors[:5, :], dummy_vectors[5:, :], dataset))
    print(skipgram("c", 1, ["a", "b"],
                   dummy_tokens, dummy_vectors[:5, :], dummy_vectors[5:, :], dataset,
                   negSamplingCostAndGradient))
    print(cbow("a", 2, ["a", "b", "c", "a"],
               dummy_tokens, dummy_vectors[:5, :], dummy_vectors[5:, :], dataset))
    print(cbow("a", 2, ["a", "b", "a", "c"],
               dummy_tokens, dummy_vectors[:5, :], dummy_vectors[5:, :], dataset,
               negSamplingCostAndGradient))


if __name__ == "__main__":
    # test_normalize_rows()
    test_word2vec()
```

sgd

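The starter code for this assignment genuinely impressed me:

1. The test cases are very well written.

2. Saving the parameters every few thousand iterations is also a great touch: if training is interrupted, you can resume instead of starting over from scratch.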
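The checkpointing idea can be reduced to a small sketch. The function names and the single-tuple file layout are hypothetical and are not the assignment's `save_params`/`load_saved_params`. The sketch stores the iteration, the parameters and the `random` state together, then restores all three to resume.

```python
import os
import pickle
import random
import tempfile

import numpy as np


def save_checkpoint(path, iteration, params):
    # Store everything needed to resume in one pickle; "wb" because pickle
    # writes bytes in Python 3.
    with open(path, "wb") as f:
        pickle.dump((iteration, params, random.getstate()), f)


def load_checkpoint(path):
    with open(path, "rb") as f:
        iteration, params, state = pickle.load(f)
    random.setstate(state)  # continue the same stream of random samples
    return iteration, params


path = os.path.join(tempfile.mkdtemp(), "checkpoint.pkl")
params = np.arange(6.0).reshape(2, 3)
save_checkpoint(path, 5000, params)
first_draw = random.random()   # advances the global random state

iteration, restored = load_checkpoint(path)
second_draw = random.random()  # same draw, because the state was restored
print(iteration, np.array_equal(restored, params), first_draw == second_draw)
# 5000 True True
```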

```python
#!/usr/bin/env python

# Save parameters every a few SGD iterations as fail-safe
SAVE_PARAMS_EVERY = 5000

import glob
import random
import numpy as np
import os.path as op
import pickle


def load_saved_params():
    """
    A helper function that loads previously saved parameters and resets
    iteration start.
    """
    st = 0
    for f in glob.glob("saved_params_*.npy"):
        iter = int(op.splitext(op.basename(f))[0].split("_")[2])
        if (iter > st):
            st = iter

    if st > 0:
        # pickle files must be opened in binary mode in Python 3
        with open("saved_params_%d.npy" % st, "rb") as f:
            params = pickle.load(f)
            state = pickle.load(f)
        return st, params, state
    else:
        return st, None, None


def save_params(iter, params):
    # pickle needs a binary file handle in Python 3
    with open("saved_params_%d.npy" % iter, "wb") as f:
        pickle.dump(params, f)
        pickle.dump(random.getstate(), f)


def sgd(f, x0, step, iterations, postprocessing=None, useSaved=False,
        PRINT_EVERY=10):
    """ Stochastic Gradient Descent

    Implement the stochastic gradient descent method in this function.

    Arguments:
    f -- the function to optimize, it should take a single
         argument and yield two outputs, a cost and the gradient
         with respect to the arguments
    x0 -- the initial point to start SGD from
    step -- the step size for SGD
    iterations -- total iterations to run SGD for
    postprocessing -- postprocessing function for the parameters
                      if necessary. In the case of word2vec we will need to
                      normalize the word vectors to have unit length.
    PRINT_EVERY -- specifies how many iterations to output loss

    Return:
    x -- the parameter value after SGD finishes
    """
    # Anneal learning rate every several iterations
    ANNEAL_EVERY = 20000

    if useSaved:
        start_iter, oldx, state = load_saved_params()
        if start_iter > 0:
            x0 = oldx
            # Floor division: number of annealing steps already completed
            # (the starter code dates from Python 2, where / on ints floors).
            step *= 0.5 ** (start_iter // ANNEAL_EVERY)

        if state:
            random.setstate(state)
    else:
        start_iter = 0

    x = x0

    if not postprocessing:
        postprocessing = lambda x: x

    expcost = None

    for iter in range(start_iter + 1, iterations + 1):
        # Don't forget to apply the postprocessing after every iteration!
        # You might want to print the progress every few iterations.

        cost = None
        ### YOUR CODE HERE
        cost, grad = f(x)
        x -= step * grad
        x = postprocessing(x)
        ### END YOUR CODE

        if iter % PRINT_EVERY == 0:
            if not expcost:
                expcost = cost
            else:
                expcost = .95 * expcost + .05 * cost
            print("iter %d: %f" % (iter, expcost))

        if iter % SAVE_PARAMS_EVERY == 0 and useSaved:
            save_params(iter, x)

        if iter % ANNEAL_EVERY == 0:
            step *= 0.5

    return x


def sanity_check():
    quad = lambda x: (np.sum(x ** 2), x * 2)

    print("Running sanity checks...")
    t1 = sgd(quad, 0.5, 0.01, 1000, PRINT_EVERY=100)
    print("test 1 result:", t1)
    assert abs(t1) <= 1e-6

    t2 = sgd(quad, 0.0, 0.01, 1000, PRINT_EVERY=100)
    print("test 2 result:", t2)
    assert abs(t2) <= 1e-6

    t3 = sgd(quad, -1.5, 0.01, 1000, PRINT_EVERY=100)
    print("test 3 result:", t3)
    assert abs(t3) <= 1e-6

    print("check passed!")


def your_sanity_checks():
    """
    Use this space add any additional sanity checks by running:
        python q3_sgd.py
    This function will not be called by the autograder, nor will
    your additional tests be graded.
    """
    print("Running your sanity checks...")
    ### YOUR CODE HERE
    raise NotImplementedError
    ### END YOUR CODE


if __name__ == "__main__":
    sanity_check()
    # your_sanity_checks()
```

-

TensorFlow version