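Part 5: Deploying the cluster's worker nodes (high-availability setup)

---- Node side ----

Each node needs the following components: docker, calico/flannel, kubelet, and kube-proxy.

Nodes reach the masters through an Nginx load balancer in front of the API servers, which provides master HA.

# On the masters, every component except the API server handles HA through etcd-backed leader election; the API server gets no HA handling by default.

Run an nginx instance on every node, with each nginx reverse-proxying all API servers.

kubelet and kube-proxy on the node connect to the local nginx proxy port.

When nginx cannot connect to a backend, it automatically takes the failing API server out of rotation, which gives the API server HA.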
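The failover idea can be pictured with a toy model. The sketch below is not how nginx is implemented; `Backend` and `pick_backend` are hypothetical names that only illustrate "choose the healthy backend with the fewest active connections", assuming a backend is marked unhealthy after a failed connect.

```python
from dataclasses import dataclass


@dataclass
class Backend:
    address: str
    healthy: bool = True
    active: int = 0  # connections currently open to this backend


def pick_backend(backends):
    """Return the healthy backend with the fewest active connections."""
    candidates = [b for b in backends if b.healthy]
    if not candidates:
        raise RuntimeError("no healthy API server available")
    return min(candidates, key=lambda b: b.active)


if __name__ == "__main__":
    pool = [Backend("10.180.160.110:6443"), Backend("10.180.160.112:6443")]
    first = pick_backend(pool)
    first.active += 1
    print("first connection ->", first.address)
    print("second connection ->", pick_backend(pool).address)
    pool[1].healthy = False  # simulate a failed connect to the second master
    print("after failure ->", pick_backend(pool).address)
```

As long as one API server stays healthy, kubelet and kube-proxy keep a working endpoint without any change to their own configuration.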

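Diagram ------- (rather rough)

1. Distribute the certificates to all nodes

# Run on every node
mkdir -p /etc/kubernetes/ssl/

# Run from the host that holds the certificates, once per node (node-* stands for each node's hostname)
scp ca.pem kube-proxy.pem kube-proxy-key.pem node-*:/etc/kubernetes/ssl/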

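2. Create the Nginx proxy [I personally prefer the traditional nginx deployment, with a standalone service acting as the proxy; a reference config file appears in step 4. The docker-based approach comes first.]

An Nginx proxy must be created on every node (nodes only). When a master also serves as a node, it does not need nginx-proxy.

# Create the config directory

mkdir -p /etc/nginx

# Write the proxy config (> overwrites the file, so re-running the command does not duplicate the config)

cat << EOF > /etc/nginx/nginx.conf
error_log stderr notice;
worker_processes auto;
events {
  multi_accept on;
  use epoll;
  worker_connections 1024;
}
stream {
  upstream kube_apiserver {
    least_conn;
    server 10.180.160.110:6443;
    server 10.180.160.112:6443;
  }
  server {
    listen 0.0.0.0:6443;
    proxy_pass kube_apiserver;
    proxy_timeout 10m;
    proxy_connect_timeout 1s;
  }
}
EOF

# Update permissions

chmod +r /etc/nginx/nginx.conf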

# Run Nginx as a docker container and let systemd manage it (with --net=host the container shares the host network, so no -p port mapping is needed)

cat << EOF > /etc/systemd/system/nginx-proxy.service
[Unit]
Description=kubernetes apiserver docker wrapper
Wants=docker.socket
After=docker.service

[Service]
User=root
PermissionsStartOnly=true
ExecStartPre=-/usr/bin/docker rm -f nginx-proxy
ExecStart=/usr/bin/docker run \\
  -v /etc/nginx:/etc/nginx \\
  --name nginx-proxy \\
  --net=host \\
  --restart=on-failure:5 \\
  --memory=512M \\
  nginx:1.13.7-alpine
ExecStop=/usr/bin/docker stop nginx-proxy
Restart=always
RestartSec=15s
TimeoutStartSec=30s

[Install]
WantedBy=multi-user.target
EOF

3. # Start Nginx

systemctl daemon-reload

systemctl start nginx-proxy

systemctl enable nginx-proxy

systemctl status nginx-proxy
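`systemctl status` only shows that the unit is running. A quick way to confirm that the local proxy port actually accepts connections is a plain TCP connect. The helper below is a minimal sketch; `port_open` is a hypothetical name, and 127.0.0.1:6443 is assumed from the nginx `listen` directive in this guide.

```python
import socket


def port_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    # Assumed endpoint: the nginx proxy listening on the node itself.
    print("proxy reachable:", port_open("127.0.0.1", 6443))
```

A successful connect only proves that nginx is listening; it says nothing about whether a master behind it is healthy.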

4. Nginx deployed as a traditional service [I prefer this approach; it does not depend on docker]

nginx config file ---------

# vim nginx.conf

error_log stderr notice;
worker_processes auto;
events {
  multi_accept on;
  use epoll;
  worker_connections 1024;
}
stream {
  upstream kube_apiserver {
    least_conn;
    server 10.180.160.110:6443;
    server 10.180.160.112:6443;
  }
  server {
    listen 0.0.0.0:6443;
    proxy_pass kube_apiserver;
    proxy_timeout 10m;
    proxy_connect_timeout 1s;
  }
}
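The same config has to be written on every node, and the upstream list changes whenever a master is added or removed. One option is to render the file from a list of addresses. The function below is a sketch under that assumption; `render_stream_conf` is a hypothetical helper, and the directives are copied from the config in this guide.

```python
def render_stream_conf(api_servers, listen="0.0.0.0:6443"):
    """Render the stream proxy config for the given API server addresses."""
    if not api_servers:
        raise ValueError("at least one API server address is required")
    upstream = "\n".join(f"    server {addr};" for addr in api_servers)
    return (
        "error_log stderr notice;\n"
        "worker_processes auto;\n"
        "events {\n"
        "  multi_accept on;\n"
        "  use epoll;\n"
        "  worker_connections 1024;\n"
        "}\n"
        "stream {\n"
        "  upstream kube_apiserver {\n"
        "    least_conn;\n"
        f"{upstream}\n"
        "  }\n"
        "  server {\n"
        f"    listen {listen};\n"
        "    proxy_pass kube_apiserver;\n"
        "    proxy_timeout 10m;\n"
        "    proxy_connect_timeout 1s;\n"
        "  }\n"
        "}\n"
    )


if __name__ == "__main__":
    print(render_stream_conf(["10.180.160.110:6443", "10.180.160.112:6443"]))
```

Redirecting the output to /etc/nginx/nginx.conf gives the same file as the hand-written version.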

--------------------------------------------------

5. Configure the kubelet.service file

systemd kubelet configuration

Dynamic kubelet configuration

Official documentation: https://kubernetes.io/docs/tasks/administer-cluster/kubelet-config-file/ https://kubernetes.io/docs/tasks/administer-cluster/reconfigure-kubelet/

Upstream still marks this feature as beta. For the individual parameters of the JSON config, see

https://github.com/kubernetes/kubernetes/blob/release-1.10/pkg/kubelet/apis/kubeletconfig/v1beta1/types.go

# Create the kubelet directory (the WorkingDirectory used by the unit)

mkdir -p /var/lib/kubelet

vi /etc/systemd/system/kubelet.service

[Unit]
Description=Kubernetes Kubelet
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
After=docker.service
Requires=docker.service

[Service]
WorkingDirectory=/var/lib/kubelet
ExecStart=/usr/local/bin/kubelet \
  --hostname-override=kubernetes-113 \
  --pod-infra-container-image=jicki/pause-amd64:3.1 \
  --bootstrap-kubeconfig=/etc/kubernetes/bootstrap.kubeconfig \
  --kubeconfig=/etc/kubernetes/kubelet.kubeconfig \
  --config=/etc/kubernetes/kubelet.config.json \
  --cert-dir=/etc/kubernetes/ssl \
  --logtostderr=true \
  --v=2

[Install]
WantedBy=multi-user.target

# Create the kubelet config file

vi /etc/kubernetes/kubelet.config.json

{
  "kind": "KubeletConfiguration",
  "apiVersion": "kubelet.config.k8s.io/v1beta1",
  "authentication": {
    "x509": {
      "clientCAFile": "/etc/kubernetes/ssl/ca.pem"
    },
    "webhook": {
      "enabled": true,
      "cacheTTL": "2m0s"
    },
    "anonymous": {
      "enabled": false
    }
  },
  "authorization": {
    "mode": "Webhook",
    "webhook": {
      "cacheAuthorizedTTL": "5m0s",
      "cacheUnauthorizedTTL": "30s"
    }
  },
  "address": "10.180.160.113",
  "port": 10250,
  "readOnlyPort": 0,
  "cgroupDriver": "cgroupfs",
  "hairpinMode": "promiscuous-bridge",
  "serializeImagePulls": false,
  "featureGates": {
    "RotateKubeletClientCertificate": true,
    "RotateKubeletServerCertificate": true
  },
  "maxPods": 512,
  "failSwapOn": false,
  "containerLogMaxSize": "10Mi",
  "containerLogMaxFiles": 5,
  "clusterDomain": "cluster.local.",
  "clusterDNS": ["10.254.0.2"]
}
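A hand-written kubelet config is easy to get subtly wrong, for example by quoting a number or miscapitalizing a field name. The checker below is a rough sketch, not a schema validator: `check_kubelet_config` and the `EXPECTED_TYPES` table are hypothetical, and the table covers only a few fields, with names and types assumed from the v1beta1 types file linked above.

```python
import json

# Expected JSON types for a few fields used in this guide (not the full schema).
EXPECTED_TYPES = {
    "port": int,
    "readOnlyPort": int,
    "maxPods": int,
    "containerLogMaxFiles": int,
    "failSwapOn": bool,
    "serializeImagePulls": bool,
    "clusterDNS": list,
}


def check_kubelet_config(text):
    """Return a list of human-readable problems found in the JSON text."""
    config = json.loads(text)
    problems = []
    if config.get("kind") != "KubeletConfiguration":
        problems.append("kind should be KubeletConfiguration")
    # Index keys case-insensitively so that misspelled capitalization is reported.
    lowered = {key.lower(): key for key in config}
    for field, expected in EXPECTED_TYPES.items():
        actual_key = lowered.get(field.lower())
        if actual_key is None:
            continue
        if actual_key != field:
            problems.append(f"{actual_key}: expected spelling {field}")
        value = config[actual_key]
        # type() is used instead of isinstance() because bool is a subclass of int.
        if type(value) is not expected:
            problems.append(
                f"{actual_key}: expected {expected.__name__}, "
                f"got {type(value).__name__}"
            )
    return problems


if __name__ == "__main__":
    with open("/etc/kubernetes/kubelet.config.json") as handle:
        for problem in check_kubelet_config(handle.read()):
            print(problem)
```

An empty result means only that these few fields look sane; the kubelet log remains the authority on whether the file was accepted.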

6. Configure kube-proxy.service

# cd /etc/kubernetes/ && vi kube-proxy.config.yaml

apiVersion: kubeproxy.config.k8s.io/v1alpha1
bindAddress: 10.180.160.113
clientConnection:
  kubeconfig: /etc/kubernetes/kube-proxy.kubeconfig
clusterCIDR: 10.254.64.0/18
healthzBindAddress: 10.180.160.113:10256
hostnameOverride: kubernetes-113
kind: KubeProxyConfiguration
metricsBindAddress: 10.180.160.113:10249
mode: "ipvs"

# Create the kube-proxy directory (the WorkingDirectory used by the unit)

mkdir -p /var/lib/kube-proxy

# vi /etc/systemd/system/kube-proxy.service

[Unit]
Description=Kubernetes Kube-Proxy Server
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
After=network.target

[Service]
WorkingDirectory=/var/lib/kube-proxy
ExecStart=/usr/local/bin/kube-proxy \
  --config=/etc/kubernetes/kube-proxy.config.yaml \
  --logtostderr=true \
  --v=1
Restart=on-failure
RestartSec=5
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target

# Start

systemctl daemon-reload
systemctl enable kube-proxy
systemctl start kube-proxy
systemctl status kube-proxy

Part 6: Networking

This setup uses Flannel for the network.

1. # rpm -ivh flannel-0.10.0-1.x86_64.rpm

# Configure flannel

# We changed the docker.service.d path for docker (this depends on how docker was installed and configured!)

# so move flannel.conf into that directory

mv /usr/lib/systemd/system/docker.service.d/flannel.conf /etc/systemd/system/docker.service.d

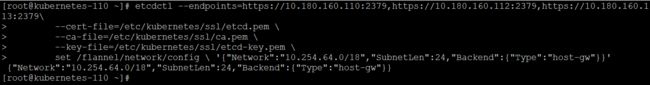

2. # Configure the flannel network range [run once, on a master node]

etcdctl --endpoints=https://10.180.160.110:2379,https://10.180.160.112:2379,https://10.180.160.113:2379 \
  --cert-file=/etc/kubernetes/ssl/etcd.pem \
  --ca-file=/etc/kubernetes/ssl/ca.pem \
  --key-file=/etc/kubernetes/ssl/etcd-key.pem \
  set /flannel/network/config \
  '{"Network":"10.254.64.0/18","SubnetLen":24,"Backend":{"Type":"host-gw"}}'
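Two properties of this network config are worth checking before writing it to etcd: the pod network must not overlap the service range, and the prefix lengths limit how many per-node subnets exist. The sketch below uses Python's standard `ipaddress` module. `plan_flannel` is a hypothetical helper, and the service range 10.254.0.0/18 is taken from the CoreDNS configuration later in this guide.

```python
import ipaddress


def plan_flannel(network, subnet_len, service_cidr):
    """Return an upper bound on per-node subnets; raise if the ranges overlap."""
    pods = ipaddress.ip_network(network)
    services = ipaddress.ip_network(service_cidr)
    if pods.overlaps(services):
        raise ValueError(f"{pods} overlaps service range {services}")
    if subnet_len < pods.prefixlen:
        raise ValueError("SubnetLen must not be shorter than the network prefix")
    # Each node leases one /subnet_len block out of the pod network.
    return 2 ** (subnet_len - pods.prefixlen)


if __name__ == "__main__":
    print(plan_flannel("10.254.64.0/18", 24, "10.254.0.0/18"))
```

With a /18 network and /24 per-node subnets the result is 64. Treat it as an upper bound on the node count, since flannel may not hand out every block.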

3. # Edit the flanneld configuration

vi /etc/sysconfig/flanneld

# Flanneld configuration options

# etcd endpoints

FLANNEL_ETCD_ENDPOINTS="https://10.180.160.110:2379,https://10.180.160.112:2379,https://10.180.160.113:2379"

# Must match the key prefix written above: /flannel/network

FLANNEL_ETCD_PREFIX="/flannel/network"

# Other options (see flanneld --help); etcd SSL authentication is added here

FLANNEL_OPTIONS="-ip-masq=true -etcd-cafile=/etc/kubernetes/ssl/ca.pem -etcd-certfile=/etc/kubernetes/ssl/etcd.pem -etcd-keyfile=/etc/kubernetes/ssl/etcd-key.pem -iface=ens160"

4. # Start flannel

systemctl daemon-reload

systemctl enable flanneld

systemctl start flanneld

systemctl status flanneld

# If it reports an error, use

journalctl -f -t flanneld and journalctl -u flanneld to track down the problem

# Once configuration is done, restart docker

systemctl daemon-reload

systemctl enable docker

systemctl restart docker

systemctl status docker

# Restart kubelet

systemctl daemon-reload

systemctl restart kubelet

systemctl status kubelet

5. # Verify the network

Use ifconfig to check that the docker0 interface has picked up an address from the configured flannel range
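The check can also be scripted: extract the IPv4 address from the `ifconfig docker0` output and test whether it falls inside the flannel network. This is a small sketch; `interface_in_network` is a hypothetical helper, and the sample output is made up for illustration, not captured from a real node.

```python
import ipaddress
import re


def interface_in_network(ifconfig_output, network):
    """Return True if the first IPv4 address in the output lies inside network."""
    match = re.search(r"inet (?:addr:)?(\d+\.\d+\.\d+\.\d+)", ifconfig_output)
    if match is None:
        raise ValueError("no IPv4 address found in the output")
    return ipaddress.ip_address(match.group(1)) in ipaddress.ip_network(network)


# Hypothetical `ifconfig docker0` output, trimmed to the relevant lines.
SAMPLE = (
    "docker0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500\n"
    "        inet 10.254.119.1  netmask 255.255.255.0  broadcast 10.254.119.255\n"
)

if __name__ == "__main__":
    print(interface_in_network(SAMPLE, "10.254.64.0/18"))
```

If docker0 still shows docker's default 172.17.0.1, docker most likely did not pick up the flannel drop-in and needs another restart.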

------

Part 8: Testing the cluster

1. # Create an nginx deployment

vim nginx.yaml

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: nginx-dm
spec:
  replicas: 2
  template:
    metadata:
      labels:
        name: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:alpine
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 80

---

apiVersion: v1
kind: Service
metadata:
  name: nginx-svc
spec:
  ports:
    - port: 80
      targetPort: 80
      protocol: TCP
  selector:
    name: nginx

2. Apply the YAML file

kubectl apply -f nginx.yaml

[root@kubernetes-110 ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx-dm-fff68d674-vz2k2 1/1 Running 0 1m 10.254.104.2 kubernetes-115

nginx-dm-fff68d674-wdp2k 1/1 Running 0 1m 10.254.119.2 kubernetes-113

[root@kubernetes-110 ~]# kubectl get svc -o wide

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

kubernetes ClusterIP 10.254.0.1

nginx-svc ClusterIP 10.254.56.221

3. Verify

# Run curl from a node that has the Flannel network installed

[root@kubernetes-114 ~]# curl 10.254.119.2

Welcome to nginx!

If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.

For online documentation and support please refer to

Commercial support is available at

Thank you for using nginx.

4. # Inspect the ipvs rules

[root@kubernetes-113 sysconfig]# ipvsadm -L -n

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 10.254.0.1:443 rr

-> 10.180.160.110:6443 Masq 1 0 0

-> 10.180.160.112:6443 Masq 1 0 0

TCP 10.254.56.221:80 rr

-> 10.254.104.2:80 Masq 1 0 0

-> 10.254.119.2:80 Masq 1 0 0
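To compare these rules against what `kubectl get svc` and `kubectl get pods -o wide` report, it helps to turn the listing into a mapping from each virtual service to its real servers. The parser below is a sketch that assumes the plain `ipvsadm -L -n` layout shown above; `parse_ipvs` is a hypothetical helper.

```python
def parse_ipvs(output):
    """Map each virtual service (addr:port) to its list of real servers."""
    services = {}
    current = None
    for line in output.splitlines():
        fields = line.split()
        if len(fields) >= 2 and fields[0] in ("TCP", "UDP"):
            # A virtual service line, e.g. "TCP 10.254.0.1:443 rr".
            current = fields[1]
            services[current] = []
        elif len(fields) >= 2 and fields[0] == "->" and current is not None:
            # A real server line belonging to the most recent virtual service.
            services[current].append(fields[1])
    return services


# The listing from this guide.
SAMPLE = """IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
-> RemoteAddress:Port Forward Weight ActiveConn InActConn
TCP 10.254.0.1:443 rr
-> 10.180.160.110:6443 Masq 1 0 0
-> 10.180.160.112:6443 Masq 1 0 0
TCP 10.254.56.221:80 rr
-> 10.254.104.2:80 Masq 1 0 0
-> 10.254.119.2:80 Masq 1 0 0
"""

if __name__ == "__main__":
    for virtual, reals in parse_ipvs(SAMPLE).items():
        print(virtual, "->", ", ".join(reals))
```

Here the nginx-svc cluster IP maps to the two pod IPs, and the kubernetes service maps to the two API servers.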

Part 9: Configuring CoreDNS

Official site: https://coredns.io

1. Download the YAML file

wget https://raw.githubusercontent.com/coredns/deployment/master/kubernetes/coredns.yaml.sed

mv coredns.yaml.sed coredns.yaml

2. # vi coredns.yaml

...
data:
  Corefile: |
    .:53 {
        errors
        health
        kubernetes cluster.local 10.254.0.0/18 {
          pods insecure
          upstream
          fallthrough in-addr.arpa ip6.arpa
        }
        prometheus :9153
        proxy . /etc/resolv.conf
        cache 30
    }
...
  clusterIP: 10.254.0.2

# Notes on the configuration

# The CIDR after "kubernetes cluster.local" is the IP range from which service (svc) addresses are allocated

kubernetes cluster.local 10.254.0.0/18

# clusterIP is the IP assigned to the DNS service

clusterIP: 10.254.0.2
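The DNS address appears in two places that must agree: the `clusterIP` here and the `clusterDNS` list in the kubelet config. It also has to lie inside the service range. A small consistency check might look like the sketch below, where `check_dns_ip` is a hypothetical helper and the values are the ones used in this guide.

```python
import ipaddress


def check_dns_ip(cluster_ip, service_cidr, kubelet_cluster_dns):
    """Return a list of inconsistencies between the DNS-related settings."""
    problems = []
    if ipaddress.ip_address(cluster_ip) not in ipaddress.ip_network(service_cidr):
        problems.append(f"{cluster_ip} is outside the service range {service_cidr}")
    if cluster_ip not in kubelet_cluster_dns:
        problems.append(f"{cluster_ip} is not listed in the kubelet clusterDNS")
    return problems


if __name__ == "__main__":
    print(check_dns_ip("10.254.0.2", "10.254.0.0/18", ["10.254.0.2"]))
```

An empty list means the three settings line up.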

3. Apply the CoreDNS YAML file

# Apply

[root@kubernetes-110 ~]# kubectl apply -f coredns.yaml

serviceaccount/coredns created

clusterrole.rbac.authorization.k8s.io/system:coredns created

clusterrolebinding.rbac.authorization.k8s.io/system:coredns created

configmap/coredns created

deployment.extensions/coredns created

service/kube-dns created

4. Check the coredns service

# kubectl get pod,svc -n kube-system

[root@kubernetes-110 ~]# kubectl get pod,svc -n kube-system

NAME READY STATUS RESTARTS AGE

pod/coredns-55f86bf584-2rz4q 1/1 Running 0 1h

pod/coredns-55f86bf584-mnd2d 1/1 Running 0 1h

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kube-dns ClusterIP 10.254.0.2

5. Check the logs

# kubectl logs -n kube-system coredns-55f86bf584-2rz4q

[root@kubernetes-110 ~]# kubectl logs -n kube-system coredns-55f86bf584-2rz4q

.:53

2018/08/31 07:58:59 [INFO] CoreDNS-1.2.2

2018/08/31 07:58:59 [INFO] linux/amd64, go1.11, eb51e8b

CoreDNS-1.2.2

linux/amd64, go1.11, eb51e8b

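6. Verify the DNS service

Before verifying DNS, delete and redeploy any pods, deployments, and similar resources that were created before DNS was deployed; otherwise they will not be able to resolve names.

# Create a pod to test DNS

apiVersion: v1
kind: Pod
metadata:
  name: alpine
spec:
  containers:
    - name: alpine
      image: alpine
      command:
        - sh
        - -c
        - while true; do sleep 1; done

7. # List the resources that were created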

[root@kubernetes-110 yaml]# kubectl get pods,svc

NAME READY STATUS RESTARTS AGE

po/alpine 1/1 Running 0 19s

po/nginx-dm-84f8f49555-tmqzm 1/1 Running 0 23s

po/nginx-dm-84f8f49555-wdk67 1/1 Running 0 23s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

svc/kubernetes ClusterIP 10.254.0.1

svc/nginx-svc ClusterIP 10.254.40.179

8. # Test

[root@kubernetes-110 ~]# kubectl exec -it alpine nslookup nginx-svc

nslookup: can't resolve '(null)': Name does not resolve

Name: nginx-svc

Address 1: 10.254.40.179 nginx-svc.default.svc.cluster.local

[root@kubernetes-64 yaml]# kubectl exec -it alpine nslookup kubernetes

nslookup: can't resolve '(null)': Name does not resolve

Name: kubernetes

Address 1: 10.254.0.1 kubernetes.default.svc.cluster.local
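Both lookups resolve a short name to a fully qualified one of the form `<service>.<namespace>.svc.<cluster domain>`. The helper below just builds that name. It is an illustrative sketch; `service_fqdn` is a hypothetical function, and the default domain is the `clusterDomain` value from the kubelet config.

```python
def service_fqdn(name, namespace="default", cluster_domain="cluster.local"):
    """Build the fully qualified DNS name of a Service."""
    # The kubelet config in this guide writes the domain with a trailing dot.
    domain = cluster_domain.rstrip(".")
    return f"{name}.{namespace}.svc.{domain}"


if __name__ == "__main__":
    print(service_fqdn("nginx-svc"))
    print(service_fqdn("kube-dns", namespace="kube-system"))
```

From a pod in another namespace the short name does not resolve to this service, so use the `<service>.<namespace>` form or the full name there.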

9. Deploy DNS autoscaling

Scale the number of DNS replicas automatically with the number of nodes

vi dns-auto-scaling.yaml

kind: ServiceAccount
apiVersion: v1
metadata:
  name: kube-dns-autoscaler
  namespace: kube-system
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: system:kube-dns-autoscaler
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
rules:
  - apiGroups: [""]
    resources: ["nodes"]
    verbs: ["list"]
  - apiGroups: [""]
    resources: ["replicationcontrollers/scale"]
    verbs: ["get", "update"]
  - apiGroups: ["extensions"]
    resources: ["deployments/scale", "replicasets/scale"]
    verbs: ["get", "update"]
  - apiGroups: [""]
    resources: ["configmaps"]
    verbs: ["get", "create"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: system:kube-dns-autoscaler
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
subjects:
  - kind: ServiceAccount
    name: kube-dns-autoscaler
    namespace: kube-system
roleRef:
  kind: ClusterRole
  name: system:kube-dns-autoscaler
  apiGroup: rbac.authorization.k8s.io
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kube-dns-autoscaler
  namespace: kube-system
  labels:
    k8s-app: kube-dns-autoscaler
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  selector:
    matchLabels:
      k8s-app: kube-dns-autoscaler
  template:
    metadata:
      labels:
        k8s-app: kube-dns-autoscaler
      annotations:
        scheduler.alpha.kubernetes.io/critical-pod: ''
    spec:
      priorityClassName: system-cluster-critical
      containers:
        - name: autoscaler
          image: jicki/cluster-proportional-autoscaler-amd64:1.1.2-r2
          resources:
            requests:
              cpu: "20m"
              memory: "10Mi"
          command:
            - /cluster-proportional-autoscaler
            - --namespace=kube-system
            - --configmap=kube-dns-autoscaler
            - --target=Deployment/coredns
            - --default-params={"linear":{"coresPerReplica":256,"nodesPerReplica":16,"preventSinglePointFailure":true}}
            - --logtostderr=true
            - --v=2
      tolerations:
        - key: "CriticalAddonsOnly"
          operator: "Exists"
      serviceAccountName: kube-dns-autoscaler
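The `--default-params` line selects the autoscaler's linear mode. As I understand the cluster-proportional-autoscaler documentation, the replica count is the larger of the cores-based and nodes-based values, with a floor of two replicas when `preventSinglePointFailure` is set and the cluster has more than one node. The function below is a sketch of that rule under those assumptions; `linear_replicas` is a hypothetical name, and the optional min/max bounds are left out.

```python
import math


def linear_replicas(nodes, cores, cores_per_replica=256, nodes_per_replica=16,
                    prevent_single_point_failure=True):
    """Estimate the DNS replica count chosen by the linear mode."""
    replicas = max(
        math.ceil(cores / cores_per_replica),
        math.ceil(nodes / nodes_per_replica),
    )
    replicas = max(replicas, 1)  # never scale to zero
    if prevent_single_point_failure and nodes > 1:
        replicas = max(replicas, 2)
    return replicas


if __name__ == "__main__":
    for nodes, cores in [(1, 4), (3, 12), (40, 160), (10, 1024)]:
        print(nodes, "nodes,", cores, "cores ->", linear_replicas(nodes, cores))
```

With the defaults used here, a small cluster of a few nodes settles at two CoreDNS replicas, and a third replica appears only past 32 nodes or 512 cores.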

10. # Apply the file

[root@kubernetes-110 ~]# kubectl apply -f dns-auto-scaling.yaml

serviceaccount/kube-dns-autoscaler created

clusterrole.rbac.authorization.k8s.io/system:kube-dns-autoscaler created

clusterrolebinding.rbac.authorization.k8s.io/system:kube-dns-autoscaler created

deployment.apps/kube-dns-autoscaler created