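Combining TensorFlow with Spark is exciting, but the first decision is which language to write the machine learning code in. There are four main options: Python, Java, Scala, and R. Python has the lowest barrier to entry, while Java and Scala are more than most people can comfortably handle, so we start with the Python toolchain.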

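First, assume you already have a Mac Pro with the following in place:

- Python 3.5 or 3.6 installed
- JDK 8 (better not to use JDK 9, which has many problems)
- TensorFlow and pyspark installed locally
- Spark 2.1 and Hadoop 2.8.1 installed locally with Homebrew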
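Before going further, it can help to check the first two prerequisites from Python. The following is a minimal sketch, not part of any of the tools above: the function names are made up for illustration, and it assumes that java -version prints a line containing version "..." (the usual format, but not guaranteed for every JDK build).

```python
import re
import shutil
import subprocess
import sys


def python_ok(version=sys.version_info):
    """True if the interpreter is Python 3.5 or 3.6, the versions assumed in this post."""
    return tuple(version[:2]) in {(3, 5), (3, 6)}


def java_major(version_string):
    """Map a Java version string to its major version ('1.8.0_144' is Java 8)."""
    match = re.match(r"(\d+)(?:\.(\d+))?", version_string)
    if match is None:
        raise ValueError("unrecognized Java version: {!r}".format(version_string))
    first = int(match.group(1))
    # Before Java 9, versions were written as 1.x, so 1.8 means Java 8.
    if first == 1 and match.group(2) is not None:
        return int(match.group(2))
    return first


def installed_java_version():
    """Return the version string reported by `java -version`, or None if unavailable."""
    if shutil.which("java") is None:
        return None
    # `java -version` writes to stderr, so merge stderr into stdout.
    proc = subprocess.run(["java", "-version"], stdout=subprocess.PIPE,
                          stderr=subprocess.STDOUT, universal_newlines=True)
    match = re.search(r'version "([^"]+)"', proc.stdout)
    return match.group(1) if match else None


if __name__ == "__main__":
    print("Python 3.5/3.6:", python_ok())
    version = installed_java_version()
    print("Java version:", version)
    if version is not None:
        print("JDK 8:", java_major(version) == 8)
```

If the last line prints False, the java on your PATH is not JDK 8.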

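To connect Spark and TensorFlow, you need a very handy tool: spark-deep-learning.

We will clone William's fork, because William extended the original Databricks project with new features. Be sure to use William's fork; otherwise some of the classes he uses will not be found, for example: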

from sparkdl import TextEstimator

from sparkdl.transformers.easy_feature import EasyFeature

These are William's own extensions, and they are very convenient.

https://github.com/allwefantasy/spark-deep-learning

mkdir sparkdl && cd sparkdl

git clone https://github.com/allwefantasy/spark-deep-learning.git . # note the trailing dot

git checkout release

Of course, that alone is not enough. We also need a bridge between TensorFlow and Spark's underlying data structures:

tensorframes. spark-deep-learning itself also depends on tensorframes.

https://github.com/databricks/tensorframes/

git clone https://github.com/databricks/tensorframes.git

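If you were hoping to install these two packages directly with pip, sorry: they are not in the pip repository.

This is where installing a local package with pip comes in.

It is not that hard, and luckily William gave me a few tips.

Installing a local package with pip takes roughly the following steps:

1. Create a setup.py build script. You can use William's as a reference: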

[https://github.com/allwefantasy/spark-deep-learning/blob/release/python/setup.py](https://github.com/allwefantasy/spark-deep-learning/blob/release/python/setup.py)

2. In setup.py, configure the package's attributes, such as its name, version, and file paths.

3. Run the build commands. The most important one is:

python setup.py bdist_wheel

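This produces a binary wheel file named PackageName-Version-py3-none-any.whl in the dist directory.

4. Go to that directory and run the following in Terminal:

pip install PackageName-Version-py3-none-any.whl # [anaconda]

pip3 install PackageName-Version-py3-none-any.whl # [python 3.6]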

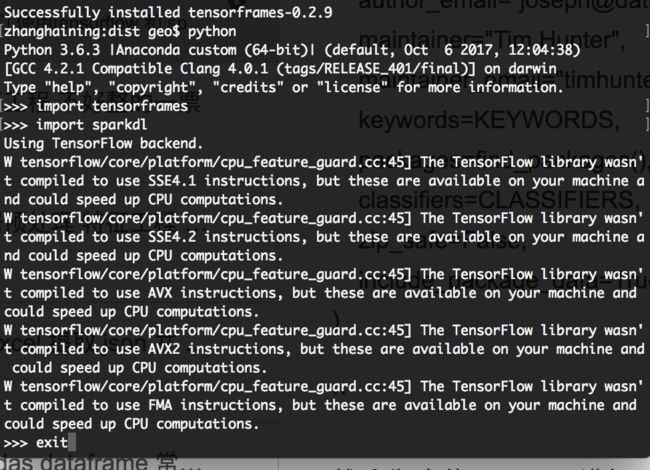

5. Run pip list and pip3 list to verify that the installation succeeded.

6. Run import package in Python to check that the module is actually usable.
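The build and install commands above can also be driven from Python. This is a minimal sketch under stated assumptions: newest_wheel and build_and_install are hypothetical helper names, and the wheel is assumed to land in the dist directory next to setup.py (the setuptools default).

```python
import glob
import os
import subprocess
import sys


def newest_wheel(dist_dir):
    """Return the most recently modified .whl file in dist_dir, or None if there is none."""
    wheels = glob.glob(os.path.join(dist_dir, "*.whl"))
    return max(wheels, key=os.path.getmtime) if wheels else None


def build_and_install(project_dir):
    """Build a wheel from project_dir/setup.py and install it into this interpreter."""
    # Build the wheel; setuptools writes it to project_dir/dist by default.
    subprocess.check_call([sys.executable, "setup.py", "bdist_wheel"], cwd=project_dir)
    wheel = newest_wheel(os.path.join(project_dir, "dist"))
    if wheel is None:
        raise RuntimeError("no wheel was produced in dist/")
    # Install with the pip that belongs to the interpreter running this script.
    subprocess.check_call([sys.executable, "-m", "pip", "install", wheel])
    return wheel
```

Using sys.executable with -m pip avoids the pip versus pip3 ambiguity: the wheel is installed into whichever interpreter runs the script.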

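One thing to watch out for: when you build the wheel with python setup.py bdist_wheel, the package's Python source files must be in the same directory as setup.py. Otherwise a .whl file is still generated, but the package is an empty shell that cannot be used.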
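A wheel is an ordinary zip archive, so you can inspect it to catch an empty package before installing. A minimal sketch (wheel_contains_package is a hypothetical helper, not part of pip or setuptools):

```python
import zipfile


def wheel_contains_package(wheel_path, package):
    """Return True if the wheel holds at least one .py file under `package/`."""
    prefix = package + "/"
    with zipfile.ZipFile(wheel_path) as wheel:
        return any(name.startswith(prefix) and name.endswith(".py")
                   for name in wheel.namelist())
```

For example, wheel_contains_package("dist/sparkdl-0.2.2-py3-none-any.whl", "sparkdl") should be True for a usable build; False means only metadata was packaged.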

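Also, when writing setup.py, make sure the package name and version match the real ones. Otherwise, even if the package itself installs, other packages that depend on it may not be able to find it.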
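The name and version from setup.py end up in the wheel's file name, which follows the pattern name-version-python-abi-platform.whl (with an optional build tag after the version). The sketch below splits a file name into those fields so you can compare them with what you expect; parse_wheel_filename is a hypothetical helper written for this post.

```python
def parse_wheel_filename(filename):
    """Split a wheel file name into its name, version, and compatibility tags."""
    if not filename.endswith(".whl"):
        raise ValueError("not a wheel file: {!r}".format(filename))
    parts = filename[:-len(".whl")].split("-")
    # Five fields normally; six when an optional build tag follows the version.
    if len(parts) not in (5, 6):
        raise ValueError("unexpected wheel file name: {!r}".format(filename))
    return {
        "name": parts[0],
        "version": parts[1],
        "python": parts[-3],
        "abi": parts[-2],
        "platform": parts[-1],
    }


if __name__ == "__main__":
    info = parse_wheel_filename("sparkdl-0.2.2-py3-none-any.whl")
    print(info["name"], info["version"], info["python"])
```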

setup.py for sparkdl:

    import codecs
    import os

    from setuptools import setup, find_packages

    # See this web page for explanations:
    # https://hynek.me/articles/sharing-your-labor-of-love-pypi-quick-and-dirty/
    PACKAGES = ["sparkdl"]
    KEYWORDS = ["spark", "deep learning", "distributed computing", "machine learning"]
    CLASSIFIERS = [
        "Programming Language :: Python :: 2.7",
        "Programming Language :: Python :: 3.4",
        "Programming Language :: Python :: 3.5",
        "Development Status :: 4 - Beta",
        "Intended Audience :: Developers",
        "Natural Language :: English",
        "License :: OSI Approved :: Apache Software License",
        "Operating System :: OS Independent",
        "Programming Language :: Python",
        "Topic :: Scientific/Engineering",
    ]

    # Project root
    ROOT = os.path.abspath(os.path.dirname(__file__))

    #
    #
    # def read(*parts):
    #     """
    #     Build an absolute path from *parts* and return the contents of the
    #     resulting file. Assume UTF-8 encoding.
    #     """
    #     with codecs.open(os.path.join(ROOT, *parts), "rb", "utf-8") as f:
    #         return f.read()
    #
    # def configuration(parent_package='', top_path=None):
    #     if os.path.exists('MANIFEST'):
    #         os.remove('MANIFEST')
    #
    #     from numpy.distutils.misc_util import Configuration
    #     config = Configuration(None, parent_package, top_path)
    #
    #     # Avoid non-useful msg:
    #     # "Ignoring attempt to set 'name' (from ... "
    #     config.set_options(ignore_setup_xxx_py=True,
    #                        assume_default_configuration=True,
    #                        delegate_options_to_subpackages=True,
    #                        quiet=True)
    #
    #     config.add_subpackage('sparkdl')
    #
    #     return config

    setup(
        name="sparkdl",
        description="Integration tools for running deep learning on Spark",
        license="Apache 2.0",
        url="https://github.com/allwefantasy/spark-deep-learning",
        version="0.2.2",
        author="Joseph Bradley",
        author_email="[email protected]",
        maintainer="Tim Hunter",
        maintainer_email="[email protected]",
        keywords=KEYWORDS,
        packages=find_packages(),
        classifiers=CLASSIFIERS,
        zip_safe=False,
        include_package_data=True
    )

setup.py for tensorframes:

    import codecs
    import os

    from setuptools import setup, find_packages

    # See this web page for explanations:
    # https://hynek.me/articles/sharing-your-labor-of-love-pypi-quick-and-dirty/
    PACKAGES = ["tensorframes"]
    KEYWORDS = ["spark", "deep learning", "distributed computing", "machine learning"]
    CLASSIFIERS = [
        "Programming Language :: Python :: 2.7",
        "Programming Language :: Python :: 3.4",
        "Programming Language :: Python :: 3.5",
        "Development Status :: 4 - Beta",
        "Intended Audience :: Developers",
        "Natural Language :: English",
        "License :: OSI Approved :: Apache Software License",
        "Operating System :: OS Independent",
        "Programming Language :: Python",
        "Topic :: Scientific/Engineering",
    ]

    # Project root
    ROOT = os.path.abspath(os.path.dirname(__file__))

    #
    #
    # def read(*parts):
    #     """
    #     Build an absolute path from *parts* and return the contents of the
    #     resulting file. Assume UTF-8 encoding.
    #     """
    #     with codecs.open(os.path.join(ROOT, *parts), "rb", "utf-8") as f:
    #         return f.read()
    #
    # def configuration(parent_package='', top_path=None):
    #     if os.path.exists('MANIFEST'):
    #         os.remove('MANIFEST')
    #
    #     from numpy.distutils.misc_util import Configuration
    #     config = Configuration(None, parent_package, top_path)
    #
    #     # Avoid non-useful msg:
    #     # "Ignoring attempt to set 'name' (from ... "
    #     config.set_options(ignore_setup_xxx_py=True,
    #                        assume_default_configuration=True,
    #                        delegate_options_to_subpackages=True,
    #                        quiet=True)
    #
    #     config.add_subpackage('sparkdl')
    #
    #     return config

    setup(
        name="tensorframes",
        description="Integration tools for running deep learning on Spark",
        license="Apache 2.0",
        url="https://github.com/databricks/tensorframes",
        version="0.2.9",
        author="Joseph Bradley",
        author_email="[email protected]",
        maintainer="Tim Hunter",
        maintainer_email="[email protected]",
        keywords=KEYWORDS,
        packages=find_packages(),
        classifiers=CLASSIFIERS,
        zip_safe=False,
        include_package_data=True
    )

Next, install sparkdl first. Go to the root of the spark-deep-learning checkout and open Terminal:

cd ./python && python setup.py bdist_wheel && cd dist

pip install sparkdl-0.2.2-py3-none-any.whl

pip3 install sparkdl-0.2.2-py3-none-any.whl
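After installing, you can confirm that you got William's fork rather than the original Databricks package by checking for the two names imported earlier in this post. A minimal sketch (has_symbol is a hypothetical helper; the module and class names are the ones quoted above):

```python
import importlib


def has_symbol(module_name, symbol):
    """Return True if `module_name` can be imported and defines `symbol`."""
    try:
        module = importlib.import_module(module_name)
    except ImportError:
        return False
    return hasattr(module, symbol)


# The two imports quoted earlier in this post, as (module, symbol) pairs.
FORK_SYMBOLS = [
    ("sparkdl", "TextEstimator"),
    ("sparkdl.transformers.easy_feature", "EasyFeature"),
]

if __name__ == "__main__":
    for module_name, symbol in FORK_SYMBOLS:
        status = "ok" if has_symbol(module_name, symbol) else "MISSING"
        print("{}.{}: {}".format(module_name, symbol, status))
```

Note that importing sparkdl pulls in its own dependencies, so a MISSING result can also mean that one of them (for example tensorframes) is not installed yet.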

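When installing tensorframes, note that the python directory under the tensorframes root does not contain the source files, so you have to copy them there. Be sure to copy the two directories under ./src/main/python/, tensorframes and tensorframes_snippets, into the python directory under the root; otherwise tensorframes cannot be used even after it is installed. Also make sure pyspark is installed beforehand, or it will not work either.

cp -r ./src/main/python/* ./python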

cd ./python && python setup.py bdist_wheel && cd dist

pip install tensorframes-0.2.9-py3-none-any.whl

pip3 install tensorframes-0.2.9-py3-none-any.whl
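The copy step above can also be done from Python, which makes it easy to fail loudly if one of the two directories is missing. A minimal sketch (copy_package_dirs is a hypothetical helper; the directory names are the ones mentioned above):

```python
import os
import shutil


def copy_package_dirs(src_root, dst_root,
                      names=("tensorframes", "tensorframes_snippets")):
    """Copy each named directory from src_root into dst_root, replacing stale copies."""
    copied = []
    for name in names:
        src = os.path.join(src_root, name)
        dst = os.path.join(dst_root, name)
        if not os.path.isdir(src):
            raise FileNotFoundError(src)
        if os.path.isdir(dst):
            # copytree requires that the destination does not exist yet.
            shutil.rmtree(dst)
        shutil.copytree(src, dst)
        copied.append(dst)
    return copied
```

From the tensorframes root, this would be called as copy_package_dirs("./src/main/python", "./python") before building the wheel.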

For the commands, you can refer to William's:

cd ./python && python setup.py bdist_wheel && cd dist && pip uninstall sparkdl && pip install ./sparkdl-0.2.2-py2-none-any.whl && cd ..

After that, we can happily use everything in PyCharm.
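Because pip and pip3 can install into different interpreters, it is worth confirming that the interpreter PyCharm is configured to use actually sees all four packages. A minimal sketch you can run from PyCharm (missing_modules is a hypothetical helper; the module names are the ones used in this post):

```python
import importlib.util
import sys

REQUIRED = ("tensorflow", "pyspark", "tensorframes", "sparkdl")


def missing_modules(names=REQUIRED):
    """Return the names that the current interpreter cannot locate."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    print("Interpreter:", sys.executable)
    missing = missing_modules()
    print("Missing:", ", ".join(missing) if missing else "none")
```

If anything is listed as missing, install the wheel with the pip that belongs to the interpreter printed on the first line.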