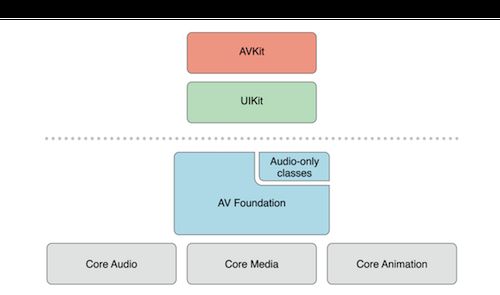

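Overview

AVFoundation is a framework for working with and creating time-based audiovisual media. It was built with current hardware and applications in mind, and its design relies heavily on multithreading. It takes full advantage of multi-core hardware, makes extensive use of blocks and GCD, and moves expensive computation onto background threads. It also applies hardware acceleration automatically, so that apps run with the best possible performance on most devices. The framework was designed for 64-bit processors and can take advantage of everything they offer.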

AVAssetReader

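AVAssetReader reads media samples from an AVAsset. You typically attach one or more AVAssetReaderOutput objects to it. You then pull audio samples and video frames through each output's - (CMSampleBufferRef)copyNextSampleBuffer; method. The reader reports its progress through the following status values: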

```objc
typedef NS_ENUM(NSInteger, AVAssetReaderStatus) {
    AVAssetReaderStatusUnknown = 0,
    AVAssetReaderStatusReading,
    AVAssetReaderStatusCompleted,
    AVAssetReaderStatusFailed,
    AVAssetReaderStatusCancelled,
};
```
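AVAssetReaderStatus is an NSInteger enum that starts at 0, so logs that print it with %ld show bare numbers. The plain C sketch below (it also compiles as Objective-C) maps those numbers back to names. It also flags the three end states after which no more samples arrive. ReaderStatus, reader_status_name and reader_status_is_final are hypothetical helpers, not AVFoundation API. Their numeric values simply follow the enum order shown above.

```c
#include <assert.h>

/* Numeric values match AVAssetReaderStatus: the enum starts at 0 and counts up by one. */
typedef enum {
    ReaderStatusUnknown = 0,
    ReaderStatusReading,
    ReaderStatusCompleted,
    ReaderStatusFailed,
    ReaderStatusCancelled
} ReaderStatus;

/* Human-readable name for a raw status value, e.g. one printed with %ld in a log. */
const char *reader_status_name(long status) {
    switch (status) {
        case ReaderStatusUnknown:   return "Unknown";
        case ReaderStatusReading:   return "Reading";
        case ReaderStatusCompleted: return "Completed";
        case ReaderStatusFailed:    return "Failed";
        case ReaderStatusCancelled: return "Cancelled";
        default:                    return "Invalid";
    }
}

/* Completed, Failed and Cancelled end the read loop: no more samples will be delivered. */
int reader_status_is_final(long status) {
    return status == ReaderStatusCompleted || status == ReaderStatusFailed ||
           status == ReaderStatusCancelled;
}
```

For example, a log line showing status 3 corresponds to Failed, and the read loop should stop there.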

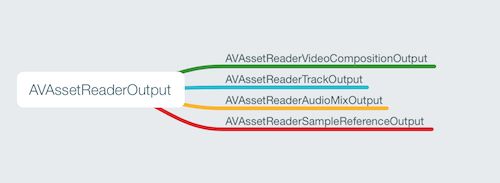

AVAssetReaderOutput

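AVAssetReaderOutput is an abstract class; in practice you use one of its concrete subclasses:

- AVAssetReaderTrackOutput reads decoded media samples from a single AVAssetTrack.
- AVAssetReaderAudioMixOutput reads the mixed output of several audio tracks.
- AVAssetReaderVideoCompositionOutput reads the composited output of several video tracks.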
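Mixing several audio tracks into one stream, as AVAssetReaderAudioMixOutput does, comes down to combining the tracks' PCM samples. The sketch below is only a conceptual model, not a description of how AVFoundation mixes internally. It assumes interleaved signed 16-bit samples, equal gain for both tracks, and plain summation with clipping. With the real class, per-track volume is configured through its audioMix property rather than by hand. mix_pcm16 and clamp16 are hypothetical names.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Clamp a 32-bit intermediate value into the signed 16-bit PCM range. */
static int16_t clamp16(int32_t v) {
    if (v > INT16_MAX) return INT16_MAX;
    if (v < INT16_MIN) return INT16_MIN;
    return (int16_t)v;
}

/* Mix two interleaved 16-bit PCM buffers of equal length into dst, sample by sample.
   Summing in 32 bits avoids overflow before the result is clipped back to 16 bits. */
void mix_pcm16(const int16_t *a, const int16_t *b, int16_t *dst, size_t sampleCount) {
    assert(a != NULL && b != NULL && dst != NULL);
    for (size_t i = 0; i < sampleCount; i++) {
        dst[i] = clamp16((int32_t)a[i] + (int32_t)b[i]);
    }
}
```

Clipping keeps loud passages from wrapping around to the opposite sign, which would be heard as harsh distortion.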

AVAssetWriter

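AVAssetWriter is the counterpart of AVAssetReader. It encodes media and writes it to a container file such as an MPEG-4 or QuickTime file. AVAssetWriter interleaves media samples automatically. To maintain an appropriate interleaving pattern, AVAssetWriterInput exposes a readyForMoreMediaData property. This property tells you whether the input can accept more data while keeping the desired interleaving. AVAssetWriter reports its progress through the following status values: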

```objc
typedef NS_ENUM(NSInteger, AVAssetWriterStatus) {
    AVAssetWriterStatusUnknown = 0,
    AVAssetWriterStatusWriting,
    AVAssetWriterStatusCompleted,
    AVAssetWriterStatusFailed,
    AVAssetWriterStatusCancelled
};
```
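AVAssetWriter does the interleaving itself. readyForMoreMediaData is how an input asks you to hold back data so that the file stays well interleaved. The ordering it aims for is roughly chronological across tracks. The sketch below models that ordering as a merge of two streams that are each sorted by presentation time. Sample and interleave are hypothetical, and timestamps are plain seconds. This is not how AVAssetWriter is implemented; it only illustrates why feeding whichever input has the earlier timestamp tends to keep both inputs ready.

```c
#include <assert.h>
#include <stddef.h>

/* A hypothetical sample descriptor: which track it belongs to and its presentation time. */
typedef struct {
    char track;      /* 'V' for video, 'A' for audio */
    double pts;      /* presentation timestamp in seconds */
} Sample;

/* Merge two streams, each already sorted by pts, into one stream ordered by pts.
   On equal timestamps the video sample goes first. Returns the number of samples written. */
size_t interleave(const Sample *video, size_t nv, const Sample *audio, size_t na, Sample *out) {
    size_t i = 0, j = 0, k = 0;
    assert(out != NULL || nv + na == 0);
    while (i < nv || j < na) {
        if (j >= na || (i < nv && video[i].pts <= audio[j].pts)) {
            out[k++] = video[i++];
        } else {
            out[k++] = audio[j++];
        }
    }
    return k;
}
```

With video at 0 and 40 ms and audio at 0, 21 and 50 ms, the merged order is V0, A0, A21, V40, A50.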

AVAssetWriterInput

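AVAssetWriterInput configures the type of media (for example audio or video) that an AVAssetWriter handles. All samples appended to one input end up in a single AVAssetTrack of the output file. For video samples we usually go through an adaptor, AVAssetWriterInputPixelBufferAdaptor. It appends CVPixelBuffer objects directly and gives the best performance when writing video.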
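kCVPixelFormatType_32BGRA stores each pixel as four bytes, in blue, green, red, alpha order. When you touch the pixels of such a buffer yourself, step between rows with the buffer's bytes-per-row value. Rows may be padded beyond width × 4. The sketch below shows that addressing in plain C on a raw byte array. With a real CVPixelBufferRef, you would first call CVPixelBufferLockBaseAddress. You would then get the base pointer and row stride from CVPixelBufferGetBaseAddress and CVPixelBufferGetBytesPerRow. BGRAPixel and bgra_pixel_at are hypothetical names.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* One pixel in kCVPixelFormatType_32BGRA byte order: blue, green, red, alpha. */
typedef struct {
    uint8_t b, g, r, a;
} BGRAPixel;

/* Read the pixel at (x, y). Rows are stepped with bytesPerRow, never width * 4,
   because a row may contain padding bytes after its last pixel. */
BGRAPixel bgra_pixel_at(const uint8_t *base, size_t bytesPerRow, size_t x, size_t y) {
    assert(base != NULL && x * 4 + 3 < bytesPerRow);
    const uint8_t *p = base + y * bytesPerRow + x * 4;
    BGRAPixel px = { p[0], p[1], p[2], p[3] };
    return px;
}
```

For a 3-pixel-wide image padded to 16 bytes per row, pixel (1, 1) starts at byte offset 16 + 4 = 20, not 12 + 4.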

Reading assets

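1. Create a QMMediaReader class for reading media files.

2. In - (void)startProcessing, load the asset's tracks property asynchronously with loadValuesAsynchronouslyForKeys:completionHandler:.

3. Once the tracks have loaded, create the AVAssetReader.

4. Create the AVAssetReaderTrackOutput objects, setting the decoded output format to kCVPixelFormatType_32BGRA for video and kAudioFormatLinearPCM for audio.

5. While the AVAssetReader status is AVAssetReaderStatusReading, read media data in a loop and hand each CMSampleBufferRef to the consumer through a callback.

6. When reading completes, cancel the reader and notify the consumer.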
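Each CMSampleBufferRef delivered in step 5 covers a stretch of time. For linear PCM you can derive that duration from the byte count, because one audio frame holds one sample per channel. The reader code's audio settings only set AVFormatIDKey and AVNumberOfChannelsKey. The bit depths and the 48000 Hz rate used below are therefore assumptions for illustration. Real code should read them from the buffer's AudioStreamBasicDescription via CMAudioFormatDescriptionGetStreamBasicDescription, or get the frame count from CMSampleBufferGetNumSamples. Both helpers are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* Number of audio frames in an interleaved LPCM buffer: one frame = one sample per channel. */
size_t lpcm_frame_count(size_t byteCount, unsigned channels, unsigned bytesPerSample) {
    assert(channels > 0 && bytesPerSample > 0);
    return byteCount / ((size_t)channels * bytesPerSample);
}

/* Duration in seconds covered by those frames at the given sample rate. */
double lpcm_duration(size_t byteCount, unsigned channels, unsigned bytesPerSample, double sampleRate) {
    assert(sampleRate > 0.0);
    return (double)lpcm_frame_count(byteCount, channels, bytesPerSample) / sampleRate;
}
```

For example, 4096 bytes of stereo 16-bit audio is 1024 frames, about 21.3 ms at 48000 Hz. The same frame count with 32-bit float samples needs 8192 bytes.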

```objc
//
//  QMMediaReader.h
//  AVFoundation
//
//  Created by mac on 17/8/28.
//  Copyright © 2017 Qinmin. All rights reserved.
//

#import <Foundation/Foundation.h>
#import <AVFoundation/AVFoundation.h>

@interface QMMediaReader : NSObject

// Callbacks run on a background queue. The reader releases each buffer (and invalidates
// video buffers) as soon as the callback returns, so consume the buffer synchronously.
@property (nonatomic, copy) void (^videoReaderCallback)(CMSampleBufferRef videoBuffer);
@property (nonatomic, copy) void (^audioReaderCallback)(CMSampleBufferRef audioBuffer);
@property (nonatomic, copy) void (^readerCompleteCallback)(void);

- (instancetype)initWithAsset:(AVAsset *)asset;
- (instancetype)initWithURL:(NSURL *)url;

- (void)startProcessing;
- (void)cancelProcessing;

@end
```

```objc
//
//  QMMediaReader.m
//  AVFoundation
//
//  Created by mac on 17/8/28.
//  Copyright © 2017 Qinmin. All rights reserved.
//

#import "QMMediaReader.h"
#import <AVFoundation/AVFoundation.h>
#import <unistd.h>   // usleep

@interface QMMediaReader ()
@property (nonatomic, strong) NSURL *url;
@property (nonatomic, strong) AVAsset *asset;
@property (nonatomic, strong) AVAssetReader *reader;
@property (nonatomic, assign) CMTime previousFrameTime;
@property (nonatomic, assign) CFAbsoluteTime previousActualFrameTime;
@end

@implementation QMMediaReader

- (instancetype)initWithURL:(NSURL *)url
{
    if (self = [super init]) {
        _url = url;
        _asset = nil;
    }
    return self;
}

- (instancetype)initWithAsset:(AVAsset *)asset
{
    if (self = [super init]) {
        _url = nil;
        _asset = asset;
    }
    return self;
}

#pragma mark - cancelProcessing

- (void)cancelProcessing
{
    if (self.reader) {
        [self.reader cancelReading];
    }
}

#pragma mark - startProcessing

- (void)startProcessing
{
    _previousFrameTime = kCMTimeZero;
    _previousActualFrameTime = CFAbsoluteTimeGetCurrent();

    // Only build an asset from the URL when none was passed to initWithAsset:.
    if (!self.asset) {
        NSDictionary *inputOptions = @{AVURLAssetPreferPreciseDurationAndTimingKey : @(YES)};
        self.asset = [[AVURLAsset alloc] initWithURL:self.url options:inputOptions];
    }

    __weak typeof(self) weakSelf = self;
    [self.asset loadValuesAsynchronouslyForKeys:@[@"tracks"] completionHandler:^{
        // Read on a background queue: processAsset blocks until the whole file is read.
        dispatch_async(dispatch_get_global_queue(DISPATCH_QUEUE_PRIORITY_DEFAULT, 0), ^{
            NSError *error = nil;
            AVKeyValueStatus tracksStatus = [weakSelf.asset statusOfValueForKey:@"tracks" error:&error];
            if (tracksStatus != AVKeyValueStatusLoaded) {
                NSLog(@"Failed to load tracks: %@", error);
                return;
            }
            [weakSelf processAsset];
        });
    }];
}

- (AVAssetReader *)createAssetReader
{
    NSError *error = nil;
    AVAssetReader *assetReader = [AVAssetReader assetReaderWithAsset:self.asset error:&error];
    if (!assetReader) {
        NSLog(@"Failed to create AVAssetReader: %@", error);
        return nil;
    }

    // Video: decode the first video track to 32-bit BGRA pixel buffers.
    NSArray *videoTracks = [self.asset tracksWithMediaType:AVMediaTypeVideo];
    if ([videoTracks count] > 0) {
        AVAssetTrack *videoTrack = [videoTracks firstObject];
        NSDictionary *outputSettings = @{
            (id)kCVPixelBufferPixelFormatTypeKey : @(kCVPixelFormatType_32BGRA)
        };
        AVAssetReaderTrackOutput *readerVideoTrackOutput =
            [AVAssetReaderTrackOutput assetReaderTrackOutputWithTrack:videoTrack outputSettings:outputSettings];
        // Skip the defensive copy; consumers must not modify the buffers they receive.
        readerVideoTrackOutput.alwaysCopiesSampleData = NO;
        if ([assetReader canAddOutput:readerVideoTrackOutput]) {
            [assetReader addOutput:readerVideoTrackOutput];
        }
    }

    // Audio: decode the first audio track to 2-channel linear PCM.
    NSArray *audioTracks = [self.asset tracksWithMediaType:AVMediaTypeAudio];
    if ([audioTracks count] > 0) {
        AVAssetTrack *audioTrack = [audioTracks firstObject];
        NSDictionary *audioOutputSetting = @{
            AVFormatIDKey         : @(kAudioFormatLinearPCM),
            AVNumberOfChannelsKey : @(2)
        };
        AVAssetReaderTrackOutput *readerAudioTrackOutput =
            [AVAssetReaderTrackOutput assetReaderTrackOutputWithTrack:audioTrack outputSettings:audioOutputSetting];
        readerAudioTrackOutput.alwaysCopiesSampleData = NO;
        if ([assetReader canAddOutput:readerAudioTrackOutput]) {
            [assetReader addOutput:readerAudioTrackOutput];
        }
    }

    return assetReader;
}

- (void)processAsset
{
    self.reader = [self createAssetReader];
    if (!self.reader) {
        return;
    }

    AVAssetReaderOutput *readerVideoTrackOutput = nil;
    AVAssetReaderOutput *readerAudioTrackOutput = nil;
    for (AVAssetReaderOutput *output in self.reader.outputs) {
        if ([output.mediaType isEqualToString:AVMediaTypeAudio]) {
            readerAudioTrackOutput = output;
        } else if ([output.mediaType isEqualToString:AVMediaTypeVideo]) {
            readerVideoTrackOutput = output;
        }
    }

    if ([self.reader startReading] == NO) {
        NSLog(@"Error reading from file at URL: %@ (%@)", self.url, self.reader.error);
        return;
    }

    // Alternate between the outputs until the reader leaves the Reading state.
    while (self.reader.status == AVAssetReaderStatusReading) {
        if (readerVideoTrackOutput) {
            [self readNextVideoFrameFromOutput:readerVideoTrackOutput];
        }
        if (readerAudioTrackOutput) {
            [self readNextAudioSampleFromOutput:readerAudioTrackOutput];
        }
    }

    if (self.reader.status == AVAssetReaderStatusCompleted) {
        [self.reader cancelReading];
        if (self.readerCompleteCallback) {
            self.readerCompleteCallback();
        }
    } else if (self.reader.status == AVAssetReaderStatusFailed) {
        NSLog(@"Reading failed: %@", self.reader.error);
    }
}

- (void)readNextVideoFrameFromOutput:(AVAssetReaderOutput *)readerVideoTrackOutput
{
    if (self.reader.status != AVAssetReaderStatusReading) {
        return;
    }
    CMSampleBufferRef sampleBufferRef = [readerVideoTrackOutput copyNextSampleBuffer];
    if (!sampleBufferRef) {
        return;
    }

    BOOL playAtActualSpeed = YES;
    if (playAtActualSpeed) {
        // Throttle to real time: sleep for the media time between two frames
        // minus the wall-clock time that has already passed.
        CMTime currentSampleTime = CMSampleBufferGetOutputPresentationTimeStamp(sampleBufferRef);
        CMTime differenceFromLastFrame = CMTimeSubtract(currentSampleTime, _previousFrameTime);
        CFAbsoluteTime currentActualTime = CFAbsoluteTimeGetCurrent();

        Float64 frameTimeDifference = CMTimeGetSeconds(differenceFromLastFrame);
        CFTimeInterval actualTimeDifference = currentActualTime - _previousActualFrameTime;
        if (frameTimeDifference > actualTimeDifference) {
            usleep((useconds_t)(1000000.0 * (frameTimeDifference - actualTimeDifference)));
        }

        _previousFrameTime = currentSampleTime;
        _previousActualFrameTime = CFAbsoluteTimeGetCurrent();
    }

    if (self.videoReaderCallback) {
        self.videoReaderCallback(sampleBufferRef);
    }

    // copyNextSampleBuffer returns a +1 reference, so this method must release it.
    CMSampleBufferInvalidate(sampleBufferRef);
    CFRelease(sampleBufferRef);
}

- (void)readNextAudioSampleFromOutput:(AVAssetReaderOutput *)readerAudioTrackOutput
{
    if (self.reader.status != AVAssetReaderStatusReading) {
        return;
    }
    CMSampleBufferRef audioSampleBufferRef = [readerAudioTrackOutput copyNextSampleBuffer];
    if (!audioSampleBufferRef) {
        return;
    }

    if (self.audioReaderCallback) {
        self.audioReaderCallback(audioSampleBufferRef);
    }

    // Balance the +1 reference returned by copyNextSampleBuffer.
    CFRelease(audioSampleBufferRef);
}

@end
```
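When playAtActualSpeed is YES, readNextVideoFrameFromOutput: sleeps for the media time between two frames minus the wall-clock time that has already passed. As a result, frames are delivered no faster than real time. The plain C sketch below reproduces that arithmetic. A hypothetical RationalTime stands in for CMTime; the real CMTime also carries flags and an epoch, and CMTimeSubtract handles mismatched timescales. pacing_sleep_us returns the number of microseconds the reader passes to usleep.

```c
#include <assert.h>
#include <stdint.h>

/* Minimal stand-in for CMTime: value / timescale seconds (no flags, no epoch). */
typedef struct {
    int64_t value;
    int32_t timescale;
} RationalTime;

double rational_seconds(RationalTime t) {
    assert(t.timescale > 0);
    return (double)t.value / (double)t.timescale;
}

/* Microseconds to sleep so that frames are not delivered faster than their timestamps:
   media time elapsed since the previous frame minus wall-clock time already elapsed.
   Returns 0 when processing is already behind schedule. */
int64_t pacing_sleep_us(RationalTime previous, RationalTime current, double wallElapsedSeconds) {
    double frameDelta = rational_seconds(current) - rational_seconds(previous);
    double wait = frameDelta - wallElapsedSeconds;
    return wait > 0.0 ? (int64_t)(wait * 1000000.0) : 0;
}
```

Take 30 fps media stored with timescale 600: consecutive frames are 20 units apart (1/30 s). If 10 ms of work has already elapsed, the sketch sleeps about 23.3 ms.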

Writing assets

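1. Create a QMMediaWriter class for saving media files.

2. Create an AVAssetWriter from the given output URL and file type.

3. Create the audio and video AVAssetWriterInput objects, encoding with AVVideoCodecH264 and kAudioFormatMPEG4AAC respectively.

4. While the AVAssetWriter status is AVAssetWriterStatusWriting, append the audio and video data. When an AVAssetWriterInput's readyForMoreMediaData is NO, wait; in real-time mode the code drops the buffer instead.

5. When finished, call the AVAssetWriter method - (void)finishWritingWithCompletionHandler:.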
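Step 4 is a small decision made for every buffer. The sketch below spells it out as a pure C function:

- Append when the writer is writing and the input is ready.
- Otherwise, drop the buffer in real-time mode or wait in offline mode.

Two details are deliberate design choices rather than a transcription of the article's code. The writer status is checked first, so a failed writer can never leave the caller waiting. Offline waiting is also capped at maxWaits attempts. AppendAction and next_append_action are hypothetical names.

```c
#include <assert.h>
#include <stdbool.h>

typedef enum { ActionAppend, ActionWait, ActionDrop } AppendAction;

/* Decide what to do with the next buffer. inputReady mirrors readyForMoreMediaData and
   expectsRealTime mirrors expectsMediaDataInRealTime on the writer input. */
AppendAction next_append_action(bool writerIsWriting, bool inputReady, bool expectsRealTime,
                                int waitsSoFar, int maxWaits) {
    assert(waitsSoFar >= 0 && maxWaits >= 0);
    if (!writerIsWriting) return ActionDrop;      /* failed, finished, or never started */
    if (inputReady) return ActionAppend;          /* the input can take this buffer now */
    if (expectsRealTime) return ActionDrop;       /* live source: never block the producer */
    return waitsSoFar < maxWaits ? ActionWait : ActionDrop;  /* offline: wait, but not forever */
}
```

A caller would loop, sleeping briefly on ActionWait and re-evaluating with waitsSoFar + 1.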

```objc
//
//  QMMediaWriter.h
//  AVFoundation
//
//  Created by mac on 17/8/28.
//  Copyright © 2017 Qinmin. All rights reserved.
//

#import <Foundation/Foundation.h>
#import <AVFoundation/AVFoundation.h>

@interface QMMediaWriter : NSObject

// Uses AVFileTypeQuickTimeMovie as the container type.
- (instancetype)initWithOutputURL:(NSURL *)URL size:(CGSize)newSize;
- (instancetype)initWithOutputURL:(NSURL *)URL size:(CGSize)newSize fileType:(NSString *)newFileType;

- (void)processVideoBuffer:(CMSampleBufferRef)videoBuffer;
- (void)processAudioBuffer:(CMSampleBufferRef)audioBuffer;

- (void)finishWriting;

@end
```

```objc
//
//  QMMediaWriter.m
//  AVFoundation
//
//  Created by mac on 17/8/28.
//  Copyright © 2017 Qinmin. All rights reserved.
//

#import "QMMediaWriter.h"
#import <AVFoundation/AVFoundation.h>

@interface QMMediaWriter ()
@property (nonatomic, strong) AVAssetWriter *assetWriter;
@property (nonatomic, strong) AVAssetWriterInput *assetWriterAudioInput;
@property (nonatomic, strong) AVAssetWriterInput *assetWriterVideoInput;
@property (nonatomic, strong) AVAssetWriterInputPixelBufferAdaptor *assetWriterPixelBufferInput;
@property (nonatomic, assign) BOOL encodingLiveVideo;
@property (nonatomic, assign) CGSize videoSize;
@property (nonatomic, assign) CMTime startTime;
@end

@implementation QMMediaWriter

- (instancetype)initWithOutputURL:(NSURL *)URL size:(CGSize)newSize
{
    return [self initWithOutputURL:URL size:newSize fileType:AVFileTypeQuickTimeMovie];
}

- (instancetype)initWithOutputURL:(NSURL *)URL size:(CGSize)newSize fileType:(NSString *)newFileType
{
    if (self = [super init]) {
        _videoSize = newSize;
        _startTime = kCMTimeInvalid;
        // YES: the inputs expect real-time data, and this class drops a buffer
        // instead of waiting when an input is not ready.
        _encodingLiveVideo = YES;
        if (![self buildAssetWriterWithURL:URL fileType:newFileType]) {
            return nil;
        }
        [self buildVideoWriter];
        [self buildAudioWriter];
    }
    return self;
}

#pragma mark - AVAssetWriter

- (BOOL)buildAssetWriterWithURL:(NSURL *)url fileType:(NSString *)fileType
{
    NSError *error = nil;
    self.assetWriter = [AVAssetWriter assetWriterWithURL:url fileType:fileType error:&error];
    if (!self.assetWriter) {
        // Report the failure to the caller instead of terminating the app with exit(0).
        NSLog(@"%@", [error localizedDescription]);
        return NO;
    }
    // Write a movie fragment every second, so an interrupted file stays playable up to the last fragment.
    self.assetWriter.movieFragmentInterval = CMTimeMakeWithSeconds(1.0, 1000);
    return YES;
}

- (void)buildVideoWriter
{
    // H.264 at the requested size (iOS 11 renames the constant to AVVideoCodecTypeH264).
    NSDictionary *dict = @{
        AVVideoWidthKey  : @(_videoSize.width),
        AVVideoHeightKey : @(_videoSize.height),
        AVVideoCodecKey  : AVVideoCodecH264
    };
    self.assetWriterVideoInput = [AVAssetWriterInput assetWriterInputWithMediaType:AVMediaTypeVideo outputSettings:dict];
    self.assetWriterVideoInput.expectsMediaDataInRealTime = _encodingLiveVideo;

    // The adaptor takes BGRA pixel buffers, matching the reader's video output format.
    NSDictionary *attributesDictionary = @{
        (id)kCVPixelBufferPixelFormatTypeKey : @(kCVPixelFormatType_32BGRA),
        (id)kCVPixelBufferWidthKey           : @(_videoSize.width),
        (id)kCVPixelBufferHeightKey          : @(_videoSize.height)
    };
    self.assetWriterPixelBufferInput = [AVAssetWriterInputPixelBufferAdaptor assetWriterInputPixelBufferAdaptorWithAssetWriterInput:self.assetWriterVideoInput sourcePixelBufferAttributes:attributesDictionary];

    if ([self.assetWriter canAddInput:self.assetWriterVideoInput]) {
        [self.assetWriter addInput:self.assetWriterVideoInput];
    }
}

- (void)buildAudioWriter
{
    // AAC, stereo, 48 kHz.
    NSDictionary *audioOutputSettings = @{
        AVFormatIDKey         : @(kAudioFormatMPEG4AAC),
        AVNumberOfChannelsKey : @(2),
        AVSampleRateKey       : @(48000)
    };
    self.assetWriterAudioInput = [AVAssetWriterInput assetWriterInputWithMediaType:AVMediaTypeAudio outputSettings:audioOutputSettings];
    self.assetWriterAudioInput.expectsMediaDataInRealTime = _encodingLiveVideo;
    if ([self.assetWriter canAddInput:self.assetWriterAudioInput]) {
        [self.assetWriter addInput:self.assetWriterAudioInput];
    }
}

#pragma mark - Session

// Starts writing, and the session, at the timestamp of the first buffer (audio or video).
// Returns NO if the writer could not be put into the Writing state.
- (BOOL)startSessionIfNeededAtTime:(CMTime)time
{
    if (CMTIME_IS_VALID(_startTime)) {
        return YES;
    }
    if (self.assetWriter.status == AVAssetWriterStatusUnknown && ![self.assetWriter startWriting]) {
        NSLog(@"startWriting failed: %@", self.assetWriter.error);
        return NO;
    }
    if (self.assetWriter.status != AVAssetWriterStatusWriting) {
        return NO;
    }
    [self.assetWriter startSessionAtSourceTime:time];
    _startTime = time;
    return YES;
}

#pragma mark - VideoBuffer

- (void)processVideoBuffer:(CMSampleBufferRef)videoBuffer
{
    if (!CMSampleBufferIsValid(videoBuffer)) {
        return;
    }
    CFRetain(videoBuffer);
    CMTime currentSampleTime = CMSampleBufferGetOutputPresentationTimeStamp(videoBuffer);

    if (![self startSessionIfNeededAtTime:currentSampleTime]) {
        CFRelease(videoBuffer);
        return;
    }

    // Offline mode only: wait until the input accepts more data. A GCD worker thread's
    // run loop usually has no input sources, so -runUntilDate: would return at once; sleep instead.
    while (!self.assetWriterVideoInput.readyForMoreMediaData && !_encodingLiveVideo &&
           self.assetWriter.status == AVAssetWriterStatusWriting) {
        [NSThread sleepForTimeInterval:0.01];
    }

    if (self.assetWriter.status != AVAssetWriterStatusWriting) {
        NSLog(@"write frame fail: %@", self.assetWriter.error);
    } else if (!self.assetWriterVideoInput.readyForMoreMediaData) {
        NSLog(@"had to drop a video frame");
    } else {
        CVImageBufferRef imageBuffer = CMSampleBufferGetImageBuffer(videoBuffer);
        if (![self.assetWriterPixelBufferInput appendPixelBuffer:imageBuffer withPresentationTime:currentSampleTime]) {
            NSLog(@"appending pixel fail: %@", self.assetWriter.error);
        }
    }

    CFRelease(videoBuffer);
}

#pragma mark - AudioBuffer

- (void)processAudioBuffer:(CMSampleBufferRef)audioBuffer
{
    if (!CMSampleBufferIsValid(audioBuffer)) {
        return;
    }
    CFRetain(audioBuffer);
    CMTime currentSampleTime = CMSampleBufferGetOutputPresentationTimeStamp(audioBuffer);

    if (![self startSessionIfNeededAtTime:currentSampleTime]) {
        CFRelease(audioBuffer);
        return;
    }

    // Offline mode only: wait until the audio input accepts more data.
    while (!self.assetWriterAudioInput.readyForMoreMediaData && !_encodingLiveVideo &&
           self.assetWriter.status == AVAssetWriterStatusWriting) {
        [NSThread sleepForTimeInterval:0.01];
    }

    if (self.assetWriter.status != AVAssetWriterStatusWriting) {
        NSLog(@"write audio frame fail: %@", self.assetWriter.error);
    } else if (!self.assetWriterAudioInput.readyForMoreMediaData) {
        NSLog(@"had to drop an audio frame");
    } else if (![self.assetWriterAudioInput appendSampleBuffer:audioBuffer]) {
        NSLog(@"appending audio buffer fail: %@", self.assetWriter.error);
    }

    CFRelease(audioBuffer);
}

#pragma mark - Finish

- (void)finishWriting
{
    // Only a writer that is still writing is finalized; a failed one is just logged.
    if (self.assetWriter.status != AVAssetWriterStatusWriting) {
        if (self.assetWriter.status == AVAssetWriterStatusFailed) {
            NSLog(@"writer failed: %@", self.assetWriter.error);
        }
        return;
    }

    [self.assetWriterVideoInput markAsFinished];
    [self.assetWriterAudioInput markAsFinished];
    [self.assetWriter finishWritingWithCompletionHandler:^{
        // Called asynchronously once the file is finalized; check assetWriter.status here if needed.
    }];
}

@end
```
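buildAudioWriter asks for kAudioFormatMPEG4AAC at 48000 Hz. AAC-LC encodes 1024 PCM frames per packet, so each packet spans 1024 / 48000 s ≈ 21.3 ms. Using the sample rate as the CMTime timescale keeps every packet timestamp an exact integer. The C sketch below does that bookkeeping. It is illustrative only and ignores the encoder delay (priming) that AAC encoders add. aac_packet_pts_value and aac_packets_for_frames are hypothetical names.

```c
#include <assert.h>
#include <stdint.h>

/* AAC-LC packs 1024 PCM frames into each encoded packet. */
enum { kAACFramesPerPacket = 1024 };

/* Start time of packet n as an integer value on a timescale equal to the sample rate,
   so the timestamp stays exact (no floating-point rounding). */
int64_t aac_packet_pts_value(int64_t packetIndex) {
    assert(packetIndex >= 0);
    return packetIndex * kAACFramesPerPacket;
}

/* Packets needed to cover totalFrames PCM frames; the last packet may be only partly filled. */
int64_t aac_packets_for_frames(int64_t totalFrames) {
    assert(totalFrames >= 0);
    return (totalFrames + kAACFramesPerPacket - 1) / kAACFramesPerPacket;
}
```

For example, packet 3 starts at 3072/48000 s = 64 ms. One second of 48 kHz audio needs 47 packets, the last one partly filled.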

Using QMMediaReader and QMMediaWriter

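```objc
// Assumes the host class declares mediaReader / mediaWriter properties backed by
// _mediaReader / _mediaWriter. kDocumentPath() is not defined in this article; it is
// assumed to return a path inside the app's Documents directory.
NSURL *outputURL = [NSURL fileURLWithPath:kDocumentPath(@"1.mp4")];
// AVAssetWriter fails if a file already exists at the output URL, so remove leftovers first.
[[NSFileManager defaultManager] removeItemAtURL:outputURL error:nil];

_mediaWriter = [[QMMediaWriter alloc] initWithOutputURL:outputURL size:CGSizeMake(640, 360)];
_mediaReader = [[QMMediaReader alloc] initWithURL:[[NSBundle mainBundle] URLForResource:@"1" withExtension:@"mp4"]];

__weak typeof(self) weakSelf = self;

// Each decoded buffer is handed straight to the writer on the reader's background queue.
[_mediaReader setVideoReaderCallback:^(CMSampleBufferRef videoBuffer) {
    [weakSelf.mediaWriter processVideoBuffer:videoBuffer];
}];

[_mediaReader setAudioReaderCallback:^(CMSampleBufferRef audioBuffer) {
    [weakSelf.mediaWriter processAudioBuffer:audioBuffer];
}];

[_mediaReader setReaderCompleteCallback:^{
    NSLog(@"==finish===");
    [weakSelf.mediaWriter finishWriting];
}];

[_mediaReader startProcessing];
```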
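The wiring above follows a producer/consumer pattern. The reader owns three callbacks, and the callback blocks capture the host object only weakly (weakSelf), so the reader does not keep it alive. In plain C the same shape is a struct of function pointers plus a non-owning context pointer, as in the sketch below. ReaderCallbacks, WriterState and simulate_reader are hypothetical. The simulated reader emits indices instead of sample buffers, then fires the completion callback exactly once; that is the point where the article calls finishWriting.

```c
#include <assert.h>
#include <stddef.h>

/* C analogue of QMMediaReader's three block properties. ctx is not owned by the reader. */
typedef struct {
    void (*onVideo)(void *ctx, int frameIndex);
    void (*onAudio)(void *ctx, int packetIndex);
    void (*onComplete)(void *ctx);
    void *ctx;
} ReaderCallbacks;

/* Consumer state standing in for QMMediaWriter. */
typedef struct {
    int videoFrames;
    int audioPackets;
    int finished;
} WriterState;

void writer_on_video(void *ctx, int i) { (void)i; ((WriterState *)ctx)->videoFrames++; }
void writer_on_audio(void *ctx, int i) { (void)i; ((WriterState *)ctx)->audioPackets++; }
void writer_on_complete(void *ctx) { ((WriterState *)ctx)->finished = 1; }

/* Simulated reader: alternate video and audio callbacks, then signal completion once. */
void simulate_reader(const ReaderCallbacks *cb, int videoCount, int audioCount) {
    assert(cb != NULL);
    int n = videoCount > audioCount ? videoCount : audioCount;
    for (int i = 0; i < n; i++) {
        if (i < videoCount && cb->onVideo) cb->onVideo(cb->ctx, i);
        if (i < audioCount && cb->onAudio) cb->onAudio(cb->ctx, i);
    }
    if (cb->onComplete) cb->onComplete(cb->ctx);
}
```

Because the context pointer is not owned, the caller must keep the WriterState alive until the completion callback has run. This is the same obligation the host object carries in the Objective-C version.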

References

AVFoundation开发秘籍:实践掌握iOS & OSX应用的视听处理技术 (Chinese edition of Learning AV Foundation by Bob McCune)

Source code: AVFoundation development, https://github.com/QinminiOS/AVFoundation