assignment2下,在cnn之前还有fully connected neural nets,batch norm和dropout的assignment,dropout实现起来还是挺简单的,batch norm的话在上一章我参考了别人的解法(一开始自己想错了,后来还没来得及自己重新推导一遍,之后希望可以补上这个过程),fcn_net的实现主要依靠forward:存储cache并逐层计算layer的输出,backward:根据cache和dout来计算当前层的参数的梯度下降增量(dout是上一层传递下来的,cache是当前层的,注意cache在forward过程中存下的是当前layer的输入量和其他有关计算的,比如第1层(输入层)的cache就是输入x和第一层的weight和bias,如果是一些特殊的layer,比如dropout层需要保存当前层的mask【 决定哪些输入feature在此置0, 即np.random.rand(x.shape[0], x.shape[1]) 】)。

接下来是重点...实现一个CNN,毕竟fully connected nets只是一个testbed。对于实现cnn,我会一步一步记录自己的理解和做法。

1. Conv Layer Naive Forward

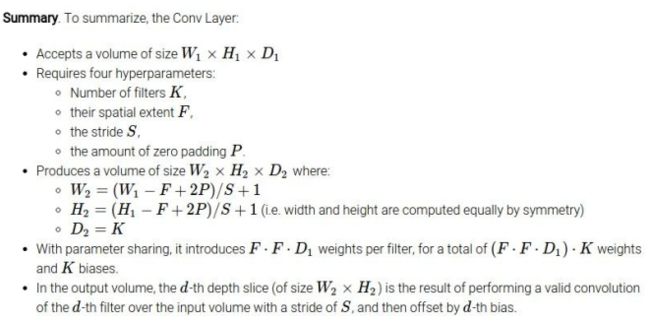

卷积层的pad,输出的dimension计算如下:

注意kernel是对输入层的所有层都进行卷积,所以如果只有一个filter那么输出只有1层,如果有n个filter,那么输出就有n层了。 一个filter中用于卷积的kernel数取决于上一层的输入channel的个数,每个channel对应一个kernel,代码:

def conv_forward_naive(x, w, b, conv_param):

"""

A naive implementation of the forward pass for a convolutional layer.

The input consists of N data points, each with C channels, height H and width

W. We convolve each input with F different filters, where each filter spans

all C channels and has height HH and width HH.

Input:

- x: Input data of shape (N, C, H, W)

- w: Filter weights of shape (F, C, HH, WW)

- b: Biases, of shape (F,)

- conv_param: A dictionary with the following keys:

- 'stride': The number of pixels between adjacent receptive fields in the

horizontal and vertical directions.

- 'pad': The number of pixels that will be used to zero-pad the input.

Returns a tuple of:

- out: Output data, of shape (N, F, H', W') where H' and W' are given by

H' = 1 + (H + 2 * pad - HH) / stride

W' = 1 + (W + 2 * pad - WW) / stride

- cache: (x, w, b, conv_param)

"""

out = None

#############################################################################

# TODO: Implement the convolutional forward pass. #

# Hint: you can use the function np.pad for padding. #

#############################################################################

stride = conv_param['stride']

pad = conv_param['pad']

print 'pad:', pad

# print 'stride:', stride, 'pad:', pad

# print 'x', x.shape, 'w', w.shape

x_pad = np.zeros((x.shape[0], x.shape[1], x.shape[2]+pad*2, x.shape[3]+pad*2))

# pad 0 to image

for i in range(x.shape[0]):

for j in range(x.shape[1]):

x_pad[i,j,:,:]=np.pad(x[i,j,:,:], pad, 'constant', constant_values=0)

H_out = (x.shape[2]+2*pad-w.shape[2])/stride+1

W_out = (x.shape[3]+2*pad-w.shape[3])/stride+1

out = np.zeros((x.shape[0], w.shape[0], H_out, W_out))

# convolution

for i in range(H_out):

for j in range(W_out):

for k in range(w.shape[0]):

out[:, k, i, j] = np.sum(w[k,:,:,:]*x_pad[:, :, i*stride:i*stride+w.shape[2], j*stride:j*stride+w.shape[3]], axis=(1,2,3))+b[k]

#############################################################################

# END OF YOUR CODE #

#############################################################################

cache = (x, w, b, conv_param)

return out, cache

2. Conv Layer Naive Backward

【推荐阅读】:

http://www.jefkine.com/general/2016/09/05/backpropagation-in-convolutional-neural-networks/

个人总结的感性认识:

- dx一定和x的shape相同,dw一定和w的shape相同,db一定和b的shape相同,dout和out的shape一定相同。只要考虑下它们的意义就可以知道。dL/dx的含义(也就是代码中的dx)是x的改变会多大程度地改变L,而x的改变也只能是在自己的shape下。

- 在计算的时候先将x进行pad。我们知道在卷积的过程中,每一次卷积运算,对应于上图的2×2和2×2矩阵的作用,其实就是一个相乘累加过程。对于out层的每一个元素,都是由input layer和weight矩阵对应的相乘累加得到的。以dx为例,input矩阵x中的每个元素可能会参与1到几次不等的运算,与weight相乘将结果贡献到out layer中,比如x(0,0)只参与了一次,那么它的dx(0,0)=weight(0,0)×dout(...),而x(0,1)参与了两次,分别贡献到out的不同位置,那么dx(0,1)=weight(0,0)×dout(...)+weight(0,1)×dout(...),以此类推。

代码:

def conv_backward_naive(dout, cache):

"""

A naive implementation of the backward pass for a convolutional layer.

Inputs:

- dout: Upstream derivatives.

- cache: A tuple of (x, w, b, conv_param) as in conv_forward_naive

Returns a tuple of:

- dx: Gradient with respect to x

- dw: Gradient with respect to w

- db: Gradient with respect to b

"""

dx, dw, db = None, None, None

#############################################################################

# TODO: Implement the convolutional backward pass. #

#############################################################################

x = cache[0]

w = cache[1]

b = cache[2]

stride = cache[3]['stride']

pad = cache[3]['pad']

x_pad=np.pad(x, [(0,0),(0,0),(pad,pad),(pad,pad)], 'constant', constant_values=0)

dx_pad = np.zeros((x.shape[0], x.shape[1], x.shape[2]+2*pad, x.shape[3]+2*pad))

dw = np.zeros(w.shape)

db = np.zeros(b.shape)

# k,即第一维是样本个数;f,即第二维是filter个数;xi和xj是该层输出map的长宽。

# 以下代码根据上面的分析就很好理解了

for k in range(dout.shape[0]):

for f in range(dout.shape[1]):

for xi in range(dout.shape[2]):

for xj in range(dout.shape[3]):

dx_pad[k,:,xi*stride:xi*stride+w.shape[2],xj*stride:xj*stride+w.shape[3]] += w[f,:]*dout[k,f,xi,xj]

dw[f] += x_pad[k,:,xi*stride:xi*stride+w.shape[2],xj*stride:xj*stride+w.shape[3]]*dout[k,f,xi,xj]

dx = dx_pad[:,:,pad:pad+x.shape[2],pad:pad+x.shape[3]]

db = np.sum(dout, axis=(0,2,3))

#############################################################################

# END OF YOUR CODE #

#############################################################################

return dx, dw, db

3. Max Pooling Naive Forward

def max_pool_forward_naive(x, pool_param):

"""

A naive implementation of the forward pass for a max pooling layer.

Inputs:

- x: Input data, of shape (N, C, H, W)

- pool_param: dictionary with the following keys:

- 'pool_height': The height of each pooling region

- 'pool_width': The width of each pooling region

- 'stride': The distance between adjacent pooling regions

Returns a tuple of:

- out: Output data

- cache: (x, pool_param)

"""

out = None

#############################################################################

# TODO: Implement the max pooling forward pass #

#############################################################################

pool_height = pool_param['pool_height']

pool_width = pool_param['pool_width']

stride = pool_param['stride']

out = np.zeros(( x.shape[0], x.shape[1],

(x.shape[2]-pool_height)//stride+1,

(x.shape[3]-pool_width)//stride+1 ))

for n in range(x.shape[0]):

for c in range(x.shape[1]):

for i in range(out.shape[2]):

for j in range(out.shape[3]):

out[n,c,i,j] = np.max( x[n,c,i*stride:i*stride+pool_height, j*stride:j*stride+pool_width] )

#############################################################################

# END OF YOUR CODE #

#############################################################################

cache = (x, pool_param)

return out, cache

4. Max Pooling Naive Backward

def max_pool_backward_naive(dout, cache):

"""

A naive implementation of the backward pass for a max pooling layer.

Inputs:

- dout: Upstream derivatives

- cache: A tuple of (x, pool_param) as in the forward pass.

Returns:

- dx: Gradient with respect to x

"""

dx = None

#############################################################################

# TODO: Implement the max pooling backward pass #

#############################################################################

x = cache[0]

dx = np.zeros(x.shape)

pool_param = cache[1]

stride = pool_param['stride']

pool_height = pool_param['pool_height']

pool_width = pool_param['pool_width']

for n in range(x.shape[0]):

for c in range(x.shape[1]):

for i in range(dout.shape[2]):

for j in range(dout.shape[3]):

tmp = x[n,c,i*stride:i*stride+pool_height,j*stride:j*stride+pool_width]

binary = tmp==np.max(tmp)

dx[n,c,i*stride:i*stride+pool_height,j*stride:j*stride+pool_width] = binary*dout[n,c,i,j]

#############################################################################

# END OF YOUR CODE #

#############################################################################

return dx

5. 训练一个(Conv + Relu + Pooling) + (Affine + Relu) + (Affine) + Softmax 三层网络

class ThreeLayerConvNet(object):

"""

A three-layer convolutional network with the following architecture:

conv - relu - 2x2 max pool - affine - relu - affine - softmax

The network operates on minibatches of data that have shape (N, C, H, W)

consisting of N images, each with height H and width W and with C input

channels.

"""

def __init__(self, input_dim=(3, 32, 32), num_filters=32, filter_size=7,

hidden_dim=100, num_classes=10, weight_scale=1e-3, reg=0.0,

dtype=np.float32):

"""

Initialize a new network.

Inputs:

- input_dim: Tuple (C, H, W) giving size of input data

- num_filters: Number of filters to use in the convolutional layer

- filter_size: Size of filters to use in the convolutional layer

- hidden_dim: Number of units to use in the fully-connected hidden layer

- num_classes: Number of scores to produce from the final affine layer.

- weight_scale: Scalar giving standard deviation for random initialization

of weights.

- reg: Scalar giving L2 regularization strength

- dtype: numpy datatype to use for computation.

"""

self.params = {}

self.reg = reg

self.dtype = dtype

############################################################################

# TODO: Initialize weights and biases for the three-layer convolutional #

# network. Weights should be initialized from a Gaussian with standard #

# deviation equal to weight_scale; biases should be initialized to zero. #

# All weights and biases should be stored in the dictionary self.params. #

# Store weights and biases for the convolutional layer using the keys 'W1' #

# and 'b1'; use keys 'W2' and 'b2' for the weights and biases of the #

# hidden affine layer, and keys 'W3' and 'b3' for the weights and biases #

# of the output affine layer. #

############################################################################

self.params['W1'] = np.random.normal(0, weight_scale, (num_filters, input_dim[0], filter_size, filter_size))

self.params['b1'] = np.zeros(num_filters)

W2_rol_size = num_filters * input_dim[1]/2 * input_dim[2]/2

self.params['W2'] = np.random.normal(0, weight_scale, (W2_rol_size, hidden_dim))

self.params['b2'] = np.zeros(hidden_dim)

self.params['W3'] = np.random.normal(0, weight_scale, (hidden_dim, num_classes))

self.params['b3'] = np.zeros(num_classes)

############################################################################

# END OF YOUR CODE #

############################################################################

for k, v in self.params.iteritems():

self.params[k] = v.astype(dtype)

def loss(self, X, y=None):

"""

Evaluate loss and gradient for the three-layer convolutional network.

Input / output: Same API as TwoLayerNet in fc_net.py.

"""

W1, b1 = self.params['W1'], self.params['b1']

W2, b2 = self.params['W2'], self.params['b2']

W3, b3 = self.params['W3'], self.params['b3']

# pass conv_param to the forward pass for the convolutional layer

filter_size = W1.shape[2]

conv_param = {'stride': 1, 'pad': (filter_size - 1) / 2}

# pass pool_param to the forward pass for the max-pooling layer

pool_param = {'pool_height': 2, 'pool_width': 2, 'stride': 2}

scores = None

loss, grads = 0, {}

############################################################################

# TODO: Implement the forward pass for the three-layer convolutional net, #

# computing the class scores for X and storing them in the scores #

# variable. #

############################################################################

out1, cache1 = conv_relu_pool_forward(X, W1, b1, conv_param, pool_param)

out2, cache2 = affine_relu_forward(out1, W2, b2)

out3, cache3 = affine_forward(out2, W3, b3)

score = out3

loss, dscore = softmax_loss(out3, y)

loss += np.sum(0.5*self.reg*np.sum(W**2) for W in [W1, W2, W3])

############################################################################

# END OF YOUR CODE #

############################################################################

if y is None:

return scores

############################################################################

# TODO: Implement the backward pass for the three-layer convolutional net, #

# storing the loss and gradients in the loss and grads variables. Compute #

# data loss using softmax, and make sure that grads[k] holds the gradients #

# for self.params[k]. Don't forget to add L2 regularization! #

############################################################################

dout3, grads['W3'], grads['b3'] = affine_backward(dscore, cache3)

dout2, grads['W2'], grads['b2'] = affine_relu_backward(dout3, cache2)

dout1, grads['W1'], grads['b1'] = conv_relu_pool_backward(dout2, cache1)

grads['W3'] += self.reg*W3

grads['W2'] += self.reg*W2

grads['W1'] += self.reg*W1

############################################################################

# END OF YOUR CODE #

############################################################################

return loss, grads

6. Spatial Batch Normalization

We already saw that batch normalization is a very useful technique for training deep fully-connected networks. Batch normalization can also be used for convolutional networks, but we need to tweak it a bit; the modification will be called "spatial batch normalization."

Normally batch-normalization accepts inputs of shape (N, D) and produces outputs of shape (N, D), where we normalize across the minibatch dimension N. For data coming from convolutional layers, batch normalization needs to accept inputs of shape (N, C, H, W) and produce outputs of shape (N, C, H, W) where the N dimension gives the minibatch size and the (H, W) dimensions give the spatial size of the feature map.

If the feature map was produced using convolutions, then we expect the statistics of each feature channel to be relatively consistent both between different images and different locations within the same image. Therefore spatial batch normalization computes a mean and variance for each of the C feature channels by computing statistics over both the minibatch dimension N and the spatial dimensions H and W.

CNN和全连接的NN的BN方法不一样的地方在于,Conv layer使用的Spatial BN的思想是按照Channel(RGB通道)来进行归一化,保证不同图和同一图的不同位置在一个通道的分布上是一致的、归一化的。这部分我没有自己去写,直接给出github上martinkersner的代码:

def spatial_batchnorm_forward(x, gamma, beta, bn_param):

"""

Computes the forward pass for spatial batch normalization.

Inputs:

- x: Input data of shape (N, C, H, W)

- gamma: Scale parameter, of shape (C,)

- beta: Shift parameter, of shape (C,)

- bn_param: Dictionary with the following keys:

- mode: 'train' or 'test'; required

- eps: Constant for numeric stability

- momentum: Constant for running mean / variance. momentum=0 means that old information is discarded completely at every time step, while momentum=1 means that new information is never incorporated. The default of momentum=0.9 should work well in most situations.

- running_mean: Array of shape (D,) giving running mean of features

- running_var Array of shape (D,) giving running variance of features

Returns a tuple of:

- out: Output data, of shape (N, C, H, W)

- cache: Values needed for the backward pass

"""

out, cache = None, None

##############################################################################

# Implement the forward pass for spatial batch normalization.

# HINT: You can implement spatial batch normalization using the vanilla #

# version of batch normalization defined above. Your implementation should #

# be very short; ours is less than five lines.

##############################################################################

N, C, H, W = x.shape

x_reshaped = x.transpose(0,2,3,1).reshape(N*H*W, C)

out_tmp, cache = batchnorm_forward(x_reshaped, gamma, beta, bn_param)

out = out_tmp.reshape(N, H, W, C).transpose(0, 3, 1, 2)

return out, cache

def spatial_batchnorm_backward(dout, cache):

"""

Computes the backward pass for spatial batch normalization.

Inputs:

- dout: Upstream derivatives, of shape (N, C, H, W)

- cache: Values from the forward pass

Returns a tuple of:

- dx: Gradient with respect to inputs, of shape (N, C, H, W)

- dgamma: Gradient with respect to scale parameter, of shape (C,)

- dbeta: Gradient with respect to shift parameter, of shape (C,)

"""

dx, dgamma, dbeta = None, None, None

#############################################################################

# Implement the backward pass for spatial batch normalization.

## HINT: You can implement spatial batch normalization using the vanilla

## version of batch normalization defined above. Your implementation should

## be very short; ours is less than five lines.

##############################################################################

N, C, H, W = dout.shape

dout_reshaped = dout.transpose(0,2,3,1).reshape(N*H*W, C)

dx_tmp, dgamma, dbeta = batchnorm_backward(dout_reshaped, cache)

dx = dx_tmp.reshape(N, H, W, C).transpose(0, 3, 1, 2)

return dx, dgamma, dbeta

【写在最后的tip】Tips for training:

For each network architecture that you try, you should tune the learning rate and regularization strength. When doing this there are a couple important things to keep in mind:

- If the parameters are working well, you should see improvement within a few hundred iterations

- Remember the course-to-fine approach for hyperparameter tuning: start by testing a large range of hyperparameters for just a few training iterations to find the combinations of parameters that are working at all.

- Once you have found some sets of parameters that seem to work, search more finely around these parameters. You may need to train for more epochs.

另外对于cnn的梯度反向传播来说,可以看看更高效的做法,而不是用程序中的for loop:

http://www.cnblogs.com/pinard/p/6494810.html