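1. Introduction

**FFMPEG** is widely used as the long-established open-source project for audio/video encoding and decoding. Desktop players such as **VLC** and Baofeng Player, as well as the audio/video apps on mobile devices, nearly all rely on some **FFMPEG** feature, such as **decode, encode, filter, mux**. This article analyzes how **FFMPEG** is integrated into and used by the **Android libstagefright** framework.

2. The details

This article follows how FFMPEG is extended into and used from the Android source tree. It continues from the previous article, "Analysis of Extending and Using FFMPEG in Android libstagefright (Part 1)".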

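②. How is the Codec component extended?

To distinguish this from the demuxer extension covered earlier, the Codec extension of FFMPEG in the Android multimedia framework is explained below using the Hisi Android SDK source:

To make this easier to follow, I wrote a demo that encodes or decodes with the Codec component:

/** Create a codec object from the audio/video MIME type, for decoding or encoding */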

state->mCodec = MediaCodec::CreateByType(

looper, mime.c_str(), false /* encoder */);

/** Configure the codec format, for decoding or encoding */

err = state->mCodec->configure(

codec_format, NULL/*surface*/,

NULL /* crypto */,

0 /* flags */);

CHECK_EQ(err, (status_t)OK);

/** Start the codec component */

CHECK_EQ((status_t)OK, state->mCodec->start());

/** Fetch the codec's input and output buffers */

CHECK_EQ((status_t)OK, state->mCodec->getInputBuffers(&state->mCodecInBuffers));

CHECK_EQ((status_t)OK, state->mCodec->getOutputBuffers(&state->mCodecOutBuffers));

/** The following is an excerpt, shown only to aid understanding */

// Step 1: obtain a free InputBuffer

status_t err = state->mCodec->dequeueInputBuffer(&index, kTimeout);

const sp<ABuffer> &inBuffer = state->mCodecInBuffers.itemAt(index);

// Step 2: fill the InputBuffer with the data to encode or decode

err = extractor->readSampleData(inBuffer);

// Step 3: push the filled InputBuffer onto the codec's input queue

err = state->mCodec->queueInputBuffer(

index,

inBuffer->offset(),

inBuffer->size(),

timeUs,

bufferFlags);

// Step 4: when processed data is needed, ask the component to hand over an OutputBuffer that is ready

err = state->mCodec->dequeueOutputBuffer(

&index, &offset, &size, &presentationTimeUs, &flags,

kTimeout);

// Step 5: outBuffer is the buffer after the codec has run. For decoded video it holds YUV data; for decoded audio it holds PCM data.

const sp<ABuffer> &outBuffer = state->mCodecOutBuffers.itemAt(index);

The code above is a simple demo of creating and driving a codec.
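The five steps in the demo boil down to passing buffer indices back and forth between the client and the codec. The sketch below is a self-contained toy, not the real MediaCodec: `ToyCodec`, `roundTrip`, and the upper-casing "codec" are hypothetical names invented for illustration, and only the buffer-ownership pattern is meant to match.

```cpp
#include <cassert>
#include <cctype>
#include <cstddef>
#include <deque>
#include <string>
#include <vector>

// Hypothetical stand-in for a codec. It models only which side owns each
// buffer slot; the "decoding" is just upper-casing the input bytes.
class ToyCodec {
public:
    explicit ToyCodec(std::size_t slots) : mIn(slots), mOut(slots) {
        for (std::size_t i = 0; i < slots; ++i) {
            mFreeIn.push_back(i);
            mFreeOut.push_back(i);
        }
    }

    // Step 1: lend a free input slot to the client, or return -1 if none.
    int dequeueInputBuffer() { return take(mFreeIn); }

    // Step 2 goes through this accessor: the client fills the slot.
    std::string &inputBuffer(int index) {
        assert(index >= 0 && static_cast<std::size_t>(index) < mIn.size());
        return mIn[index];
    }

    // Step 3: the client returns the filled slot and the codec consumes it.
    bool queueInputBuffer(int index) {
        int out = take(mFreeOut);
        if (out < 0) return false;  // no output slot free; client keeps the input
        mOut[out].clear();
        for (unsigned char c : mIn[index]) {
            mOut[out].push_back(static_cast<char>(std::toupper(c)));
        }
        mReadyOut.push_back(static_cast<std::size_t>(out));
        mFreeIn.push_back(static_cast<std::size_t>(index));
        return true;
    }

    // Step 4: lend a finished output slot to the client, or return -1 if none.
    int dequeueOutputBuffer() { return take(mReadyOut); }

    // Step 5: the client reads the result, then gives the slot back.
    const std::string &outputBuffer(int index) const { return mOut[index]; }
    void releaseOutputBuffer(int index) {
        mFreeOut.push_back(static_cast<std::size_t>(index));
    }

private:
    static int take(std::deque<std::size_t> &queue) {
        if (queue.empty()) return -1;
        int index = static_cast<int>(queue.front());
        queue.pop_front();
        return index;
    }

    std::vector<std::string> mIn, mOut;
    std::deque<std::size_t> mFreeIn, mFreeOut, mReadyOut;
};

// Runs one sample through all five steps and returns the codec's output.
std::string roundTrip(ToyCodec &codec, const std::string &sample) {
    int in = codec.dequeueInputBuffer();
    if (in < 0) return std::string();
    codec.inputBuffer(in) = sample;
    if (!codec.queueInputBuffer(in)) return std::string();
    int out = codec.dequeueOutputBuffer();
    if (out < 0) return std::string();
    std::string result = codec.outputBuffer(out);
    codec.releaseOutputBuffer(out);
    return result;
}
```

Calling `roundTrip` on a `ToyCodec` with two slots pushes one sample through the same dequeue, fill, queue, dequeue sequence as the demo. The real framework does the work asynchronously, which is why its dequeue calls take a timeout.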

/**MediaCodec.cpp*/

// static

sp<MediaCodec> MediaCodec::CreateByType(

const sp<ALooper> &looper, const char *mime, bool encoder) {

sp<MediaCodec> codec = new MediaCodec(looper);

if (codec->init(mime, true /* nameIsType */, encoder) != OK) {

return NULL;

}

return codec;

}

status_t MediaCodec::init(const char *name, bool nameIsType, bool encoder) {

if (mStats) {

bool isVideo = false;

if (nameIsType) {

isVideo = !strncasecmp(name, "video/", 6);

} else {

AString nameString = name;

nameString.trim();

if (nameString.find("video", 0) >= 0) {

isVideo = true;

}

}

if (isVideo) {

ALOGI("enable stats");

} else {

mStats = false;

}

}

// Current video decoders do not return from OMX_FillThisBuffer

// quickly, violating the OpenMAX specs, until that is remedied

// we need to invest in an extra looper to free the main event

// queue.

bool needDedicatedLooper = false;

if (nameIsType && !strncasecmp(name, "video/", 6)) {

needDedicatedLooper = true;

} else {

AString tmp = name;

if (tmp.endsWith(".secure")) {

tmp.erase(tmp.size() - 7, 7);

}

const MediaCodecList *mcl = MediaCodecList::getInstance();

ssize_t codecIdx = mcl->findCodecByName(tmp.c_str());

if (codecIdx >= 0) {

Vector<AString> types;

if (mcl->getSupportedTypes(codecIdx, &types) == OK) {

for (int i = 0; i < types.size(); i++) {

if (types[i].startsWith("video/")) {

needDedicatedLooper = true;

break;

}

}

}

}

}

if (needDedicatedLooper) {

if (mCodecLooper == NULL) {

mCodecLooper = new ALooper;

mCodecLooper->setName("CodecLooper");

mCodecLooper->start(false, false, ANDROID_PRIORITY_AUDIO);

}

mCodecLooper->registerHandler(mCodec);

} else {

mLooper->registerHandler(mCodec);

}

mLooper->registerHandler(this);

// ###### Checkpoint 1 ######

mCodec->setNotificationMessage(new AMessage(kWhatCodecNotify, id()));

sp<AMessage> msg = new AMessage(kWhatInit, id());

msg->setString("name", name);

msg->setInt32("nameIsType", nameIsType);

if (nameIsType) {

msg->setInt32("encoder", encoder);

}

sp<AMessage> response;

return PostAndAwaitResponse(msg, &response);

}

Here we come across MediaCodecList:

// static

MediaCodecList *MediaCodecList::sCodecList;

// static

const MediaCodecList *MediaCodecList::getInstance() {

Mutex::Autolock autoLock(sInitMutex);

if (sCodecList == NULL) {

sCodecList = new MediaCodecList;

}

return sCodecList->initCheck() == OK ? sCodecList : NULL;

}

MediaCodecList::MediaCodecList()

: mInitCheck(NO_INIT) {

FILE *file = fopen("/etc/media_codecs.xml", "r");

if (file == NULL) {

ALOGW("unable to open media codecs configuration xml file.");

return;

}

parseXMLFile(file);

if (mInitCheck == OK) {

// These are currently still used by the video editing suite.

addMediaCodec(true /* encoder */, "AACEncoder", "audio/mp4a-latm");

addMediaCodec(

false /* encoder */, "OMX.google.raw.decoder", "audio/raw");

}

fclose(file);

file = NULL;

}

void MediaCodecList::addMediaCodec(

bool encoder, const char *name, const char *type) {

mCodecInfos.push();

CodecInfo *info = &mCodecInfos.editItemAt(mCodecInfos.size() - 1);

info->mName = name;

info->mIsEncoder = encoder;

info->mTypes = 0;

info->mQuirks = 0;

if (type != NULL) {

addType(type);

}

}

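To populate the list, the media_codecs.xml configuration file has to be parsed.

The simplest entry just pairs a name with a type, in the following form:

<MediaCodec name="OMX.ffmpeg.audio.decoder" type="audio/mpeg-L2" />

This line tells the framework that audio streams encoded as mpeg-L2 are decoded with the OMX.ffmpeg.audio.decoder component.

StartElementHandlerWrapper and EndElementHandlerWrapper are the parser callbacks: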

/**MediaCodecList.cpp*/

void MediaCodecList::parseXMLFile(FILE *file) {

mInitCheck = OK;

mCurrentSection = SECTION_TOPLEVEL;

mDepth = 0;

XML_Parser parser = ::XML_ParserCreate(NULL);

CHECK(parser != NULL);

::XML_SetUserData(parser, this);

::XML_SetElementHandler(

parser, StartElementHandlerWrapper, EndElementHandlerWrapper);

const int BUFF_SIZE = 512;

while (mInitCheck == OK) {

void *buff = ::XML_GetBuffer(parser, BUFF_SIZE);

if (buff == NULL) {

ALOGE("failed to in call to XML_GetBuffer()");

mInitCheck = UNKNOWN_ERROR;

break;

}

int bytes_read = ::fread(buff, 1, BUFF_SIZE, file);

if (bytes_read < 0) {

ALOGE("failed in call to read");

mInitCheck = ERROR_IO;

break;

}

if (::XML_ParseBuffer(parser, bytes_read, bytes_read == 0)

!= XML_STATUS_OK) {

mInitCheck = ERROR_MALFORMED;

break;

}

if (bytes_read == 0) {

break;

}

}

::XML_ParserFree(parser);

if (mInitCheck == OK) {

for (size_t i = mCodecInfos.size(); i-- > 0;) {

CodecInfo *info = &mCodecInfos.editItemAt(i);

if (info->mTypes == 0) {

// No types supported by this component???

ALOGW("Component %s does not support any type of media?",

info->mName.c_str());

mCodecInfos.removeAt(i);

}

}

}

if (mInitCheck != OK) {

mCodecInfos.clear();

mCodecQuirks.clear();

}

}

// static

void MediaCodecList::StartElementHandlerWrapper(

void *me, const char *name, const char **attrs) {

static_cast<MediaCodecList *>(me)->startElementHandler(name, attrs);

}

// static

void MediaCodecList::EndElementHandlerWrapper(void *me, const char *name) {

static_cast<MediaCodecList *>(me)->endElementHandler(name);

}

void MediaCodecList::startElementHandler(

const char *name, const char **attrs) {

if (mInitCheck != OK) {

return;

}

switch (mCurrentSection) {

case SECTION_TOPLEVEL:

{

if (!strcmp(name, "Decoders")) {

mCurrentSection = SECTION_DECODERS;

} else if (!strcmp(name, "Encoders")) {

mCurrentSection = SECTION_ENCODERS;

}

break;

}

case SECTION_DECODERS:

{

if (!strcmp(name, "MediaCodec")) {

mInitCheck =

addMediaCodecFromAttributes(false /* encoder */, attrs);

mCurrentSection = SECTION_DECODER;

}

break;

}

case SECTION_ENCODERS:

{

if (!strcmp(name, "MediaCodec")) {

mInitCheck =

addMediaCodecFromAttributes(true /* encoder */, attrs);

mCurrentSection = SECTION_ENCODER;

}

break;

}

case SECTION_DECODER:

case SECTION_ENCODER:

{

if (!strcmp(name, "Quirk")) {

mInitCheck = addQuirk(attrs);

} else if (!strcmp(name, "Type")) {

mInitCheck = addTypeFromAttributes(attrs);

}

break;

}

default:

break;

}

++mDepth;

}

void MediaCodecList::endElementHandler(const char *name) {

if (mInitCheck != OK) {

return;

}

switch (mCurrentSection) {

case SECTION_DECODERS:

{

if (!strcmp(name, "Decoders")) {

mCurrentSection = SECTION_TOPLEVEL;

}

break;

}

case SECTION_ENCODERS:

{

if (!strcmp(name, "Encoders")) {

mCurrentSection = SECTION_TOPLEVEL;

}

break;

}

case SECTION_DECODER:

{

if (!strcmp(name, "MediaCodec")) {

mCurrentSection = SECTION_DECODERS;

}

break;

}

case SECTION_ENCODER:

{

if (!strcmp(name, "MediaCodec")) {

mCurrentSection = SECTION_ENCODERS;

}

break;

}

default:

break;

}

--mDepth;

}

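When a MediaCodec element is parsed successfully, the start-element callback calls addMediaCodecFromAttributes, which appends the codec information to the list: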

status_t MediaCodecList::addMediaCodecFromAttributes(

bool encoder, const char **attrs) {

const char *name = NULL;

const char *type = NULL;

size_t i = 0;

while (attrs[i] != NULL) {

if (!strcmp(attrs[i], "name")) {

if (attrs[i + 1] == NULL) {

return -EINVAL;

}

name = attrs[i + 1];

++i;

} else if (!strcmp(attrs[i], "type")) {

if (attrs[i + 1] == NULL) {

return -EINVAL;

}

type = attrs[i + 1];

++i;

} else {

return -EINVAL;

}

++i;

}

if (name == NULL) {

return -EINVAL;

}

addMediaCodec(encoder, name, type);

return OK;

}

void MediaCodecList::addType(const char *name) {

uint32_t bit;

ssize_t index = mTypes.indexOfKey(name);

if (index < 0) {

bit = mTypes.size();

if (bit == 64) {

ALOGW("Too many distinct type names in configuration.");

return;

}

mTypes.add(name, bit);

} else {

bit = mTypes.valueAt(index);

}

CodecInfo *info = &mCodecInfos.editItemAt(mCodecInfos.size() - 1);

info->mTypes |= 1ull << bit;

}
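The bookkeeping in addType is easier to see in isolation: each distinct MIME type is assigned the next free bit index (at most 64), and every codec stores a 64-bit mask of the types it supports. The following is a minimal sketch of that idea using standard containers. `TypeTable`, `CodecEntry`, `addType`, and `supportsType` here are illustrative names, not the AOSP classes.

```cpp
#include <cassert>
#include <cstdint>
#include <map>
#include <string>

// Hypothetical, simplified counterpart of the mTypes table: each distinct
// MIME type gets the next free bit index, up to 64 of them.
class TypeTable {
public:
    // Returns the bit for `type`, assigning a new one if needed; -1 when full.
    int bitFor(const std::string &type) {
        std::map<std::string, int>::const_iterator it = mBits.find(type);
        if (it != mBits.end()) return it->second;
        if (mBits.size() == 64) return -1;
        int bit = static_cast<int>(mBits.size());
        mBits[type] = bit;
        return bit;
    }

    // Returns the bit of an already known type, or -1 if it was never added.
    int lookup(const std::string &type) const {
        std::map<std::string, int>::const_iterator it = mBits.find(type);
        return it == mBits.end() ? -1 : it->second;
    }

private:
    std::map<std::string, int> mBits;
};

struct CodecEntry {
    std::string name;
    std::uint64_t types = 0;  // one bit per supported MIME type
};

// Marks `type` as supported by `codec`; fails once 64 types are in use.
bool addType(TypeTable &table, CodecEntry &codec, const std::string &type) {
    int bit = table.bitFor(type);
    if (bit < 0) return false;
    assert(bit < 64);
    codec.types |= 1ull << bit;
    return true;
}

// Tests the codec's mask against the bit assigned to `type`.
bool supportsType(const TypeTable &table, const CodecEntry &codec,
                  const std::string &type) {
    int bit = table.lookup(type);
    return bit >= 0 && (codec.types & (1ull << bit)) != 0;
}
```

With this layout, checking whether a codec handles a type is a single mask test, which is presumably what a lookup by type relies on.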

So when a codec is needed, how is the Codec component matched?

ssize_t MediaCodecList::findCodecByName(const char *name) const {

for (size_t i = 0; i < mCodecInfos.size(); ++i) {

const CodecInfo &info = mCodecInfos.itemAt(i);

if (info.mName == name) {

return i;

}

}

return -ENOENT;

}

As you can see, matching walks the list linearly until it finds the component with the requested name.

Continuing from checkpoint 1:

void MediaCodec::onMessageReceived(const sp<AMessage> &msg) {

switch (msg->what()) {

...

case kWhatInit:

{

uint32_t replyID;

CHECK(msg->senderAwaitsResponse(&replyID));

if (mState != UNINITIALIZED) {

sp<AMessage> response = new AMessage;

response->setInt32("err", INVALID_OPERATION);

response->postReply(replyID);

break;

}

mReplyID = replyID;

setState(INITIALIZING);

AString name;

CHECK(msg->findString("name", &name));

int32_t nameIsType;

int32_t encoder = false;

CHECK(msg->findInt32("nameIsType", &nameIsType));

if (nameIsType) {

CHECK(msg->findInt32("encoder", &encoder));

}

sp<AMessage> format = new AMessage;

if (nameIsType) {

format->setString("mime", name.c_str());

format->setInt32("encoder", encoder);

} else {

format->setString("componentName", name.c_str());

}

mCodec->initiateAllocateComponent(format);

break;

}

...

Initialization of the ACodec module:

/**ACodec.cpp*/

void ACodec::initiateAllocateComponent(const sp<AMessage> &msg) {

msg->setWhat(kWhatAllocateComponent);

msg->setTarget(id());

msg->post();

}

bool ACodec::UninitializedState::onMessageReceived(const sp<AMessage> &msg) {

bool handled = false;

switch (msg->what()) {

...

case ACodec::kWhatAllocateComponent:

{

onAllocateComponent(msg);

handled = true;

break;

}

...

}

bool ACodec::UninitializedState::onAllocateComponent(const sp<AMessage> &msg) {

ALOGI("onAllocateComponent");

CHECK(mCodec->mNode == NULL);

OMXClient client;

CHECK_EQ(client.connect(), (status_t)OK);

sp<IOMX> omx = client.interface();

sp<AMessage> notify = new AMessage(kWhatOMXDied, mCodec->id());

mDeathNotifier = new DeathNotifier(notify);

if (omx->asBinder()->linkToDeath(mDeathNotifier) != OK) {

// This was a local binder, if it dies so do we, we won't care

// about any notifications in the afterlife.

mDeathNotifier.clear();

}

Vector<OMXCodec::CodecNameAndQuirks> matchingCodecs;

AString mime;

AString componentName;

uint32_t quirks = 0;

if (msg->findString("componentName", &componentName)) {

ssize_t index = matchingCodecs.add();

OMXCodec::CodecNameAndQuirks *entry = &matchingCodecs.editItemAt(index);

entry->mName = String8(componentName.c_str());

if (!OMXCodec::findCodecQuirks(

componentName.c_str(), &entry->mQuirks)) {

entry->mQuirks = 0;

}

} else {

CHECK(msg->findString("mime", &mime));

int32_t encoder;

if (!msg->findInt32("encoder", &encoder)) {

encoder = false;

}

OMXCodec::findMatchingCodecs(

mime.c_str(),

encoder, // createEncoder

NULL, // matchComponentName

0, // flags

&matchingCodecs);

}

sp<CodecObserver> observer = new CodecObserver;

IOMX::node_id node = NULL;

for (size_t matchIndex = 0; matchIndex < matchingCodecs.size();

++matchIndex) {

componentName = matchingCodecs.itemAt(matchIndex).mName.string();

quirks = matchingCodecs.itemAt(matchIndex).mQuirks;

pid_t tid = androidGetTid();

int prevPriority = androidGetThreadPriority(tid);

androidSetThreadPriority(tid, ANDROID_PRIORITY_FOREGROUND);

status_t err = omx->allocateNode(componentName.c_str(), observer, &node);

androidSetThreadPriority(tid, prevPriority);

if (err == OK) {

break;

}

node = NULL;

}

if (node == NULL) {

if (!mime.empty()) {

ALOGE("Unable to instantiate a decoder for type '%s'.",

mime.c_str());

} else {

ALOGE("Unable to instantiate decoder '%s'.", componentName.c_str());

}

mCodec->signalError(OMX_ErrorComponentNotFound);

return false;

}

notify = new AMessage(kWhatOMXMessage, mCodec->id());

observer->setNotificationMessage(notify);

mCodec->mComponentName = componentName;

mCodec->mFlags = 0;

if (componentName.endsWith(".secure")) {

mCodec->mFlags |= kFlagIsSecure;

mCodec->mFlags |= kFlagPushBlankBuffersToNativeWindowOnShutdown;

}

mCodec->mQuirks = quirks;

mCodec->mOMX = omx;

mCodec->mNode = node;

{

sp<AMessage> notify = mCodec->mNotify->dup();

notify->setInt32("what", ACodec::kWhatComponentAllocated);

notify->setString("componentName", mCodec->mComponentName.c_str());

notify->post();

}

mCodec->changeState(mCodec->mLoadedState);

return true;

}

/**OMXCodec.cpp*/

// static

void OMXCodec::findMatchingCodecs(

const char *mime,

bool createEncoder, const char *matchComponentName,

uint32_t flags,

Vector<CodecNameAndQuirks> *matchingCodecs) {

matchingCodecs->clear();

const MediaCodecList *list = MediaCodecList::getInstance();

if (list == NULL) {

return;

}

size_t index = 0;

for (;;) {

ssize_t matchIndex =

list->findCodecByType(mime, createEncoder, index);

if (matchIndex < 0) {

break;

}

index = matchIndex + 1;

const char *componentName = list->getCodecName(matchIndex);

// If a specific codec is requested, skip the non-matching ones.

if (matchComponentName && strcmp(componentName, matchComponentName)) {

continue;

}

//h264 is a special case.

bool bH264Codec = false;

if (!strncmp("OMX.google.h264.decoder", componentName, 23)) {

bH264Codec = true;

}

// When requesting software-only codecs, only push software codecs

// When requesting hardware-only codecs, only push hardware codecs

// When there is request neither for software-only nor for

// hardware-only codecs, push all codecs

if (((flags & kSoftwareCodecsOnly) && IsSoftwareCodec(componentName)) ||

((flags & kHardwareCodecsOnly) && !IsSoftwareCodec(componentName)) ||

(!(flags & (kSoftwareCodecsOnly | kHardwareCodecsOnly)))||

(bH264Codec)) {

ssize_t index = matchingCodecs->add();

CodecNameAndQuirks *entry = &matchingCodecs->editItemAt(index);

entry->mName = String8(componentName);

entry->mQuirks = getComponentQuirks(list, matchIndex);

ALOGV("matching '%s' quirks 0x%08x",

entry->mName.string(), entry->mQuirks);

}

}

if (flags & kPreferSoftwareCodecs) {

matchingCodecs->sort(CompareSoftwareCodecsFirst);

}

}

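First the matching components are looked up in MediaCodecList (and sorted when software codecs are preferred); then a component node is created from the component name (allocateNode).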
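The allocation loop in onAllocateComponent follows a simple pattern: walk the candidate list in order and keep the first component that can actually be instantiated. The sketch below shows only that pattern. `allocateFirstWorking` is a made-up helper, and its `allocate` callback is a hypothetical stand-in for `omx->allocateNode()`.

```cpp
#include <cassert>
#include <functional>
#include <string>
#include <vector>

// Tries each candidate in order and returns the first component name that
// `allocate` accepts; returns an empty string when every candidate fails.
// `allocate` is a hypothetical stand-in for omx->allocateNode().
std::string allocateFirstWorking(
        const std::vector<std::string> &candidates,
        const std::function<bool(const std::string &)> &allocate) {
    assert(static_cast<bool>(allocate));
    for (const std::string &name : candidates) {
        if (allocate(name)) {
            return name;
        }
    }
    return std::string();
}
```

Because the first success wins, the order in which findMatchingCodecs returns its candidates effectively decides which decoder is used for a given MIME type.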

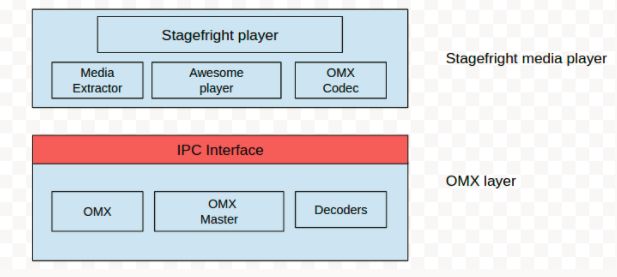

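Pay close attention here: a key component, OMXClient, appears in the ACodec initialization flow. OMXClient is the Bp (proxy) side of the OMX layer, IOMX is the OMX layer's Binder interface, and OMX is the corresponding Bn (native) side.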

/**OMXClient.cpp*/

status_t MuxOMX::allocateNode(

const char *name, const sp<IOMXObserver> &observer,

node_id *node) {

Mutex::Autolock autoLock(mLock);

sp<IOMX> omx;

if (IsSoftwareComponent(name)) {

if (mLocalOMX == NULL) {

mLocalOMX = new OMX;

}

omx = mLocalOMX;

} else {

omx = mRemoteOMX;

}

status_t err = omx->allocateNode(name, observer, node);

if (err != OK) {

return err;

}

if (omx == mLocalOMX) {

mIsLocalNode.add(*node, true);

}

return OK;

}

bool MuxOMX::IsSoftwareComponent(const char *name) {

return !strncasecmp(name, "OMX.google.", 11);

}
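One consequence of IsSoftwareComponent is worth spelling out: the test is purely a name-prefix check, so a component named "OMX.ffmpeg.audio.decoder" does not count as a "software component" for MuxOMX and, going by the code shown, would be routed to mRemoteOMX rather than the in-process OMX. The sketch below reproduces just that decision. `hasPrefixIgnoreCase`, `OmxSide`, and `pickOmx` are illustrative names, and the prefix comparison is hand-written instead of calling strncasecmp.

```cpp
#include <cassert>
#include <cctype>
#include <cstddef>

// Case-insensitive prefix test, written out by hand so the sketch does not
// depend on the POSIX strncasecmp() used in the original.
bool hasPrefixIgnoreCase(const char *name, const char *prefix) {
    assert(name != nullptr && prefix != nullptr);
    for (std::size_t i = 0; prefix[i] != '\0'; ++i) {
        // A shorter `name` reaches its terminating '\0' here and fails the test.
        if (std::tolower(static_cast<unsigned char>(name[i])) !=
            std::tolower(static_cast<unsigned char>(prefix[i]))) {
            return false;
        }
    }
    return true;
}

enum class OmxSide { Local, Remote };

// Mirrors the choice made in MuxOMX::allocateNode: names that start with
// "OMX.google." use the local OMX, everything else uses the remote one.
OmxSide pickOmx(const char *componentName) {
    return hasPrefixIgnoreCase(componentName, "OMX.google.")
            ? OmxSide::Local
            : OmxSide::Remote;
}
```

Under this rule `pickOmx("OMX.google.mp3.decoder")` selects the local side, while `pickOmx("OMX.ffmpeg.audio.decoder")` selects the remote side.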

IOMX is a Binder interface; this article does not analyze it and goes straight to the Bn side:

/**OMX.cpp*/

OMX::OMX()

: mMaster(new OMXMaster),

mNodeCounter(0) {

}

status_t OMX::allocateNode(

const char *name, const sp<IOMXObserver> &observer, node_id *node) {

Mutex::Autolock autoLock(mLock);

*node = 0;

OMXNodeInstance *instance = new OMXNodeInstance(this, observer, name);

OMX_COMPONENTTYPE *handle;

OMX_ERRORTYPE err = mMaster->makeComponentInstance(

name, &OMXNodeInstance::kCallbacks,

instance, &handle);

if (err != OMX_ErrorNone) {

ALOGV("FAILED to allocate omx component '%s'", name);

instance->onGetHandleFailed();

return UNKNOWN_ERROR;

}

*node = makeNodeID(instance);

mDispatchers.add(*node, new CallbackDispatcher(instance));

instance->setHandle(*node, handle);

mLiveNodes.add(observer->asBinder(), instance);

observer->asBinder()->linkToDeath(this);

return OK;

}

Checkpoint 2

/**OMXMaster.cpp*/

OMXMaster::OMXMaster()

: mVendorLibHandle(NULL) {

addVendorPlugin();

addPlugin(new SoftOMXPlugin);

}

void OMXMaster::addPlugin(OMXPluginBase *plugin) {

Mutex::Autolock autoLock(mLock);

mPlugins.push_back(plugin);

OMX_U32 index = 0;

char name[128];

OMX_ERRORTYPE err;

while ((err = plugin->enumerateComponents(

name, sizeof(name), index++)) == OMX_ErrorNone) {

String8 name8(name);

if (mPluginByComponentName.indexOfKey(name8) >= 0) {

ALOGE("A component of name '%s' already exists, ignoring this one.",

name8.string());

continue;

}

mPluginByComponentName.add(name8, plugin);

}

if (err != OMX_ErrorNoMore) {

ALOGE("OMX plugin failed w/ error 0x%08x after registering %d "

"components", err, mPluginByComponentName.size());

}

}

OMX_ERRORTYPE OMXMaster::makeComponentInstance(

const char *name,

const OMX_CALLBACKTYPE *callbacks,

OMX_PTR appData,

OMX_COMPONENTTYPE **component) {

Mutex::Autolock autoLock(mLock);

*component = NULL;

ssize_t index = mPluginByComponentName.indexOfKey(String8(name));

if (index < 0) {

return OMX_ErrorInvalidComponentName;

}

OMXPluginBase *plugin = mPluginByComponentName.valueAt(index);

OMX_ERRORTYPE err =

plugin->makeComponentInstance(name, callbacks, appData, component);

if (err != OMX_ErrorNone) {

return err;

}

mPluginByInstance.add(*component, plugin);

return err;

}

The code above registers the components of SoftOMXPlugin in mPluginByComponentName:

/**SoftOMXPlugin.cpp*/

static const struct {

const char *mName;

const char *mLibNameSuffix;

const char *mRole;

} kComponents[] = {

{ "OMX.google.aac.decoder", "aacdec", "audio_decoder.aac" },

{ "OMX.google.aac.encoder", "aacenc", "audio_encoder.aac" },

{ "OMX.google.amrnb.decoder", "amrdec", "audio_decoder.amrnb" },

{ "OMX.google.amrnb.encoder", "amrnbenc", "audio_encoder.amrnb" },

{ "OMX.google.amrwb.decoder", "amrdec", "audio_decoder.amrwb" },

{ "OMX.google.amrwb.encoder", "amrwbenc", "audio_encoder.amrwb" },

{ "OMX.google.h264.decoder", "h264dec", "video_decoder.avc" },

{ "OMX.google.h264.encoder", "h264enc", "video_encoder.avc" },

{ "OMX.google.g711.alaw.decoder", "g711dec", "audio_decoder.g711alaw" },

{ "OMX.google.g711.mlaw.decoder", "g711dec", "audio_decoder.g711mlaw" },

{ "OMX.google.h263.decoder", "mpeg4dec", "video_decoder.h263" },

{ "OMX.google.h263.encoder", "mpeg4enc", "video_encoder.h263" },

{ "OMX.google.mpeg4.decoder", "mpeg4dec", "video_decoder.mpeg4" },

{ "OMX.google.mpeg4.encoder", "mpeg4enc", "video_encoder.mpeg4" },

{ "OMX.google.mp3.decoder", "mp3dec", "audio_decoder.mp3" },

{ "OMX.google.vorbis.decoder", "vorbisdec", "audio_decoder.vorbis" },

{ "OMX.google.vp8.decoder", "vpxdec", "video_decoder.vp8" },

{ "OMX.google.vp9.decoder", "vpxdec", "video_decoder.vp9" },

{ "OMX.google.vp8.encoder", "vpxenc", "video_encoder.vp8" },

{ "OMX.google.raw.decoder", "rawdec", "audio_decoder.raw" },

{ "OMX.google.flac.encoder", "flacenc", "audio_encoder.flac" },

{ "OMX.google.gsm.decoder", "gsmdec", "audio_decoder.gsm" },

{ "OMX.ffmpeg.audio.decoder", "ffmpegaudiodec", "audio_decoder.ffmpeg" },

};

static const size_t kNumComponents =

sizeof(kComponents) / sizeof(kComponents[0]);

SoftOMXPlugin::SoftOMXPlugin() {

}

OMX_ERRORTYPE SoftOMXPlugin::makeComponentInstance(

const char *name,

const OMX_CALLBACKTYPE *callbacks,

OMX_PTR appData,

OMX_COMPONENTTYPE **component) {

ALOGV("makeComponentInstance '%s'", name);

for (size_t i = 0; i < kNumComponents; ++i) {

if (strcmp(name, kComponents[i].mName)) {

continue;

}

AString libName = "libstagefright_soft_";

libName.append(kComponents[i].mLibNameSuffix);

libName.append(".so");

void *libHandle = dlopen(libName.c_str(), RTLD_NOW);

if (libHandle == NULL) {

ALOGE("unable to dlopen %s", libName.c_str());

return OMX_ErrorComponentNotFound;

}

typedef SoftOMXComponent *(*CreateSoftOMXComponentFunc)(

const char *, const OMX_CALLBACKTYPE *,

OMX_PTR, OMX_COMPONENTTYPE **);

CreateSoftOMXComponentFunc createSoftOMXComponent =

(CreateSoftOMXComponentFunc)dlsym(

libHandle,

"_Z22createSoftOMXComponentPKcPK16OMX_CALLBACKTYPE"

"PvPP17OMX_COMPONENTTYPE");

if (createSoftOMXComponent == NULL) {

dlclose(libHandle);

libHandle = NULL;

return OMX_ErrorComponentNotFound;

}

sp<SoftOMXComponent> codec =

(*createSoftOMXComponent)(name, callbacks, appData, component);

if (codec == NULL) {

dlclose(libHandle);

libHandle = NULL;

return OMX_ErrorInsufficientResources;

}

OMX_ERRORTYPE err = codec->initCheck();

if (err != OMX_ErrorNone) {

dlclose(libHandle);

libHandle = NULL;

return err;

}

codec->incStrong(this);

codec->setLibHandle(libHandle);

return OMX_ErrorNone;

}

return OMX_ErrorInvalidComponentName;

}

In this table, the entry

{ "OMX.ffmpeg.audio.decoder", "ffmpegaudiodec", "audio_decoder.ffmpeg" }

is what adds the FFMPEG component to the audio decoders of the Android multimedia framework.

Continuing from checkpoint 2: OMXPluginBase::makeComponentInstance is called to finish initializing the component. Because SoftOMXPlugin derives from OMXPluginBase, the call lands in SoftOMXPlugin::makeComponentInstance, the function already listed in full above under SoftOMXPlugin.cpp.

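As SoftOMXPlugin::makeComponentInstance shows, the .so library to load is chosen dynamically from the codec component that was requested.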
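The library name is built from a fixed prefix, the suffix stored in the kComponents row, and ".so". The sketch below isolates that lookup using three rows copied from the table. `ComponentRow`, `kRows`, and `libraryFor` are illustrative names, and the dlopen call itself is deliberately left out.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>
#include <string>

struct ComponentRow {
    const char *name;
    const char *libNameSuffix;
};

// Three rows copied from the kComponents table; the real table is longer.
static const ComponentRow kRows[] = {
    { "OMX.google.mp3.decoder", "mp3dec" },
    { "OMX.google.h264.decoder", "h264dec" },
    { "OMX.ffmpeg.audio.decoder", "ffmpegaudiodec" },
};

// Returns the shared-library file name for a component, or "" if unknown.
std::string libraryFor(const char *componentName) {
    assert(componentName != nullptr);
    for (std::size_t i = 0; i < sizeof(kRows) / sizeof(kRows[0]); ++i) {
        if (std::strcmp(componentName, kRows[i].name) == 0) {
            return std::string("libstagefright_soft_") +
                    kRows[i].libNameSuffix + ".so";
        }
    }
    return std::string();
}
```

For the FFMPEG audio decoder this yields libstagefright_soft_ffmpegaudiodec.so, so adding a new software codec comes down to adding one table row and shipping a library with the matching name.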

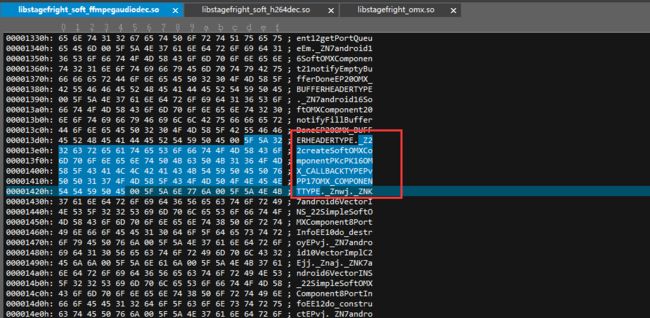

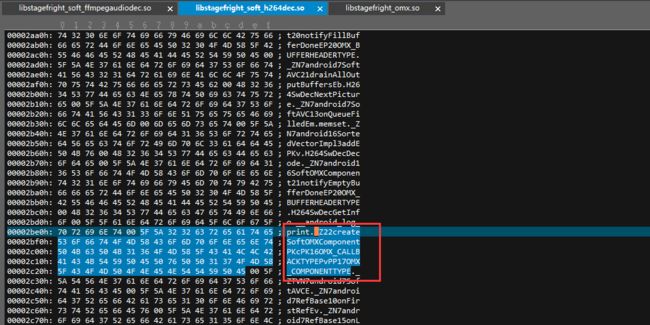

After dlopen has loaded the .so, dlsym is used to create a SoftOMXComponent. Here dlsym looks up the function that constructs the object:

CreateSoftOMXComponentFunc createSoftOMXComponent =

(CreateSoftOMXComponentFunc)dlsym(

libHandle,

"_Z22createSoftOMXComponentPKcPK16OMX_CALLBACKTYPEPvPP17OMX_COMPONENTTYPE");

Here is what I know about this odd string:

Each subclass has to implement the following createSoftOMXComponent function:

android::SoftOMXComponent *createSoftOMXComponent(

const char *name, const OMX_CALLBACKTYPE *callbacks,

OMX_PTR appData, OMX_COMPONENTTYPE **component)

{

ALOGI("createSoftOMXComponent : %s", name);

return new android::SoftFFmpegAudioDec(name, callbacks, appData, component);

}

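libstagefright_omx.so, libstagefright_soft_h264dec.so, and libstagefright_soft_ffmpegaudiodec.so are all shared libraries that contain SoftOMXComponent code, and there are many more libraries that contain SoftOMXComponent subclasses. Inspecting the .so binaries shows that every one of them contains this odd string (if a kind reader can explain the mystery, please leave a comment with the analysis; many thanks).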
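One likely explanation for the string: it has the shape of an Itanium C++ ABI mangled symbol name. Because createSoftOMXComponent is an ordinary C++ function (not declared extern "C"), the compiler exports it under a name that encodes its parameter types, and dlsym has to be given exactly that exported name. Read piece by piece, `_Z` marks a mangled name, `22createSoftOMXComponent` is the 22-character function name, `PKc` is a pointer to const char, `PK16OMX_CALLBACKTYPE` is a pointer to const OMX_CALLBACKTYPE, `Pv` is void * (OMX_PTR is a typedef for void *), and `PP17OMX_COMPONENTTYPE` is a pointer to a pointer to OMX_COMPONENTTYPE. The sketch below checks this reading with the demangler that ships with GCC and Clang. The `demangle` wrapper is an illustrative helper, and the code assumes one of those toolchains.

```cpp
#include <cassert>
#include <cstdlib>
#include <cxxabi.h>
#include <string>

// Returns the human-readable form of an Itanium-ABI mangled symbol, or ""
// if the demangler rejects the input. Requires GCC or Clang (<cxxabi.h>).
std::string demangle(const char *mangled) {
    assert(mangled != nullptr);
    int status = 0;
    char *text = abi::__cxa_demangle(mangled, nullptr, nullptr, &status);
    if (status != 0 || text == nullptr) {
        std::free(text);
        return std::string();
    }
    std::string result(text);
    std::free(text);  // the demangler allocates its result with malloc
    return result;
}
```

Passing the string from the dlsym call to `demangle` should give back a createSoftOMXComponent signature with the four parameter types listed above. That would also explain why the same string appears in every library: each one exports this function, and libstagefright_omx.so contains the literal used for the lookup.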

sp<SoftOMXComponent> codec =

(*createSoftOMXComponent)(name, callbacks, appData, component);

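The code above is where a codec object really gets instantiated. Yes, an "object" (the old programmer pun: in Chinese the same word also means a romantic partner).

Take the FFMPEG audio decoder extension as an example: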

SoftFFmpegAudioDec::SoftFFmpegAudioDec(

const char *name,

const OMX_CALLBACKTYPE *callbacks,

OMX_PTR appData,

OMX_COMPONENTTYPE **component)

: SimpleSoftOMXComponent(name, callbacks, appData, component),

mAnchorTimeUs(0),

mNumFramesOutput(0),

mSamplingRate(0),

mOutputPortSettingsChange(NONE),

mInputBufferCount(0),

mAudioConvert(NULL),

mOutFormat(AV_SAMPLE_FMT_S16),

mAudioConvertBuffer(NULL)

{

ALOGV("enter %s()", __FUNCTION__);

mCodecctx = NULL;

mCodec = NULL;

mCodecID = CODEC_ID_NONE;

mNumChannels = 0;

mRequestChannels = 2;

mCodecOpened = 0;

initPorts();

avcodec_register_all();

mCodecctx = avcodec_alloc_context2(AVMEDIA_TYPE_AUDIO);

CHECK(mCodecctx);

ALOGV("exit %s()", __FUNCTION__);

}

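There it is: the familiar avcodec_alloc_context2 interface (an older FFmpeg call that later releases replaced with avcodec_alloc_context3).

That completes the analysis of codec creation. Once the Codec component has been created, the next step is to configure it:

err = state->mCodec->configure(

codec_format, NULL/*surface*/,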

NULL /* crypto */,

0 /* flags */);

/**MediaCodec.cpp*/

status_t MediaCodec::configure(

const sp<AMessage> &format,

const sp<Surface> &nativeWindow,

const sp<ICrypto> &crypto,

uint32_t flags) {

sp<AMessage> msg = new AMessage(kWhatConfigure, id());

msg->setMessage("format", format);

msg->setInt32("flags", flags);

if (nativeWindow != NULL) {

msg->setObject(

"native-window",

new NativeWindowWrapper(nativeWindow));

}

if (crypto != NULL) {

msg->setPointer("crypto", crypto.get());

}

// add for quick output

ALOGV("MediaCodec::configure, flags = %d", flags);

if(flags == 2) {

HiSysManagerClient sysclient;

sysclient.setDVFS(1);

}

sp<AMessage> response;

return PostAndAwaitResponse(msg, &response);

}

void MediaCodec::onMessageReceived(const sp<AMessage> &msg) {

switch (msg->what()) {

...

case kWhatConfigure:

{

uint32_t replyID;

CHECK(msg->senderAwaitsResponse(&replyID));

if (mState != INITIALIZED) {

sp<AMessage> response = new AMessage;

response->setInt32("err", INVALID_OPERATION);

response->postReply(replyID);

break;

}

sp<RefBase> obj;

if (!msg->findObject("native-window", &obj)) {

obj.clear();

}

sp<AMessage> format;

CHECK(msg->findMessage("format", &format));

if (obj != NULL) {

format->setObject("native-window", obj);

status_t err = setNativeWindow(

static_cast<NativeWindowWrapper *>(obj.get())

->getSurfaceTextureClient());

if (err != OK) {

sp<AMessage> response = new AMessage;

response->setInt32("err", err);

response->postReply(replyID);

break;

}

} else {

setNativeWindow(NULL);

}

mReplyID = replyID;

setState(CONFIGURING);

void *crypto;

if (!msg->findPointer("crypto", &crypto)) {

crypto = NULL;

}

mCrypto = static_cast<ICrypto *>(crypto);

uint32_t flags;

CHECK(msg->findInt32("flags", (int32_t *)&flags));

if (flags & CONFIGURE_FLAG_ENCODE) {

format->setInt32("encoder", true);

mFlags |= kFlagIsEncoder;

}

if (flags & CONFIGURE_FLAG_DECODER_QUICK_OUTPUT) {

format->setInt32("quick-output", true);

}

extractCSD(format);

mCodec->initiateConfigureComponent(format);

break;

}

...

}
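Both the kWhatInit and kWhatConfigure handlers follow the same shape: a message is posted, the handler checks the current state, and it either replies with an error or moves the state machine forward. The toy below sketches that shape on a single thread. `ToyMediaCodec`, the `State` and `What` enums, and the error constants are hypothetical, and the intermediate INITIALIZING and CONFIGURING states (which in the real code are completed by notifications from ACodec) are collapsed into direct transitions.

```cpp
#include <cassert>
#include <queue>

enum class State { Uninitialized, Initialized, Configured };
enum class What { Init, Configure };

// Hypothetical error codes; the real handler replies with INVALID_OPERATION.
const int kOk = 0;
const int kInvalidOperation = -1;

class ToyMediaCodec {
public:
    // Queues a message, lets the handler run, and returns the reply's "err".
    int postAndAwaitResponse(What what) {
        // The toy is single-threaded, so nothing else can be pending here.
        assert(mQueue.empty());
        mQueue.push(what);
        int err = kOk;
        while (!mQueue.empty()) {
            What next = mQueue.front();
            mQueue.pop();
            err = onMessageReceived(next);
        }
        return err;
    }

    State state() const { return mState; }

private:
    // Each request is only legal in one state, as in the real handlers.
    int onMessageReceived(What what) {
        switch (what) {
            case What::Init:
                if (mState != State::Uninitialized) return kInvalidOperation;
                mState = State::Initialized;
                return kOk;
            case What::Configure:
                if (mState != State::Initialized) return kInvalidOperation;
                mState = State::Configured;
                return kOk;
        }
        return kInvalidOperation;
    }

    std::queue<What> mQueue;
    State mState = State::Uninitialized;
};
```

In this toy, configuring before initializing is rejected and leaves the state untouched, just as the real handler replies with an error when mState is not INITIALIZED.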

As you can see, configure ultimately just posts a message through PostAndAwaitResponse, and start works the same way; onMessageReceived is the callback that handles the AMessage:

/**ACodec.cpp*/

void ACodec::initiateConfigureComponent(const sp<AMessage> &msg) {

msg->setWhat(kWhatConfigureComponent);

msg->setTarget(id());

msg->post();

}

bool ACodec::LoadedState::onMessageReceived(const sp<AMessage> &msg) {

bool handled = false;

switch (msg->what()) {

case ACodec::kWhatConfigureComponent:

{

onConfigureComponent(msg);

handled = true;

break;

}

...

}

}

bool ACodec::LoadedState::onConfigureComponent(

const sp<AMessage> &msg) {

ALOGV("onConfigureComponent");

CHECK(mCodec->mNode != NULL);

AString mime;

CHECK(msg->findString("mime", &mime));

status_t err = mCodec->configureCodec(mime.c_str(), msg);

if (err != OK) {

ALOGE("[%s] configureCodec returning error %d",

mCodec->mComponentName.c_str(), err);

mCodec->signalError(OMX_ErrorUndefined, err);

return false;

}

sp<RefBase> obj;

if (msg->findObject("native-window", &obj)

&& strncmp("OMX.google.", mCodec->mComponentName.c_str(), 11)) {

sp<NativeWindowWrapper> nativeWindow(

static_cast<NativeWindowWrapper *>(obj.get()));

CHECK(nativeWindow != NULL);

mCodec->mNativeWindow = nativeWindow->getNativeWindow();

mCodec->mLocalNativeWindow = NULL;

if (mCodec->mNativeWindow != NULL) {

native_window_set_scaling_mode(

mCodec->mNativeWindow.get(),

NATIVE_WINDOW_SCALING_MODE_SCALE_TO_WINDOW);

}

} else if(msg->findObject("native-window", &obj)) {

sp<NativeWindowWrapper> nativeWindow(

static_cast<NativeWindowWrapper *>(obj.get()));

CHECK(nativeWindow != NULL);

mCodec->mLocalNativeWindow = nativeWindow->getNativeWindow();

mCodec->mNativeWindow = NULL;

native_window_set_scaling_mode(

mCodec->mLocalNativeWindow.get(),

NATIVE_WINDOW_SCALING_MODE_SCALE_TO_WINDOW);

}

CHECK_EQ((status_t)OK, mCodec->initNativeWindow());

{

sp<AMessage> notify = mCodec->mNotify->dup();

notify->setInt32("what", ACodec::kWhatComponentConfigured);

notify->post();

}

return true;

}

status_t ACodec::configureCodec(

const char *mime, const sp<AMessage> &msg) {

int32_t encoder;

if (!msg->findInt32("encoder", &encoder)) {

encoder = false;

}

mIsEncoder = encoder;

status_t err = setComponentRole(encoder /* isEncoder */, mime);

if (err != OK) {

return err;

}

int32_t bitRate = 0;

// FLAC encoder doesn't need a bitrate, other encoders do

if (encoder && strcasecmp(mime, MEDIA_MIMETYPE_AUDIO_FLAC)

&& !msg->findInt32("bitrate", &bitRate)) {

return INVALID_OPERATION;

}

int32_t storeMeta;

if (encoder

&& msg->findInt32("store-metadata-in-buffers", &storeMeta)

&& storeMeta != 0) {

err = mOMX->storeMetaDataInBuffers(mNode, kPortIndexInput, OMX_TRUE);

if (err != OK) {

ALOGE("[%s] storeMetaDataInBuffers (input) failed w/ err %d",

mComponentName.c_str(), err);

return err;

}

}

int32_t prependSPSPPS = 0;

if (encoder

&& msg->findInt32("prepend-sps-pps-to-idr-frames", &prependSPSPPS)

&& prependSPSPPS != 0) {

OMX_INDEXTYPE index;

err = mOMX->getExtensionIndex(

mNode,

"OMX.google.android.index.prependSPSPPSToIDRFrames",

&index);

if (err == OK) {

PrependSPSPPSToIDRFramesParams params;

InitOMXParams(&params);

params.bEnable = OMX_TRUE;

err = mOMX->setParameter(

mNode, index, &params, sizeof(params));

}

if (err != OK) {

ALOGE("Encoder could not be configured to emit SPS/PPS before "

"IDR frames. (err %d)", err);

return err;

}

}

// Only enable metadata mode on encoder output if encoder can prepend

// sps/pps to idr frames, since in metadata mode the bitstream is in an

// opaque handle, to which we don't have access.

int32_t video = !strncasecmp(mime, "video/", 6);

mIsVideo = video;

if (mStats) {

if (mIsVideo) {

ALOGI("enable stats");

} else {

mStats = false;

}

}

if (encoder && video) {

OMX_BOOL enable = (OMX_BOOL) (prependSPSPPS

&& msg->findInt32("store-metadata-in-buffers-output", &storeMeta)

&& storeMeta != 0);

err = mOMX->storeMetaDataInBuffers(mNode, kPortIndexOutput, enable);

if (err != OK) {

ALOGE("[%s] storeMetaDataInBuffers (output) failed w/ err %d",

mComponentName.c_str(), err);

mUseMetadataOnEncoderOutput = 0;

} else {

mUseMetadataOnEncoderOutput = enable;

}

if (!msg->findInt64(

"repeat-previous-frame-after",

&mRepeatFrameDelayUs)) {

mRepeatFrameDelayUs = -1ll;

}

}

// Always try to enable dynamic output buffers on native surface

sp<RefBase> obj;

int32_t haveNativeWindow = msg->findObject("native-window", &obj) &&

obj != NULL;

mStoreMetaDataInOutputBuffers = false;

if (!encoder && video && haveNativeWindow) {

err = mOMX->storeMetaDataInBuffers(mNode, kPortIndexOutput, OMX_TRUE);

if (err != OK) {

ALOGE("[%s] storeMetaDataInBuffers failed w/ err %d",

mComponentName.c_str(), err);

// if adaptive playback has been requested, try JB fallback

// NOTE: THIS FALLBACK MECHANISM WILL BE REMOVED DUE TO ITS

// LARGE MEMORY REQUIREMENT

// we will not do adaptive playback on software accessed

// surfaces as they never had to respond to changes in the

// crop window, and we don't trust that they will be able to.

int usageBits = 0;

bool canDoAdaptivePlayback;

sp<NativeWindowWrapper> windowWrapper(

static_cast<NativeWindowWrapper *>(obj.get()));

sp<ANativeWindow> nativeWindow = windowWrapper->getNativeWindow();

if (nativeWindow->query(

nativeWindow.get(),

NATIVE_WINDOW_CONSUMER_USAGE_BITS,

&usageBits) != OK) {

canDoAdaptivePlayback = false;

} else {

canDoAdaptivePlayback =

(usageBits &

(GRALLOC_USAGE_SW_READ_MASK |

GRALLOC_USAGE_SW_WRITE_MASK)) == 0;

}

int32_t maxWidth = 0, maxHeight = 0;

if (canDoAdaptivePlayback &&

msg->findInt32("max-width", &maxWidth) &&

msg->findInt32("max-height", &maxHeight)) {

ALOGI("[%s] prepareForAdaptivePlayback(%dx%d)",

mComponentName.c_str(), maxWidth, maxHeight);

err = mOMX->prepareForAdaptivePlayback(

mNode, kPortIndexOutput, OMX_TRUE, maxWidth, maxHeight);

ALOGW_IF(err != OK,

"[%s] prepareForAdaptivePlayback failed w/ err %d",

mComponentName.c_str(), err);

}

// allow failure

err = OK;

} else {

ALOGV("[%s] storeMetaDataInBuffers succeeded", mComponentName.c_str());

mStoreMetaDataInOutputBuffers = true;

}

int32_t push;

if (msg->findInt32("push-blank-buffers-on-shutdown", &push)

&& push != 0) {

mFlags |= kFlagPushBlankBuffersToNativeWindowOnShutdown;

}

}

if (video) {

if (encoder) {

err = setupVideoEncoder(mime, msg);

} else {

int32_t width, height;

if (!msg->findInt32("width", &width)

|| !msg->findInt32("height", &height)) {

err = INVALID_OPERATION;

} else {

err = setupVideoDecoder(mime, width, height);

}

}

} else if (!strcasecmp(mime, MEDIA_MIMETYPE_AUDIO_MPEG)) {

int32_t numChannels, sampleRate;

if (!msg->findInt32("channel-count", &numChannels)

|| !msg->findInt32("sample-rate", &sampleRate)) {

// Since we did not always check for these, leave them optional

// and have the decoder figure it all out.

err = OK;

} else {

err = setupRawAudioFormat(

encoder ? kPortIndexInput : kPortIndexOutput,

sampleRate,

numChannels);

}

} else if (!strcasecmp(mime, MEDIA_MIMETYPE_AUDIO_AAC)) {

int32_t numChannels, sampleRate;

if (!msg->findInt32("channel-count", &numChannels)

|| !msg->findInt32("sample-rate", &sampleRate)) {

err = INVALID_OPERATION;

} else {

int32_t isADTS, aacProfile;

if (!msg->findInt32("is-adts", &isADTS)) {

isADTS = 0;

}

if (!msg->findInt32("aac-profile", &aacProfile)) {

aacProfile = OMX_AUDIO_AACObjectNull;

}

err = setupAACCodec(

encoder, numChannels, sampleRate, bitRate, aacProfile,

isADTS != 0);

}

} else if (!strcasecmp(mime, MEDIA_MIMETYPE_AUDIO_AMR_NB)) {

err = setupAMRCodec(encoder, false /* isWAMR */, bitRate);

} else if (!strcasecmp(mime, MEDIA_MIMETYPE_AUDIO_AMR_WB)) {

err = setupAMRCodec(encoder, true /* isWAMR */, bitRate);

} else if (!strcasecmp(mime, MEDIA_MIMETYPE_AUDIO_G711_ALAW)

|| !strcasecmp(mime, MEDIA_MIMETYPE_AUDIO_G711_MLAW)) {

// These are PCM-like formats with a fixed sample rate but

// a variable number of channels.

int32_t numChannels;

if (!msg->findInt32("channel-count", &numChannels)) {

err = INVALID_OPERATION;

} else {

err = setupG711Codec(encoder, numChannels);

}

} else if (!strcasecmp(mime, MEDIA_MIMETYPE_AUDIO_FLAC)) {

int32_t numChannels, sampleRate, compressionLevel = -1;

if (encoder &&

(!msg->findInt32("channel-count", &numChannels)

|| !msg->findInt32("sample-rate", &sampleRate))) {

ALOGE("missing channel count or sample rate for FLAC encoder");

err = INVALID_OPERATION;

} else {

if (encoder) {

if (!msg->findInt32(

"flac-compression-level", &compressionLevel)) {

compressionLevel = 5;// default FLAC compression level

} else if (compressionLevel < 0) {

ALOGW("compression level %d outside [0..8] range, "

"using 0",

compressionLevel);

compressionLevel = 0;

} else if (compressionLevel > 8) {

ALOGW("compression level %d outside [0..8] range, "

"using 8",

compressionLevel);

compressionLevel = 8;

}

}

err = setupFlacCodec(

encoder, numChannels, sampleRate, compressionLevel);

}

} else if (!strcasecmp(mime, MEDIA_MIMETYPE_AUDIO_RAW)) {

int32_t numChannels, sampleRate;

if (encoder

|| !msg->findInt32("channel-count", &numChannels)

|| !msg->findInt32("sample-rate", &sampleRate)) {

err = INVALID_OPERATION;

} else {

err = setupRawAudioFormat(kPortIndexInput, sampleRate, numChannels);

}

} else if (!strcasecmp(MEDIA_MIMETYPE_AUDIO_MPEG_LAYER_II, mime)) {

status_t err = setupFFmpegAudioFormat(msg, mime);

if (err != OK) {

ALOGE("setupFFmpegAudioFormat() failed (err = %d)", err);

return err;

}

}

if (err != OK) {

return err;

}

if (!msg->findInt32("encoder-delay", &mEncoderDelay)) {

mEncoderDelay = 0;

}

if (!msg->findInt32("encoder-padding", &mEncoderPadding)) {

mEncoderPadding = 0;

}

if (msg->findInt32("channel-mask", &mChannelMask)) {

mChannelMaskPresent = true;

} else {

mChannelMaskPresent = false;

}

int32_t maxInputSize;

if (msg->findInt32("max-input-size", &maxInputSize)) {

err = setMinBufferSize(kPortIndexInput, (size_t)maxInputSize);

} else if (!strcmp("OMX.Nvidia.aac.decoder", mComponentName.c_str())) {

err = setMinBufferSize(kPortIndexInput, 8192); // XXX

}

int32_t fastOutput = 0;

msg->findInt32("fast-output-mode", &fastOutput);

int32_t QuickOutputMode = 0;

msg->findInt32("quick-output", &QuickOutputMode);

if ((fastOutput != 0) || (QuickOutputMode != 0)) {

OMX_INDEXTYPE index;

err = mOMX->getExtensionIndex(mNode, "OMX.Hisi.Param.Index.FastOutputMode", &index);

if (err == OK) {

ALOGI("[%s] Enable fast output mode", mComponentName.c_str());

OMX_HISI_PARAM_FASTOUTPUT outputMode;

InitOMXParams(&outputMode);

mFastOutput = true;

outputMode.bEnabled = OMX_TRUE;

err = mOMX->setParameter(

mNode, index, &outputMode, sizeof(outputMode));

} else {

ALOGE("[%s] GetExtensionIndex of OMX.Hisi.Param.Index.FastOutputMode fail", mComponentName.c_str());

return err;

}

if (err != OK) {

ALOGE("[%s] set fast output mode fail", mComponentName.c_str());

return err;

}

}

return err;

}

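onConfigureComponent contains a lot of code, but apart from the Surface handling (this article only analyzes the decoder output, so Surface can be ignored), only configureCodec and the kWhatComponentConfigured message need to be analyzed.

In configureCodec, mOMX is the OMX layer. Apart from setComponentRole, everything in the source above sets codec-specific parameters for particular audio and video formats, so let's look at setComponentRole:

Node 3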

status_t ACodec::setComponentRole(

bool isEncoder, const char *mime) {

struct MimeToRole {

const char *mime;

const char *decoderRole;

const char *encoderRole;

};

static const MimeToRole kMimeToRole[] = {

{ MEDIA_MIMETYPE_AUDIO_MPEG,

"audio_decoder.mp3", "audio_encoder.mp3" },

{ MEDIA_MIMETYPE_AUDIO_MPEG_LAYER_I,

"audio_decoder.mp1", "audio_encoder.mp1" },

{ MEDIA_MIMETYPE_AUDIO_MPEG_LAYER_II,

// "audio_decoder.mp2", "audio_encoder.mp2" },

"audio_decoder.ffmpeg", "audio_encoder.ffmpeg"},

{ MEDIA_MIMETYPE_AUDIO_AMR_NB,

"audio_decoder.amrnb", "audio_encoder.amrnb" },

{ MEDIA_MIMETYPE_AUDIO_AMR_WB,

"audio_decoder.amrwb", "audio_encoder.amrwb" },

{ MEDIA_MIMETYPE_AUDIO_AAC,

"audio_decoder.aac", "audio_encoder.aac" },

{ MEDIA_MIMETYPE_AUDIO_VORBIS,

"audio_decoder.vorbis", "audio_encoder.vorbis" },

{ MEDIA_MIMETYPE_AUDIO_G711_MLAW,

"audio_decoder.g711mlaw", "audio_encoder.g711mlaw" },

{ MEDIA_MIMETYPE_AUDIO_G711_ALAW,

"audio_decoder.g711alaw", "audio_encoder.g711alaw" },

{ MEDIA_MIMETYPE_VIDEO_AVC,

"video_decoder.avc", "video_encoder.avc" },

{ MEDIA_MIMETYPE_VIDEO_MPEG4,

"video_decoder.mpeg4", "video_encoder.mpeg4" },

{ MEDIA_MIMETYPE_VIDEO_H263,

"video_decoder.h263", "video_encoder.h263" },

{ MEDIA_MIMETYPE_VIDEO_VP8,

"video_decoder.vp8", "video_encoder.vp8" },

{ MEDIA_MIMETYPE_VIDEO_VP9,

"video_decoder.vp9", "video_encoder.vp9" },

{ MEDIA_MIMETYPE_AUDIO_RAW,

"audio_decoder.raw", "audio_encoder.raw" },

{ MEDIA_MIMETYPE_AUDIO_FLAC,

"audio_decoder.flac", "audio_encoder.flac" },

{ MEDIA_MIMETYPE_AUDIO_MSGSM,

"audio_decoder.gsm", "audio_encoder.gsm" },

{ MEDIA_MIMETYPE_VIDEO_MPEG2,

"video_decoder.mpeg2", "video_encoder.mpeg2" },

{ MEDIA_MIMETYPE_VIDEO_HEVC,

"video_decoder.hevc", "video_encoder.hevc" },

};

static const size_t kNumMimeToRole =

sizeof(kMimeToRole) / sizeof(kMimeToRole[0]);

size_t i;

for (i = 0; i < kNumMimeToRole; ++i) {

if (!strcasecmp(mime, kMimeToRole[i].mime)) {

break;

}

}

if (i == kNumMimeToRole) {

return ERROR_UNSUPPORTED;

}

const char *role =

isEncoder ? kMimeToRole[i].encoderRole

: kMimeToRole[i].decoderRole;

if (role != NULL) {

OMX_PARAM_COMPONENTROLETYPE roleParams;

InitOMXParams(&roleParams);

strncpy((char *)roleParams.cRole,

role, OMX_MAX_STRINGNAME_SIZE - 1);

roleParams.cRole[OMX_MAX_STRINGNAME_SIZE - 1] = '\0';

status_t err = mOMX->setParameter(

mNode, OMX_IndexParamStandardComponentRole,

&roleParams, sizeof(roleParams));

if (err != OK) {

ALOGW("[%s] Failed to set standard component role '%s'.",

mComponentName.c_str(), role);

return err;

}

}

return OK;

}

As the source above shows, this is where the codec role is selected. For example, the codec component configured for MEDIA_MIMETYPE_AUDIO_MPEG_LAYER_II is:

static const MimeToRole kMimeToRole[] = {

...

{ MEDIA_MIMETYPE_AUDIO_MPEG_LAYER_II,

// "audio_decoder.mp2", "audio_encoder.mp2" },

"audio_decoder.ffmpeg", "audio_encoder.ffmpeg"},

...

}

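In Node 3, mNode is the reference to the OMXNodeInstance created during the create stage.

Here the ffmpeg component is used to extend the codec for AUDIO_MPEG_LAYER_II, that is, MPEG Layer II (mpeg-L2) audio.

ACodec then calls into the OMX layer: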

/**OMXClient.cpp*/

status_t MuxOMX::setParameter(

node_id node, OMX_INDEXTYPE index,

const void *params, size_t size) {

return getOMX(node)->setParameter(node, index, params, size);

}

The corresponding Binder server-side implementation:

/**OMX.cpp*/

status_t OMX::setParameter(

node_id node, OMX_INDEXTYPE index,

const void *params, size_t size) {

return findInstance(node)->setParameter(

index, params, size);

}

OMXNodeInstance *OMX::findInstance(node_id node) {

Mutex::Autolock autoLock(mLock);

ssize_t index = mNodeIDToInstance.indexOfKey(node);

return index < 0 ? NULL : mNodeIDToInstance.valueAt(index);

}

/**OMXNodeInstance.cpp*/

status_t OMXNodeInstance::setParameter(

OMX_INDEXTYPE index, const void *params, size_t size) {

Mutex::Autolock autoLock(mLock);

OMX_ERRORTYPE err = OMX_SetParameter(

mHandle, index, const_cast<void *>(params));

return StatusFromOMXError(err);

}

This is where the OpenMAX API comes in:

/**OMX_Core.h*/

#define OMX_SetParameter( \

hComponent, \

nParamIndex, \

pComponentParameterStructure) \

((OMX_COMPONENTTYPE*)hComponent)->SetParameter( \

hComponent, \

nParamIndex, \

pComponentParameterStructure) /* Macro End */

As the macro shows, the call simply goes through mHandle, which is a pointer to an OMX_COMPONENTTYPE:

typedef struct OMX_COMPONENTTYPE

{

OMX_U32 nSize;

OMX_VERSIONTYPE nVersion;

OMX_PTR pComponentPrivate;

OMX_PTR pApplicationPrivate;

OMX_ERRORTYPE (*GetComponentVersion)(

OMX_IN OMX_HANDLETYPE hComponent,

OMX_OUT OMX_STRING pComponentName,

OMX_OUT OMX_VERSIONTYPE* pComponentVersion,

OMX_OUT OMX_VERSIONTYPE* pSpecVersion,

OMX_OUT OMX_UUIDTYPE* pComponentUUID);

OMX_ERRORTYPE (*SendCommand)(

OMX_IN OMX_HANDLETYPE hComponent,

OMX_IN OMX_COMMANDTYPE Cmd,

OMX_IN OMX_U32 nParam1,

OMX_IN OMX_PTR pCmdData);

OMX_ERRORTYPE (*GetParameter)(

OMX_IN OMX_HANDLETYPE hComponent,

OMX_IN OMX_INDEXTYPE nParamIndex,

OMX_INOUT OMX_PTR pComponentParameterStructure);

OMX_ERRORTYPE (*SetParameter)(

OMX_IN OMX_HANDLETYPE hComponent,

OMX_IN OMX_INDEXTYPE nIndex,

OMX_IN OMX_PTR pComponentParameterStructure);

OMX_ERRORTYPE (*GetConfig)(

OMX_IN OMX_HANDLETYPE hComponent,

OMX_IN OMX_INDEXTYPE nIndex,

OMX_INOUT OMX_PTR pComponentConfigStructure);

OMX_ERRORTYPE (*SetConfig)(

OMX_IN OMX_HANDLETYPE hComponent,

OMX_IN OMX_INDEXTYPE nIndex,

OMX_IN OMX_PTR pComponentConfigStructure);

OMX_ERRORTYPE (*GetExtensionIndex)(

OMX_IN OMX_HANDLETYPE hComponent,

OMX_IN OMX_STRING cParameterName,

OMX_OUT OMX_INDEXTYPE* pIndexType);

OMX_ERRORTYPE (*GetState)(

OMX_IN OMX_HANDLETYPE hComponent,

OMX_OUT OMX_STATETYPE* pState);

OMX_ERRORTYPE (*ComponentTunnelRequest)(

OMX_IN OMX_HANDLETYPE hComp,

OMX_IN OMX_U32 nPort,

OMX_IN OMX_HANDLETYPE hTunneledComp,

OMX_IN OMX_U32 nTunneledPort,

OMX_INOUT OMX_TUNNELSETUPTYPE* pTunnelSetup);

OMX_ERRORTYPE (*UseBuffer)(

OMX_IN OMX_HANDLETYPE hComponent,

OMX_INOUT OMX_BUFFERHEADERTYPE** ppBufferHdr,

OMX_IN OMX_U32 nPortIndex,

OMX_IN OMX_PTR pAppPrivate,

OMX_IN OMX_U32 nSizeBytes,

OMX_IN OMX_U8* pBuffer);

OMX_ERRORTYPE (*AllocateBuffer)(

OMX_IN OMX_HANDLETYPE hComponent,

OMX_INOUT OMX_BUFFERHEADERTYPE** ppBuffer,

OMX_IN OMX_U32 nPortIndex,

OMX_IN OMX_PTR pAppPrivate,

OMX_IN OMX_U32 nSizeBytes);

OMX_ERRORTYPE (*FreeBuffer)(

OMX_IN OMX_HANDLETYPE hComponent,

OMX_IN OMX_U32 nPortIndex,

OMX_IN OMX_BUFFERHEADERTYPE* pBuffer);

OMX_ERRORTYPE (*EmptyThisBuffer)(

OMX_IN OMX_HANDLETYPE hComponent,

OMX_IN OMX_BUFFERHEADERTYPE* pBuffer);

OMX_ERRORTYPE (*FillThisBuffer)(

OMX_IN OMX_HANDLETYPE hComponent,

OMX_IN OMX_BUFFERHEADERTYPE* pBuffer);

OMX_ERRORTYPE (*SetCallbacks)(

OMX_IN OMX_HANDLETYPE hComponent,

OMX_IN OMX_CALLBACKTYPE* pCallbacks,

OMX_IN OMX_PTR pAppData);

OMX_ERRORTYPE (*ComponentDeInit)(

OMX_IN OMX_HANDLETYPE hComponent);

OMX_ERRORTYPE (*UseEGLImage)(

OMX_IN OMX_HANDLETYPE hComponent,

OMX_INOUT OMX_BUFFERHEADERTYPE** ppBufferHdr,

OMX_IN OMX_U32 nPortIndex,

OMX_IN OMX_PTR pAppPrivate,

OMX_IN void* eglImage);

OMX_ERRORTYPE (*ComponentRoleEnum)(

OMX_IN OMX_HANDLETYPE hComponent,

OMX_OUT OMX_U8 *cRole,

OMX_IN OMX_U32 nIndex);

} OMX_COMPONENTTYPE;

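Recall this from the earlier code:

sp<SoftOMXComponent> codec =

(*createSoftOMXComponent)(name, callbacks, appData, component);

This happens when the corresponding component is constructed, for example SoftFFmpegAudioDec: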

SoftFFmpegAudioDec::SoftFFmpegAudioDec(

const char *name,

const OMX_CALLBACKTYPE *callbacks,

OMX_PTR appData,

OMX_COMPONENTTYPE **component)

: SimpleSoftOMXComponent(name, callbacks, appData, component),

mAnchorTimeUs(0),

mNumFramesOutput(0),

mSamplingRate(0),

mOutputPortSettingsChange(NONE),

mInputBufferCount(0),

mAudioConvert(NULL),

mOutFormat(AV_SAMPLE_FMT_S16),

mAudioConvertBuffer(NULL)

{

ALOGV("enter %s()", __FUNCTION__);

mCodecctx = NULL;

mCodec = NULL;

mCodecID = CODEC_ID_NONE;

mNumChannels = 0;

mRequestChannels = 2;

mCodecOpened = 0;

initPorts();

avcodec_register_all();

mCodecctx = avcodec_alloc_context2(AVMEDIA_TYPE_AUDIO);

CHECK(mCodecctx);

ALOGV("exit %s()", __FUNCTION__);

}

It inherits from SimpleSoftOMXComponent:

SimpleSoftOMXComponent::SimpleSoftOMXComponent(

const char *name,

const OMX_CALLBACKTYPE *callbacks,

OMX_PTR appData,

OMX_COMPONENTTYPE **component)

: SoftOMXComponent(name, callbacks, appData, component),

mLooper(new ALooper),

mHandler(new AHandlerReflector<SimpleSoftOMXComponent>(this)),

mState(OMX_StateLoaded),

mTargetState(OMX_StateLoaded) {

mLooper->setName(name);

mLooper->registerHandler(mHandler);

mLooper->start(

false, // runOnCallingThread

false, // canCallJava

ANDROID_PRIORITY_FOREGROUND);

}

SimpleSoftOMXComponent in turn inherits from SoftOMXComponent:

SoftOMXComponent::SoftOMXComponent(

const char *name,

const OMX_CALLBACKTYPE *callbacks,

OMX_PTR appData,

OMX_COMPONENTTYPE **component)

: mName(name),

mCallbacks(callbacks),

mComponent(new OMX_COMPONENTTYPE),

mLibHandle(NULL) {

mComponent->nSize = sizeof(*mComponent);

mComponent->nVersion.s.nVersionMajor = 1;

mComponent->nVersion.s.nVersionMinor = 0;

mComponent->nVersion.s.nRevision = 0;

mComponent->nVersion.s.nStep = 0;

mComponent->pComponentPrivate = this;

mComponent->pApplicationPrivate = appData;

mComponent->GetComponentVersion = NULL;

mComponent->SendCommand = SendCommandWrapper;

mComponent->GetParameter = GetParameterWrapper;

mComponent->SetParameter = SetParameterWrapper;

mComponent->GetConfig = GetConfigWrapper;

mComponent->SetConfig = SetConfigWrapper;

mComponent->GetExtensionIndex = GetExtensionIndexWrapper;

mComponent->GetState = GetStateWrapper;

mComponent->ComponentTunnelRequest = NULL;

mComponent->UseBuffer = UseBufferWrapper;

mComponent->AllocateBuffer = AllocateBufferWrapper;

mComponent->FreeBuffer = FreeBufferWrapper;

mComponent->EmptyThisBuffer = EmptyThisBufferWrapper;

mComponent->FillThisBuffer = FillThisBufferWrapper;

mComponent->SetCallbacks = NULL;

mComponent->ComponentDeInit = NULL;

mComponent->UseEGLImage = NULL;

mComponent->ComponentRoleEnum = NULL;

*component = mComponent;

}

From the line mComponent->SetParameter = SetParameterWrapper; we can see that the call path is:

/**SoftOMXComponent.cpp*/

// static

OMX_ERRORTYPE SoftOMXComponent::SetParameterWrapper(

OMX_HANDLETYPE component,

OMX_INDEXTYPE index,

OMX_PTR params) {

SoftOMXComponent *me =

(SoftOMXComponent *)

((OMX_COMPONENTTYPE *)component)->pComponentPrivate;

return me->setParameter(index, params);

}

OMX_ERRORTYPE SoftOMXComponent::setParameter(

OMX_INDEXTYPE index, const OMX_PTR params) {

return OMX_ErrorUndefined;

}
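The path from the OMX_SetParameter macro to a C++ virtual method can be sketched on its own. This is a minimal sketch under stated assumptions: ComponentType, BaseComponent, RoleComponent, SET_PARAMETER and the error constants are hypothetical, heavily reduced stand-ins for OMX_COMPONENTTYPE, SoftOMXComponent, a concrete subclass, OMX_SetParameter and OMX_ERRORTYPE.

```cpp
#include <cassert>

// Hypothetical stand-ins for the OpenMAX IL error codes.
typedef int ErrorType;
const ErrorType kErrorNone = 0;
const ErrorType kErrorUndefined = -1;
const ErrorType kErrorUnsupportedIndex = -2;

// Reduced stand-in for OMX_COMPONENTTYPE: a C-style function table.
struct ComponentType {
    void *pComponentPrivate;
    ErrorType (*SetParameter)(void *hComponent, int index, void *params);
};

// Mirrors the shape of the OMX_SetParameter macro: call through the handle.
#define SET_PARAMETER(h, index, params) \
    ((ComponentType *)(h))->SetParameter((h), (index), (params))

class BaseComponent {
public:
    explicit BaseComponent(ComponentType **component) {
        mComponent.pComponentPrivate = this;            // back pointer to the object
        mComponent.SetParameter = SetParameterWrapper;  // static trampoline
        *component = &mComponent;
    }
    virtual ~BaseComponent() {}

protected:
    // The base class rejects everything; subclasses override.
    virtual ErrorType setParameter(int, void *) { return kErrorUndefined; }

private:
    static ErrorType SetParameterWrapper(void *h, int index, void *params) {
        assert(h != nullptr);
        BaseComponent *me = static_cast<BaseComponent *>(
                static_cast<ComponentType *>(h)->pComponentPrivate);
        return me->setParameter(index, params);  // virtual dispatch
    }

    ComponentType mComponent;
};

class RoleComponent : public BaseComponent {
public:
    explicit RoleComponent(ComponentType **component)
        : BaseComponent(component), mLastIndex(-1) {}
    int lastIndex() const { return mLastIndex; }

protected:
    ErrorType setParameter(int index, void *) override {
        if (index != 1) return kErrorUnsupportedIndex;  // only index 1 is known
        mLastIndex = index;
        return kErrorNone;
    }

private:
    int mLastIndex;
};
```

A caller that only holds the ComponentType handle reaches RoleComponent::setParameter through the table entry and pComponentPrivate, which is the same shape as the wrapper shown above.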

Because SimpleSoftOMXComponent inherits from SoftOMXComponent, look at the implementation in the subclass SimpleSoftOMXComponent:

OMX_ERRORTYPE SimpleSoftOMXComponent::setParameter(

OMX_INDEXTYPE index, const OMX_PTR params) {

Mutex::Autolock autoLock(mLock);

CHECK(isSetParameterAllowed(index, params));

return internalSetParameter(index, params);

}

OMX_ERRORTYPE SimpleSoftOMXComponent::internalSetParameter(

OMX_INDEXTYPE index, const OMX_PTR params) {

switch (index) {

case OMX_IndexParamPortDefinition:

{

OMX_PARAM_PORTDEFINITIONTYPE *defParams =

(OMX_PARAM_PORTDEFINITIONTYPE *)params;

if (defParams->nPortIndex >= mPorts.size()

|| defParams->nSize

!= sizeof(OMX_PARAM_PORTDEFINITIONTYPE)) {

return OMX_ErrorUndefined;

}

PortInfo *port =

&mPorts.editItemAt(defParams->nPortIndex);

if (defParams->nBufferSize != port->mDef.nBufferSize) {

CHECK_GE(defParams->nBufferSize, port->mDef.nBufferSize);

port->mDef.nBufferSize = defParams->nBufferSize;

}

if (defParams->nBufferCountActual

!= port->mDef.nBufferCountActual) {

CHECK_GE(defParams->nBufferCountActual,

port->mDef.nBufferCountMin);

port->mDef.nBufferCountActual = defParams->nBufferCountActual;

}

return OMX_ErrorNone;

}

default:

return OMX_ErrorUnsupportedIndex;

}

}

Taking SoftFFmpegAudioDec as an example, it inherits from SimpleSoftOMXComponent:

OMX_ERRORTYPE SoftFFmpegAudioDec::internalSetParameter(

OMX_INDEXTYPE index, const OMX_PTR params)

{

ALOGV("enter %s()", __FUNCTION__);

switch (index)

{

case OMX_IndexParamStandardComponentRole:

{

const OMX_PARAM_COMPONENTROLETYPE *roleParams =

(const OMX_PARAM_COMPONENTROLETYPE *)params;

if(0 == strncmp((const char *)roleParams->cRole,

"audio_decoder.aac",

OMX_MAX_STRINGNAME_SIZE - 1))

{

mCodecID = CODEC_ID_AAC;

return OMX_ErrorNone;

}

else if (0 == strncmp((const char *)roleParams->cRole,

"audio_decoder.ffmpeg",

OMX_MAX_STRINGNAME_SIZE - 1))

{

return OMX_ErrorNone;

}

return OMX_ErrorUndefined;

}

case OMX_IndexConfigAudioFfmpeg:

{

OMX_AUDIO_PARAM_FFMPEGCONFIG *FFmpegParams =

(OMX_AUDIO_PARAM_FFMPEGCONFIG *)params;

CHECK(FFmpegParams);

if (FFmpegParams->nPortIndex != 0)

{

ALOGE("output no need to set index %x", OMX_IndexConfigAudioFfmpeg);

return OMX_ErrorUndefined;

}

mSamplingRate = FFmpegParams->nSampleRate;

mNumChannels = FFmpegParams->nChannels;

//if id is 0, it is invalid

mCodecID = FFmpegParams->nAvCodecCtxID;

//init decoder

if (initDecoder(FFmpegParams->pFfmpegFormatCtx) != OMX_ErrorNone)

{

ALOGE("cannot init decoder");

return OMX_ErrorUndefined;

}

return OMX_ErrorNone;

}

case OMX_IndexParamAudioPcm:

{

OMX_AUDIO_PARAM_PCMMODETYPE *pcmParams = (OMX_AUDIO_PARAM_PCMMODETYPE*)params;

mNumChannels = pcmParams->nChannels;

pcmParams->nSamplingRate = mSamplingRate;

/* ignore other parameters */

initDecoder(NULL);

return OMX_ErrorNone;

}

default:

return SimpleSoftOMXComponent::internalSetParameter(index, params);

}

}

OMX_ERRORTYPE SoftFFmpegAudioDec::initDecoder(OMX_PTR formatCtx)

{

ALOGV("enter %s()", __FUNCTION__);

AVCodecContext *pFormatCtx = (AVCodecContext *)formatCtx;

mAudioConvert = NULL;

mOutFormat = AV_SAMPLE_FMT_S16;

mAudioConvertBuffer = NULL;

if (pFormatCtx != NULL)

{

avcodec_copy_context(mCodecctx, pFormatCtx);

mCodecID = mCodecctx->codec_id;

}

mCodecctx->request_channels = mRequestChannels;

mCodec = avcodec_find_decoder((enum CodecID)mCodecID);

if (NULL == mCodec)

{

ALOGE("Unknow codec format");

return OMX_ErrorUndefined;

}

ALOGI("Audio codec name : %s", mCodec->name);

mCodecctx->thread_count = 4;

//for origin extractor with aac codec. Create decoder later.

if (CODEC_ID_AAC == mCodecID && (NULL == mCodecctx->extradata || 0 == mCodecctx->extradata_size))

{

ALOGW("AAC and no extradata, create decoder later!");

return OMX_ErrorNone;

}

if (!mCodec || (avcodec_open(mCodecctx, mCodec) < 0))

{

ALOGE("Open avcodec fail");

return OMX_ErrorUndefined;

}

mCodecOpened = 1;

return OMX_ErrorNone;

}

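The source also shows that SetParameter is called many times during the configure stage. For example, the setComponentRole flow analyzed earlier ends up in the OMX_IndexParamStandardComponentRole case. That completes the analysis of configureCodec in Node 3. What remains is how the kWhatComponentConfigured message is handled: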

{

sp<AMessage> notify = mCodec->mNotify->dup();

notify->setInt32("what", ACodec::kWhatComponentConfigured);

notify->post();

}

void MediaCodec::onMessageReceived(const sp<AMessage> &msg) {

switch (msg->what()) {

case kWhatCodecNotify:

{

int32_t what;

CHECK(msg->findInt32("what", &what));

switch (what) {

...

case ACodec::kWhatComponentConfigured:

{

CHECK_EQ(mState, CONFIGURING);

setState(CONFIGURED);

// reset input surface flag

mHaveInputSurface = false;

(new AMessage)->postReply(mReplyID);

break;

}

...

}

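After configure, what remains is starting the component layer. Let's carry on (this walks the call flow directly; for how each call is dispatched, refer to the analysis of the configure stage):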

state->mDecodec->start()

/**MediaCodec.cpp*/

status_t MediaCodec::start() {

sp<AMessage> msg = new AMessage(kWhatStart, id());

sp<AMessage> response;

return PostAndAwaitResponse(msg, &response);

}

// static

status_t MediaCodec::PostAndAwaitResponse(

const sp<AMessage> &msg, sp<AMessage> *response) {

status_t err = msg->postAndAwaitResponse(response);

if (err != OK) {

return err;

}

if (!(*response)->findInt32("err", &err)) {

err = OK;

}

return err;

}

void MediaCodec::onMessageReceived(const sp<AMessage> &msg) {

switch (msg->what()) {

...

case kWhatStart:

{

uint32_t replyID;

CHECK(msg->senderAwaitsResponse(&replyID));

if (mState != CONFIGURED) {

sp<AMessage> response = new AMessage;

response->setInt32("err", INVALID_OPERATION);

response->postReply(replyID);

break;

}

mReplyID = replyID;

setState(STARTING);

mCodec->initiateStart();

break;

}

...

}

}
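The state checks in the configure and start handlers can be modeled without any messaging. This is a minimal sketch, not MediaCodec itself: CodecStateModel is hypothetical, the value of INVALID_OPERATION is a stand-in, and both the INITIALIZED precondition for configure() and the onStartCompleted() step are assumptions that do not appear in the excerpts above.

```cpp
#include <cassert>

typedef int status_t;
const status_t OK = 0;
const status_t INVALID_OPERATION = -38;  // stand-in value for illustration

// Hypothetical model of the MediaCodec state checks.
class CodecStateModel {
public:
    enum State { INITIALIZED, CONFIGURING, CONFIGURED, STARTING, STARTED };

    CodecStateModel() : mState(INITIALIZED) {}

    // Assumed precondition: configure() is only valid right after init.
    status_t configure() {
        if (mState != INITIALIZED) return INVALID_OPERATION;
        mState = CONFIGURING;
        return OK;
    }

    // Models the kWhatComponentConfigured notification from ACodec.
    void onComponentConfigured() {
        assert(mState == CONFIGURING);
        mState = CONFIGURED;
    }

    // Mirrors the kWhatStart handler: rejected unless CONFIGURED.
    status_t start() {
        if (mState != CONFIGURED) return INVALID_OPERATION;
        mState = STARTING;
        return OK;
    }

    // Assumed completion step for the start request.
    void onStartCompleted() {
        assert(mState == STARTING);
        mState = STARTED;
    }

    State state() const { return mState; }

private:
    State mState;
};
```

Calling start() before the configured notification arrives returns INVALID_OPERATION, which matches the early reply in the kWhatStart case.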

Handling the start message calls initiateStart:

/**ACodec.cpp*/

void ACodec::initiateStart() {

(new AMessage(kWhatStart, id()))->post();

}

bool ACodec::LoadedState::onMessageReceived(const sp<AMessage> &msg) {

bool handled = false;

switch (msg->what()) {

...

case ACodec::kWhatStart:

{

onStart();

handled = true;

break;

}

...

}

}

void ACodec::LoadedState::onStart() {

ALOGV("onStart");

CHECK_EQ(mCodec->mOMX->sendCommand(

mCodec->mNode, OMX_CommandStateSet, OMX_StateIdle),

(status_t)OK);

mCodec->changeState(mCodec->mLoadedToIdleState);

}

/**OMXClient.cpp*/

status_t MuxOMX::sendCommand(

node_id node, OMX_COMMANDTYPE cmd, OMX_S32 param) {

return getOMX(node)->sendCommand(node, cmd, param);

}

The Binder call:

/**OMX.cpp*/

status_t OMX::sendCommand(

node_id node, OMX_COMMANDTYPE cmd, OMX_S32 param) {

return findInstance(node)->sendCommand(cmd, param);

}

/**OMXNodeInstance.cpp*/

status_t OMXNodeInstance::sendCommand(

OMX_COMMANDTYPE cmd, OMX_S32 param) {

const sp<GraphicBufferSource>& bufferSource(getGraphicBufferSource());

if (bufferSource != NULL && cmd == OMX_CommandStateSet) {

if (param == OMX_StateIdle) {

// Initiating transition from Executing -> Idle

// ACodec is waiting for all buffers to be returned, do NOT

// submit any more buffers to the codec.

bufferSource->omxIdle();

} else if (param == OMX_StateLoaded) {

// Initiating transition from Idle/Executing -> Loaded

// Buffers are about to be freed.

bufferSource->omxLoaded();

setGraphicBufferSource(NULL);

}

// fall through

}

Mutex::Autolock autoLock(mLock);

OMX_ERRORTYPE err = OMX_SendCommand(mHandle, cmd, param, NULL);

return StatusFromOMXError(err);

}

sp<GraphicBufferSource> OMXNodeInstance::getGraphicBufferSource() {

Mutex::Autolock autoLock(mGraphicBufferSourceLock);

return mGraphicBufferSource;

}

/**GraphicBufferSource.cpp*/

void GraphicBufferSource::omxIdle() {

ALOGV("omxIdle");

Mutex::Autolock autoLock(mMutex);

if (mExecuting) {

// We are only interested in the transition from executing->idle,

// not loaded->idle.

mExecuting = false;

}

}

#define OMX_SendCommand( \

hComponent, \

Cmd, \

nParam, \

pCmdData) \

((OMX_COMPONENTTYPE*)hComponent)->SendCommand( \

hComponent, \

Cmd, \

nParam, \

pCmdData) /* Macro End */

Taking SoftFFmpegAudioDec as an example, the subclass does not define this method, so look at the parent class SimpleSoftOMXComponent:

OMX_ERRORTYPE SimpleSoftOMXComponent::sendCommand(

OMX_COMMANDTYPE cmd, OMX_U32 param, OMX_PTR data) {

CHECK(data == NULL);

sp<AMessage> msg = new AMessage(kWhatSendCommand, mHandler->id());

msg->setInt32("cmd", cmd);

msg->setInt32("param", param);

msg->post();

return OMX_ErrorNone;

}

void SimpleSoftOMXComponent::onMessageReceived(const sp<AMessage> &msg) {

Mutex::Autolock autoLock(mLock);

uint32_t msgType = msg->what();

ALOGV("msgType = %d", msgType);

switch (msgType) {

case kWhatSendCommand:

{

int32_t cmd, param;

CHECK(msg->findInt32("cmd", &cmd));

CHECK(msg->findInt32("param", &param));

onSendCommand((OMX_COMMANDTYPE)cmd, (OMX_U32)param);

break;

}

...

}

void SimpleSoftOMXComponent::onSendCommand(

OMX_COMMANDTYPE cmd, OMX_U32 param) {

switch (cmd) {

case OMX_CommandStateSet:

{

onChangeState((OMX_STATETYPE)param);

break;

}

case OMX_CommandPortEnable:

case OMX_CommandPortDisable:

{

onPortEnable(param, cmd == OMX_CommandPortEnable);

break;

}

case OMX_CommandFlush:

{

onPortFlush(param, true /* sendFlushComplete */);

break;

}

default:

TRESPASS();

break;

}

}
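Note that sendCommand only posts a message and returns OMX_ErrorNone at once; the command is executed later on the component's looper. A minimal single-threaded sketch of that split follows. CommandLooperModel and its members are hypothetical, and drain() stands in for the looper thread delivering queued messages.

```cpp
#include <cassert>
#include <queue>
#include <vector>

enum Command { kCommandStateSet, kCommandFlush };

struct Message {
    Command cmd;
    int param;
};

// Hypothetical model: commands are queued first and executed later.
class CommandLooperModel {
public:
    CommandLooperModel() : mFlushCount(0) {}

    // Like sendCommand(): enqueue only and report success immediately.
    int sendCommand(Command cmd, int param) {
        Message msg = { cmd, param };
        mQueue.push(msg);
        return 0;
    }

    // Stands in for the looper thread calling onMessageReceived().
    void drain() {
        while (!mQueue.empty()) {
            Message msg = mQueue.front();
            mQueue.pop();
            onSendCommand(msg.cmd, msg.param);
        }
    }

    const std::vector<int> &stateChanges() const { return mStateChanges; }
    int flushCount() const { return mFlushCount; }

private:
    void onSendCommand(Command cmd, int param) {
        switch (cmd) {
            case kCommandStateSet:
                mStateChanges.push_back(param);  // would call onChangeState()
                break;
            case kCommandFlush:
                ++mFlushCount;                   // would call onPortFlush()
                break;
            default:
                assert(false && "unknown command");
        }
    }

    std::queue<Message> mQueue;
    std::vector<int> mStateChanges;
    int mFlushCount;
};
```

Because the return value only says that the command was queued, the caller learns about completion through a separate notification, which is what the OMX_EventCmdComplete notify calls in the code below are for.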

void SimpleSoftOMXComponent::onChangeState(OMX_STATETYPE state) {

// We shouldn't be in a state transition already.

CHECK_EQ((int)mState, (int)mTargetState);

switch (mState) {

case OMX_StateLoaded:

CHECK_EQ((int)state, (int)OMX_StateIdle);

break;

case OMX_StateIdle:

CHECK(state == OMX_StateLoaded || state == OMX_StateExecuting);

break;

case OMX_StateExecuting:

{

CHECK_EQ((int)state, (int)OMX_StateIdle);

for (size_t i = 0; i < mPorts.size(); ++i) {

onPortFlush(i, false /* sendFlushComplete */);

}

mState = OMX_StateIdle;

notify(OMX_EventCmdComplete, OMX_CommandStateSet, state, NULL);

break;

}

default:

TRESPASS();

}

mTargetState = state;

checkTransitions();

}

void SimpleSoftOMXComponent::checkTransitions() {

if (mState != mTargetState) {

bool transitionComplete = true;

if (mState == OMX_StateLoaded) {

CHECK_EQ((int)mTargetState, (int)OMX_StateIdle);

for (size_t i = 0; i < mPorts.size(); ++i) {

const PortInfo &port = mPorts.itemAt(i);

if (port.mDef.bEnabled == OMX_FALSE) {

continue;

}

if (port.mDef.bPopulated == OMX_FALSE) {

transitionComplete = false;

break;

}

}

} else if (mTargetState == OMX_StateLoaded) {

CHECK_EQ((int)mState, (int)OMX_StateIdle);

for (size_t i = 0; i < mPorts.size(); ++i) {

const PortInfo &port = mPorts.itemAt(i);

if (port.mDef.bEnabled == OMX_FALSE) {

continue;

}

size_t n = port.mBuffers.size();

if (n > 0) {

CHECK_LE(n, port.mDef.nBufferCountActual);

if (n == port.mDef.nBufferCountActual) {

CHECK_EQ((int)port.mDef.bPopulated, (int)OMX_TRUE);

} else {

CHECK_EQ((int)port.mDef.bPopulated, (int)OMX_FALSE);

}

transitionComplete = false;

break;

}

}

}

if (transitionComplete) {

mState = mTargetState;

if (mState == OMX_StateLoaded) {

onReset();

}

notify(OMX_EventCmdComplete, OMX_CommandStateSet, mState, NULL);

}

}

for (size_t i = 0; i < mPorts.size(); ++i) {

PortInfo *port = &mPorts.editItemAt(i);

if (port->mTransition == PortInfo::DISABLING) {

if (port->mBuffers.empty()) {

ALOGV("Port %d now disabled.", i);

port->mTransition = PortInfo::NONE;

notify(OMX_EventCmdComplete, OMX_CommandPortDisable, i, NULL);

onPortEnableCompleted(i, false /* enabled */);

}

} else if (port->mTransition == PortInfo::ENABLING) {

if (port->mDef.bPopulated == OMX_TRUE) {

ALOGV("Port %d now enabled.", i);

port->mTransition = PortInfo::NONE;

port->mDef.bEnabled = OMX_TRUE;

notify(OMX_EventCmdComplete, OMX_CommandPortEnable, i, NULL);

onPortEnableCompleted(i, true /* enabled */);

}

}

}

}
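The Loaded to Idle branch of checkTransitions can be sketched in isolation: the transition completes only once every enabled port is populated. This is a minimal sketch; TransitionModel, Port and populatePort are hypothetical, and the idea that populating a port re-runs the check is an assumption about code not shown here.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

enum State { kLoaded, kIdle };

struct Port {
    bool enabled;
    bool populated;
};

// Hypothetical model of the Loaded -> Idle transition only.
class TransitionModel {
public:
    explicit TransitionModel(const std::vector<Port> &ports)
        : mPorts(ports), mState(kLoaded), mTargetState(kLoaded),
          mNotifications(0) {}

    // Like onChangeState(OMX_StateIdle) while in the Loaded state.
    void requestIdle() {
        assert(mState == mTargetState);  // no transition already in flight
        assert(mState == kLoaded);
        mTargetState = kIdle;
        checkTransitions();
    }

    // Assumption: buffer allocation marks a port populated and re-checks.
    void populatePort(size_t i) {
        mPorts.at(i).populated = true;
        checkTransitions();
    }

    State state() const { return mState; }
    int notifications() const { return mNotifications; }

private:
    void checkTransitions() {
        if (mState == mTargetState) return;
        for (size_t i = 0; i < mPorts.size(); ++i) {
            // Disabled ports are skipped; an unpopulated enabled port blocks.
            if (mPorts[i].enabled && !mPorts[i].populated) return;
        }
        mState = mTargetState;
        ++mNotifications;  // stands in for notify(OMX_EventCmdComplete, ...)
    }

    std::vector<Port> mPorts;
    State mState;
    State mTargetState;
    int mNotifications;
};
```

With two enabled ports, requesting Idle leaves the model in Loaded until both ports have been populated, and the completion notification fires exactly once.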

As shown here, start drives a number of state transitions. Next comes decoding:

//start decoder

CHECK_EQ((status_t)OK, state->mDecodec->start());

CHECK_EQ((status_t)OK, state->mDecodec->getInputBuffers(&state->mDecodecInBuffers));

CHECK_EQ((status_t)OK, state->mDecodec->getOutputBuffers(&state->mDecodecOutBuffers));

/**get an available input buffer*/

status_t err = state->mDecodec->dequeueInputBuffer(&index, kTimeout);

const sp<ABuffer> &buffer = state->mDecodecInBuffers.itemAt(index);

/**fill the buffer with data*/

err = extractor->readSampleData(buffer);

/**queue the filled input buffer for decoding*/

err = state->mDecodec->queueInputBuffer(index,buffer->offset(),buffer->size(),timeUs,bufferFlags);

/**dequeue a decoded output buffer*/

err = state->mDecodec->dequeueOutputBuffer(&index, &offset, &size, &presentationTimeUs, &flags,kTimeout);

const sp<ABuffer> &srcBuffer = state->mDecodecOutBuffers.itemAt(index);

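The lines above use the MediaCodec API to decode, where:

mDecodecInBuffers holds the decoder's input buffers;

mDecodecOutBuffers holds the decoder's output buffers.

Fill a buffer from mDecodecInBuffers and push it onto the decode queue, then wait for the decoded data to appear in mDecodecOutBuffers.

For example, if H.264 video frames are filled into mDecodecInBuffers, mDecodecOutBuffers will hold the decoded YUV data, which can then be rendered to a Surface or processed further. This is how the data gets decoded and displayed.

(Readers who find this hard to follow may want to read my article "MediaCodec之Decoder" first.)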

status_t MediaCodec::getInputBuffers(Vector<sp<ABuffer> > *buffers) const {

sp<AMessage> msg = new AMessage(kWhatGetBuffers, id());

msg->setInt32("portIndex", kPortIndexInput);

msg->setPointer("buffers", buffers);

sp<AMessage> response;

return PostAndAwaitResponse(msg, &response);

}

status_t MediaCodec::getOutputBuffers(Vector<sp<ABuffer> > *buffers) const {

sp<AMessage> msg = new AMessage(kWhatGetBuffers, id());

msg->setInt32("portIndex", kPortIndexOutput);

msg->setPointer("buffers", buffers);

sp<AMessage> response;

return PostAndAwaitResponse(msg, &response);

}

void MediaCodec::onMessageReceived(const sp<AMessage> &msg) {

switch (msg->what()) {

...

case kWhatGetBuffers:

{

uint32_t replyID;

CHECK(msg->senderAwaitsResponse(&replyID));

if (mState != STARTED || (mFlags & kFlagStickyError)) {

sp<AMessage> response = new AMessage;

response->setInt32("err", INVALID_OPERATION);

response->postReply(replyID);

break;

}

int32_t portIndex;

CHECK(msg->findInt32("portIndex", &portIndex));

Vector<sp<ABuffer> > *dstBuffers;

CHECK(msg->findPointer("buffers", (void **)&dstBuffers));

dstBuffers->clear();

const Vector<BufferInfo> &srcBuffers = mPortBuffers[portIndex];

for (size_t i = 0; i < srcBuffers.size(); ++i) {

const BufferInfo &info = srcBuffers.itemAt(i);

dstBuffers->push_back(

(portIndex == kPortIndexInput && mCrypto != NULL)

? info.mEncryptedData : info.mData);

}

(new AMessage)->postReply(replyID);

break;

}

...

}

/**MediaCodec.h*/

enum {

kPortIndexInput = 0,

kPortIndexOutput = 1,

};

List<size_t> mAvailPortBuffers[2];

Vector<BufferInfo> mPortBuffers[2];

First we need to understand what mPortBuffers and mAvailPortBuffers are used for.

Let's look at the source:

status_t MediaCodec::dequeueInputBuffer(size_t *index, int64_t timeoutUs) {

sp<AMessage> msg = new AMessage(kWhatDequeueInputBuffer, id());

msg->setInt64("timeoutUs", timeoutUs);

sp<AMessage> response;

status_t err;

if ((err = PostAndAwaitResponse(msg, &response)) != OK) {

return err;

}

CHECK(response->findSize("index", index));

return OK;

}

void MediaCodec::onMessageReceived(const sp<AMessage> &msg) {

switch (msg->what()) {

...

case kWhatDequeueInputBuffer:

{

uint32_t replyID;

CHECK(msg->senderAwaitsResponse(&replyID));

if (mHaveInputSurface) {

ALOGE("dequeueInputBuffer can't be used with input surface");

sp<AMessage> response = new AMessage;

response->setInt32("err", INVALID_OPERATION);

response->postReply(replyID);

break;

}

if (handleDequeueInputBuffer(replyID, true /* new request */)) {

break;

}

int64_t timeoutUs;

CHECK(msg->findInt64("timeoutUs", &timeoutUs));

if (timeoutUs == 0ll) {

sp<AMessage> response = new AMessage;

response->setInt32("err", -EAGAIN);

response->postReply(replyID);

break;

}

mFlags |= kFlagDequeueInputPending;

mDequeueInputReplyID = replyID;

if (timeoutUs > 0ll) {

sp<AMessage> timeoutMsg =

new AMessage(kWhatDequeueInputTimedOut, id());

timeoutMsg->setInt32(

"generation", ++mDequeueInputTimeoutGeneration);

timeoutMsg->post(timeoutUs);

}

break;

}

...

}

bool MediaCodec::handleDequeueInputBuffer(uint32_t replyID, bool newRequest) {

if (mState != STARTED

|| (mFlags & kFlagStickyError)

|| (newRequest && (mFlags & kFlagDequeueInputPending))) {

sp<AMessage> response = new AMessage;

response->setInt32("err", INVALID_OPERATION);

response->postReply(replyID);

return true;

}

ssize_t index = dequeuePortBuffer(kPortIndexInput);

if (index < 0) {

CHECK_EQ(index, -EAGAIN);

return false;

}

sp<AMessage> response = new AMessage;

response->setSize("index", index);

response->postReply(replyID);

return true;

}

ssize_t MediaCodec::dequeuePortBuffer(int32_t portIndex) {

CHECK(portIndex == kPortIndexInput || portIndex == kPortIndexOutput);

List<size_t> *availBuffers = &mAvailPortBuffers[portIndex];

if (availBuffers->empty()) {

return -EAGAIN;

}

size_t index = *availBuffers->begin();

availBuffers->erase(availBuffers->begin());

BufferInfo *info = &mPortBuffers[portIndex].editItemAt(index);

CHECK(!info->mOwnedByClient);

info->mOwnedByClient = true;

return index;

}

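As the code above shows, dequeuePortBuffer takes the index of a free buffer off the front of the mAvailPortBuffers list (the index identifies an entry in mPortBuffers), marks that entry as owned by the client, and returns the index. The caller then uses

const sp<ABuffer> &buffer = state->mCodecInBuffers.itemAt(index);

to obtain the buffer and fill it with data.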
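To make the relationship between the two containers easier to see, here is a minimal standalone sketch of the same bookkeeping written with the C++ standard library. It is not the AOSP implementation: the names PortBufferPool, BufferSlot, dequeue, release and slot are hypothetical, std::vector and std::list stand in for Vector and List, and the release method is an assumption added for illustration, since the excerpt above does not show how an index gets back onto the free list.

```cpp
// Simplified stand-in for MediaCodec's per-port bookkeeping (not the AOSP code).
#include <cassert>
#include <cerrno>
#include <cstddef>
#include <cstdint>
#include <list>
#include <vector>

// Hypothetical type that plays the role of BufferInfo.
struct BufferSlot {
    std::vector<uint8_t> data;   // payload storage
    bool ownedByClient = false;  // true while the caller holds this slot
};

// Hypothetical class that models one port (input or output).
class PortBufferPool {
public:
    PortBufferPool(size_t count, size_t capacity) {
        slots_.resize(count);
        for (size_t i = 0; i < count; ++i) {
            slots_[i].data.resize(capacity);
            avail_.push_back(i);  // every slot starts out free
        }
    }

    // Mirrors dequeuePortBuffer(): pop the first free index, or return -EAGAIN.
    long dequeue() {
        if (avail_.empty()) {
            return -EAGAIN;
        }
        size_t index = avail_.front();
        avail_.pop_front();
        assert(!slots_[index].ownedByClient);
        slots_[index].ownedByClient = true;
        return static_cast<long>(index);
    }

    // Assumption for illustration: hand a slot back and mark it free again.
    void release(size_t index) {
        assert(index < slots_.size() && slots_[index].ownedByClient);
        slots_[index].ownedByClient = false;
        avail_.push_back(index);
    }

    // Access the slot behind an index, like itemAt(index) on the buffer vector.
    BufferSlot &slot(size_t index) { return slots_.at(index); }

private:
    std::vector<BufferSlot> slots_;  // plays the role of mPortBuffers[port]
    std::list<size_t> avail_;        // plays the role of mAvailPortBuffers[port]
};
```

With a pool of two slots, two consecutive dequeue calls hand out both indices, and a third call reports -EAGAIN until one slot is released. The point of the design is that only indices travel between the client and the codec; the buffers themselves stay in one vector, and the free list merely records which entries may be handed out next.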

Once the buffer has been filled, the component has to be told to encode or decode it:

status_t MediaCodec::queueInputBuffer(

size_t index,

size_t offset,

size_t size,

int64_t presentationTimeUs,

uint32_t flags,

AString *errorDetailMsg) {

if (errorDetailMsg != NULL) {

errorDetailMsg->clear();

}

sp<AMessage> msg = new AMessage(kWhatQueueInputBuffer, id());

if (mStats) {

int64_t nowUs = ALooper::GetNowUs();

msg->setInt64("qibtime", nowUs / 1000ll);

}

msg->setSize("index", index);

msg->setSize("offset", offset);

msg->setSize("size", size);

msg->setInt64("timeUs", presentationTimeUs);

msg->setInt32("flags", flags);

msg->setPointer("errorDetailMsg", errorDetailMsg);

sp<AMessage> response;

return PostAndAwaitResponse(msg, &response);

}

void MediaCodec::onMessageReceived(const sp<AMessage> &msg) {

switch (msg->what()) {

...

case kWhatQueueInputBuffer:

{

uint32_t replyID;

CHECK(msg->senderAwaitsResponse(&replyID));

if (mState != STARTED || (mFlags & kFlagStickyError)) {

sp<AMessage> response = new AMessage;

response->setInt32("err", INVALID_OPERATION);

response->postReply(replyID);

break;

}

status_t err = onQueueInputBuffer(msg);

sp<AMessage> response = new AMessage;

response->setInt32("err", err);

response->postReply(replyID);

break;

}

...

}

status_t MediaCodec::onQueueInputBuffer(const sp<AMessage> &msg) {

size_t index;

size_t offset;

size_t size;

int64_t timeUs;

uint32_t flags;

CHECK(msg->findSize("index", &index));

CHECK(msg->findSize("offset", &offset));

CHECK(msg->findInt64("timeUs", &timeUs));

CHECK(msg->findInt32("flags", (int32_t *)&flags));

const CryptoPlugin::SubSample *subSamples;

size_t numSubSamples;

const uint8_t *key;

const uint8_t *iv;

CryptoPlugin::Mode mode = CryptoPlugin::kMode_Unencrypted;

// We allow the simpler queueInputBuffer API to be used even in

// secure mode, by fabricating a single unencrypted subSample.

CryptoPlugin::SubSample ss;

if (msg->findSize("size", &size)) {

if (mCrypto != NULL) {

ss.mNumBytesOfClearData = size;

ss.mNumBytesOfEncryptedData = 0;

subSamples = &ss;

numSubSamples = 1;

key = NULL;

iv = NULL;

}

} else {

if (mCrypto == NULL) {

return -EINVAL;

}

CHECK(msg->findPointer("subSamples", (void **)&subSamples));

CHECK(msg->findSize("numSubSamples", &numSubSamples));

CHECK(msg->findPointer("key", (void **)&key));

CHECK(msg->findPointer("iv", (void **)&iv));

int32_t tmp;

CHECK(msg->findInt32("mode", &tmp));

mode = (CryptoPlugin::Mode)tmp;

size = 0;

for (size_t i = 0; i < numSubSamples; ++i) {

size += subSamples[i].mNumBytesOfClearData;

size += subSamples[i].mNumBytesOfEncryptedData;

}

}

if (index >= mPortBuffers[kPortIndexInput].size()) {

return -ERANGE;

}

// This BufferInfo is in fact the same buffer that was handed out by dequeueInputBuffer