Host planning:

| Hostname | Role | IP |
| --- | --- | --- |
| hdss7-11.host.com | k8s proxy node 1, ZK1 | 10.4.7.11 |
| hdss7-12.host.com | k8s proxy node 2, ZK2 | 10.4.7.12 |
| hdss7-21.host.com | k8s compute node 1, ZK3 | 10.4.7.21 |
| hdss7-22.host.com | k8s compute node 2, Jenkins | 10.4.7.22 |
| hdss7-200.host.com | k8s ops node 1, Harbor | 10.4.7.200 |

Deploy ZooKeeper:

Install JDK 1.8 (on all three machines that carry the ZK role):

[root@hdss7-12 tmp]# mkdir /usr/java

[root@hdss7-12 tmp]# tar xf jdk-8u221-linux-x64.tar.gz -C /usr/java/

[root@hdss7-12 tmp]# ln -s /usr/java/jdk1.8.0_221/ /usr/java/jdk

[root@hdss7-12 tmp]# cd /usr/java/

[root@hdss7-12 java]# ll

total 0

lrwxrwxrwx 1 root root 23 Mar 28 16:49 jdk -> /usr/java/jdk1.8.0_221/

drwxr-xr-x 7 10 143 245 Jul 4 2019 jdk1.8.0_221

[root@hdss7-12 java]# tail -n 3 /etc/profile

export JAVA_HOME=/usr/java/jdk

export PATH=$JAVA_HOME/bin:$PATH

export CLASSPATH=$CLASSPATH:$JAVA_HOME/lib:$JAVA_HOME/lib/tools.jar

[root@hdss7-12 java]# source /etc/profile

[root@hdss7-12 java]# java -version

java version "1.8.0_221"

Java(TM) SE Runtime Environment (build 1.8.0_221-b11)

Java HotSpot(TM) 64-Bit Server VM (build 25.221-b11, mixed mode)

Install ZooKeeper:

[root@hdss7-11 tmp]# tar xf zookeeper-3.4.14.tar.gz -C /opt/

[root@hdss7-11 tmp]# ln -s /opt/zookeeper-3.4.14/ /opt/zookeeper

[root@hdss7-11 tmp]# cd /opt/

[root@hdss7-11 opt]# ls

zookeeper zookeeper-3.4.14

[root@hdss7-11 opt]# ll

total 4

lrwxrwxrwx 1 root root 22 Mar 28 16:53 zookeeper -> /opt/zookeeper-3.4.14/

drwxr-xr-x 14 2002 2002 4096 Mar 7 2019 zookeeper-3.4.14

[root@hdss7-11 opt]# mkdir -pv /data/zookeeper/data /data/zookeeper/logs

mkdir: created directory "/data"

mkdir: created directory "/data/zookeeper"

mkdir: created directory "/data/zookeeper/data"

mkdir: created directory "/data/zookeeper/logs"

[root@hdss7-11 opt]# cd /data/

[root@hdss7-11 data]# ll

total 0

drwxr-xr-x 4 root root 30 Mar 28 16:54 zookeeper

[root@hdss7-11 data]# cd zookeeper/

[root@hdss7-11 zookeeper]# ls

data logs

[root@hdss7-11 zookeeper]# ll

total 0

drwxr-xr-x 2 root root 6 Mar 28 16:54 data

drwxr-xr-x 2 root root 6 Mar 28 16:54 logs

[root@hdss7-11 zookeeper]# vi /opt/zookeeper/conf/zoo.cfg

[root@hdss7-11 zookeeper]# cat /opt/zookeeper/conf/zoo.cfg

tickTime=2000

initLimit=10

syncLimit=5

dataDir=/data/zookeeper/data

dataLogDir=/data/zookeeper/logs

clientPort=2181

server.1=zk1.od.com:2888:3888

server.2=zk2.od.com:2888:3888

server.3=zk3.od.com:2888:3888
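The `server.N` lines follow a fixed pattern, so the same file can be generated once and copied to all three hosts instead of being typed by hand. A minimal sketch, assuming the hostnames and ports shown above; the function name `render_zoo_cfg` is hypothetical and not part of ZooKeeper:

```shell
#!/bin/bash
# Print a zoo.cfg for the ensemble described above.
# Arguments: one hostname per ZooKeeper server, in myid order.
render_zoo_cfg() {
    cat <<'EOF'
tickTime=2000
initLimit=10
syncLimit=5
dataDir=/data/zookeeper/data
dataLogDir=/data/zookeeper/logs
clientPort=2181
EOF
    local id=1
    local host
    for host in "$@"; do
        # 2888 is the quorum port, 3888 the leader-election port.
        echo "server.${id}=${host}:2888:3888"
        id=$((id + 1))
    done
}

render_zoo_cfg zk1.od.com zk2.od.com zk3.od.com
```

Redirecting the output to `/opt/zookeeper/conf/zoo.cfg` on each machine would reproduce the file shown above.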

Configure name resolution in the internal DNS:

[root@hdss7-11 named]# cat od.com.zone

$ORIGIN od.com.
$TTL 600 ; 10 minutes
@       IN SOA  dns.od.com. dnsadmin.od.com. (
                2019111006 ; serial
                10800      ; refresh (3 hours)
                900        ; retry (15 minutes)
                604800     ; expire (1 week)
                86400      ; minimum (1 day)
                )
        NS      dns.od.com.
$TTL 60 ; 1 minute
dns        A    10.4.7.11
harbor     A    10.4.7.200
k8s-yaml   A    10.4.7.200
traefik    A    10.4.7.11
dashboard  A    10.4.7.11
zk1        A    10.4.7.11
zk2        A    10.4.7.12
zk3        A    10.4.7.21

[root@hdss7-11 named]# systemctl restart named
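Each time records are added to the zone, the SOA serial has to increase, otherwise secondaries and caches may keep the old data. A minimal sketch of bumping it with a script, assuming the serial sits on its own line followed by `; serial` as in the file above; `bump_serial` is a hypothetical helper and the temporary file only stands in for `od.com.zone`:

```shell
#!/bin/bash
# Increment the SOA serial of a BIND zone file in place and print the new value.
# Assumes GNU sed (for -i) and a line of the form "<digits> ; serial".
bump_serial() {
    local zone_file="$1" old new
    old="$(sed -n 's/^[[:space:]]*\([0-9][0-9]*\)[[:space:]]*;[[:space:]]*serial.*/\1/p' "$zone_file" | head -n 1)"
    if [ -z "$old" ]; then
        echo "no serial found in $zone_file" >&2
        return 1
    fi
    new=$((old + 1))
    sed -i "s/^\([[:space:]]*\)${old}\([[:space:]]*;[[:space:]]*serial\)/\1${new}\2/" "$zone_file"
    echo "$new"
}

# Demonstration on a throwaway file.
zone="$(mktemp)"
printf '%s\n' '@ IN SOA dns.od.com. dnsadmin.od.com. (' '2019111006 ; serial' ')' > "$zone"
bump_serial "$zone"
rm -f "$zone"
```

On the real server it is worth running `named-checkzone od.com od.com.zone` after editing and before restarting `named`.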

Configure myid:

[root@hdss7-11 named]# cat /data/zookeeper/data/myid

1

[root@hdss7-12 zookeeper]# cat /data/zookeeper/data/myid

2

[root@hdss7-21 zookeeper]# cat /data/zookeeper/data/myid

3
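The value in each `myid` file has to match the `N` of that host's `server.N` line in `zoo.cfg`. The transcript only shows the finished files, so the following is a sketch of one way to write them, assuming the host-to-id mapping above; `myid_for_host` is a hypothetical helper:

```shell
#!/bin/bash
# Map a host name (short or fully qualified) to its ZooKeeper id.
myid_for_host() {
    case "${1%%.*}" in
        hdss7-11) echo 1 ;;
        hdss7-12) echo 2 ;;
        hdss7-21) echo 3 ;;
        *) echo "unknown ZooKeeper host: $1" >&2; return 1 ;;
    esac
}

# On each ZK machine one would run something like:
#   myid_for_host "$(hostname)" > /data/zookeeper/data/myid
myid_for_host hdss7-12.host.com
```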

Start the service (run this on each of the three ZK machines):

[root@hdss7-11 named]# /opt/zookeeper/bin/zkServer.sh start

ZooKeeper JMX enabled by default

Using config: /opt/zookeeper/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED
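`STARTED` only means the process was launched. Once all three nodes are up, `zkServer.sh status` should report one leader and two followers. A small sketch that extracts the mode from that command's output; `zk_mode` is a hypothetical helper and the sample text piped in below is illustrative rather than captured from these hosts:

```shell
#!/bin/bash
# Read `zkServer.sh status` output on stdin and print the reported mode.
zk_mode() {
    sed -n 's/^Mode: //p'
}

# Illustrative input in the format printed by ZooKeeper 3.4.x.
printf '%s\n' \
    'ZooKeeper JMX enabled by default' \
    'Using config: /opt/zookeeper/bin/../conf/zoo.cfg' \
    'Mode: follower' | zk_mode
```

On a real node the call would look like `/opt/zookeeper/bin/zkServer.sh status 2>&1 | zk_mode`.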

Deploy Jenkins:

Prepare the image:

docker pull jenkins/jenkins:2.190.3

[root@hdss7-200 ~]# docker images|grep jenkins

jenkins/jenkins 2.190.3 22b8b9a84dbe 4 months ago 568MB

[root@hdss7-200 ~]# docker tag 22b8b9a84dbe harbor.od.com/public/jenkins:v2.190.3

[root@hdss7-200 ~]# docker push harbor.od.com/public/jenkins:v2.190.3

The push refers to repository [harbor.od.com/public/jenkins]

Generate an SSH key:

[root@hdss7-200 ~]# ssh-keygen -t rsa -b 2048 -C "[email protected]" -N "" -f /root/.ssh/id_rsa

Write a custom Dockerfile:

[root@hdss7-200 jenkins]# pwd

/data/dockerfile/jenkins

[root@hdss7-200 jenkins]# cat Dockerfile

FROM harbor.od.com/public/jenkins:v2.190.3
USER root
RUN /bin/cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime &&\
    echo 'Asia/Shanghai' >/etc/timezone
ADD id_rsa /root/.ssh/id_rsa
ADD config.json /root/.docker/config.json
ADD get-docker.sh /get-docker.sh
RUN echo " StrictHostKeyChecking no" >> /etc/ssh/ssh_config &&\
    /get-docker.sh

Prepare the build context before building: create the directory and copy in the three files that the Dockerfile references with ADD.

[root@hdss7-200 ~]# mkdir -p /data/dockerfile/jenkins

[root@hdss7-200 jenkins]# cp /root/.docker/config.json .

[root@hdss7-200 jenkins]# cp /root/.ssh/id_rsa .

[root@hdss7-200 jenkins]# curl -fsSL get.docker.com -o get-docker.sh

[root@hdss7-200 jenkins]# chmod +x get-docker.sh
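`docker build` fails partway through if any file named in an `ADD` instruction is missing from the build directory, so a quick check beforehand can save a rebuild. A minimal sketch; `check_build_context` is a hypothetical helper and the temporary directory stands in for `/data/dockerfile/jenkins`:

```shell
#!/bin/bash
# Report every expected file that is missing from a build directory.
# Returns 0 when all files are present, 1 otherwise.
check_build_context() {
    local dir="$1"
    shift
    local missing=0
    local f
    for f in "$@"; do
        if [ ! -f "$dir/$f" ]; then
            echo "missing: $dir/$f" >&2
            missing=1
        fi
    done
    return "$missing"
}

# Demonstration: get-docker.sh is deliberately left out.
ctx="$(mktemp -d)"
touch "$ctx/Dockerfile" "$ctx/id_rsa" "$ctx/config.json"
check_build_context "$ctx" Dockerfile id_rsa config.json get-docker.sh \
    || echo "build context incomplete"
rm -rf "$ctx"
```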

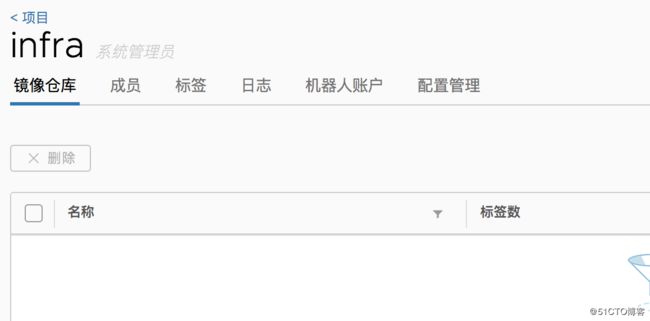

Create a private project named infra in Harbor, because the image we build contains our private key:

[root@hdss7-200 jenkins]# docker build . -t harbor.od.com/infra/jenkins:v2.190.3

docker push harbor.od.com/infra/jenkins:v2.190.3

[root@hdss7-21 ~]# kubectl create ns infra

namespace/infra created

On any compute node, create an image pull secret in the infra namespace:

[root@hdss7-21 ~]# kubectl create secret docker-registry harbor --docker-server=harbor.od.com --docker-username=admin --docker-password=Harbor12345 -n infra

secret/harbor created
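A `docker-registry` secret stores a Docker config JSON whose `auth` field is the base64 encoding of `username:password`, the same value that `/root/.docker/config.json` holds after `docker login`. The sketch below computes that value for the credentials used above; `registry_auth` is a hypothetical helper:

```shell
#!/bin/bash
# Compute the "auth" value stored for a registry username/password pair.
registry_auth() {
    printf '%s:%s' "$1" "$2" | base64 | tr -d '\n'
}

registry_auth admin Harbor12345
echo
```

To compare against the cluster, the stored config can be decoded with `kubectl -n infra get secret harbor -o jsonpath='{.data.\.dockerconfigjson}' | base64 -d`. Because base64 is an encoding and not encryption, anyone who can read the secret can recover the password.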

Configure NFS shared storage:

Install nfs-utils on the three machines hdss7-21, hdss7-22 and hdss7-200:

yum install -y nfs-utils

On host hdss7-200:

[root@hdss7-200 ~]# mkdir -p /data/nfs-volume

[root@hdss7-200 ~]# ll

total 0

[root@hdss7-200 ~]# grep /data/nfs-volume /etc/exports

/data/nfs-volume 10.4.7.0/24(rw,no_root_squash)

[root@hdss7-200 ~]# systemctl start nfs

[root@hdss7-200 ~]# systemctl enable nfs

Created symlink from /etc/systemd/system/multi-user.target.wants/nfs-server.service to /usr/lib/systemd/system/nfs-server.service.
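The transcript shows the finished `/etc/exports` entry but not how it was added. A sketch of adding it idempotently, so that re-running the setup does not duplicate the line; `ensure_export` is a hypothetical helper and the temporary file stands in for `/etc/exports`:

```shell
#!/bin/bash
# Append an export line only if the path is not already listed in the file.
ensure_export() {
    local exports_file="$1" path="$2" options="$3"
    if grep -q "^${path}[[:space:]]" "$exports_file" 2>/dev/null; then
        return 0
    fi
    echo "${path} ${options}" >> "$exports_file"
}

# Demonstration: the second call is a no-op.
exports="$(mktemp)"
ensure_export "$exports" /data/nfs-volume '10.4.7.0/24(rw,no_root_squash)'
ensure_export "$exports" /data/nfs-volume '10.4.7.0/24(rw,no_root_squash)'
cat "$exports"
rm -f "$exports"
```

If the NFS server is already running when the file changes, `exportfs -r` re-reads it without a restart.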

Prepare the resource manifests:

[root@hdss7-200 k8s-yaml]# mkdir /data/k8s-yaml/jenkins

[root@hdss7-200 jenkins]# cat dp.yaml

kind: Deployment
apiVersion: extensions/v1beta1
metadata:
  name: jenkins
  namespace: infra
  labels:
    name: jenkins
spec:
  replicas: 1
  selector:
    matchLabels:
      name: jenkins
  template:
    metadata:
      labels:
        app: jenkins
        name: jenkins
    spec:
      volumes:
      - name: data
        nfs:
          server: hdss7-200
          path: /data/nfs-volume/jenkins_home
      - name: docker
        hostPath:
          path: /run/docker.sock
          type: ''
      containers:
      - name: jenkins
        image: harbor.od.com/infra/jenkins:v2.190.3
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 8080
          protocol: TCP
        env:
        - name: JAVA_OPTS
          value: -Xmx512m -Xms512m
        volumeMounts:
        - name: data
          mountPath: /var/jenkins_home
        - name: docker
          mountPath: /run/docker.sock
      imagePullSecrets:
      - name: harbor
      securityContext:
        runAsUser: 0
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
      maxSurge: 1
  revisionHistoryLimit: 7
  progressDeadlineSeconds: 600

[root@hdss7-200 jenkins]# cat svc.yaml

kind: Service
apiVersion: v1
metadata:
  name: jenkins
  namespace: infra
spec:
  ports:
  - protocol: TCP
    port: 80
    targetPort: 8080
  selector:
    app: jenkins

[root@hdss7-200 jenkins]# cat ingress.yaml

kind: Ingress
apiVersion: extensions/v1beta1
metadata:
  name: jenkins
  namespace: infra
spec:
  rules:
  - host: jenkins.od.com
    http:
      paths:
      - path: /
        backend:
          serviceName: jenkins
          servicePort: 80

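Before applying the manifests, create the directory on the NFS server that the Deployment mounts as /var/jenkins_home:

[root@hdss7-200 ~]# mkdir -p /data/nfs-volume/jenkins_home

Apply the resource manifests: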

[root@hdss7-22 ~]# kubectl apply -f http://k8s-yaml.od.com/jenkins/dp.yaml

deployment.extensions/jenkins created

[root@hdss7-22 ~]# kubectl apply -f http://k8s-yaml.od.com/jenkins/svc.yaml

service/jenkins created

[root@hdss7-22 ~]# kubectl apply -f http://k8s-yaml.od.com/jenkins/ingress.yaml

ingress.extensions/jenkins created

[root@hdss7-22 ~]# kubectl -n infra get pod

NAME READY STATUS RESTARTS AGE

jenkins-54b8469cf9-fcmvn 0/1 ContainerCreating 0 18s

[root@hdss7-22 ~]# kubectl -n infra get all

NAME READY STATUS RESTARTS AGE

pod/jenkins-54b8469cf9-fcmvn 0/1 ContainerCreating 0 28s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/jenkins ClusterIP 192.168.46.180

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/jenkins 0/1 1 0 28s

NAME DESIRED CURRENT READY AGE

replicaset.apps/jenkins-54b8469cf9 1 1 0 28s

The dashboard shows that the pod is now Running.

Check whether data has appeared in the NFS directory:

[root@hdss7-200 ~]# cd /data/nfs-volume/jenkins_home/

[root@hdss7-200 jenkins_home]# ls

config.xml hudson.model.UpdateCenter.xml jenkins.install.UpgradeWizard.state jobs nodeMonitors.xml plugins secret.key.not-so-secret updates users

copy_reference_file.log identity.key.enc jenkins.telemetry.Correlator.xml logs nodes secret.key secrets userContent war

Configure the domain name:

[root@hdss7-11 named]# cat od.com.zone

$ORIGIN od.com.
$TTL 600 ; 10 minutes
@       IN SOA  dns.od.com. dnsadmin.od.com. (
                2019111007 ; serial
                10800      ; refresh (3 hours)
                900        ; retry (15 minutes)
                604800     ; expire (1 week)
                86400      ; minimum (1 day)
                )
        NS      dns.od.com.
$TTL 60 ; 1 minute
dns        A    10.4.7.11
harbor     A    10.4.7.200
k8s-yaml   A    10.4.7.200
traefik    A    10.4.7.11
dashboard  A    10.4.7.11
zk1        A    10.4.7.11
zk2        A    10.4.7.12
zk3        A    10.4.7.21
jenkins    A    10.4.7.11

[root@hdss7-11 named]# systemctl restart named

[root@hdss7-11 named]# dig jenkins.od.com +short

10.4.7.11

创建管理员用户:

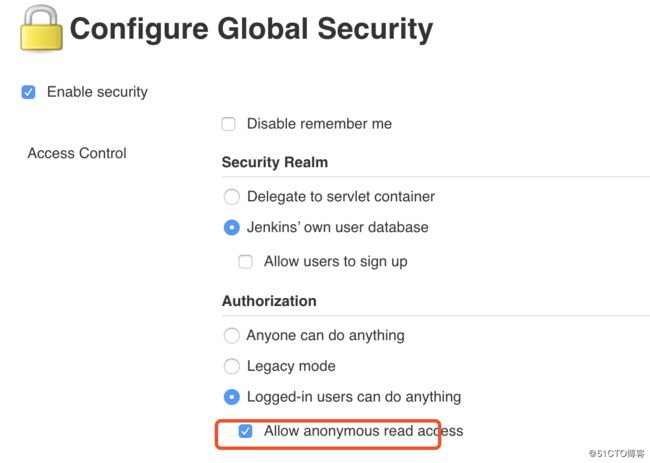

jenkins相关配置:

允许匿名用户访问,但是无法配置

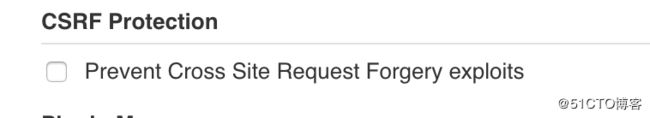

跨请请求保护取消掉

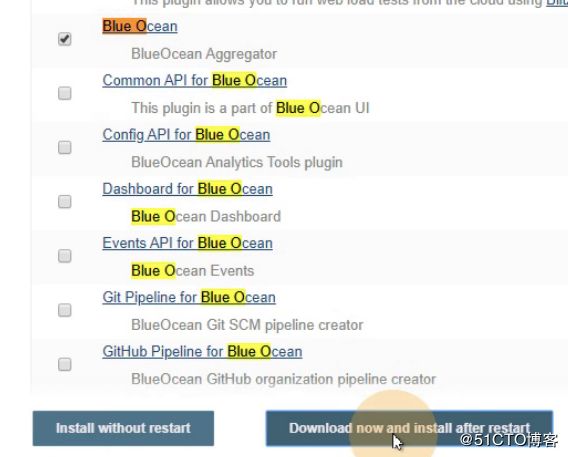

然后我们开始装插件;

检查工作,验证jenkins的权限是否满足要求:

[root@hdss7-21 ~]# kubectl -n infra get pod

NAME READY STATUS RESTARTS AGE

jenkins-54b8469cf9-fcmvn 1/1 Running 0 154m

[root@hdss7-21 ~]# kubectl exec -it jenkins-54b8469cf9-fcmvn -n infra bash

root@jenkins-54b8469cf9-fcmvn:/# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

9323b9ff7b8d harbor.od.com/infra/jenkins "/sbin/tini -- /usr/…" 3 hours ago Up 3 hours k8s_jenkins_jenkins-54b8469cf9-fcmvn_infra_cec301b8-63fa-4140-a87c-a408ccfcc0e1_0

d36461637742 harbor.od.com/public/pause:latest "/pause" 3 hours ago Up 3 hours k8s_POD_jenkins-54b8469cf9-fcmvn_infra_cec301b8-63fa-4140-a87c-a408ccfcc0e1_0

396862e4adb4 harbor.od.com/public/coredns "/coredns -conf /etc…" 5 hours ago Up 5 hours k8s_coredns_coredns-6b6c4f9648-mx6d5_kube-system_35eb5c7b-9c44-4c5e-8a8a-62dca52d9932_0

1c8ef9c66894 84581e99d807 "nginx -g 'daemon of…" 5 hours ago Up 5 hours k8s_nginx_nginx-dp-5dfc689474-4dgx5_kube-public_2cad7380-3abf-40f3-a190-00b09a23c92e_0

5214dcb450b4 harbor.od.com/public/pause:latest "/pause" 5 hours ago Up 5 hours k8s_POD_coredns-6b6c4f9648-mx6d5_kube-system_35eb5c7b-9c44-4c5e-8a8a-62dca52d9932_0

83977f4d7cd2 harbor.od.com/public/pause:latest "/pause" 5 hours ago Up 5 hours k8s_POD_nginx-dp-5dfc689474-4dgx5_kube-public_2cad7380-3abf-40f3-a190-00b09a23c92e_0

4b12e5744a9f harbor.od.com/public/dashboard "/dashboard --insecu…" 7 hours ago Up 7 hours k8s_kubernetes-dashboard_kubernetes-dashboard-76dcdb4677-vhf5p_kube-system_9ed0d3e8-fa2a-4964-bbd5-bd504c15e0bc_0

efe266c6a732 harbor.od.com/public/pause:latest "/pause" 7 hours ago Up 7 hours k8s_POD_kubernetes-dashboard-76dcdb4677-vhf5p_kube-system_9ed0d3e8-fa2a-4964-bbd5-bd504c15e0bc_0

1a3a504ac4f0 harbor.od.com/public/traefik "/entrypoint.sh --ap…" 11 hours ago Up 11 hours k8s_traefik-ingress_traefik-ingress-4pdm5_kube-system_8d6fb147-074c-46b3-b5a0-7cff176671ec_0

ae3d9bfee7ba harbor.od.com/public/pause:latest "/pause" 11 hours ago Up 11 hours 0.0.0.0:81->80/tcp k8s_POD_traefik-ingress-4pdm5_kube-system_8d6fb147-074c-46b3-b5a0-7cff176671ec_61

b19e870425c2 harbor.od.com/public/nginx "nginx -g 'daemon of…" 23 hours ago Up 23 hours k8s_my-nginx_nginx-ds-rxfqd_default_c416e0e3-e564-4548-971d-fe565ee2cb31_0

7162a04de248 harbor.od.com/public/pause:latest "/pause" 23 hours ago Up 23 hours k8s_POD_nginx-ds-rxfqd_default_c416e0e3-e564-4548-971d-fe565ee2cb31_0

root@jenkins-54b8469cf9-fcmvn:/# ssh -i /root/.ssh/id_rsa -T git@gitee.com

Warning: Permanently added 'gitee.com,212.64.62.174' (ECDSA) to the list of known hosts.

Hi StanleyWang (DeployKey)! You've successfully authenticated, but GITEE.COM does not provide shell access.

Note: Perhaps the current use is DeployKey.

Note: DeployKey only supports pull/fetch operations

Deploy Maven:

https://archive.apache.org/dist/maven/maven-3/

[root@hdss7-200 tmp]# mkdir /data/nfs-volume/jenkins_home/maven-3.6.1-8u232

[root@hdss7-200 tmp]# tar -xf apache-maven-3.6.1-bin.tar.gz -C /data/nfs-volume/jenkins_home/maven-3.6.1-8u232/

[root@hdss7-200 maven-3.6.1-8u232]# mv apache-maven-3.6.1/* .

[root@hdss7-200 maven-3.6.1-8u232]# ll

total 28

drwxr-xr-x 2 root root 97 Mar 28 20:50 bin

drwxr-xr-x 2 root root 42 Mar 28 20:50 boot

drwxr-xr-x 3 501 games 63 Apr 5 2019 conf

drwxr-xr-x 4 501 games 4096 Mar 28 20:50 lib

-rw-r--r-- 1 501 games 13437 Apr 5 2019 LICENSE

-rw-r--r-- 1 501 games 182 Apr 5 2019 NOTICE

-rw-r--r-- 1 501 games 2533 Apr 5 2019 README.txt

Add a mirror repository:

[root@hdss7-200 maven-3.6.1-8u232]# vim conf/settings.xml
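The edit made in `settings.xml` is not shown here. In general a mirror is declared as a `<mirror>` element inside the file's `<mirrors>` section. The sketch below only prints such an element; `render_mirror` is a hypothetical helper, and the id and URL are placeholders that must be replaced with a real mirror:

```shell
#!/bin/bash
# Print a <mirror> element for Maven's conf/settings.xml.
# The id and URL passed in are placeholders, not values taken from this guide.
render_mirror() {
    local id="$1" url="$2"
    cat <<EOF
<mirror>
  <id>${id}</id>
  <mirrorOf>central</mirrorOf>
  <name>${id} mirror of central</name>
  <url>${url}</url>
</mirror>
EOF
}

render_mirror example-mirror https://maven.example.com/repository/public
```

The printed element would be pasted between `<mirrors>` and `</mirrors>` in `conf/settings.xml`.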

Build the base runtime image for the Dubbo microservices:

[root@hdss7-200 jre8]# docker pull docker.io/stanleyws/jre8:8u112

8u112: Pulling from stanleyws/jre8

cd9a7cbe58f4: Pull complete

8372fab2fcdf: Pull complete

54746b802c92: Pull complete

969413759d76: Pull complete

3a44edd3f51d: Pull complete

Digest: sha256:921225313d0ae6ce26eac31fc36b5ba8a0a841ea4bd4c94e2a167a9a3eb74364

Status: Downloaded newer image for stanleyws/jre8:8u112

docker.io/stanleyws/jre8:8u112

[root@hdss7-200 jre8]# docker images|grep jre

stanleyws/jre8 8u112 fa3a085d6ef1 2 years ago 363MB

[root@hdss7-200 jre8]# docker tag fa3a085d6ef1 harbor.od.com/public/jre:8u112

[root@hdss7-200 jre8]# docker push harbor.od.com/public/jre:8u112

The push refers to repository [harbor.od.com/public/jre]

0690f10a63a5: Pushed

c843b2cf4e12: Pushed

fddd8887b725: Pushed

42052a19230c: Pushed

8d4d1ab5ff74: Pushed

8u112: digest: sha256:733087bae1f15d492307fca1f668b3a5747045aad6af06821e3f64755268ed8e size: 1367

mkdir -p /data/dockerfile/jre8

[root@hdss7-200 jre8]# cat Dockerfile

FROM harbor.od.com/public/jre:8u112
RUN /bin/cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime &&\
    echo 'Asia/Shanghai' >/etc/timezone
ADD config.yml /opt/prom/config.yml
ADD jmx_javaagent-0.3.1.jar /opt/prom/
WORKDIR /opt/project_dir
ADD entrypoint.sh /entrypoint.sh
CMD ["/entrypoint.sh"]

wget https://repo1.maven.org/maven2/io/prometheus/jmx/jmx_prometheus_javaagent/0.3.1/jmx_prometheus_javaagent-0.3.1.jar -O jmx_javaagent-0.3.1.jar

[root@hdss7-200 jre8]# cat config.yml

---

rules:

- pattern: '.*'

[root@hdss7-200 jre8]# cat entrypoint.sh

#!/bin/sh

M_OPTS="-Duser.timezone=Asia/Shanghai -javaagent:/opt/prom/jmx_javaagent-0.3.1.jar=$(hostname -i):${M_PORT:-"12346"}:/opt/prom/config.yml"

C_OPTS=${C_OPTS}

JAR_BALL=${JAR_BALL}

exec java -jar ${M_OPTS} ${C_OPTS} ${JAR_BALL}
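The `-javaagent` option has the form `jar=host:port:config`, and `${M_PORT:-"12346"}` falls back to port 12346 when `M_PORT` is not set in the pod's environment. The sketch below rebuilds the same string outside a container so the expansion can be inspected; `build_m_opts` is a hypothetical helper, and the address argument replaces `$(hostname -i)` with a made-up pod IP:

```shell
#!/bin/bash
# Rebuild the option string from entrypoint.sh, taking the listen address as
# an argument instead of calling `hostname -i`.
build_m_opts() {
    local addr="$1"
    echo "-Duser.timezone=Asia/Shanghai -javaagent:/opt/prom/jmx_javaagent-0.3.1.jar=${addr}:${M_PORT:-"12346"}:/opt/prom/config.yml"
}

unset M_PORT
build_m_opts 172.7.21.5              # M_PORT unset: default port 12346
M_PORT=9404 build_m_opts 172.7.21.5  # M_PORT set: port 9404
```

In a Deployment, `M_PORT`, `C_OPTS` and `JAR_BALL` would be supplied through the container's `env` list.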

Create a project named base in Harbor:

[root@hdss7-200 jre8]# docker build . -t harbor.od.com/base/jre8:8u112

Sending build context to Docker daemon 372.2kB

Step 1/7 : FROM harbor.od.com/public/jre:8u112

---> fa3a085d6ef1

Step 2/7 : RUN /bin/cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime && echo 'Asia/Shanghai' >/etc/timezone

---> Running in c09cb027a946

Removing intermediate container c09cb027a946

---> a44bd8128b35

Step 3/7 : ADD config.yml /opt/prom/config.yml

---> 1c2456f896dc

Step 4/7 : ADD jmx_javaagent-0.3.1.jar /opt/prom/

---> 7842c2c3a6e7

Step 5/7 : WORKDIR /opt/project_dir

---> Running in 7de1f27b3bc7

Removing intermediate container 7de1f27b3bc7

---> ad549e5ceb0c

Step 6/7 : ADD entrypoint.sh /entrypoint.sh

---> 38db59b88afa

Step 7/7 : CMD ["/entrypoint.sh"]

---> Running in 4aeb8ae2828e

Removing intermediate container 4aeb8ae2828e

---> 1c6b29d2c08e

Successfully built 1c6b29d2c08e

Successfully tagged harbor.od.com/base/jre8:8u112

[root@hdss7-200 jre8]# docker push harbor.od.com/base/jre8:8u112

The push refers to repository [harbor.od.com/base/jre8]

896951e302ac: Pushed

2451cd6c54e0: Pushed

0f922a86702b: Pushed

2d7f130363ca: Pushed

6d952b139383: Pushed

0690f10a63a5: Mounted from public/jre

c843b2cf4e12: Mounted from public/jre

fddd8887b725: Mounted from public/jre

42052a19230c: Mounted from public/jre

8d4d1ab5ff74: Mounted from public/jre

8u112: digest: sha256:e6e440e2f322be8da2a5e4935d937eeacd8a99b826d514284074899f020ee08f size: 2405

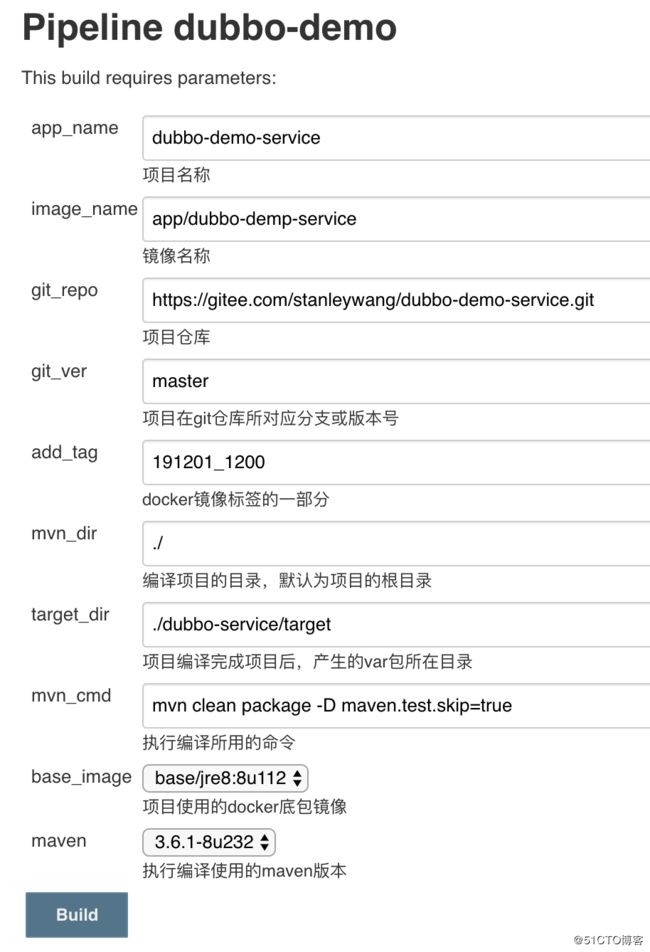

配置jenkins流水线:

创建项目后,进入配置

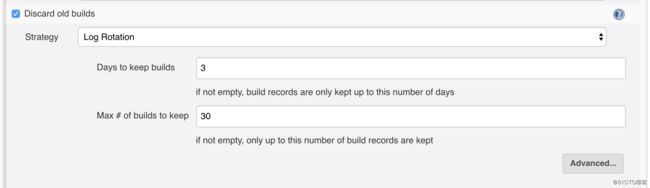

保留3天,30个构建项目

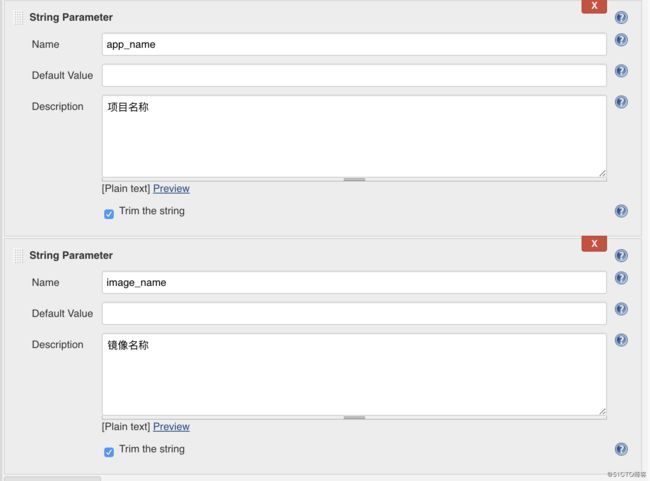

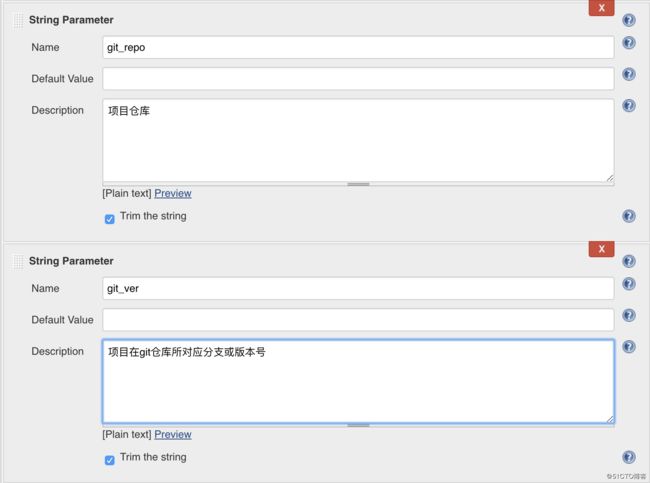

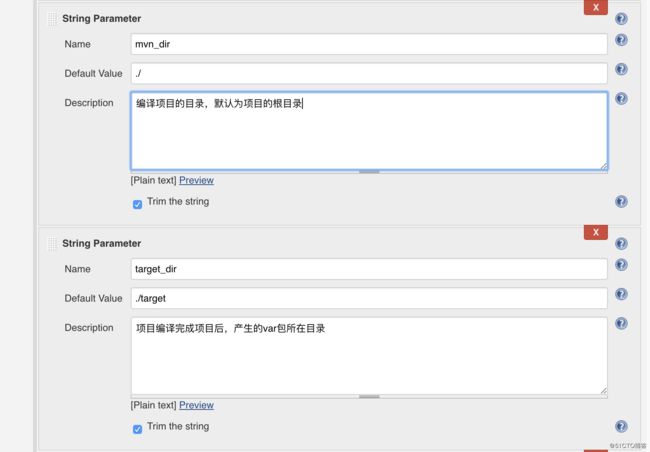

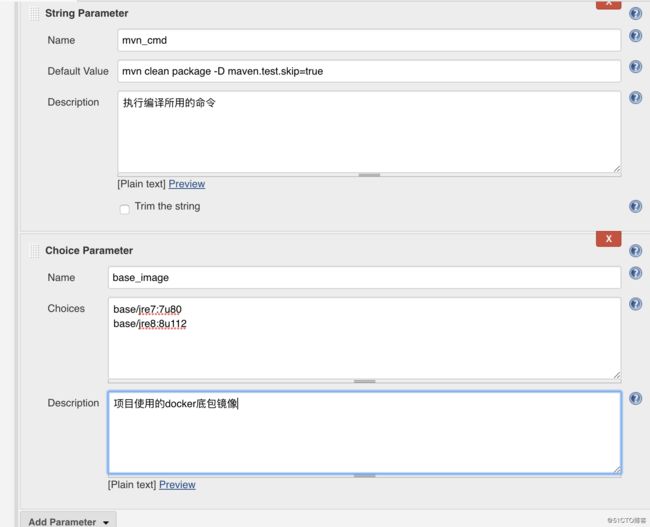

点击参数化构建,我们要添加十个参数,trim一点要勾选,这是帮助我们去掉参数前后的空格:

点击构建开始测试即可
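Trimming matters because the parameter values end up inside git and docker commands, where a stray space would change a branch name or image tag. The following sketch shows the same effect in plain shell; the `trim` function is only an illustration and is not part of Jenkins:

```shell
#!/bin/bash
# Strip leading and trailing whitespace from a string.
trim() {
    local s="$1"
    s="${s#"${s%%[![:space:]]*}"}"   # drop leading whitespace
    s="${s%"${s##*[![:space:]]}"}"   # drop trailing whitespace
    printf '%s' "$s"
}

printf '[%s]\n' "$(trim '  master  ')"
```

Whitespace inside the value is left alone; only the two ends are cleaned.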

[root@hdss7-21 ~]# kubectl create ns app

namespace/app created

[root@hdss7-21 ~]# kubectl create secret docker-registry harbor --docker-server=harbor.od.com --docker-username=admin --docker-password=Harbor12345 -n app

secret/harbor created

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/dubbo-demo-service/dp.yaml

deployment.extensions/dubbo-demo-service created

After the Jenkins build and deployment succeed, check the pod status:

[root@hdss7-21 ~]# kubectl get pod -n app

NAME READY STATUS RESTARTS AGE

dubbo-demo-service-6b6566cb64-zj64p 1/1 Running 128 18h

Once the pod is Running, check in ZooKeeper whether the service has registered:

[root@hdss7-21 ~]# /opt/zookeeper/bin/zkCli.sh

Connecting to localhost:2181

2020-03-31 15:59:05,858 [myid:] - INFO [main:Environment@100] - Client environment:zookeeper.version=3.4.14-4c25d480e66aadd371de8bd2fd8da255ac140bcf, built on 03/06/2019 16:18 GMT

2020-03-31 15:59:05,864 [myid:] - INFO [main:Environment@100] - Client environment:host.name=hdss7-21.host.com

2020-03-31 15:59:05,865 [myid:] - INFO [main:Environment@100] - Client environment:java.version=1.8.0_221

2020-03-31 15:59:05,870 [myid:] - INFO [main:Environment@100] - Client environment:java.vendor=Oracle Corporation

2020-03-31 15:59:05,871 [myid:] - INFO [main:Environment@100] - Client environment:java.home=/usr/java/jdk1.8.0_221/jre

2020-03-31 15:59:05,871 [myid:] - INFO [main:Environment@100] - Client environment:java.class.path=/opt/zookeeper/bin/../zookeeper-server/target/classes:/opt/zookeeper/bin/../build/classes:/opt/zookeeper/bin/../zookeeper-server/target/lib/*.jar:/opt/zookeeper/bin/../build/lib/*.jar:/opt/zookeeper/bin/../lib/slf4j-log4j12-1.7.25.jar:/opt/zookeeper/bin/../lib/slf4j-api-1.7.25.jar:/opt/zookeeper/bin/../lib/netty-3.10.6.Final.jar:/opt/zookeeper/bin/../lib/log4j-1.2.17.jar:/opt/zookeeper/bin/../lib/jline-0.9.94.jar:/opt/zookeeper/bin/../lib/audience-annotations-0.5.0.jar:/opt/zookeeper/bin/../zookeeper-3.4.14.jar:/opt/zookeeper/bin/../zookeeper-server/src/main/resources/lib/*.jar:/opt/zookeeper/bin/../conf::/usr/java/jdk/lib:/usr/java/jdk/lib/tools.jar

2020-03-31 15:59:05,871 [myid:] - INFO [main:Environment@100] - Client environment:java.library.path=/usr/java/packages/lib/amd64:/usr/lib64:/lib64:/lib:/usr/lib

2020-03-31 15:59:05,871 [myid:] - INFO [main:Environment@100] - Client environment:java.io.tmpdir=/tmp

2020-03-31 15:59:05,871 [myid:] - INFO [main:Environment@100] - Client environment:java.compiler=

2020-03-31 15:59:05,871 [myid:] - INFO [main:Environment@100] - Client environment:os.name=Linux

2020-03-31 15:59:05,871 [myid:] - INFO [main:Environment@100] - Client environment:os.arch=amd64

2020-03-31 15:59:05,871 [myid:] - INFO [main:Environment@100] - Client environment:os.version=3.10.0-957.27.2.el7.x86_64

2020-03-31 15:59:05,872 [myid:] - INFO [main:Environment@100] - Client environment:user.name=root

2020-03-31 15:59:05,872 [myid:] - INFO [main:Environment@100] - Client environment:user.home=/root

2020-03-31 15:59:05,872 [myid:] - INFO [main:Environment@100] - Client environment:user.dir=/root

2020-03-31 15:59:05,874 [myid:] - INFO [main:ZooKeeper@442] - Initiating client connection, connectString=localhost:2181 sessionTimeout=30000 watcher=org.apache.zookeeper.ZooKeeperMain$MyWatcher@799f7e29

2020-03-31 15:59:05,932 [myid:] - INFO [main-SendThread(localhost:2181):ClientCnxn$SendThread@1025] - Opening socket connection to server localhost/127.0.0.1:2181. Will not attempt to authenticate using SASL (unknown error)

Welcome to ZooKeeper!

JLine support is enabled

2020-03-31 15:59:06,155 [myid:] - INFO [main-SendThread(localhost:2181):ClientCnxn$SendThread@879] - Socket connection established to localhost/127.0.0.1:2181, initiating session

2020-03-31 15:59:06,209 [myid:] - INFO [main-SendThread(localhost:2181):ClientCnxn$SendThread@1299] - Session establishment complete on server localhost/127.0.0.1:2181, sessionid = 0x300066e3b080001, negotiated timeout = 30000

WATCHER::

WatchedEvent state:SyncConnected type:None path:null

[zk: localhost:2181(CONNECTED) 0] ls /dubbo

[com.od.dubbotest.api.HelloService]

[zk: localhost:2181(CONNECTED) 1]

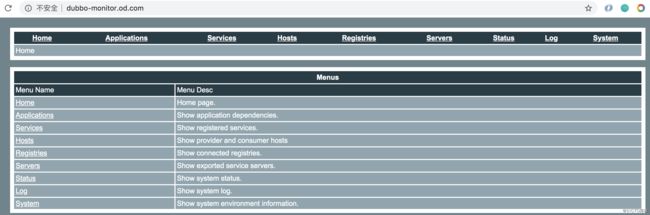

The dubbo-monitor tool:

unzip dubbo-monitor-master.zip -d /data/dockerfile

mv /data/dockerfile/dubbo-monitor-master /data/dockerfile/dubbo-monitor

# cat dubbo-monitor/dubbo-monitor-simple/conf/dubbo_origin.properties

dubbo.registry.address=zookeeper://zk1.od.com:2181?backup=zk2.od.com:2181,zk3.od.com:2181

dubbo.protocol.port=20880

dubbo.jetty.port=8080

dubbo.jetty.directory=/dubbo-monitor-simple/monitor

dubbo.statistics.directory=/dubbo-monitor-simple/statistics

dubbo.charts.directory=/dubbo-monitor-simple/charts

dubbo.log4j.file=logs/dubbo-monitor.log

# cat dubbo-monitor-simple/bin/start.sh

#!/bin/bash
sed -e "s/{ZOOKEEPER_ADDRESS}/$ZOOKEEPER_ADDRESS/g" /dubbo-monitor-simple/conf/dubbo_origin.properties > /dubbo-monitor-simple/conf/dubbo.properties
cd `dirname $0`
BIN_DIR=`pwd`
cd ..
DEPLOY_DIR=`pwd`
CONF_DIR=$DEPLOY_DIR/conf

SERVER_NAME=`sed '/dubbo.application.name/!d;s/.*=//' conf/dubbo.properties | tr -d '\r'`
SERVER_PROTOCOL=`sed '/dubbo.protocol.name/!d;s/.*=//' conf/dubbo.properties | tr -d '\r'`
SERVER_PORT=`sed '/dubbo.protocol.port/!d;s/.*=//' conf/dubbo.properties | tr -d '\r'`
LOGS_FILE=`sed '/dubbo.log4j.file/!d;s/.*=//' conf/dubbo.properties | tr -d '\r'`

if [ -z "$SERVER_NAME" ]; then
    SERVER_NAME=`hostname`
fi

PIDS=`ps -f | grep java | grep "$CONF_DIR" |awk '{print $2}'`
if [ -n "$PIDS" ]; then
    echo "ERROR: The $SERVER_NAME already started!"
    echo "PID: $PIDS"
    exit 1
fi

if [ -n "$SERVER_PORT" ]; then
    SERVER_PORT_COUNT=`netstat -tln | grep $SERVER_PORT | wc -l`
    if [ $SERVER_PORT_COUNT -gt 0 ]; then
        echo "ERROR: The $SERVER_NAME port $SERVER_PORT already used!"
        exit 1
    fi
fi

LOGS_DIR=""
if [ -n "$LOGS_FILE" ]; then
    LOGS_DIR=`dirname $LOGS_FILE`
else
    LOGS_DIR=$DEPLOY_DIR/logs
fi
if [ ! -d $LOGS_DIR ]; then
    mkdir $LOGS_DIR
fi
STDOUT_FILE=$LOGS_DIR/stdout.log

LIB_DIR=$DEPLOY_DIR/lib
LIB_JARS=`ls $LIB_DIR|grep .jar|awk '{print "'$LIB_DIR'/"$0}'|tr "\n" ":"`

JAVA_OPTS=" -Djava.awt.headless=true -Djava.net.preferIPv4Stack=true "
JAVA_DEBUG_OPTS=""
if [ "$1" = "debug" ]; then
    JAVA_DEBUG_OPTS=" -Xdebug -Xnoagent -Djava.compiler=NONE -Xrunjdwp:transport=dt_socket,address=8000,server=y,suspend=n "
fi
JAVA_JMX_OPTS=""
if [ "$1" = "jmx" ]; then
    JAVA_JMX_OPTS=" -Dcom.sun.management.jmxremote.port=1099 -Dcom.sun.management.jmxremote.ssl=false -Dcom.sun.management.jmxremote.authenticate=false "
fi
JAVA_MEM_OPTS=""
BITS=`java -version 2>&1 | grep -i 64-bit`
if [ -n "$BITS" ]; then
    JAVA_MEM_OPTS=" -server -Xmx128m -Xms128m -Xmn32m -XX:PermSize=16m -Xss256k -XX:+DisableExplicitGC -XX:+UseConcMarkSweepGC -XX:+CMSParallelRemarkEnabled -XX:+UseCMSCompactAtFullCollection -XX:LargePageSizeInBytes=128m -XX:+UseFastAccessorMethods -XX:+UseCMSInitiatingOccupancyOnly -XX:CMSInitiatingOccupancyFraction=70 "
else
    JAVA_MEM_OPTS=" -server -Xms128m -Xmx128m -XX:PermSize=16m -XX:SurvivorRatio=2 -XX:+UseParallelGC "
fi

echo -e "Starting the $SERVER_NAME ...\c"
exec java $JAVA_OPTS $JAVA_MEM_OPTS $JAVA_DEBUG_OPTS $JAVA_JMX_OPTS -classpath $CONF_DIR:$LIB_JARS com.alibaba.dubbo.container.Main > $STDOUT_FILE 2>&1
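start.sh reads values out of `dubbo.properties` with `sed '/key/!d;s/.*=//'`. Because `.*=` is greedy, that form strips everything up to the last `=` on the line. This is harmless for the keys the script reads, but it would truncate a value such as the registry address, which contains `?backup=`. The sketch below shows a reader that splits on the first `=` only; `prop_get` is a hypothetical helper and the temporary file stands in for `conf/dubbo.properties`:

```shell
#!/bin/bash
# Print the value of one key from a Java-style properties file,
# splitting on the first '=' only. Returns 1 if the key is absent.
prop_get() {
    local key="$1" file="$2" line
    while IFS= read -r line || [ -n "$line" ]; do
        line="${line%$'\r'}"          # tolerate Windows line endings
        case "$line" in
            "$key="*)
                printf '%s\n' "${line#"$key="}"
                return 0
                ;;
        esac
    done < "$file"
    return 1
}

props="$(mktemp)"
cat > "$props" <<'EOF'
dubbo.registry.address=zookeeper://zk1.od.com:2181?backup=zk2.od.com:2181,zk3.od.com:2181
dubbo.protocol.port=20880
EOF
prop_get dubbo.protocol.port "$props"
prop_get dubbo.registry.address "$props"
# For comparison, the greedy sed form keeps only the text after the last '='.
sed '/dubbo.registry.address/!d;s/.*=//' "$props"
rm -f "$props"
```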

Build the image:

[root@hdss7-200 dubbo-monitor]# docker build . -t harbor.od.com/infra/dubbo-monitor:latest

Sending build context to Docker daemon 26.21MB

Step 1/4 : FROM jeromefromcn/docker-alpine-java-bash

latest: Pulling from jeromefromcn/docker-alpine-java-bash

Image docker.io/jeromefromcn/docker-alpine-java-bash:latest uses outdated schema1 manifest format. Please upgrade to a schema2 image for better future compatibility. More information at https://docs.docker.com/registry/spec/deprecated-schema-v1/

420890c9e918: Pull complete

a3ed95caeb02: Pull complete

4a5cf8bc2931: Pull complete

6a17cae86292: Pull complete

4729ccfc7091: Pull complete

Digest: sha256:658f4a5a2f6dd06c4669f8f5baeb85ca823222cb938a15cfb7f6459c8cfe4f91

Status: Downloaded newer image for jeromefromcn/docker-alpine-java-bash:latest

---> 3114623bb27b

Step 2/4 : MAINTAINER Jerome Jiang

---> Running in 56e4eb1a59b9

Removing intermediate container 56e4eb1a59b9

---> b6a943ad2d64

Step 3/4 : COPY dubbo-monitor-simple/ /dubbo-monitor-simple/

---> 7204576fcdaa

Step 4/4 : CMD /dubbo-monitor-simple/bin/start.sh

---> Running in 5f9c9e4fab9a

Removing intermediate container 5f9c9e4fab9a

---> 85dcad427be0

Successfully built 85dcad427be0

Successfully tagged harbor.od.com/infra/dubbo-monitor:latest

[root@hdss7-200 dubbo-monitor]# docker push harbor.od.com/infra/dubbo-monitor:latest

The push refers to repository [harbor.od.com/infra/dubbo-monitor]

17b25d354796: Pushed

6c05aa02bec9: Pushed

1bdff01a06a9: Pushed

5f70bf18a086: Mounted from public/pause

e271a1fb1dfc: Pushed

c56b7dabbc7a: Pushed

latest: digest: sha256:41416c8ba539e4f533d253f280be0185a7ad418866121348489e0d83e0e233e8 size: 2400

[root@hdss7-200 k8s-yaml]# mkdir /data/k8s-yaml/dubbo-monitor

[root@hdss7-200 k8s-yaml]# cd /data/k8s-yaml/dubbo-monitor

# cat dp.yaml

kind: Deployment
apiVersion: extensions/v1beta1
metadata:
  name: dubbo-monitor
  namespace: infra
  labels:
    name: dubbo-monitor
spec:
  replicas: 1
  selector:
    matchLabels:
      name: dubbo-monitor
  template:
    metadata:
      labels:
        app: dubbo-monitor
        name: dubbo-monitor
    spec:
      containers:
      - name: dubbo-monitor
        image: harbor.od.com/infra/dubbo-monitor:latest
        ports:
        - containerPort: 8080
          protocol: TCP
        - containerPort: 20880
          protocol: TCP
        imagePullPolicy: IfNotPresent
      imagePullSecrets:
      - name: harbor
      restartPolicy: Always
      terminationGracePeriodSeconds: 30
      securityContext:
        runAsUser: 0
      schedulerName: default-scheduler
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
      maxSurge: 1
  revisionHistoryLimit: 7
  progressDeadlineSeconds: 600

# cat svc.yaml

kind: Service
apiVersion: v1
metadata:
  name: dubbo-monitor
  namespace: infra
spec:
  ports:
  - protocol: TCP
    port: 8080
    targetPort: 8080
  selector:
    app: dubbo-monitor

# cat ingress.yaml

kind: Ingress
apiVersion: extensions/v1beta1
metadata:
  name: dubbo-monitor
  namespace: infra
spec:
  rules:
  - host: dubbo-monitor.od.com
    http:
      paths:
      - path: /
        backend:
          serviceName: dubbo-monitor
          servicePort: 8080

Apply the resource manifests:

kubectl apply -f http://k8s-yaml.od.com/dubbo-monitor/dp.yaml

kubectl apply -f http://k8s-yaml.od.com/dubbo-monitor/svc.yaml

kubectl apply -f http://k8s-yaml.od.com/dubbo-monitor/ingress.yaml

[root@hdss7-21 ~]# kubectl get pod -n infra

NAME READY STATUS RESTARTS AGE

dubbo-monitor-5bb45c8b97-dnl22 1/1 Running 0 103s

jenkins-54b8469cf9-hlwmh 1/1 Running 0 23h

After the release succeeds, prepare the resource manifests for dubbo-demo-consumer:

mkdir /data/k8s-yaml/dubbo-demo-consumer

cd /data/k8s-yaml/dubbo-demo-consumer

# cat dp.yaml

kind: Deployment
apiVersion: extensions/v1beta1
metadata:
  name: dubbo-demo-consumer
  namespace: app
  labels:
    name: dubbo-demo-consumer
spec:
  replicas: 1
  selector:
    matchLabels:
      name: dubbo-demo-consumer
  template:
    metadata:
      labels:
        app: dubbo-demo-consumer
        name: dubbo-demo-consumer
    spec:
      containers:
      - name: dubbo-demo-consumer
        image: harbor.od.com/app/dubbo-demo-consumer:master_191201_1600
        ports:
        - containerPort: 8080
          protocol: TCP
        - containerPort: 20880
          protocol: TCP
        env:
        - name: JAR_BALL
          value: dubbo-client.jar
        imagePullPolicy: IfNotPresent
      imagePullSecrets:
      - name: harbor
      restartPolicy: Always
      terminationGracePeriodSeconds: 30
      securityContext:
        runAsUser: 0
      schedulerName: default-scheduler
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
      maxSurge: 1
  revisionHistoryLimit: 7
  progressDeadlineSeconds: 600

# cat svc.yaml

kind: Service
apiVersion: v1
metadata:
  name: dubbo-demo-consumer
  namespace: app
spec:
  ports:
  - protocol: TCP
    port: 8080
    targetPort: 8080
  selector:
    app: dubbo-demo-consumer

# cat ingress.yaml

kind: Ingress
apiVersion: extensions/v1beta1
metadata:
  name: dubbo-demo-consumer
  namespace: app
spec:
  rules:
  - host: demo.od.com
    http:
      paths:
      - path: /
        backend:
          serviceName: dubbo-demo-consumer
          servicePort: 8080

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/dubbo-demo-consumer/dp.yaml

deployment.extensions/dubbo-demo-consumer created

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/dubbo-demo-consumer/svc.yaml

service/dubbo-demo-consumer created

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/dubbo-demo-consumer/ingress.yaml

ingress.extensions/dubbo-demo-consumer created

[root@hdss7-21 ~]# kubectl get pod -n app

NAME READY STATUS RESTARTS AGE

dubbo-demo-consumer-7f57887dd4-2zqlk 1/1 Running 0 32s

dubbo-demo-service-6b6566cb64-zj64p 1/1 Running 128 25h
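The listing above shows dubbo-demo-service at 128 restarts in 25 hours, so a pod can read `1/1 Running` while still crashing repeatedly. The following sketch filters `kubectl get pod` output for pods whose restart count exceeds a threshold; `flag_restarts` is a hypothetical helper, and the sample input is copied from the listing above:

```shell
#!/bin/bash
# Read `kubectl get pod` output on stdin and print the name and restart count
# of every pod whose RESTARTS column is above the limit (default 10).
flag_restarts() {
    local limit="${1:-10}"
    awk -v limit="$limit" 'NR > 1 && $4 + 0 > limit { print $1, $4 }'
}

flag_restarts 10 <<'EOF'
NAME READY STATUS RESTARTS AGE
dubbo-demo-consumer-7f57887dd4-2zqlk 1/1 Running 0 32s
dubbo-demo-service-6b6566cb64-zj64p 1/1 Running 128 25h
EOF
```

Against the cluster this would be `kubectl get pod -n app | flag_restarts 10`. For any pod it reports, `kubectl -n app logs --previous <pod>` shows the output of the last crashed container.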