1. Problem description

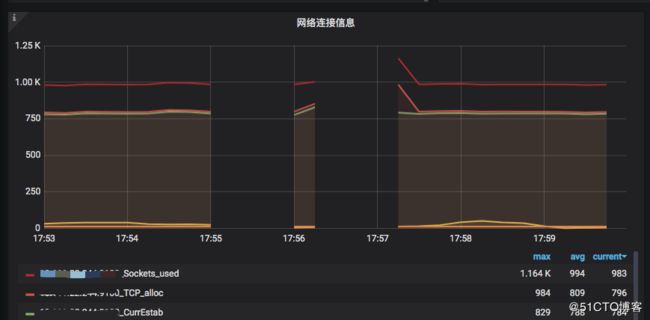

The alerting platform reported that the RocketMQ cluster was unhealthy: CPU, memory, I/O and network metrics were all abnormal, as shown below:

CPU usage on the RocketMQ nodes spiked.

Network packet loss reached 100%.

Disk I/O spiked.

Memory metrics were interrupted.

2. Check the broker logs

2.1 Check the GC log: nothing abnormal

The broker's GC log is under /dev/shm/:

```shell
$ cd /dev/shm/
```

```
2020-02-23T23:02:11.864+0800: 11683510.551: Total time for which application threads were stopped: 0.0090361 seconds, Stopping threads took: 0.0002291 seconds
2020-02-23T23:03:36.815+0800: 11683595.502: [GC pause (G1 Evacuation Pause) (young) 11683595.502: [G1Ergonomics (CSet Construction) start choosing CSet, _pending_cards: 7992, predicted base time: 5.44 ms, remaining time: 194.56 ms, target pause time: 200.00 ms]
 11683595.502: [G1Ergonomics (CSet Construction) add young regions to CSet, eden: 255 regions, survivors: 1 regions, predicted young region time: 1.24 ms]
 11683595.502: [G1Ergonomics (CSet Construction) finish choosing CSet, eden: 255 regions, survivors: 1 regions, old: 0 regions, predicted pause time: 6.68 ms, target pause time: 200.00 ms]
, 0.0080468 secs]
   [Parallel Time: 4.1 ms, GC Workers: 23]
      [GC Worker Start (ms): Min: 11683595502.0, Avg: 11683595502.3, Max: 11683595502.5, Diff: 0.5]
      [Ext Root Scanning (ms): Min: 0.6, Avg: 0.9, Max: 1.5, Diff: 0.9, Sum: 20.5]
      [Update RS (ms): Min: 0.0, Avg: 1.0, Max: 2.5, Diff: 2.5, Sum: 23.7]
         [Processed Buffers: Min: 0, Avg: 11.8, Max: 35, Diff: 35, Sum: 271]
      [Scan RS (ms): Min: 0.0, Avg: 0.0, Max: 0.1, Diff: 0.1, Sum: 0.7]
      [Code Root Scanning (ms): Min: 0.0, Avg: 0.0, Max: 0.0, Diff: 0.0, Sum: 0.2]
      [Object Copy (ms): Min: 0.0, Avg: 1.1, Max: 1.8, Diff: 1.8, Sum: 26.4]
      [Termination (ms): Min: 0.0, Avg: 0.2, Max: 0.3, Diff: 0.3, Sum: 5.4]
         [Termination Attempts: Min: 1, Avg: 3.5, Max: 7, Diff: 6, Sum: 80]
      [GC Worker Other (ms): Min: 0.0, Avg: 0.0, Max: 0.1, Diff: 0.1, Sum: 1.0]
      [GC Worker Total (ms): Min: 3.2, Avg: 3.4, Max: 3.6, Diff: 0.5, Sum: 77.9]
      [GC Worker End (ms): Min: 11683595505.6, Avg: 11683595505.6, Max: 11683595505.7, Diff: 0.1]
   [Code Root Fixup: 0.1 ms]
   [Code Root Purge: 0.0 ms]
   [Clear CT: 0.8 ms]
   [Other: 3.0 ms]
      [Choose CSet: 0.0 ms]
      [Ref Proc: 1.1 ms]
      [Ref Enq: 0.0 ms]
      [Redirty Cards: 1.0 ms]
      [Humongous Register: 0.0 ms]
      [Humongous Reclaim: 0.0 ms]
      [Free CSet: 0.3 ms]
   [Eden: 4080.0M(4080.0M)->0.0B(4080.0M) Survivors: 16.0M->16.0M Heap: 4341.0M(16.0G)->262.4M(16.0G)]
 [Times: user=0.07 sys=0.00, real=0.01 secs]
```
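To check the "no GC anomaly" conclusion across the whole log rather than a single entry, the longest safepoint pause can be extracted with awk. This is a minimal sketch: `max_stop_time` is a hypothetical helper, and it assumes a JDK 8 GC log written with `-XX:+PrintGCApplicationStoppedTime`, which is what produces the "Total time for which application threads were stopped" lines shown above.

```shell
# max_stop_time [FILE...]
# Hypothetical helper: print the longest "application threads were stopped"
# time, in seconds, found in JDK 8 GC logs (reads stdin when no files given).
max_stop_time() {
  awk '
    /Total time for which application threads were stopped: / {
      rest = $0
      sub(/.*threads were stopped: /, "", rest)   # the pause now leads the string
      t = rest + 0                                # numeric prefix, e.g. 0.0090361
      if (!seen || t > max) { max = t; seen = 1 }
    }
    END { if (seen) printf "%.7f\n", max }
  ' "$@"
}
```

For example, `max_stop_time /dev/shm/*.log` (adjust the glob to the actual GC log file names) prints a single number in seconds; a maximum in the low milliseconds, like the 0.009 s pause above, is consistent with GC not being the cause.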

2.2 Check the broker log

```
2020-02-23T23:02:11.864 ERROR BrokerControllerScheduledThread1 - SyncTopicConfig Exception, x.x.x.x:10911
org.apache.rocketmq.remoting.exception.RemotingTimeoutException: wait response on the channel
	at org.apache.rocketmq.remoting.netty.NettyRemotingAbstract.invokeSyncImpl(NettyRemotingAbstract.java:427) ~[rocketmq-remoting-4.5.2.jar:4.5.2]
	at org.apache.rocketmq.remoting.netty.NettyRemotingClient.invokeSync(NettyRemotingClient.java:375) ~[rocketmq-remoting-4.5.2.jar:4.5.2]
```
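To line the timeouts up against the monitoring graphs, it helps to count them per minute. The sketch below uses a hypothetical helper, `timeouts_per_minute` (not part of RocketMQ), and assumes the timestamp format of the excerpt above; each exception is attributed to the most recent timestamped line, so it works whether the exception sits on the ERROR line or on the line after it. Adjust the date pattern if your broker log layout differs (for example a space instead of `T` between date and time).

```shell
# timeouts_per_minute [FILE...]
# Hypothetical helper: count RemotingTimeoutException occurrences per minute.
# A line starting with a timestamp such as 2020-02-23T23:02:11.864 sets the
# current minute; stack-trace lines after it are counted under that minute.
timeouts_per_minute() {
  awk '
    $1 ~ /^[0-9][0-9][0-9][0-9]-[0-9][0-9]-[0-9][0-9]T[0-9][0-9]:[0-9][0-9]/ {
      minute = substr($1, 1, 16)              # keep yyyy-mm-ddThh:mm
    }
    /RemotingTimeoutException/ && minute != "" { count[minute]++ }
    END { for (m in count) print m, count[m] }
  ' "$@" | sort
}
```

Assuming the default log directory, usage would be `timeouts_per_minute ~/logs/rocketmqlogs/broker.log`.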

The RocketMQ cluster logs and GC logs show network timeouts that broke master-slave synchronization; nothing points to a fault in the broker itself.

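Monitoring showed network, CPU and disk I/O all misbehaving at the same time. Did the disk I/O drive the CPU spike, which in turn caused the network problem? Or did the CPU spike first and cause the network outage and the disk I/O spike? The machine runs only one process, RocketMQ, and its load was not high, so the application is not what drove the CPU, network and disk I/O problems. Could it be jitter in the Alibaba Cloud network? Possibly, but in that case why were only a few nodes of the cluster affected, while business machines in the same data center showed no jitter at all? The next step is to analyze the Linux system logs.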

3. Check the system logs

```shell
# grep -ci "page allocation failure" /var/log/messages*
```

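The system log contains page allocation failures, "page allocation failure. order:0, mode:0x20", meaning the kernel had run out of free pages. order:0 is a request for a single page (2^0 pages), so the failure cannot be blamed on fragmentation; mode:0x20 is GFP_ATOMIC on the 2.6.32 kernel used by CentOS 6, an allocation that may not sleep or wait for reclaim and that network drivers commonly make when receiving packets. This ties the memory shortage to the packet loss seen in monitoring.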
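The `grep -c` count says how often the failure happened, but not what kind of allocation failed. The sketch below groups the messages by order and mode; `alloc_failure_summary` is a hypothetical helper, and the regular expression assumes the message wording quoted above (it also accepts a colon after "failure", as some newer kernels print). A result dominated by "order:0, mode:0x20" points at single-page atomic allocations rather than at fragmentation.

```shell
# alloc_failure_summary [FILE...]
# Hypothetical helper: count "page allocation failure" kernel messages by
# (order, mode), most frequent first. Reads stdin when no files are given.
alloc_failure_summary() {
  grep -ho 'page allocation failure[.:] order:[0-9]*, mode:0x[0-9a-fA-F]*' "$@" |
    sed 's/^page allocation failure[.:] //' |
    awk '{ count[$0]++ } END { for (k in count) print count[k], k }' |
    sort -rn
}
```

Usage: `alloc_failure_summary /var/log/messages*`.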

Searching for this message turned up the following Red Hat article:

https://access.redhat.com/solutions/90883

The article recommends tuning two parameters:

Increase vm.min_free_kbytes value, for example to a higher value than a single allocation request.

Change vm.zone_reclaim_mode to 1 if it's set to zero, so the system can reclaim back memory from cached memory.

3.1 Modify the configuration file

```shell
$ sed -i '/swappiness=1/a\vm.zone_reclaim_mode = 1\nvm.min_free_kbytes = 512000' /etc/sysctl.conf && sysctl -p /etc/sysctl.conf
```
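The sed one-liner only appends after a line containing `swappiness=1` (if no such line exists, it silently changes nothing), and running it twice appends duplicate entries. A sketch of an idempotent alternative follows; `set_sysctl_conf` is a hypothetical helper that assumes a plain `KEY = VALUE` file with `#` or `;` comments.

```shell
# set_sysctl_conf KEY VALUE FILE
# Hypothetical helper: make FILE contain exactly one active "KEY = VALUE"
# line. An existing assignment of KEY is replaced in place (later duplicates
# are dropped); if KEY is absent, the line is appended at the end.
set_sysctl_conf() {
  local key=$1 value=$2 file=$3 tmp rc
  tmp=$(mktemp) || return 1
  awk -v k="$key" -v v="$value" '
    {
      line = $0
      sub(/^[ \t]+/, "", line)                 # ignore leading whitespace
      if (line !~ /^[#;]/ && index(line, "=") > 0) {
        name = substr(line, 1, index(line, "=") - 1)
        sub(/[ \t]+$/, "", name)               # trim spaces before "="
        if (name == k) {
          if (!done) print k " = " v           # replace the first assignment
          done = 1
          next                                 # drop later duplicates
        }
      }
      print
    }
    END { if (!done) print k " = " v }         # append if KEY was absent
  ' "$file" > "$tmp" && cat "$tmp" > "$file"   # cat keeps the inode and permissions of FILE
  rc=$?
  rm -f "$tmp"
  return "$rc"
}

# Example (as root):
#   set_sysctl_conf vm.zone_reclaim_mode 1 /etc/sysctl.conf
#   set_sysctl_conf vm.min_free_kbytes 512000 /etc/sysctl.conf
#   sysctl -p /etc/sysctl.conf
#   sysctl vm.zone_reclaim_mode vm.min_free_kbytes   # verify the live values
```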

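vm.zone_reclaim_mode is usually 0 by default, which disables zone reclaim; setting it to 1 turns zone reclaim on, so the kernel reclaims memory from the local node (for example by dropping clean, unmapped page cache) before allocating from other nodes.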

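vm.min_free_kbytes is the number of kilobytes the kernel always tries to keep free. The VM derives each zone's watermark[min] from it, and memory below that line is held in reserve for allocations that cannot wait.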
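For scale, it helps to know what the kernel picks on its own. The sketch below approximates the boot-time default computed in the kernel's `init_per_zone_wmark_min()`: the integer square root of `lowmem_kbytes * 16`, clamped to [128, 65536] KB on older kernels such as CentOS 6's. `default_min_free_kbytes` is a hypothetical helper; it assumes lowmem is close to total RAM (true on 64-bit hosts, though the kernel measures free low memory at boot, so the real value is slightly lower), and newer kernels raised the upper clamp.

```shell
# default_min_free_kbytes LOWMEM_KB
# Hypothetical helper approximating the kernel default:
# int_sqrt(lowmem_kbytes * 16), clamped to [128, 65536] KB (older kernels).
default_min_free_kbytes() {
  awk -v kb="$1" 'BEGIN {
    v = int(sqrt(kb * 16))   # floor of the square root, like int_sqrt()
    if (v < 128)   v = 128
    if (v > 65536) v = 65536
    print v
  }'
}
```

On a hypothetical 64 GiB host, `default_min_free_kbytes 67108864` gives 32768 KB, so the 512000 KB configured above would be roughly 15 times the default.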

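Put simply, RocketMQ is a heavy memory consumer (the broker memory-maps its CommitLog files, so much of its footprint lives in the page cache). Without these kernel settings, RocketMQ keeps requesting memory until the system's free memory is exhausted; once that happens, the system can no longer respond to requests in time, which shows up as packet loss and CPU spikes.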
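A quick way to see how close a broker is to the reserve is to compare MemFree with min_free_kbytes; a large Cached value alongside a small MemFree is what a broker whose memory-mapped files fill the page cache would look like. `mem_headroom` is a hypothetical helper; by default it reads /proc/meminfo and /proc/sys/vm/min_free_kbytes.

```shell
# mem_headroom [MEMINFO] [MIN_FREE_KB]
# Hypothetical helper: report MemFree and Cached from a meminfo-format file,
# and how many times MemFree exceeds the min_free_kbytes reserve.
mem_headroom() {
  local meminfo=${1:-/proc/meminfo}
  local min_kb=${2:-$(cat /proc/sys/vm/min_free_kbytes)}
  awk -v min="$min_kb" '
    $1 == "MemFree:" { free = $2 }     # kB
    $1 == "Cached:"  { cached = $2 }   # page cache, including mmap-ed file pages
    END {
      printf "MemFree=%.0f kB Cached=%.0f kB free/min=%.1f\n", free, cached, free / min
    }
  ' "$meminfo"
}
```

Run without arguments on a broker host to read the live values.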

The broker nodes run CentOS 6.10; upgrading to CentOS 7 or later may avoid this problem.

4. Root-cause analysis

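When a zone's free memory drops below watermark[low], the kernel wakes the kswapd kernel thread to reclaim memory, and kswapd keeps reclaiming until the zone's free memory reaches watermark[high]. If upper layers allocate memory faster than kswapd can reclaim it and free memory falls to watermark[min], the kernel performs direct reclaim: reclaim runs synchronously in the context of the allocating process, and the reclaimed pages are then used to satisfy the request. This blocks the application, adds latency, and may even trigger the OOM killer. The reason is that memory below watermark[min] is the system's own reserve, kept for special uses, and is not handed out to ordinary user-space allocations.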
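The watermark rules above can be observed directly in /proc/zoneinfo, which lists each zone's free pages and its min, low and high watermarks (all in pages). This is a sketch with a hypothetical `zone_watermarks` helper; it ignores the per-zone lowmem reserve (the "protection" line) and the allocation order, both of which the real kernel check also considers, so treat the status column as approximate.

```shell
# zone_watermarks [FILE...]
# Hypothetical helper: print free pages and min/low/high watermarks per zone
# from /proc/zoneinfo-format input, plus an approximate status.
zone_watermarks() {
  awk '
    $1 == "Node" {
      zone = "node" $2 $4          # "Node 0, zone Normal" -> "node0,Normal"
      sub(/,/, ":", zone)          # -> "node0:Normal"
      free = ""
    }
    $1 == "pages" && $2 == "free" { free = $3 }
    $1 == "min" { min = $2 }
    $1 == "low" { low = $2 }
    $1 == "high" && free != "" {
      status = "ok"
      if (free + 0 < min + 0)      status = "below_min"   # direct reclaim territory
      else if (free + 0 < low + 0) status = "below_low"   # kswapd gets woken
      printf "%s free=%d min=%d low=%d high=%d %s\n", zone, free, min, low, $2, status
    }
  ' "$@"
}
```

Usage: `zone_watermarks /proc/zoneinfo`.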

How the size of min_free_kbytes matters

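The larger min_free_kbytes is, the higher all three watermarks sit, and the gaps between them grow accordingly. kswapd therefore starts reclaiming earlier and reclaims more each time (it only stops at watermark[high]), so the system holds back more free memory and less is left for applications. In the extreme, setting min_free_kbytes close to the total memory size leaves applications so little memory that OOM kills may become frequent.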
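To make the effect of a larger min_free_kbytes concrete, the sketch below applies the rule used by older kernels such as CentOS 6's 2.6.32: watermark[min] is min_free_kbytes converted to pages, watermark[low] = min + min/4, and watermark[high] = min + min/2. `watermarks_from_min_free_kbytes` is a hypothetical helper that assumes a single zone holding all memory and 4 KiB pages; real kernels split min_free_kbytes across zones in proportion to their size, and since Linux 4.6 vm.watermark_scale_factor can widen the gaps.

```shell
# watermarks_from_min_free_kbytes KB [PAGE_KB]
# Hypothetical helper: pre-4.6 watermark rule for ONE zone holding all memory.
#   min  = KB / PAGE_KB pages
#   low  = min + min/4
#   high = min + min/2
watermarks_from_min_free_kbytes() {
  local kb=$1 page_kb=${2:-4}
  local min=$(( kb / page_kb ))
  local low=$(( min + min / 4 ))
  local high=$(( min + min / 2 ))
  echo "min=$min low=$low high=$high"
}
```

With the 512000 KB used in 3.1 this gives min=128000 low=160000 high=192000 pages, so in this idealized single zone kswapd would wake at about 625 MiB free and stop at about 750 MiB.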

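Setting min_free_kbytes too small leaves too small a reserve. kswapd itself makes a small number of allocations while reclaiming; it runs with the PF_MEMALLOC flag set, which allows it to use the reserve. Likewise, a process selected by the OOM killer may need to allocate memory while it exits, and it may also draw on the reserve. Letting these two paths use reserved memory keeps the system out of deadlock, so if the reserve is too small they may be unable to make progress.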

Note: the analysis above is adapted from material found online.