1. Extract ZooKeeper to the target directory:

tar zxvf zookeeper-3.4.5.tar.gz -C ../src/

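2. Set the ZooKeeper data directory in zoo.cfg (ZooKeeper keeps its snapshots, and by default its transaction logs, in this directory)

Create the directory /usr/local/app/tmp/zookeeper. Then copy conf/zoo_sample.cfg to conf/zoo.cfg, because the release ships only the sample file. In zoo.cfg, set:

dataDir=/usr/local/app/tmp/zookeeper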
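The step above can be scripted so it is safe to re-run. Below is a minimal sketch that uses a hypothetical helper, `setup_zoo_cfg`. It assumes that `conf/zoo_sample.cfg` is present and that GNU sed is available for in-place editing.

```shell
# Hypothetical helper (not part of ZooKeeper): prepare zoo.cfg for a standalone install.
# Assumes conf/zoo_sample.cfg exists and GNU sed is available ("sed -i").
setup_zoo_cfg() {
  local conf_dir="$1" data_dir="$2"
  mkdir -p "$data_dir"
  # Only the sample file exists after extraction, so start from a copy of it
  if [ ! -f "$conf_dir/zoo.cfg" ]; then
    cp "$conf_dir/zoo_sample.cfg" "$conf_dir/zoo.cfg"
  fi
  if grep -q '^dataDir=' "$conf_dir/zoo.cfg"; then
    # Replace the existing dataDir line ("|" as delimiter because paths contain "/")
    sed -i "s|^dataDir=.*|dataDir=$data_dir|" "$conf_dir/zoo.cfg"
  else
    printf 'dataDir=%s\n' "$data_dir" >> "$conf_dir/zoo.cfg"
  fi
}
```

For this walkthrough you would call it as `setup_zoo_cfg /usr/local/src/zookeeper-3.4.5/conf /usr/local/app/tmp/zookeeper`.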

3. Add the ZooKeeper environment variables to ~/.bashrc:

export ZK_HOME=/usr/local/src/zookeeper-3.4.5

export PATH=$ZK_HOME/bin:$PATH

Reload the file so the variables take effect: source ~/.bashrc

Verify: echo $ZK_HOME
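If you append the export lines by hand and then repeat the setup, ~/.bashrc collects duplicate PATH entries. The sketch below uses a hypothetical helper, `add_line_once`, which appends a line only when that exact line is not already in the file.

```shell
# Hypothetical helper: append a line to a file only if that exact line is absent.
add_line_once() {
  local line="$1" file="$2"
  # -F fixed string, -x whole-line match, -q quiet, -s no error if the file is missing
  grep -qsxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}
```

Example: `add_line_once 'export PATH=$ZK_HOME/bin:$PATH' ~/.bashrc`. The single quotes keep `$ZK_HOME` and `$PATH` unexpanded, so they are expanded each time ~/.bashrc is sourced.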

4. Extract Kafka to the target directory:

tar zxvf kafka_2.11-0.10.2.1.tgz -C ../src/

5. Add the Kafka environment variables to ~/.bashrc:

export KAFKA_HOME=/usr/local/src/kafka_2.11-0.10.2.1

export PATH=$KAFKA_HOME/bin:$PATH

Reload the file: source ~/.bashrc

Verify: echo $KAFKA_HOME
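`echo` only shows that a variable is set. It does not show that the shell can find the scripts in the bin/ directories. The sketch below uses a hypothetical helper, `check_on_path`, which reports each command that `command -v` cannot resolve.

```shell
# Hypothetical helper: report whether each named command resolves on PATH.
# Returns non-zero if any command is missing.
check_on_path() {
  local missing=0 cmd
  for cmd in "$@"; do
    if command -v "$cmd" >/dev/null 2>&1; then
      echo "ok: $cmd -> $(command -v "$cmd")"
    else
      echo "missing: $cmd" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

After sourcing ~/.bashrc, `check_on_path zkServer.sh kafka-server-start.sh` should print an `ok:` line for each script.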

6. Edit the Kafka configuration file $KAFKA_HOME/config/server.properties:

broker.id=0

listeners=PLAINTEXT://master:9092

log.dirs=/usr/local/app/tmp/kafka-logs

zookeeper.connect=master:2181
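The four settings above can also be applied non-interactively. The sketch below uses a hypothetical helper, `set_prop`. It replaces an existing uncommented `key=` line or appends the pair if the key is absent, and leaves commented lines such as `#listeners=...` as they are.

```shell
# Hypothetical helper: set key=value in a .properties file.
# Replaces an uncommented "key=" line, otherwise appends; comments are untouched.
set_prop() {
  local file="$1" key="$2" value="$3" tmp
  tmp=$(mktemp)
  awk -v k="$key" -v v="$value" '
    index($0, k "=") == 1 { print k "=" v; found = 1; next }  # exact key at line start
    { print }
    END { if (!found) print k "=" v }
  ' "$file" > "$tmp" && cat "$tmp" > "$file"   # cat keeps the original file mode
  rm -f "$tmp"
}
```

Example: `set_prop "$KAFKA_HOME/config/server.properties" log.dirs /usr/local/app/tmp/kafka-logs`.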

7. Start ZooKeeper:

zkServer.sh start $ZK_HOME/conf/zoo.cfg

Check the startup status with jps. The ZooKeeper server runs as QuorumPeerMain:

[root@master bin]# jps

13094 Application

7636 Application

13432 Application

1711 SecondaryNameNode

16347 Jps

16330 QuorumPeerMain

1571 NameNode

Show the running processes together with the arguments passed to their main method: jps -m

[root@master bin]# jps -m

13094 Application --conf-file /usr/local/src/apache-flume-1.6.0-bin/conf/exec_avro_console.conf --name a1

7636 Application --conf-file exec_hdfs.conf --name a1

13432 Application --conf-file /usr/local/src/apache-flume-1.6.0-bin/conf/exec_avro_console.conf --name a1

16359 Jps -m

1711 SecondaryNameNode

16330 QuorumPeerMain /usr/local/src/zookeeper-3.4.5/conf/zoo.cfg

1571 NameNode

8. Start Kafka:

kafka-server-start.sh $KAFKA_HOME/config/server.properties

Run jps to check that the broker started. It appears as Kafka.

Run jps -m to see the running processes with their arguments.
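Checking jps by eye is easy to get wrong when many JVMs are running, as in the output above. The sketch below uses a hypothetical helper, `require_procs`. It reads jps output on stdin and checks the second column for each expected main class: QuorumPeerMain for ZooKeeper and Kafka for the broker.

```shell
# Hypothetical helper: verify that jps output (on stdin) lists every given main class.
# Returns non-zero if any class is absent.
require_procs() {
  local out name rc=0
  out=$(cat)
  for name in "$@"; do
    # Column 2 of jps output is the main class name
    if printf '%s\n' "$out" | awk -v n="$name" '$2 == n { found = 1 } END { exit !found }'; then
      echo "running: $name"
    else
      echo "not running: $name" >&2
      rc=1
    fi
  done
  return "$rc"
}
```

Usage: `jps | require_procs QuorumPeerMain Kafka`.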

9. Create a topic:

kafka-topics.sh --create --zookeeper master:2181 --replication-factor 1 --partitions 1 --topic hello_topic

List the topics registered in ZooKeeper:

kafka-topics.sh --list --zookeeper master:2181

Show details of all topics:

kafka-topics.sh --describe --zookeeper master:2181

Show details of a specific topic:

kafka-topics.sh --describe --zookeeper master:2181 --topic hello_topic
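Running `--create` a second time fails because the topic already exists. The sketch below uses a hypothetical wrapper, `ensure_topic`, which builds on the two commands above: it consults `--list` first and creates the topic only if the name is missing. The partition and replication defaults of 1 mirror the example above.

```shell
# Hypothetical wrapper: create a topic only if "kafka-topics.sh --list" does not show it.
ensure_topic() {
  local zk="$1" topic="$2" partitions="${3:-1}" rf="${4:-1}"
  if kafka-topics.sh --list --zookeeper "$zk" | grep -qxF -- "$topic"; then
    echo "topic $topic already exists"
  else
    kafka-topics.sh --create --zookeeper "$zk" --replication-factor "$rf" \
      --partitions "$partitions" --topic "$topic"
  fi
}
```

Usage: `ensure_topic master:2181 hello_topic`.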

10. Send messages. The console producer sends each line typed on standard input as one message:

kafka-console-producer.sh --broker-list master:9092 --topic hello_topic
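The console producer reads from standard input, so test messages can be piped in instead of typed. The sketch below uses a hypothetical helper, `send_messages`, which sends each remaining argument as one line.

```shell
# Hypothetical helper: send each argument as one message via the console producer.
send_messages() {
  local broker="$1" topic="$2"
  shift 2
  printf '%s\n' "$@" | kafka-console-producer.sh --broker-list "$broker" --topic "$topic"
}
```

Usage: `send_messages master:9092 hello_topic "hello kafka" "second message"`.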

11. Consume messages:

kafka-console-consumer.sh --bootstrap-server master:9092 --topic hello_topic --from-beginning

or, with the older ZooKeeper-based consumer:

kafka-console-consumer.sh --zookeeper master:2181 --topic hello_topic --from-beginning

Parameters:

--from-beginning  # start from the earliest message in the log instead of only new messages

12.查看当前offset的值:

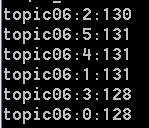

kafka-consumer-offset-checker.sh --zookeeper localhost:2181 --group group-1 --topic topic06

运行结果如下:
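To get the total lag as a single number, the per-partition rows can be summed. The sketch below uses a hypothetical helper, `total_lag`, and assumes the tool's usual column layout: Group, Topic, Pid, Offset, logSize, Lag, Owner. It adds up the numeric values in the Lag column and skips the header and any warning lines.

```shell
# Hypothetical helper: sum the Lag column (6th field) of offset-checker output on stdin.
# Assumes columns: Group Topic Pid Offset logSize Lag Owner.
total_lag() {
  awk '$6 ~ /^[0-9]+$/ { lag += $6 } END { print lag + 0 }'
}
```

Usage: `kafka-consumer-offset-checker.sh --zookeeper localhost:2181 --group group-1 --topic topic06 | total_lag`.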

13. Query the offset range of a topic

The following command returns the earliest offset of each partition of topic topic06 on broker localhost:9092:

kafka-run-class.sh kafka.tools.GetOffsetShell --broker-list localhost:9092 --topic topic06 --time -2

This one returns the latest offset:

kafka-run-class.sh kafka.tools.GetOffsetShell --broker-list localhost:9092 --topic topic06 --time -1

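In this example, topic06 has 6 partitions with the following offset ranges. The upper bound is the log-end offset, i.e. the offset the next message will receive: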

0 - [0,128]

1 - [0,131]

2 - [0,130]

3 - [0,128]

4 - [0,131]

5 - [0,131]
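For a non-compacted topic, latest minus earliest gives the number of messages currently retained in each partition. The sketch below uses a hypothetical helper, `count_messages`. It assumes GetOffsetShell prints one `topic:partition:offset` line per partition, and that the `--time -2` output is saved to one file and the `--time -1` output to another.

```shell
# Hypothetical helper: per-partition and total message counts from two
# GetOffsetShell outputs (topic:partition:offset lines).
# $1 = output of --time -2 (earliest), $2 = output of --time -1 (latest).
count_messages() {
  awk -F: '
    NR == FNR { earliest[$2] = $3; next }   # first file: earliest offsets by partition
    {
      n = $3 - earliest[$2]
      total += n
      printf "partition %s: %d messages\n", $2, n
    }
    END { printf "total: %d\n", total }
  ' "$1" "$2"
}
```

With the ranges listed above, the total is 128 + 131 + 130 + 128 + 131 + 131 = 779 messages.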

14. Multiple brokers on a single node:

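Copy $KAFKA_HOME/config/server.properties to server-1.properties and server-2.properties. Then change these settings in each copy: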

config/server-1.properties:

broker.id=1

listeners=PLAINTEXT://master:9093

log.dirs=/usr/local/app/tmp/kafka-logs-1

config/server-2.properties:

broker.id=2

listeners=PLAINTEXT://master:9094

log.dirs=/usr/local/app/tmp/kafka-logs-2

Start the additional brokers in the background:

kafka-server-start.sh $KAFKA_HOME/config/server-1.properties &

kafka-server-start.sh $KAFKA_HOME/config/server-2.properties &
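The per-broker files can be generated from the base file so that the three overrides stay consistent. The sketch below uses a hypothetical helper, `make_broker_config`. It drops any existing `broker.id`, `listeners`, `log.dir` and `log.dirs` lines and appends fresh values. It writes `log.dirs` because the base file already sets `log.dirs`, and that setting takes precedence over `log.dir`. The host name `master` is carried over from this setup.

```shell
# Hypothetical helper: derive a per-broker config from a base server.properties.
# Args: base file, broker id, port, log directory, output file.
make_broker_config() {
  local base="$1" id="$2" port="$3" logdir="$4" out="$5"
  awk -v id="$id" -v port="$port" -v logdir="$logdir" '
    /^broker\.id=/ { next }    # drop settings that must differ per broker
    /^listeners=/  { next }
    /^log\.dirs?=/ { next }    # matches both log.dir and log.dirs
    { print }
    END {
      print "broker.id=" id
      print "listeners=PLAINTEXT://master:" port
      print "log.dirs=" logdir
    }
  ' "$base" > "$out"
}
```

Usage: `for i in 1 2; do make_broker_config "$KAFKA_HOME/config/server.properties" "$i" "$((9092 + i))" "/usr/local/app/tmp/kafka-logs-$i" "$KAFKA_HOME/config/server-$i.properties"; done`.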